Abstract

This chapter describes a methodological strategy for studying the influence of coupling constraints on interpersonal coordination using cross-recurrence quantification analysis (CRQA). In Study 1, we investigated interpersonal coordination during conversation in virtual-reality (VR) and real-world environments. Consistent with previous studies, we found enhanced coordination when participants were talking to each other compared to when they were talking to experimenters. In doing so we also demonstrated the utility of VR in studying interpersonal coordination involved in cooperative conversation. In Study 2, we investigated the influence of mechanical coupling on interpersonal coordination and communication, in which conversing pairs were coupled mechanically (standing on the same balance board) or not (they stood on individual balance boards). We found a relationship between movement coordination and performance in a conversational task in the coupled condition, suggesting a functional link between coordination and communication. We offer these studies as methodological examples of how CRQA can be used to study the relation between interpersonal coordination and conversation.

Keywords

- Task Performance

- Couple Condition

- Postural Coordination

- Movement Coordination

- Recurrence Quantification Analysis

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

When people converse they exhibit a tendency to coordinate with their conversational partners. For example, classic studies using videotaped evaluations of dyadic interactions have shown that people are likely to nod and gesture in synchrony and reciprocation with their partners (interactional synchrony [1, 2]), and when they listen to an engaging speaker they are likely to share postural configurations with that speaker [3, 4]. In subsequent years, available technology has evolved considerably beyond the hand scoring of video tapes, creating both new possibilities and new challenges for interpersonal coordination research. For example, the movements that people produce exhibit meaningful structure at many scales of variation [5, 6], and this can be captured objectively and accurately using modern motion tracking technology. The high-resolution, continuous signals afforded by this equipment yield a rich source of data for detailed analysis of interpersonal coupling. However, these types of continuous time-domain signals can present a range of challenges, including non-stationarity and irregularity, making them unsuitable for many conventional analyses that are based on assumptions of stationarity and normality. Moreover, typical summary measures, such as mean and standard deviation of individual time series statistics—statistics that work well for quantifying individual performance—are not designed to index the degree of coordination between individuals. In answer to these challenges, this chapter describes a methodological strategy for studying interpersonal coordination that attempts to overcome problems presented by complex time-series.

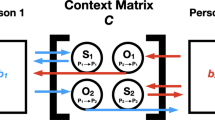

In subsequent sections, we demonstrate two applications of cross-recurrence quantification analysis (CRQA [7–10]), an extension of recurrence quantification analysis (RQA; [8, 11–14]), both of which are methods that are well-suited for the analysis of complex, irregular time series data. Importantly, CRQA is especially well-suited to capture the coupling between the time-evolving dynamics of noisy, non-stationary time series data. While there are several sophisticated time series methods that may be used to analyze interpersonal coordination, including wavelet analysis [15, 16] and cross-spectral coherence [17], CRQA methods are particularly useful for studying postural sway dynamics, which are notoriously irregular and non-stationary (e.g., [11]), and these methods have proven invaluable for quantifying interpersonal coordination across a range of contexts (see [18] for a recent review). In the present work, we therefore utilize CRQA to quantify interpersonal postural coordination.

Previous research using CRQA to quantify interpersonal coordination has shown that spontaneous coordination of postural sway—the continuous, low-amplitude, and complex pattern of fluctuation of the position of the body’s center of mass—arises when two people engage in cooperative conversation [19–21]. Shockley et al. [19] developed an experimental paradigm that involves tracking participants’ postural sway while they converse to jointly solve a find-the-differences puzzle, a task in which each participant views a picture that is similar to that viewed by the other, except for a few subtle differences which they are asked to find via conversation. When participants converse with each other to solve the task, their postural sway becomes coordinated, regardless of whether they can see each other. This postural coordination emerges from constraints imposed by the cooperative nature of the tasks which go beyond purely biomechanical constraints resulting from coordinated speech patterns [22]. In other words, postural coordination seems to reflect cognitive coordination and the intention of the participants to work together to solve the puzzle [23]. This latter idea is complemented by findings suggesting that motor behavior is causally related to the evolution of cognitive trajectories [24, 25]. Given this understanding, evaluating changes in interpersonal movement coordination may prove to be a means of detecting otherwise covert variations in cognitive alignment between conversing individuals.

The present chapter reports two experiments that used CRQA to quantify how interpersonal coordination between conversing dyads changed in response to manipulations of certain coupling parameters. A variety of factors might serve to couple the activities of two conversing individuals. In Study 1, we focused on perceptual factors that might be involved in coupling two conversants’ activities using a relatively simple virtual-reality (VR) environment. Study 2 focused on factors that might play a role in coupling when individuals are mechanically linked to each other via the support surface.

In Study 1, we implemented the find-the-differences task of Shockley et al. [19] to determine whether interpersonal coordination occurs when individuals interact in a VR environment. It has been shown that direct visual access to a conversational partner may not be a necessary condition for emergent interpersonal coordination [19], though several other studies investigating rhythmic interpersonal coordination have shown that visual coupling parameters are important for interpersonal coordination [16, 26]. The role of visual coupling in interpersonal contexts therefore requires further investigation. Moreover, Study 1 is important for methodological reasons: if interpersonal coupling in a VR setting is equivalent to that in a real environment, then the powerful tools of VR can be implemented to study interpersonal coordination. This is important because VR allows for manipulations that may not be possible or easy to achieve in a real environment, such as manipulating one person’s movements artificially to attempt to enhance or disrupt interpersonal coordination.

In Study 2, individuals performed the same find-the-differences task while coupled mechanically (they stood on the same balance board) or not (they stood on individual balance boards). It has been shown that standing on unstable support surfaces adversely affects the stability of postural sway [27], measures of interpersonal coordination [21], and performance on cognitive tasks [28]. It is thus important to determine how biomechanical factors influence interpersonal coordination and, thus, effective communication.

2 Study One: The Influence of Informational Coupling on Interpersonal Coordination

This experiment quantified interpersonal coordination between participants who interacted in a virtual-reality (VR) environment while performing find-the-differences tasks. Our experimental design partly followed that of Shockley et al. [19] by investigating the effects on interpersonal coordination of task-partner, crossed with the additional factor of task environment. Participants performed the find-the-differences task either with each other, or with confederate task partners, but while in the presence of the other participant and his or her task partner (interpersonal coordination was only assessed between the two participants). Following Shockley et al., interpersonal coordination was measured in this study via CRQA of postural sway. We also directly compared interpersonal coordination in VR with interpersonal coordination in the real world to determine if the overall amount of coordination, the temporal structure of the coordination, and the overall stability of the coordination were of similar magnitudes in VR and the real world.

With respect to task environment, we predicted that we would find the same patterns of coordination in both the real world and VR conditions, and that these patterns would be comparable to those that were found in the original investigation by Shockley et al. [19]. This prediction was motivated, in part, by the finding in Shockley et al. that participants did not have to see each other to coordinate with each other. Regarding the task-partner manipulation, we predicted (again following Shockley et al.) that coordination would be higher within pairs when they were discussing the pictures together rather than with confederate partners.

2.1 Method

Twenty-eight participants (14 pairs), recruited from the University of Cincinnati Psychology Department Participation Pool, took part in this IRB-approved study after giving written consent to participate. Participants received course credit in return for their participation. All participants were screened to ensure the absence of neurological and movement disorders.

Motion data were obtained using an Optotrak Certus system (Northern Digital Industries, Waterloo, Ontario, Canada) with a sampling rate of 30 Hz. Participants wore spandex bodysuits with 34 motion-tracking markers affixed to their legs, arms, and torso. They also wore running gloves with motion tracking markers affixed to the dorsal sides of the gloves. This full-body motion was projected onto the movements of virtual avatars in a custom OpenGL program. Participants wore Vissette 45 head-mounted displays (Cybermind, Maastricht, the Netherlands) with a resolution of 1,280 \(\,\times \,\) 1,024 pixels and a transparent visor. In the VR conditions, the displays were turned on and an occluding cover was placed over the transparent visor, while in the real-world conditions the displays were turned off and the occluding covers removed.

During their conversational tasks, participants stood 195 cm apart from each other with their heels approximately shoulder-width apart, and were allowed to move their upper bodies freely. The pictures on a given trial were identical, except for 10 differences in each set. Participants were asked to find the differences by discussing the details of their pictures with one another or with their confederate partners. Different picture sets were employed for each trial. The order of picture presentation was completely randomized, with the exception of the very first picture, which was the same practice picture for all pairs.

In the real-world condition, the pictures were attached to wooden stands to the immediate left of each participant at approximately eye-level. Participants looked at the picture to the side of their partner and could not see their partner’s picture on their own left. In the VR condition, the pictures were in the same relative location, but were hi-definition digital copies of the real-world pictures (color bitmap images measuring 960 \(\,\times \,\) 720 pixels). During the task, pairs were instructed to say “That’s a difference” whenever they discovered, or thought they discovered, a difference. To index task performance, researchers kept track of the conversation in real time and verified the pair’s self-reported performance against picture puzzles identical to those being discussed.

The environment manipulation was crossed with a task-partner manipulation, in which participants either solved the puzzles with each other or with a confederate partner. In these conditions, each confederate stood to the side of their respective partner, out of the direct line of sight of either of the participants. Confederates were instructed to record differences found and to attempt to perform as naïve participants during the trials by engaging in each conversation as if they had never seen a given picture before. The purpose of the confederate condition was to serve as a de facto control condition, in that participants were performing the same basic task but were not interacting with each other. Trials were blocked by condition and the blocks were randomized. There were four trials in each condition, plus one practice trial, yielding 17 trials per pair, each lasting 130 s.

Measures of anterior-posterior (AP) movements from the torso (the lower back) and from the front of the head were analyzed. The phase space of each time series was reconstructed using the method of delays [29] by unfolding the recorded time series into a 7-dimensional phase space using a time delay of 62 samples. For CRQA, the radius was set to 27 % of the mean distance separating points in the phase space; any pair of points (one from each participant’s trajectory) falling within this radius was considered a recurrent point (i.e., a shared postural configuration).
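The reconstruction and radius rule described above can be illustrated with a minimal sketch. It is not the authors' analysis code: the function names `delay_embed` and `cross_recurrence_matrix` are hypothetical, the Euclidean norm is an assumption, and so is taking the "mean distance" over all cross-distances between the two embedded trajectories. Whether the series were rescaled before embedding is not stated in the method, so no rescaling is applied here.

```python
import numpy as np

def delay_embed(x, dim, delay):
    """Unfold a 1-D series into dim-dimensional delay vectors (method of delays)."""
    x = np.asarray(x, dtype=float)
    n_vectors = len(x) - (dim - 1) * delay
    if n_vectors <= 0:
        raise ValueError("series too short for this dim and delay")
    # Column k holds the series shifted forward by k * delay samples.
    return np.column_stack(
        [x[k * delay : k * delay + n_vectors] for k in range(dim)]
    )

def cross_recurrence_matrix(x, y, dim=7, delay=62, radius_pct=27.0):
    """Boolean matrix R where R[i, j] is True when embedded point i of x
    lies within the radius of embedded point j of y."""
    ex = delay_embed(x, dim, delay)
    ey = delay_embed(y, dim, delay)
    # Accumulate squared differences one coordinate at a time to limit memory.
    sq = np.zeros((len(ex), len(ey)))
    for k in range(dim):
        sq += (ex[:, k][:, None] - ey[:, k][None, :]) ** 2
    dist = np.sqrt(sq)  # Euclidean distance (assumed norm)
    # Radius as a percentage of the mean inter-point distance (assumed to be
    # the mean over all cross-distances).
    threshold = (radius_pct / 100.0) * dist.mean()
    return dist <= threshold

# Illustrative use on synthetic data with small parameters.
t = np.linspace(0, 20, 300)
R = cross_recurrence_matrix(np.sin(t), np.sin(t + 0.3), dim=3, delay=5)
```

With the reported parameters, a series of N samples yields N − 6 × 62 = N − 372 embedded points per participant, so the recurrence matrix for a full trial is several thousand rows by several thousand columns.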

Prior to CRQA, the first and last 5 s of data were truncated to remove transients. Data were then analyzed for anomalies that sometimes occur with optical motion tracking systems, which appear as very large amplitude spikes of short duration. To do this, a 14 Hz, 2nd-order, high-pass Butterworth filter was applied to the data, and deviations between the filtered signal and the data that were determined to be outliers via Grubbs’ outlier test were removed from the original signal. Time series were then analyzed for amount of missing data, and any trial with greater than 40 contiguous missing samples was removed from the analysis. Any missing data points in retained observations were then interpolated with a cubic spline and the signal was then filtered with a 14 Hz, 2nd-order, high-pass Butterworth filter. The marker at the torso location resulted in a large amount of lost data due to its relative positioning with respect to the camera configuration, resulting in a decreased sample for that location compared to the head.
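Two of the screening steps above, trimming transients and rejecting trials with long gaps, are simple enough to sketch. The sketch below assumes missing samples are coded as NaN, which the method does not specify, and the function names are hypothetical. The spike detection, spline interpolation, and filtering steps are deliberately left out.

```python
import numpy as np

def trim_transients(x, fs=30, seconds=5):
    """Drop the first and last `seconds` of a series sampled at `fs` Hz."""
    x = np.asarray(x, dtype=float)
    n = int(round(fs * seconds))
    return x[n : len(x) - n]

def longest_missing_run(x):
    """Length of the longest run of consecutive missing (NaN) samples."""
    missing = np.isnan(np.asarray(x, dtype=float))
    longest = current = 0
    for is_missing in missing:
        current = current + 1 if is_missing else 0
        longest = max(longest, current)
    return longest

def keep_trial(x, max_gap=40):
    """A trial is retained unless it has more than `max_gap` contiguous
    missing samples (NaN coding is an assumption)."""
    return longest_missing_run(x) <= max_gap

# Illustrative use: a 130 s trial at 30 Hz with a 41-sample dropout.
trial = np.random.default_rng(0).normal(size=130 * 30)
trial[1000:1041] = np.nan
trimmed = trim_transients(trial)   # 120 s of data remain
retained = keep_trial(trimmed)     # False: the gap exceeds 40 samples
```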

Of the dependent measures that are available using CRQA, three were used: percent recurrence (%REC), percent determinism (%DET), and maximum diagonal line length (LMAX). %REC indexes how often the two time series visit coinciding regions in their phase spaces (recurrent points expressed as a percentage of all possible point pairs), and in the context of human movement coordination has been shown to be a measure of global coordination that does not take into account the temporal patterning of the recurrent points [30, 31]. %DET is the percentage of recurrent points that are located along diagonal lines, defined in the reported analyses as two contiguous recurrent points. This measure indexes the probability that a given recurrent point forms part of a recurring series, which gives insight into the structure of the coordination, since single chance recurrences lower %DET. LMAX is the length of the longest diagonal line in the recurrence matrix (literally, how long the two time series can maintain a common pattern) and is thus a measure of the stability of the observed coordination.
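To make the three measures concrete, the following sketch computes them from a boolean cross-recurrence matrix. It is an illustration under stated assumptions rather than the software used in the study: the function names are hypothetical, a diagonal line is taken to be a run of at least `min_line` consecutive recurrent points along any diagonal (including the main one), and %DET is computed as the share of recurrent points that fall on such lines.

```python
import numpy as np

def diagonal_line_lengths(R):
    """Lengths of all runs of consecutive recurrent points on every diagonal."""
    R = np.asarray(R, dtype=bool)
    rows, cols = R.shape
    lengths = []
    for offset in range(-(rows - 1), cols):
        run = 0
        for value in np.diagonal(R, offset=offset):
            if value:
                run += 1
            else:
                if run:
                    lengths.append(run)
                run = 0
        if run:  # a run that reaches the end of the diagonal
            lengths.append(run)
    return np.array(lengths, dtype=int)

def crqa_measures(R, min_line=2):
    """Return %REC, %DET, and LMAX for a boolean cross-recurrence matrix."""
    R = np.asarray(R, dtype=bool)
    n_recurrent = int(R.sum())
    lengths = diagonal_line_lengths(R)
    lines = lengths[lengths >= min_line]  # runs long enough to count as lines
    rec = 100.0 * n_recurrent / R.size
    det = 100.0 * lines.sum() / n_recurrent if n_recurrent else 0.0
    lmax = int(lines.max()) if lines.size else 0
    return {"%REC": rec, "%DET": det, "LMAX": lmax}

# Toy matrix: one diagonal line of length 3 plus two isolated recurrences.
R_toy = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 1],
                  [0, 0, 1, 0],
                  [1, 0, 0, 0]], dtype=bool)
measures = crqa_measures(R_toy)
```

In the toy matrix, 5 of 16 cells are recurrent and 3 of those 5 lie on the single diagonal line, which shows how isolated chance recurrences lower %DET without affecting LMAX. The pure-Python loop is written for readability and would be slow on full-size matrices.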

2.2 Results

Results from CRQA and task performance were sorted by condition and analyzed for outliers at the trial level, with any observation that was an outlier for any variable being removed from all measures obtained for that trial (e.g., if an observation was an outlier in the %REC distribution for a given condition, then that trial was removed from %REC, %DET, LMAX and task performance analyses). Data from each pair were then averaged over trials in each condition, yielding one observation per pair per condition. These reduced data were again assessed for outliers, with any observation that was an outlier for a given variable being removed for all variables, removing that pair from subsequent analyses. Data were then submitted to separate two-way within-subjects analyses of variance (ANOVAs) with environment (real or VR) and task-partner (confederate or participant) as factors.

Task performance data are presented in Table 1. There were no significant main effects of environment or task partner on task performance, nor was there an interaction of environment and task partner on task performance (all p \(>\) 0.05).

The CRQA variables were affected by both the environment and task partner manipulations. At the torso, there was a main effect of task partner on %REC, F(1, 6) \(=\) 6.28, p \(=\) 0.046, %DET, F(1, 6) \(=\) 18.35, p \(=\) 0.005, and LMAX, F(1, 6) \(=\) 7.51, p \(=\) 0.034, with all three being higher when participants were talking with each other than when they were talking with the confederates (see Fig. 1; an example CRQ plot is shown in Fig. 2).

With respect to head movement, we found that being immersed in the virtual environment rather than completing the task in the real world resulted in increased %REC, F(1, 11) \(=\) 10.12, p \(=\) 0.009, %DET, F(1, 11) \(=\) 14.61, p \(=\) 0.003, and LMAX, F(1, 11) \(=\) 11.96, p \(=\) 0.005, indicating that head coordination patterns were altered by VR immersion in a way that increased the overall similarity of the two participants’ head movements as well as the shared structure and pattern stability of head movements within interacting pairs. There was also a main effect of task-partner on LMAX measured at the head, F(1, 11) \(=\) 8.27, p \(=\) 0.015: the stability of coordination patterns between participants was higher when they were talking to each other than when they were talking to the confederates.

There was also an interaction between environment and task partner on LMAX measured at both the torso, F(1, 6) \(=\) 7.89, p \(=\) 0.031, and the head, F(1, 11) \(=\) 5.53, p \(=\) 0.038. Simple-effects analyses found no differences in LMAX of head or torso coordination when participants were talking with each other or with confederates in the real world condition (ps \(>\) 0.05), but coordination pattern stability was greater when participants were talking to each other than when talking with confederates in the VR condition for both the head, p \(=\) 0.027, and the torso, p \(=\) 0.014.

2.3 Discussion of Study One

Similar to the original findings of Shockley et al. [19], we found a main effect of task partner on %REC measured at the torso, meaning that participants shared more postural configurations when they were discussing the pictures as a pair rather than when they were separately discussing the pictures with confederate partners. We also found that task partner affected %DET and LMAX in the same manner on measurements at the torso. Thus, in line with our hypothesis, we found evidence of the influence of informational coupling via verbal communication on movement patterns using CRQA measures. This suggests that VR settings are sufficient to support interpersonal postural coordination, and, apparently, to support interpersonal cognitive coordination, given that performance of the joint find-the-differences task was equivalent across the VR and real-world conditions. This finding may have many important practical implications for using VR technologies for collaborative work and training.

Unexpectedly, we also found that when pairs were working in the VR condition their head movements exhibited greater similarity and more shared patterns than when they performed the task in the real world condition. This finding complements findings of Stoffregen et al. [20], in which participants performed the same find-the-differences task, but under different visual constraints. Those authors evaluated the influence of target distance and target size on postural coordination crossed with the same task-partner manipulation reported here and in Shockley et al. [19]. They found that when participants were discussing closer targets or larger targets they exhibited greater shared head configurations than when they discussed targets that were farther away or smaller. Their findings, along with the present findings, show the sensitivity of interpersonal coordination to visual constraints. Specifically, the effect of the environment manipulation was more pronounced at the head, where it affected all three CRQA variables in the same direction, with enhanced coordination in the VR condition.

Additionally, we found interactions between task-partner and environment at both the head and the torso, in that when pairs discussed their pictures with each other in the VR they had a greater amount of coordination stability compared to when they conversed with confederates, but this did not hold in the real-world condition. One interpretation of these findings may be that the relatively sparse nature of the virtual environment allowed the individuals to focus more intently on the task, thereby strengthening the stability of coordination that governed the interaction. However, prior research has also shown a differential influence of virtual and real-world conditions on visual entrainment [32], indicating that more research in this area is required. It is possible that these differences are attributable to different demands for ocular convergence and accommodation—objects in VR headsets that are projected at different distances require variations in convergence but mostly invariant accommodation, which can lead to discomfort and salient differences between VR displays and real environments [33]. However, in the current study, both the avatar and pictures were displayed at a constant distance in the VR, which likely diminishes this concern.

3 Study Two: The Influence of Mechanical Coupling on Interpersonal Coordination and Communication

Shockley et al. [23] hypothesized that postural coordination during cooperative conversations may reflect the functional organization that supports the joint goals of individuals engaged in the conversation. In other words, the postural coordination during conversation may embody coordination of cognitive process in communication. This embodiment thesis implies the mutual influence of body and cognition: Cognition or emotion alters bodily processes, and the bodily processes can also bring about change in cognition or emotion [34]. In particular, bodily coordination is not just a by-product of cognitive processes of communication, but an integral aspect of cognition that unfolds across different scales [35]. Hence, an alteration of interpersonal postural coordination should in turn influence the linguistic coordination that occurs during conversation, which in turn may influence the effectiveness of communication.

In the present study, we manipulated parameters affecting postural coordination by requiring participants to stand and balance on balance boards (long wooden boards secured atop circular dowels running along their length) while engaging in the find-the-differences conversational task described in Study 1. During half of the trials, both participants balanced on the same balance board (coupled condition). In the other half of the trials, participants balanced on separate balance boards (uncoupled condition; see Fig. 3).

Fig. 3 An overview of the method used in Study 2. (a) The positions of participants in the coupled and uncoupled conditions. An opaque curtain was hung from the ceiling to prevent participants from seeing each other’s picture, and small pieces of foam were attached to each corner of the balance boards to dampen the rocking motion. (b) A pair of puzzle stimuli used in the experiment. Participants discussed their respective pictures in order to find differences while their movements and task performance were recorded.

The goal was to investigate how mechanical coupling would influence postural coordination and, ultimately, communication. During all trials, participants were able to become informationally coupled through conversation, and during half of the trials they were coupled mechanically via a shared balance board. We predicted this physical coupling should increase postural coordination above levels brought about by the informational coupling established by verbal communication alone. According to the functional organization thesis outlined above, shared postural configurations should facilitate better communication and, hence, task performance. We thus predicted that when CRQA measures were higher (i.e., greater coordination), the pairs would find more differences (i.e., communication would be more effective).

3.1 Method

Thirty-four participants (17 pairs) performed the same find-the-differences task described in Study 1. This study employed a repeated-measures design, in which individuals stood on either the same or separate balance boards, depending upon the coupling condition. Each board was made of a 60.96\(\,\times \,\)243.84\(\,\times \,\)1.90 cm flat plywood sheet. Two 121.92 cm length dowels with 5.08 cm diameter were secured underneath the boards along their major axes in order to create a pivot point. Four 5 \(\times \) 7 \(\times \) 10.5 cm pieces of foam were attached underneath four corners of the board with the 5 \(\,\times \,\) 7 cm surface attached to the board. These foam pieces damped the rocking motion of the board and simplified the balancing task. Thin carpeting was secured to the top of the surface of each board to protect participants from splinters and from slipping. A picture stand for holding the find-the-differences task stimuli was placed 195 cm in front of each participant at approximately eye-level (155 cm from the floor). A black opaque curtain was hung between the two participants spanning from the board to the picture stands in order to prevent pairs from seeing both pictures, though they could still see each other in their peripheral vision. Participants were asked to actively balance the board throughout each trial, and were discouraged from simply resting their weight at a fully tilted position.

The experimental sessions consisted of a single practice trial and two blocks of four trials each of the coupled and uncoupled conditions. Each trial lasted for three minutes. The order of the blocks was randomized. In the practice trial, the same puzzle was used for all pairs. In the remaining eight experimental trials, the order of the puzzles was randomized. In the practice trial, the dyads stood on the same balance board, each facing in the same direction, with their AP axes orthogonal to the major axis of the board, meaning that postural sway was destabilized primarily in the AP direction. Participants were positioned such that one participant stood approximately 60 cm from the left edge and the other stood the same distance from the right edge. The coupled condition was identical to the practice session, except that new puzzles were used. In the uncoupled condition, participants stood on two different balance boards, which were aligned along their major axes and positioned with their minor axes adjacent. During these trials, each participant stood about a quarter of the board length from the adjacent ends of the two boards. Hence, they were standing at the same relative distance from one another as they were in the coupled condition.

The movements of the participants’ heads and torsos were tracked at a 50 Hz sampling rate with the same motion tracking system described in Study 1. Task performance was also indexed in the same manner as in Study 1. Each sensor was attached to the back of the participants’ heads and torsos using elastic Velcro belts and headbands. The time series data for analyses were taken from the AP movement of the head and torso. These data were prepared in the same manner as in Study 1, and then submitted to CRQA using a delay of 103 samples, an embedding dimension of 7, and a radius of 27 % of the mean distance separating points in reconstructed space.
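Because the two studies report different sampling rates (30 Hz in Study 1, 50 Hz in Study 2), the embedding delays are easier to compare when converted from samples to seconds. The conversion below is only arithmetic on the reported values; reading it as evidence that the delays were chosen to match in time is an inference, not something the method states.

\[
\tau_{1} = \frac{62\ \text{samples}}{30\ \text{Hz}} \approx 2.07\ \text{s},
\qquad
\tau_{2} = \frac{103\ \text{samples}}{50\ \text{Hz}} = 2.06\ \text{s}
\]

With an embedding dimension of 7, each reconstructed point therefore spans \((7-1)\,\tau \approx 12.4\) s of movement in both studies.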

3.2 Results

A log transformation was applied to %REC and LMAX to correct positively skewed data. Extreme outlier values of CRQA in each condition were detected and removed via Tukey’s method with a three interquartile range threshold. A linear mixed-effects model was employed to analyze the obtained measures [36], with conditions and trial numbers included as repeated-measure factors with an unstructured covariance structure. Additionally, CRQA measures and trial numbers (to control for potential learning effects) were used to predict task performance. These analyses were conducted separately for the coupled and uncoupled conditions. Only significant effects (p \(<\) 0.05) are reported.
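The transformation and outlier screening described above can be sketched as follows. This is a minimal illustration with several assumptions: the natural logarithm is used (the base is not reported), the fences are placed at Q1 − 3 × IQR and Q3 + 3 × IQR, quartiles follow NumPy's default interpolation, and the transform is applied before screening, following the order in which the steps are listed. The function names are hypothetical.

```python
import numpy as np

def tukey_extreme_mask(values, k=3.0):
    """True where a value lies more than k interquartile ranges
    below the first quartile or above the third quartile."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    return (values < q1 - k * iqr) | (values > q3 + k * iqr)

def prepare_measure(values, log_transform=False, k=3.0):
    """Optionally log-transform a CRQA measure, then drop extreme outliers."""
    values = np.asarray(values, dtype=float)
    if log_transform:
        values = np.log(values)  # natural log is an assumption
    return values[~tukey_extreme_mask(values, k)]

# Illustrative use with made-up numbers (not data from the study).
raw = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 100.0])
flags = tukey_extreme_mask(raw)
cleaned = prepare_measure(raw, log_transform=True)
```

In this sketch, %REC and LMAX would be passed with `log_transform=True` and %DET with the default, matching the measures the text says were transformed.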

Estimated marginal means for CRQA measures and task performance can be seen in Table 2. Analyses showed that increases in %REC of the two participants’ head movements positively predicted increases in performance in the coupled condition, t(15.83) \(=\) 6.59, p \(<\) 0.001. The relation between %DET for interpersonal head movement coordination and performance showed a similar pattern, where increases in %DET predicted increases in performance in the coupled condition, t(16.31) = 3.15, p \(=\) 0.006. Finally, LMAX for interpersonal head movement coordination also positively predicted performance in the coupled condition, t(21.60) \(=\) 3.46, p \(=\) 0.002.

3.3 Discussion of Study 2

Contrary to our predictions, CRQA measures of coordination between participants were not greater in the coupled versus the uncoupled condition. Nonetheless, the balance board was found to moderate the relation between coordinated head movements and the effectiveness of communication (operationalized as the number of differences found during the task). In the coupled condition, coordinated head movement exhibited a positive relation to the number of differences found, while no such relation was found in the uncoupled condition. It is important to note that this relation held for log-transformed values of %REC and LMAX, meaning that the relation was not strictly linear.

With respect to the lack of effect of coupling condition on coordination, we observe that when participants shared a board, they had to continuously compensate for each other’s movements. In other words, the mechanical link between two participants may have disrupted, rather than enhanced, coordination. This is consistent with comments from several participants who said that balancing on a shared board was more difficult than balancing independently on separate boards. This is also consistent with the findings of Stoffregen et al. [21], who found that when participants were standing on an unstable rather than a stable surface, the enhanced coordination that normally occurs during conversation was absent. Additionally, interpersonal coordination has been observed to become attenuated in difficult stance conditions [37]. These observations might suggest that interpersonal coordination is a fragile phenomenon. We note that, at some level, interpersonal postural coordination surely must depend on postural stability and coordination at the intra-personal level, which depends in part on mechanical support. However, it might not be the case that mechanical constraints necessarily override interpersonal constraints. Instead, a variety of sources of constraint, stemming from task demands, perceptual factors, biomechanical variables, and other sources, may interact to determine postural stability and coordination at the intrapersonal and interpersonal levels [38]. The nested relation between intrapersonal postural stability and interpersonal postural coordination is an important issue for future research.

Regarding the relation between movement coordination and task performance, one way to interpret the difference between balance board conditions is that when participants were mechanically coupled, they faced two simultaneous, shared task demands: balancing the board together and maintaining effective communication. Because effective communication required stable visual access to the target pictures, it is likely that the two tasks were functionally dependent. In this case, the positive relation between head movement coordination and task performance in the coupled condition may indicate that successful participant pairs were able to coordinate their postural movements in order to obtain the visual information necessary for ongoing problem solving during the conversational task.

4 Conclusion

The present experiments illustrate how CRQA contributes to a broader, robust methodology that can be used to study human interactions (see [18] for a recent review). The results of the two studies described show that manipulations of perceptual (i.e., informational) and mechanical coupling variables can influence the coordination observed between two conversing individuals, and that CRQA measures are sensitive to these changes.

With respect to perceptual coupling (Study 1), we demonstrated that visual constraints influence the coordination observed between two conversing individuals. In doing so, we also showed that virtual environments are suitable for evaluating the influence of visual information on interpersonal coordination and communication. This finding opens new possibilities for manipulating visual information beyond those offered in real-world contexts, which would allow finer evaluation of how visual constraints influence the movement coordination that occurs during communication. Moreover, the present results, considered along with the findings of Stoffregen et al. [20], may indicate that visual manipulations influence the coordinative structure that emerges during cooperative conversation. Specifically, both studies found that talking to a task partner enhanced interpersonal movement coordination, but only at the head (cf. Shockley et al. [19], who found enhanced coordination only at the waist). Given that gaze coordination has been shown to index joint attention (e.g., [39]), future studies may also be warranted to directly manipulate head coordination while tracking participants’ gaze patterns. This could serve to address why changes in head coordination did not influence communication in Study 1, in spite of the fact that previous studies have shown an influence of gaze coordination on effective communication [40, 41].

Finally, in Study 2, we demonstrated that manipulating movement coordination can impact effective communication, and we showed how measures obtained from CRQA are related to this outcome. This finding is consistent with Shockley et al.’s [23] suggestion that the movement coordination observed during conversation may embody the cognitive coordination required for effective communication, and implies that the relation between coordination and communication may be bidirectional. We suggest that this finding invites a more comprehensive investigation of how movement coordination (as indexed by CRQA and other methods) influences effective communication.

References

Condon, W.S., Ogston, W.D.: J. Psychiatr. Res. 5(3), 221 (1967). doi:10.1016/0022-3956(67)90004-0

Kendon, A.: Acta Psychol. 32, 101 (1970). doi:10.1016/0001-6918(70)90094-6

Bernieri, F.J.: J. Nonverbal Behav. 12(2), 120 (1988). doi:10.1007/BF00986930

LaFrance, M.: Soc. Psychol. Q. 42(1), 66 (1979). doi:10.2307/3033875

Riley, M.A., Turvey, M.T.: J. Mot. Behav. 34(2), 99 (2002). doi:10.1080/00222890209601934

Van Orden, G.C., Kloos, H., Wallot, S.: In: Philosophy of complex systems: handbook of the philosophy of science, pp. 639–682. Elsevier, Amsterdam (2011)

Marwan, N., Kurths, J.: Phys. Lett. A 302(5), 299–307 (2002). doi:10.1016/S0375-9601(02)01170-2

Marwan, N., Romano, M.C., Thiel, M., Kurths, J.: Phys. Rep. 438(5), 237 (2007). doi:10.1016/j.physrep.2006.11.001

Shockley, K., Butwill, M., Zbilut, J.P., Webber Jr, C.L.: Phys. Lett. A 305(1), 59 (2002). doi:10.1016/S0375-9601(02)01411-1

Zbilut, J.P., Giuliani, A., Webber Jr, C.L.: Phys. Lett. A 246(1), 122 (1998). doi:10.1016/S0375-9601(98)00457-5

Riley, M.A., Balasubramaniam, R., Turvey, M.T.: Gait Posture 9(1), 65 (1999). doi:10.1016/S0966-6362(98)00044-7

Webber Jr, C.L., Zbilut, J.P.: J. Appl. Physiol. 76(2), 965 (1994)

Webber Jr, C.L., Zbilut, J.P.: In: Bioengineering approaches to pulmonary physiology and medicine, pp. 137–148. Plenum Press, New York (1996)

Webber Jr, C.L., Zbilut, J.P.: In: Tutorials in contemporary nonlinear methods for the behavioral sciences, pp. 26–94. http://www.nsf.gov/sbe/bcs/pac/nmbs/nmbs.jsp (2005)

Issartel, J., Marin, L., Cadopi, M.: Neurosci. Lett. 411(3), 174 (2007). doi:10.1016/j.neulet.2006.09.086

Varlet, M., Marin, L., Lagarde, J., Bardy, B.G.: J. Exp. Psychol. Hum. Percept. Perform. 37(2), 473 (2011). doi:10.1037/a0020552

Schmidt, R.C., Morr, S., Fitzpatrick, P., Richardson, M.J.: J. Nonverbal Behav. 36(4), 263 (2012). doi:10.1007/s10919-012-0138-5

Shockley, K., Riley, M.A.: In: Recurrence quantification analysis: theory and best practices. Springer, New York (in press)

Shockley, K., Santana, M.V., Fowler, C.A.: J. Exp. Psychol. Hum. Percept. Perform. 29(2), 326 (2003). doi:10.1037/0096-1523.29.2.326

Stoffregen, T.A., Giveans, M.R., Villard, S.J., Shockley, K.: Ecol. Psychol. 25(2), 103 (2013). doi:10.1080/10407413.2013.753806

Stoffregen, T.A., Giveans, M.R., Villard, S., Yank, J.R., Shockley, K.: Mot. Control 13(4), 471 (2009)

Shockley, K., Baker, A.A., Richardson, M.J., Fowler, C.A.: J. Exp. Psychol. Hum. Percept. Perform. 33(1), 201 (2007). doi:10.1037/0096-1523.33.1.201

Shockley, K., Richardson, D.C., Dale, R.: Top. Cogn. Sci. 1(2), 305–319 (2009). doi:10.1111/j.1756-8765.2009.01021.x

Spivey, M.J., Grosjean, M., Knoblich, G.: Proc. Nat. Acad. Sci. U.S.A. 102(29), 10393 (2005). doi:10.1073/pnas.0503903102

McKinstry, C., Dale, R., Spivey, M.J.: Psychol. Sci. 19(1), 22 (2008). doi:10.1111/j.1467-9280.2008.02041.x

Richardson, M.J., Marsh, K.L., Isenhower, R.W., Goodman, J.R., Schmidt, R.C.: Hum. Mov. Sci. 26(6), 867 (2007). doi:10.1016/j.humov.2007.07.002

Schmit, J.M., Regis, D.I., Riley, M.A.: Exp. Brain Res. 163(3), 370 (2005). doi:10.1007/s00221-004-2185-6

Woollacott, M., Shumway-Cook, A.: Gait Posture 16(1), 1 (2002). doi:10.1016/S0966-6362(01)00156-4

Abarbanel, H.D.I.: Analysis of observed chaotic data. Springer, New York (1996)

Pellecchia, G.L., Shockley, K.: In: Tutorials in contemporary nonlinear methods for the behavioral sciences, pp. 95–141. http://www.nsf.gov/sbe/bcs/pac/nmbs/nmbs.jsp (2005)

Richardson, M.J., Schmidt, R.C., Kay, B.A.: Biol. Cybern. 96(1), 59 (2007). doi:10.1007/s00422-006-0104-6

Stoffregen, T.A., Bardy, B.G., Merhi, O.A., Oullier, O.: Presence: Teleoperators Virtual Environments 13(5), 601 (2004). doi:10.1162/1054746042545274

Edgar, G.K., Bex, P.J.: In: Simulated and virtual realities: elements of perception, pp. 85–102. Taylor & Francis, Bristol (1995)

Niedenthal, P.M.: Science 316(5827), 1002 (2007). doi:10.1126/science.1136930

Van Orden, G.C., Hollis, G., Wallot, S.: Front. Physiol. 3(207), 1 (2012). doi:10.3389/fphys.2012.00207

Baayen, R.H., Davidson, D.J., Bates, D.M.: J. Mem. Lang. 59, 390 (2008). doi:10.1016/j.jml.2007.12.005

Ramenzoni, V.C., Davis, T.J., Riley, M.A., Shockley, K., Baker, A.A.: Exp. Brain Res. 211(3–4), 447 (2011). doi:10.1007/s00221-011-2653-8

Riley, M.A., Kuznetsov, N., Bonnette, S.: Sci. Motricité 74, 518 (2011). doi:10.1051/sm/2011117

Grant, E.R., Spivey, M.J.: Psychol. Sci. 14(5), 462 (2003)

Richardson, D.C., Dale, R.: Cogn. Sci. 29(6), 1045 (2005). doi:10.1207/s15516709cog0000_29

Richardson, D.C., Dale, R., Kirkham, N.Z.: Psychol. Sci. 18, 407 (2007). doi:10.1111/j.1467-9280.2007.01914.x

Acknowledgments

These studies were sponsored by NSF grant BCS-0926662.

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Tolston, M., Ariyabuddhiphongs, K., Riley, M.A., Shockley, K. (2014). Cross-Recurrence Quantification Analysis of the Influence of Coupling Constraints on Interpersonal Coordination and Communication. In: Marwan, N., Riley, M., Giuliani, A., Webber, Jr., C. (eds) Translational Recurrences. Springer Proceedings in Mathematics & Statistics, vol 103. Springer, Cham. https://doi.org/10.1007/978-3-319-09531-8_10

DOI: https://doi.org/10.1007/978-3-319-09531-8_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-09530-1

Online ISBN: 978-3-319-09531-8

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)