Abstract

A fully algebraic framework for constructing coarse spaces for multilevel preconditioning techniques is proposed. An issue of multilevel techniques is their application to linear system encountered in industrial applications which can be derived from non-elliptic PDEs. Drawing our inspiration from the Aitken-SVD methodology, dedicated to Schwarz methods, we proposed to construct an approximation space by computing the Singular Value Decomposition of a set of iterated solutions of the Richardson process associated to a given preconditioner.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

We focus on the solution of a general linear system Au = f by a Krylov type iterative method, where \(A \in \mathbb{R}^{m\times m}\) is non-singular, \(u,\,f \in \mathbb{R}^{m}\). The major drawback of the GCR (Generalized Conjugate Residual) [6] and the GMRES (General Minimum Residual) [7] methods is their convergence rate that depends on the conditioning number \(\kappa (A) =\| A\|\;\|A^{-1}\|\).

The convergence rate of these techniques decreases while κ increases and the use of such methods needs preconditioning. In the following we consider left preconditioning. The goal is to solve \(M^{-1}\mathit{Au} = M^{-1}f\) with M −1 such that κ(M −1 A) ≪ κ(A).

Preconditioning can be enhanced by multilevel techniques. Multilevel techniques are known to be robust for scalar elliptic Partial Differential Equations with standard discretization and to enhance the scalability of domain decomposition method such as Restricted Additive Schwarz preconditioning techniques. An issue is their application to linear system encountered in industrial applications which can be derived from non-elliptic PDEs. Moreover, the building of coarse levels algebraically becomes an issue since the only known information is contained in the operator to inverse.

One can consider a coarse space as a space to represent an approximated solution of a smaller dimension than the leading dimension of the system. It is possible to build a coarse level based on a coarse representation of the solution. Drawing our inspiration from the Aitken-SVD methodology [8] dedicated to Schwarz methods, we proposed to construct an approximation space by computing the Singular Value Decomposition of a set of iterated solutions of the Richardson process associated to a given preconditioner.

From a preconditioner M −1 associated to a Richardson process:

We propose to build a two-level additive preconditioner \(M_{2L}^{-1}\):

where for a basis \(\mathbb{U}_{q} \in \mathbb{R}^{m\times q}\), \(M_{c}^{-1} = \mathbb{U}_{q}(\mathbb{U}_{q}^{T}A\mathbb{U}_{q})^{-1}\mathbb{U}_{q}^{T}\).

The plan of the paper is the following. Section 2 describes the methodology to compute an algebraic coarse level from successive iterations of a Richardson process. Numerical investigations with the RAS preconditioner built for real non-symmetric indefinite operator, are performed in Sect. 3. Section 4 concludes the study.

2 Methodology

The idea is to compute a coarse representation of the solution. In [8] a fully algebraic computation of a coarse space is proposed to perform an Aitken acceleration of vectorial sequence generated with an iterative domain decomposition method. In [5] Aitken-SVD Schwarz algorithms were derived for the Aitken Restricted Additive Schwarz preconditioning technique [4].

The choice of constructing the coarse space with the SVD is based on the following properties. Let \(G \in \mathbb{R}^{m\times l}\). Assume that the values σ k , 1 ≤ k ≤ l are ordered in decreasing order and there exists a q such that σ q > 0 while \(\sigma _{q} + 1 = 0\). Then G can be decomposed in a dyadic decomposition:

This means that SVD provides a way to find optimal lower dimensional approximations of a given series of data. More precisely, it produces an orthonormal base for representing the data series in a certain least squares optimal sense. This can be summarized by the theorem of Schmidt-Eckart-Young-Mirsky:

Theorem 1.

A non-unique minimizer X ∗ of the problem \(\min _{X,\mathit{rank}X=q}\|G - X\|_{2} =\sigma _{q+1}(G)\) , provided that σ q > σ q+1 , is obtained by truncating the dyadic decomposition of 3 to contain the first q terms: \(X_{{\ast}} =\sigma _{1}U_{1}V _{1}^{{\ast}} +\sigma _{2}U_{2}V _{2}^{{\ast}} +\ldots +\sigma _{q}U_{q}V _{q}^{{\ast}}\)

Moreover, the SVD of a matrix is well-conditioned with respect to perturbations of its entries. Consider the matrix \(G,B \in \mathbb{R}^{m\times l}\), the Fan inequalities write \(\sigma _{q+s+1}(G + B) \leq \sigma _{q+1}(G) +\sigma _{s+1}(B)\) with \(q,s \geq 0,\,q + s + 1 \leq l\). Considering the perturbation matrix E such that | | E | | = O(ε), then \(\vert \sigma _{k}(G + E) -\sigma _{k}(G)\vert \leq \sigma _{1}(E) =\| E\|_{2},\,\forall k\). This property does not hold for eigenvalues decomposition where small perturbations in the matrix entries can cause a large change in the eigenvalues.

These properties allow us to search an approximation of the solution in the base linked to the SVD of a sequence of vectors obtained by iterating a linearly convergent iterative process.

Here, we propose a general framework which enables to compute algebraically a two-level additive preconditioner from any preconditioner that can be used in a Richardson iterative process. Algorithm 1 shows the steps to compute \(M_{2L}^{-1}\) that way. In step 1, we compute the SVD of l successive iterations stored in a matrix \(G \in \mathbb{R}^{m\times l}\) of a Richardson process (1) having a linear convergence, i.e. we compute a dyadic decomposition of G, as \(G = \mathbb{U}_{l}\varSigma _{l}\mathbb{V}_{l}^{T}\), with \(\mathbb{U}_{l} \in \mathbb{R}^{m\times l}\) \(\varSigma _{l} \in \mathbb{R}^{l\times l}\) and \(\mathbb{V}_{l} \in \mathbb{R}^{m\times l}\). In step 2, \(\mathbb{U}_{q}\) is made of the first q columns of \(\mathbb{U}_{l}\) with respect to the decreasing of the singular values Σ i, i , such that \(\mathbb{U}_{q}\) is full rank. This selection is done according to Theorem 1 where \(X_{q} \in \mathbb{R}^{m\times q}\) is a non-unique minimizer of the problem \(\min _{X,\mathit{rank}X=q}\|G - X\|_{2} =\sigma _{q+1}(G)\), such that \(X_{q} = \mathbb{U}_{q}\varSigma _{q}\mathbb{V}_{q}^{T}\) and \(\mathit{rk}(\mathbb{U}_{q}) = q\), with \(\mathbb{U}_{q} \in \mathbb{R}^{m\times q}\), \(\varSigma _{q} \in \mathbb{R}^{q\times q}\) and \(\mathbb{V}_{q} \in \mathbb{R}^{m\times q}\). Once this basis of the coarse space is defined, one can compute the coarse operator (step 3) and solve the coarse problem (step 4).

Algorithm 1 Computation of \(M_{2L}^{-1}\) with SVD of solutions of a Richardson process

Require: \((u^{k})_{0\leq k\leq l-1}\), l successive iterates satisfying \(u^{k+1} - u^{\infty } = \left (I -\alpha M^{-1}A\right )\left (u^{k} - u^{\infty }\right )\) starting from any initial guess u 0

1: Compute the Singular Value Decomposition of the snapshots \(G = \left [u^{0},\ldots,u^{l-1}\right ] = \mathbb{U}_{l}\varSigma _{l}\mathbb{V}_{l}^{T}\)

2: Set the index q such that \(q =\max _{0\leq i\leq l-1}\{\varSigma (i,i) > \mathit{tol}\}\), to define the full rank matrix \(\mathbb{U}_{q} = \left [U_{0},\,U_{1},\,\ldots,\,U_{q}\right ]\)

{ex.: \(\mathit{tol} = 10^{-12}\).}

3: Define the coarse operator \(A_{c} \in \mathbb{R}^{q\times q}\) such that \(A_{c} = \mathbb{U}_{q}^{T}A\mathbb{U}_{q}\)

4: Define the two-level additive preconditioner \(M_{2L}^{-1} = M^{-1} + \mathbb{U}_{q}A_{c}^{-1}\mathbb{U}_{q}^{T}\)

It is possible to see this approach as a way to approximate a Krylov subspace. Basically, the solution of the linear system Au = f defined in Sect. 1 consists on minimizing \(F(u^{k}) = \left (f -\mathit{Au}^{k},f -\mathit{Au}^{k}\right )\) on a Krylov space \(K_{l}\left (A,r^{0}\right ) = \{r^{0},\,\mathit{Ar}^{0},\,\ldots,\,A^{l-1}r^{0}\} = \{d^{0},\,\ldots,\,d^{l-1}\}\), where from an arbitrary initial solution \(u^{0} \in \mathbb{R}^{m}\), \(r^{0} = f -\mathit{Au}^{0}\).

Let choose u 0 = 0. Each iterate u k of the Richardson process can be written in a Krylov subspace:

Following Algorithm 1, we can write that

Then the solution of the coarse problem is an approximation of a solution in \(\mathit{span}(K_{l}(M^{-1}A,M^{-1}f))\).

This link enable us to choose a good initial guess for the Krylov method preconditioned by this two-level preconditioning approach by computing the solution \(u_{c} \in \mathbb{R}^{q}\) of the coarse linear system: \(A_{c}u_{c} = f_{c},\mbox{ with }f_{c} = \mathbb{U}_{q}f\).

Then we can set the initial guess for the Krylov method such that,

3 Numerical Experiments

In this section we propose to apply the methodology for a RAS preconditioner for the solution of CFD problems. The considered matrices A are real, non-symmetric, indefinite and possibly not positive.

The Additive Schwarz (AS) preconditioning is built from the adjacency graph G = (W, E) of A, where \(W = \{1,2,\ldots,m\}\) and \(E = \{(i,j): a_{\mathit{ij}}\neq 0\}\) are the edges and vertices of G. Starting with a non-overlapping partition \(W = \cup _{i=1}^{p}W_{i,0}\) and δ ≥ 0 given, the overlapping partition \(\{W_{i,\delta }\}\) is obtained defining p partitions W i, δ ⊃ W i, δ−1 by including all the immediate neighbouring vertices of the vertices in the partition W i, δ−1. Then the restriction operator R i, δ : W → W i, δ defines the local operator \(A_{i,\delta } = R_{i,\delta }\mathit{AR}_{i,\delta }^{T},A_{i,\delta } \in \mathbb{R}^{m_{i,\delta }\times m_{i,\delta }}\) on W i, δ . The AS preconditioning writes: \(M_{\mathit{AS},\delta }^{-1} =\sum _{ i=1}^{p}R_{ i,\delta }^{T}A_{ i,\delta }^{-1}R_{ i,\delta }\). Introducing \(\tilde{R}_{i,\delta }\) the restriction matrix on a non-overlapping subdomain W i, 0, the Restricted Additive Schwarz (RAS) iterative process [2] writes:

When the number of subdomains increases the convergence rate of RAS decreases. When it is applied to linear problems, the RAS has a pure linear rate of convergence.

First we study the robustness and scalability of the preconditioner on a 2D driven cavity problem. Second we propose a test of the quality of our coarse space on an 2D industrial problem.

3.1 Robustness

Here, we want to study the numerical scalability of the method for the domain decomposition preconditioner chosen. We fix the number of Richardson iteration to perform while decreasing the convergence rate of the preconditioner, i.e. we set a coarse space size l and increases the number of partitions p.

We consider a test case called e30r2000 coming from modeling 2D fluid flow in a driven cavity proposed in the Matrix Market data collection [3] referenced under the name DRIVCAV. The flow is modeled using the incompressible Navier Stokes equations discretized using Finite Element Method and linearized using Newton’s method. The unit square on what the problem is solved is dicretized by 30 elements on the edges. The Reynolds number is set to 2000.

The matrix A is real, non-symmetric and indefinite of size m = 9, 661 and has 306, 356 entries. The estimated condition number given by the condest function of MATLAB is \(\kappa _{\infty }(A) = 6.77\,e + 11\).

We partition the operator with the METIS software for partitioning graphs with a multilevel recursive-bisection algorithm, in \(p = \{4,\,8,\,12\}\) partitions. We compute l = 60 iterations of a RAS iterative process starting from an initial guess u 0 = 0, and perform the SVD of the corresponding sequence of vectors.

Figure 1 (top) shows the singular values profile. When p increases the spectrum coverage decreases which implies a decreasing of the quality of the solution on the coarse space.

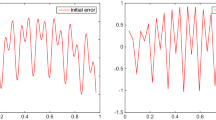

Figure 1 (bottom) shows the convergence to the solution of a GCR method preconditioned by a RAS preconditioning technique on the left and enhanced by the given algebraic two-level approach with initialisation of the Krylov method by the solution of the coarse system written on \(\mathbb{R}^{m}\). The convergence rate of the RAS method is reduced for each choice of partitioning. For p = 4 and p = 8 the initialization by the coarse solution is efficient and we observe an enhancement about 8 and 2 orders of convergence at the initialization respectively. For all partitioning the accuracy is better than for the RAS, i.e. the GCR reaches greater convergences and, although there is still a plateau due to the bad conditioning of the system, the convergence to the solution for p = 12 can reach 10−7 instead of 10−5.

3.2 Quality

Here, we want to observe the influence of the quality of the coarse space on the convergence rate of the preconditioned solution method.

We apply our technique on the case GT01R proposed by a CFD company called FLUOREM, on [1], which deals with steady flow parametrization. From a steady RANS simulation (compressible Navier–Stokes equations) on a reference configuration they obtain linear systems with real, square and indefinite matrices. Those matrices, generated through automatic differentiation of the flow solver around a steady state, correspond to the Jacobian with respect to the conservative fluid variables of the discretized governing equations (finite-volume discretization). The right hand side represents the derivative of the equations with respect to a parameter (of operation or shape).

The CASE_004 GT01 operator comes from a 2D inviscid case in the context of a linear cascade turbine. The solution of the discrete system is defined over five variables per node. The discretisation is done among 1, 596 nodes, describing one inter-blade channel. The stencil involved by the convective scheme uses nine nodes. Thus, there are nine non-zero blocks for each node in the matrix. The peculiarity is that the computational domain is periodic, which introduces some non-zero elements far away from the diagonal. The resulting matrix is real, non-symmetric and not positive definite, of size m = 7, 980.

Figure 2 shows the singular values (left) obtained after 20, 40 or 60 iterations of a RAS iterative process with p = 8. For l = 60, σ covers 15 orders of magnitude, while it covers 10 orders of magnitude for l = 40 and 5 for l = 20. For each we choose l = q. As expected, the convergence of the GMRES (right) is better when q increases. Nevertheless, the convergence plots for 20 and 40 singular values kept are similar.

Table 1 shows the coarse solution accuracy compared to a solution given using LU factorization. The greater the number of iterations of a Richardson process is, the better the coarse solution accuracy is.

Those results shows that, although the quality of the coarse space is increasing with the number of Richardson iterations, it is not necessary to compute a lot of singular values to enhance the convergence with this technique.

4 Conclusion

As in [8] and [5] the principle of using the SVD of successive solutions of an iterative process enables to compute a coarse solution without the knowledge of the underlying equations but it not used to accelerate a sequence of vectors but to construct a Krylov subspace. Then it can also be used to construct algebraic coarse levels for a two-level preconditioning technique based on any preconditioner which can be used in an iterative Richardson process.

Numerical results have been shown for the RAS preconditioning technique on two fluid flow problems. The algebraic framework enables to deal with real, non-symmetric and not positive definite operators. The two-level preconditioners produced are numerically scalable for domain decomposition technique such as RAS and the coarse space enables to compute an approximation of the solution which is used to initialize the chosen Krylov method.

Further work concerns the study of the non-singularity of the coarse operators built with this approach. Moreover, a discussion about the choice of the SVD algorithms and the quality of the coarse space produced should be studied.

References

Boisvert, R.F., Pozo, R., Remington, K., Barrett, R.F., Dongarra, J.J.: Matrix market: a web resource for test matrix collections. In: The Quality of Numerical Software: Assessment and Enhancement, pp. 125–137. Chapman & Hall, London (1997). http://math.nist.gov/MatrixMarket

Cai, X.C., Sarkis, M.: A restricted additive Schwarz preconditioner for general sparse linear systems. SIAM J. Sci. Comput. 21(2), 792–797 (1999). doi:10.1137/S106482759732678X. http://dx.doi.org/10.1137/S106482759732678X

Davis, T.A., Hu, Y.: The university of florida sparse matrix collection. ACM Trans. Math. Softw. 38(1) (2011). http://www.cise.ufl.edu/research/sparse/matrices

Dufaud, T., Tromeur-Dervout, D.: Aitken’s acceleration of the restricted additive Schwarz preconditioning using coarse approximations on the interface. C. R. Math. Acad. Sci. Paris 348(13–14), 821–824 (2010). doi:10.1016/j.crma.2010.06.021. http://dx.doi.org/10.1016/j.crma.2010.06.021

Dufaud, T., Tromeur-Dervout, D.: Efficient parallel implementation of the fully algebraic multiplicative Aitken-RAS preconditioning technique. Adv. Eng. Softw. 53, 33–44 (2012)

Eisenstat, S.C., Elman, H.C., Schultz, M.H.: Variational iterative methods for nonsymmetric systems of linear equations. SIAM J. Numer. Anal. 20(2), 345–357 (1983). doi:10.1137/0720023. http://dx.doi.org/10.1137/0720023

Saad, Y., Schultz, M.H.: GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 7(3), 856–869 (1986). doi:10.1137/0907058. http://dx.doi.org/10.1137/0907058

Tromeur-Dervout, D.: Meshfree adaptative aitken-schwarz domain decomposition with application to darcy flow. In: Topping, B., Ivanyi, P. (eds.) Parallel, Distributed and Grid Computing for Engineering. Computational Science Engineering and Technology Series, vol. 21, pp. 217–250. Saxe-Coburg Publications, Stirlingshire (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Dufaud, T. (2014). A Two-Level Preconditioning Framework Based on a Richardson Iterative Process. In: Erhel, J., Gander, M., Halpern, L., Pichot, G., Sassi, T., Widlund, O. (eds) Domain Decomposition Methods in Science and Engineering XXI. Lecture Notes in Computational Science and Engineering, vol 98. Springer, Cham. https://doi.org/10.1007/978-3-319-05789-7_59

Download citation

DOI: https://doi.org/10.1007/978-3-319-05789-7_59

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-05788-0

Online ISBN: 978-3-319-05789-7

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)