Abstract

In this note, the performance of a framework for two-level overlapping domain decomposition methods is assessed. Numerical experiments are run on Curie, a Tier-0 system for PRACE, for two second-order elliptic PDEs with highly heterogeneous coefficients: a scalar diffusivity equation and the system of linear elasticity. These experiments yield systems with up to ten billion unknowns in 2D and one billion unknowns in 3D, solved on a few thousand cores.

1 Introduction

Developing an efficient and versatile framework for finite element domain decomposition methods can be a hard task because of the mathematical genericity of finite element spaces, the complexity of handling arbitrary meshes, and so on. The purpose of this note is to present one way to implement such a framework in the context of overlapping decompositions. In Sect. 2, the basics of one-level overlapping methods are introduced; in Sect. 3, a second level is added to the original framework to ensure scalability using a portable C++ library; and Sect. 4 gathers some numerical results. FreeFem++ will be used for mesh generation and for the computation of finite element matrices and right-hand sides, but the work here is also applicable to other Domain-Specific (Embedded) Languages such as deal.II [3], Feel++ [12], GetFem++ ….

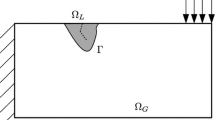

2 One-Level Methods

Let \(\varOmega \subset \mathbb{R}^{d}\) (d = 2 or 3) be a domain whose associated mesh can be partitioned into N non-overlapping meshes \(\left \{\mathcal{T}_{i}\right \}_{1\leqslant i\leqslant N}\) using graph partitioners such as METIS [10] or SCOTCH [5]. Let V be the finite element space spanned by the finite set of n basis functions \(\left \{\phi _{i}\right \}_{1\leqslant i\leqslant n}\) defined on Ω, and \(\left \{V _{i}\right \}_{1\leqslant i\leqslant N}\) be the local finite element spaces defined on the domains associated to each \(\left \{\mathcal{T}_{i}\right \}_{1\leqslant i\leqslant N}\). Typical finite element discretizations of a symmetric, coercive bilinear form \(a: V \times V \rightarrow \mathbb{R}\) yield the following system to solve:

\[Ax = b, \tag{1}\]

where \(\left (A_{ij}\right )_{1\leqslant i,j\leqslant n} = a(\phi _{j},\phi _{i})\), and \(\left (b_{i}\right )_{1\leqslant i\leqslant n} = (f,\phi _{i})\), f being in the dual space \(V^{{\ast}}\). Let an integer δ be the level of overlap: \(\left \{\mathcal{T}_{i}^{\delta }\right \}_{1\leqslant i\leqslant N}\) is an overlapping decomposition and if we consider the restrictions \(\left \{R_{i}\right \}_{1\leqslant i\leqslant N}\) from \(V\) to \(\left \{V _{i}^{\delta }\right \}_{1\leqslant i\leqslant N}\), the local finite element spaces on \(\left \{\mathcal{T}_{i}^{\delta }\right \}_{1\leqslant i\leqslant N}\), and a local partition of unity \(\left \{D_{i}\right \}_{1\leqslant i\leqslant N}\) such that

\[\sum _{i=1}^{N}R_{i}^{T}D_{i}R_{i} = I. \tag{2}\]

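Then a common one-level preconditioner for system (1) introduced in [4] is

\[\mathcal{P}_{\text{RAS}}^{-1} =\sum _{i=1}^{N}R_{i}^{T}D_{i}\left (R_{i}AR_{i}^{T}\right )^{-1}R_{i}.\]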

The global matrix A is never assembled; instead, we build locally \(A_{i}^{\delta +1}\), the stiffness matrix yielded by the discretization of a on \(V _{i}^{\delta +1}\), and we remove the columns and rows associated to degrees of freedom lying on elements of \(\mathcal{T}_{i}^{\delta +1}\setminus \mathcal{T}_{i}^{\delta }\); this yields \(A_{i} = R_{i}AR_{i}^{T}\). The distributed sparse matrix-vector product Ax for \(x \in \mathbb{R}^{n}\) can be computed using point-to-point communications and the partition of unity without having to store the global distributed matrix A. Indeed, using (2), if one looks at the local components of Ax, that is \(R_{i}Ax\), then one can write, introducing \(\mathcal{O}_{i}\) the set of neighboring subdomains to i, i.e. \(\left \{j\;:\; \mathcal{T}_{i}^{\delta } \cap \mathcal{T}_{j}^{\delta }\neq \emptyset \right \}\):

\[R_{i}Ax =\sum _{j=1}^{N}R_{i}AR_{j}^{T}D_{j}R_{j}x =\sum _{j\in \mathcal{O}_{i}}R_{i}R_{j}^{T}A_{j}D_{j}R_{j}x,\]

since it can be checked that

\[\forall x \in \mathbb{R}^{n},\quad R_{i}AR_{j}^{T}D_{j}R_{j}x = R_{i}R_{j}^{T}R_{j}AR_{j}^{T}D_{j}R_{j}x.\]

The sparse matrix-sparse matrix products \(R_{i}R_{j}^{T}\) are nothing more than point-to-point communications from neighbors j to i.
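To make the matrix-free product concrete, here is a minimal serial C++ sketch. It assumes the local products are formed as \(R_{i}Ax =\sum _{j}R_{i}R_{j}^{T}A_{j}D_{j}R_{j}x\) with \(A_{j} = R_{j}AR_{j}^{T}\). The `Subdomain` struct, the dense storage and the global exchange buffer are illustrative assumptions, not the data structures of the library; in a distributed code the buffer would be replaced by point-to-point messages between neighbors.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // dense row-major storage, for illustration only

// Hypothetical container for the data owned by one subdomain.
struct Subdomain {
    std::vector<std::size_t> dofs;  // global indices of local unknowns (encodes R_i)
    Vec d;                          // diagonal of the partition of unity D_i
    Mat a;                          // local matrix A_i = R_i A R_i^T
};

// Dense matrix-vector product y = m * v.
Vec multiply(const Mat& m, const Vec& v) {
    Vec y(m.size(), 0.0);
    for (std::size_t r = 0; r < m.size(); ++r) {
        assert(m[r].size() == v.size());
        for (std::size_t c = 0; c < v.size(); ++c) y[r] += m[r][c] * v[c];
    }
    return y;
}

// Extracts A_i = R_i A R_i^T from a global matrix. Only used to set up a demo:
// the framework described in the text never assembles A.
Mat restrictMatrix(const Mat& a, const std::vector<std::size_t>& dofs) {
    Mat ai(dofs.size(), Vec(dofs.size(), 0.0));
    for (std::size_t r = 0; r < dofs.size(); ++r)
        for (std::size_t c = 0; c < dofs.size(); ++c) ai[r][c] = a[dofs[r]][dofs[c]];
    return ai;
}

// Returns R_i A x for every subdomain i, using only the local A_j and D_j.
std::vector<Vec> localProducts(const std::vector<Subdomain>& subs, const Vec& x) {
    // Stand-in for the neighbor exchange: the contributions R_j^T (A_j D_j R_j x)
    // are accumulated in a global buffer instead of being sent to neighbors.
    Vec exchanged(x.size(), 0.0);
    for (const Subdomain& s : subs) {
        assert(s.d.size() == s.dofs.size());
        Vec local(s.dofs.size());
        for (std::size_t k = 0; k < s.dofs.size(); ++k)
            local[k] = s.d[k] * x[s.dofs[k]];              // D_j R_j x
        const Vec y = multiply(s.a, local);                // A_j D_j R_j x
        for (std::size_t k = 0; k < s.dofs.size(); ++k)
            exchanged[s.dofs[k]] += y[k];                  // R_j^T (...)
    }
    std::vector<Vec> result;
    for (const Subdomain& s : subs) {
        Vec yi(s.dofs.size());
        for (std::size_t k = 0; k < s.dofs.size(); ++k)
            yi[k] = exchanged[s.dofs[k]];                  // R_i applied to the sum
        result.push_back(yi);
    }
    return result;
}
```

For a 1D Laplacian with six unknowns split into the index sets {0, 1, 2, 3} and {2, 3, 4, 5}, choosing \(D_{0} = \mathrm{diag}(1,1,1,0)\) and \(D_{1} = \mathrm{diag}(0,1,1,1)\) satisfies the partition of unity, and the values returned by `localProducts` coincide with the restrictions of Ax. Note that in this toy setup each \(D_{j}\) vanishes on the unknowns of subdomain j that are coupled to unknowns outside of it.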

In FreeFem++, stiffness matrices such as \(A_{i}^{\delta +1}\) and right-hand sides are assembled as follows (a simple 2D Laplacian is considered here):

mesh Th; // Th is a local 2D mesh (for example \(\mathcal{T}_{i}^{\delta +1}\))

fespace Vh(Th, Pk); // Vh is a local finite element space

varf a(u, v) = int2d(Th)(dx(u) * dx(v) + dy(u) * dy(v))

+ int2d(Th)(f * v) + BC;

matrix A = a(Vh, Vh); // A is a sparse matrix stored in the CSR format

Vh rhs; // rhs is a function lying in the FE space Vh

rhs[] = a(0, Vh); // Its values are set to solve A x = rhs

The mesh Th can either be created on the fly by FreeFem++, or it can be loaded from a file generated offline by Gmsh [6], for example when dealing with complex geometries. By default, FreeFem++ handles continuous piecewise linear, quadratic, cubic, quartic finite elements, and other traditional FE like Raviart-Thomas 1, Morley, etc. The boundary conditions depend on the label set on the mesh. For example, if one wants to impose penalized homogeneous Dirichlet boundary conditions on the label 1 of the boundary of Th, then one just has to add + on(1, u = 0) in the definition of the varf. For a more detailed introduction to FreeFem++ with abundant examples, interested readers should visit http://www.freefem.org/ff++ or see [9]. The partition of unity \(D_{i}\) is built using a continuous piecewise linear approximation of

where \(\tilde{\chi }_{i}\) is defined as

3 Two-Level Methods

It is well known that one-level domain decomposition methods as depicted in Sect. 2 suffer from poor conditioning when used with many subdomains [16]. In this section, we present a new C++ library, independent of the finite element backend used, that efficiently assembles a coarse operator that will be used in Sect. 4 to ensure scalability of our framework. The theoretical foundations for the construction of the coarse operator are presented in [14]. From a practical point of view, after building each local solver \(A_{i}\), three dependent operators are needed:

- (i) the deflation matrix Z,

- (ii) the coarse operator \(E = Z^{T}AZ\),

- (iii) the actual preconditioner \(\mathcal{P}_{\text{A-DEF1}}^{-1} = \mathcal{P}_{\text{RAS}}^{-1}(I - AZE^{-1}Z^{T}) + ZE^{-1}Z^{T}\), thoroughly studied in [15].
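The way the three operators combine can be sketched in a few lines of C++. The snippet below is only an illustration of the formula for \(\mathcal{P}_{\text{A-DEF1}}^{-1}\) given in item (iii): the struct `TwoLevelOps` and its callbacks are hypothetical names, standing in for whatever routines apply A, \(\mathcal{P}_{\text{RAS}}^{-1}\), Z, \(Z^{T}\) and \(E^{-1}\) in an actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Op = std::function<Vec(const Vec&)>;

// Hypothetical bundle of callbacks; none of these names come from the library.
struct TwoLevelOps {
    Op applyA;    // v -> A v
    Op applyRAS;  // v -> P_RAS^{-1} v (one-level preconditioner)
    Op applyZ;    // c -> Z c   (coarse space to fine space)
    Op applyZt;   // v -> Z^T v (fine space to coarse space)
    Op solveE;    // c -> E^{-1} c, with E = Z^T A Z
};

// Applies P_ADEF1^{-1} r = P_RAS^{-1} (r - A q) + q, where q = Z E^{-1} Z^T r.
Vec applyADEF1(const TwoLevelOps& ops, const Vec& r) {
    const Vec q = ops.applyZ(ops.solveE(ops.applyZt(r)));  // coarse correction
    assert(q.size() == r.size());
    const Vec aq = ops.applyA(q);
    assert(aq.size() == r.size());
    Vec t(r.size());
    for (std::size_t k = 0; k < r.size(); ++k) t[k] = r[k] - aq[k];  // (I - A Z E^{-1} Z^T) r
    Vec z = ops.applyRAS(t);
    assert(z.size() == r.size());
    for (std::size_t k = 0; k < r.size(); ++k) z[k] += q[k];
    return z;
}
```

Computing q once and reusing it keeps the cost of one application at a single coarse solve, one product with A and one application of the one-level preconditioner.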

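In [14], the deflation matrix is defined as:

\[Z = \begin{bmatrix}R_{1}^{T}W_{1} & R_{2}^{T}W_{2} & \cdots & R_{N}^{T}W_{N}\end{bmatrix} \in \mathbb{R}^{n\times \sum _{i=1}^{N}\nu _{i}},\]

where

\[W_{i} = \begin{bmatrix}D_{i}\varLambda _{i_{1}} & D_{i}\varLambda _{i_{2}} & \cdots & D_{i}\varLambda _{i_{\nu _{i}}}\end{bmatrix}.\]

\(\nu _{i}\) is a threshold criterion used to select the eigenvectors \(\varLambda _{i}\) associated to the smallest eigenvalues in magnitude of the following local generalized eigenvalue problem: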

where \(A_{i}^{\delta }\) is the matrix yielded by the discretization of a on \(V _{i}^{\delta }\), and \(R_{i,0}\) is the restriction operator from \(\mathcal{T}_{i}^{\delta }\) to the overlap \(\mathcal{T}_{i}^{\delta } \cap \left (\cup _{j\in \mathcal{O}_{i}}\mathcal{T}_{j}^{\delta }\right )\). In FreeFem++, sparse eigenvalue problems are solved either with SLEPc [8] or ARPACK [11]. The latter seems to yield better performance in our simulations. Given, for each MPI process, the local matrix \(A_{i}\), the local partition of unity \(D_{i}\), the set of eigenvectors \(\left \{\varLambda _{i_{j}}\right \}_{1\leqslant j\leqslant \nu _{i}}\) and the set of neighboring subdomains \(\mathcal{O}_{i}\), our library assembles E without having to assemble A or to store Z, and computes its LU or \(LDL^{T}\) factorization using either MUMPS [1, 2], PARDISO [13] or PaStiX [7]. Moreover, all linear algebra related computations (e.g. sparse matrix-vector products) within our library are performed using Intel MKL, or can use user-supplied functions, for example those from within the finite element Domain-Specific (Embedded) Language. Assembling E is done in two steps: local computations and then renumbering.

- first, compute local vector-sparse matrix-vector triple products which will be used to assemble the diagonal blocks of E. For a given row in E, off-diagonal values are computed using local sparse matrix-vector products coupled with point-to-point communications with the neighboring subdomains: the sparsity pattern of the coarse operator is similar to the dual graph of the mesh partitioning (hence it is denser in 3D than in 2D),

- then, renumber the local entries computed previously in the distributed matrix E.

Only a few processes are in charge of renumbering entries into E. Those processes will be referred to in the rest of this note as master processes. Any non-master process has to send the rows it has previously computed to a specific master process. The master processes are then able to place the entries received at the right row and column indices. To allow an easy incremental matrix construction, E is assembled using the COO format. If need be, it is converted afterwards to the CSR format. Note here that MUMPS only supports the COO format while PARDISO and PaStiX work with the CSR format.
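The COO to CSR conversion mentioned above is a standard counting sort on the row indices. The following C++ sketch shows one way to write it; the `Coo` and `Csr` structs are hypothetical and use zero-based indices, and they are not meant to reflect the storage used inside the library or expected by any particular solver.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical coordinate (COO) storage: one (row, column, value) triple per entry.
struct Coo {
    std::size_t rows = 0;
    std::vector<std::size_t> i, j;
    std::vector<double> v;
};

// Hypothetical compressed sparse row (CSR) storage.
struct Csr {
    std::vector<std::size_t> rowPtr, col;
    std::vector<double> val;
};

// Converts COO to CSR. Entries keep their insertion order within a row;
// duplicated (row, column) pairs are neither merged nor summed.
Csr cooToCsr(const Coo& coo) {
    assert(coo.i.size() == coo.j.size() && coo.i.size() == coo.v.size());
    Csr csr;
    csr.rowPtr.assign(coo.rows + 1, 0);
    for (std::size_t r : coo.i) {
        assert(r < coo.rows);
        ++csr.rowPtr[r + 1];                       // count entries per row
    }
    for (std::size_t r = 0; r < coo.rows; ++r)
        csr.rowPtr[r + 1] += csr.rowPtr[r];        // prefix sum gives row offsets
    csr.col.resize(coo.v.size());
    csr.val.resize(coo.v.size());
    // next[r] is the position of the next free slot in row r.
    std::vector<std::size_t> next(csr.rowPtr.begin(), csr.rowPtr.end() - 1);
    for (std::size_t k = 0; k < coo.v.size(); ++k) {
        const std::size_t pos = next[coo.i[k]]++;
        csr.col[pos] = coo.j[k];
        csr.val[pos] = coo.v[k];
    }
    return csr;
}
```

Because entries can be appended to the COO arrays in any order, this format suits the incremental construction described above, while the conversion itself costs one pass over the entries plus one pass over the rows.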

After renumbering, the master processes are also the ones in charge of computing the factorization of the coarse operator. The number of master processes is a runtime constant, and our library is in charge of creating the corresponding MPI communicators. Even with “large” coarse operators of sizes of around 100,000 × 100,000, fewer than a few tens of master processes usually perform the job quite well: computing all entries, renumbering and performing numerical factorization take around 15 s when dealing with thousands of non-master processes.

A routine is then callable to solve the equation Ex = y for an arbitrary \(y \in \mathbb{R}^{\sum _{i=1}^{N}\nu _{ i}}\), which in our case is used at each iteration of our Krylov method preconditioned by \(\mathcal{P}_{\text{A-DEF1}}^{-1}\). Once again, the deflation matrix Z is not stored, as the products \(Z^{T}x \in \mathbb{R}^{\sum _{i=1}^{N}\nu _{ i}}\) and \(Zy \in \mathbb{R}^{n}\) can be computed explicitly with a global matrix-free method (we only use the local \(W_{i}\) plus point-to-point communications with neighboring subdomains).
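A serial C++ sketch of these two matrix-free products is given below. It assumes that the columns of Z are the columns of the local \(W_{i}\) extended by zero outside subdomain i, so that block i of \(Z^{T}x\) is \(W_{i}^{T}R_{i}x\) and \(Zy =\sum _{i}R_{i}^{T}W_{i}y_{i}\). The `LocalDeflation` struct is a hypothetical container, and the accumulation into a global vector stands in for the exchanges between neighboring subdomains.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical local data: the unknowns of subdomain i and the columns of W_i.
struct LocalDeflation {
    std::vector<std::size_t> dofs;  // global indices of local unknowns (encodes R_i)
    std::vector<Vec> w;             // nu_i columns, each of length dofs.size()
};

// Computes Z^T x: one coefficient per local deflation vector, subdomain by subdomain.
Vec applyZt(const std::vector<LocalDeflation>& subs, const Vec& x) {
    Vec c;
    for (const LocalDeflation& s : subs) {
        for (const Vec& column : s.w) {
            assert(column.size() == s.dofs.size());
            double dot = 0.0;
            for (std::size_t k = 0; k < s.dofs.size(); ++k)
                dot += column[k] * x[s.dofs[k]];   // W_i^T R_i x
            c.push_back(dot);
        }
    }
    return c;
}

// Computes Z y for a coarse vector y, as a fine vector of size n.
Vec applyZ(const std::vector<LocalDeflation>& subs, const Vec& y, std::size_t n) {
    Vec x(n, 0.0);
    std::size_t col = 0;  // running index into the coarse vector
    for (const LocalDeflation& s : subs) {
        for (const Vec& column : s.w) {
            assert(col < y.size() && column.size() == s.dofs.size());
            for (std::size_t k = 0; k < s.dofs.size(); ++k)
                x[s.dofs[k]] += column[k] * y[col];  // R_i^T W_i y_i
            ++col;
        }
    }
    return x;
}
```

Only the local columns are ever touched, which is why Z itself never has to be stored as a global matrix.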

4 Numerical Results

Results in this section were obtained on Curie, a Tier-0 system for PRACE composed of 5,040 nodes made of 2 eight-core Intel Sandy Bridge processors clocked at 2.7 GHz. The interconnect is an InfiniBand QDR full fat tree network. We want here to assess the capability of our framework to scale:

- (i) strongly: for a given global mesh, the number of subdomains increases while the element size is kept constant (i.e. local problems get smaller and smaller),

- (ii) weakly: for a given global geometry, the number of subdomains increases while the mesh is refined (i.e. local problems have a constant size).

We do not time the generation of the mesh and partition of unity. Assembly and factorization of the local stiffness matrices, resolution of the generalized eigenvalue problems, construction of the coarse operator and time elapsed for the convergence of the Krylov method are the important procedures here. The Krylov method used is GMRES; it is stopped when the relative residual error falls below \(\varepsilon = 10^{-6}\) in 2D, and \(10^{-8}\) in 3D. All the following results were obtained using an \(LDL^{T}\) factorization of the local solvers \(A_{i}^{\delta }\) and the coarse operator E using MUMPS (with an MPI communicator set to respectively MPI_COMM_SELF or the communicator created by our library binding master processes).

First, the system of linear elasticity with highly heterogeneous elastic moduli is solved with a minimal geometric overlap of one mesh element. Its variational formulation reads:

where

- λ and μ are the Lamé parameters such that \(\mu = \dfrac{E} {2(1+\nu )}\) and \(\lambda = \dfrac{E\nu } {(1+\nu )(1 - 2\nu )}\) (E being Young’s modulus and ν Poisson’s ratio). They are chosen to vary between two sets of values, \((E_{1},\nu _{1}) = (2 \cdot 10^{11},0.25)\), and \((E_{2},\nu _{2}) = (10^{8},0.4)\).

- \(\varepsilon\) is the linearized strain tensor and f the volumetric forces (here, we just consider gravity).
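To give an idea of the contrast between the two materials, the Lamé parameters can be evaluated directly from the two formulas above. The small C++ helper below is only a convenience sketch; the names `Lame` and `lameFromYoungPoisson` are made up for this illustration.

```cpp
#include <cassert>

// Pair of Lamé parameters.
struct Lame {
    double lambda;
    double mu;
};

// Converts Young's modulus E and Poisson's ratio nu into (lambda, mu):
//   mu = E / (2 (1 + nu)),  lambda = E nu / ((1 + nu) (1 - 2 nu)).
Lame lameFromYoungPoisson(double young, double poisson) {
    // The formula for lambda is singular at nu = 0.5 (incompressible limit).
    assert(poisson > -1.0 && poisson < 0.5);
    Lame p;
    p.mu = young / (2.0 * (1.0 + poisson));
    p.lambda = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson));
    return p;
}
```

For \((E_{1},\nu _{1})\) this gives \(\lambda = \mu = 8 \cdot 10^{10}\), and for \((E_{2},\nu _{2})\) it gives \(\mu \approx 3.57 \cdot 10^{7}\) and \(\lambda \approx 1.43 \cdot 10^{8}\), i.e. a jump of more than three orders of magnitude in μ between the two materials.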

Because of the overlap and the duplication of unknowns, increasing the number of subdomains means that the number of unknowns also increases slightly, even though the number of mesh elements (triangles or tetrahedra in the case of FreeFem++) is the same. In 2D, we use piecewise cubic basis functions on an unstructured global mesh made of 110 million elements, and in 3D, piecewise quadratic basis functions on an unstructured global mesh made of 20 million elements. This yields a symmetric system of roughly 1 billion unknowns in 2D and 80 million unknowns in 3D. The geometry is a simple \([0;1]^{d} \times [0;10]\) beam (d = 1 or 2) partitioned with METIS.

Solving the 2D problem initially on 1,024 processes takes 227 s; on 8,192 processes, it takes 31 s (quasi-optimal speedup). With that many subdomains, the coarse operator E is of size 121,935 × 121,935. It is assembled and factorized in 7 s by 12 master processes. For the 3D problem, it takes initially 373 s. At peak performance, near 6,144 processes, it takes 35 s (superoptimal speedup). This time, the coarse operator is of size 92,160 × 92,160 and is assembled and factorized by 16 master processes in 11 s (Fig. 1).

Moving on to the weak scaling properties of our framework, the problem we now solve is a scalar equation of diffusivity with highly heterogeneous coefficients (varying from 1 to \(10^{5}\)) on \([0;1]^{d}\) (d = 2 or 3). Its variational formulation reads:

The targeted number of unknowns per subdomain is kept constant at approximately 800 thousand in 2D, and 120 thousand in 3D (once again with \(\mathbb{P}_{3}\) and \(\mathbb{P}_{2}\) finite elements respectively) (Fig. 2).

In 2D, the initial extended system (with the duplication of unknowns) is made of 800 million unknowns and is solved in 141 s. Scaling up to 12,288 processes yields a system of 10 billion unknowns solved in 172 s, hence an efficiency of \(\frac{141} {172} \approx 82\,\%\). In 3D, the initial system is made of 130 million unknowns and is solved in 127 s. Scaling up to 8,192 processes yields a system of 1 billion unknowns solved in 152 s, hence an efficiency of \(\frac{127} {152} \approx 83\,\%\).
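The efficiencies quoted in this section follow from the usual definitions, which the short C++ sketch below spells out. The function names are ours, and the formulas are the textbook ones (speedup divided by the increase in process count for strong scaling, ratio of run times for weak scaling), which we assume are the ones intended here.

```cpp
#include <cassert>

// Strong scaling: same global problem, run time t0 on p0 processes and t1 on p1.
// Efficiency is the measured speedup t0 / t1 divided by the ideal speedup p1 / p0.
double strongScalingEfficiency(double t0, double p0, double t1, double p1) {
    assert(t1 > 0.0 && p0 > 0.0 && p1 > 0.0);
    return (t0 / t1) / (p1 / p0);
}

// Weak scaling: constant local problem size, run time t0 on the smallest run
// and t1 on the largest one. Efficiency is simply t0 / t1.
double weakScalingEfficiency(double t0, double t1) {
    assert(t1 > 0.0);
    return t0 / t1;
}
```

With the 2D timings reported above, `weakScalingEfficiency(141, 172)` is about 0.82, and `strongScalingEfficiency(227, 1024, 31, 8192)` is about 0.92 for the elasticity test case.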

5 Conclusion

This note shows that our framework scales on very large architectures for solving linear positive definite systems using overlapping decompositions with many subdomains. It is currently being extended to support nonlinear problems (namely in the field of nonlinear elasticity) and we should be able to provide similar functionalities for non-overlapping decompositions. It should be noted that the heavy use of threaded (sparse) BLAS and LAPACK routines (via Intel MKL, PARDISO, and the Reverse Communication Interface of ARPACK) has already helped us to get a quick glance at how the framework performs using hybrid parallelism. We are confident that using this novel paradigm, we can still improve our scaling results in the near future by setting OMP_NUM_THREADS to a value greater than 1.

References

1. Amestoy, P., Duff, I., L’Excellent, J.Y., Koster, J.: A fully asynchronous multifrontal solver using distributed dynamic scheduling. SIAM J. Matrix Anal. Appl. 23(1), 15–41 (2001)

2. Amestoy, P., Guermouche, A., L’Excellent, J.Y., Pralet, S.: Hybrid scheduling for the parallel solution of linear systems. Parallel Comput. 32(2), 136–156 (2006)

3. Bangerth, W., Hartmann, R., Kanschat, G.: deal.II—a general-purpose object-oriented finite element library. ACM Trans. Math. Softw. 33(4), 24–27 (2007)

4. Cai, X.C., Sarkis, M.: Restricted additive Schwarz preconditioner for general sparse linear systems. SIAM J. Sci. Comput. 21(2), 792–797 (1999)

5. Chevalier, C., Pellegrini, F.: PT-Scotch: a tool for efficient parallel graph ordering. Parallel Comput. 34(6), 318–331 (2008)

6. Geuzaine, C., Remacle, J.F.: Gmsh: a 3-d finite element mesh generator with built-in pre- and post-processing facilities. Int. J. Numer. Methods Eng. 79(11), 1309–1331 (2009)

7. Hénon, P., Ramet, P., Roman, J.: PaStiX: a high performance parallel direct solver for sparse symmetric positive definite systems. Parallel Comput. 28(2), 301–321 (2002)

8. Hernandez, V., Roman, J., Vidal, V.: SLEPc: a scalable and flexible toolkit for the solution of eigenvalue problems. ACM Trans. Math. Softw. 31(3), 351–362 (2005)

9. Jolivet, P., Dolean, V., Hecht, F., Nataf, F., Prud’homme, C., Spillane, N.: High performance domain decomposition methods on massively parallel architectures with FreeFem++. J. Numer. Math. 20(4), 287–302 (2012)

10. Karypis, G., Kumar, V.: A fast and high quality multilevel scheme for partitioning irregular graphs. SIAM J. Sci. Comput. 20(1), 359–392 (1998)

11. Lehoucq, R., Sorensen, D., Yang, C.: ARPACK Users’ Guide: Solution of Large-Scale Eigenvalue Problems with Implicitly Restarted Arnoldi Methods, vol. 6. Society for Industrial and Applied Mathematics, Philadelphia (1998)

12. Prud’homme, C., Chabannes, V., Doyeux, V., Ismail, M., Samake, A., Pena, G.: Feel++: a computational framework for Galerkin methods and advanced numerical methods. In: ESAIM: Proceedings, vol. 38, pp. 429–455 (2012)

13. Schenk, O., Gärtner, K.: Solving unsymmetric sparse systems of linear equations with PARDISO. Future Gener. Comput. Syst. 20(3), 475–487 (2004)

14. Spillane, N., Dolean, V., Hauret, P., Nataf, F., Pechstein, C., Scheichl, R.: A robust two-level domain decomposition preconditioner for systems of PDEs. C. R. Math. 349(23), 1255–1259 (2011)

15. Tang, J., Nabben, R., Vuik, C., Erlangga, Y.: Comparison of two-level preconditioners derived from deflation, domain decomposition and multigrid methods. J. Sci. Comput. 39(3), 340–370 (2009)

16. Toselli, A., Widlund, O.: Domain Decomposition Methods—Algorithms and Theory. Series in Computational Mathematics, vol. 34. Springer, Berlin (2005)

Acknowledgements

This work has been supported in part by ANR through COSINUS program (project PETALh no. ANR-10-COSI-0013 and project HAMM no. ANR-10-COSI-0009). It was granted access to the HPC resources of TGCC@CEA made available within the Distributed European Computing Initiative by the PRACE-2IP, receiving funding from the European Community’s Seventh Framework Programme (FP7/2007-2013) under grant agreement RI-283493.

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Jolivet, P., Hecht, F., Nataf, F., Prud’homme, C. (2014). Overlapping Domain Decomposition Methods with FreeFem++ . In: Erhel, J., Gander, M., Halpern, L., Pichot, G., Sassi, T., Widlund, O. (eds) Domain Decomposition Methods in Science and Engineering XXI. Lecture Notes in Computational Science and Engineering, vol 98. Springer, Cham. https://doi.org/10.1007/978-3-319-05789-7_28

DOI: https://doi.org/10.1007/978-3-319-05789-7_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-05788-0

Online ISBN: 978-3-319-05789-7

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)