Abstract

Hyperspectral imaging measures a scene in contiguous bands across the electromagnetic spectrum. It is an effective sensing technology with wide-ranging applications in agriculture, archeology, surveillance, medicine and forensics. Traditional document imaging has centered on monochromatic or trichromatic (RGB) sensing, often through a scanning device. Cameras have emerged in the last decade as an alternative to scanners for capturing document images. However, the focus has remained on mono-/tri-chromatic imaging. In this paper, we explore the new paradigm of hyperspectral imaging for document capture. We outline and discuss the key components of a hyperspectral document imaging system, which poses new challenges and offers new perspectives. We discuss the issues of filter transmittance and spatial/spectral non-uniformity of the illumination and propose possible solutions via pre- and post-processing. As a sample application, the proposed imaging system is applied to the task of writing ink mismatch detection in documents on a newly collected database (UWA Writing Ink Hyperspectral Image Database http://www.csse.uwa.edu.au/%7Eajmal/databases.html). The results demonstrate the strength of hyperspectral imaging in capturing minute differences in the spectra of different inks that are very hard to distinguish using traditional RGB imaging.

1 Introduction

Image scanning devices are currently the main means of creating digitized versions of documents, both black-and-white and color. Traditional scanners are fairly limited in the color information they can capture, because their imaging systems are designed to replicate the trichromatic (RGB) human visual system. In many situations, high-fidelity spectral information can be very useful, for example to distinguish between two similar inks [1] or to determine the age of a piece of writing or of the document itself.

Natural materials exhibit a characteristic spectral response to incident light. The spectral response of a material is responsible for its specific color. It is a signature property which can be used for material identification. Spectral imaging is an effective technique for measuring the spectra of objects in the real world. A hyperspectral (HS) image of a scene is a series of contiguous narrow-band images across the electromagnetic spectrum. In contrast to a three-channel RGB image, an HS image captures finer spectral information about a scene.

Satellite-based multispectral imaging sensors have long been used for astronomical and remote sensing applications. Due to the high cost and complexity of these sensors, various techniques have been proposed that combine conventional imaging systems with a few off-the-shelf optical devices for multispectral imaging. In this paper, we discuss new challenges in the development of a hyperspectral document imaging system. Since various spectral imaging techniques have been developed over the years, Sect. 2 gives an overview of the different technologies for capturing hyperspectral images. In Sect. 3, we focus on the HS-imaging-specific issues of spatial/spectral illumination variation and filter transmission variation, and propose possible solutions to reduce these artifacts. We apply the proposed HS imaging system to the task of ink mismatch detection (Sect. 4) on a newly developed writing ink hyperspectral image database. The paper is concluded in Sect. 5.

2 Overview of Hyperspectral Imaging

Strictly speaking, an RGB image is a three-channel spectral image. An image acquired at more than three specific wavelengths is referred to as a Multispectral Image. An image with finer spectral resolution, i.e. a higher number of bands, is regarded as a Hyperspectral Image. There is no clear demarcation between multispectral and hyperspectral images in terms of the number of spectral bands or the spectral resolution. Typically, however, hyperspectral sensors acquire a few dozen to several hundred spectral measurements per scene point. For example, NASA's AVIRIS (Airborne Visible/Infrared Imaging Spectrometer) has 224 bands in the 400–2500 nm range [2].

A hyperspectral image has three dimensions: two spatial dimensions (\(x,y\)) and one spectral dimension (\(\lambda \)), as shown in Fig. 1. A hyperspectral image can therefore be represented as a Hyperspectral Cube. The basic principle for capturing hyperspectral images is to filter incoming light using bandpass filters or dispersion optics. In the following, we present a brief overview of different methods/technologies used for hyperspectral imaging, categorized by the underlying optical phenomenon: bandpass filtering or chromatic dispersion. The overview is limited to hyperspectral imaging systems used in ground-based computer vision applications. Therefore, the high-cost, complex sensors employed for remote sensing in astronomy and other geo-spatial applications are not considered.
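As an illustration of this layout, the sketch below stores a hyperspectral cube as a NumPy array indexed `[y, x, band]`, assuming the 33-band, 400–720 nm sampling used later in Sect. 3.1. The array size and its random contents are placeholders, not real data.

```python
import numpy as np

# Assumed band centres: 400-720 nm in 10 nm steps, i.e. 33 bands.
wavelengths = np.arange(400, 721, 10)

# Small placeholder size; the camera of Sect. 3.1 delivers 752 x 480 pixels.
rows, cols = 48, 75
rng = np.random.default_rng(0)
cube = rng.random((rows, cols, wavelengths.size))  # cube[y, x, band]

# Spectral slice: the spectrum measured at one scene point (x=20, y=10).
spectrum = cube[10, 20, :]                          # shape (33,)

# Spatial slice: the monochrome image of a single band, here 550 nm.
band_550 = cube[:, :, np.searchsorted(wavelengths, 550)]  # shape (48, 75)
```

A spectral slice is a vector along the \(\lambda \) axis, while a spatial slice is an ordinary grayscale image.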

2.1 Bandpass Filtering

In the filter-based approach, the objective is to allow only light in a specific wavelength range to pass through the filter and reach the imaging sensor. This is illustrated in Fig. 2. It can be achieved with optical devices generally called bandpass filters, or simply filters. Filters can be divided into two types according to their operating mechanism. The first type is the tunable filter, specifically the electronically tunable filter. The pass-band of such filters can be electronically tuned at very high speed, which allows hyperspectral data to be measured over a wide range of wavelengths. The second type is the non-tunable filter. Such filters have a fixed pass-band and are not recommended for time-constrained applications, because they require physical replacement, either manually or mechanically by a filter wheel. However, they are easy to use in relatively simple and unconstrained applications.

Non-Tunable Filters. A common approach to acquiring multispectral images is to sequentially replace bandpass filters between the scene and the imaging sensor. Filter replacement can be mechanized by using a filter wheel. Such filters are useful where acquisition time is not critical and the goal is to image a static scene. Kise et al. [3] developed a three-band multispectral imaging system using an interchangeable filter design, with two bands in the visible range (400–700 nm) and one in the near-infrared range (700–1000 nm). The interchangeable filters allowed the three bands to be selected. The prototype was applied to the task of poultry contamination detection.

Tunable Filters. Electronically tunable filters are built on different base technologies. One of the most common is the Liquid Crystal Tunable Filter (LCTF), which is characterized by low cost, high throughput and slow tuning time. The Acousto-Optical Tunable Filter (AOTF), on the other hand, is known for high cost, low throughput and faster tuning time. For a detailed description of the composition and operating principles of tunable filters, readers are referred to [4, 5].

Fiorentin et al. [6] developed a hyperspectral imaging system combining a CCD camera and an LCTF in the visible range with a resolution of 5 nm. The device was used to analyze the accelerated aging of color printing inks. The system is also applicable to monitoring the variation (especially fading) of color in artworks over time. The idea can be extended to other materials that may change under exposure to artificial light or daylight, such as document paper and ink.

Comelli et al. [7] developed a portable UV-fluorescence hyperspectral imaging system to analyze painted surfaces. The imaging setup comprises a UV-fluorescence source, an LCTF and a low-noise CCD sensor. A total of 33 spectral images were captured in the range 400–720 nm in 10 nm steps. The accuracy of the system was determined by comparison with the fluorescence spectra of three commercially available fluorescent samples measured with a bench-top spectrofluorometer. The system was tested on a 15th-century Renaissance painting to reveal latent information about the pigments used for finishing decorations at various times.

2.2 Chromatic Dispersion

In dispersion-based filtering, the objective is to decompose an incoming ray of light into its spectral constituents, as shown in Fig. 3. This can be achieved with optical devices such as diffraction gratings, prisms, grisms (a grating and prism combined) and interferometers. Below, we outline chromatic dispersion based on refraction optics and on interferometric optics.

Refraction Optics. Refraction is an intrinsic property of transparent, glass-like materials such as prisms: because the refractive index varies with wavelength, a prism separates an incoming light ray into its constituent colors. Du et al. [8] proposed a prism-based multispectral imaging system in the visible and infrared bands. The system used an occlusion mask, a triangular prism and a monochromatic camera to capture a multispectral image of a scene. Multispectral images were captured at high spectral resolution at the expense of spatial resolution. The occlusion mask also reduced the amount of light reaching the camera and thus decreased the signal-to-noise ratio (SNR). The prototype was evaluated on the tasks of human skin detection and physical material discrimination.

Gorman et al. [9] developed an Image Replicating Imaging Spectrometer (IRIS) that uses a Birefringent Spectral De-multiplexer (BSD) and off-the-shelf compound lenses to disperse incoming light into its spectral components. The system acquires spectral images in a single snapshot and can be configured to capture 8, 16 or 32 bands by increasing the number of BSD stages. Its field of view, however, is limited by the width of a prism used in the BSD. Because all bands are imaged onto a single 2D detector, high spectral resolution is achieved at the expense of spatial resolution.

Interferometric Optics. Interferometers can also serve as light dispersion devices through constructive and destructive interference. Burns et al. [10] developed a seven-channel multispectral imaging device using 50 nm bandwidth interference filters and a standard CCD camera. Mohan et al. proposed Agile Spectrum Imaging [11], in which a diffraction grating disperses the incoming rays and a geometric mask pattern allows only specific wavelengths to pass through and reach the sensor.

Descour et al. [12] presented a Computed Tomography Imaging Spectrometer (CTIS) design that uses three sinusoidal phase gratings to disperse light into multiple directions and diffraction orders. Treating the dispersed images as two-dimensional projections of the three-dimensional multispectral cube, the cube is reconstructed with the maximum-likelihood expectation-maximization (MLEM) algorithm under a Poisson likelihood. The prototype operates in the visible range (470–770 nm) and is able to reconstruct multispectral images of a simple target.
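The MLEM reconstruction step can be sketched generically. Here a system matrix `H` is assumed to map the flattened cube `f` to the detector measurements `g = H f` under Poisson noise, and the loop applies the standard multiplicative update \(f \leftarrow f \cdot H^\top (g / Hf) / H^\top \mathbf{1}\). This is a textbook MLEM iteration with a tiny random `H` for illustration, not the calibration or reconstruction code of [12].

```python
import numpy as np

def mlem(H, g, n_iter=200, eps=1e-12):
    """Generic MLEM for measurements g ~ Poisson(H @ f) with f >= 0.

    H : (m, n) non-negative system matrix (a hypothetical stand-in for the
        calibrated projection model of a CTIS).
    g : (m,) measured detector intensities.
    """
    f = np.ones(H.shape[1])            # strictly positive initial estimate
    sensitivity = H.sum(axis=0)        # H^T 1, per-unknown normaliser
    for _ in range(n_iter):
        projection = H @ f             # forward-project the current estimate
        ratio = g / np.maximum(projection, eps)
        # multiplicative update: keeps f non-negative at every iteration
        f *= (H.T @ ratio) / np.maximum(sensitivity, eps)
    return f

# Toy usage: a random overdetermined system with noise-free data.
rng = np.random.default_rng(1)
H = rng.random((60, 20))
f_true = rng.random(20)
g = H @ f_true
f_hat = mlem(H, g, n_iter=2000)
```

The multiplicative form is what makes MLEM attractive for this problem: intensities never become negative, which matches the physical constraint on spectral radiance.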

3 Hyperspectral Document Imaging

By carefully analyzing different technologies/methods for capturing hyperspectral images, we chose the tunable filter due to its easy integration with off-the-shelf machine vision cameras and programmatic control over the hardware (e.g. exposure time, spectral resolution, etc.). This section provides an overview of our hyperspectral document imaging setup and presents our approach for tackling various hyperspectral-imaging-specific challenges.

3.1 Acquisition Setup

Our system comprises a monochrome machine vision CCD camera with a base resolution of \(752 \times 480\) pixels. A focusing lens (1:1.4/16 mm) is mounted in front of the CCD camera. In order to capture images in discrete wavelength channels, a Liquid Crystal Tunable Filter (LCTF) is placed in front of the lens, as shown in Fig. 4. The filter can be tuned to any wavelength in the visible range (400–720 nm) with an average tuning time of 50 ms. The bandwidth of the filter, measured as the Full Width at Half Maximum (FWHM), varies with the center wavelength: it ranges from 7 nm at 400 nm to 20 nm at 720 nm, i.e. it is narrow at shorter wavelengths and wide at longer wavelengths, as shown in Fig. 5. Because the narrow pass-bands admit little light and the filter transmission is also lowest at short wavelengths (see Fig. 5), the first few bands have very low SNR. To compensate, the document is illuminated by two halogen lamps.
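To make the bandwidth part of the SNR argument concrete, the sketch below models each LCTF passband as a Gaussian whose FWHM is linearly interpolated between the 7 nm (at 400 nm) and 20 nm (at 720 nm) end points quoted above. The Gaussian shape, the linear interpolation and the equal peak transmittance of 0.1 are simplifying assumptions for illustration, not the filter's specification. Under them, the light collected from a spectrally flat source scales with peak transmittance times FWHM.

```python
import numpy as np

# FWHM = 2 * sqrt(2 ln 2) * sigma for a Gaussian profile.
SIGMA_PER_FWHM = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def fwhm_nm(center_nm):
    """Assumed linear FWHM model: 7 nm at 400 nm rising to 20 nm at 720 nm."""
    return np.interp(center_nm, [400.0, 720.0], [7.0, 20.0])

def passband(center_nm, peak_transmittance, grid_nm):
    """Hypothetical Gaussian transmittance curve centred on center_nm."""
    sigma = fwhm_nm(center_nm) * SIGMA_PER_FWHM
    return peak_transmittance * np.exp(-0.5 * ((grid_nm - center_nm) / sigma) ** 2)

step = 0.1
grid = np.arange(300.0, 820.0, step)     # fine wavelength grid in nm
flat_source = np.ones_like(grid)         # spectrally flat illumination

# Same (placeholder) peak transmittance in both bands isolates the bandwidth effect.
blue = np.sum(passband(400.0, 0.1, grid) * flat_source) * step
red = np.sum(passband(720.0, 0.1, grid) * flat_source) * step
print(red / blue)   # 20/7, about 2.86
```

In the real filter, the lower transmittance at short wavelengths (Fig. 5) compounds this bandwidth ratio, which is why the blue bands are the weakest.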

To achieve sufficient fidelity in the spectral dimension, we capture hyperspectral images comprising 33 bands in the visible range (400–720 nm in steps of 10 nm). The bands are captured sequentially, so the total capture time is the sum of the acquisition and filter tuning times over all bands. This amounts to 5 s, several times faster than a commercial system [13].
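The band schedule and the tuning overhead can be checked with simple arithmetic. The sketch assumes one tuning step per band at the quoted 50 ms average and ignores readout time; the 100 ms per-band exposure in the example is a hypothetical placeholder, since only the 5 s total is reported.

```python
import numpy as np

bands_nm = np.arange(400, 721, 10)   # 400, 410, ..., 720 nm
n_bands = bands_nm.size              # 33 bands

TUNING_S = 0.050                     # average LCTF tuning time per band (from the text)
total_tuning_s = n_bands * TUNING_S  # 1.65 s of the capture is filter tuning

def capture_time(exposures_s, tuning_s=TUNING_S):
    """Sequential capture: every band costs its exposure plus one filter retune.

    Readout and transfer overheads are ignored in this simplified model.
    """
    return float(np.sum(np.asarray(exposures_s, dtype=float) + tuning_s))

# Hypothetical example: a 100 ms exposure in every band.
print(capture_time(np.full(n_bands, 0.100)))  # about 4.95 s
```

Under these assumptions roughly a third of a 5 s capture is spent retuning the filter, so the exposure budget, not the filter, dominates the total.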

3.2 Compensation for Filter Transmittance

Typically, each band of a hyperspectral image is captured with a constant exposure time. Since the spectral bands are captured sequentially in our imaging setup, the exposure can be varied before each acquisition is triggered. The filter response in Fig. 5 shows that the amount of transmitted light depends on the wavelength: with a few minor exceptions, the longer the wavelength \(\lambda \), the higher the transmittance \(\tau (\lambda )\). The extremely small values of \(\tau (\lambda )\) for \(\lambda \in [400, 450]\) nm result in insufficient energy being captured in the bands corresponding to the blue region of the spectrum (see Fig. 1). To compensate for this effect, we model the exposure time \(t_e(\lambda )\) as inversely related to the wavelength, such that the shorter the wavelength, the longer the exposure time:

where \(\tau _\mathrm{max }\) is the maximum transmission of the filter at any wavelength (i.e. the transmission at \(\lambda =700\) nm for the filter used in this work, see Fig. 5), \(\bar{t}_e\) is the corresponding exposure time, and \(\alpha \) is a balancing coefficient. \(\bar{t}_e\) is the maximum exposure time for the band corresponding to \(\tau _\mathrm{max }\) that does not saturate the image. To keep every band unsaturated, we keep \(\alpha \) small (\(\alpha =8\) in this work) and determine a suitable value of \(\bar{t}_e\) experimentally.
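As an illustration, the sketch below uses one linear exposure model consistent with this description: it equals \(\bar{t}_e\) at \(\tau _\mathrm{max }\), grows as the transmittance falls, and is scaled by \(\alpha \), namely \(t_e(\lambda ) = \bar{t}_e\,(1 + \alpha (\tau _\mathrm{max } - \tau (\lambda ))/\tau _\mathrm{max })\). This specific formula is an assumption and not necessarily the exposure function used by the authors; the transmittance values and the 40 ms base exposure are also made up, and only \(\alpha = 8\) comes from the text.

```python
import numpy as np

def adaptive_exposure(tau, t_bar, alpha=8.0):
    """Hypothetical linear exposure model (an assumed form, see lead-in).

    tau   : filter transmittance per band, as fractions in [0, 1].
    t_bar : exposure (s) of the band with maximum transmittance, chosen so
            that this band does not saturate.
    alpha : balancing coefficient (8 in the text).
    Returns per-band exposures that lengthen as transmittance falls.
    """
    tau = np.asarray(tau, dtype=float)
    tau_max = tau.max()
    return t_bar * (1.0 + alpha * (tau_max - tau) / tau_max)

# Made-up transmittance curve: low in the blue, peaking in the red.
tau = np.array([0.02, 0.05, 0.15, 0.30, 0.45, 0.50])
t_e = adaptive_exposure(tau, t_bar=0.040)
```

With this form the longest exposure is bounded by \(\bar{t}_e(1+\alpha )\), i.e. nine times the base exposure for \(\alpha = 8\), which is one way a small \(\alpha \) keeps the dimmest bands from being over-exposed.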

3.3 Compensation for Non-Uniform Illumination Intensity

In hyperspectral document imaging, the use of a nearby illumination source induces a scalar field over the target image, i.e. the illumination varies non-uniformly across space. As a result, pixels near the center of the image are brighter (have higher energy) than pixels farther away towards the edges. This effect can be seen in Fig. 1. Let \(\mathbf {p}(x,y)\) be the spectral response at the image location \((x,y)\). It can reasonably be assumed that the non-uniformity in illumination is a function of the pixel coordinates \((x,y)\) only and does not depend on the wavelength \(\lambda \), so that it scales the whole vector \(\mathbf {p}(x,y)\) by a single factor. This assumption holds at each \((x,y)\) as long as \(\mathbf {p}(x,y)\) is not saturated. Hence, normalizing the spectral response at each pixel to a unit vector,

\[ \hat{\mathbf {p}}(x,y) = \frac{\mathbf {p}(x,y)}{\Vert \mathbf {p}(x,y)\Vert _2}, \qquad (2) \]

largely compensates for the effect of non-uniform illumination intensity.
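A minimal sketch of the per-pixel normalization, assuming the cube is a NumPy array indexed `[y, x, band]` and the \(\ell _2\) norm is used. The synthetic check applies a hypothetical radial illumination falloff to a single spectrum and shows that the falloff cancels; the small `eps` guards against division by zero on dark pixels.

```python
import numpy as np

def normalize_spectra(cube, eps=1e-12):
    """Scale every pixel spectrum to unit l2 norm.

    A wavelength-independent illumination factor s(x, y) multiplies the whole
    spectrum p(x, y), so it cancels in p / ||p||.
    """
    norms = np.linalg.norm(cube, axis=2, keepdims=True)
    return cube / np.maximum(norms, eps)

# Synthetic check: one spectrum under a bright centre and dim edges.
rng = np.random.default_rng(0)
spectrum = rng.random(33) + 0.1
yy, xx = np.mgrid[0:40, 0:60]
shading = np.exp(-((yy - 20) ** 2 + (xx - 30) ** 2) / 800.0)  # hypothetical falloff
cube = shading[..., None] * spectrum
normalized = normalize_spectra(cube)
```

After normalization every pixel of the synthetic cube holds the same unit-length spectrum, so a clustering step downstream sees spectral shape rather than brightness.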

3.4 Compensation for Illuminant’s Non-Uniform Spectral Power Distribution

Assuming Lambertian surface reflectance, the hyperspectral image of a document can be modeled as follows. The formation of an \(N\)-channel hyperspectral image \(\mathcal {I}(x,y,\lambda ),\lambda =1,2,...,N\) of a document depends mainly on four factors: the illuminant spectral power distribution \(\mathcal {L}(\lambda )\), the scene spectral reflectance \(\mathcal {S}(x,y,\lambda )\), the filter transmittance \(\tau (\lambda )\), and the sensor spectral sensitivity \(\mathcal {C}(\lambda )\). Hence, the image intensity of a particular spectral band \(\lambda \) can be calculated as

\[ \mathcal {I}(x,y,\lambda ) = \int _{\lambda _{\min }}^{\lambda _{\max }} \mathcal {L}(\lambda )\, \mathcal {S}(x,y,\lambda )\, \tau (\lambda )\, \mathcal {C}(\lambda )\, d\lambda , \qquad (3) \]

where \(\lambda _{\min }\) and \(\lambda _{\max }\) define the bandwidth of the spectral band \(\lambda \).
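Numerically, the band integral can be approximated by a Riemann sum over a fine wavelength grid. The sketch below does this for a single pixel; every spectral curve in it (illuminant, reflectance, transmittance, sensitivity) is a made-up placeholder, chosen only to show how the four factors combine multiplicatively inside the band.

```python
import numpy as np

def band_intensity(grid_nm, L, S, tau, C, lam_min, lam_max):
    """Riemann-sum approximation of the band integral of L * S * tau * C."""
    step = grid_nm[1] - grid_nm[0]                  # uniform grid spacing
    inside = (grid_nm >= lam_min) & (grid_nm < lam_max)
    return float(np.sum((L * S * tau * C)[inside]) * step)

grid = np.arange(380.0, 740.0, 0.5)                 # wavelength grid in nm
L = np.linspace(0.3, 1.0, grid.size)                # placeholder: rising, halogen-like SPD
S = np.full(grid.size, 0.8)                         # placeholder: flat grey reflectance
tau = np.linspace(0.05, 0.5, grid.size)             # placeholder: filter transmittance
C = np.ones(grid.size)                              # placeholder: flat sensor sensitivity

# Intensity of a hypothetical 10 nm wide band centred on 550 nm.
I_550 = band_intensity(grid, L, S, tau, C, 545.0, 555.0)
```

Because the model is a product, any factor that rises with wavelength (here both the placeholder illuminant and transmittance) biases the captured intensities towards the red bands even for a spectrally flat surface.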

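Most illumination sources do not have a flat power distribution across wavelengths (see Fig. 6 for the spectral power distributions of some common illuminants). To compensate for the non-uniform spectral power distribution of the illuminant, color constancy methods are applied. Van de Weijer et al. [14] proposed a unified formulation for different color constancy algorithms, in which varying the parameters leads to different estimates \(\hat{\mathcal {L}}^{n,p,\sigma }\) of the illuminant spectrum:

\[ \left( \iint \left| \frac{\partial ^{n} \mathcal {I}_\sigma (x,y)}{\partial \mathbf {x}^{n}} \right| ^{p} dx\, dy \right) ^{1/p} = \kappa \, \hat{\mathcal {L}}^{n,p,\sigma }, \qquad (4) \]

where \(n\) is the order of the derivative, \(p\) is the Minkowski norm and \(\sigma \) is the scale of the Gaussian filter. \(\mathcal {I}_\sigma (x,y) = \mathcal {I}(x,y) *G(x,y;\sigma )\) is the Gaussian-filtered image, and \(\kappa \) is a constant chosen such that the estimated illuminant spectrum has unit \(\ell _2\)-norm. The illumination-corrected hyperspectral image is obtained by a simplified linear transformation

\[ \hat{\mathcal {I}}(x,y) = \mathcal {M}\, \mathcal {I}(x,y), \qquad (5) \]

where \(\mathcal {M}\) is a diagonal matrix such that

\[ \mathcal {M} = \mathrm {diag}\left( \frac{1}{\hat{\mathcal {L}}(1)}, \frac{1}{\hat{\mathcal {L}}(2)}, \ldots , \frac{1}{\hat{\mathcal {L}}(N)} \right) , \qquad (6) \]

with \(\hat{\mathcal {L}}(\lambda )\) denoting the entry of the estimated illuminant spectrum for band \(\lambda \).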

Color constancy can be achieved by making assumptions about the first- or higher-order statistics of the image. There is no strict rule as to which assumption is best; rather, it depends mainly on which assumption suits the given image content. The following is a brief overview of the assumptions made by different color constancy algorithms.

Gray World (GW) algorithm [15] assumes that the average image spectrum is gray, so that the illuminant spectrum can be estimated as the deviation of the average spectrum from gray.

Gray Edge (GE) algorithm [14] assumes that the mean spectrum of the edges is gray, so that the illuminant spectrum can be estimated as the deviation of the mean edge spectrum from gray.

White Point (WP) algorithm [16] assumes the presence of a white patch in the scene, such that the maximum value in each channel is the reflection of the illuminant from that white patch.

Shades-of-Gray (SoG) algorithm [17] is based on the assumption that the \(\ell _p\)-norm of a scene is a shade of gray.

General Gray World (gGW) algorithm [15] is based on the assumption that the \(\ell _p\)-norm of a scene after smoothing is gray.

Based on the assumptions behind each of these algorithms, the White Point algorithm appears to be the most appropriate for estimating the illuminant spectral power distribution from document images. Since documents are usually printed on white paper, the WP assumption that a white patch is present in the image is mostly satisfied.
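The unified framework can be sketched for a hyperspectral cube by treating each band like a color channel: `n` selects pixel values (`n = 0`) or gradient magnitudes (`n = 1`), `p` the Minkowski norm (`p = np.inf` gives the per-band maximum), and `sigma` optional Gaussian pre-smoothing. In this parameterization, \((0,1,0)\) is Gray World, \((0,\infty ,0)\) White Point, \((0,p,0)\) Shades of Gray, \((0,p,\sigma )\) general Gray World and \((1,p,\sigma )\) Gray Edge. This is a simplified NumPy re-implementation written for illustration (finite-difference gradients, zero-padded convolution), not the reference code of [14]; the scene and illuminant are synthetic.

```python
import numpy as np

def _gaussian_kernel(sigma):
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def _smooth(cube, sigma):
    """Separable Gaussian blur of every band with zero padding at the borders.

    Assumes both image dimensions are longer than the kernel.
    """
    if sigma <= 0:
        return cube
    k = _gaussian_kernel(sigma)

    def conv(v):
        return np.convolve(v, k, mode="same")

    out = np.apply_along_axis(conv, 0, cube)   # blur down the rows (y)
    return np.apply_along_axis(conv, 1, out)   # blur along the columns (x)

def estimate_illuminant(cube, n=0, p=1.0, sigma=0.0):
    """Minkowski-norm illuminant estimate e^{n,p,sigma}, scaled to unit l2 norm."""
    f = _smooth(cube.astype(float), sigma)
    if n == 1:
        gy, gx = np.gradient(f, axis=(0, 1))   # finite-difference spatial derivatives
        f = np.sqrt(gx ** 2 + gy ** 2)
    elif n != 0:
        raise ValueError("only n = 0 or n = 1 is implemented in this sketch")
    f = np.abs(f).reshape(-1, cube.shape[2])  # one row per pixel, one column per band
    if np.isinf(p):
        e = f.max(axis=0)                      # p = inf: per-band maximum
    else:
        e = np.mean(f ** p, axis=0) ** (1.0 / p)
    return e / np.linalg.norm(e)

def correct(cube, e):
    """Diagonal correction: divide every band by its estimated illuminant value."""
    return cube / e

# Usage on a synthetic, spectrally flat (grey) scene under a coloured illuminant.
rng = np.random.default_rng(0)
grey_scene = rng.uniform(0.2, 1.0, size=(30, 40, 1))
illuminant = np.linspace(0.2, 1.0, 33)          # hypothetical SPD
cube = grey_scene * illuminant
settings = {"GW": (0, 1, 0), "WP": (0, np.inf, 0), "SoG": (0, 6, 0),
            "gGW": (0, 6, 1), "GE": (1, 6, 1)}
estimates = {name: estimate_illuminant(cube, *s) for name, s in settings.items()}
balanced = correct(cube, estimates["WP"])
```

For a spectrally flat scene every setting recovers the illuminant direction exactly, so the corrected cube becomes flat across bands. On real documents the settings disagree, and the WP setting relies on the white paper supplying the per-band maximum.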

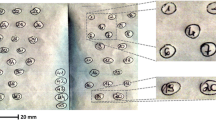

4 Application to Ink Mismatch Detection

As a sample application of hyperspectral imaging in document analysis, we chose ink mismatch detection (please refer to [1] for more details). In this paper, we specifically address the challenges associated with hyperspectral imaging of documents, with the main focus on compensating for the effects of spatial and spectral non-uniformity of the illumination. We therefore perform additional experiments to observe the effects of illumination normalization using the proposed compensation techniques. Using the imaging setup described in Sect. 3.1, we collected a database of 70 hyperspectral images of a handwritten note in 10 different inks by 7 subjects. All subjects were instructed to write the same sentence on white paper, once with each ink. The pens included 5 varieties of blue ink and 5 varieties of black ink. It was ensured that the pens came from different manufacturers while the inks still appeared visually similar. We then produced mixed writing ink images from the single-ink notes by joining equally sized image portions of two inks written by the same subject, so that the two inks in question appear in roughly equal proportions.
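The construction of a mixed-ink test image can be sketched as follows, assuming two co-registered single-ink cubes of the same size from one writer and a split into left and right halves. The split direction and the function name `join_inks` are hypothetical; the text only states that equally sized portions were joined. The returned label map records which ink each column came from, which is what the ground truth for the mismatch evaluation needs.

```python
import numpy as np

def join_inks(cube_a, cube_b):
    """Build a mixed-ink cube from two single-ink cubes of identical shape.

    The left half of the columns comes from ink A and the right half from
    ink B, so both inks cover (roughly) the same image area.
    Returns the mixed cube and a label map (1 = ink A, 2 = ink B).
    """
    if cube_a.shape != cube_b.shape:
        raise ValueError("single-ink cubes must have the same shape")
    half = cube_a.shape[1] // 2
    mixed = np.concatenate([cube_a[:, :half], cube_b[:, half:]], axis=1)
    labels = np.ones(cube_a.shape[:2], dtype=np.uint8)
    labels[:, half:] = 2
    return mixed, labels

# Usage with dummy cubes: ink A all zeros, ink B all ones.
a = np.zeros((10, 21, 33))
b = np.ones((10, 21, 33))
mixed, labels = join_inks(a, b)
```

Combined with a binarized ink mask, the label map gives the per-pixel ground truth against which a two-cluster segmentation can be scored.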

The pre-processed mixed-ink images were first binarized using an adaptive thresholding method [18] and then fed to the \(k\)-means clustering algorithm with a fixed value of \(k=2\). Finally, the ink mismatch detection accuracy was computed as

\[ \text{Accuracy} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives} + \text{False Negatives}}. \qquad (7) \]

The mismatch detection accuracy is averaged over seven samples (one per subject) for each ink combination C\(_{ij}\). Note that under this evaluation metric the accuracy of a random guess in a two-class problem is 1/3, unlike common classification accuracy metrics, where a random guess scores 1/2. This is because our metric additionally penalizes false negatives, which are critical to account for in our problem.
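The clustering and scoring steps can be sketched as below. The scoring function assumes the metric is TP / (TP + FP + FN) over ink pixels with one ink as the positive class, which is consistent with a random two-class guess scoring 1/3; because k-means numbers its clusters arbitrarily, both cluster-to-ink assignments are tried. The k-means routine is a plain Lloyd's-algorithm implementation on unit-normalized spectra written for this illustration, not the authors' code, and the two ink spectra are synthetic.

```python
import numpy as np

def kmeans(X, k=2, n_iter=50, seed=0):
    """Plain Lloyd's k-means with farthest-point initialisation.

    Returns one cluster index per row of X.
    """
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # next centre: the point farthest from all centres chosen so far
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assign = dist.argmin(axis=1)
        for j in range(k):
            if np.any(assign == j):        # keep a centre unchanged if its cluster empties
                centers[j] = X[assign == j].mean(axis=0)
    return assign

def mismatch_accuracy(pred, truth):
    """TP / (TP + FP + FN) over ink pixels, with ink 1 as the positive class.

    pred  : cluster index (0 or 1) per ink pixel.
    truth : ground-truth ink label (1 or 2) per ink pixel.
    Both cluster-to-ink assignments are scored and the better one returned.
    """
    positive = truth == 1
    best = 0.0
    for cluster in (0, 1):
        detected = pred == cluster
        tp = np.sum(detected & positive)
        fp = np.sum(detected & ~positive)
        fn = np.sum(~detected & positive)
        best = max(best, tp / (tp + fp + fn))
    return float(best)

# Synthetic example: two similar ink spectra (hypothetical), 200 pixels each.
rng = np.random.default_rng(0)
wl = np.linspace(0.0, 1.0, 33)
ink1 = 0.5 + 0.1 * wl
ink2 = 0.5 + 0.1 * wl ** 2
spectra = np.vstack([ink1 + 0.002 * rng.standard_normal((200, 33)),
                     ink2 + 0.002 * rng.standard_normal((200, 33))])
spectra /= np.linalg.norm(spectra, axis=1, keepdims=True)  # unit-norm spectra
truth = np.repeat([1, 2], 200)
pred = kmeans(spectra, k=2)
print(mismatch_accuracy(pred, truth))
print(mismatch_accuracy(np.tile([0, 1], 200), truth))  # 1/3: labels unrelated to ink
```

The second call illustrates the 1/3 baseline: a labeling that ignores the ink splits each ink evenly, giving equal counts of true positives, false positives and false negatives.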

As discussed in Sect. 3.3, spatially varying illumination is undesirable because it modulates the spectral responses of the image pixels. To undo the effect of non-uniform illumination, the images are normalized using Eq. 2. Figure 7 presents the mismatch detection accuracies on raw and normalized hyperspectral images. The improvement in correctly segmenting mismatched inks is evident for the majority of both the blue and the black ink combinations.

Fig. 7. Comparison of ink mismatch detection accuracies between raw and normalized (using Eq. 2) images. Note that the normalization significantly improves accuracy.

Fig. 8. Comparison of mismatch detection accuracies using constant or adaptive (using Eq. 1) exposure. Observe that the adaptive exposure strategy results in a more accurate discrimination between inks of the same color.

Fig. 9. Comparison of ink mismatch detection accuracies without and with color constancy (cc, using Eq. 5). Note that normalization by color constancy does not significantly improve mismatch detection accuracy.

In Sect. 3.2, an adaptive exposure scheme was proposed to compensate for the varying filter transmittance. The adaptive exposure results in a higher SNR for bands with a low transmittance. We compare the use of adaptive exposure with constant exposure for hyperspectral ink mismatch detection. It can be noticed from Fig. 8 that the use of adaptive exposure either slightly improves the accuracy or remains close to the performance achieved by constant exposure.

We now compare the ink mismatch detection accuracy with and without compensation for the illuminant's spectral non-uniformity (color constancy), as outlined in Sect. 3.4. Figure 9 shows only a slight improvement for some of the ink combinations after applying color constancy.

The efficacy of the proposed hyperspectral document imaging system can be visually appreciated by a qualitative analysis of the example images. Figure 10 shows two example images of blue and black inks. The images are made by joining samples of ink 1 and ink 2 for both blue and black inks, separately. The original images are shown in RGB for clarity. The ground truth images are labeled in different colors to identify the constituent inks in the mixture.

Observe that the raw HS images are yellowish due to the strong illuminant bias as well as the low filter transmittance at wavelengths in the blue range of the spectrum. In addition, the spatial non-uniformity of the illumination can be observed from the center to the edges. The mismatch detection results on raw images indicate that the clustering is driven by the illumination intensity instead of the ink color. After normalization of the raw HS images, the effect of illumination is largely suppressed, and the mismatch detection result closely follows the ground truth.

Fig. 10. An illustration of ink mismatch detection on blue-ink and black-ink handwritten notes acquired using adaptive exposure. The ground-truth ink pixels are labeled in pseudo colors (red: ink 1, green: ink 2). The spatially non-uniform illumination pattern can be observed in the raw HS images, with high energy in the center and low energy towards the edges. Normalization removes the illumination bias and greatly improves segmentation accuracy. Color constancy improves the visualization of HS images while yielding comparable accuracy.

Finally, we observe the effect of color constancy on ink mismatch detection. The mismatch detection result is largely unaffected, except for a few noisy pixels that are misidentified as belonging to the other ink. A clear benefit of color constancy is that it greatly improves the visual appearance of the images by removing the illumination bias.

5 Conclusion and Future Work

Hyperspectral imaging of documents has numerous potential applications in document analysis. The spatial non-uniformity of the illumination source was compensated to a great extent by the proposed normalization strategy. The variable filter transmission was compensated for by a linear adaptive exposure function; further improvements could be expected from non-linear adaptive exposure functions. We also explored color constancy for illuminant spectral normalization, which greatly improved the visualization of HS images. Further research is needed to overcome the limitations of current hardware with respect to illumination variation and variable filter transmission.

References

[1] Khan, Z., Shafait, F., Mian, A.: Hyperspectral imaging for ink mismatch detection. In: Proceedings of the International Conference on Document Analysis and Recognition (ICDAR) (2013)

[2] Shippert, P.: Introduction to hyperspectral image analysis. Online J. Space Commun. 3, 1–13 (2003)

[3] Kise, M., Park, B., Heitschmidt, G.W., Lawrence, K.C., Windham, W.R.: Multispectral imaging system with interchangeable filter design. Comput. Electron. Agric. 72(2), 61–68 (2010)

[4] Gat, N.: Imaging spectroscopy using tunable filters: a review. In: AeroSense 2000, International Society for Optics and Photonics, pp. 50–64 (2000)

[5] Poger, S., Angelopoulou, E.: Multispectral sensors in computer vision. Technical Report CS-2001-3, Stevens Institute of Technology (2001)

[6] Fiorentin, P., Pedrotti, E., Scroccaro, A.: A multispectral imaging device for monitoring of colour in art works. In: Proceedings of the International Instrumentation and Measurement Technology Conference (I2MTC), pp. 356–360. IEEE (2009)

[7] Comelli, D., Valentini, G., Nevin, A., Farina, A., Toniolo, L., Cubeddu, R.: A portable UV-fluorescence multispectral imaging system for the analysis of painted surfaces. Rev. Sci. Instrum. 79(8), 086112 (2008)

[8] Du, H., Tong, X., Cao, X., Lin, S.: A prism-based system for multispectral video acquisition. In: Proceedings of the International Conference on Computer Vision (ICCV), pp. 175–182 (2009)

[9] Gorman, A., Fletcher-Holmes, D.W., Harvey, A.R., et al.: Generalization of the Lyot filter and its application to snapshot spectral imaging. Opt. Express 18(6), 5602–5608 (2010)

[10] Burns, P.D., Berns, R.S.: Analysis of multispectral image capture. In: Proceedings of the 4th IS&T/SID Color Imaging Conference, pp. 19–22 (1996)

[11] Mohan, A., Raskar, R., Tumblin, J.: Agile spectrum imaging: programmable wavelength modulation for cameras and projectors. Comput. Graph. Forum 27(2), 709–717 (2008)

[12] Descour, M., Dereniak, E.: Computed-tomography imaging spectrometer: experimental calibration and reconstruction results. Appl. Opt. 34(22), 4817–4826 (1995)

[13] Joo Kim, S., Deng, F., Brown, M.S.: Visual enhancement of old documents with hyperspectral imaging. Pattern Recogn. 44(7), 1461–1469 (2011)

[14] Van De Weijer, J., Gevers, T., Gijsenij, A.: Edge-based color constancy. IEEE Trans. Image Process. 16(9), 2207–2214 (2007)

[15] Buchsbaum, G.: A spatial processor model for object colour perception. J. Franklin Inst. 310(1), 1–26 (1980)

[16] Land, E.: The retinex theory of color vision. Science Center, Harvard University (1974)

[17] Finlayson, G., Trezzi, E.: Shades of gray and colour constancy. In: Twelfth Color Imaging Conference: Color Science and Engineering Systems, Technologies, and Applications, pp. 37–41 (2004)

[18] Shafait, F., Keysers, D., Breuel, T.M.: Efficient implementation of local adaptive thresholding techniques using integral images. In: Document Recognition and Retrieval XV, pp. 681510–681510-6 (2008)

Acknowledgment

This research work was partially funded by the ARC Grant DP110102399 and the UWA Grant 00609 10300067.

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Khan, Z., Shafait, F., Mian, A. (2014). Hyperspectral Document Imaging: Challenges and Perspectives. In: Iwamura, M., Shafait, F. (eds) Camera-Based Document Analysis and Recognition. CBDAR 2013. Lecture Notes in Computer Science, vol. 8357. Springer, Cham. https://doi.org/10.1007/978-3-319-05167-3_12

DOI: https://doi.org/10.1007/978-3-319-05167-3_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-05166-6

Online ISBN: 978-3-319-05167-3
