Abstract

Uncertainty in the behavior of quantities of interest causes risk. Therefore statistics is used to estimate these quantities and assess their variability. Classical statistical inference does not allow to incorporate expert knowledge or to assess the influence of modeling assumptions on the resulting estimates. This is however possible when following a Bayesian approach which therefore has gained increasing attention in recent years. The advantage over a classical approach is that the uncertainty in quantities of interest can be quantified through the posterior distribution. We first introduce the Bayesian approach and illustrate its use in simple examples, including linear regression models. For more complex statistical models Markov Chain Monte Carlo methods are needed to obtain an approximate sample from the posterior distribution. Due to the increase in computing power over the last years such methods become more and more attractive for solving complex problems which are intractable using classical statistics, for instance spam e-mail filtering or the analysis of gene expression data. We illustrate why these methods work and introduce two most commonly used algorithms: the Gibbs sampler and Metropolis Hastings algorithms. Both methods are derived and applied to statistical models useful in risk analysis. In particular a Gibbs sampler is developed for a change point detection in yearly counts of events and for a regression model with time dependence, while a Metropolis Hastings algorithm is derived for modeling claim frequencies in an insurance context.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

Mathematics Subject Classification (2010)

FormalPara The Facts-

Risk is regarded as induced by the uncertainty in the behavior of quantities of interest. Therefore this random behavior has to be modeled using probability models and characteristics such as expected value and variance to be estimated.

-

An introduction to Bayesian statistics is given, which—in contrast to classical statistics—can accommodate prior knowledge about the risk parameters under consideration, in particular using Bayes’ famous theorem. Especially expert knowledge can be incorporated.

-

Bayesian inference is based on the posterior distribution of the risk parameters which summarizes the knowledge about the risk quantity after the data is observed. Common Markov Chain Monte Carlo methods for deriving the Bayesian posterior distribution are discussed, namely the Gibbs sampler and Metropolis Hastings algorithms.

-

Concepts are illustrated by examples from insurance, health care, mining and agriculture involving the risk quantities number of claims, complication rate of new medical treatment, number of coal-mining disasters and crop yield, respectively.

1 The Bayesian Approach

In this chapter we are interested in the study of quantities which are subject to uncertainty. In this context we understand risk as a process which is induced by uncertainty or randomness in the behavior of these quantities. To be more precise we will consider among other the following risk quantities: yearly crop rates, number of complications following a new medical treatment and the annual number of claims for a car insurance company. For the statistical risk analyst these quantities are random variables for which a probability distribution has to be chosen which depends on unknown population parameters and fits the observed data well. These population parameters determine the expectation and variance of the risk quantity. Classical—usually called frequentist—statistics uses solely the observed data to estimate the unknown population parameters. This is a sensible approach, however, the randomness in the observations and the limited number of observations available can lead to errors in subsequent inference. We assume that the reader has basic knowledge in probability and statistics; for convenience a glossary is provided in Appendix. Three illustrative examples are presented after this first short introduction.

In the simplest possible setting, we assume that observations come from a population whose members follow a specific probability distribution which depends on a single parameter θ. Given that we know this particular underlying distribution, we are interested in estimating θ based on the observed data. We denote such an estimate by \(\hat {\theta}\). For example, if θ is the expectation of the distribution, we can estimate it by the average of all observations, that is

where n is the number of observations with values x 1,…,x n .

In practice, the estimate \(\hat{\theta}\) will however pretty much never equal the true parameter θ, that is, in general \(\hat{\theta}\neq \theta\). Moreover, we might obtain an estimated value \(\hat{\theta}\) which is unbelievable because it maybe lies outside a range where we expected the parameter to be in. If we however still believe that our probability model for the observed data is correct, we are in the dilemma that we have to decide between our belief in the data model and our prior belief in the parameter.

Bayesian statistics solves this problem by combining prior expert knowledge with information obtained from the observations. From now on, let θ=(θ 1,…,θ k )′∈Θ be the unknown parameter of interest belonging to the parameter space Θ, where usually \(\Theta\subset\mathbb{R}^{k}\). Then we a priori assign a probability to each parameter value θ according to the prior expert knowledge available, that is, we treat the population parameter as random variable and not as a fixed unknown quantity. Statistically speaking, this means that we choose an appropriate prior distribution with density or probability function p(θ), which summarizes the knowledge about the parameter of interest. We now observe a random sample x=(x 1,…,x n )′, which are realizations of random variables X=(X 1,…,X n )′ with true probability density f(⋅|θ). For example x i is the observed crop yield in plot i of the random crop yield X i . Considering f(x|θ) as a function of the parameter θ for given observations x yields the likelihood denoted as

which summarizes the available information in the data about the parameter.

Note that in frequentist statistics, parameters are often estimated by so-called maximum likelihood estimation which means finding the parameter values \(\hat{\boldsymbol{\theta}}\) that maximize (1.2), that is, finding the value of θ which makes the observations “most likely”. For example, the quantity in (1.1) is the maximum likelihood estimate of the expectation μ of a normal distribution (see Illustration 1.1 below).

In Bayesian statistics, we however would like to incorporate prior knowledge about the parameter θ, that is the prior distribution, into the estimation procedure. Since the observations x contain information about θ, we update our knowledge about θ by considering the conditional distribution of θ given observations x. This distribution is called the posterior distribution and can be calculated by Bayes’ theorem as

where

is the unconditional density function of the observations x, called the marginal distribution. It does not depend on θ, in other words, it is only a normalizing constant with respect to θ that ensures that the posterior distribution is a proper density expression integrating to 1. Hence it holds that

that is, the posterior is proportional to the product of the likelihood and the prior. The computation of the posterior distribution however often is rather intricate so that so-called Markov Chain Monte Carlo methods are needed as discussed in Sect. 2.

A standard reference on Bayesian inference is the book by Berger [8], more recent references are Lee [5], Gelman et al. [15], Bolstad [1] and Hoff [20]. To illustrate the increasing importance of Bayesian methods in statistics, Fig. 1 shows how often the terms “classical statistics” and “Bayesian statistics” have occurred in books since 1900.

Ngram of “classical statistics” (gray) and “Bayesian statistics” (black) created using Google Books Ngram Viewer available at http://books.google.com/ngrams

Three illustrative examples for different types of data (continuous, binary, count) are given below. These represent common types of risk quantities.

Illustration 1.1

(Crop Yields)

Too small crop yields constitute a major risk to farmers. A reliable estimate of the expected crop yield and its variability therefore is needed for careful business planning. For this purpose, an agronomist studies the behavior of the random annual crop yields X 1,…,X n of n acres of the same size and with similar soil and growth conditions. From her experience and discussions with farmers she assumes that the crop yields are normally distributed with common mean θ and (known) variance σ 2 and independent of each other, that is X i ∼N(θ,σ 2), i=1,…,n. Then the likelihood (1.2) is

where \(\overline{x}\) is the empirical mean as defined in (1.1). It is a unimodal function in θ with mode given by \(\overline{x}\).

From previous years the agronomist has some prior knowledge about the likely values of the expected crop yield θ and therefore specifies a prior distribution as normal with known mean μ and known variance τ 2. Having observed the crop yields x 1,…,x n , she therefore calculates the posterior density (1.3) using (1.5) as

where

From (1.6) it follows that the posterior distribution is again normal but now with mean μ 1 and variance \(\tau_{1}^{2}\). To illustrate these concepts further, let us assume the agronomist expects an average yield of 15 per acre, that is, she sets the prior mean μ=15. She is however uncertain about her guess and therefore allows for a large uncertainty by choosing the prior variance τ 2 to be 3. After harvesting n=5 acres, the observed average yield was \(\overline {x}=11\) per acre. The seed manufacturer claims that the variability under normal growing conditions is σ 2=5 per acre. Therefore the posterior distribution has posterior moments \(\tau _{1}^{2}=0.75\) and μ 1=2. This is illustrated in Fig. 2.

The expression of the posterior expectation μ 1 in (1.7) can conveniently be rewritten as

where \(w:=w(\sigma^{2},\tau^{2},n):=\frac{\tau^{2}}{\tau^{2}+\sigma^{2}/n}\) is a weight varying from 0 to 1. Expression (1.8) shows that the posterior mean is the weighted average of the empirical mean \(\overline{x}\) and the prior mean μ. As the uncertainty in the prior knowledge, reflected by the prior variance τ 2, increases, the weight (1−w) for the prior mean decreases and the posterior mean is more heavily pulled towards the empirical mean. Moreover, the belief in the observed data as measured by the weight w also increases when the number of observations n, the number of acres under consideration, is increased. In the example it is w=0.75. This means that there is already a quite strong belief in the data.

Illustration 1.2

(Complication Rate in Medical Studies)

In a medical study, the researcher is interested in the rate of complications θ of n subjects. Clearly, the risk of the researcher is that this rate θ is higher than a small but admissible limit rate. At the end of the study, for each subject it is known whether he or she developed a complication or not. The event of complication occurrence can be modeled by a binary random variable X i which is either 1 if the patient i∈{1,…,n} develops a complication or 0 otherwise. Because the researcher developed a completely new treatment, no prior knowledge about the success probability θ of the Bernoulli distribution representing the complication probability is available. Hence, she simply assumes equal likelihood for each parameter value θ, in other words, a prior density p(θ)=1 corresponding to the uniform distribution. For observations x 1,…,x n the posterior distribution (1.3) for θ therefore simplifies to the likelihood (1.2):

If, however, prior information based on studies of similar treatments is available, the researcher can specify a more informative prior distribution. For a parameter in the range of 0 to 1, the Beta distribution with parameters α>0 and β>0 is a reasonable and quite flexible choice. Its density is given by

with normalizing constant \(B(\alpha,\beta)=\int_{0}^{1} \theta^{\alpha -1}(1-\theta)^{\beta-1} d\theta\). Furthermore, its mean and variance are E(θ)=α/(α+β) and \(\operatorname{Var}(\theta )=\alpha\beta/((\alpha+\beta)^{2}(\alpha+\beta+1))\), respectively, and for α=β=1 the Beta distribution corresponds to the uniform distribution on [0,1]. For example, if the researcher expects a 20 % complication rate with 0.1 standard error, then she solves E(θ)=0.2 and \(\operatorname {Var}(\theta)=0.1^{2}\) for α and β and obtains α=3 and β=12.

It can be shown that the posterior distribution is again Beta with parameters \(\alpha_{1}=\sum_{i=1}^{n} x_{i}+\alpha\) and \(\beta_{1}=n-\sum_{i=1}^{n} x_{i}+\beta\). The posterior mean then can be written similarly to (1.8) as a weighted average of the sample mean and the prior mean:

where \(w:=w(\alpha,\beta,n):=\frac{n}{n+\alpha+\beta}\). As before, belief in the observed data increases as the number of subjects n increases.

Illustration 1.3

(Claim Numbers in Car Insurance)

In car insurance, a good estimate of the expected number of claims is essential for adequate policy pricing. An insurance company here faces a two-way risk. Overestimation of the expected number of claims means too high premiums and therefore a loss of clients. Expecting too few claims however poses the risk of large losses in the portfolio. Assuming that an insurance company has a portfolio of n homogeneous policy holders, a common choice for the distribution of the number of claims X i ,i=1,…,n, is the Poisson distribution with mean and variance parameter θ and probability mass function

Even if the portfolio consists of rather homogeneous policy holders, there is significant uncertainty regarding the expected number of claims θ because it also depends on unobservable quantities such as risk affinity or exogenous risks like extreme weather events.

The insurance company decides to choose a Gamma prior distribution with parameters α>0 and β>0, mean α/β, and density

where Γ(α) is the Gamma function \(\Gamma(\alpha)=\int_{0}^{\infty}\theta^{\alpha-1} e^{-\theta} d\theta\).

The posterior distribution (1.3) based on observations x 1,…,x n from a previous year for example, is then obtained as follows:

which is again a Gamma distribution with parameters \(\alpha_{1}=\sum_{i=1}^{n} x_{i}+\alpha\) and β 1=n+β. As before, the posterior mean can be decomposed into a weighted average of the empirical and prior mean. Such a convenient decomposition is however not always possible.

This mixture of Poisson and Gamma densities has another interesting interpretation: if the insurance company is interested in the claim number probabilities given an unknown parameter θ, Bayes’ theorem can be “inverted” to compute the marginal density as f(x i )=f(x i |θ)p(θ)/p(θ|x i ) which results in a negative binomial distribution with the same mean as the Poisson distribution but with a higher variance due to the uncertainty in the unknown parameter.

1.1 From Non-informativeness to Conjugacy

Illustrations 1.1 and 1.2 also demonstrate a general problem of Bayesian statistics, namely the question: how do we choose an appropriate prior distribution? In certain applications, this choice might be evident but in general this is a non-trivial question and should be as objective as possible in order to not influence the results in an unwanted way. If for example in Illustration 1.1 the uncertainty in the prior knowledge τ 2 is very large, that is, the prior knowledge is rather vague, the prior will be close to p(θ)∝1 like the first prior choice in Illustration 1.2. Such a prior is called non-informative because it assigns equal likelihood to each possible parameter value. One however has to be careful if the parameter space Θ is unbounded. In that case we have ∫Θ p(θ)d θ=∞, and p(θ) is an improper prior.

Hence, such non-informative priors has to be dealt with care to ensure that the resulting posterior is proper. In Illustration 1.1, as τ 2→∞ corresponding to a non-informative prior, the posterior density is a normal density with mean \(\overline {x}\) and variance \(\frac{\sigma^{2}}{n}\), which is a proper distribution.

Another issue of non-informative priors is that they are not invariant under reparametrization of the model. For example a uniform prior on the success probability θ∈(0,1) (see Illustration 1.2) does not result in a uniform prior on the so-called odds parameter given by θ/(1−θ). An alternative approach for defining non-informative priors which has this invariance property was developed by Jeffreys [21]. Jeffreys prior is given as

where

is the expected Fisher information matrix about θ, which is a measure for the information about the parameter contained in the sample. In general, Jeffrey’s approach leads to prior densities in the form of p(θ)∝1 for location parameters θ and p(σ)∝σ −1 for scale parameters σ. For example the mean μ of a normal distribution is a location parameter and the standard error σ is a scale parameter.

On the other hand, the choice of an informative prior is always preferable if there is some kind of a priori knowledge about the parameter of interest. However, it will not be possible to get an analytically closed form expression of the posterior in complex situations, since the normalizing constant f(x) defined in (1.4) of the posterior distribution requires a possibly high-dimensional integration. Posterior calculations are however simple if one considers conjugate prior distributions. A class of prior distributions \(\mathcal{P}\) is conjugate to a class of observational models \(\mathcal{F}\) if for every prior p out of \(\mathcal{P}\) and for any observational distribution f from \(\mathcal {F}\), the posterior distribution p(⋅|x) remains in the class of the prior distribution \(\mathcal{P}\).

Example 1.4

(Conjugate Prior Distributions)

The class of normal priors for the mean (Illustration 1.1) is conjugate for the observational model of normal distributions with known variance, while the class of Beta priors (Illustration 1.2) is conjugate for the observational model of Bernoulli distributions. Finally Illustration 1.3 also shows that the class of Gamma priors is conjugate for Poisson distributions.

1.2 Bayesian Inference

In Bayesian statistics all information about the parameter θ is contained in the posterior distribution, while in classical statistics the information about θ is captured by point and interval estimates. However, for the Bayesian, these quantities can be straightforwardly derived as well.

The main location measures are the posterior mean, as discussed in Illustrations 1.1–1.3, the posterior median and the posterior mode, where the last quantity is closest to the maximum likelihood principle from frequentist statistics, that is, the parameter θ is most likely to be observed as judging from the available information contained in the observations. In maximum likelihood (ML) estimation we choose \(\hat{\boldsymbol{\theta }}_{ML}=\operatorname{argmax}_{\boldsymbol{\theta}\in\Theta} \ell(\boldsymbol {x}|\boldsymbol{\theta})\), while the posterior mode (PM) is augmented by the prior and given by \(\hat{\boldsymbol{\theta}}_{PM}=\operatorname{argmax}_{\boldsymbol{\theta}\in\Theta} \ell(\boldsymbol{x}|\boldsymbol {\theta})p(\boldsymbol{\theta})\). Note that, for example, for normal distributions the mean, mode and median coincide, while this is in general not the case, such as for the Gamma distribution.

The main dispersion measures are the variance, standard deviation (square root of the variance), precision (inverse of the variance) and interquartile range (difference between 75 %- and 25 %-quantiles) of the posterior distribution. Corresponding to the Fisher information defined in (1.12), one also often considers the posterior curvature at the mode which is the matrix of second derivatives of the posterior density in log form at the mode. If θ is a vector, marginal densities can also be assessed.

In addition to these Bayesian point estimates 100(1−α) % credible intervals provide interval estimates for θ and are given for a scalar parameter θ by an interval I(x), depending on the observations x, such that

In contrast to the confidence interval in classical statistics, the credible interval allows the interpretation that the parameter θ is contained with probability 1−α in I(x), since θ is now modeled as a random quantity.

Example 1.5

(Inference of the Normal Distribution)

In Illustration 1.1 we have seen that the posterior distribution is given by the normal distribution with mean μ 1 and variance \(\tau_{1}^{2}\). Therefore the posterior mean, mode and median are μ 1, while the posterior variance is \(\tau_{1}^{2}\) and the posterior precision is \(\tau_{1}^{-2}\), which is also the posterior curvature at the mode.

A 100(1−α) % credible interval [θ l (x),θ u (x)] for θ is given by appropriate quantiles of the posterior distribution: \(\theta_{l}(\boldsymbol{x}) = \mu_{1}-\tau_{1} \Phi^{-1} (1-\frac{\alpha}{2} )\) and \(\theta_{u}(\boldsymbol{x}) = \mu_{1}+\tau_{1} \Phi^{-1} (1-\frac{\alpha}{2} )\), where Φ−1 is the inverse of the standard normal distribution function. This is also the shortest possible credible interval. Note that the corresponding classical 100(1−α) % and confidence interval for θ is given by \(\bar{x}\pm\frac{s}{\sqrt{n}} \Phi^{-1} (1-\frac{\alpha}{2} )\) where \(s^{2}:=\frac{1}{n-1}\sum_{i=1}^{n} (x_{i}-\bar{x})^{2}\) is the sample variance.

Returning to the specific example of Illustration 1.1, a corresponding 95 % credible interval for the mean yield is [10.303,13.697], while a 95 % confidence interval is [9.040,12.960] when assuming a sample variance of s 2=5. From the Bayesian theory the agronomist can say that the mean yield is between 10.303 and 13.697 with 95 % probability. The frequentist approach gives that the random interval \(\bar{x}\pm \frac{s}{\sqrt{n}} \Phi^{-1} (1-\frac{\alpha}{2} )\) covers the mean in 95 % of times. For the specific observations this interval is given by [9.040,12.960].

1.3 Conjugacy and Regression Models

Before closing this section we consider the problem of modeling the influence of potential explanatory variables on a risk quantity called response. The simplest such model is the linear regression model for the response vector Y=(Y 1,…,Y n )′:

where x i1,…,x id are known values of d explanatory variables for the ith observation and β 1,…,β d are unknown regression coefficients. We can rewrite this model in matrix form as follows:

where N n (μ,Σ) denotes the n-dimensional normal distribution with mean vector μ and covariance matrix Σ. Further we define

The matrix X is called the design matrix and we assume that its columns are not linearly dependent.

Applications of such models can be found in virtually all areas of scientific research. For example, in Illustration 1.1 the agronomist may also try to model the crop yields with respect to a set of explanatory variables such as rainfall or sunshine duration. An experienced agronomist may have some prior expert knowledge about the effect of these variables and therefore can choose appropriate prior distributions for the regression coefficients. Similarly, based on her experience she may also be able to specify a prior for the variance parameter of the model parameters.

In model (1.14) it is more convenient to formulate priors in terms of β and the precision ϕ:=σ −2. A typical choice is the Normal-Gamma, NG(b 0,B 0,n 0,S 0), prior, which is, for known constants n 0 and S 0, known vector b 0 and known matrix B 0, defined in a hierarchical way as

Equivalently we can assume β|σ 2∼N d (b 0,σ 2 B 0) and \(\sigma^{2} \sim \text{Inverse Gamma} (\frac{n_{0}}{2}, \frac{n_{0}S_{0}}{2} )\). Here the Inverse Gamma distribution is derived as follows: if X∼Gamma(α,β) then 1/X∼Inverse Gamma(α,β). Under this setup the following theorem holds:

Theorem 1.6

(Conjugacy in Regression)

For the linear model given in (1.14) with observed response y and prior distribution given by (1.15) the posterior distribution of (β,ϕ) is given by an NG(b 1,B 1,n 1,S 1) distribution with

See Gamerman and Lopes [4, Sect. 2.3.2] for a proof.

2 MCMC—Markov Chain Monte Carlo

In Sect. 1.1 we studied the choice of prior distributions. In particular, we discussed non-informative priors and conjugate families which allow for an easy derivation of the posterior distribution (1.3). This is however not the case in general. Markov Chain Monte Carlo (MCMC) methods are used to approximate the posterior in more complex situations. Although being very computer intensive, the increasing availability of computing power nowadays makes the use of MCMC methods increasingly attractive. In particular, MCMC methods may be used to solve complex problems which cannot be treated using classical statistics. Examples of such problems are spam e-mail filtering and the analysis of gene expression data, just to name a few.

MCMC methods are based on the two well-known concepts of Markov Chains and Monte Carlo techniques. Both concepts will be explained first, before we then introduce the two most commonly used algorithms, namely the Gibbs sampler and Metropolis Hastings algorithms. Recent comprehensive references on MCMC methods include Gamerman and Lopes [4] and Marin and Robert [22].

2.1 ∗∗MC—Monte Carlo

To understand MCMC methods, we begin with the second “MC” which refers to “Monte Carlo” and which is due to the often used Monte Carlo integration techniques. In general Monte Carlo methods repeatedly sample from a probability distribution to determine analytically difficult quantities. For example, let us assume that t(⋅) is a function and we are interested in computing the integral

of which no closed form solution is known. This is, for example, often the case for the marginal density function f defined in (1.4) which is part of the posterior distribution defined in (1.3). For such problems we use the following numerical approximation. First let θ∈(0,1) be a random variable with density p. Then the expectation of the random variable t(θ) is \(E(t(\theta)) = \int_{0}^{1} t(\theta) p(\theta) d\theta\). If we can sample from p, an estimate of E(t(θ)) is the sample mean. In particular, let θ be uniform on (0,1) and θ 1,…,θ n a corresponding independent and identically distributed (i.i.d.) random sample. Then (2.1) can be estimated by

By the strong law of large numbers (see Durrett [12]) \(\hat{I}\) converges to I=E(t(θ)) with probability 1, since p(θ)=1 for all θ∈(0,1).

In Bayesian statistics the posterior expectation E(t(θ)|x) can be estimated by the sample mean (2.2) when θ 1,…,θ n is a sample from the posterior distribution p(⋅|x). As long as the posterior distribution and sampling algorithms are available, there are no problems and the first “MC” referring to “Markov chain” is not needed.

As mentioned above, it is unfortunately not the case that an analytical form of the posterior density p(⋅|x) is always available. The idea of MCMC methods therefore is to construct a Markov chain with limiting distribution p(⋅|x). If the Markov chain is run for a sufficiently long time, it can be assumed that the stationary state is reached and therefore the realizations of the chain represent a sample from p(⋅|x). In the following section we therefore give a brief overview of Markov chain theory. Readers familiar with it can skip Sect. 2.2 and continue reading with Sects. 2.3 and 2.4 which discuss the two most common MCMC methods.

2.2 MC∗∗—Markov Chains

We give a short introduction to Markov chains and state major results. A more detailed treatment can be found in Meyn and Tweedie [24], Nummelin [25], Resnick [26] and Guttorp [18]. The set of random variables {θ (t):t∈T} is said to be a stochastic process taking values in the state space S for time points t in the index set T. In our discussion we will only consider discrete time stochastic processes with T being the set of natural numbers \(\mathbb{N}=\{1,2,\ldots\}\). The state space S can generally be a subset of the d-dimensional set of real numbers, \(\mathbb{R}^{d}\), but in the following we will concentrate on a discrete state space S. Details on continuous state space Markov chains can be found in Meyn and Tweedie [24].

A Markov chain is a process, such that given the present state, past and future states are independent:

for all x 0,…,x n+1∈S. If the probabilities in (2.3) do not depend on n, we say that the Markov chain is homogenous. In this case we define the transition probability P(x,y) of moving from state x to state y as:

In general, for A⊂S, P(x,A):=∑ y∈A P(x,y) is called the transition kernel.

Illustration 2.1

(Molecule Movement)

Consider a molecule traveling in a liquid or a gas which moves independently left and right with successive displacements from its current position governed by a probability function f over the integers, that is \(S=\mathbb{Z}\). Such a process is called a random walk. Let θ (n) represent the position of the molecule at time n. Therefore we have

where w i ∼f independently and for all i≥1. For the initial position θ (0) we assume an initial distribution π (0).

The case where the probabilities of right, left or stay move are given by p, q and 1−p−q, respectively, is represented by assuming f(1)=p, f(−1)=q and f(0)=1−p−q. This implies that

which is illustrated in Fig. 3.

If the state space \(S\subset\mathbb{R}^{d}\) is not only discrete but also finite, that is S={x 1,x 2,…,x r }, we can consider the transition matrix P defined by

Higher order transition probabilities P m for m≥2 can be obtained as follows

where the second equality is due to the Markov property (2.3). In matrix notation we have P m=P⋯P meaning matrix multiplication m times of the matrix P.

Further, let π (0) be the initial distribution of the chain, π (0)(x):=P(θ (0)=x). The marginal distribution after n time steps is given by

which can also be written as π (n)=π (0) P n=π (0) P n−1 P=π (n−1) P.

Before we move on to discuss some major results which are the basis of MCMC methods, we consider an illustrative example.

Illustration 2.2

(Daily Allowance in Health Insurance)

A health insurance company sells policies which pay a daily allowance to sick policy holders. In order to price the policies, the company sets up the following simplifying model. The health state of a person is modeled as a Markov chain {θ (n):n≥0} with states S={healthy,sick}, denoted as S={0,1}, respectively. The initial distribution (the proportions of healthy and sick policy holders when the policy is sold) is denoted by π (0)=(π (0)(0),π (0)(1))′ and the transition matrix P by

That is, a healthy policy holder today is assumed to fall ill tomorrow with a probability of p versus staying healthy with a probability of 1−p. Similarly, a sick policy holder becomes healthy with a probability of q and stays sick with a probability of 1−q. An exemplary realization of this Markov chain is shown in Fig. 4

The probability that a person is healthy after n days (independent of whether or not he or she was sick in the meantime) is given by

If p=q=0, that is, healthy (sick) persons always stay healthy (sick), then P(θ (n)=0)=π (0)(0) and P(θ (n)=1)=π (0)(1). If p+q>0, using results for the finite geometric series gives

If the initial distribution is given by \(\pi^{(0)}=(\frac{q}{p+q},\frac {p}{p+q})^{\prime}\), then the marginal probability \(P(\theta^{(n)}=0) = \frac{q}{p+q}\) is the same for all time points n.

If p+q<2, then (1−p−q)n converges to zero as n goes to infinity and therefore

which shows that the initial distribution is obtained as the limiting distribution of the Markov chain. For the realizations of the Markov chain shown in Fig. 4 the convergence is illustrated in Table 1.

To obtain the probability that an initially healthy policy holder is also healthy after n days, denoted by P n(0,0), we assume that we always start in the healthy state, that is π (0)(0)=1. Using (2.4) with π (0)(0)=1 this gives

Similarly, we compute P n(1,0), P n(0,1) and P n(1,1) to determine the nth order transition matrix P n as

Finally, we denote by T 0 the first time that a person becomes healthy again. Given that he or she was healthy when taking out the policy, we have

Similarly let T 1 be the first time that a person falls ill. Then it holds that P(T 1=n|θ (0)=0)=P(0,0)n−1 P(0,1)=p(1−p)n−1.

A fundamental problem for Markov chains in the context of simulation is the study of the asymptotic behavior of the chain as the number of steps or iterations n goes to infinity. A key concept for this is the stationary distribution π, which satisfies

and can be written in matrix notation as π=πP. The reason for the name is clear from the above equation. If the marginal distribution at any step n is π, then the distribution of the next step is πP. Once the chain reaches a stage where π is the distribution of the chain, the chain retains this distribution for all subsequent stages.

Illustration 2.3

(Illustration 2.2 Continued)

Since the policies sold by the health insurance company are valid for the full lifetime of a policy holder, the company would like to investigate the long term expected proportions of healthy and sick persons. Since S={0,1}, in this case condition (2.7) is equivalent to

The solution is \(\pi=(\frac{q}{p+q},\frac{p}{p+q})\). Also for p+q<2 it follows from (2.6) that

and the distribution of θ (n) converges to π at an exponential rate. This shows that for p+q<2 the proportion of healthy and sick policy holders is asymptotically given by the stationary distribution π.

The case p+q=2 still produces a stationary distribution π but this does not provide a unique limiting distribution since from (2.5) it follows that

This case is somewhat different, since the states are always alternating over time corresponding to the case that persons are healthy one day and always fall ill the next day which is evidently rather unrealistic. The chain has a periodic nature that will be addressed below.

Having established some basic properties of Markov chains, we are interested in characterizing the limiting behavior. For this a classification of the states of the Markov chain is necessary. For a more complete treatment see for example Chap. 2 of Resnick [26]. We define the first visit time to y as T y =inf{n≥1:θ (n)=y} and the probability of visiting y after starting in x in finite time by ρ xy :=P(T y <∞|θ (0)=x). Then a state y∈S is recurrent if and only if ρ yy =1, and—more strongly—positive recurrent if and only if y is recurrent and E(T y |θ (0)=y)<∞. Further, the state x is said to hit y or y is accessible from x, denoted by x→y if and only if ρ xy >0. One can show that x→y if and only if there exist an n≥0 such that P n(x,y)>0 (see Resnick [26, p. 78]). Let x↔y if and only if x→y and y→x. This is an equivalence relationship. The Markov chain is called irreducible if x→y for every pair x,y∈S.

Finally, to establish limit distributions one also needs to introduce the notation of periodicity. The period of state x is given by

It follows that the condition P(x,x)>0 implies d x =1. Such a state is called aperiodic. Thus the states 0 and 1 in Illustration 2.3 are aperiodic if p+q<2. On the other hand, if p+q=2, it holds that d 0=d 1=2, in other words, the states 0 and 1 are periodic with period 2.

A state x is called ergodic if it is aperiodic and positive recurrent. Similarly, a Markov chain is called ergodic if all states are aperiodic and positive recurrent. These concepts are sufficient to characterize the limiting distribution.

Theorem 2.4

(Limiting Distribution)

Let {θ (n),n≥0} be an irreducible and ergodic Markov chain with stationary distribution π, then

A proof can be found in Guttorp [18, Theorem 2.9]. This shows that the stationary distribution is also the limiting distribution under the assumptions of Theorem 2.4.

While the empirical mean converges to the population mean as the sample size increases for i.i.d. samples by the strong law of large numbers, a Markov chain equivalent will now be given.

Theorem 2.5

(Ergodic Theorem)

If the chain is ergodic and E π (t(θ))<∞ for the unique limiting distribution π then

A proof can be found on page 49 of Guttorp [18]. This theorem can be used as justification for using \(\overline{t}_{n}\) as an estimate for E π (t(θ)), see also the discussion in Sect. 2.1. A central limit theorem for Markov chains can also be formulated and is found for example in Gilks, Richardson, and Spiegelhalter [17]. It can be used for constructing asymptotic confidence intervals.

Having established the asymptotic theory of Markov chains, the final, and crucial, step is simulation. For this, consider an ergodic Markov chain {θ (n),n≥0} with state space \(S\subset\mathbb{R}^{d}\), transition probabilities P(x,y) and initial distribution π (0). To generate values from this Markov chain the following algorithm can be used.

-

Sample a starting value θ (0) from the initial distribution π (0).

-

For i=1,…,n, sample value θ (i) from the probability mass function f(⋅):=P(θ (i−1),⋅).

As n gets large the sampled values will have a distribution close to the limiting distribution π and can therefore be considered as an approximate sample from π. Note that all samples drawn after convergence are also samples from π since it is the stationary distribution. Here, convergence of a Markov chain means that the stationary distribution is approximated sufficiently accurately, which is difficult to assess. Relevant references will be given below. The values before convergence are called the burn-in period and will be deleted when considering the ergodic averages such as \(\bar {t}_{n}\). The sampled values are dependent, since they arise from a Markov chain, however so-called thinning and batching methods can be applied to achieve an approximately i.i.d. sample. This general method of approximate sampling from the stationary distribution is called the Markov Chain Monte Carlo (MCMC) approach.

We can now use this approach to draw approximate samples from a complex posterior distribution p(⋅|x), which is analytically not tractable, by assuming that p(⋅|x) is the stationary distribution π of a Markov chain. The next two sections will study two famous MCMC algorithms in detail.

2.3 Gibbs Sampler

This chapter introduces and discusses the first widely used sampling scheme for constructing a Markov chain with prespecified limiting distribution π. It was first developed for approximately sampling from the Gibbs distribution used in image analysis. Geman and Geman [16] discussed this problem for several sampling schemes. Gelfand and Smith [14] were the first to point out to the statistical community at large that this sampling scheme could be used for other distributions than the Gibbs distribution. Before stating the sampling algorithm, we consider a small illustrative example.

Illustration 2.6

(Health States of a Couple)

(Casella and George [2]) Let S={(0,0)′,(1,0)′,(0,1)′,(1,1)′} be a two-dimensional state space with probability distribution π for the random vector θ=(θ 1,θ 2)′ given by

In view of Illustration 2.2 this can be interpreted as the healthy and sick states of a married couple. For example, if the first component corresponds to the health state of the husband and the second to that of his wife, then θ 1=1 and θ 2=0 indicates that the husband is sick, while his wife is healthy.

The Markov chain now consists of a bivariate vector \(\boldsymbol{\theta }^{(n)}=(\theta^{(n)}_{1},\theta^{(n)}_{2})^{\prime}\) and the following transition probabilities are assumed.

-

For \(\theta^{(n)}_{1}\) the probability of moving from \(\theta _{2}^{(n-1)}=j\) to \(\theta^{(n)}_{1}=0\) and \(\theta^{(n)}_{1}=1\), respectively, is given by

$$ \pi_1(0|j)= \frac{\pi_{0j}}{\pi_{0j}+\pi_{1j}} \quad \text{and}\quad \pi_1(1|j)= \frac{\pi_{1j}}{\pi_{0j}+\pi_{1j}}. $$(2.9)Note that π 1(⋅|j) is the conditional probability function of θ 1 given θ 2=j, j=0,1.

-

For \(\theta^{(n)}_{2}\) the probability of moving from \(\theta _{1}^{(n)}=i\) to \(\theta^{(n)}_{2}=0\) and \(\theta^{(n)}_{2}=1\), respectively, is given by

$$ \pi_2(0|i)= \frac{\pi_{i0}}{\pi_{i0}+\pi_{i1}} \quad \text{and}\quad \pi_2(1|i)= \frac{\pi_{i1}}{\pi_{i0}+\pi_{i1}}. $$(2.10)Note that π 2(⋅|i) is the conditional probability function of θ 2 given θ 1=i, i=0,1.

This means that the husband’s health state depends on his wife’s yesterday’s state and today’s health state of the wife depends on today’s health state of the husband. For a transition from state (i,j) yesterday to state (k,l) today we have

Therefore the overall transition probability is given by

for (i,j),(k,l)∈S. Thus a 4×4 transition matrix P can be formed.

One can further show that \(\{\boldsymbol{\theta}^{(n)}=(\theta ^{(n)}_{1},\theta^{(n)}_{2})^{\prime}, n\geq0 \}\) forms a Markov chain and that π defined in (2.8) is the stationary distribution of the chain. If all elements of π are positive, it is also a limiting distribution. In particular, chains formed by the superposition of the conditional distributions have a stationary distribution given by the joint distribution.

Illustration 2.6 can easily be extended to the case where θ consists of d components with m 1,…,m d values.

In general, Gibbs sampling is an MCMC scheme where the transition probabilities are formed by the full conditional distributions. Assume as before that the distribution of interest is π(θ), where θ=(θ 1,…,θ d )′. Each of the d components can be a scalar, vector or matrix. Further assume that for each i∈{1,…,n} the full conditional distribution for θ i

is known and can be sampled, for example, using Eqs. (2.9) and (2.10) in the above example. The Gibbs sampling algorithm can now be described as follows.

-

1.

Set the iteration counter to j=1 and set initial values \(\boldsymbol{\theta}^{(0)}=(\boldsymbol{\theta}_{1}^{(0)},\ldots ,\boldsymbol{\theta}_{d}^{(0)})^{\prime}\).

-

2.

Obtain a new value \(\boldsymbol{\theta}^{(j)}=(\boldsymbol {\theta}_{1}^{(j)},\ldots,\boldsymbol{\theta}_{d}^{(j)})^{\prime}\) through successive generation of values

$$\begin{aligned} \boldsymbol{\theta}_1^{(j)} \sim&\pi\bigl(\boldsymbol{ \theta}_1\big|\boldsymbol {\theta}_2^{(j-1)},\ldots, \boldsymbol{\theta}_d^{(j-1)}\bigr), \\ \boldsymbol{\theta}_2^{(j)} \sim&\pi\bigl(\boldsymbol{ \theta}_2\big|\boldsymbol {\theta}_1^{(j)}, \boldsymbol{\theta}_3^{(j-1)}, \ldots,\boldsymbol{ \theta}_d^{(j-1)}\bigr), \\ \vdots& \\ \boldsymbol{\theta}_d^{(j)} \sim&\pi\bigl(\boldsymbol{ \theta}_d\big|\boldsymbol {\theta}_1^{(j)},\ldots, \boldsymbol{\theta}_{d-1}^{(j)}\bigr). \end{aligned}$$ -

3.

Change counter j to j+1 and return to step 2 until convergence is reached.

When convergence is reached the resulting value θ (j) is a draw from π. Often convergence is assessed by choosing an error bound ε>0 and assuming convergence when the distance between θ (n+1) and θ (n) is less than ε. A further example is given in the following.

Illustration 2.7

(Coal Mining Disasters)

Carlin, Gelfand, and Smith [10] discuss the following problem: yearly numbers Y 1,…,Y M of British coal-mining disasters as measured over more than a century are unlikely to have stayed at a similar level due to better technology and increased safety requirements. It is therefore reasonable to assume the presence of a change point m∈{1,…,M} at which the general level of disasters significantly changed. Therefore Carlin et al. [10] assume the number of coal-mining disasters before that (unknown) change point to be Poisson distributed with another intensity parameter than after. They consider the following hierarchical model:

where α,β,γ and δ are known constants and the model is termed “hierarchical”, since the parameters of the Poisson distributions are modeled as random themselves. That is, m is the year where there is a significant change in the number of disasters as modeled by Y 1,…,Y M with different (random) intensities λ and ϕ depending on whether Y i is measured before or after the change point m, respectively. Due to missing prior knowledge about the change point m its distribution is modeled as uniform.

The joint posterior density of λ,ϕ and m given data y=(y 1,…,y M )′ satisfies

where 1 A is the indicator function satisfying 1 A (m)=1 if m∈A and 1 A (m)=0 otherwise. Further f P and f G denote the Poisson and Gamma density functions, respectively (see Glossary A.2).

Therefore the full conditionals can be calculated as

and similarly, \(\pi_{\phi}^{FC}(\phi) \propto\text{Gamma}(\gamma+\sum_{i=m+1}^{M} y_{i},\delta+M-m)\), and for the discrete random parameter m for m=1,…,M as

Therefore the Gibbs sampler for (λ,ϕ,m) draws λ (n+1) from Gamma\((\alpha+ \sum_{i=1}^{m^{(n)}} y_{i},\beta+m^{(n)})\), ϕ (n+1) from Gamma\((\gamma+\sum_{i=m^{(n)}+1}^{n} y_{i},\delta +M-m^{(n)})\) and chooses m (n+1)=m with probability \(\pi_{m}^{FC}(m)\). Here \(\pi_{m}^{FC}(m)\) depends on λ (n+1) and ϕ (n+1).

To get a first impression on the behavior of this Gibbs sampler, we simulated data from the model (2.11) with M=50,α=5,β=1,γ=1 and δ=1 (left panel of Fig. 5) and implemented the Gibbs sampler for 100 iterations. Note that the Gamma priors for λ and ϕ are quite informative, since the signal-to-noise ratio (mean divided by standard deviation) is 1. For illustration we used the true values as starting values. In the left panel of Fig. 5 the data is presented and the time plots of the MCMC iterations and posterior density estimates for each parameter are shown in the right panel of the same figure. The true values are indicated by a vertical dotted line.

Left panel: simulated data from model (2.11) with M=50, α=5, β=1, γ=1 and δ=1. Right panel: time plot of MCMC iterations and posterior density estimates based on 100 iterations from the Gibbs sampler

The time plots (first column of right panel) indicate that the sampler is converged, which we expect since we used the true values as starting values. The true values of λ and ϕ are reasonably in the center of the sampled posterior distribution. The sampler has no difficulty finding the true break point. In general, the assessment of convergence is difficult especially for higher dimensions and convergence diagnostics have to be considered.

We now establish a few basic facts for the Gibbs sampler. First of all the Gibbs sampler defines a Markov chain, since the update step at iteration j involves only values of the chain at j−1. Also the chain is homogeneous, since transitions are only affected by the iteration through the chain values. The transition kernel from ϕ=(ϕ 1,…,ϕ d )′ to θ=(θ 1,…,θ d )′ is given by

The limiting distribution of a Markov chain with transition kernel (2.12) is π, which we established for d=2 and the discrete case in Illustration 2.6. For the continuous case the exact conditions under which the Markov chain resulting from the Gibbs sampler has limiting distribution π are given in Roberts and Smith [27]. For the continuous case π-irreducibility and aperiodicity are sufficient conditions (see Nummelin [25]). However, there are Markov chains derived from the Gibbs sampler which are not irreducible, see, for example, Gilks et al. [17]. Finally it can also be shown that π is stationary.

Even though theoretical results assure the convergence of the Gibbs sampler, they are difficult to validate theoretically for many complex statistical problems. In these cases a more practical approach is to assess the convergence by plotting n versus θ (n). If the variability of θ (n) for n≥n 0 is approximately constant, then a burn-in of n 0 iterations is sufficient. Further MCMC sample based convergence assessments and comparison of several samplers with regard to burn-in iterations and required arithmetic operations are considered in Gilks et al. [17] and Marin and Robert [22] and the references therein.

Next, we draw attention to the use of the sample. For this, assume that we have a sample θ (1),…,θ (n) from the posterior distribution π now available as generated by the Gibbs sampler, after some burn-in period and possibly thinning or batching to reduce autocorrelation of the sampled MCMC iterates. Suppose we are interested in the posterior distribution of the statistics ψ=t(θ). The standard estimator

estimates the posterior mean E π(θ|x)(ψ) of ψ, while the posterior variance \(\sigma_{\boldsymbol{\psi}}^{2}:= \operatorname{Var}_{\pi (\boldsymbol{\theta}|\boldsymbol{x})}(\boldsymbol{\psi})=E_{\pi (\boldsymbol{\theta}|\boldsymbol{x})}(\boldsymbol{\psi}^{2})-[E_{\pi (\boldsymbol{\theta}|\boldsymbol{x})}(\boldsymbol{\psi})^{2}]\) is estimated by

Moreover, posterior credibility intervals for ψ can be estimated by using sample quantiles as the estimates of the interval limits. For example if one is interested in estimating a 95 % credible interval for ψ and n=1000, then the estimated credible interval is given as the interval between the 25th and 975th largest sampled value for ψ. This section concludes with a continuation of the example on linear regression models.

Illustration 2.8

(Linear Regression with Ar(1) Disturbances)

Sometimes the observed risk quantities are not independent, but might depend on previous observations. For example if we consider monthly plant growth rates, then the growth rate might depend on the variety but also on the previous month growth rate. Therefore we extend the linear regression model of Sect. 1.3 to include autoregressive lag 1 (AR(1)) disturbances, that is, the response variables are no longer assumed independent but dependent upon the previous response. We change indices from i to t to acknowledge the time dependencies. Similar to (1.13) the model is then given by

for a time series of responses Y t with possibly time dependent covariates \(\boldsymbol{x}_{t}=(x_{t1},\ldots,x_{td})^{\prime}\in\mathbb {R}^{d}\) for t=1,…,T. Further we assume |ρ|<1 and ϵ t ∼N(0,σ 2) are i.i.d. As an initial condition we use \(u_{0} \sim N(0, \frac{\sigma^{2}}{1-\rho^{2}})\). The following informative priors can be used:

-

\(\boldsymbol{\beta} |\sigma^{2} \sim N_{d}(\boldsymbol{\beta}_{0}, \sigma^{2} A_{0}^{-1})\)

-

\(\sigma^{2} \sim \text{Inverse Gamma} (\frac{n_{0}}{2},\frac {\delta_{0}}{2} )\)

-

\(\rho\sim N(\rho_{0}, R_{0}^{-1})\) truncated to (−1,1), where a truncated normal distribution is a normal distribution whose values are bounded below, above or both. Thus the usual normal density is multiplied with an indicator function 1(a,b) for an interval with endpoints a<b and rescaled appropriately to ensure that it integrates to 1.

In the following we determine the full conditional distributions of the parameters, which can be used in a corresponding Gibbs sampling scheme.

-

1.

Regression parameter: To update the vector of regression parameters β consider the following transformations

$$ \boldsymbol{Y}^*:= \left ( \begin{array}{c} \sqrt{1-\rho^2} Y_1 \\ Y_2 - \rho Y_1 \\ Y_3 - \rho Y_2 \\ \vdots \\ Y_T-\rho Y_{T-1} \end{array} \right ) \quad \text{and}\quad X^*:= \left ( \begin{array}{c} \sqrt{1-\rho^2} \boldsymbol{x}_1^\prime \\ \boldsymbol{x}_2^\prime - \rho\boldsymbol{x}_1^\prime \\ \boldsymbol{x}_3^\prime- \rho\boldsymbol{x}_2^\prime \\ \vdots \\ \boldsymbol{x}_T^\prime-\rho\boldsymbol{x}_{T-1}^\prime \end{array} \right ). $$Therefore Y ∗ follows a standard linear model with

$$ \boldsymbol{Y}^*= X^*\boldsymbol{\beta} +\boldsymbol{\varepsilon} \quad \text{where } \boldsymbol{\varepsilon} \sim N_T\bigl(0, \sigma^2 I_T\bigr). $$Since the full conditional for β given Y,X,ρ and σ 2 is the same as the full conditional for β given Y ∗,X ∗,ρ and σ 2, we can use Theorem 1.6 to show that

$$ \boldsymbol{\beta}|\boldsymbol{Y}, X, \rho,\sigma^2 \sim N_p\bigl(\boldsymbol {\beta}_1,\sigma^2 B_1^{-1}\bigr), $$with B 1=(A 0+X ∗′ X ∗)−1 and β 1=B 1(A 0 β 0+X ∗′ Y ∗).

-

2.

AR(1) error variance: By again considering the precision \(\phi:=\frac{1}{\sigma^{2}}\) and using the equality of the following conditional distributions ϕ|Y,X,β,ρ=ϕ|Y ∗,X ∗,β,ρ, it can be shown that

$$ \sigma^2|\boldsymbol{Y},X,\boldsymbol{\beta},\rho\sim \text{Inverse Gamma} \biggl(\frac{n_1}{2},\frac{\delta_1}{2} \biggr), $$with n 1=T+n 0+d and \(\delta_{1}=\delta_{0}+(\boldsymbol{\beta}-\hat {\boldsymbol{\beta}})^{\prime}X^{*\prime} X^{*} (\boldsymbol{\beta}-\hat {\boldsymbol{\beta}})+(\boldsymbol{Y}^{*}-X^{*}\hat{\boldsymbol{\beta }})^{\prime}(\boldsymbol{Y}^{*}-X^{*}\hat{\boldsymbol{\beta}})+(\boldsymbol {\beta}-\boldsymbol{\beta}_{0})^{\prime}A_{0} (\boldsymbol{\beta}-\boldsymbol {\beta}_{0})\), where \(\hat{\boldsymbol{\beta}} = (X^{*\prime} X^{*})^{-1} X^{*\prime} \boldsymbol{Y}^{*}\).

-

3.

Correlation parameter: Finally for updating the parameter ρ we can use Bayes’ theorem to show that

$$ \rho|\boldsymbol{Y},X,\boldsymbol{\beta},\sigma^2 \sim N(\tilde{\rho },\tilde{R}) \mbox{ truncated to } (-1,1), $$where \(\tilde{R}:= \sigma^{-2}(\sum_{t=1}^{T} u_{t-1}^{2} +R_{0})\) and \(\tilde {\rho}:= \tilde{R}^{-1} (\sigma^{-2}\sum_{t=1}^{T} u_{t} u_{t-1}+R_{0} \rho_{0} )\).

2.4 Metropolis Hastings Algorithms

The final MCMC algorithms presented here are the Metropolis Hastings algorithms (Metropolis et al. [23]; Hastings [19]). A nice introduction to the Metropolis Hastings algorithms is given in Chib and Greenberg [3]. As before, we are interested in constructing a Markov Chain with given stationary distribution π. First we consider a small example to motivate the discussion below.

Illustration 2.9

(Metropolis Hastings Algorithms)

Consider a distribution π for x∈S, where \(S \subset\mathbb{R}^{d}, d \geq1\). For a possible application recall Illustration 2.6, where we investigated the health states of a couple as modeled by the two-dimensional state space S={0,1}2 and the probability distribution π.

Our aim is to construct a Markov chain with stationary and limiting distribution π. For this, let Q be any four-dimensional irreducible transition matrix on S satisfying the symmetry condition Q(x,y)=Q(y,x) ∀x,y∈S and define a Markov chain {θ (n),n≥0} as having transitions from x to y proposed according to the probabilities Q(x,y). This proposed value for θ (n+1) is accepted with probability \(\min\{ 1, \frac{\pi(\boldsymbol{y})}{\pi(\boldsymbol{x})}\}\) and rejected otherwise, leaving the chain in x. This implies that for x≠y

and for x=y

Further observe that if we assume that π(y)>π(x) for x≠y, then

and similarly if π(y)<π(x). This result is referred to as reversibility of a Markov chain and ensures that π constitutes the stationary distribution of the chain. If Q is aperiodic, so will be P and the stationary distribution is also the limiting distribution.

In general, Metropolis Hastings algorithms also exploit the concept of reversibility as in Illustration 2.9. That is, in order to construct a Markov chain with stationary distribution π we require the following reversibility condition for the transition kernel P(θ,ϕ):

Hastings [19] proposes to define the acceptance probability in such a way that when combined with an arbitrary transition probability, it defines a reversible chain. Such an acceptance probability is given by

Algorithms based on (2.13) are called Metropolis Hastings (MH) algorithms. MH algorithms define reversible chains with stationary distribution π if P(θ,ϕ) > 0. Roberts and Smith [27] show that if Q is irreducible and aperiodic and α(θ,ϕ)>0 for all (θ,ϕ), then the algorithm defines an irreducible and aperiodic Markov chain with limiting distribution π. The MH algorithm can now be described as follows:

-

1.

Set iteration counter j=1 and arbitrary initial value θ (0).

-

2.

Move the chain to a new value ϕ generated from the density Q(θ (j−1),⋅).

-

3.

Evaluate the acceptance probability of the move given by α(θ (j−1),ϕ) in (2.13). If the move is accepted, then θ (j)=ϕ. If the move is not accepted, then θ (j)=θ (j−1) and the chain does not move.

-

4.

Change the counter from j to j+1 and return to Step 2 until convergence is reached.

Step 3 can easily be performed by generating an independent uniform quantity u. If u≤α, then the move is accepted and else it is not.

Note that you do not need to know the often complicated normalizing constant of the stationary distribution π to perform the MH algorithm. Further, when using a symmetric proposal probability as in Illustration 2.9, (2.13) simplifies to \(\alpha (\boldsymbol{\theta},\boldsymbol{\phi})=\min\{ 1, \frac{\pi(\boldsymbol {\phi})}{\pi(\boldsymbol{\theta})} \}\) if π(θ)>0 and α(θ,ϕ)=1 otherwise. Other common choices for Q lead to a random walk (new value = old value + disturbance; Illustration 2.1), independence (new value chosen independently of old value) or hybrid chains (Metropolis within Gibbs algorithm).

We close our discussion of MCMC methods with an example resuming and extending the Poisson model for claim frequencies of Illustration 1.3.

Illustration 2.10

(Claim Frequencies)

Scollnik [29] considered the following model for modeling claim frequency data for group insurance policies: let X ij be the number of claims for the ith group of policy holders in the jth policy year and P ij the payroll count for the ith group of company employees in the jth policy year for i=1,…,I, j=1,…,J. The payroll counts give the number of employees which are at risk to incur a claim. The dependency among the claim counts over different years for the same policy i is modeled by introducing an unobserved random unit rate θ i which has a common distribution for all policies. In particular Scollnik [29] assumed that X ij given θ i are independent with

The prior specification for α and β are rather arbitrary, but they imply that each θ i has a prior mean and standard deviation approximately equal to 0.041 and 0.048, which might not be unreasonable in this context according to Scollnik [29]. Denote by X i =(X i1,…,X iJ )′ the number of claims vector of policy group i over all years and \(\boldsymbol {X}=(\boldsymbol{X}_{1}^{\prime},\ldots,\boldsymbol{X}_{I}^{\prime})^{\prime}\) the total number of claims vector. Further, let θ=(θ 1,…,θ I )′. Then the joint distribution of (X,θ,α,β) can be written as follows:

To update the unobserved latent rates θ i we have as full conditional

which is a Gamma distribution with parameters \(\alpha+\sum_{j=1}^{J} X_{ij}\) and \(\beta+\sum_{j=1}^{J} P_{ij}\) and where θ −i =(θ 1,…,θ i−1,θ i+1,…,θ I )′. We see that these conditionals are actually independent of θ −i . For updating α note that

This is not a standard distribution and an MH step is needed.

Finally, to update β, we obtain for β|X,θ,α again a Gamma distribution with parameters Iα+25 and \(\sum_{i=1}^{I} \theta_{i}+1\).

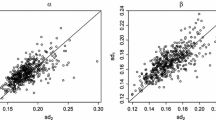

According to Scollnik [29] we implemented a hybrid chain for the small data set with I=3 and J=5 shown in Table 2 using WinBUGS (Bayesian inference Using Gibbs Sampling; http://www.mrc-bsu.cam.ac.uk/bugs/winbugs/contents.shtml), which can be called directly from the statistical computing environment R (see Ntzoufras [7] for more information). The estimated posterior densities of 1000 iterations are shown in Fig. 6.

3 Food for Thought

There is software for Bayesian inference based on MCMC methods available in specialized problems. To the interested reader we particularly recommend to have a look at the above mentioned software WinBUGS and the illustrative book by Ntzoufras [7]. The recent book by Lunn et al. [6] also covers software for Bayesian statistical methods.

Another important issue of MCMC methods which could not be treated here appropriately are burn-in diagnostics which were briefly mentioned in Sect. 2.2 and provide tools for determining when we consider the values of the sampler as realizations from the posterior distribution. Further information can be found for example in Cowles and Carlin [11] and in Brooks and Roberts [9]. Related to this is the theoretical study of convergence questions.

Other areas of interest are, on the one hand, so-called ABC (Approximate Bayesian computation) methods which were developed for computationally very complex problems such as large-scale applications. Roberts et al. [28] and Frühwirth-Schnatter and Sögner [13], on the other hand, use MCMC methods for estimating stochastic volatility models commonly used in financial applications.

4 Summary

In this chapter, we gave a brief introduction to the main concepts of Bayesian statistics. After discussing the fundamental Bayes’ theorem and three illustrating examples, we examined the problem of an appropriate prior choice in more detail and introduced Bayesian inference techniques. The first section closed with the commonly used linear regression model.

In the second section, we introduced the important class of MCMC methods, which are increasingly becoming popular for estimating parameters in complex statistical models. They are based on Monte Carlo techniques and properties of Markov chains, which were discussed before turning to the two most common MCMC algorithms, namely the Gibbs sampler and the Metropolis Hastings algorithms. There were discussed and illustrated using relevant examples involving risk quantities on different scales and with different contexts.

References

Selected Bibliography

W.M. Bolstad, Introduction to Bayesian Statistics (Wiley, Hoboken, 2004)

G. Casella, E.I. George, Explaining the Gibbs sampler. Am. Stat. 46, 167–174 (1992)

S. Chib, E. Greenberg, Understanding the Metropolis-Hastings algorithm. Am. Stat. 49, 327–335 (1995)

D. Gamerman, H.F. Lopes, Markov Chain Monte Carlo: Stochastic Simulation for Bayesian Inference (Taylor & Francis, Boca Raton, 2006)

P.M. Lee, Bayesian Statistics: An Introduction, 4th edn. (Wiley, Hoboken, 2012)

D. Lunn, C. Jackson, N. Best, A. Thomas, D. Spiegelhalter, The BUGS Book—A Practical Introduction to Bayesian Analysis (Chapman & Hall/CRC, London, 2012)

I. Ntzoufras, Bayesian Modeling Using WinBUGS (Wiley, Hoboken, 2009)

Additional Literature

J.O. Berger, Statistical Decision Theory and Bayesian Analysis, 2nd edn. (Springer, Berlin, 1985)

S.P. Brooks, G.O. Roberts, Assessing convergence of Markov chain Monte Carlo algorithms. Stat. Comput. 8, 319–335 (1998)

B.P. Carlin, A.E. Gelfand, A.F.M. Smith, Hierarchical Bayesian analysis of changepoint problems. Appl. Stat. 41, 389–405 (1992)

M.K. Cowles, B.P. Carlin, Markov chain Monte Carlo convergence diagnostics: a comparative review. J. Am. Stat. Assoc. 91, 883–904 (1996)

R. Durrett, Probability: Theory and Examples, 4th edn. (Cambridge University Press, Cambridge, 2010)

S. Frühwirth-Schnatter, L. Sögner, Bayesian estimation of stochastic volatility models based on OU processes with marginal Gamma law. Ann. Inst. Stat. Math. 61(1), 159–179 (2009)

A.E. Gelfand, A.F.M. Smith, Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc. 85, 398–409 (1990)

A. Gelman, J.B. Carlin, H.S. Stern, D.B.R. Rubin, Bayesian Data Analysis, 2nd edn. (Chapman & Hall, London, 2003)

S. Geman, D. Geman, Stochastic relaxation, Gibbs distributions and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741 (1984)

W.R. Gilks, S. Richardson, D.J. Spiegelhalter, Markov Chain Monte Carlo in Practice (Chapman & Hall, London, 1996)

P. Guttorp, Stochastic Modeling of Scientific Data (Chapman & Hall/CRC, London, 1995)

W.K. Hastings, Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 97–109 (1970)

P.D. Hoff, A First Course in Bayesian Statistical Methods. Springer Texts in Statistics (Springer, New York, 2009)

A. Jeffreys, The Theory of Probability (Cambridge University Press, Cambridge, 1961)

J.-M. Marin, C.P. Robert, Bayesian Core: A Practical Approach to Computational Bayesian Statistics (Springer, New York, 2007)

N. Metropolis, A.W. Rosenbluth, M.N. Rosenbluth, A.H. Teller, E. Teller, Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092 (1953)

S.P. Meyn, R.L. Tweedie, Markov Chains and Stochastic Stability, 2nd edn. (Cambridge University Press, Cambridge, 2009)

E. Nummelin, General Irreducible Markov Chains and Non-negative Operators (Cambridge University Press, Cambridge, 1984)

S.I. Resnick, Adventures in Stochastic Processes (Birkhäuser, Boston, 1992)

G.O. Roberts, A.F.M. Smith, Simple conditions for the convergence of the Gibbs sampler and Metropolis-Hastings algorithms. Stoch. Process. Appl. 49, 207–216 (1994)

G.O. Roberts, O. Papaspiliopoulos, P. Dellaportas, Bayesian inference for non-Gaussian Ornstein-Uhlenbeck stochastic volatility processes. J. R. Stat. Soc., Ser. B 66, 369–393 (2004)

D.P.M. Scollnik, Actuarial modeling with MCMC and BUGS. N. Am. Actuar. J. 5, 96–124 (2001)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix: Glossary

Appendix: Glossary

1.1 A.1 Foundations

Symbol | Explanation |

|---|---|

X | random variable (r.v.) |

X=x | realization or observed value of r.v. X |

X continuous | r.v. X takes on any value in an interval (e.g., X= annual crop yield ∈[0,∞)) |

X discrete | r.v. X takes on only finite or countable many values (e.g., X= number of mining disasters ∈{0,1,2,…}) |

i.i.d. | independent and identically distributed |

θ | unknown parameter of a distribution (e.g., θ= probability of occurrence of a complication after a medical treatment) |

θ=(θ 1,…,θ p )′ | unknown parameters of a distribution (e.g., θ=(μ,σ 2), μ mean, σ 2 variance of a normal distribution) |

P θ (A) | probability that event A occurs when parameters θ are true |

F(x|θ) | cumulative distribution function (cdf) of r.v. X, i.e., F(x|θ)=P θ (X≤x) |

f(x|θ) | probability density function (pdf), when X continuous, i.e., f(x|θ)≥0, \(\int_{-\infty }^{\infty}f(x|\boldsymbol{\theta})dx=1\), \(P_{\boldsymbol{\theta}}(X\leq x)=\int_{-\infty}^{x} f(x|\boldsymbol{\theta})dx\) |

f(x|θ) | probability mass function (pmf), when X discrete, i.e., f(x|θ)=P θ (X=x) |

μ=E(X) | mean or expectation of r.v. X (\(E(X)=\int_{-\infty }^{\infty}x f(x|\boldsymbol{\theta})dx\) for X continuous) |

\(\sigma^{2}=\operatorname{Var}(X)\) | variance of r.v. X (\(\operatorname {Var}(X)=\int_{-\infty}^{\infty}(x-\mu)^{2} f(x|\boldsymbol{\theta})dx\) for X continuous) |

\(\phi=\frac{1}{\sigma^{2}}\) | precision of r.v. X |

X∼F(⋅|θ) | X has cdf F(⋅|θ) |

X∼f(⋅|θ) | X has pdf/pmf f(⋅|θ) |

(X,Y)∼f(⋅,⋅|θ) | r.v.s X and Y have joint pdf/pmf f(⋅,⋅|θ) |

f X (x|θ) (f Y (y|θ)) | marginal pdf for X (Y): \(f_{X}(x|\boldsymbol{\theta})=\int_{-\infty}^{\infty}f(x,y|\boldsymbol{\theta})dy\) (\(f_{Y}(y|\boldsymbol{\theta})=\int_{-\infty }^{\infty}f(x,y|\boldsymbol{\theta})dx\)) |

f X (x|θ) (f Y (y|θ)) | marginal pmf for X (Y): \(f_{X}(x|\boldsymbol{\theta})=\sum_{i=1}^{\infty}f(x,y_{i}|\boldsymbol{\theta})\) (\(f_{Y}(y|\boldsymbol{\theta})=\sum_{i=1}^{\infty}f(x_{i},y|\boldsymbol{\theta})\)) |

P θ (A|B) | conditional probability of A given B: \(P_{\boldsymbol{\theta}}(A|B) = \frac{P_{\boldsymbol{\theta }}(A\cap B)}{P_{\boldsymbol{\theta}}(B)}\) if P θ (B)>0 |

x α | α-quantile of continuous r.v. X: P θ (X≤x α )=α |

x 0.5 | median of continuous r.v. X |

x mode | mode of continuous r.v. X, that is the value which maximizes f(x|θ) over x |

X=(X 1,…,X n )′ | X random vector, where X 1,…,X n r.v.s |

F(x|θ) (f(x|θ)) | cdf (pdf/pmf) of X |

E(X)=(E(X 1),…,E(X n )) | mean vector of random vector X |

Σ=(Σ ij ) i,j=1,…,n | covariance matrix of random vector X with \(\Sigma_{ij}=\operatorname {Cov}(X_{i},X_{j})=E((X_{i}-\mu_{i})(X_{j}-\mu_{j}))\) |

Σ−1 | precision matrix of random vector X |

I(θ)=(I(θ) ij ) i,j=1,…,n | Fisher information matrix with \(I(\boldsymbol{\theta})_{ij}=E (\frac{\partial^{2} \ln f(\boldsymbol{X}|\boldsymbol{\theta})}{\partial \theta_{i} \partial\theta_{j}} )\) |

1.2 A.2 Distributions

Symbol | Explanation |

|---|---|

X∼N(μ,σ 2) | X is normally distributed with mean μ, variance σ 2 and pdf \(f(x|\mu,\sigma^{2})=\frac{1}{\sqrt{2\pi\sigma ^{2}}}\exp \{-\frac{1}{2\sigma^{2}}(x-\mu)^{2} \}\), \(x\in\mathbb{R}\) |

X∼Bernoulli(θ) | X is Bernoulli distributed with success probability θ∈(0,1) and pmf f(x|θ)=θ x(1−θ)1−x, x=0,1, E(X)=θ, \(\operatorname {Var}(X)=\theta(1-\theta)\) |

X∼Beta(α,β) | X is Beta distributed with parameters α>0, β>0 and pdf \(f(x|\alpha,\beta)= \frac {1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}\), x∈(0,1), \(B(\alpha ,\beta)= \int_{0}^{1} x^{\alpha-1}(1-x)^{\beta-1} dx\), \(E(X)=\frac{\alpha }{\alpha+\beta}\), \(\operatorname{Var}(X)=\frac{\alpha\beta}{(\alpha +\beta)^{2}(\alpha+\beta+1)}\) |

X∼Poisson(θ) | X is Poisson distributed with parameter θ>0 and pmf \(f(x|\theta)= \frac{\theta^{x}}{x!}e^{-x}\), x∈{0,1,2,…}, \(E(X)=\operatorname{Var}(X)=\theta\) |

X∼Gamma(α,β) | X is Gamma distributed with parameters α>0, β>0 and pdf \(f(x|\alpha,\beta)=\frac {1}{\Gamma(\alpha)}\beta^{\alpha}x^{\alpha-1} e^{-\beta x}\), x>0, \(\Gamma(\alpha)=\int_{0}^{\infty}x^{\alpha-1}e^{-x}dx\), \(E(X)=\frac {\alpha}{\beta}\), \(\operatorname{Var}(X)=\frac{\alpha}{\beta^{2}}\) |

X∼N(0,1) | X is standard normal with pdf \(\varphi(x)=\frac {1}{\sqrt{2\pi}}\exp \{-\frac{1}{2}x^{2} \}\), and cdf \(\Phi (x)=\int_{-\infty}^{x} \varphi(u) du\), E(X)=0, \(\operatorname{Var}(X)=1\) |

X∼N n (μ,Σ) | X is multivariate normally distributed with mean vector μ, covariance matrix Σ and pdf \(f(\boldsymbol{x}|\boldsymbol{\mu },\Sigma)=\frac{1}{(2\pi)^{n/2}} |\Sigma|^{-1/2} \exp \{-\frac {1}{2}(\boldsymbol{x}-\boldsymbol{\mu})^{\prime} \Sigma^{-1}(\boldsymbol {x}-\boldsymbol{\mu}) \}\), \(\boldsymbol{x}\in\mathbb{R}^{n}\), E(X)=μ, \(\operatorname{Var}(\boldsymbol {X})=\Sigma\) |

1.3 A.3 Classical Statistics

Symbol | Explanation |

|---|---|

θ (θ) | unknown fixed parameter to be estimated |

(x 1,…,x n )′ | i.i.d. sample (realizations) from r.v. X |

\(\hat{\theta}\) (\(\hat{\boldsymbol{\theta}}\)) | estimate of θ (θ) based on data x=(x 1,…,x n ) |

ℓ(θ|x) | likelihood for θ based on data x from X∼f(⋅|θ) given as ℓ(θ|x)=f(x|θ) |

\(\hat{\boldsymbol{\theta}}_{ML}\) | maximum likelihood estimator of θ: maximizes the likelihood ℓ(x|θ) over θ |

I −1(θ) | inverse Fisher information matrix, corresponds to asymptotic covariance matrix of the maximum likelihood estimator \(\hat{\boldsymbol{\theta}}_{ML}\) |

\(\bar{x}:=\frac{1}{n}\sum_{i=1}^{n} x_{i}\) | sample or empirical mean for the i.i.d. sample (x 1,…,x n ) |

\(s^{2}:=\frac{1}{n-1} \sum_{i=1}^{n} (x_{i}-\bar{x})^{2}\) | sample variance for the i.i.d. sample (x 1,…,x n ) |

Y i ∼N(x i1 β 1+⋯+x id β d ,σ 2) independent for i=1,…,d | linear regression model for response Y i , covariates x i1,…,x id and unknown regression coefficients β=(β 1,…,β d ) |

\(\hat{\boldsymbol{\beta}}_{LS}\) | least square estimator of β, given by minimizing \(Q(\boldsymbol{\beta})= \sum_{i=1}^{n} (y_{i}-x_{i1}\beta_{1}-\cdots-x_{id}\beta_{d})^{2}\) for observed responses y 1,…,y n |

[l(x),u(x)] | 100(1−α) % confidence interval for θ if P θ (l(x)≤θ≤u(x))≥1−α, that is, the random interval [l(x),u(x)] covers the true parameter θ in 100(1−α) % of times |

1.4 A.4 Bayesian Statistics

Symbol | Explanation |

|---|---|

θ (θ) | unknown random parameter |

p(θ) | prior pdf/pmf for θ |

p(θ|x) | posterior pdf/pmf of θ given the observed sample x from X∼f(⋅|θ) Bayes’ theorem: \(p(\boldsymbol{\theta}|\boldsymbol{x}) = \frac{\ell (\boldsymbol{\theta}|\boldsymbol{x}) p(\boldsymbol{\theta})}{\int_{-\infty}^{\infty}\ell(\boldsymbol{\theta}|\boldsymbol{x}) p(\boldsymbol {\theta}) d\boldsymbol{\theta}}\) |

θ mode(x) | posterior mode = mode of posterior distribution |

θ mean(x) | posterior mean = mean of posterior distribution |

I(x) | 100(1−α) % credible interval for θ if ∫ I(x) p(θ|x)dθ=1−α |

θ α (x) (\(\hat{\theta}_{\alpha}(\boldsymbol{x})\)) | (empirical) α-quantile of posterior distribution |

\([\hat{\theta}_{\alpha/2}(\boldsymbol{x}),\hat{\theta}_{1-\alpha /2}(\boldsymbol{x})]\) | 100(1−α) % credible interval based on empirical quantiles |

f(y|x) | predictive density of future observation y given the observations x: f(y|x)=∫f(y|θ)p(θ|x)d θ if Y is independent of X given θ |

1.5 A.5 MCMC Methods

Symbol | Explanation |

|---|---|

{θ (t):t∈T} | stochastic process with random vectors θ (t) taking values in the state space S for each t out of the index set T |

{θ (n):n=1,2,…} | Markov chain (MC) if (2.3) holds |

θ (n) homogeneous | if (2.3) does not depend on n |

P(x,y) :=P(θ (n+1)=y|θ (n)=x) | transition probability of homogeneous MC θ (n) with discrete state space S |

P=(P(x i ,x j )) i,j=1,…,r | transition matrix for a homogeneous MC θ (n) with finite state space S={x 1,…,x r }: P(x i ,x j )=P(θ (n+1)=x j |θ (n)=x i ) |

P m(x,y) :=P(θ (n+m)=y|θ (n)=x) | mth order transition probability for m>n |

π (0)(x)=P(θ (0)=x) | initial distribution of MC θ (n) |

π (n)(x)=P(θ (n)=x) | nth step marginal distribution of MC θ (n) |

π stationary | if (2.7) holds |

T y | first visit of MC θ (n) to y |

ρ xy | probability of visiting y after starting in x |

y∈S (positive) recurrent | ρ yy =1 (ρ yy =1 and E(T y |θ (0)=y)<∞) |

θ (n) irreducible | ρ xy >0, ρ yx >0 ∀x,y∈S |

θ (n) aperiodic | if largest common divisor of {n≥1:P n(x,x)>0}=1 ∀x∈S |

θ (n) ergodic | if θ (n) aperiodic and irreducible |

Full conditionals of random parameter θ=(θ 1,…,θ d )′ | conditional distributions of θ i , i=1,…,d, given all other components different from i |

Autocorrelation of lag k | correlation Cor(θ (n),θ (n+k)) in homogeneous MC θ (n) |

Rights and permissions

Copyright information

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Czado, C., Brechmann, E.C. (2014). Bayesian Risk Analysis. In: Klüppelberg, C., Straub, D., Welpe, I. (eds) Risk - A Multidisciplinary Introduction. Springer, Cham. https://doi.org/10.1007/978-3-319-04486-6_8

Download citation

DOI: https://doi.org/10.1007/978-3-319-04486-6_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-04485-9

Online ISBN: 978-3-319-04486-6

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)