Abstract

This paper analyzes the Durbin–Watson (DW) statistic for near-integrated processes. Using the Fredholm approach, we derive the limiting characteristic function of DW, focusing in particular on the effect of a “large initial condition” that grows with the sample size. Random and deterministic initial conditions are distinguished. We document the asymptotic local power of DW when testing for integration.

1 Introduction

In a series of papers Durbin and Watson (1950, 1951, 1971) developed a celebrated test to detect serial correlation of order one. The corresponding Durbin–Watson (DW) statistic was proposed by Sargan and Bhargava (1983) in order to test the null hypothesis of a random walk,

Bhargava (1986) established that the DW statistic for a random walk is uniformly most powerful against the alternative of a stationary AR(1) process. Local power of DW was investigated by Hisamatsu and Maekawa (1994) following the technique of White (1958). Hisamatsu and Maekawa (1994) worked under the following assumptions: (1) a model without intercept like above, (2) a zero (or at least negligible) starting value \(y_{0}\), (3) serially independent innovations \(\{\varepsilon _{t}\}\), and (4) homoskedastic innovations. Nabeya and Tanaka (1988, 1990a) and Tanaka (1990, 1996) introduced the so-called Fredholm approach to econometrics. Using this approach, Nabeya and Tanaka (1990b) investigated the local power of DW under a more realistic setup. They allowed for an intercept and also a linear trend in the model and for errors displaying serial correlation and heteroskedasticity of a certain degree. Here, we go one step beyond and relax the zero starting value assumption. To this end we adopt the Fredholm approach as well.

In particular, we obtain the limiting characteristic function of the DW statistic for near-integrated processes driven by serially correlated and heteroskedastic errors, with the primary aim of revealing the effect of a “large initial condition” growing with \(\sqrt{T}\), where T is the sample size. This starting value assumption has been singled out as a central theme by Müller and Elliott (2003); see also Harvey et al. (2009) for a recent discussion.

The rest of the paper is organized as follows. Section 2 fixes the notation and states the assumptions. The underlying Fredholm approach is presented and discussed in Sect. 3. Section 4 contains the limiting results. Section 5 illustrates the power function of DW. A summary concludes the paper. Proofs are relegated to the Appendix.

2 Notation and Assumptions

Before becoming precise on our assumptions we fix some standard notation. Let \(\mathbb{I}(\cdot )\) denote the usual indicator function, while \(I_{T}\) stands for the identity matrix of size T. All integrals are from 0 to 1 if not indicated otherwise, and \(w(\cdot )\) indicates a Wiener process or standard Brownian motion.

We assume that the time series observations \(\{y_{t}\}\) are generated from

where \(x_{t}\) is the deterministic component of \(y_{t}\) which we restrict to be a constant or a linear time trend. We maintain that the following conventional assumption governs the behavior of the stochastic process \(\{u_{t}\}\).

Assumption 1

The sequence \(\{u_{t}\}\) is generated by

while \(\{\varepsilon _{t}\}\) is a sequence of martingale differences with

for any \(\gamma > 0\), \(0 <\sigma ^{2} <\infty\), and \(F_{t}\) is the σ-algebra generated by the \(\varepsilon _{s},\ s \leq t\). Also we let \(\sigma _{u}^{2}:=\lim _{T\rightarrow \infty }\frac{1} {T}\sum _{t=1}^{T}E\left (u_{ t}^{2}\right )\) and \(\omega _{u}^{2}:=\lim _{T\rightarrow \infty }T^{-1}E\left (\sum \nolimits _{t=1}^{T}u_{t}\right )^{2} =\sigma ^{2}a^{2}\).

Assumption 2

In model (1) we allow ρ to depend on the sample size and set \(\rho _{T} = 1 - \frac{c} {T}\) with c > 0, where the null distribution is covered as the limiting case (\(c \rightarrow 0\)).

Assumption 3

For the starting value \(\eta _{0} =\xi\) we assume: a) \(\xi = o_{p}(\sqrt{T})\) (“small starting value”), where \(\xi\) may be random or deterministic; b) \(\xi =\delta \sqrt{\omega _{u }^{2 }/\left (1 -\rho _{ T }^{2 } \right )}\) where \(\delta \sim N\left [\mu _{\delta }\mathbb{I}\left (\sigma _{\delta }^{2} = 0\right ),\sigma _{\delta }^{2}\right ]\) and independent of \(\{u_{t}\}\) (“large starting value”).

Assumption 1 allows for heteroskedasticity of \(\{u_{t}\}\). If we assume homoskedasticity, \(E\left (\varepsilon _{t}^{2}\vert F_{t-1}\right ) =\sigma ^{2}\), then \(\{u_{t}\}\) is stationary. Under Assumption 1 an invariance principle is guaranteed (see, for example, Phillips and Solo 1992). By Assumption 2, the process \(\{\eta _{t}\}\) is near-integrated as defined by Phillips (1987). The initial condition under Assumption 3a) will be negligible as \(T \rightarrow \infty \). The effect of the initial condition under Assumption 3b) will not be negligible, and the specification of \(\delta\) compactly covers both the random and the fixed case, depending on the value of \(\sigma _{\delta }\).
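To make the scaling in Assumption 3b) concrete, the following minimal Python sketch simulates a near-integrated series. It assumes that the stochastic component follows the AR(1) recursion \(\eta _{t} =\rho _{T}\eta _{t-1} + u_{t}\) and that the \(u_{t}\) are i.i.d. standard normal, so that \(\omega _{u}^{2} = 1\); the function name `simulate_eta` and its arguments are illustrative and not taken from the paper.

```python
import numpy as np

def simulate_eta(T, c, delta, rng):
    """Simulate eta_0, ..., eta_T with rho_T = 1 - c/T (assumed AR(1) recursion)."""
    rho = 1.0 - c / T
    u = rng.standard_normal(T)  # assumption: i.i.d. N(0, 1) errors, omega_u^2 = 1
    eta = np.empty(T + 1)
    # Large starting value of Assumption 3b): xi = delta * sqrt(omega_u^2 / (1 - rho_T^2))
    eta[0] = delta * np.sqrt(1.0 / (1.0 - rho ** 2))
    for t in range(1, T + 1):
        eta[t] = rho * eta[t - 1] + u[t - 1]
    return eta, u, rho

rng = np.random.default_rng(0)
T, c, delta = 10_000, 5.0, 2.0
eta, u, rho = simulate_eta(T, c, delta, rng)
# 1 - rho_T^2 = 2c/T - c^2/T^2, so xi / sqrt(T) is close to delta / sqrt(2c)
ratio = eta[0] / np.sqrt(T)
```

Since \(1 -\rho _{T}^{2} \approx 2c/T\), the starting value behaves like \(\delta \sqrt{T/(2c)}\), which is the sense in which it grows with \(\sqrt{T}\).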

We distinguish the model with demeaning from that with detrending using μ and τ for the corresponding cases. The test statistics \(\mathit{DW}_{j,T}\) (\(j =\mu,\tau\)) are given by

where \(\hat{\eta }_{t}^{j}\) are OLS residuals calculated from (1) with \(\hat{\overline{\omega }}^{2}\) being a consistent estimator of \(\sigma _{u}^{2}/\omega _{u}^{2}\) (see Hamilton 1994, Sect. 10.5, for further discussions).

The test based on \(\mathit{DW}_{j,T}\) rejects the null hypothesis ρ = 1 in favor of ρ < 1 for large values of the statistic. The critical values are typically taken from the limiting distributions, \(\mathit{DW}_{j}\), which are characterized explicitly further down,

where \(\mathop{\rightarrow }\limits^{ D}\) denotes convergence in distribution as \(T \rightarrow \infty \).
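As a rough illustration of how such a statistic can be computed, the sketch below assumes the scaled form \(T\sum (\hat{\eta }_{t} -\hat{\eta }_{t-1})^{2}/(r\sum \hat{\eta }_{t}^{2})\), where r stands in for the estimate of \(\sigma _{u}^{2}/\omega _{u}^{2}\). This form is an assumption chosen so that the inverse has a nondegenerate limit; with i.i.d. errors r = 1 is a natural choice. The helper names `ols_residuals` and `dw_statistic` are hypothetical.

```python
import numpy as np

def ols_residuals(y, trend=False):
    """Residuals from regressing y on a constant (and a linear trend if trend=True)."""
    T = len(y)
    X = np.ones((T, 1))
    if trend:
        X = np.column_stack([X, np.arange(1, T + 1)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def dw_statistic(y, trend=False, ratio=1.0):
    """Scaled DW statistic; `ratio` stands in for the estimate of sigma_u^2 / omega_u^2."""
    res = ols_residuals(np.asarray(y, dtype=float), trend=trend)
    T = len(res)
    return T * np.sum(np.diff(res) ** 2) / (ratio * np.sum(res ** 2))

# Small hand-checkable input: the mean is zero, the squared differences sum to 4,
# and the squared residuals sum to 2, so the statistic equals 5 * 4 / 2.
value = dw_statistic([0.0, 1.0, 0.0, -1.0, 0.0])
```

With serially correlated errors, `ratio` would have to be replaced by a consistent estimate of \(\sigma _{u}^{2}/\omega _{u}^{2}\), which this sketch does not attempt.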

3 Fredholm Approach

The Fredholm approach relies on expressing limiting distributions as double Stieltjes integrals over a positive definite kernel K(s, t) that is symmetric and continuous on \([0,1] \times [0,1]\). Given a kernel, one defines a type I Fredholm integral equation as

with eigenvalue λ and eigenfunction f. The corresponding Fredholm determinant (FD) of the kernel is defined as (see Tanaka 1990, Eq. (24))

Further, the so-called resolvent \(\varGamma \left (s,t;\lambda \right )\) of the kernel (see Tanaka 1990, Eq. (25)) is

Those are the ingredients used to determine limiting characteristic functions following Nabeya and Tanaka (1990a) and more generally Tanaka (1990).

Let \(\mathit{DW}_{j}\) (\(j =\mu,\tau\)) represent the limit of \(\mathit{DW}_{j,T}\). \(\mathit{DW}_{j}^{-1}\) can be written as \(S_{X} =\int \left \{X\left (t\right ) + n\left (t\right )\right \}^{2}\mathit{dt}\) for some stochastic process \(X\left (t\right )\) and an integrable function \(n\left (t\right )\). Tanaka (1996, Theorem 5.9, p. 164) gives the characteristic function of random variables such as \(S_{X}\), summarized in the following lemma.

Lemma 1

The characteristic function of

for a continuous function \(n\left (t\right )\) is given by

where \(h\left (t\right )\) is the solution of the following type II Fredholm integral equation

evaluated at λ = 2iθ, \(K\left (s,t\right )\) is the covariance of \(X\left (t\right ),\) and \(m\left (t\right ) =\int K\left (s,t\right )n\left (s\right )\mathit{ds}\) .
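A sketch of why a characteristic function of this type factors into a Fredholm determinant and an exponential term can be given by an eigen-expansion. The notation below is assumed for illustration only: \((\lambda _{k},f_{k})\) are the eigenpairs of K, the \(Z_{k}\) are i.i.d. standard normal, and \(n_{k} =\int n(t)f_{k}(t)\mathit{dt}\). It relies on the c.f. of a noncentral chi-square variable and on \(D(\lambda ) =\prod _{k}(1 -\lambda /\lambda _{k})\).

```latex
% Assumed notation (not from the source): eigenpairs (\lambda_k, f_k) of K,
% Z_k i.i.d. N(0,1), n_k = \int n(t) f_k(t)\,dt.
\begin{align*}
X(t) &= \sum_{k} \frac{f_k(t)}{\sqrt{\lambda_k}}\, Z_k , \qquad
S_X = \sum_{k} \Bigl( \frac{Z_k}{\sqrt{\lambda_k}} + n_k \Bigr)^{2}, \\
E\, e^{i\theta S_X}
  &= \prod_{k} \Bigl(1 - \frac{2i\theta}{\lambda_k}\Bigr)^{-1/2}
     \exp\Bigl\{ \frac{i\theta\, n_k^{2}}{1 - 2i\theta/\lambda_k} \Bigr\} \\
  &= \bigl[D(2i\theta)\bigr]^{-1/2}
     \exp\Bigl\{ i\theta \int n^{2}(t)\,dt - 2\theta^{2} \int h(t)\, n(t)\,dt \Bigr\}, \\
h(t) &= \sum_{k} \frac{n_k}{\lambda_k - 2i\theta}\, f_k(t)
  \quad\text{solves}\quad
  h(t) = m(t) + \lambda \int K(s,t)\, h(s)\,ds , \quad \lambda = 2i\theta .
\end{align*}
```

The last line uses \(m(t) =\int K(s,t)n(s)\mathit{ds} =\sum _{k}(n_{k}/\lambda _{k})f_{k}(t)\), so that the coefficients of h are \(n_{k}/(\lambda _{k}-\lambda )\).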

Remark 1

Although Tanaka (1996, Theorem 5.9) presents this lemma for the covariance \(K\left (s,t\right )\) of \(X\left (t\right )\), his argument extends to a more general case. Adopting his arguments one can see that Lemma 1 essentially relies on an orthogonal decomposition of \(X\left (t\right )\), which does not necessarily have to be based on the covariance of \(X\left (t\right )\). In particular, if there exists a symmetric and continuous function \(C\left (s,t\right )\) such that

then we may find \(h\left (t\right )\) as in (7) by solving \(h\left (t\right ) =\int C\left (s,t\right )n\left (s\right )\mathit{ds} +\lambda \int C\left (s,t\right )h\left (s\right )\mathit{ds}\). This may in some cases shorten and simplify the derivations. As will be seen in the Appendix, we may find the characteristic function of \(\mathit{DW}_{\mu }\) by resorting to this remark.

4 Characteristic Functions

In Proposition 2 below, we will give expressions for the characteristic functions of \(\mathit{DW}_{j}^{-1}\) (\(j =\mu,\tau\)) employing Lemma 1. To that end we use that \(\mathit{DW }_{j}^{-1} =\int \left [X_{j}\left (t\right ) + n_{j}\left (t\right )\right ]^{2}\mathit{dt}\) for some integrable functions \(n_{j}\left (t\right )\) and demeaned and detrended Ornstein–Uhlenbeck processes \(X_{\mu }\left (t\right )\) and \(X_{\tau }\left (t\right )\), respectively; see Lemma 2 in the Appendix. \(n_{\mu }\left (t\right )\) and \(n_{\tau }\left (t\right )\) capture the effect of the initial condition; their exact forms are given in the Appendix. Hence, we are left with deriving the covariance functions of \(X_{\mu }\left (t\right )\) and \(X_{\tau }\left (t\right )\). We provide these rather straightforward results in the following proposition.

Proposition 1

The covariance functions of \(X_{\mu }\left (t\right )\) and \(X_{\tau }\left (t\right )\) from \(\mathit{DW }_{j}^{-1} =\int \left [X_{j}\left (t\right ) + n_{j}\left (t\right )\right ]^{2}\mathit{dt}\) are

where \(K_{1}\left (s,t\right ) = \frac{1} {2c}\left [e^{-c\left \vert s-t\right \vert } - e^{-c\left (s+t\right )}\right ]\) and the functions \(\phi _{k}\left (s\right )\), \(\psi _{k}\left (s\right )\), \(k = 1,2,\ldots,8\), and \(g\left (s\right )\) and the constant \(\omega _{0}\) can be found in the Appendix.
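The Fredholm determinant of the nondegenerate part \(K_{1}\) alone can be checked numerically. The sketch below discretizes \(K_{1}\) on a midpoint grid and compares \(\det (I -\lambda K_{1}/n)\) with the closed form \(e^{-c}\left (\cos \upsilon + c\sin \upsilon /\upsilon \right )\), \(\upsilon = \sqrt{\lambda -c^{2}}\), which is the expression commonly quoted for the Ornstein–Uhlenbeck kernel and is used here as an assumed reference. It does not cover \(K_{\mu }\) or \(K_{\tau }\), and the function names are illustrative.

```python
import numpy as np

def k1(s, t, c):
    """Kernel K_1(s, t) = [exp(-c|s-t|) - exp(-c(s+t))] / (2c)."""
    return (np.exp(-c * np.abs(s - t)) - np.exp(-c * (s + t))) / (2.0 * c)

def fd_numeric(lam, c, n=400):
    """Approximate D(lam) by det(I - lam * K / n) on a midpoint grid of size n."""
    t = (np.arange(n) + 0.5) / n
    K = k1(t[:, None], t[None, :], c)
    return np.linalg.det(np.eye(n) - lam * K / n)

def fd_closed(lam, c):
    """Assumed reference: e^{-c} (cos v + c sin v / v), v = sqrt(lam - c^2), lam != c^2."""
    v = np.sqrt(complex(lam - c ** 2))
    return (np.exp(-c) * (np.cos(v) + c * np.sin(v) / v)).real

lam, c = 2.0, 1.0
approx, exact = fd_numeric(lam, c), fd_closed(lam, c)
```

For real λ the two values should agree up to the discretization error of the grid; for the characteristic function one evaluates at λ = 2iθ instead.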

The problem dealt with in this paper technically translates into finding \(h_{j}\left (t\right )\) as outlined in Lemma 1 for \(K_{j}\left (s,t\right )\), j = μ, τ, i.e. finding \(h_{j}\left (t\right )\) that solves a type II Fredholm integral equation of the form (7). Solving a Fredholm integral equation in general requires knowledge of the FD and the resolvent of the associated kernel. The FDs for \(K_{j}\left (s,t\right )\) (j = μ, τ) are known (Nabeya and Tanaka 1990b), but the resolvents are not. Finding the resolvent is in general tedious, let alone the difficulties one might face in finding \(h_{j}\left (t\right )\) once the FD and the resolvent are known. To overcome these difficulties, we suggest a different approach to find \(h_{j}\left (t\right )\), which follows. As we see from Proposition 1, the kernels of the integral equations considered here are of the following general form

Thus to solve for \(h\left (t\right )\) in

we let \(\upsilon = \sqrt{\lambda -c^{2}}\) and observe that (8) is equivalent to

with the following boundary conditions

where

The solution to (9) can now be written as

where \(g_{k}\left (t\right )\), \(k = 1,2,\ldots,n\), are special solutions to the following differential equations

and \(g_{m}\left (t\right )\) is a special solution of

Using the boundary conditions (10) and (11) together with equations from (12), the unknowns \(c_{1}\), \(c_{2}\), \(b_{1}\), \(b_{2}\), …, \(b_{n+1}\) are found, giving an explicit form for (13). The solution for \(h\left (t\right )\) can then be used for the purposes of Lemma 1. It is important to note that if we replace \(K_{1}\left (s,t\right )\) with any other nondegenerate kernel, the boundary conditions (10) and (11) need to be modified accordingly.
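The idea of trading the integral equation for a boundary value problem can be illustrated in the simplest case, where the kernel is \(K_{1}\) alone (no degenerate terms) and \(n\left (t\right ) = 1\). Under these assumptions \(m^{{\prime\prime}}- c^{2}m = -n\), so h solves \(h^{{\prime\prime}} +\upsilon ^{2}h = -1\) with \(h(0) = 0\) and \(h^{{\prime}}(1) + ch(1) = 0\). The sketch compares this closed-form solution with a direct numerical (Nyström) solution of the integral equation for a real λ; it is a sanity check under stated assumptions, not the derivation used for \(K_{\mu }\) or \(K_{\tau }\).

```python
import numpy as np

def k1(s, t, c):
    """Kernel K_1(s, t) = [exp(-c|s-t|) - exp(-c(s+t))] / (2c)."""
    return (np.exp(-c * np.abs(s - t)) - np.exp(-c * (s + t))) / (2.0 * c)

def solve_fredholm2(lam, c, n_vals, t):
    """Solve h = m + lam * int K_1 h with m = int K_1 n on the midpoint grid t."""
    N = len(t)
    K = k1(t[:, None], t[None, :], c) / N  # kernel times quadrature weight 1/N
    m = K @ n_vals
    return np.linalg.solve(np.eye(N) - lam * K, m)

def h_ode(t, lam, c):
    """Closed form for n(t) = 1: h'' + v^2 h = -1, h(0) = 0, h'(1) + c h(1) = 0."""
    v = np.sqrt(lam - c ** 2)  # this sketch requires lam > c^2
    a = 1.0 / v ** 2
    b = (v * np.sin(v) + c * (1.0 - np.cos(v))) / (v ** 2 * (v * np.cos(v) + c * np.sin(v)))
    return -a + a * np.cos(v * t) + b * np.sin(v * t)

N, lam, c = 400, 2.0, 1.0
t = (np.arange(N) + 0.5) / N
h_num = solve_fredholm2(lam, c, np.ones(N), t)
max_gap = np.max(np.abs(h_num - h_ode(t, lam, c)))
```

The boundary conditions used here follow from \(K_{1}(s,0) = 0\) and \(\partial K_{1}(s,t)/\partial t + cK_{1}(s,t) = 0\) at t = 1; with degenerate terms added to the kernel, additional unknown constants enter as described above.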

Using the method described above we establish the following proposition containing our main results for \(\mathit{DW}_{j}\) (\(j =\mu,\tau\)).

Proposition 2

For \(\mathit{DW}_{j}\) (\(j =\mu,\tau\)) we have under Assumptions 1, 2, and 3b)

where \(D_{j}\left (\lambda \right )\) is the FD of \(K_{j}\left (s,t\right )\) with \(\upsilon = \sqrt{\lambda -c^{2}}\),

and

where \(\varPsi _{j}\left (\theta;c\right )\) for j = μ,τ are given in the Appendix.

Remark 2

Under Assumption 3a) the limiting distributions of \(\mathit{DW}_{j}\), j = μ, τ, are the same as the limiting distributions derived under a zero initial condition in Nabeya and Tanaka (1990b). These results are covered here when \(\mu _{\delta } =\sigma _{ \delta }^{2} = 0\).

Remark 3

The Fredholm determinants, \(D_{j}\left (\lambda \right )\), are taken from Nabeya and Tanaka (1990b).

Remark 4

It is possible to derive the characteristic functions using Girsanov’s theorem (see Girsanov 1960) given, for example, in Chap. 4 of Tanaka (1996). Further, note that Girsanov’s theorem has been tailored to statistics of the form of \(\mathit{DW}_{j}\) under Lemma 1 in Elliott and Müller (2006).

5 Power Calculation

To calculate the asymptotic power function of \(\mathit{DW}_{j,T}\) (\(j =\mu,\tau\)) we need the quantiles \(c_{j,1-\alpha }\) as critical values where (\(j =\mu,\tau\))

Our graphs rely on the α = 5% level with critical values \(c_{\mu,0.95} = 27.35230\) and \(c_{\tau,0.95} = 42.71679\) taken, up to an inversion, from Table 1 of Nabeya and Tanaka (1990b). Let \(\phi (\theta;c)\) stand for the characteristic functions obtained in Proposition 2 for large initial conditions, deterministic or random, in a local neighborhood of the null hypothesis characterized by c. With \(x = c_{j,1-\alpha }^{-1}\) we can hence compute the local power by evaluating the distribution function of \(\mathit{DW}_{j}^{-1}\) at x, where we employ the following inversion formula given in Imhof (1961)

When it comes to practical computations, Imhof’s formula (14) is evaluated using Simpson’s rule while correcting for the possible phase shifts which arise due to the square root map over the complex domain (see Tanaka 1996, Chap. 6, for a discussion).
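The numerical recipe can be sketched on a simpler characteristic function than those of Proposition 2. The example below inverts \(\left [\cos \sqrt{2i\theta }\right ]^{-1/2}\), the c.f. of \(\int w(t)^{2}\mathit{dt}\) for a standard Wiener process, as a stand-in. It uses the Gil-Pelaez form of the inversion integral, a hand-written composite Simpson rule, and `np.unwrap` as one possible way to keep the phase of the square root continuous. The truncation point `theta_max` and the grid size are ad hoc choices, and all function names are illustrative.

```python
import numpy as np

def cf_int_w2(theta):
    """C.f. of int_0^1 w(t)^2 dt, [cos sqrt(2 i theta)]^(-1/2), with a continuous phase."""
    d = np.cos(np.sqrt(2j * theta))
    phase = np.unwrap(np.angle(d))  # track the argument across branch cuts
    return np.exp(-0.5 * (np.log(np.abs(d)) + 1j * phase))

def simpson(y, dx):
    """Composite Simpson rule on an equally spaced grid with an odd number of points."""
    return dx / 3.0 * (y[0] + y[-1] + 4.0 * y[1:-1:2].sum() + 2.0 * y[2:-1:2].sum())

def cdf_by_inversion(x, theta_max=600.0, n=60_001):
    """F(x) = 1/2 - (1/pi) * int_0^inf Im[exp(-i theta x) phi(theta)] / theta d theta."""
    theta = np.linspace(1e-6, theta_max, n)  # start just above 0 to avoid 0/0
    integrand = np.imag(np.exp(-1j * theta * x) * cf_int_w2(theta)) / theta
    return 0.5 - simpson(integrand, theta[1] - theta[0]) / np.pi

p = cdf_by_inversion(1.656)  # 1.656 is the tabulated upper 5% point of int w^2
```

For the local power of the DW test one would replace `cf_int_w2` by the relevant c.f. and evaluate the distribution function at \(x = c_{j,1-\alpha }^{-1}\), since large values of the statistic correspond to small values of its inverse.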

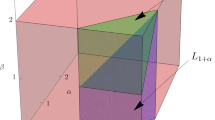

Figure 1 shows the local power functions of \(\mathit{DW}_{j}\) (\(j =\mu,\tau\)) for a deterministic large initial condition, that is for \(\sigma _{\delta }^{2} = 0\) in Assumption 3b). As is clear from Proposition 2, the power function is symmetric around \(\mu _{\delta } = 0\) and decreasing in \(\mu _{\delta }^{2}\) for any level of the local-to-unity parameter c.
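A finite-sample counterpart of this pattern can be explored by simulation. The sketch below estimates the rejection rate of the demeaned test by Monte Carlo, assuming i.i.d. standard normal errors (so that no long-run variance correction is needed), the scaled form \(T\sum (\varDelta \hat{\eta }_{t})^{2}/\sum \hat{\eta }_{t}^{2}\) of the statistic, and the asymptotic critical value 27.35230 quoted above. The function name, T = 200, and the number of replications are illustrative choices, not the paper's design.

```python
import numpy as np

def mc_power_mu(c, mu_delta, T=200, reps=1000, crit=27.35230, seed=0):
    """Monte Carlo rejection rate of the demeaned DW test (i.i.d. N(0,1) errors assumed)."""
    rng = np.random.default_rng(seed)
    rho = 1.0 - c / T
    eta = np.empty((reps, T + 1))
    # Deterministic large starting value: xi = mu_delta * sqrt(1 / (1 - rho^2))
    eta[:, 0] = mu_delta * np.sqrt(1.0 / (1.0 - rho ** 2))
    u = rng.standard_normal((reps, T))
    for t in range(1, T + 1):
        eta[:, t] = rho * eta[:, t - 1] + u[:, t - 1]
    obs = eta[:, 1:]                                  # observations t = 1, ..., T
    res = obs - obs.mean(axis=1, keepdims=True)       # demeaning
    dw = T * np.sum(np.diff(res, axis=1) ** 2, axis=1) / np.sum(res ** 2, axis=1)
    return np.mean(dw > crit)                         # reject for large values

power_small = mc_power_mu(c=10.0, mu_delta=0.0)
power_large = mc_power_mu(c=10.0, mu_delta=3.0)
```

Both calls use the same seed, so the two rejection rates are computed from the same error draws and differ only through the starting value.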

For the random case we set \(\mu _{\delta } = 0\). Figure 2 contains graphs for \(\sigma _{\delta } \in \{ 0,0.1,\ldots,3\}\); to keep shape conformity with the case of a large deterministic initial condition the graphs are arranged symmetrically around 0. Figure 2 shows by how much the power decreases in the variance of the initial condition.

6 Summary

We analyze the effect of a large initial condition, random or deterministic, on the local-to-unity power of the Durbin–Watson unit root test. Using the Fredholm approach, the characteristic functions of the limiting distributions are derived. We report the following findings. First, the local power after detrending is considerably lower than in the case of a constant mean. Second, a large initial value has a negative effect on power: the maximum power is achieved for \(\mu _{\delta } =\sigma _{\delta } = 0\), which corresponds to a “small initial condition.” Finally, comparing Figs. 1 and 2 one learns that deterministic and random initial values have a similar effect, depending only on the magnitude of the mean or the standard deviation, respectively, of the large initial condition.

References

Bhargava, A. (1986). On the theory of testing for unit roots in observed time series. Review of Economic Studies, 53, 369–384.

Durbin, J., & Watson, G. S. (1950). Testing for serial correlation in least squares regression. I. Biometrika, 37, 409–428.

Durbin, J., & Watson, G. S. (1951). Testing for serial correlation in least squares regression. II. Biometrika, 38, 159–178.

Durbin, J., & Watson, G. S. (1971). Testing for serial correlation in least squares regression. III. Biometrika, 58, 1–19.

Elliott, G., & Müller, U. K. (2006). Minimizing the impact of the initial condition on testing for unit roots. Journal of Econometrics, 135, 285–310.

Gil-Pelaez, J. (1951). Note on the inversion theorem. Biometrika, 38, 481–482.

Girsanov, I. (1960). On transforming a certain class of stochastic processes by absolutely continuous substitution of measures. Theory of Probability and Its Applications, 5, 285–301.

Gurland, J. (1948). Inversion formulae for the distribution of ratios. Annals of Mathematical Statistics, 19, 228–237.

Hamilton, J. D. (1994). Time series analysis. Princeton: Princeton University Press.

Harvey, D. I., Leybourne, S. J., & Taylor, A. M. R. (2009). Unit root testing in practice: Dealing with uncertainty over the trend and initial condition. Econometric Theory, 25, 587–636.

Hisamatsu, H., & Maekawa, K. (1994). The distribution of the Durbin-Watson statistic in integrated and near-integrated models. Journal of Econometrics, 61, 367–382.

Hochstadt, H. (1973). Integral equations. New York: Wiley.

Imhof, J. P. (1961). Computing the distribution of quadratic forms in normal variables. Biometrika, 48, 419–426.

Kondo, J. (1991). Integral equations. Oxford: Clarendon Press.

Kurozumi, E. (2002). Testing for stationarity with a break. Journal of Econometrics, 108, 63–99.

Müller, U. K., & Elliott, G. (2003). Tests for unit roots and the initial condition. Econometrica, 71, 1269–1286.

Nabeya, S. (2000). Asymptotic distributions for unit root test statistics in nearly integrated seasonal autoregressive models. Econometric Theory, 16, 200–230.

Nabeya, S. (2001). Approximation to the limiting distribution of t- and F-statistics in testing for seasonal unit roots. Econometric Theory, 17, 711–737.

Nabeya, S., & Tanaka, K. (1988). Asymptotic theory of a test for the constancy of regression coefficients against the random walk alternative. Annals of Statistics, 16, 218–235.

Nabeya, S., & Tanaka, K. (1990a). A general approach to the limiting distribution for estimators in time series regression with nonstable autoregressive errors. Econometrica, 58, 145–163.

Nabeya, S., & Tanaka, K. (1990b). Limiting power of unit-root tests in time-series regression. Journal of Econometrics, 46, 247–271.

Phillips, P. C. B. (1987). Towards a unified asymptotic theory for autoregressions. Biometrika, 74, 535–547.

Phillips, P. C. B., & Solo, V. (1992). Asymptotics for linear processes. The Annals of Statistics, 20, 971–1001.

Presno, M. J., & López, A. J. (2003). Testing for stationarity in series with a shift in the mean. A Fredholm approach. Test, 12, 195–213.

Sargan, J. D., & Bhargava, A. (1983). Testing residuals from least squares regression for being generated by the Gaussian random walk. Econometrica, 51, 153–174.

Tanaka, K. (1990). The Fredholm approach to asymptotic inference on nonstationary and noninvertible time series models. Econometric Theory, 6, 411–432.

Tanaka, K. (1996). Time series analysis: Nonstationary and noninvertible distribution theory. New York: Wiley.

White, J. S. (1958). The limiting distribution of the serial correlation coefficient in the explosive case. Annals of Mathematical Statistics, 29, 1188–1197.

Appendix

First we present a preliminary result. Lemma 2 contains the required limiting distributions in terms of Riemann integrals.

Lemma 2

Let \(\left \{y_{t}\right \}\) be generated according to (1) and satisfy Assumptions 1 and 2. It then holds for the test statistics from (2) asymptotically

where under Assumption 3b)

with \(w_{c}\left (r\right ) = w\left (r\right )\) for c = 0 and

and the standard Ornstein–Uhlenbeck process \(J_{c}\left (r\right ) =\int _{ 0}^{r}e^{-c\left (r-s\right )}\mathit{dw}\left (s\right )\) .

Proof

The proof is standard by using similar arguments as in Phillips (1987) and Müller and Elliott (2003).

1.1 Proof of Proposition 1

We set \(\upsilon = \sqrt{\lambda -c^{2}}\). For \(\mathit{DW}_{\mu }\) we have \(w_{c}^{\mu }\left (s\right ) = w_{c}\left (s\right ) -\int w_{c}\left (u\right )\mathit{du}\), thus

where

For \(\mathit{DW}_{\tau }\) we have \(w_{c}^{\tau }\left (s\right ) = w_{c}^{\mu }\left (s\right ) - 12\left (s -\frac{1} {2}\right )\int \left (u -\frac{1} {2}\right )w_{c}\left (u\right )\mathit{du}\), thus

With some calculus the desired result is obtained. In particular we have with \(\phi _{1}\left (s\right ) = -1\), \(\phi _{2}\left (s\right ) = -g\left (s\right )\), \(\phi _{3}\left (s\right ) = -3f_{1}\left (s\right )\), \(\phi _{4}\left (s\right ) = -3\left (s - 1/2\right )\), \(\phi _{5}\left (s\right ) = 3\omega _{1}\), \(\phi _{6}\left (s\right ) = 3\omega _{1}\left (s - 1/2\right )\), \(\phi _{7}\left (s\right ) = 6\omega _{2}\left (s - 1/2\right )\), \(\phi _{8}\left (s\right ) =\omega _{0}\), \(\psi _{1}\left (t\right ) = g\left (t\right )\), \(\psi _{2}\left (t\right ) =\psi _{6}\left (t\right ) =\psi _{8}\left (t\right ) = 1\), \(\psi _{3}\left (t\right ) =\psi _{5}\left (t\right ) =\psi _{7}\left (t\right ) = t - 1/2\) and \(\psi _{4}\left (t\right ) = f_{1}\left (t\right )\) while

This completes the proof.

1.2 Proof of Proposition 2

Let \(\mathcal{L}(X) = \mathcal{L}(Y )\) stand for equality in distribution of X and Y and set \(A =\delta \left (2c\right )^{-1/2}\). To begin with, we do the proofs conditioning on \(\delta\). Consider first \(\mathit{DW}_{\mu }\). To shorten the proofs for \(\mathit{DW}_{\mu }\) we work with the following representation for a demeaned Ornstein–Uhlenbeck process given under Theorem 3 of Nabeya and Tanaka (1990b), for their \(R_{1}^{\left (2\right )}\) test statistic, i.e. we write

where \(K_{0}\left (s,t\right ) = \frac{1} {2c}\left [e^{-c\left \vert s-t\right \vert } - e^{-c\left (2-s-t\right )}\right ] - \frac{1} {c^{2}} p\left (s\right )p\left (t\right )\) with \(p\left (t\right ) = 1 - e^{-c\left (1-t\right )}\). Using Lemma 2, we find that

For \(\mathit{DW}_{\mu }\) we will be looking for \(h_{\mu }\left (t\right )\) in

where \(m_{\mu }\left (t\right ) =\int K_{0}\left (s,t\right )n_{\mu }\left (s\right )\mathit{ds}\). Equation (15) is equivalent to the following boundary condition differential equation

with

where \(b_{1} =\int p\left (s\right )h_{\mu }\left (s\right )\mathit{ds}\) and \(b_{2} =\int e^{\mathit{cs}}h_{\mu }\left (s\right )\mathit{ds}\). Thus we have

where \(g_{\mu }\left (t\right )\) is a special solution to \(g_{\mu }^{{\prime\prime}}\left (t\right ) +\upsilon ^{2}g_{\mu }\left (t\right ) = m_{\mu }^{{\prime\prime}}\left (t\right ) - c^{2}m_{\mu }\left (t\right )\) and \(g_{1}\left (t\right )\) is a special solution to \(g_{1}^{{\prime\prime}}\left (t\right ) +\upsilon ^{2}g_{1}\left (t\right ) =\lambda\). Boundary conditions (17) and (18) together with \(h_{\mu }\left (t\right )\) imply

while expressions for \(b_{1}\) and \(b_{2}\) imply that

These form a system of linear equations in \(c_{1}^{\mu }\), \(c_{2}^{\mu }\), \(b_{1}\), and \(b_{2}\), which in turn identifies them. With some calculus we write

Solving for \(c_{1}^{\mu }\) and \(c_{2}^{\mu }\) we find that they are both a multiple of A, hence

is free of A. Now with \(\varTheta _{\mu } =\int n_{\mu }^{2}\left (t\right )\mathit{dt}\), an application of Lemma 1 results in

As \(\sqrt{2c}A =\delta \sim N\left (\mu _{\delta },\sigma _{\delta }^{2}\right )\), standard manipulations complete the proof for j = μ.

Next we turn to \(\mathit{DW}_{\tau }\). Using Lemma 2 we find that

Here we will be looking for \(h_{\tau }\left (t\right )\) in

where \(m_{\tau }\left (t\right ) =\int K_{\tau }\left (s,t\right )n_{\tau }\left (s\right )\mathit{ds}\) and \(K_{\tau }\left (s,t\right )\) is from Proposition 1. Equation (19) can be written as

with the following boundary conditions

where

The solution to (20) is

where \(g_{k}\left (t\right )\), \(k = 1,2,\ldots,8\), are special solutions to the following differential equations

and \(g_{\tau }\left (t\right )\) is a special solution of \(g_{\tau }^{{\prime\prime}}\left (t\right ) +\upsilon ^{2}g_{\tau }\left (t\right ) = m_{\tau }^{{\prime\prime}}\left (t\right ) - c^{2}m_{\tau }\left (t\right )\). The solution given in (24) can be written as

The boundary conditions in (21) and (22) imply

while expressions given under (23) characterize nine more equations. These equations form a system of linear equations in unknowns \(c_{1}^{\tau },\ c_{2}^{\tau },\ b_{1},\ \ldots,\ b_{9}\), which can be simply solved to fully identify (25). Let \(\varTheta _{\tau } =\int n_{\tau }\left (t\right )^{2}\mathit{dt}\). Also as for the constant case we set

whose expression is long and is not reported here. When solving this integral we see that \(\varPsi _{\tau }\left (\theta;c\right )\) is free of A. As before we apply Lemma 1 to establish the following

Now, using \(E\left [e^{i\theta \int \left \{w_{c}^{\tau }\left (r\right )\right \}^{2}dr }\right ] = EE\left [e^{i\theta \int \left \{w_{c}^{\tau }\left (r\right )\right \}^{2}dr }\vert \delta \right ]\), standard manipulations complete the proof.
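The final step can be spelled out as a Gaussian integral. The sketch assumes that, conditional on \(\delta\), the characteristic function has the generic form \(G(\theta )\exp \{a(\theta )\delta ^{2}\}\) with G and a free of \(\delta\); the symbol \(a(\theta )\) is a placeholder and not the paper's \(\varPsi _{j}\). For \(\delta \sim N(\mu _{\delta },\sigma _{\delta }^{2})\) one then has:

```latex
% Assumed generic form: conditional on \delta the c.f. is G(\theta)\exp\{a(\theta)\,\delta^{2}\};
% a = a(\theta) is a placeholder, not the paper's \varPsi_j.
\begin{align*}
E\bigl[e^{a\delta^{2}}\bigr]
 &= \frac{1}{\sqrt{2\pi\sigma_{\delta}^{2}}}\int_{-\infty}^{\infty}
    \exp\Bigl\{a x^{2} - \frac{(x-\mu_{\delta})^{2}}{2\sigma_{\delta}^{2}}\Bigr\}\,dx \\
 &= \bigl(1 - 2a\sigma_{\delta}^{2}\bigr)^{-1/2}
    \exp\Bigl\{\frac{a\,\mu_{\delta}^{2}}{1 - 2a\sigma_{\delta}^{2}}\Bigr\},
    \qquad \operatorname{Re}\bigl(1 - 2a\sigma_{\delta}^{2}\bigr) > 0 .
\end{align*}
```

For \(\sigma _{\delta }^{2} \rightarrow 0\) the right-hand side reduces to \(e^{a\mu _{\delta }^{2}}\) (deterministic case), and for a zero mean it reduces to \(\left (1 - 2a\sigma _{\delta }^{2}\right )^{-1/2}\) (random case of Assumption 3b).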

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Hassler, U., Hosseinkouchack, M. (2015). Distribution of the Durbin–Watson Statistic in Near Integrated Processes. In: Beran, J., Feng, Y., Hebbel, H. (eds) Empirical Economic and Financial Research. Advanced Studies in Theoretical and Applied Econometrics, vol 48. Springer, Cham. https://doi.org/10.1007/978-3-319-03122-4_26

DOI: https://doi.org/10.1007/978-3-319-03122-4_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-03121-7

Online ISBN: 978-3-319-03122-4
