Abstract

We review some recent results on the regularity problem of sub-Riemannian length minimizing curves. We also discuss a new nontrivial example of singular extremal that is not length minimizing near a point where its derivative is only Hölder continuous. In the final section, we list some open problems.

1 Introduction

One of the main open problems in sub-Riemannian geometry is the regularity of length minimizing curves, see [12, Problem 10.1]. All known examples of length minimizing curves are smooth. On the other hand, there is no regularity theory of a general character for sub-Riemannian geodesics.

It was originally claimed by Strichartz in [15] that length minimizing curves are smooth, all of them being normal extremals. The argument was flawed: it relied upon an incorrect application of the Pontryagin Maximum Principle, ignoring the possibility of abnormal (also called singular) extremals. In 1994 Montgomery discovered the first example of a singular length minimizing curve [11]. In fact, manifolds with distributions of rank 2 are rich in abnormal geodesics: in [9], Liu and Sussmann introduced a class of abnormal extremals, called regular abnormal extremals, that are always locally length minimizing. On the other hand, when the rank is at least 3 the situation is different. In [4], Chitour, Jean, and Trélat showed that for a generic distribution of rank at least 3 every singular curve is of minimal order and of corank 1. As a corollary, they show that a generic distribution of rank at least 3 does not admit (nontrivial) minimizing singular curves.

The question about the regularity of length minimizing curves remains open. The point, of course, is the regularity of abnormal minimizers. Some partial results in this direction are obtained in [8] and [13]. In this survey, we describe these and other recent results. In Sect. 5.2, we present the classification of abnormal extremals in Carnot groups [6], which was announced at the meeting Geometric control and sub-Riemannian geometry held in Cortona in May 2012. The example of a nonminimizing singular curve in Sect. 7 is new.

We refer the reader to the monograph [2] for an excellent introduction to Geometric Control Theory, see also the book in preparation [1].

2 Basic facts

Let M be an n-dimensional smooth manifold, n ≥ 3, let D be a completely non-integrable (i.e., bracket generating) distribution of r-planes on M, r ≥ 2, called the horizontal distribution, and let \(g = g_x\) be a quadratic form on D(x), varying smoothly with x ∈ M. The triple (M, D, g) is called a sub-Riemannian manifold.

A Lipschitz curve γ : [0, 1] → M is D-horizontal, or simply horizontal, if \(\dot \gamma (t) \in D(\gamma (t))\) for a.e. t ∈ [0, 1]. We can then define the length of γ

$$L(\gamma) = \int_0^1 \sqrt{g_{\gamma(t)}(\dot\gamma(t))}\,dt.$$

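For any pair of points x, y ∈ M, we define the function

$$d(x,y) = \inf \big\{ L(\gamma) : \gamma : [0,1] \to M \text{ is horizontal},\ \gamma(0) = x,\ \gamma(1) = y \big\}.$$((1))

If the above set is nonempty for any x, y ∈ M, then d is a distance on M, usually called the Carnot-Carathéodory distance.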

By construction, the metric space (M, d) is a length space. If this metric space is complete, then closed balls are compact, and by a standard application of the Ascoli-Arzelà theorem, the infimum in (1) is attained. Namely, for any given pair of points x, y ∈ M there exists at least one Lipschitz curve γ : [0, 1] → M joining x to y and such that L(γ) = d(x, y). This curve, which in general is not unique, is called a length minimizing curve. Its a priori regularity is the Lipschitz regularity. In particular, length minimizing curves are differentiable a.e. on [0, 1].
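As an illustration of these definitions, consider the following model case. It is an assumption made here only for the sake of example: M = ℝ3 with D = span{X_1, X_2}, where \(X_1 = \partial/\partial x_1\) and \(X_2 = \partial/\partial x_2 + x_1\,\partial/\partial x_3\), and g is the quadratic form making X_1 and X_2 orthonormal. Since \([X_1, X_2] = \partial/\partial x_3\), this distribution is bracket generating. A minimal sketch of what horizontality and length mean in this case:

$$
\begin{aligned}
\dot\gamma(t) \in D(\gamma(t)) \quad &\Longleftrightarrow \quad \dot\gamma = \dot\gamma_1 X_1(\gamma) + \dot\gamma_2 X_2(\gamma) \quad \Longleftrightarrow \quad \dot\gamma_3 = \gamma_1 \dot\gamma_2, \\
L(\gamma) &= \int_0^1 \sqrt{\dot\gamma_1(t)^2 + \dot\gamma_2(t)^2}\, dt .
\end{aligned}
$$

In this example a horizontal curve is determined by its starting point and by its planar projection \((\gamma_1, \gamma_2)\), and its length is the Euclidean length of that projection.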

For our purposes, we can assume that M is an open subset of ℝn or the whole ℝn itself, and that we have \(D(x) = \mathrm{span}\{X_1(x),\dots,X_r(x)\}\), x ∈ ℝn, where \(X_1,\dots,X_r\) are r ≥ 2 linearly independent smooth vector fields in ℝn. With respect to the standard basis of vector fields in ℝn, we have, for any j = 1,…,r,

$$X_j = \sum_{i=1}^n X_{ji} \frac{\partial}{\partial x_i},$$

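where \(X_{ji} : \mathbb{R}^n \to \mathbb{R}\) are smooth functions. A Lipschitz curve γ : [0, 1] → M is then horizontal if there exists a vector of functions \(h = (h_1,\dots,h_r) \in L^\infty([0,1];\mathbb{R}^r)\), called controls of γ, such that

$$\dot\gamma = \sum_{j=1}^r h_j X_j(\gamma) \quad \text{a.e. on } [0,1].$$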

We fix on D(x) the quadratic form \(g_x\) that makes \(X_1,\dots,X_r\) orthonormal. Any other choice of metric does not change the regularity problem. In this case, the length of γ is

$$L(\gamma) = \int_0^1 |h(t)|\,dt.$$

Let h = (h 1,…, h r ) be the controls of a horizontal curve γ. When γ is length minimizing we call the pair (γ, h) an optimal pair. Pontryagin Maximum Principle provides necessary conditions for a horizontal curve to be a minimizer.

Theorem 1. Let (γ, h) be an optimal pair. Then there exist \(\xi_0 \in \{0, 1\}\) and a Lipschitz curve ξ : [0, 1] → ℝn such that:

- i) \(\xi_0 + |\xi| \ne 0\) on [0, 1];

- ii) \(\xi_0 h_j + \langle \xi, X_j(\gamma)\rangle = 0\) on [0, 1] for all j = 1,…, r;

- iii) the coordinates \(\xi_k\), k = 1,…,n, of the curve ξ solve the system of differential equations

$$\dot\xi_k = - \sum_{j = 1}^r \sum_{i = 1}^n \frac{\partial X_{ji}}{\partial x_k}(\gamma)\, h_j\, \xi_i \quad \text{a.e. on } [0,1].$$((3))

Above, \(\langle \xi, X_j\rangle\) is the standard scalar product of ξ and \(X_j\) as vectors of ℝn. If we identify the curve ξ with the 1-form in ℝn along γ

$$\xi = \xi_1\,dx_1 + \cdots + \xi_n\,dx_n,$$

then \(\langle \xi, X_j\rangle\) is the covector-vector duality.

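The proof of Theorem 1 relies upon the open mapping theorem, see [2, Chap. 12]. For any \(\upsilon \in L^2([0,1];\mathbb{R}^r)\), let \(\gamma_\upsilon\) be the solution of the problem

$$\dot\gamma_\upsilon = \sum_{j=1}^r \upsilon_j X_j(\gamma_\upsilon) \quad \text{a.e. on } [0,1], \qquad \gamma_\upsilon(0) = x_0.$$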

The mapping E : L 2([0, 1];ℝr) → ℝn, E(υ) = γ υ (1), is called the end-point mapping with initial point x 0. The extended end-point mapping is the mapping F : L 2([0, 1]; ℝr) → ℝn+1

If (γ, h) is an optimal pair with \(\gamma(0) = x_0\), then F is not open at υ = h and hence its differential dF(h) is not surjective. It follows that there exists a nonzero vector \((\lambda_0, \lambda) \in \mathbb{R} \times \mathbb{R}^n = \mathbb{R}^{n+1}\) such that for all \(\upsilon \in L^2([0,1];\mathbb{R}^r)\) there holds \(\langle dF(h)\upsilon, (\lambda_0, \lambda)\rangle = 0\). The case \(\lambda_0 = 0\) is the case of abnormal extremals, which are precisely the critical points of the end-point mapping E, i.e., points h where the differential dE(h) is not surjective. In particular, the notion of abnormal extremal is independent of the metric fixed on the horizontal distribution.

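The curve ξ, sometimes called the dual curve of γ, is obtained in the following way. Let h be the controls of an optimal trajectory γ starting from \(x_0\). For x ∈ ℝn, let \(\gamma_x\) be the solution to the problem

$$\dot\gamma_x = \sum_{j=1}^r h_j X_j(\gamma_x) \quad \text{a.e.}, \qquad \gamma_x(0) = x.$$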

The optimal flow is the family of mappings P t : ℝn → ℝn, P t (x) = γ x (t) with t∈ ℝ. We are assuming that the flow is defined for any t ∈ ℝ. Let (λ0, λ) ∈ ℝ × ℝn be a vector orthogonal to the image of dF(h). At the point x 0 we have the 1-form ξ(0) = λ 1 dx 1 + … + λ n dx n , where (λ1,…, λ n ) are the coordinates of λ. Then the curve t ↦ ξ (t) given by the pull-back of ξ(0) along the optimal flow at time t, namely the curve

satisfies the adjoint Eq. (3).

We can use i)–iii) in Theorem 1 to define the notion of extremal. We say that a horizontal curve γ : [0, 1] → ℝn is an extremal if there exist \(\xi_0 \in \{0, 1\}\) and ξ ∈ Lip([0, 1]; ℝn) such that i), ii), and iii) in Theorem 1 hold. We say that γ is a normal extremal if there exists such a pair \((\xi_0, \xi)\) with \(\xi_0 \ne 0\). We say that γ is an abnormal extremal if there exists such a pair with \(\xi_0 = 0\). We say that γ is a strictly abnormal extremal if γ is an abnormal extremal but not a normal one.

If γ is an abnormal extremal with dual curve ξ, then by ii) we have, for any j = 1,…, r,

$$\langle \xi, X_j(\gamma)\rangle = 0 \quad \text{on } [0,1].$$((5))

Further necessary conditions on abnormal extremals can be obtained by differentiating identity (5). In fact, one gets for any j = 1,…, r,

$$\sum_{i=1}^r h_i \langle \xi, [X_i, X_j](\gamma)\rangle = 0 \quad \text{a.e. on } [0,1].$$((6))

When the rank is r = 2, from (6) along with the free assumption |h| ≠ 0 a.e. on [0, 1] we deduce that

$$\langle \xi, [X_1, X_2](\gamma)\rangle = 0 \quad \text{on } [0,1].$$((7))

In the case of strictly abnormal minimizers, necessary conditions analogous to (7) can be obtained also for r ≥ 3.
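The differentiation leading to (6) can be sketched in coordinates. The computation below is a reconstruction, not taken from the source: it assumes that γ is horizontal with controls h, i.e., \(\dot\gamma = \sum_i h_i X_i(\gamma)\) a.e., that ξ solves the adjoint Eq. (3), and it uses the coordinate expression \([X_i, X_j]_k = \sum_l (X_{il}\,\partial_l X_{jk} - X_{jl}\,\partial_l X_{ik})\) of the commutator, where \(\partial_l = \partial/\partial x_l\):

$$
\begin{aligned}
\frac{d}{dt}\langle \xi, X_j(\gamma)\rangle
&= \sum_{k} \dot\xi_k\, X_{jk}(\gamma) + \sum_{k,l} \xi_k\, \partial_l X_{jk}(\gamma)\, \dot\gamma_l \\
&= \sum_{i} h_i \sum_{k,l} \xi_k \big( X_{il}\, \partial_l X_{jk} - X_{jl}\, \partial_l X_{ik} \big)(\gamma) \\
&= \sum_{i} h_i\, \langle \xi, [X_i, X_j](\gamma)\rangle .
\end{aligned}
$$

Along an abnormal extremal the left-hand side vanishes a.e. by (5). When r = 2, the choices j = 1 and j = 2 give \(h_2 \langle \xi, [X_1, X_2](\gamma)\rangle = 0\) and \(h_1 \langle \xi, [X_1, X_2](\gamma)\rangle = 0\) a.e., so that \(\langle \xi, [X_1, X_2](\gamma)\rangle = 0\) wherever |h| ≠ 0, and then on all of [0, 1] by continuity.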

Theorem 2. Let γ : [0, 1] → ℝn be a strictly abnormal length minimizer. Then any dual curve ξ ∈ Lip([0, 1]; ℝn) of γ satisfies

$$\langle \xi, [X_i, X_j](\gamma)\rangle = 0 \quad \text{on } [0,1],$$((8))

for any i, j = 1,…,r.

Condition (8) is known as the Goh condition. Theorem 2 can be deduced from second order open mapping theorems. We refer to [2, Chap. 20] for a systematic treatment of the subject. See also the work [3].

The Goh condition naturally leads to the notion of Goh extremal. A horizontal curve γ : [0, 1] → ℝn is a Goh extremal if there exists a Lipschitz curve ξ : [0, 1] → ℝn such that ξ ≠ 0, ξ solves the adjoint Eq. (3) and \(\langle \xi, X_i(\gamma)\rangle = \langle \xi, [X_i, X_j](\gamma)\rangle = 0\) on [0, 1] for all i, j = 1,…, r.

3 Known regularity results

In this section, we collect some regularity results for extremal and length minimizing curves. Other results are discussed in Sect. 4. The case of normal extremals is clear and classical.

Theorem 3. Let (M, D, g) be any sub-Riemannian manifold. Normal extremals are C∞ curves that are locally length minimizing.

In fact, with the notation of Sect. 2, if γ is a normal extremal with controls h and dual curve ξ, by condition ii) in Theorem 1 with \(\xi_0 = 1\) we have, for any j = 1,…, r,

$$h_j = -\langle \xi, X_j(\gamma)\rangle \quad \text{on } [0,1].$$

This along with the adjoint Eq. (3) implies that the pair (γ, ξ) solves a.e. the system of Hamilton’s equations

where H is the Hamiltonian function

This implies that \(\dot \gamma \) and \(\dot \xi \) are Lipschitz continuous and thus γ, ξ ∈ C1,1. By iteration, one deduces that γ, ξ ∈ C ∞.

The fact that normal extremals are locally length minimizing follows by a calibration argument, see [9, Appendix C]. Indeed, using Hamilton's equations (10), the 1-form ξ along γ can be locally extended to an exact 1-form ξ satisfying

This 1-form provides the calibration.

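The distribution \(D = \mathrm{span}\{X_1,\dots,X_r\}\) on M is said to be bracket-generating of step 2 if for any x ∈ M we have

$$\dim \mathrm{span}\big\{ X_i(x),\ [X_i, X_j](x) : i, j = 1,\dots,r \big\} = n,$$((11))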

where n = dim(M). For distributions of step 2, Goh condition (8) implies the smoothness of any minimizer.

Theorem 4. Let (M, D, g) be a sub-Riemannian manifold where D is a distribution that is bracket generating of step 2. Then any length minimizing curve in (M, D, g) is of class C∞.

In fact, if γ is a strictly abnormal length minimizing curve with dual curve ξ then by (5), (8), and (11) it follows that ξ = 0 and this is not possible. In other words, there are no strictly abnormal minimizers and this implies the claim made in Theorem 4.

When the step of the distribution is at least 3, then there can exist strictly abnormal extremals. When the step is precisely 3, the regularity question is settled within the setting of Carnot groups. Let 𝔤 be a stratified nilpotent n-dimensional real Lie algebra with

$$\mathfrak{g} = \mathfrak{g}_1 \oplus \cdots \oplus \mathfrak{g}_s,$$

where \(\mathfrak{g}_{i+1} = [\mathfrak{g}_1, \mathfrak{g}_i]\) for i ≤ s − 1 and \(\mathfrak{g}_i = \{0\}\) for i > s.

The Lie algebra 𝔤 is the Lie algebra of a connected and simply connected Lie group G that is diffeomorphic to ℝn. Such a Lie group is called a Carnot group. The horizontal distribution D on G is induced by the first layer \(\mathfrak{g}_1\) of the Lie algebra. In fact, D is spanned by a system of r linearly independent left-invariant vector fields. By nilpotency, the distribution is bracket-generating. So any quadratic form on \(\mathfrak{g}_1\) induces a left-invariant sub-Riemannian metric on G. The number \(r = \dim(\mathfrak{g}_1)\) is the rank of the group. The number s ≥ 2 is the step of the group.

Theorem 5. Let G be a Carnot group of step s = 3 with a smooth left-invariant quadratic form g on the horizontal distribution D. Any length minimizing curve in (G, D, g) is of class C ∞.

This theorem is proved in [16]. A short and alternative proof, given in [6, Theorem 6.1], relies upon the fact that a strictly abnormal length minimizing curve must be contained in (a coset of) a proper Carnot subgroup. Then a reduction argument on the rank of the group reduces the analysis to the case r = 2, where abnormal extremals are easily shown to be integral curves of some horizontal left-invariant vector field.

When the step is s = 4, there is a regularity result only for Carnot groups of rank r = 2, see [8, Example 4.6].

Theorem 6. Let G be a Carnot group of step s = 4 and rank r = 2 with a smooth left invariant quadratic form g on the horizontal distribution D. Then any length minimizing curve in (G, D, g) is of class C ∞.

The proof of this result relies upon two facts. First, one proves that the horizontal coordinates of any abnormal extremal are contained in the zero set of a quadratic polynomial in two variables. This shows that the only singularity that abnormal extremals can have is of corner type. Then using a general theorem proved in [8] (see Sect. 4) one concludes that extremal curves with corners are not length minimizing.

When the rank is r = 2 and the step s is larger than 4, the best regularity known for minimizers is the C 1,δ regularity.

Theorem 7. Let G be a Carnot group of rank r = 2, step s > 4 and with Lie algebra \(\mathfrak{g} = \mathfrak{g}_1 \oplus \cdots \oplus \mathfrak{g}_s\) satisfying

Then any length minimizing curve in (G, D, g), where g is a smooth left-invariant metric on the horizontal distribution D , is of class C 1,δ for any

This theorem is proved in [13, Theorem 10.1]. It is a byproduct of a technique that is used to analyse the length minimality properties of extremals of class C1 whose derivative is only δ-Hölder continuous for some 0 < δ < 1. We give an example of such techniques in Sect. 7. The restriction δ < 2/(s − 4) is a technical one. The estimates developed in [13], however, show that the restriction δ < 1/4 is deeper. We shall discuss condition (12) in the next section.

4 Analysis of corner type singularities

Let M be a smooth manifold with dimension n ≥ 3, and let D be a completely non-integrable distribution on M. Let \(D_1 = D\) and \(D_i = [D_1, D_{i-1}]\) for i ≥ 2, i.e., \(D_i\) is the linear span of all commutators [X, Y] with \(X \in D_1\) and \(Y \in D_{i-1}\). We also let \(\mathcal{L}_0 = \{0\}\) and \(\mathcal{L}_i = D_1 + \dots + D_i\), i ≥ 1. By the nonintegrability condition, for any x ∈ M there exists s ∈ ℕ such that \(\mathcal{L}_s(x) = T_x M\), the tangent space of M at x. Assume that D is equiregular, i.e., assume that for each i = 1,…, s

In [8], Leonardi and the author proved the following theorem.

Theorem 8. Let (M, D, g) be a sub-Riemannian manifold, where g is a metric on the horizontal distribution D. Assume that D satisfies (14) and

Then any curve in M with a corner is not length minimizing in (M, D, g).

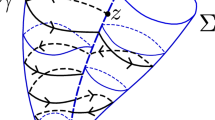

A “curve with a corner” is a D-horizontal curve γ : [0, 1] → M such that at some point t ∈ (0, 1) the left and right derivatives \(\dot\gamma_L(t)\) and \(\dot\gamma_R(t)\) exist and are different. The proof of Theorem 8 is divided into several steps.

- 1) First one blows up the manifold M, the distribution D, the metric g, and the curve γ at the corner point x = γ(t). The blow-up is in the sense of the nilpotent approximation of Mitchell, Margulis and Mostow (see e.g. [10]). The limit structure is a Carnot group and the limit curve is the union of two half-lines forming a corner.

- 2) The limit curve is actually contained in a subgroup of rank 2, and after a suitable choice of coordinates one can assume that the manifold is M = ℝn with a 2-dimensional distribution \(D = \mathrm{span}\{X_1, X_2\}\) spanned by the vector fields in ℝn

$$X_1 = \frac{\partial}{\partial x_1} \quad \text{and} \quad X_2 = \frac{\partial}{\partial x_2} + \sum_{j = 3}^n f_j(x) \frac{\partial}{\partial x_j},$$((16))

where \(f_j : \mathbb{R}^n \to \mathbb{R}\), j = 3,…,n, are polynomials with certain properties. The curve obtained after the blow-up is γ : [−1, 1] → ℝn

$$\gamma(t) = \begin{cases} -t e_2, & t \in [-1, 0], \\ t e_1, & t \in [0, 1], \end{cases}$$((17))

where \(e_1,\dots,e_n\) is the standard basis of ℝn. If the limit curve is not length minimizing in the limit structure, then the original curve is not length minimizing in the original structure.

- 3) At this stage, one uses (15). If the original distribution satisfies (15), then the limit Lie algebra satisfies (12) and the polynomials \(f_j\) only depend on the variables \(x_1\) and \(x_2\). This makes possible an effective and computable way to prove that the curve γ in (17) is not length minimizing. One cuts the corner of γ in the \(x_1 x_2\) plane, gaining some length. The new planar curve must be lifted to get a horizontal curve, changing in this way the end-point. One can use several different devices to bring the end-point back to its original position. To do this, one can only spend a total amount of length that is less than the length gained by the cut. This adjustment is in fact possible, and the entire construction is the main achievement of [8].
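The first move of step 3 can be made quantitative in the simplest case. The following is a minimal sketch, where the cut parameter ε ∈ (0, 1) and the triangle \(T_\varepsilon\) with vertices 0, \(\varepsilon e_1\), \(\varepsilon e_2\) are notation introduced here for illustration. It assumes the metric making \(X_1\) and \(X_2\) in (16) orthonormal, so that the length of a horizontal curve is the Euclidean length of its projection onto the \(x_1 x_2\) plane, and it assumes that each \(f_j\) depends only on \((x_1, x_2)\), so that the lifted coordinates satisfy \(\dot\gamma_j = f_j(\gamma_1,\gamma_2)\,\dot\gamma_2\). Replacing the two sides of the corner of length ε by the segment from \(\varepsilon e_2\) to \(\varepsilon e_1\) gives, by Green's theorem,

$$
\begin{aligned}
\text{gain of length} &= 2\varepsilon - \sqrt{2}\,\varepsilon = (2 - \sqrt{2})\,\varepsilon, \\
|\text{end-point displacement in } x_j| &= \Big| \int_{\partial T_\varepsilon} f_j \, dx_2 \Big| = \Big| \iint_{T_\varepsilon} \frac{\partial f_j}{\partial x_1}\, dx_1\, dx_2 \Big| \le \frac{\varepsilon^2}{2}\, \sup_{T_\varepsilon} \Big| \frac{\partial f_j}{\partial x_1} \Big| .
\end{aligned}
$$

The gain is linear in ε while the end-point error is at most quadratic. This is the margin that the correction devices have to exploit.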

The restriction (15) has a technical character. The problem of dropping this restriction is addressed in [14] (see also Sect. 6.2). The cut-and-adjust technique introduced in [8] is extended in [13] to the analysis of curves having singularities of higher order. In Sect. 7, we study a nontrivial example of such a situation.

5 Classification of abnormal extremals

The notion of abnormal extremal is rather indirect or implicit. There is a differential equation, the adjoint Eq. (3), involving the dual curve and the controls of the extremal. Even though this equation can be translated into some better form (see Theorem 2.6 in [6]), the information it carries is not transparent. In this section, we present some attempts to describe abnormal extremals in a more geometric or algebraic way.

5.1 Rank 2 distributions

We consider first the case when M = ℝ n and D is a rank 2 distribution in M spanned by vector fields X 1 and X 2 as in (16), where f 3,…,f n ∈ C ∞(ℝ2) are functions depending on the variables x 1 , x 2. We fix on D the quadratic form g making X 1 and X 2 orthonormal. Let K : ℝ n−2 × ℝ2 → ℝ be the function

where λ = (λ1,…, λ n−2) ∈ ℝn−2 and x ∈ ℝ2.

In this special situation, Pontryagin Maximum Principle can be rephrased in the following way (see Propositions 4.2 and 4.3 in [8]).

Theorem 9. Let γ : [0, 1] → M be a D-horizontal curve that is length minimizing in (M, D, g). Let \(\kappa = (\gamma_1, \gamma_2)\) and assume that \(|\dot\kappa| = 1\) almost everywhere. Then one (or both) of the following two statements holds:

- 1) there exists λ ∈ ℝn−2, λ ≠ 0, such that

$$K(\lambda, \kappa(t)) = 0 \quad \text{for all } t \in [0,1];$$((19))

- 2) the curve γ is smooth and there exists λ ∈ ℝn−2 such that κ solves the system of differential equations

$$\ddot\kappa = K(\lambda, \kappa)\, \dot\kappa^\perp,$$((20))

where \(\dot\kappa^\perp = (-\dot\kappa_2, \dot\kappa_1)\).

The geometric meaning of the curvature Eq. (20) was already noticed by Montgomery in [11].

The interesting case in Theorem 9 is the case 1): the curve κ, i. e., the horizontal coordinates of γ, is in the zero set of a nontrivial explicit function.

5.2 Stratified nilpotent Lie groups

In free stratified nilpotent Lie groups (free Carnot groups) there is an algebraic characterization of extremal curves in terms of an algebraic condition analogous to (19).

Let G be a free nilpotent Lie group with Lie algebra 𝔤. Fix a Hall basis \(X_1,\dots,X_n\) of 𝔤 and assume that the Lie algebra is generated by the first r elements \(X_1,\dots,X_r\). We refer to [5] for a precise definition of the Hall basis. The basis determines a collection of generalized structure constants \(c_{i\alpha }^k \in \mathbb{R}\), where \(\alpha = (\alpha_1,\dots,\alpha_n) \in \mathbb{N}^n\) is a multi-index and i, k ∈ {1,…,n}. These constants are defined via the identity

where the iterated commutator X α is defined via the relation

Using the constants \(c_{i\alpha }^k\) for any i = 1,…, n and for any multi-index α ∈ ℕn, we define the linear mappings ϕ iα : ℝn → ℝ

Finally, for each i = 1,…, n and υ ∈ ℝn, we introduce the polynomials \(P_i^v:{\mathbb{R}^n} \to \mathbb{R}\)

where we let \(x^\alpha = x_1^{\alpha_1} \cdots x_n^{\alpha_n}\).

The group G can be identified with ℝn via exponential coordinates of the second type induced by the basis X 1,…, X n . For any υ ∈ ℝn, υ ≠ 0, we call the set

an abnormal variety of G of corank 1. For linearly independent vectors \(\upsilon_1,\dots,\upsilon_m \in \mathbb{R}^n\), m ≥ 2, we call the set \(Z_{\upsilon_1} \cap \ldots \cap Z_{\upsilon_m}\) an abnormal variety of G of corank m. Recall that the property of having corank m for an abnormal extremal γ means that the range of the differential of the end-point map at the extremal curve is (n − m)-dimensional.

The main result of [6] is the following theorem.

Theorem 10. Let G = ℝn be a free nilpotent Lie group and let γ : [0, 1] → G be a horizontal curve with γ(0) = 0. The following statements are equivalent:

- A) the curve γ is an abnormal extremal of corank m ≥ 1;

- B) there exist m linearly independent vectors \(\upsilon_1,\dots,\upsilon_m \in \mathbb{R}^n\) such that \(\gamma(t) \in Z_{\upsilon_1} \cap \ldots \cap Z_{\upsilon_m}\) for all t ∈ [0, 1].

A stronger version of Theorem 10 holds for Goh extremals. If \(\mathfrak{g} = \mathfrak{g}_1 \oplus \mathfrak{g}_2 \oplus \cdots \oplus \mathfrak{g}_s\), we let \(r_1 = \dim(\mathfrak{g}_1)\) and \(r_2 = \dim(\mathfrak{g}_2)\). Then, for υ ∈ ℝn with υ ≠ 0 we define the zero set

Theorem 11. Let G = ℝn be a free nilpotent Lie group and let γ : [0, 1] → G be a horizontal curve such that γ(0) = 0. The following statements are equivalent:

- A) the curve γ is a Goh extremal;

- B) there exists υ ∈ ℝn, υ ≠ 0, such that \(\gamma(t) \in \Gamma_\upsilon\) for all t ∈ [0, 1].

The zero set \(\Gamma_\upsilon\) is always nontrivial for υ ≠ 0 and, moreover, there holds \(\upsilon_i = 0\) for all \(i = 1,\dots,r_1 + r_2\). See Remark 4.12 in [6].

These results are obtained via an explicit integration of the adjoint Eq. (3). Some work in progress [7] shows that Theorems 10 and 11 also hold in nonfree stratified nilpotent Lie groups.

6 Some examples

In this section, we present two examples. In the first one, we exhibit a Goh extremal having no regularity beyond the Lipschitz regularity. In the second example, there are extremals with corner in a sub-Riemannian manifold violating (15).

6.1 Purely Lipschitz Goh extremals

Let G be the free nilpotent Lie group of rank r = 3 and step s = 4. This group is diffeomorphic to ℝ32. By Theorem 11, Goh extremals of G starting from 0 are precisely the horizontal curves γ in G contained in the algebraic set

for some υ ∈ ℝ32 such that υ ≠ 0 and υ1 = … = υ6 = 0. The structure constants \(c_{i\alpha }^k\) are determined by the relations of the Lie algebra of G. Using (24), we can then compute the polynomials defining Γ υ (for details, see [6]). These are

Theorem 12. For any Lipschitz function ϕ : [0, 1] → ℝ with ϕ(0) = 0, the horizontal curve γ : [0, 1] → G = ℝ32 such that γ(0) = 0, \(\gamma_1(t) = t^2\), \(\gamma_2(t) = t\), and \(\gamma_3(t) = \phi(t)\) is a Goh extremal.

With the choice \(\upsilon_7 = 1\), \(\upsilon_{18} = 2\), and \(\upsilon_j = 0\) otherwise, the relevant polynomials are \(P_4^\upsilon(x) = x_2^2 - x_1\), \(P_5^\upsilon(x) = P_6^\upsilon(x) = 0\). Then, the curve γ is contained in the zero set \(\Gamma_\upsilon\) and, by Theorem 11, it is a Goh extremal. The Lipschitz function ϕ is arbitrary. It would be interesting to understand the length minimality properties of γ depending on the regularity of ϕ.
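The containment claimed above amounts to a one-line check. As a sketch, using only the polynomials quoted in this paragraph and the first two coordinates of γ from Theorem 12:

$$
P_4^\upsilon(\gamma(t)) = \gamma_2(t)^2 - \gamma_1(t) = t^2 - t^2 = 0, \qquad P_5^\upsilon(\gamma(t)) = P_6^\upsilon(\gamma(t)) = 0, \qquad t \in [0,1].
$$

None of these conditions involves \(\gamma_3 = \phi\), which is why ϕ can be an arbitrary Lipschitz function.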

6.2 A family of abnormal curves

During the meeting Geometric control and sub-Riemannian geometry, A. Agrachev and J. P. Gauthier suggested the following situation, in order to find a nonsmooth length-minimizing curve.

In M = ℝ4, consider the vector fields

and denote by D the distribution of 2-planes in ℝ4 spanned pointwise by X 1 and X 2. Fix a parameter α > 0 and consider the initial and final points L = (−1, α, 0, 0) ∈ ℝ4 and R = (1, α, 0, 0) ∈ ℝ4. Let γ : [−1, 1] → ℝ4 be the curve

The curve γ is horizontal and joins L to R. Moreover, it can be easily checked that γ is an abnormal extremal.

This situation is interesting because the distribution D violates condition (15) with i = 2 and j = 3. In fact, we have

That condition (15) is violated is also apparent from the fact that the nonhorizontal variable \(x_3\) does appear in the coefficients of the vector field \(X_1\) in (25). The fact that the distribution D is not equiregular is not relevant.

Agrachev and Gauthier asked whether the curve γ is length minimizing or not, especially for small α > 0. The results of [8] cannot be used, because of the failure of (15). In [14], we answered the question in the negative, at least when α ≠ 1.

Theorem 13. For any α > 0 with α ≠1, the curve γ in (26) is not length minimizing in (ℝ4, D, g), for any choice of metric g on D.

The proof is a lengthy adaptation of the cut-and-adjust technique of [8]. When α = 1 the construction of [13] does not work and, in this case, the length minimality property of γ remains open.

7 An extremal curve with Hölder continuous first derivative

On the manifold M = ℝ5, let D be the distribution spanned by the vector fields

We look for abnormal curves passing through 0 ∈ ℝ5. In view of Theorem 9, case 1), we consider the function K : ℝ3 × ℝ2 → ℝ, defined as in (18),

With the choice \(\lambda_1 = 0\), \(\lambda_2 = 1/5\), and \(\lambda_3 = -1\), the equation K(λ, x) = 0 reads \(x_1^4 - x_2^3 = 0\). Thus the curve κ : [0, 1] → ℝ2, \(\kappa(t) = (t, t^{4/3})\), is in the zero set of K. It can be checked that the horizontal curve γ : [0, 1] → M such that \((\gamma_1, \gamma_2) = \kappa\) is an abnormal extremal with dual curve ξ : [0, 1] → ℝ5,

Notice that we have, for any t ∈ [0,1],

Then, when t > 0 the curve γ is a regular abnormal extremal, in the sense of Definition 14 on page 36 of [9]. By Theorem 5 on page 59 of [9], the curve γ is therefore locally (uniquely) length minimizing on the set where t > 0.

The curve γ fails to be regular abnormal at t = 0. Moreover, there holds γ ∈ C 1,1/3([0, 1]; ℝ5) with no further regularity at t = 0. In this section, we show that γ is not length minimizing.
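Both the containment of κ in the zero set and the sharp Hölder exponent can be checked by hand. The following sketch concerns only the planar curve \(\kappa(t) = (t, t^{4/3})\) and uses the elementary inequality \(|a^{1/3} - b^{1/3}| \le |a - b|^{1/3}\) for a, b ≥ 0:

$$
\begin{aligned}
\kappa_1(t)^4 - \kappa_2(t)^3 &= t^4 - \big(t^{4/3}\big)^3 = 0, \\
\dot\kappa(t) &= \big(1, \tfrac{4}{3}\, t^{1/3}\big), \\
|\dot\kappa(t) - \dot\kappa(s)| &= \tfrac{4}{3}\, \big| t^{1/3} - s^{1/3} \big| \le \tfrac{4}{3}\, |t - s|^{1/3}, \qquad s, t \in [0,1].
\end{aligned}
$$

Equality holds for s = 0, so the exponent 1/3 cannot be improved at t = 0, whereas \(\dot\kappa\) is smooth for t > 0.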

Theorem 14. Let g be any metric on the distribution D. The horizontal curve γ : [0,1] → M defined above is not length minimizing in (M, D, g) at t = 0.

Proof. For any 0 < η < 1, let T η ⊂ ℝ2 be the set

The boundary \(\partial T_\eta\) is oriented counterclockwise. Let \(\kappa^\eta : [0, 1] \to \mathbb{R}^2\) be the curve \(\kappa^\eta(t) = (t, \eta^{1/3} t)\) for 0 ≤ t ≤ η and \(\kappa^\eta(t) = (t, t^{4/3})\) for η ≤ t ≤ 1, and let \(\gamma^\eta : [0, 1] \to \mathbb{R}^5\) be the horizontal curve such that \((\gamma_1^\eta, \gamma_2^\eta) = \kappa^\eta\).

We assume without loss of generality that g is the quadratic form on D that makes X 1 and X 2 orthonormal. The gain of length in passing from γ to γ η is

On the generic monomial \(x_1^{i + 1}x_2^j\) with i, j ∈ ℕ, the cut T η produces the error \(T_\eta ^{ij}\) given by the formula

We are interested in this formula when i = j = 0, when i = 4 and j = 0, when i = 0 and j = 3. The initial error produced by the cut T η is the vector of ℝ3

Only the exponents 7/3 and 19/3 of η are relevant, not the coefficients.
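The orders of magnitude involved can be recovered by a direct computation. The following is a sketch under assumptions that are not verified against the source: \(T_\eta\) is taken to be the region \(\{0 < x_1 < \eta,\ x_1^{4/3} < x_2 < \eta^{1/3} x_1\}\) between the chord \(\kappa^\eta\) and the curve κ, the length is the Euclidean length of the planar projection, and the author's \(T_\eta^{ij}\) may differ from the integrals below by sign and normalization.

$$
\begin{aligned}
L(\gamma) - L(\gamma^\eta) &= \int_0^\eta \sqrt{1 + \tfrac{16}{9}\, t^{2/3}}\; dt - \eta \sqrt{1 + \eta^{2/3}} = \Big( \tfrac{8}{15} - \tfrac{1}{2} \Big) \eta^{5/3} + O(\eta^{7/3}) = \tfrac{1}{30}\, \eta^{5/3} + O(\eta^{7/3}), \\
\iint_{T_\eta} x_1^i x_2^j \, dx_1 dx_2 &= \frac{1}{j+1} \Big( \frac{1}{i+j+2} - \frac{3}{3i+4j+7} \Big)\, \eta^{\,i + 1 + \frac{4}{3}(j+1)} .
\end{aligned}
$$

For (i, j) = (0, 0), (4, 0), and (0, 3) the second formula gives \(\tfrac{1}{14}\eta^{7/3}\), \(\tfrac{1}{114}\eta^{19/3}\), and \(\tfrac{1}{95}\eta^{19/3}\), respectively, in agreement with the exponents 7/3 and 19/3 mentioned above. The gain, of order \(\eta^{5/3}\), is the budget of length available for all subsequent corrections.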

Our first step is to correct the error of order η 7/3 on the third coordinate. For fixed parameters b > 0, λ > 0, and ε > 0, let us define the curvilinear rectangle

When ε < 0, we let

The boundary ∂R b ,λ(ε) is oriented counterclockwise if ε > 0, while it is oriented clockwise when ε < 0. The curve κ η is deviated along the boundary of this rectangle and then it is lifted to a horizontal curve. The effect of R b ,λ(ε) on the generic monomial \(x_1^{i + 1}x_2^j\) is

The cost of length of R b, λ(ε) is

When i = j = 0, formula (33) reads \(R_{b,\lambda }^{0,0}(\varepsilon ) = \varepsilon |\varepsilon {|^\lambda }\) whereas

and

where \(\widehat {R_{b,\lambda }^{0,3}}(\varepsilon )\) is defined via the last identity.

We choose b = η. The parameter 0 < λ < 1 will be fixed at the end of the argument. To correct the error on the third coordinate, we solve the equation

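in the unknown ε. In fact, this equation is \(\varepsilon |\varepsilon|^\lambda + \frac{1}{14}\eta^{7/3} = 0\) and the solution is

$$\varepsilon = -c_0\, \eta^\beta, \quad \text{with } c_0 = 14^{-1/(1+\lambda)} \text{ and } \beta = \frac{7}{3(1+\lambda)}.$$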

The choice b = η is not relevant, here. By (28) and (34), the cost of length is admissible if η >0 is small enough and we have

This is our first restriction on λ.
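The exponent count behind this first restriction can be sketched as follows. This is a reconstruction under two assumptions that are not verified against the source: that the gain of length of the cut is of order \(\eta^{5/3}\), and that the cost of length of the rectangle is comparable to |ε|, as for the costs of the rectangles used later in the proof.

$$
|\varepsilon|^{1+\lambda} = \tfrac{1}{14}\, \eta^{7/3}
\;\Longrightarrow\;
|\varepsilon| = 14^{-1/(1+\lambda)}\, \eta^{\beta}, \quad \beta = \frac{7}{3(1+\lambda)},
\qquad\text{and}\qquad
\beta > \frac{5}{3} \iff \lambda < \frac{2}{5}.
$$

Under these assumptions the cost is of smaller order than the gain for small η precisely when λ < 2/5, which in particular implies λ < 3/4.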

The rectangle R η,λ (ε) produces new errors on the fourth and fifth coordinates. Namely, by (35) we have

When λ < 3/4, a condition implied by (37), the leading term in η in the sum above is obtained for k = 0.

By (36), the error produced on the last coordinate is

When λ < 3/4, the bracket […] in the sum over k above is

The leading term in the sum in (39) is obtained for k = 3, and the second leading term is obtained for k = 2.

We have the new vector of errors

When λ < 3/4, the errors on the fourth and fifth coordinates produced by the rectangle R η ,λ dominate the errors produced by the cut, see (30). In fact, we have

Also the second leading term in \(R_{\eta ,\lambda }^{0,3}( - c_0 \eta^\beta)\) dominates \(T_\eta ^{0,3}\). In fact, we have

Now we use a rectangle \(R_{b,\mu}(\varepsilon)\) to correct the error on the fourth coordinate. Here, \(\frac{1}{2} < b < \frac{3}{4}\) is a position parameter and μ > 0 is small enough. Conceptually, we could take μ = 0. The parameter μ > 0 is only needed to confine the construction in a bounded region. We solve the equation

in the unknown ε. By the formulas computed above, we deduce that the solution \(\varepsilon = \overline \varepsilon \)

where c 1 > 0 is an explicit constant and the dots stand for lower order terms in η. The cost of length of the rectangle \({R_{b,\mu }}(\overline \varepsilon )\) is admissible for any μ > 0 close to 0, because β(1 + 5λ) > 5/3.

By (36) and (41), we have the identity

and, therefore, the new vector of errors is

where we have

with the coefficient \(c_2 = c_1^{1 + \mu}\).

In the next step, we correct simultaneously the errors on the fourth and fifth coordinates. We need curvilinear squares. Let 0 < b < 1 be a position parameter. For any ε ∈ (− 1,1), we let

The parameter λ of the rectangle is set to λ = 1. Set-theoretically, the definition is the same for positive and negative ε. However, when ε > 0 the boundary \(\partial Q_b(\varepsilon)\) of the square is oriented clockwise; when ε < 0 the boundary is oriented counterclockwise. The cost of length \(\Lambda(Q_b(\varepsilon))\) of the square is the sum of the lengths of the four sides. For some constant C > 0 independent of b and ε, we have

By (33), when ε > 0 the effect \(Q_b^{ij}(\varepsilon )\) of the square on the monomial \(x_1^{i + 1}x_2^j\) is

When ε < 0, we have \(Q_b^{ij}(\varepsilon ) = - Q_b^{ij}(|\varepsilon |)\).

Let 3/4 < b 1 < b 2 < 1 be position parameters and let μ > 0 be close to 0. We solve the system of equations

in the unknowns ε 1,ε2. Subtracting the first equation from the second one and using (36), we get the equivalent system

where

for some c 3 > 0. The dots stand for lower order terms in η. In fact, the leading term in \(R_{\eta ,\lambda }^{0,3}( - {c_0}{\eta ^\beta })\) dominates the remaining terms. Using a notation consistent with (35), we also let

Above, c 4 > 0 is a constant and the dots stand for negligible terms. Notice that we have control on the sign of the leading term.

The system (46) can thus be approximated in the following way

where c 5,…, c 8 > 0 are constants and the dots stand for negligible terms. We can compute ε 2 as a function of ε 1 from the first equation and replace this value into the second equation. This operation produces lower order terms. Thus the second equation reads

and there is a solution ε 1 < 0 satisfying

where c 9 > 0 and the dots stand for lower order terms in η. As a consequence, from the first equation in (47) we deduce that

The cost of length of the rectangle \({R_{{b_2},\mu }}({\varepsilon _2})\) is 2|ε 2|, and it is admissible because for μ > 0 close to 0 we have

By (45), the cost of length of the square \(Q_{b_1}(\varepsilon_1)\) is at most \(C|\varepsilon_1|\), and, for small η, it is admissible if and only if

Here, we have a nontrivial restriction for λ. This restriction is compatible with (37). Now the parameter λ is fixed once for all in such a way that

The device \(Q_{b_1}(\varepsilon_1)\) produces an error on the third coordinate of the order \(|\varepsilon_1|^2\), that is of the order \(\eta^{14(2 + \frac{1}{3}\lambda)/9}\). The device \(R_{b_2,\mu}(\varepsilon_2)\) produces an error on the third coordinate of the order \(|\varepsilon_2|^{1+\mu}\), that is of the order \(\eta^{19(1+\mu)/3}\). These errors are negligible with respect to the error \(R_{b,\mu}^{0,0}(\overline\varepsilon)\) appearing in (42)–(43). Eventually, after our last correction we have the vector of errors

the dots stand for lower order terms and the number ϱ satisfies the key condition ϱ < 1 provided that 0 < μ < λ. Now also μ is fixed.

Comparing the initial error E 0(η) in (30) and the error E 3(η) in (50), we realize that the initial error η 7/3 on the third coordinate decreased by a geometric factor ϱ < 1 Now we can iterate the entire construction to set to zero all the three components of the error. Here, we omit the details of this standard part of the argument. This finishes the proof.

Remark 1. The curve γ studied in Theorem 14 is of class \(C^{1,1/3}\). The curves considered in Theorem 7 are at most \(C^{1,1/4}\). There is a gap between the two cases. In the proof of Theorem 14, the key step is the choice of λ made in (49). In particular, there is a very delicate bound from below for λ. In the proof of Theorem 7, there is no such bound from below.

8 Final comments

Concerning the question about the regularity of length minimizing curves in sub-Riemannian manifolds, there are two possibilities. Either, in any sub-Riemannian manifold every length minimizing curve is \(C^\infty\) smooth (answer in the positive); or, there is some sub-Riemannian manifold with nonsmooth (non \(C^1\), non \(C^2\), etc.) length minimizing curves (answer in the negative). The author has no clear feeling on which of the two answers to bet.

Theorem 5 on step 3 Carnot groups suggests that, in sub-Riemannian manifolds of step 3, any length minimizing curve is \(C^\infty\) smooth. This seems to be the first question to investigate in view of an answer in the positive. In the same spirit, Theorem 6 suggests that in sub-Riemannian manifolds of rank 2 and step 4 any length minimizing curve is \(C^\infty\) smooth.

On the other hand, the first example to investigate in order to find a length minimizer with a corner type singularity is the one of Sect. 6.2 with the choice α = 1. Moreover, Theorem 7 and the computations made in Sect. 7 suggest looking for nonsmooth length minimizing curves in the class of \(C^{1,\delta}\) abnormal extremals with 0 < δ < 1 sufficiently close to 1. One interesting example could be the manifold \(M = \mathbb{R}^5\) with the distribution spanned by the vector fields

for m ∈ ℕ large.

Finally, the example of a purely Lipschitz Goh extremal of Sect. 6.1 proves that the first and second order necessary conditions for strictly abnormal extremals do not imply, in general, any further regularity beyond the given Lipschitz regularity. New and deeper techniques are needed in order to develop the regularity theory.

References

Agrachev, A., Barilari, D., Boscain, U.: Introduction to Riemannian and Sub-Riemannian geometry, http://people.sissa.it/ agrachev/agrachev_files/notes.html

Agrachev, A., Sachkov, Y.L.: Control Theory from the Geometric Viewpoint. Encyclopaedia of Mathematical Sciences 87. Control Theory and Optimization, II. Springer-Verlag, Berlin Heidelberg New York (2004)

Agrachev, A., Sarychev, A.: Abnormal sub-Riemannian geodesics: Morse index and rigidity. Ann. Inst. Henri Poincaré, 13(16), 635–690 (1996)

Chitour, Y., Jean, F., Trélat, E.: Genericity results for singular curves. J. Differential Geom. 73(1), 45–73 (2006)

Grayson, M., Grossman, R.: Models for free nilpotent Lie algebras. J. Algebra 135(1), 177–191 (1990)

Le Donne, E., Leonardi, G.P., Monti, R., Vittone, D.: Extremal curves in nilpotent Lie groups. Geom. Funct. Anal. 23(4), 1371–1401 (2013)

Le Donne, E., Leonardi, G.P., Monti, R., Vittone, D.: Extremal polynomials in stratified groups (forthcoming 2013)

Leonardi, G.P., Monti, R.: End-point equations and regularity of sub-Riemannian geodesics. Geom. Funct. Anal. 18(2), 552–582 (2008)

Liu, W., Sussmann, H.: Shortest paths for sub-Riemannian metrics on rank-two distributions, Mem. Amer. Math. Soc. 118, x+104 (1995)

Margulis, G.A., Mostow, G.D.: Some remarks on the definition of tangent cones in a Carnot-Carathéodory space, J. Anal. Math. 80, 299–317 (2000)

Montgomery, R.: Abnormal minimizers, SIAM J. Control Optim., 32, 1605–1620 (1994)

Montgomery, R.: A Tour of Sub-Riemannian Geometries, Their Geodesics and Applications, AMS (2002)

Monti, R.: Regularity results for sub-Riemannian geodesics, Calc. Var. 2013 (to appear). doi: 10.1007/s00526-012-0592-2s

Monti, R.: A family of nonminimizing abnormal curves, Ann. Mat. Pura Appl. 2013 (to appear). doi: 10.1007/s10231-013-0344-8

Strichartz, R.S.: Sub-Riemannian geometry, J. Differential Geom., 24, 221–263 (1986) [Corrections to “Sub-Riemannian geometry”, J. Differential Geom., 30, 595–596 (1989)]

Tan, K., Yang, X.: Subriemannian geodesics of Carnot groups of step 3. http://arxiv.org/pdf/1105.0844v1.pdf

© 2014 Springer International Publishing Switzerland

Monti, R. (2014). The regularity problem for sub-Riemannian geodesics. In: Stefani, G., Boscain, U., Gauthier, JP., Sarychev, A., Sigalotti, M. (eds) Geometric Control Theory and Sub-Riemannian Geometry. Springer INdAM Series, vol 5. Springer, Cham. https://doi.org/10.1007/978-3-319-02132-4_18

DOI: https://doi.org/10.1007/978-3-319-02132-4_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02131-7

Online ISBN: 978-3-319-02132-4
