Abstract

The core ambitions of map generalisation remain the same, as do a set of interconnected research activities concerned with algorithm development, user requirements modelling, evaluation methodologies, and the handling and integration of multi-scale, global datasets. Technology continues to afford new paradigms of data capture and use, in turn requiring generalisation techniques that are capable of working with a wider variety of data sources (including user generated content), and web-friendly mapping services that conceal the complexities of the map design process from the lay user. This summary chapter highlights, yet again, the truly interdisciplinary nature of map generalisation research.

12.1 Major Achievements

Within the last generalisation book six topics of generalisation research were listed in the research agenda (Mackaness et al. 2007):

1. Modelling user requirements
2. Evaluation methodologies
3. Generalisation and global datasets
4. Generalisation in support of maintenance of multiple representation databases
5. Generalisation and interoperability
6. Map generalisation and geoinformatics

Several chapters within this book have presented successful research related to these topics. For example, Chap. 2 refers directly to “Modelling user requirements” (1) and Chap. 9 is focused on the topic of “Evaluation methodologies” (2). Some of the research topics have become even more important in the light of recent developments within cartography. This is especially true of “Generalisation and interoperability” (5), given the current focus of research on the integration of user generated content and multi-sourced data, now available through all kinds of geosensors (a topic covered in Chap. 5). Developments in multiple representation database (MRDB) research have led to successful implementations within national mapping agencies (NMAs) (Chap. 11); however, maintenance issues (4) have not been thoroughly researched, though they remain an important topic. The next subsections describe progress made against this research agenda, summarising the major achievements presented within the chapters of this book.

12.1.1 Automated Generalisation for Topographic Map Production Within NMAs

The development of automated generalisation solutions for topographic map production has been a long-standing research field in cartography. One measure of success has been the relatively recent implementation of fully automated generalisation solutions within the production environments of national mapping agencies (Chap. 11). One can speak of something of a breakthrough in the automated derivation of topographic maps that only a few years ago was not considered possible because of its complexity (Foerster et al. 2010). Adoption has been fuelled by (1) progress in research and development in automated generalisation, and (2) the need to make cost savings, whilst acknowledging that it is perhaps unrealistic to suppose that automated solutions can be as good as the human hand. Given the cost of manual intervention, the involvement of the cartographer has either been reduced or removed.

12.1.2 Capturing of User Requirements for On-Demand Mapping

In addition to the advances made in the automated derivation of predefined topographic map series, on-going research has especially focused on a user oriented perspective, that is to say, systems that have the flexibility to support on-demand mapping solutions. In this context, the user and the application govern cartographic design and the degree of generalisation. Prerequisites are: (1) the collection and interpretation of user requirements, and (2) their automated transformation into a map specification. Influencing factors are modelled through a variety of user variables such as context of use, user preferences and visual disabilities. These all have an influence on the map specification. One approach presented in Chap. 2 illustrates how the derivation of low level cartographic constraints can be used to guide the generalisation process (based on high level map specifications defined by the user). Currently, map specification models designed for on-demand mapping use constraint based methodologies for data integration and generalisation. Another case study illustrates the collection of user requirements through map samples, utilising the COLorLEGend system for making creative colour choices.
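The step from a high level map specification to low level constraints can be illustrated with a small worked example. The sketch below is not the model presented in Chap. 2; it only assumes that legibility thresholds are stated in map millimetres and converted to ground metres through the scale denominator. The threshold values, the `low_vision` flag and its enlargement factor, and all function names are hypothetical.

```python
# Hypothetical legibility thresholds in map millimetres (illustrative values only).
MIN_BUILDING_SIDE_MM = 0.3
MIN_SEPARATION_MM = 0.2


def ground_size_m(map_size_mm: float, scale_denominator: int) -> float:
    """Convert a size on the map (mm) to a size on the ground (m)."""
    return map_size_mm * scale_denominator / 1000.0


def derive_constraints(spec: dict) -> dict:
    """Turn a high level map specification into low level size constraints."""
    scale = spec["scale_denominator"]
    # Assumed rule: enlarge all thresholds for users with reduced vision.
    factor = 1.5 if spec.get("low_vision", False) else 1.0
    return {
        "min_building_side_m": ground_size_m(MIN_BUILDING_SIDE_MM * factor, scale),
        "min_separation_m": ground_size_m(MIN_SEPARATION_MM * factor, scale),
    }


constraints = derive_constraints({"scale_denominator": 50000})
print(constraints)
```

At 1:50,000 a threshold of 0.3 mm on the map corresponds to about 15 m on the ground, so any building side shorter than that would violate the constraint and trigger a generalisation operation such as enlargement or elimination.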

12.1.3 Generalisation Process Modelling

The impact of on-demand mapping for generalisation process modelling has been detailed in Chap. 7. These approaches illustrate how the generalisation process can be decomposed into components that have the potential to be developed independently of each other and shared over the web. Many generalisation tools have been designed and implemented, but the challenge of creating systems capable of using them automatically remains very real. Web generalisation services have been proposed as a way to encapsulate and publish generalisation tools for further re-use. A prerequisite is the formalisation of procedural knowledge (for example through rules, constraints and ontologies) with the aim of chaining generalisation operators together in order to offer web generalisation services. Successful implementations of the automated chaining of generalisation components were presented with the collaborative generalisation (CollaGen) approach documented in a case study in Chap. 7. In CollaGen, the generalisation functionalities of the components are described together with pre-conditions for data input and post-conditions for the expected data modifications caused by the generalisation processes.
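The idea of describing components by pre-conditions and post-conditions, and chaining them automatically, can be sketched as follows. This is not the CollaGen implementation; it is a minimal greedy forward-chaining sketch in which conditions are reduced to plain strings, and the component names and condition labels are invented for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set


@dataclass(frozen=True)
class Component:
    """A generalisation component described by what it needs and what it guarantees."""
    name: str
    preconditions: FrozenSet[str]   # properties the input data must already have
    postconditions: FrozenSet[str]  # properties the output data will have


def chain_components(components: Iterable[Component],
                     initial: Set[str],
                     goal: Set[str]) -> List[str]:
    """Greedy forward chaining: apply any component whose pre-conditions hold
    and which adds a new property, until every goal property is reached."""
    state = set(initial)
    remaining = list(components)
    plan: List[str] = []
    while not goal <= state:
        applicable = [c for c in remaining
                      if c.preconditions <= state and not c.postconditions <= state]
        if not applicable:
            raise ValueError("no applicable component; goal cannot be reached")
        chosen = applicable[0]
        plan.append(chosen.name)
        state |= chosen.postconditions
        remaining.remove(chosen)
    return plan
```

A greedy chain of this kind may apply components that are not strictly needed for the goal; a production system would also have to compare alternative chains and evaluate the result after each step.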

12.1.4 Contextual Generalisation

Another important generalisation domain in which substantial progress has been made in recent years is contextual generalisation, which requires us to make explicit the spatial relationships that exist among geographic entities. Chapter 3 provides an overview of existing classifications and modelling frameworks for spatial relations. On-going research continues to explore the utilisation of a ‘spatial relations ontology’ to support automated generalisation processes. Case studies illustrate the spatial relation modelling for the extraction of groups of objects, the migration of topographic data with thematic user generated data, as well as the extension of CityGML for modelling spatial relations in 3D environments. In Chap. 8 relations between field and object type data are modelled for the purposes of terrain generalisation. Here, the focus has been on maintaining topological relationships between rivers, contours and spot heights. The GAEL model presented in one case study of this chapter offers a solution that handles object-field relations within the generalisation process and thus allows us to find a balance between the deformation and preservation of features. Generalisation of relief and hydrographic networks, based on generic deformation algorithms, is carried out in parallel in order to preserve their common outflow relation. The GAEL model has been further developed from a research prototype to a software component that forms part of the production line for the derivation of a 1:25,000 scale base map of France.

12.1.5 Generalisation of Object Groups and Networks

In the past, research and development of generalisation algorithms focussed on isolated objects. In recent years the generalisation of groups of objects and networks has attracted more and more attention. Ideas related to the generalisation of individual objects have been extended to the generalisation of groups of objects. Several approaches were proposed for the generalisation of road and stream networks, and of island and building groups (case studies in Chaps. 3, 6 and 9). The strategies illustrate the importance of data enrichment to support generalisation that is tailored to specific landscape and settlement contexts. As networks vary with local geographic conditions, network thinning operations now take into account local line density variations. For river networks, ‘prominence estimates’ enable a ranking of ‘strokes’ based on stream order, stream name, longest path, drainage area, straightness and the number of upstream branches. In the case of road networks, combined stroke and mesh-density strategies have been successfully applied to the task of ‘thinning’. Research on building alignments has concentrated on the development of strategies for preserving alignment properties. This requires corresponding relations between source and target groups to be made explicit, which in turn requires the implementation of both pattern recognition and matching strategies. Evaluation of this work shows that group characteristics of building alignments are not only preserved but also become more homogeneous.
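A ranking of river strokes by prominence can be sketched as a weighted sum of the criteria listed above. The sketch below is an assumption-laden illustration, not the method of the case studies: the equal weights, the normalisation by the dataset maximum, and the `keep_fraction` parameter are hypothetical, and local line density variations are ignored.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Stroke:
    stroke_id: str
    stream_order: int
    has_name: bool
    length_km: float
    drainage_area_km2: float
    straightness: float       # 0 (sinuous) to 1 (straight)
    upstream_branches: int


# Hypothetical equal weights; real weights would need empirical tuning.
WEIGHTS: Dict[str, float] = {
    "stream_order": 1.0, "has_name": 1.0, "length_km": 1.0,
    "drainage_area_km2": 1.0, "straightness": 1.0, "upstream_branches": 1.0,
}


def rank_strokes(strokes: List[Stroke]) -> List[Stroke]:
    """Order strokes from most to least prominent."""
    # Normalise each criterion by its maximum so that all lie in [0, 1].
    maxima = {
        attr: max((float(getattr(s, attr)) for s in strokes), default=0.0) or 1.0
        for attr in WEIGHTS
    }

    def prominence(s: Stroke) -> float:
        return sum(w * float(getattr(s, attr)) / maxima[attr]
                   for attr, w in WEIGHTS.items())

    return sorted(strokes, key=prominence, reverse=True)


def thin_network(strokes: List[Stroke], keep_fraction: float) -> List[Stroke]:
    """Keep only the most prominent share of the strokes."""
    ranked = rank_strokes(strokes)
    keep = math.ceil(keep_fraction * len(ranked))
    return ranked[:keep]
```

A density-aware variant would apply the same ranking within local partitions of the network rather than globally, so that sparse areas are not emptied while dense areas remain cluttered.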

12.1.6 Evaluation in Automated Generalisation

Chapter 9 presents developments in evaluation methodologies. The two main strategies are visual and automated evaluation. While visual evaluation is qualitative, time consuming and focused on map readability, automated evaluation is quantitative and is particularly appropriate for assessing specific feature representations. Research has also been undertaken to define map readability by using map readability formulas assessed through empirical user studies. Measures of readability include: the amount of information, spatial distribution, object complexity and graphical resolution. The results revealed that some measures of content and spatial distribution corresponded well with participants’ opinions, while measures of object complexity did not show the same correspondence.

12.1.7 Generalisation of User Generated Content and Multi-Source Data

Cartographic visualisations and mashups in the context of web mapping and mobile applications continue to evolve (demonstrating further application of automated generalisation techniques). The need for this type of mapping has been fuelled by the increasing availability of volunteered geographic information and geosensor data over the web. The integration and visualisation of such multi-source data presents new generalisation challenges arising from the heterogeneity of data, the differences in quality and the opportunities afforded by tag-based semantic modelling. These issues are discussed in Chap. 5. New opportunities lie in combining VGI data with topographic and other spatial reference data in order to provide meaningful contexts. Generalisation is needed in order to present information with clear semantics, consistent topology and free of graphical conflicts—the overall ambition being to avoid misinterpretation and incorrect decisions. One case study in this chapter compared generalisation of OpenStreetMap data with the generalisation of authoritative data. Generalisation in OSM mainly utilises selection and re-classification operations, with an emphasis on performance and short update intervals between data capturing and map rendering. The ambition is to avoid any manual post processing. In contrast the officially controlled generalisation of NMA data uses the complete set of generalisation operations, with a focus on cartographic quality, over longer update cycles, supported by manually controlled interactions. These activities reflect the importance of quality control in NMA data.
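Selection and re-classification driven by tags can be sketched in a few lines. The `highway` values below are real OpenStreetMap tag values, but the three target classes, the minimum zoom levels, and the function name are hypothetical choices made for illustration and do not reproduce any particular OSM rendering style.

```python
from typing import Dict, List

# Re-classification: many detailed tag values collapse into a few map classes.
RECLASSIFY: Dict[str, str] = {
    "motorway": "major", "trunk": "major", "primary": "major",
    "secondary": "minor", "tertiary": "minor",
    "residential": "local", "service": "local",
}

# Selection: hypothetical minimum zoom level at which each class is drawn.
MIN_ZOOM: Dict[str, int] = {"major": 5, "minor": 10, "local": 13}


def generalise_features(features: List[dict], zoom: int) -> List[dict]:
    """Select and re-classify road features for a given zoom level."""
    result = []
    for feature in features:
        map_class = RECLASSIFY.get(feature["tags"].get("highway"))
        if map_class is None:
            continue  # untagged or unknown road type: not rendered
        if zoom >= MIN_ZOOM[map_class]:
            result.append({"id": feature["id"], "class": map_class})
    return result
```

Because each feature is handled independently using only its attributes, this kind of processing is fast and needs no manual post-processing, but it cannot resolve graphical conflicts between neighbouring features.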

12.1.8 On-the-Fly and Continuous Generalisation

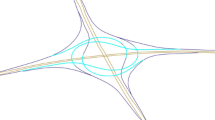

Web and mobile mapping applications that are used dynamically need to respond immediately to user interactions and adapt to changing contexts. Thus methods of continuous zoom and on-the-fly generalisation are essential, particularly when it comes to the visualisation of thematic foreground data. A case study in Chap. 6 described the utilisation of quadtrees for on-the-fly generalisation of point data. Chapter 4 introduced the idea of the topological generalised area partitioning (tGAP) structure and the corresponding 3D space-scale cube (SSC). This is an encoding of variable-scale data structures developed especially for continuous generalisation. Advantages are the tight integration of space and scale with guaranteed consistency between scales. Research on the constrained approach (constraint tGAP) showed significant improvement in generalisation quality (as compared to unconstrained tGAP). The tGAP is well suited to web and mobile environments as it requires no further client side processing and supports progressive refinement of vector data. Ongoing research will explore the derivation of mixed scale representations through non-horizontal slices within the SSC and the use of the vario-scale data structure for the modelling of 3D data.
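The use of a quadtree for on-the-fly generalisation of point data can be illustrated with a minimal sketch. It is not the algorithm of the Chap. 6 case study; it assumes that every point carries an importance value and that each quadtree cell stores its most important point as a representative, so that a zoom level maps directly to a tree depth. The class and method names are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, importance)


class QuadNode:
    """Region quadtree cell that remembers its most important point."""

    def __init__(self, x0: float, y0: float, size: float,
                 depth: int = 0, max_depth: int = 8) -> None:
        self.x0, self.y0, self.size = x0, y0, size
        self.depth, self.max_depth = depth, max_depth
        self.representative: Optional[Point] = None
        self.children: Optional[List["QuadNode"]] = None

    def insert(self, point: Point) -> None:
        # Keep the most important point seen so far in this cell.
        if self.representative is None or point[2] > self.representative[2]:
            self.representative = point
        if self.depth == self.max_depth:
            return
        half = self.size / 2
        if self.children is None:
            # Child order: south-west, south-east, north-west, north-east.
            self.children = [
                QuadNode(self.x0 + dx * half, self.y0 + dy * half, half,
                         self.depth + 1, self.max_depth)
                for dy in (0, 1) for dx in (0, 1)
            ]
        ix = 1 if point[0] >= self.x0 + half else 0
        iy = 1 if point[1] >= self.y0 + half else 0
        self.children[iy * 2 + ix].insert(point)

    def select(self, level: int) -> List[Point]:
        """Return one representative per non-empty cell at the given depth."""
        if self.representative is None:
            return []
        if self.depth == level or self.children is None:
            return [self.representative]
        selected: List[Point] = []
        for child in self.children:
            selected.extend(child.select(level))
        return selected
```

Since the tree is built once and a query only descends to the requested depth, the selection for a new zoom level requires no recomputation, which is what makes the approach attractive for interactive use.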

12.1.9 Generalisation of Schematised Maps

Building on a long tradition of designing schematised maps, there is growing interest in their presentation in interactive environments—particularly the schematised presentation of navigational information on mobile devices, or as a way of presenting highly summarised thematic data. Chapter 10 illustrates recent research on the role of map generalisation techniques in the automated derivation of schematised maps in general and chorematic diagrams in particular. For automated solutions, the challenge is in finding the right balance between displacement, line simplification and smoothing. Despite the complexity in their design, in some cases, the quality of automated solutions is such that it is becoming increasingly hard to differentiate hand drawn solutions from automated ones.
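Of the three operations named above, line simplification is the easiest to illustrate in isolation. The sketch below uses the classic Douglas-Peucker algorithm as a stand-in; the chapter does not state which simplification algorithm is used for schematisation, and the `tolerance` parameter (in the units of the coordinates) is the knob that would have to be balanced against displacement and smoothing.

```python
import math
from typing import List, Tuple

Coord = Tuple[float, float]


def _point_segment_distance(p: Coord, a: Coord, b: Coord) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    if dx == 0 and dy == 0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the segment and clamp to its end points.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def douglas_peucker(points: List[Coord], tolerance: float) -> List[Coord]:
    """Simplify a polyline, keeping vertices farther than tolerance from the trend line."""
    if len(points) < 3:
        return list(points)
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        dist = _point_segment_distance(points[i], points[0], points[-1])
        if dist > max_dist:
            max_dist, index = dist, i
    if max_dist <= tolerance:
        return [points[0], points[-1]]
    left = douglas_peucker(points[: index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right  # avoid duplicating the shared split vertex
```

A larger tolerance yields the strongly abstracted lines typical of schematised maps, at the price of larger positional shifts that may then call for displacement of neighbouring features.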

12.2 Future Challenges

Various challenges have been identified throughout this book. In this section we try to frame those challenges in the context of increasing and ready access to geographical information in all its various forms and sources.

12.2.1 Generalisation of Tailored 2D Maps

Generalisation in the context of on-demand mapping is not a new topic (Chaps. 2 and 7). However, building a system that is able to understand a user’s needs, and respond with appropriate generalisation techniques to produce a tailored map, remains a considerable challenge. Any solution requires more complete models, and the knowledge to instantiate them, of (1) map uses (in terms of tasks, user profiles and contexts of use), and (2) how the design of maps, and the degree to which they are generalised, govern their usability for a specific task. Until recently, the main focus of generalisation was the production of topographic maps by NMAs. The aim, inherited from the time when topographic maps governed what was digitally stored, was to produce general purpose maps with all topographic information relevant to a given scale. The way maps were actually used and the fitness for use of the available maps were rarely studied. This was because it was not considered feasible to automatically adapt maps for various specific uses. NMAs now recognise that they should allocate more resources in order to better understand the needs of their users. Additionally, such knowledge provides useful case studies for continuing research.

A second research topic concerns the development of user-friendly interfaces that facilitate the capture of user needs, preferences, and context of use. Mackaness et al. (2007) had already identified this as a research challenge, suggesting the idea of “generalisation wizards” that could help users understand the consequences of their choices by illustrating them on generalised sample areas. Progress has been made in the modelling of user variables that influence the on-demand mapping process, and the use of data samples has recently led to good results for symbol design (Chap. 2); nevertheless there is room for further development in generalisation interface design. Interfaces of various complexities could be imagined, depending on the level of expertise of the user. They could range from complex interfaces with large numbers of adjustable parameters, through to interfaces with a simple, single slider-bar, graded from “more detailed” to “less detailed”. Perhaps the ultimate solution is one that is able to infer all the required parameters depending on how the user interacts with the map.

A third research topic concerns the translation of formalised user needs, preferences, and contexts of use, into a parameterised generalisation process built from existing components. This requires a language by which we can formalise procedural knowledge. One approach is to describe any generalisation component in terms of its ability to handle various situations (Chap. 7). Another approach could be to identify typical parameterisations and ‘chaining’ of components that appear to fit some given need and contexts of use, and to build solutions to new needs and contexts based upon those partial solutions. Such an idea (a collaborative filtering approach) was proposed by Burghardt and Neun (2006). Tools like ScaleMaster (Brewer and Buttenfield 2010), and its extension by Touya and Girres (2013) that includes an object oriented modelling approach to the storage of sequences and parameters, are the first steps in this direction.

Another important topic is concerned with the re-use of code (Chap. 7). How do we standardise generalisation components and make them available as interoperable generalisation services that could then be combined (either by a human or a machine)? In this context, is it more efficient to send code through the network rather than data? This idea was explored by Müller and Wiemann (2012) who presented the idea of “moving code” since data transfer can be slow and expensive, but further work is needed.

All these issues lead to the requirement for shared semantic frameworks that enable us to formally describe all concepts upon which generalisation depends. Some early solutions to this problem have been presented in Chaps. 2, 3 and 7; again further work on the topic is required. In addition, in terms of ‘contexts of use’ for 2D tailored maps, two particular areas are worthy of greater focus:

- Firstly, the generalisation of maps intended for digital display, where we take into account the legibility constraints of the screen, and the potential for interaction. This context is quite different from the generalisation of traditional paper maps.
- Secondly, the need for greater research into on-the-fly generalisation for mobile devices, which requires us to address smooth data transfer over limited bandwidth (Weibel 2010). Though this book has presented partial solutions (Chap. 4, and a use case in Chap. 6), more work is required in this area.

12.2.2 Dealing with Different Communities of Users

By examining the increasing popularity of web 2.0 technologies and services and the growth in VGI, we can discern two communities of users of generalisation technologies (see Note 1), each with their own expectations. The first group consists of the professional mapmakers, such as the traditional cartographers of NMAs, who have a long history of map making. This group makes products of high quality (often at high cost), and is somewhat wary of the idea that higher levels of automation are achievable without sacrificing some elements of quality. GIS vendors and the development departments of NMAs, who provide automated solutions to this group based on research results, seek highly sophisticated solutions. The second community of users consists of non-professional mapmakers who make maps using web mapping applications based on open source data (for example via OSM or Google Maps APIs). This enables them to render data at different scales and also to collect third party data and share the resulting maps. This group is typically free of the constraints imposed on NMAs in terms of completeness, precision and accuracy of the resulting maps. This second community requires solutions that are quick, easy-to-use and available for free. Developers of web mapping applications (i.e. entrepreneurs or volunteers) are aware that map compilation and design requires abstraction at lower levels of detail, but they have limited resources to invest in the development of sophisticated generalisation technologies. Therefore, current web mapping applications provide simplistic generalisation solutions mainly based on attribute-based selection and rendering. Whilst they contribute to the collection of geographic data and its free sharing, the cartographic quality of such maps is not always sufficient.

Weibel (2010) argues that it is this second community of users who are increasingly deserving of our focus. But the gap between these two communities is narrowing. As Chap. 11 illustrated, NMAs are increasingly considering the balance between cost, currency and cartographic quality, and are also increasingly developing viewers that enable online map customisation. Therefore, ease of data integration, map comprehension, simplicity in specification, and rapid data transfer are common goals. Perhaps the difference narrows to one of finding a compromise between “quick and simplistic” and “elaborate and expensive”, delivering a range of solutions that can be chosen depending on the context of use.

12.2.3 Generalising Data Stemming from Multiple Sources

In addition to adapting the generalisation process to particular needs, we also need to acknowledge that data are increasingly multi sourced. Two particular cases can be distinguished. In the first case the source data includes VGI data retrieved from the web. The major characteristic of this kind of data is that they are often not well described in terms of meta-information and are heterogeneous. As discussed in Chap. 5, methods are needed to automatically acquire metadata associated with VGI data, in order that it can be integrated with “institutional” data, and both can then be generalised together.

In the second case, it is assumed that part of the source data comes from the users themselves. In this instance, users usually know their data; methods are needed to help them record metadata, such that the system can propose tailored sequences and parameterisations of operators.

In both cases, we have “thematic” data overlaid on background, reference data that need to be integrated and then handled together, while taking into account the important relations that exist between them. A use case in Chap. 3 examined this issue, but further research is needed to develop generalisation methodologies that are aware of the relations and interdependencies between geographic phenomena.

12.2.4 Generalisation of Higher Dimensional Data

Generalisation has relevance beyond the two dimensional. There are research challenges around the egocentric perspective, and the handling of higher dimensional data (3D and temporal data). Location aware technology coupled with new forms of media (such as augmented reality glasses) tends to overload the user with information. There is therefore an ever greater need for data abstraction and for ways of varying the level of detail depending on the user’s location. There is also a need for research that better connects abstracted data with other augmented information. In such cases we need to take account of the user’s position and the objects in their field of view, and tailor the information in a dynamic way in response to the various tasks that the user is trying to complete. The best solution may well not be a single abstracted form but one delivered through a range of media, in a variety of forms (map, textual, sonic, graphic, etc.) ranging from the highly abstracted to something akin to augmented reality. A few studies are beginning to emerge on this topic (e.g. Harrie et al. 2002; Dogru et al. 2008; Chap. 4), but much more work needs to be done.

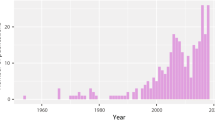

Generalisation of higher dimensional data extends to the temporal domain—a topic of research that has been previously identified (Mackaness et al. 2007), yet to date there are few studies that have addressed this topic. The ambition is to detect trends and spatio-temporal patterns and summarise these changes in a visual form, giving emphasis to the major events that have affected the data over time. One of the few reports is by Andrienko et al. (2010) who proposed the anonymisation of spatio-temporal movement data by means of generalisation.

12.3 In Conclusion

A research agenda is not something set in stone; it changes in response to new opportunities and findings. The underlying ambition of generalisation remains unchanged; ultimately it is concerned with ensuring quality in our geographic decision making. The core methodologies remain the same, with a focus on the manipulation and abstraction of map features through the varying combination and application of generalisation techniques. The research agenda is not significantly different from the one proposed in 2007; however, it does reflect the following changes: (1) the high expectations of users that they should be able to integrate, combine and explore distributed data sources, and share them with their own data in mapped form; (2) that they should be able to do so intuitively, instantly, anywhere, in a variety of media and web enabled devices; and (3) that the map should provide data at the appropriate level of abstraction, but also support interaction to access further levels of detail. In order for generalisation research to continue to progress, these changing contexts and expectations have to be taken into account. It is also important to investigate the opportunities and limitations of new, often mobile, technologies that have the potential to make the creation of interactive and immersive maps easier.

All of the research activities in this book point to the interdisciplinary nature of research in map generalisation, whether it be cognitive modelling of the user, the role of ontologies in semantic modelling, or the design of web interfaces to support service driven cartography. Future advances in this field will surely depend on continuing close collaboration with researchers working in such fields as visual analytics and cartography, spatial reasoning and informatics, computational geometry and geospatial semantics, databases and web services (to name but a few!). Its contribution and continued relevance to the display of geographic information will depend upon its continued close coupling with these complementary disciplines.

Notes

1. Note that the users of generalisation are the mapmakers, not to be confused with the users of the map (even if in some cases both roles are undertaken by the same person).

References

Andrienko G, Andrienko N, Giannotti F, Monreale A, Pedreschi D, Rinzivillo S (2010) A Generalisation-based approach to anonymising movement data. In: Painho M, Santos MY, Pundt H (eds) Geospatial thinking, AGILE 2010 CD-ROM Proceedings, pp 1–10

Brewer CA, Buttenfield BP (2010) Mastering map scale: balancing workloads using display and geometry change in multi-scale mapping. Geoinformatica 14(2): 221–222

Burghardt D, Neun M (2006) Automated sequencing of generalisation services based on collaborative filtering. In: Raubal M, Miller HJ, Frank AU, Goodchild M (eds) Geographic information science, 4th International Conference on Geographical Information Science (GIScience), IfGIprints 28, 41–46

Dogru A, Duchêne C, Mustière S, Ulugtekin N (2008) User centric mapping for car navigation systems. In: Proceedings of the 12th ICA Workshop on Generalisation and Multiple Representation, jointly organised with EuroSDR Commission on Data Specifications and the Dutch program RGI, Montpellier, France, 2008. http://generalisation.icaci.org/images/files/workshop/workshop2008/02_Dogru_et_al.pdf

Foerster T, Stoter JE, Kraak M (2010) Challenges for automated generalisation at European mapping agencies: a qualitative and quantitative analysis. Cartogr J 47(1): 41–54

Harrie L, Sarjakoski T, Lehto L (2002) A variable-scale map for small-display cartography. Int Arch Photogrammetry Remote Sens Spatial Inf Sci 34(4):237–242

Mackaness W, Ruas A, Sarjakoski T (2007) Observations and research challenges in map generalisation and multiple representation. In: Mackaness W, Ruas A, Sarjakoski T (eds) Elsevier, 315–323

Müller M, Wiemann S (2012) A framework for building multi‐representation layers from OpenStreetMap data. In: Proceedings of the 15th ICA workshop on generalisation and multiple representation, jointly organised with EuroSDR commission on data specifications, Istanbul, Turkey

Touya G, Girres J-F (2013) ScaleMaster 2.0: a scalemaster extension to monitor automatic multi-scales generalizations. Cartogr and Geogr Info Sci 40(3): 192–200

Weibel R (2010) From geometry, statics and oligarchy to semantics, mobility and democracy: some trends in map generalization and data integration. In: Workshop on generalization and data integration (GDI 2010), Boulder, USA, June 2010. Postprint available at: http://www.zora.uzh.ch

Acknowledgments

The authors are grateful to their colleagues from the COGIT Laboratory of IGN, the Dresden University of Technology and the University of Edinburgh for the discussions around the future research challenges in generalisation that indubitably made this chapter all the richer!

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Burghardt, D., Duchêne, C., Mackaness, W. (2014). Conclusion: Major Achievements and Research Challenges in Generalisation. In: Burghardt, D., Duchêne, C., Mackaness, W. (eds) Abstracting Geographic Information in a Data Rich World. Lecture Notes in Geoinformation and Cartography. Springer, Cham. https://doi.org/10.1007/978-3-319-00203-3_12

DOI: https://doi.org/10.1007/978-3-319-00203-3_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-00202-6

Online ISBN: 978-3-319-00203-3

eBook Packages: Earth and Environmental Science (R0)