Abstract

We consider linear dynamical systems with a single output, where the systems include random parameters to perform an uncertainty quantification. Using the concept of polynomial chaos, a linear stochastic Galerkin system of higher dimension with multiple outputs is arranged. Quadratic combinations of the outputs yield approximations of time-dependent indices in global sensitivity analysis, which indicate the influence of each random parameter. We investigate system norms for the quadratic outputs, because these norms generate time-independent sensitivity measures. Numerical results are presented for a model of an electric circuit.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

1 Introduction

Mathematical modelling often yields systems of differential equations including physical parameters. Uncertainty quantification (UQ) is required to investigate an output of the model with respect to a variability in the parameters. A common approach consists in the substitution of the parameters by random variables, see [10]. In addition, a global sensitivity analysis of the random-dependent model can be performed to characterise the importance of each random parameter. There are variance-based indicators (first-order indices and total-effect indices) as well as derivative-based indicators for global sensitivity analysis, see [4, 5, 8, 9]. The resulting numerical values allow for a ranking of the parameters.

We examine linear dynamical systems composed of ordinary differential equations (ODEs) or differential-algebraic equations (DAEs). A single input or multiple inputs are induced, while a single output represents a quantity of interest (QoI). In the random-dependent system, we expand the state/inner variables as well as the output in the polynomial chaos (PC), see [10]. The stochastic Galerkin method yields a larger deterministic linear dynamical system with multiple outputs, which represent an approximation of coefficient functions in the expansion of the QoI. Quadratic combinations of the outputs produce approximations of three types of indices in global sensitivity analysis: first-order, total-effect, and derivative-based. Since the outputs depend on time, the sensitivity indices also vary in time.

Alternatively, we derive system norms of the stochastic Galerkin system for each non-negative quadratic output. These system norms provide sensitivity measures, which are independent of both time and the input. In [6, 7], this strategy was applied to investigate system norms associated to total-effect indices. Now we extend the approach to first-order indices as well as derivative-based indices. Therein, the derivative-based concept uses the \(\mathscr {L}^2\)-norm of the QoI’s partial derivatives with respect to the parameters. All system norms are computable as \(\mathscr {H}_{\infty }\)-norms of corresponding transfer functions in frequency domain. Finally, we illustrate results of numerical computations employing the electric circuit of the Miller integrator.

2 Random Linear Dynamical Systems

Let a linear dynamical system be given in the form

with time \(t \in I = [0,\infty )\). Single or multiple inputs \(\textbf{u} : I \rightarrow \mathbb {R}^{n_\textrm{in}}\) are supplied. The matrices \(\textbf{A},\textbf{E} \in \mathbb {R}^{n \times n}\), \(\textbf{B} \in \mathbb {R}^{n \times n_\textrm{in}}\), and the vector \(\textbf{c} \in \mathbb {R}^n\) depend on physical parameters \(\textbf{p} \in \varPi \subseteq \mathbb {R}^q\). The variables \(\textbf{x}: I \times \varPi \rightarrow \mathbb {R}^n\) depend on time as well as the parameters. A single output \(y : I \times \varPi \rightarrow \mathbb {R}\) is observed as a QoI. If the mass matrix \(\textbf{E}(\textbf{p})\) is non-singular, then the system (1) consists of ODEs. Alternatively, a singular mass matrix implies a system of DAEs. A linear DAE system exhibits a (nilpotency) index \(\nu \ge 1\), see [3]. We assume that the systems (1) are asymptotically stable for all \(\textbf{p} \in \varPi \). An initial value problem is specified by \(\textbf{x}(0,\textbf{p}) = \textbf{x}_0(\textbf{p})\) with a predetermined function \(\textbf{x}_0 : \varPi \rightarrow \mathbb {R}^n\).

The parameters are often affected by uncertainties due to modelling errors or measurement errors, for example. A common approach to model their variability consists in replacing the parameters by independent random variables, see [10]. Consequently, the parameters become measurable functions \(\textbf{p} : \varOmega \rightarrow \varPi \) on a probability space \((\varOmega ,\mathscr {A},P)\). We assume that there is a joint probability density function \(\rho : \varPi \rightarrow \mathbb {R}\). Hence the expected value of a measurable function \(f : \varPi \rightarrow \mathbb {R}\) reads as

We consider the Hilbert space

which is equipped with the inner product \(\langle f,g \rangle = \mathbb {E}[fg]\) for two functions \(f,g \in \mathscr {L}^2(\varPi ,\rho )\) using the expected value (2). Its norm is \(\Vert f \Vert _{\mathscr {L}^2(\varPi ,\rho )} = \sqrt{\langle f,f \rangle }\).

Let an orthonormal basis \((\varPhi _i)_{i \in \mathbb {N}}\) be given, which consists of multivariate polynomials \(\varPhi _i : \varPi \rightarrow \mathbb {R}\). Without loss of generality, \(\varPhi _1 \equiv 1\) is the unique polynomial of degree zero. We assume that the variables \(\textbf{x}(t,\cdot )\) as well as the output \(y(t,\cdot )\) are (component-wise) functions in the space \(\mathscr {L}^2(\varPi ,\rho )\) for each \(t \ge 0\). It follows that the functions can be expanded in the polynomial chaos (PC), see [10],

with time-dependent coefficient functions \(\textbf{v}_i : I \rightarrow \mathbb {R}^n\) and \(w_i : I \rightarrow \mathbb {R}\). A truncation of the series (4) to \(i=1,\ldots ,m\) with some integer \(m \ge 1\) yields a finite approximation. Typically, all basis polynomials up to some total degree d are included. Hence the number of basis functions results to \(m = (d+q)!/(d!q!)\).

3 Stochastic Galerkin Systems and Norms

The stochastic Galerkin method changes the random-dependent linear dynamical system (1) into the larger deterministic linear dynamical system

The constant matrices \(\mathbf{\hat{A}},\mathbf{\hat{E}} \in \mathbb {R}^{mn \times mn}\), \(\mathbf{\hat{B}} \in \mathbb {R}^{mn \times n_\textrm{in}}\), \(\mathbf{\hat{C}} \in \mathbb {R}^{m \times mn}\) are derived from \(\textbf{A}\), \(\textbf{E}\), \(\textbf{B}\), \(\textbf{c}\), respectively. The definition of the matrices can be found in [6], for example. The state/inner variables are \(\mathbf{\hat{v}} = (\mathbf{\hat{v}}_1^\top ,\ldots ,\mathbf{\hat{v}}_m^\top )^\top \). Now the system produces multiple outputs \(\mathbf{\hat{w}} = (\hat{w}_1,\ldots ,\hat{w}_m)^\top \) by (6). Both \(\mathbf{\hat{v}}\) and \(\mathbf{\hat{w}}\) include approximations of the exact coefficients in the PC expansions (4). The induced approximation of the random QoI in (1) becomes

We assume that the stochastic Galerkin system (5) is asymptotically stable. In the following, we always predetermine initial values \(\mathbf{\hat{v}}(0)=\textbf{0}\).

A non-negative quadratic output of the system (5) reads as

with a symmetric positive semi-definite matrix \(\mathbf{\hat{M}} \in \mathbb {R}^{m \times m}\). Let \(\varSigma (\mathbf{\hat{M}})\) be the system consisting of the dynamical part (5) and the quadratic output (8). Now we employ a symmetric decomposition

with a matrix \(\mathbf{\hat{F}} \in \mathbb {R}^{m \times m}\), for example, using a pivoted Cholesky decomposition. We arrange a stochastic Galerkin system \(\varSigma (\mathbf{\hat{F}})\) consisting of (5) and the linear outputs

We define the system norm belonging to (5), (8) as

including the \(\mathscr {L}^2\)-norm in the time domain \([0,\infty )\). This norm involves the supremum of the set of all inputs \(\textbf{u} \in \mathscr {L}^2[0,\infty )\backslash \{ \textbf{0} \}\). In view of (10), it follows that

This system norm is independent of the chosen decomposition (9).

The input-output behaviour of a linear dynamical system can be described by a transfer function in frequency domain, see [1]. The matrix-valued transfer function of the system (5), (10) reads as \(\mathbf{\hat{H}}(s) = \mathbf{\hat{F}} \mathbf{\hat{C}} (s \mathbf{\hat{E}} - \mathbf{\hat{A}})^{-1} \mathbf{\hat{B}}\) for almost all \(s \in \mathbb {C}\). Now the system norm of \(\varSigma (\mathbf{\hat{F}})\) in (12) coincides with the \(\mathscr {H}_{\infty }\)-norm of this transfer function. The \(\mathscr {H}_{\infty }\)-norm is computable by methods of numerical linear algebra, see [2]. If the linear stochastic Galerkin system (5) consists of ODEs or DAEs with index \(\nu = 1\), then the finiteness of the \(\mathscr {H}_{\infty }\)-norm is guaranteed. Yet the \(\mathscr {H}_{\infty }\)-norm may still be finite for DAEs of index \(\nu \ge 2\) depending on the definition of inputs and outputs. The following sensitivity analysis applies to any DAE system (5), (6) with finite \(\mathscr {H}_{\infty }\)-norm, because a system (5), (10) with modified output inherits a finite \(\mathscr {H}_{\infty }\)-norm.

4 Sensitivity Measures

Our aim is a global sensitivity analysis of the stochastic model (1) with respect to the influence of the individual random parameters. In general, there are variance-based sensitivity measures and derivative-based sensitivity measures, see [8, 9]. Although the variance-based sensitivity indices originally were defined different, we use an equivalent specification by the PC expansion as in [5].

Let V(t) be the variance of the random QoI \(y(t,\cdot )\) for \(t \ge 0\). We define the index sets \(\mathbb {I}_j,\mathbb {I}_j' \subset \mathbb {N}\) for \(j=1,\ldots ,q\) using the family of basis polynomials \((\varPhi _i)_{i \in \mathbb {N}}\)

It holds that \(\mathbb {I}_j \subset \mathbb {I}_j'\) and \(1 \notin \mathbb {I}_j\) due to \(\varPhi _1 \equiv 1\). Variance-based sensitivity measures are the first-order indices

and the total-effect indices

These real numbers satisfy \(0 \le \overline{S}_j^\textrm{FO}(t) \le \overline{S}_j^\textrm{TE}(t) \le 1\) for each \(t \ge 0\) and \(j=1,\ldots ,q\). If it holds that \(V(t)=0\) for some t, then the variance-based sensitivity indices are not defined. However, an UQ is obsolete in this case as there is no variability.

Furthermore, we examine derivative-based indices with respect to the norm of (3), i.e.,

Applying the PC expansion (4), it follows that

assuming that infinite summations and integration can be interchanged.

Now the sensitivity indices \(S_j^\textrm{FO}\) in (13), \(S_j^\textrm{TE}\) in (14), and \(S_j^\textrm{DB}\) in (15) can be approximated by quadratic outputs (8) of the stochastic Galerkin system (5). Let \(\mathbb {K} \subset \mathbb {N}\) be an index set. We define the diagonal matrix

This matrix owns the trivial symmetric factorisation \(\mathbf{\hat{D}}(\mathbb {K}) = \mathbf{\hat{D}}(\mathbb {K})^\top \mathbf{\hat{D}}(\mathbb {K})\). On the one hand, we obtain the variance-based indices by

On the other hand, we arrange the symmetric matrices \(\mathbf{\hat{N}}_j = (\eta _{jk\ell })_{k,\ell = 1,\ldots ,m}\) including the coefficients from (16). It can be shown that the matrices are also positive semi-definite. Although the integrals \(\eta _{jk\ell }\) include the derivatives of the basis polynomials, the majority of these integrals are zero, i.e., the matrices \(\mathbf{\hat{N}}_j\) are sparse. Consequently, the approximation of the derivative-based indices reads as

We consider the system norms (11) with \(\mathbf{\hat{M}}_j \in \{\mathbf{\hat{D}}(\mathbb {I}_j) , \mathbf{\hat{D}}(\mathbb {I}_j') , \mathbf{\hat{N}}_j \}\) for \(j=1,\ldots ,q\). These system norms represent sensitivity measures \(\mu _1^\star ,\ldots ,\mu _q^\star \) for \(\star \in \{ \text{ FO } , \text{ TE } , \text{ DB } \}\), which are independent of time t as well as the selection of inputs \(\textbf{u}\). A standardisation yields coefficients \(0 \le \tilde{\mu }_1^\star ,\ldots ,\tilde{\mu }_q^\star \le 1\) with

to investigate the relative differences for the random parameters.

5 Illustrative Example

We examine the electric circuit of a Miller integrator shown in Fig. 1. A mathematical model of the Miller integrator was presented in [3], which consists of a system of \(n=5\) linear DAEs. The system involves \(q=4\) physical parameters: two capacitances \(C_1,C_2\), a conductance G, and an amplification factor A. The index of this DAE system is \(\nu =2\) for all (positive) parameters. Furthermore, the DAE systems are asymptotically stable. An input voltage \(u_\textrm{in}\) is supplied as single input. The output voltage \(y = u_\textrm{out}\) represents the QoI. The DAE system can be written in the form (1). Although the system of DAEs exhibits index two, the \(\mathscr {H}_{\infty }\)-norm of the associated transfer function is finite.

We replace the physical parameters by independent random variables with uniform distributions. The mean values are chosen as \(\bar{C}_1=10^{-10}\), \(\bar{C}_2 = 5 \cdot 10^{-11}\), \(\bar{G} = 0.001\), \(\bar{A} = 2\), whereas each random variable varies 20% around its mean value. We standardise the resulting hypercuboid \(\varPi \) to \([-1,1]^4\), which changes only the magnitude of the derivative-based indices (15), since the derivatives are multiplied by constants in the transformation. The PC expansions (4) include basis polynomials, which are the products of univariate Legendre polynomials. We truncate the expansions such that all polynomials up to total degree \(d=4\) are included, i.e., \(m=70\) basis polynomials. The stochastic Galerkin method produces a system of DAEs (5) with dimension \(mn=350\). This linear dynamical system is asymptotically stable.

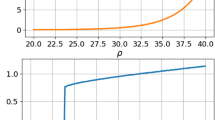

In [7], the Miller integrator was also used as a test example, where only the system norms associated to the total-effect sensitivity indices were investigated. Now we examine all cases of system norms introduced in Sect. 4. Table 1 depicts the computed system norms with respect to the three types of sensitivity indices. The standardised sensitivity measures satisfying (17) are illustrated in Fig. 2.

We perform a transient simulation of the stochastic Galerkin system for comparison. The two-tone signal

is supplied as input voltage. Initial values are zero and the time interval [0, 1] is considered. The backward differentiation formula (BDF) of order two yields a numerical solution of this initial value problem. The outcome (7) also provides approximations for the expected value as well as the standard deviation of the random output voltage, demonstrated in Fig. 3. Using the approximation from the stochastic Galerkin system, we calculate the maxima in time with respect to (14), i.e.,

The threshold \(\delta = 10^{-5} > 0\) is introduced, because the initial conditions imply \(V(0)=0\) and thus the variance exhibits tiny values at the beginning. Table 1 and Fig. 2 also show the sensitivity measures (18).

We observe that the ranking of the random parameters agrees in all four concepts: the amplification factor A is the most influential parameter, followed by the two capacitances \(C_1,C_2\), and the conductance G is of least importance.

6 Summary

We investigated a sensitivity analysis for linear systems of ODEs or DAEs with respect to the influence of random variables. Three concepts were considered: two variance-based approaches and a derivative-based approach. Each concept yields sensitivity indices, which represent quadratic outputs of a stochastic Galerkin system. It follows that associated sensitivity measures are computable as \(\mathscr {H}_{\infty }\)-norms of the system. A test example demonstrates that this sensitivity analysis identifies a correct ranking of the random variables.

References

Antoulas, A.C.: Approximation of Large-Scale Dynamical Systems. SIAM, Philadelphia (2005)

Boyd, S., Balakrishnan, V., Kabamba, P.: A bisection method for computing the \(H_\infty \) norm of a transfer matrix and related problems. Math. Control Signals Syst. 2, 207–219 (1989)

Günther, M., Feldmann, U., ter Maten, J.: Modelling and discretization of circuit problems. In: Ciarlet, P.G. (ed.) Handbook of Numerical Analysis, vol. 13, pp. 523–659. Elsevier, North-Holland (2005)

Liu, Q., Pulch, R.: Numerical methods for derivative-based global sensitivity analysis in high dimensions. In: Langer, U., Amrhein, W., Zulehner, W. (eds.) Scientific Computing in Electrical Engineering. MI, vol. 28, pp. 157–167. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75538-0_15

Mara, T., Becker, W.: Polynomial chaos expansions for sensitivity analysis of model output with dependent inputs. Reliab. Eng. Syst. Saf. 214, 107795 (2021)

Pulch, R., Narayan, A.: Sensitivity analysis of random linear dynamical systems using quadratic outputs. J. Comput. Appl. Math. 387, 112491 (2021)

Pulch, R., Narayan, A., Stykel, T.: Sensitivity analysis of random linear differential-algebraic equations using system norms. J. Comput. Appl. Math. 397, 113666 (2021)

Sobol, I.M.: Sensitivity estimates for nonlinear mathematical models. Math. Model. Comput. Exp. 1, 407–414 (1993)

Sobol, I.M., Kucherenko, S.: Derivative based global sensitivity measures and their link with global sensitivity indices. Math. Comput. Simul. 79, 3009–3017 (2009)

Sullivan, T.J.: Introduction to Uncertainty Quantification. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-23395-6

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Pulch, R. (2024). Sensitivity Analysis of Random Linear Dynamical Models Using System Norms. In: van Beurden, M., Budko, N.V., Ciuprina, G., Schilders, W., Bansal, H., Barbulescu, R. (eds) Scientific Computing in Electrical Engineering. SCEE 2022. Mathematics in Industry(), vol 43. Springer, Cham. https://doi.org/10.1007/978-3-031-54517-7_24

Download citation

DOI: https://doi.org/10.1007/978-3-031-54517-7_24

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-54516-0

Online ISBN: 978-3-031-54517-7

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)