Abstract

The Internet of Vehicles (IoV) is one of the most promising and practical paradigms attracting interest from both industry and academia, particularly for employing coordinated unmanned vehicles in domains such as the automobile industry. To provide long-term possibilities for task investigations, the IoV connects vehicles, transportation networks, and communication infrastructure. However, coordinating information gathering from numerous sources may compromise data privacy. Federated Learning (FL) has been proposed to address these concerns of privacy, scalability, and high availability. FL is a distributed learning framework designed for edge devices: it exploits large-scale processing on edge devices while keeping private data local. In this work, different categories of FL are discussed. A review of various systems implementing FL for IoV is presented, followed by the applications and challenges of FL in the IoV paradigm. The paper concludes with future research directions for FL in the IoV.

1 Introduction to Federated Learning

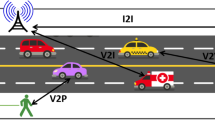

Federated Learning (FL) uses a central aggregator to address the issues that arise when many Machine Learning (ML) clients train a model jointly. It keeps training data decentralized to protect data privacy [1]. Two key concepts, local computation and model transmission, lower the privacy risk and cost of centralized ML systems. In FL, participants train models on their local data and send the resulting models to the server for aggregation; the server then disseminates the updated model. Fig. 1 shows the high-level process of FL [2]. In vehicular FL, local models are trained on separate vehicles before being aggregated in the cloud, which enhances security, accuracy, and learning efficiency [3]. In a two-phase mitigation scheme, an intelligent FL-based architecture provides data leakage detection [4] and intelligent data transmission [5] to improve security [6]. In federated deep learning applications for Unmanned Aerial Vehicles (UAVs) over wireless networks, the focus is on improving learning efficiency and learning speed through significance-aware joint data selection and resource allocation algorithms [7], as well as content caching methods for FL-based edge computing in IoV.
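The aggregation step described above can be sketched as a sample-weighted average of the clients' parameters, which is the Federated Averaging (FedAvg) rule. This is a minimal illustration, not the pipeline of any cited system: it assumes each vehicle's model is a flat list of floats and that the server knows how many training samples each vehicle used. The names `fedavg_aggregate`, `local_models`, and `num_samples` are hypothetical.

```python
from typing import List, Sequence


def fedavg_aggregate(local_models: Sequence[Sequence[float]],
                     num_samples: Sequence[int]) -> List[float]:
    """Return the sample-weighted average of client parameter vectors.

    Assumption (illustrative): every model is a flat list of floats of equal length.
    Client k gets weight n_k / n, where n is the total number of samples.
    """
    if not local_models or len(local_models) != len(num_samples):
        raise ValueError("need one sample count per local model")
    total = sum(num_samples)
    global_model = [0.0] * len(local_models[0])
    for params, n_k in zip(local_models, num_samples):
        weight = n_k / total
        for i, p in enumerate(params):
            global_model[i] += weight * p
    return global_model


# Hypothetical round: three vehicles trained on 100, 300 and 600 local samples.
vehicle_models = [[1.0, 2.0], [2.0, 0.0], [0.0, 1.0]]
global_model = fedavg_aggregate(vehicle_models, [100, 300, 600])
print(global_model)  # approximately [0.7, 0.8]
```

The server would then send `global_model` back to the vehicles as the starting point of the next round; only parameters, never raw data, cross the network.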

1.1 Categories of Federated Learning

FL can be categorized along five aspects: data partitioning, privacy mechanism, applicable machine learning models, communication architecture, and methods for handling heterogeneity [8]. Figure 2 depicts the classification of FL.

1.1.1 Data Partition

Based on how data is distributed among participants, FL can be divided into three types [9]: Horizontal FL (HFL), Vertical FL (VFL), and Federated Transfer Learning (FTL). HFL is used when two datasets share most of their user features but have little overlap in users. The dataset is partitioned horizontally: each participant holds the same features for different users [10, 11]. In VFL, the datasets share many users but few features. The data is partitioned vertically, and training uses the portion of data in which the users are the same but the features differ [12]. In FTL, the data is segmented by neither users nor features; it is employed when the datasets overlap little in both and data or labels are lacking [13].
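The difference between horizontal and vertical partitioning can be made concrete on a toy table. The sketch below is illustrative only: the vehicle identifiers, feature names, and values are hypothetical, and each "party" is simply a dictionary standing in for one participant's local store.

```python
from typing import Dict, List, Sequence

Record = Dict[str, float]
Table = Dict[str, Record]  # user id -> feature values

# Hypothetical toy data: per-vehicle telemetry features.
TABLE: Table = {
    "car_a": {"speed": 52.0, "fuel": 0.7, "brake_events": 3.0},
    "car_b": {"speed": 38.0, "fuel": 0.4, "brake_events": 7.0},
    "car_c": {"speed": 61.0, "fuel": 0.9, "brake_events": 1.0},
    "car_d": {"speed": 45.0, "fuel": 0.5, "brake_events": 4.0},
}


def horizontal_split(table: Table, users_per_party: Sequence[Sequence[str]]) -> List[Table]:
    """HFL: every party holds ALL features, but for a different subset of users."""
    return [{u: dict(table[u]) for u in users} for users in users_per_party]


def vertical_split(table: Table, features_per_party: Sequence[Sequence[str]]) -> List[Table]:
    """VFL: every party holds the SAME users, but a different subset of features."""
    return [{u: {f: rec[f] for f in feats} for u, rec in table.items()}
            for feats in features_per_party]


h_parties = horizontal_split(TABLE, [["car_a", "car_b"], ["car_c", "car_d"]])
v_parties = vertical_split(TABLE, [["speed", "fuel"], ["brake_events"]])
print(sorted(h_parties[1]))           # → ['car_c', 'car_d']
print(sorted(v_parties[1]["car_a"]))  # → ['brake_events']
```

FTL would correspond to parties whose tables share almost no users and almost no features, which is why it cannot be expressed as either split of a single table.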

1.1.2 Privacy Mechanism

With FL, clients keep their data local and transmit only model information for training the target model. Model aggregation, the central aspect of FL, trains the global model by integrating the model parameters from all clients, thereby avoiding the transfer of the underlying data throughout the training process [14]. Homomorphic encryption resolves the problem of performing computations on encrypted data. For differential privacy, both ML and deep learning models trained by gradient iteration [15] add noise to the results in order to safeguard user privacy [16].
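The noise-addition idea can be sketched as a Gaussian mechanism applied to a client's model update before it is sent. This is a minimal sketch under stated assumptions, not the scheme of [15] or [16]: it assumes the update is clipped to a fixed L2 norm first (a common step in differentially private training that bounds each client's influence), and the names `privatize_update`, `clip_norm`, and `noise_multiplier` are hypothetical. It does not compute the resulting privacy budget.

```python
import math
import random
from typing import List, Optional


def privatize_update(update: List[float], clip_norm: float, noise_multiplier: float,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise to every entry.

    Assumption (illustrative): noise std = noise_multiplier * clip_norm, the usual
    calibration of the Gaussian mechanism to the clipping bound.
    """
    rng = rng or random.Random()
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [x * scale for x in update]
    sigma = noise_multiplier * clip_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


# Hypothetical client update with L2 norm 5, clipped to norm 1 before noise is added.
noisy_update = privatize_update([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1,
                                rng=random.Random(0))
```

A larger `noise_multiplier` gives stronger privacy at the cost of a noisier global model; the trade-off is usually tuned together with the number of training rounds.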

1.1.3 Machine Learning Schemes

FL enhances the capability and security of ML models. The three main ML models used in FL are neural networks, decision trees, and linear models. For linear models in a federated environment, [17] proposes a training scheme that mitigates security issues while attaining the same accuracy as a non-private solution. Among tree models in FL, both single decision trees and ensembles of multiple trees, such as random forests and gradient boosting decision trees, are used [18]. Neural networks, the most widely used ML models, can be trained for complex tasks. For autonomous vehicles such as drones, services like target localization, trajectory planning, and target recognition play a vital role [19]. Because centralized training depends on a regular connection between the UAV group and the base station, it fails to work in real time, whereas deep learning within the UAV group usually provides excellent efficiency [20].
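To make the linear-model case concrete, the sketch below trains a one-feature linear regression across several vehicles with FedAvg: each vehicle runs a few gradient-descent steps on its own data, and the server averages the results weighted by sample count. It is an assumption-laden illustration of federated linear training in general, not the secure protocol of [17]; the data, learning rate, and round counts are hypothetical.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held by one vehicle


def local_train(w: float, b: float, data: Dataset, lr: float, epochs: int) -> Tuple[float, float]:
    """Plain gradient descent on mean squared error, using only this vehicle's data."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_linear_regression(clients: List[Dataset], rounds: int = 100,
                                lr: float = 0.5, local_epochs: int = 5) -> Tuple[float, float]:
    """FedAvg over (w, b): local training, then a sample-weighted average each round."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        updates = [local_train(w, b, d, lr, local_epochs) for d in clients]
        w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return w, b


# Hypothetical non-IID split of y = 2x + 1: each vehicle sees a different range of x.
clients = [
    [(i / 10, 2 * (i / 10) + 1) for i in range(0, 4)],
    [(i / 10, 2 * (i / 10) + 1) for i in range(4, 7)],
    [(i / 10, 2 * (i / 10) + 1) for i in range(7, 11)],
]
w, b = federated_linear_regression(clients)
print(round(w, 3), round(b, 3))
```

Although no single vehicle sees the full range of `x`, the averaged model should approach `w = 2`, `b = 1`, because only the parameters are shared.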

1.1.4 Communication Architecture

In FL application scenarios, the main problems are limited device computing capability and the uneven distribution of user data [21]. All participants in training communicate with a central server to update the global model, so communication cost is a critical problem in FL. To reduce the communication cost between the server and local users, the model data is compressed using subsampling, random rotation, and quantization [22].
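The rotation and quantization steps can be sketched as follows. This is an illustrative sketch, not the exact method of [22]: it assumes the rotation is a random sign flip followed by a normalized Walsh-Hadamard transform, that client and server derive the signs from a shared seed, and that each rotated coordinate is stochastically quantized to one bit between the minimum and maximum. The subsampling step is omitted for brevity, and all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def _fwht(vec: Sequence[float]) -> List[float]:
    """Normalized fast Walsh-Hadamard transform; orthogonal and its own inverse.

    The length must be a power of two (pad with zeros otherwise).
    """
    a = list(vec)
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a[j], a[j + h] = a[j] + a[j + h], a[j] - a[j + h]
        h *= 2
    scale = 1.0 / math.sqrt(n)
    return [v * scale for v in a]


def rotate(vec: Sequence[float], signs: Sequence[float]) -> List[float]:
    """Random rotation R = H D: multiply by a shared random +/-1 diagonal D, then apply H."""
    return _fwht([v * s for v, s in zip(vec, signs)])


def unrotate(vec: Sequence[float], signs: Sequence[float]) -> List[float]:
    """Inverse rotation R^T = D H, valid because the normalized H is symmetric and orthogonal."""
    return [v * s for v, s in zip(_fwht(vec), signs)]


def stochastic_binary_quantize(vec: Sequence[float],
                               rng: random.Random) -> Tuple[float, float, List[int]]:
    """1 bit per coordinate: choose hi with probability (v - lo) / (hi - lo), else lo.

    The decoded value is unbiased (its expectation equals v); only lo, hi and
    the bit vector need to be transmitted.
    """
    lo, hi = min(vec), max(vec)
    if hi == lo:
        return lo, hi, [0] * len(vec)
    bits = [1 if rng.random() < (v - lo) / (hi - lo) else 0 for v in vec]
    return lo, hi, bits


def dequantize(lo: float, hi: float, bits: Sequence[int]) -> List[float]:
    return [hi if b else lo for b in bits]


# Hypothetical round: client and server derive the same signs from a shared seed.
shared = random.Random(42)
update = [0.5, -1.2, 3.3, 0.0, 0.7, -0.4, 2.1, -2.8]
signs = [shared.choice((-1.0, 1.0)) for _ in update]
lo, hi, bits = stochastic_binary_quantize(rotate(update, signs), random.Random(7))
estimate = unrotate(dequantize(lo, hi, bits), signs)  # server-side unbiased estimate
```

Rotating before quantizing spreads the energy of large coordinates across all entries, which narrows the `[lo, hi]` range and therefore reduces the quantization error for the same one bit per coordinate.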

1.1.5 Methods for Solving Heterogeneity

Device heterogeneity affects the accuracy of the overall training process. Approaches for handling heterogeneity include model heterogeneity, fault tolerance, asynchronous communication, and device sampling [23]. A key factor in FL is efficiently handling unevenly distributed data from various devices, because the way data from different devices is processed affects the model. Asynchronous communication addresses many of the problems posed by dispersed devices in FL settings.
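Asynchronous communication can be sketched as a server that merges each client model as soon as it arrives, discounting updates computed from an older global model. The sketch follows the general idea of staleness-aware asynchronous federated optimization (such as the work by Xie et al. in the reference list), but the mixing rate `alpha`, the polynomial discount `(staleness + 1) ** (-a)`, and the class and method names are assumptions made for illustration.

```python
from typing import List, Tuple


def staleness_weight(staleness: int, a: float = 0.5) -> float:
    """Assumed polynomial discount: (staleness + 1) ** (-a); equals 1 for a fresh update."""
    return (staleness + 1) ** (-a)


class AsyncServer:
    """Hypothetical server that merges client models without a synchronization barrier."""

    def __init__(self, init_model: List[float], alpha: float = 0.6, a: float = 0.5):
        self.model = list(init_model)
        self.version = 0  # incremented on every merge
        self.alpha = alpha
        self.a = a

    def pull(self) -> Tuple[List[float], int]:
        """A vehicle downloads the current global model and its version number."""
        return list(self.model), self.version

    def push(self, client_model: List[float], base_version: int) -> float:
        """Mix in a model trained from `base_version`; staler updates get a smaller weight."""
        staleness = self.version - base_version
        mix = self.alpha * staleness_weight(staleness, self.a)
        self.model = [(1 - mix) * g + mix * c for g, c in zip(self.model, client_model)]
        self.version += 1
        return mix


# Hypothetical timeline: a slow vehicle pulls first but reports last.
server = AsyncServer([0.0, 0.0])
_, slow_version = server.pull()
server.push([1.0, 1.0], server.pull()[1])  # fresh update, mix = 0.6
server.push([1.0, 1.0], server.pull()[1])  # fresh update, mix = 0.6
stale_mix = server.push([-1.0, -1.0], slow_version)  # staleness 2, smaller mix
```

Slow or intermittently connected vehicles therefore never block the round, while their outdated updates are prevented from dragging the global model too far back.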

2 Related Work

This section discusses related work on FL in IoV. For intelligent object detection, a two-layer FL model has been used in a 6G-supported IoV environment [24, 25]. The authors of [26] propose combining a hybrid blockchain with FL, using Deep Reinforcement Learning (DRL) to select optimal participants and thereby improve learning efficiency. The federated learning framework for deep networks uses iterative model averaging, aggregating the local models in each round of updates to train the global model [20]. For selecting Smart Vehicles (SVs) as local learners in FL, round-robin and random scheduling policies have been adopted, along with asynchronous FL over heterogeneous networks [27, 28, 29]. In [30], the authors concluded that the Quality of Information (QoI) received by the SVs is dynamic and affects the performance of FL. Therefore, to improve QoI, the Vehicular Service Provider (VSP) is responsible for selecting SVs currently located within important areas. By combining important areas with QoI, the VSP can obtain useful on-road data [14] and trustworthy trained model updates [31] from the chosen SVs.
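The three selection ideas mentioned above (round-robin scheduling, random scheduling, and choosing SVs in important areas by QoI) can be sketched as simple selection functions. These are illustrative assumptions rather than the algorithms of [27] or [30]: vehicles are plain string identifiers, areas are labels, QoI is a single score per vehicle, and all names are hypothetical.

```python
import random
from typing import Dict, Iterator, List, Sequence, Set


def round_robin_scheduler(vehicles: Sequence[str], k: int) -> Iterator[List[str]]:
    """Each round, take the next k vehicles in a fixed cyclic order."""
    n = len(vehicles)
    start = 0
    while True:
        yield [vehicles[(start + i) % n] for i in range(k)]
        start = (start + k) % n


def random_scheduler(vehicles: Sequence[str], k: int, rng: random.Random) -> Iterator[List[str]]:
    """Each round, sample k distinct vehicles uniformly at random."""
    while True:
        yield rng.sample(list(vehicles), k)


def area_qoi_selector(positions: Dict[str, str], qoi: Dict[str, float],
                      important_areas: Set[str], k: int) -> List[str]:
    """Hypothetical VSP rule: keep SVs currently in an important area, then take the k highest-QoI ones."""
    candidates = [v for v, area in positions.items() if area in important_areas]
    return sorted(candidates, key=lambda v: qoi[v], reverse=True)[:k]


fleet = ["sv1", "sv2", "sv3", "sv4", "sv5"]
rr = round_robin_scheduler(fleet, 2)
print([next(rr) for _ in range(3)])  # → [['sv1', 'sv2'], ['sv3', 'sv4'], ['sv5', 'sv1']]
```

Round-robin guarantees every vehicle eventually participates, random scheduling avoids a fixed order, and the area and QoI rule trades fairness for data that is more useful to the VSP.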

In the non-cooperative Stackelberg game models proposed in [32, 33, 34], mobile devices have full information about the VSP's payment budget. For traffic flow estimation with FL, the FedGRU approach proposed in [35] combines FL with Gated Recurrent Units (GRUs) and uses clustering to capture the spatio-temporal correlation of traffic flow more accurately, yielding the best overall model. FedProx, proposed in [36], extends the Federated Averaging (FedAvg) optimization method to distributed training on edge devices' data, which improves the reliability of the target task. In [37], Multiple Principal One Agent (MPOA) based contract optimization is employed to maximize the revenue of VSPs in each iteration [38]. On-Board Units (OBUs) in IoV can gather data and extract local knowledge. The model proposed in [39] replaces data as a service with knowledge as a service in IoV. Knowledge serves as real information and is incorporated into data intelligence. Vehicles in the IoV use ML techniques to learn about the environment and the roads in different locations, and they share this knowledge [40]. In [41], a model is proposed that balances dataset content, computational resources, and wireless resources through joint vehicle client selection and wireless resource allocation. Table 1 summarizes previous work by various researchers on implementing federated learning for IoV.
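The way FedProx changes local training can be sketched through its local objective, F_k(w) + (mu/2) * ||w - w_global||^2, where F_k is the vehicle's own loss and w_global is the current global model. The proximal term keeps heterogeneous vehicles from drifting too far during local updates; with mu = 0, the step reduces to FedAvg's plain local gradient descent. The gradient-descent solver, the toy loss, and the function names below are assumptions for illustration.

```python
from typing import Callable, List

Vector = List[float]


def fedprox_local_update(w_global: Vector, grad_fn: Callable[[Vector], Vector],
                         mu: float, lr: float, steps: int) -> Vector:
    """Approximately minimize F_k(w) + (mu / 2) * ||w - w_global||^2 by gradient descent.

    grad_fn returns the gradient of the vehicle's own loss F_k at w; the proximal
    term contributes mu * (w - w_global), pulling w back toward the global model.
    """
    w = list(w_global)
    for _ in range(steps):
        g = grad_fn(w)
        w = [wi - lr * (gi + mu * (wi - wg)) for wi, gi, wg in zip(w, g, w_global)]
    return w


# Hypothetical vehicle whose local optimum is w = 5 while the global model is at 0.
def local_grad(w: Vector) -> Vector:
    return [2 * (w[0] - 5.0)]  # gradient of F_k(w) = (w - 5)^2


w_fedavg = fedprox_local_update([0.0], local_grad, mu=0.0, lr=0.1, steps=200)
w_fedprox = fedprox_local_update([0.0], local_grad, mu=2.0, lr=0.1, steps=200)
```

Here `w_fedavg` settles at the vehicle's own optimum (5.0), whereas `w_fedprox` stops at 2.5, where the pull of the local loss and of the proximal term balance.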

3 Applications of Federated Learning

This section discusses various applications of federated learning, including applications in the IoV.

3.1 Google Keyboard

FL is used in Google's keyboard to predict the next word, improving keyboard quality while preserving security and privacy [7, 57]. Language models for recommendation systems are also built in this way.

3.2 Intelligent Medical Diagnosis Systems

In centralized ML, gathering and processing data for medical diagnosis is difficult because of privacy and security concerns. With FL, data can be used locally, without privacy issues, to train diagnostic models [57, 58]. For small datasets with insufficient labels, federated transfer learning is a solution. An integrated multi-FL network implemented on the APOLLO network merges longitudinal real-world data from interrelated medical systems with health outcome data to help doctors make forward-looking diagnoses of patients [57].

3.3 RSU Intelligence

The IoV comprises Roadside Units (RSUs) designed to receive data and perform basic processing operations. Because RSUs receive many kinds of data, FL can be applied in various situations. A common application is image processing: for autonomous vehicles, image processing both onboard through Vehicular Computing (VC) and at RSUs is important, and tasks such as collision detection and pedestrian detection rely on it [7].

3.4 Network Function Virtualization (NFV) Orchestration

NFV-enabled networks highlight the use of FL for NFV orchestration in security/privacy services and in vehicular service delivery. Each network is divided into sub-networks, which may be further divided using network slicing techniques [59]. This added complexity favors FL, because NFV orchestrators in different network partitions can use cooperative ML training to build models capable of Virtual Network Function (VNF) operations such as installation, scaling, termination, and migration. In Multi-access Edge Computing (MEC)-enabled NFV orchestration, the RSU is placed near the network edge so that it can act as a network node hosting VNFs [7].

3.5 Vehicular Intelligence

Vehicular intelligence in IoV has many applications, such as road condition forecasting, image processing for lane detection, and predictive maintenance [60]. Predictive maintenance uses operational data to predict the failure of a component and alerts the user to service that specific part through planned maintenance. With FL, predictive maintenance models can draw on a vast collection of data and be built more efficiently [7].

4 Conclusions and Future Direction

Given the importance of communication in federated networks and the privacy risks of transferring raw data, generated data must be kept local. In this situation, communication can be further reduced in two ways: by reducing the total number of communication rounds, and by reducing the size of each message. In this paper, federated learning and its various categories have been discussed. A systematic review of systems implementing FL in IoV has been presented, followed by applications of federated learning in areas related to IoV. However, due to the heterogeneity of vehicular computing (VC) devices, system complexity is a major challenge. Building privacy protection schemes tailored to specific IoV devices is therefore a future direction. Resolving the trade-off between communication cost and computational load is another challenge, and distributed FL with heterogeneous data is a forthcoming research direction.

References

Zhang, C., et al.: A survey on federated learning. Knowledge-Based Syst. 216, 106775 (2021). https://doi.org/10.1016/J.KNOSYS.2021.106775

Manias, D.M., Shami, A.: Making a case for federated learning in the internet of vehicles and intelligent transportation systems. IEEE Netw. 35(3), 88–94 (2021)

Tang, F., Kawamoto, Y., Kato, N., Liu, J.: Future intelligent and secure vehicular network toward 6G: Machine-learning approaches. Proc. IEEE 108(2), 292–307 (2019)

Du, Z., Wu, C., Yoshinaga, T., Yau, K.-L.A., Ji, Y., Li, J.: Federated learning for vehicular internet of things: Recent advances and open issues. IEEE Open J. Comput. Soc. 1, 45–61 (2020)

Brik, B., Ksentini, A., Bouaziz, M.: Federated learning for UAVs-enabled wireless networks: use cases, challenges, and open problems. IEEE Access 8, 53841–53849 (2020)

Yu, Z., et al.: Mobility-aware proactive edge caching for connected vehicles using federated learning. IEEE Trans. Intell. Transp. Syst. 22(8), 5341–5351 (2020)

Yang, Q., Liu, Y., Chen, T., Tong, Y.: Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 10(2), 1–19 (2019)

Aono, Y., et al.: Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 13(5), 1333–1345 (2017)

Geyer, R.C., Klein, T., Nabi, M.: Differentially private federated learning: A client level perspective. arXiv preprint arXiv:1712.07557 (2017)

Wei, J.: Managed communication and consistency for fast data-parallel iterative analytics. In: Proceedings of the Sixth ACM Symposium on Cloud Computing, pp. 381–394 (2015)

Mansour, Y., Mohri, M., Ro, J., Suresh, A.T.: Three approaches for personalization with applications to federated learning. arXiv preprint arXiv:2002.10619 (2020)

Chen, Y.-R., Rezapour, A., Tzeng, W.-G.: Privacy-preserving ridge regression on distributed data. Inf. Sci. (Ny). 451, 34–49 (2018)

Kang, J., et al.: Reliable federated learning for mobile networks. IEEE Wirel. Commun. 27(2), 72–80 (2020)

Wan, L., Ng, W.K., Han, S., Lee, V.C.S.: Privacy-preservation for gradient descent methods. In: Proceedings of the 13th ACM SIGKDD international conference on Knowledge discovery and data mining, pp. 775–783 (2007)

McMahan, B., Moore, E., Ramage, D., Hampson, S., y Arcas, B.A.: Communication-efficient learning of deep networks from decentralized data. In: Artificial intelligence and statistics, pp. 1273–1282 (2017)

Papernot, N., Abadi, M., Erlingsson, U., Goodfellow, I., Talwar, K.: Semi-supervised knowledge transfer for deep learning from private training data. arXiv preprint arXiv:1610.05755 (2016)

Kim, H., Park, J., Bennis, M., Kim, S.-L.: Blockchained on-device federated learning. IEEE Commun. Lett. 24(6), 1279–1283 (2019)

Nikolaenko, V., et al.: Privacy-preserving ridge regression on hundreds of millions of records. In: 2013 IEEE symposium on security and privacy, pp. 334–348 (2013)

Cheng, K.: SecureBoost: a lossless federated learning framework. IEEE Intell. Syst. 36(6), 87–98 (2021)

Abadi, M.: Deep learning with differential privacy. In: Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, pp. 308–318 (2016)

Agarwal, N., Suresh, A.T., Yu, F.X.X., Kumar, S., McMahan, B.: cpSGD: Communication-efficient and differentially-private distributed SGD. Adv. Neural Inf. Process. Syst. 31 (2018)

Du, W., Han, Y.S., Chen, S.: Privacy-preserving multivariate statistical analysis: Linear regression and classification. In: Proceedings of the 2004 SIAM international conference on data mining, pp. 222–233 (2004)

Lindell, Y., Pinkas, B.: A proof of security of Yao’s protocol for two-party computation. J. Cryptol. 22(2), 161–188 (2009)

Saputra, Y.M., et al.: Dynamic federated learning-based economic framework for internet-of-vehicles. IEEE Trans. Mob. Comput. 1233(c), 1–20 (2021). https://doi.org/10.1109/TMC.2021.3122436

Zhou, X., et al.: Two-layer federated learning with heterogeneous model aggregation for 6G supported internet of vehicles. IEEE Trans. Veh. Technol. 70(6), 5308–5317 (2021)

Lu, Y., Huang, X., Zhang, K., Maharjan, S., Zhang, Y.: Blockchain empowered asynchronous federated learning for secure data sharing in internet of vehicles. IEEE Trans. Veh. Technol. 69(4), 4298–4311 (2020)

Yang, H.H., Liu, Z., Quek, T.Q.S., Poor, H.V.: Scheduling policies for federated learning in wireless networks. IEEE Trans. Commun. 68(1), 317–333 (2019)

Xie, C., Koyejo, S., Gupta, I.: Asynchronous federated optimization. arXiv preprint arXiv:1903.03934 (2019)

Li, T., et al.: Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2, 429–450 (2020)

Amiri, M.M., Gündüz, D., Kulkarni, S.R., Poor, H.V.: Convergence of update aware device scheduling for federated learning at the wireless edge. IEEE Trans. Wirel. Commun. 20(6), 3643–3658 (2021)

Zhan, Y., Li, P., Qu, Z., Zeng, D., Guo, S.: A learning-based incentive mechanism for federated learning. IEEE Internet Things J. 7(7), 6360–6368 (2020)

Khan, L.U.: Federated learning for edge networks: Resource optimization and incentive mechanism. IEEE Commun. Mag. 58(10), 88–93 (2020)

Liu, Y., Zhang, S., Zhang, C., Yu, J.J.Q.: FedGRU: privacy-preserving traffic flow prediction via federated learning. In: 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), pp. 1–6 (2020). https://doi.org/10.1109/ITSC45102.2020.9294453

Sarikaya, Y., Ercetin, O.: Motivating workers in federated learning: A Stackelberg game perspective. IEEE Netw. Lett. 2(1), 23–27 (2019)

Kang, J., Xiong, Z., Niyato, D., Xie, S., Zhang, J.: Incentive mechanism for reliable federated learning: a joint optimization approach to combining reputation and contract theory. IEEE Internet Things J. 6(6), 10700–10714 (2019)

Zou, Y., Shen, F., Yan, F., Lin, J., Qiu, Y.: Reputation-based regional federated learning for knowledge trading in blockchain-enhanced IOV. In: 2021 IEEE Wireless Communications and Networking Conference (WCNC), pp. 1–6 (2021)

Abad, M.S.H., Ozfatura, E., Gunduz, D., Ercetin, O.: Hierarchical federated learning across heterogeneous cellular networks. In: ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8866–8870 (2020)

Wang, S., Liu, F., Xia, H.: Content-based vehicle selection and resource allocation for federated learning in IoV. In: 2021 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), pp. 1–7 (2021)

Anand, A., Rani, S., Anand, D., Aljahdali, H.M., Kerr, D.: An efficient CNN-based deep learning model to detect malware attacks (CNN-DMA) in 5G-IoT healthcare applications. Sensors 21(19), 6346 (2021)

Bonawitz, K.: Practical secure aggregation for privacy-preserving machine learning. In: Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, pp. 1175–1191 (2017)

Price, W.N., Cohen, I.G.: Privacy in the age of medical big data. Nat. Med. 25(1), 37–43 (2019)

Zhao, P., et al.: Federated learning-based collaborative authentication protocol for shared data in social IoV. IEEE Sens. J. 22(7), 7385–7398 (2022)

Hammoud, A., Otrok, H., Mourad, A., Dziong, Z.: On demand fog federations for horizontal federated learning in IoV. IEEE Trans. Netw. Serv. Manag. 19(3), 3062–3075 (2022)

Xie, K.: Efficient federated learning with spike neural networks for traffic sign recognition. IEEE Trans. Veh. Technol. 71(9), 9980–9992 (2022)

Peng, O., et al.: BFLP: an adaptive federated learning framework for internet of vehicles. Mob. Inf. Syst. 2021, 1–18 (2021)

Tao, X., Zhang, X., Liu, J., Xu, J.: Privacy-preserved federated learning for autonomous driving. IEEE Trans. Intell. Transp. Syst. 23(7), 8423–8434 (2021)

Liu, Y., James, J.Q., Kang, J., Niyato, D., Zhang, S.: Privacy-preserving traffic flow prediction: a federated learning approach. IEEE Internet Things J. 7(8), 7751–7763 (2020)

Wang, Y., Su, Z., Zhang, N., Benslimane, A.: Learning in the air: Secure federated learning for UAV-assisted crowdsensing. IEEE Trans. Netw. Sci. Eng. 8(2), 1055–1069 (2020)

Aloqaily, M., Al Ridhawi, I., Guizani, M.: Energy-aware blockchain and federated learning-supported vehicular networks. IEEE Trans. Intell. Transp. Syst. 23(11), 22641–22652 (2021)

Wang, R., Li, H., Liu, E.: Blockchain-based federated learning in mobile edge networks with application in internet of vehicles. arXiv preprint arXiv:2103.01116 (2021)

Liu, H.: Blockchain and federated learning for collaborative intrusion detection in vehicular edge computing. IEEE Trans. Veh. Technol. 70(6), 6073–6084 (2021)

Joshi, G.P., et al.: Toward blockchain-enabled privacy-preserving data transmission in cluster-based vehicular networks. Electronics 9(9), 1358 (2020)

Hua, G., et al.: Blockchain-based federated learning for intelligent control in heavy haul railway. IEEE Access 8, 176830–176839 (2020)

Chai, H., Leng, S., Chen, Y., Zhang, K.: A hierarchical blockchain-enabled federated learning algorithm for knowledge sharing in internet of vehicles. IEEE Trans. Intell. Transp. Syst. 22(7), 3975–3986 (2020)

Shen, C., Zhu, L., Hua, G., Zhou, L., Zhang, L.: A blockchain based federal learning method for urban rail passenger flow prediction. In: 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), pp. 1–5 (2020)

Lu, Y., Huang, X., Zhang, K., Maharjan, S., Zhang, Y.: Blockchain empowered asynchronous federated learning for secure data sharing in internet of vehicles. IEEE Trans. Veh. Technol. 69(4), 4298–4311 (2020). https://doi.org/10.1109/TVT.2020.2973651

Lee, J.S.H.: From discovery to practice and survivorship: building a national real-world data learning healthcare framework for military and veteran cancer patients. Clin. Pharmacol. & Ther. 106(1), 52–57 (2019)

Rieke, N.: The future of digital health with federated learning. NPJ Digit. Med. 3(1), 1–7 (2020)

Jain, B., Brar, G., Malhotra, J., Rani, S., Ahmed, S.H.: A cross layer protocol for traffic management in Social Internet of Vehicles. Futur. Gener. Comput. Syst. 82, 707–714 (2018). https://doi.org/10.1016/j.future.2017.11.019

Seth, I., Guleria, K., Panda, S.N.: Introducing intelligence in vehicular ad hoc networks using machine learning algorithms. ECS Trans. 107(1), 8395 (2022)

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Bala, P.H.M., Chhabra, R. (2024). Role of Federated Learning for Internet of Vehicles: A Systematic Review. In: Challa, R.K., et al. Artificial Intelligence of Things. ICAIoT 2023. Communications in Computer and Information Science, vol 1930. Springer, Cham. https://doi.org/10.1007/978-3-031-48781-1_11

DOI: https://doi.org/10.1007/978-3-031-48781-1_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-48780-4

Online ISBN: 978-3-031-48781-1

eBook Packages: Computer Science, Computer Science (R0)