Abstract

The number of electronic control units (ECU) installed in vehicles is increasingly high. Manufacturers must improve the software quality and reduce cost by proposing innovative techniques.

This chapter proposes a technique being able to generate not only test-cases in real time but to decide the best means to run them (hardware-in-the-loop simulations or prototype vehicles) to reduce the cost and software testing time. It is focused on the engine ECU software which is one of the most complex software installed in vehicles. This software is coded by using Simulink® models. Two genetic algorithms (GAs) were coded. The first one is in charge of choosing which parts of the Simulink® models should be validated by using hardware-in-the-loop (HIL) simulations and which ones by using prototype vehicles. The second one tunes the inputs of the software module (SM) under validation to cover these parts of the Simulink® models. The usage of dynamic-linked libraries (dlls) is described to deal with the issues linked to SM interactions when running HIL simulations. In addition to this, this chapter focuses on the validation of engine electronic control unit software by using expert systems (EXs) and dynamic link libraries (dlls) with the aim of checking if this technique performs better than traditional ones. Finally, the chapter aims to check if two rule-based EXs combined with dynamic-link libraries (dlls) perform better than other techniques widely employed in the automotive sector when validating the engine control unit (ECU) software by using a hardware-in-the-loop simulation (HIL).

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

2.1 Introduction

2.1.1 Engine ECU Software

Electronic control units (ECUs) have become essential for the correct operation of a vehicle [1, 2]. Software validation plays a key role and has two fundamental goals [3]. Firstly, the software must comply with the functional specifications set by the design team. Secondly, software validation ensures the integration of all software modules (SMs) into the hardware, simultaneously checking that all the elements present in the network interact properly [4, 5]. The process of software validation of an ECU implies significant costs for the companies during a project because of the means necessary to carry out this activity [6, 7]. In addition, the cost of correcting bugs, once the software is marketed, is high and it can tarnish the brand’s image [8, 9]. Consequently, a balance between costs, deadlines, and quality must be reached.

Powertrain control is a system in charge of transforming the driver’s will into an operating point of the powertrain according to the performance established for the product [10]. The key element of the control system is the engine ECU composed of complex hardware and software. The engine ECU (hardware and software) must be validated to assure that engine is properly controlled, the interaction with the rest of the ECUs is rightly performed and the passengers’ safety is insured. Thus, one can deduce that the software validation process is complex and needs improvements with the aim of reducing costs, increasing productivity and reliability in the automotive sector [11, 12].

This chapter is focused on the engine ECU software validation and shows solutions to the main difficulties associated with traditional software validation techniques by using expert systems (EXs) and dynamic-link libraries (dlls) during the hardware-in-the-loop (HIL) simulation. The technique proposed in this research performs better than traditional techniques and allows improving: ease for automating test-cases, bug detection skills, functional coverage, difficulties to detect bugs linked to SMs that do many calculations and the difficulties to validate the software automatically among others. In addition, it shows that the HIL simulation can be automated in an easier way.

2.1.2 Related Works

The code and functional coverage is a real concern when validating a software. Research has been conducted on this topic to enhance this parameter [13,14,15,16,17]. Therefore, test-case generation is a key issue. The black-box technique has been used for a long time in the automotive sector, as discussed by Conrad [18]. Despite its widespread use, it is true that it has some weak points as discussed by Chundur et al. [19]. In their dissertation, they consider that test-cases based on the engineers’ experience usually imply gaps and test-redundancies. The model-based testing technique is an option to assess the code and functional coverage rate. The generation and execution of test-cases based on models have been proposed on several occasions. For instance, Skruch and Buchala (DELPHI supplier) proposed a study based on models [20]. The tool Automation Desk (dSpace®) was used. Raffaelli et al presented research focused on functional models by using the commercial software Matelo® [21, 22].

The HIL simulation should be carried out as quickly as possible and with the highest number of test cases executed to ensure the time-frame and quality of the project [23]. Test automation is essential to ensure a high code coverage and to improve reliability [24, 25]. There are many ways for automating HIL simulation in the market [26, 27]. The automation process is mainly based on black-box techniques such as stated by Lemp, Köhl and Plöger: “As a rule, the tests specified by the ECU departments are first performed as black box tests on the network system (know-how on software structures is not taken)”.

The HIL simulation implies that a specific operating point is reached by the engine ECU. This can be extremely complicated, requiring a lot of manipulations on the HIL model due to SM interactions. There are three possible ways for executing a given test-case in an HIL simulation. Firstly, executing the test-case manually, that is, a technician performs all the necessary actions in the HIL simulation to reach the desired operating point. Secondly, the “tester-on-the-loop” concept can be used. Petrenko, Nguena-Timo and Ramesh, reported the main problems and solutions associated with software validation in the automotive sector [28]. Their main conclusion was focused on the methodology known as “tester-in-the-loop”, in which the test engineer leads the system to a desired operation point, considered as a crucial operation point. Once the crucial point is reached, a series of automated actions are executed to reach the goals previously established in the test-case. Finally, test-cases can be fully automated. In this case, a script controls the whole execution process.

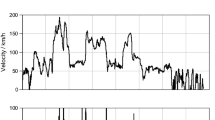

Some types of bugs are not detected by using some techniques such as the tester-in-the-loop or black-box, Fig. 2.1, depicts the obtained result for an output for a variable of a SM when executing the software in an HIL simulation (in red) and its expected value (in blue). As one can see, the results are different. This error represents an inaccuracy when it comes to calculating the gas speed in the exhaust pipe. This error impacts the amount of urea injected to treat NOx. Because this bug does not imply the presence of a functional bug, it is impossible to detect it by using the black-box technique. The detection of this type of bugs involves the checking and detailed analysis of the software code by running additional software.

The solution for validating no matter what type of SM is very far from achieving by employing a direct comparison between the HIL results and the expected outputs indicated in the test-cases. One can encounter some difficulties such as synchronization problems or difficulties to validate the software automatically, among others. Table 2.1 describes the main issues.

The present chapter proposes how to implement the possible solutions depicted in Table 2.1 thanks to the use of dlls for validating any types of SMs when automating a test-case through the HIL simulation, and especially all SMs that cannot be validated by employing traditional techniques. Thanks to dlls, SMs responsible for doing a great deal of internal calculations, can be validated. During the HIL simulation, it can be checked that all the calculations are properly carried out when the software and hardware are integrated. This feature allows finding bugs which cannot be found by traditional techniques. In addition, in case the desired operating point set in the test-case is not reached in an automated HIL simulation, owing to SM interactions, the dlls can determine the expected output that the software should provide. Thanks to rule-based EX, it is possible to verify whether the functional behavior of the software is correct for the outputs obtained after the HIL simulation. EXs can carry out a real-time performance validation when executing a test-case thanks to dlls.

2.2 Application of Rule-Based Expert Systems and Dynamic-Link Libraries to Enhance Hardware-In-The-Loop Simulation Results

Extract of the following paper published in Journal of Software “Application of Rule-Based Expert Systems and Dynamic-Link Libraries to Enhance Hardware-In-The-Loop Simulation Results” JSW 2019 Vol.14(6): 265–292. ISSN: 1796-217X. https://doi.org/10.17706/jsw.14.6.265-292. http://www.jsoftware.us/.

2.2.1 Introduction

New and innovative techniques to validate software are needed to reduce cost and increase software quality.

This research focuses on the validation of engine electronic control unit software by using EXs and dlls with the aim of checking if this technique performs better than traditional ones.

To do this, a test-case database was built and run by using HIL simulations to validate a series of SMs by using these techniques: the tester-in-the-loop, automation by using a Python script, the model-based testing and EXs combined with dlls with the aim of assessing several factors such as: productivity gain, bug detection skills, functional coverage assessment, ease to automate test-cases among others.

Dlls and EXs improve the HIL success rate by 4.8%, 6% and 20% at least, for simple, fairly-complex, and highly-complex SMs, respectively. Between 9 and 13 more bugs were found when using the EXs and dlls compared with other techniques. Two of the bugs would have required software not initially planned as they were linked to environmental policies. The proposed technique can be applied to any types of a SM, especially in those cases in which traditional validation techniques fail.

2.2.2 Method

2.2.2.1 Description

The engine specifications are composed of Simulink® models. Thus, the dll can be easily built considering that Mathworks® has implemented different ways to build a dll from a Simulink® model [29].

The method used in this research are composed of different stages. Firstly, a series of test-cases are designed. Then, all test-cases are run by using the following techniques: manual execution by a technician, automation by employing Python scripts (with and without dlls), the tester-in-the-loop technique and fully automated process by using a performance EX combined with dlls. The EX compiles all rules (software requirements) related to the SM under validation. To conduct the test-cases, an HIL simulation is used. The HIL model belongs to the company subjected to this case-study and has been validated by its experts. The hypothesis to be proved by following this method is that all issues shown in Table 2.1 can be solved thanks to this technique proposed by the authors. Several indicators are analyzed such as: evaluation of the success rate of the HIL simulation, main causes of failure and success for each of the methodologies when running test-cases, the functional coverage obtained, the productivity gain which may take place. The advantages and limitations of using dlls will be discussed. EXs will assess the software performance.

The dll can be implemented by following the steps indicated in many Mathworks® documentation available in their site. The only thing that the user really needs is the Simulink® model to be converted into a dll. In this study, this is not a problem as the specifications needed to code the engine ECU software, are composed of Simulink® models. The main difficulty is how to call the dll. To do this, as described in Matlab® documentation, different programming languages such as C or an m-file can be employed. In this research, C language has been chosen. It is important to describe how the HIL simulation is performed when using dlls to validate the software. Figure 2.2 depicts the process when using an automation script. This description is valid for all techniques but the manual execution one (no automation process). A test-case is executed through a Python script coded by a test engineer. At this moment, the software Inca® [30], or any other software that can read the memory positions of the ECU, performs the data acquisition of all the software variables selected by the test engineer. The result of this process is to generate a data-acquisition file. During the HIL simulation the script is in charge of performing all the necessary manipulations on the driver-ECU interface of the HIL model automatically. If after a certain pre-established time, the values for the input set in the test case are not reached, the data acquisition process and the test-case execution are stopped by the Python script. Then, a data acquisition file containing all the software variables chosen by the test-engineer in the HIL simulation is obtained. A C-file is in charge of decoding the data acquisition file and sending, one by one, all the samples of the HIL simulation to the dll as exposed later. Every time a sample is sent by the C-file, the dll returns the theoretical value that the software should have delivered. Then, the Python scripts checks whether the software outputs are equal to dll outputs every time the dll returns a value. Two key topics must be reminded. Firstly, the outputs of the SM are also available in the asci-ii file. Secondly, the engine ECU software is an image of the Simulink® models of the SM under validation.

2.2.2.2 Functions Used in the HIL Simulation

The methodology proposed in this study has been tested in three types of functions or SMs chosen according to the number of calculations to be done as well as their complexity, number of inputs and/ outputs of the SM and the accuracy required for the output results (Table 2.2). They have been considered as representative for this case-study by the authors and the company subjected to this research.

It is important to establish this classification because the validation requirements as well as the characteristics of the SM clearly influence the time required to carry out the validation process, as well as the additional difficulties that may arise. 5 SMs of each type were selected, based on different criteria such as test engineers’ experience, the most problematic SMs in other projects, SMs that require systematic validations to ensure the vehicle safety, SMs that require frequent regression validations as well as those SMs that have never been implemented in previous projects and, in short, they are a novelty (see Table 2.2).

Table 2.3 shows the number of tests considered in this research according to the type of SM.

Table 2.4 indicates the methods followed to generate test-cases for each technique.

It is important to analyze what A2 and A3 mean. In A2, Matelo® can generate all necessary test-cases with the aim of covering the functional model. In A3, Python scripts also generate test-cases trying to cover the functional model. In addition, they generate pseudorandom values trying to reach functional states not implemented in the model. A functional state not implemented in the model involves a use-case not considered by the design team. In other words, a design error. The fact of using fuzzy variables, as exposed later, allows increasing the combination of the inputs of the SM under validation. It must also be taken into account that the scripts in charge of generating pseudorandom values have to avoid impossible combinations such as a vehicle speed at 90 km/h and the first shift engaged.

Table 2.5 shows examples of test-cases which could be used to check some functionalities of the software by using different techniques. Fuzzy variables are used when using EXs combined with dlls by increasing the number of combinations of the inputs provided by the SM under validation.

2.2.2.3 Equipment

The following equipment was used in this research.

-

An engine ECU software and hardware.

-

The HIL bench used to conduct this research belongs to the manufacturer dSpace®, model dSpace® Simulator Full-size [31]. It is a versatile HIL simulator capable of emulating the dynamic vehicle behavior.

-

When it comes to building the model that serves as the driver’s interface, ControlDesk® version 5.1 from dSpace® manufacturer is employed [32]. By using this software, it is possible to carry out all necessary data exchange between the HIL bench and the engine ECU. This model was designed by the company subjected to this case-study and it is validated by the Electronic Validation Powertrain and Hybrids service before using it.

-

Throughout this research, it is necessary to make measurements of different software variables stored in the engine ECU memory. To do this, it is imperative to use software that allows reading memory locations. In this research, version 7.1.9 of INCA® was used [30].

-

The automation process can be carried out in different ways: by using Python script or AutomationDesk® software [33]. In this research, the Python script was chosen because the staff’s skill in AutomationDesk® in the service subjected to this case-study was low.

-

Matlab® R2013 and Microsoft Visual Studio 2015 were used to create the dlls used in this research.

-

Matelo®. Software used for validation purposes being able to generate test-cases.

2.2.3 Results

2.2.3.1 Ease for Automation Test-Cases

-

a. Simple software modules

Simple SMs, as indicated in the previous section, are characterized by handling a small number of variables. As a result, it is not difficult to reach the values established in the test-case. The problem associated with SM interactions appeared in all SMs considered in this research. For example, by analyzing the measurements obtained in the HIL simulation when validating a simple SM, by using MDA® [33], it was observed that, when actuating the brake pedal, multiple variables were affected and changed their values. When the brake pedal is actuated, the vehicle speed is reduced significantly, even without changing the accelerator pedal position. To decrease the vehicle speed, the engine ECU must control the engine combustion by modifying the air-diesel mixture rate. This phenomenon is regulated by other SMs which were not validated in this process. Therefore, one can conclude that to achieve the values set in the test-case, multiple SMs must be controlled simultaneously. This fact involves a great deal of complexity to code Python scripts.

One of the most important issues to be analyzed is the consequences of not reaching the values set in the test-case. Table 2.6 shows the results when validating the simple functions by using different techniques. As one can see, the tester-in-the-loop technique offers better results than the automated one without using dlls, because a technician makes the engine ECU reach a specific operating point during the test-case execution. When using dlls, the results are by 4.8% and 14.4% better than the tester-in-the-loop or automation results achieved by using a Python script only.

The Simulink® blocks that, in most cases, prevent reaching the values set in the test-case in this research, are show in Table 2.7.

It is important to analyze the root cause of the 5.2% failures. After the analysis of the 13 failures shown in Table 2.6, it was verified that the dynamic model used for the HIL simulation failed. Analysis showed that this issue came from 2 SMs. These SMs needed a 10 ms-sample period. Owing to imperfections of the HIL model, latency times and hardware limitations of the HIL bench, in certain occasions this sample time was not respected.

-

b. Fairly-complex and highly-complex software modules

For fairly-complex and highly complex validation SMs, the number of variables increased up to 80. Therefore, the issue of SM interactions is even more present. Figure 2.3 shows the total number and types of variables of a fairly-complex SM and the difficulty of manipulation to make the variables reach a specific value set in a test-case. The graph depicted in Fig. 2.3 shows that the Boolean variables were easier to be manipulated to reach the desired value, especially when they were related to variables directly linked to the driver’s interface-model. If they were linked to analogical variables, it was not easy to reach the desired value. The triangle obtained for a fairly-complex SM was an isosceles whose height is focused on high difficulty. Therefore, the issue about SM interaction arises. On average, after having analyzed 5 SMs it was concluded that at least 40 variables were influenced between them. It is important to explain the nuance of “at least”. The Boolean variables are simple to manipulate. Nevertheless, some of them have a direct impact on making the analogical ones reach the desired value established in the test-case. The HIL simulation results are shown in Table 2.8 in which one can see the number of times the expected output values specified in the test-cases are no longer valid when the SM inputs fail to reach the specific values set in the test-case. At the same time, the most problematic blocks present in the Simulink® models can also be observed (Table 2.9).

When it comes to a highly complex SM, the triangle obtained is closer to that of an isosceles one with a lower base. This characteristic indicates a greater presence of variables that are difficult to manipulate in a HIL simulation (Fig. 2.4). In this case, a total of 120 variables that influence the other variables had to be handled. The Simulink® blocks that pose the most problems were the same as those shown in Table 2.8. The results after the 100 HIL simulations are shown in Table 2.10.

In highly complex SMs, errors that prevent the HIL simulation from succeeding when using dlls were also detected. When validating a highly complex SM, a lower success-rate with dlls was obtained because these SMs require covering thousands of kilometers (close to 20,000 km in some cases). Thus, the probability of failure in the simulator increases. Considering the strong SM interaction, it is unlikely to reach the specific values set in the test-case. Thus, the tester-in-the-loop solution offers worse results than when using dlls.

In fairly and highly complex SMs, at any given time, it was observed that several variables were close to the values previously set in the test-case as long as other values were quite far. If some manipulations were performed to make all the variables closer to the values set in the test-case, then the ones which were far from the expected values started to get closer, and the remaining variables started to get further. Thus, it is unlikely to be able to reach the input values set in a test case owing to SM interactions in such complex software as in an HIL simulation. Figure 2.5 shows how, by increasing the error tolerance against the value set in the test-case for the variables that constitute the test-case, the number of variables that remained within those tolerance margins increased. However, in any case, it was never possible to make all the variables remain within the established tolerance range. This fact happened when executing the test-cases manually or automatically. As a result, these results show the great difficulty of validating an engine ECU software version by using HIL simulation.

Figure 2.6 summarizes the results obtained when using or not using dlls in an HIL simulation. As shown, dlls improve the HIL results in a significant way for all types of SMs, especially for simple and fairly-complex functions. It must be reminded that estimator SMs belong mainly to simple functions. That is why one can see such a huge difference when comparing the results obtained when activating or not activating dlls in an HIL simulation. In fairly and highly complex functions, it must also be noted that the SMs that require performing of many calculations belong to this category. Thus, there is also a significant difference when using dlls.

The reader may think that the automation process is not useful when validating the engine ECU software. This conclusion is false as there are some SMs, especially those related to electronics, which can be successfully automated such as CAN (Controller Area Network) and LIN (Local Interconnect network) bus or the basic functionalities of adaptive cruise control with the capacity to stop the vehicle (see Table 2.11). These statements have been proven in this research as shown in Table 2.12.

In this research, the SMs listed in Table 2.10 were used.

Table 2.11 does not show the results for automation with dlls as most of the function did not have a Simulink® model.

2.2.3.2 Functional Coverage

The functional coverage has been assessed by using Eq. (2.1) which is widely employed in the automotive sector. Table 2.13 shows the total number of functional requirements associated with the SMs validated in this research.

Table 2.14 depicts the results obtained for each technique in this research.

-

a. Cause-effect technique and tester-in-the-loop

All test-cases run in this research by using these techniques are similar to the ones depicted in Table 2.5. It must be reminded that the test-cases can be run in a manual way or by employing Python scripts with the aim of automating the process.

The main limitation of the cause-effect technique is test-case redundancy. Many test cases run to validate the software were indeed linked to the same software requirements. The main reason behind this issue is the lack of a functional model of the SM under validation. When a use-case is not considered initially in the software requirements, it cannot be found by the cause-effect technique. In addition, bugs linked to calculation errors cannot be detected.

-

b. Model-based testing

When using Matelo®, it is important to expose the problems found. If the test engineer let Matelo® generate test-cases, this software will assign specific values for each input of the SM under validation. As a consequence, the problems of SM interactions, are identified. The only way to overcome this issue is to use fuzzy values combined with dlls. In this case, results are similar to the ones obtained when using a performance EX as long as dlls are used. Matelo® can be used also in such a way that Matelo® will not generate the test-case but it will control the automation process. In order words, the test engineer must code a Python script to generate the test -cases needed and then Matelo® will check the functional states covered as the automation is performed.

In the present research, the test engineer codes Python scripts with the aim of running the same test-cases as for the manual execution, the tester-in-the-loop and so on. Consequently, the results shown in Table 2.9 are the same for the cause-effect technique and the model based-testing one.

-

c. Performance expert system

The rule-based EX allows specifying the functional requirements of SMs. Two phases are considered when validating EXs: a validation and a test one. On the one hand, the former consists of verifying a certain number of test-cases depending on the type of SMs to assess the EX performance to be sure that the EXs seem to work properly (Table 2.15). Table 2.16 shows the results obtained during the first phase in which a 83.3% success rate was obtained.

Once the errors were corrected, the test phase was performed to assure that the EXs would assess the software behavior properly. If no error occurred the EX was accepted.

The main conclusion that can be drawn is all possible use-cases are not checked when no EX is used. When it comes to simple and medium-complexity SMs, the number of unchecked functional states is shown in Table 2.17. The number of untested rules in a medium or highly complex function is greater because of the large number of use cases involved in this type of SMs.

These improvements are mainly based on two reasons:

-

1.

Dlls allow controlling better the HIL simulation as it is possible to know at any time if the current state of the engine ECU is coherent or not as already exposed in this research.

-

2.

EXs assess the functional coverage easily. The reader can think that a similar result could be obtained by using Matelo® combined with dlls. Matelo® generates test-cases off-line. If after the HIL simulation, the inputs of SM under validation do not reach the desired operating point, Matelo® cannot calculate the expected value for the current state of the engine ECU in-real time.

-

3.

Dlls allow finding bugs linked to calculation errors.

2.2.3.3 Productivity Gain

It is essential to check if EXs implementation respects the timeframes of the project by analyzing several factors. As shown in Table 2.18, the gain is positive for fairly and highly-complex SMs when using an EX. This gain comes from the automation process which allows testing test-cases quicker. In addition, these test-cases can be always run thanks to dlls. Consequently, an EX combined with dlls performs better than the other techniques. For simple SMs, the result is different as the HIL simulation implies that very simple and quick manipulations are conducted on the driver’s interface model. As a result, the time gain is negative and the timeframe of the project may not be respected. It must be reminded that several projects are being developed at the same time by car manufacturers: diesel or gasoline engines. Between these types of engines, one can find considerable differences when it comes to torque structure or after treatment of exhaust gas systems. However, when comparing engines of the same groups, they are remarkably similar. As a result, an EX designed for a project can be used for another one. Then, only the automation and validation phases will be performed. As one can see in these phases, this technique outperforms the other ones. The main conclusions which can be drawn is that the proposed technique always meets the project planning especially when there are several engines developing at the same time.

2.2.3.4 Bug Detection

Figure 2.7 shows the bugs found by each technique when running the test-cases. The tester-in-the-loop offers a better performance than the automation process as it can make the system reach critical states that are not easy to reach when only using a Python script. There are not significant differences between manual and tester-in-the-loop techniques when it comes to bug detection as there is a technician who participates in the test-case execution, Python scripts detect fewer bugs than the rest of the techniques as test-cases are difficult to automate due to SM interactions. As a consequence, when the system reaches an operating point close to the one established in the test-cases, the outputs indicated in the test-cases may be no longer valid. To solve these problems, fuzzy values for the SM inputs may be used as exposed later in this section.

The results obtained in this research show that EXs with dlls give better performance and can be used to test more functional states and detect more bugs than the other techniques. Basically, this statement is based on two main reasons:

-

1.

The problems coming from the SM interactions are fixed due to dlls. Even though the operating point established in the test-case is not reached, dlls can provide the right values expected from the software. Consequently, the test-cases can be successfully run and the automation process can validate the HIL simulation results automatically.

-

2.

The functional coverage is improved due to the existence of the functional model. In addition, this model can be covered easily thanks to the automation success by using the dlls. It is also important to establish the main types of bugs found for each technique (Table 2.19).

Table 2.19 Type of bugs detected -

3.

When the bug is linked to calculation errors (calculation faults).

-

4.

When no code error occurred but there was unexpected performance software. This issue can come from an error design in the SM under validation (performance faults).

-

5.

When there is a code bug. This means the programmer has made a mistake and coded differently from what was indicated in the specifications.

2.2.3.5 Costs

It is necessary to discuss costs. The first one is associated with the licenses needed to use a specific technique (already discussed). The other one is linked to software versions needed to correct bugs detected at the end of the project. This can be caused by two things. Firstly, certain SMs (especially those related to advanced driver assistance systems) cannot be tested at the beginning of the project. The validation of these functions needs very mature software of some ECUs present in the network (electronic stability program ECU, body control unit, radars, cameras, gearbox ECU in automatic cars). Secondly, some bugs appear when testing some use-cases that were not considered in the validation process. When these bugs are detected, the project team must decide whether the bug has a significant functional impact and therefore require correction of the software. Otherwise, the bug can be corrected in future engine projects and no correction will be made. Developing new software versions involves a high cost but also might imply updating the ECU of vehicles that have already been marketed. The results showed that EXs combined with dlls detected two bugs that would have required corrective software development. These bugs were not detected using the cause-effect technique, the model-based testing one, the manual execution or the model-based testing one.

The reader might think that, in case of bugs in the Simulink® model, the software will also contain these faults. As a result, no bug will be detected by using the method proposed in this research. This study has proven that this statement is true and that is why the performance EX must be used.

2.2.3.6 Comparison Among Other Methods

When performing an HIL simulation, it is not easy to reach the values indicated in the test-case due to SM interactions. Figure 2.8 shows an example of a histogram displaying speed value. Depending on the value reached, the output can be 1 or 0. Consequently, if a test-case indicates that the speed must be 60 km/h, the accuracy is a critical factor and the expected output could be no longer valid.

A comparison among different techniques is shown in Table 2.20.

2.2.4 Conclusions

This research, conducted at the second most important European car manufacturer, is focused on the software validation of an engine ECU by using dlls and an EX (ES). This combination allows the detection of software performance and coding bugs. As shown in this research, dlls and ES can detect bugs that other techniques such as the black-box or the tester-in-the-loop cannot, especially those in temperature estimator SMs and after-treatment of exhaust gases SMs, which require accurate calculations. The obtained results show how dlls and the EX can improve the HIL success rate compared with the tester-in-the-loop technique and can execute 4.8% of the test-cases in simple validation SMs, 6% of the test-cases of fairly complex SMs and 20% of the test-cases of highly complex SMs despite the presence of SM interactions. In comparison to the use of a Python script without using a dll, the dlls and the EX can improve the HIL and can execute 14.4% of the test-cases in simple validation SMs, 28.4% of the test-cases of fairly complex SMs and 46% of the test-cases of highly complex SMs. As a result, dlls can overcome the issue linked to SM interactions. In addition, between 9 and 13 more bugs were found when using the EX and dlls, six of which could not be detected by other techniques. Even though EXs and dlls require more time to be implemented, the timeframe of the project was respected.

2.3 Use of Genetic Algorithms to Reduce Costs of the Software Validation Process

Extracted from Ortega-Cabezas, P.M., Colmenar-Santos, A., Borge-Diez, D. et al. Experience report on the application of genetic algorithms to reduce costs of the software validation process in the automotive sector during an engine control unit project. Software Quality Journal 30, 687–728 (2022). https://doi.org/10.1007/s11219-021-09582-x. https://www.springer.com/journal/11219.

2.3.1 Introduction

The number of ECUs installed in vehicles is increasingly high. Manufacturers must improve the software quality. Innovative techniques must be proposed to reduce cost and increase software quality.

This research proposes a technique being able to generate not only test-cases in real time but to decide the best means to run them (HIL simulations or prototype vehicles) to reduce the cost and software testing time. It is focused on the engine ECU software which is one of the most complex software installed in vehicles. This software is coded by using Simulink® models. Two genetic algorithms (GAs) were coded. The first one is in charge of choosing which parts of the Simulink® models should be validated by using HIL simulations and which ones by using prototype vehicles. The second one tunes the inputs of the SM under validation to cover these parts of the Simulink® models. The usage of dlls is described to deal with the issues linked to SM interactions when running HIL simulations.

GAs found at least 7 more bugs than traditional techniques and improved the functional and code coverage by between 3% and 11% for functional coverage and by between 1.4% and 7% for code coverage depending on the SM complexity. The validation time is reduced by 11.9% regarding traditional techniques. GAs perform better than traditional techniques improving software quality and reducing costs and validation time. The usage of dlls allows testing the software in real time as described in this study.

Both the number of ECUs installed in vehicles and their complexity are increasing [5, 34, 35]. Thus, manufacturers must assure software quality and reliability [12]. The software and hardware validation of an engine ECU is performed by using the HIL simulation and prototype vehicles [36]. The HIL simulation has several advantages as no vehicle with all ECUs updated with the latest software version is necessary. Secondly, the ECU behavior in the network can be checked by analyzing the frames transmitted and received when conducting an HIL simulation. However, the real interactions between ECUs are not tested as all frames received are sent by a model and not by real ECUs. Regarding prototype vehicles, the engine ECU software is tested in real vehicles which must have all ECUs properly updated: ESP (Electronic Stability Program), ADAS (Advanced Driver-Assistance System ECU), ATCU (Automatic Transmission Control Unit), etc.

This chapter is focused on one of the most complex software installed in vehicles: the engine ECU software. It proposes the usage of GAs aiming at choosing the most adequate means to be used for validation while generating test-cases automatically at the same time. The main goals are:

-

a.

Choosing automatically the optimal means to reduce the validation time and costs.

-

b.

Finding solutions to technical problems when using the HIL simulation due to SM interactions.

-

c.

Assessing whether GAs perform better than other techniques such as the model-based testing and the black-box techniques.

-

d.

Verifying whether GAs are able to find bugs when other techniques fail.

-

e.

Assessing the staff skill impact on the validation process.

The engine ECU software development comprises three phases (V-cycle development): implementing models based on Simulink® software in order to control the engine performance, generating C-code and checking the final integration of the software into the hardware. During the whole process, the engine software completes three levels of testing: model-in-the-loop (MIL), software-in-the-loop (SIL) and HIL simulations [37]. Consequently, the software is tested to assure that it meets all requirements. During the MIL, a controller model is implemented and applied to the Simulink® model aiming at checking if the model behaves as expected [38,39,40]. During the HIL simulation, the integration between software and hardware is tested thanks to a controller (the engine ECU and its software) which controls the system that imitates the engine behavior (the HIL simulator) [41,42,43,44,45]. In addition, prototype vehicles are used to test some functions which cannot be completely validated when using HIL simulations such as ADAS [46]. Therefore, the most adequate means to validate the software must be chosen to reduce time and costs. Finally, SIL is employed to test an executable code within a modelling environment [47].

Currently, software is tested based on software, architecture and system requirements [37]. At this point, how to test software requirements is a key point discussed in some standards such as ASPICE (2020). Software testability depends on 5 factors such as: requirements, built-in test capabilities, the test-cases design, the test support environment, and the software process in which testing is conducted [48]. Regarding software requirements, the most significant cause of accidents due to software is linked to poorly created software requirements or requirements that are partially delivered to developers [49, 50]. Dos Santos et al. carried out a detailed analysis about software requirements testing approaches such as the requirement driven testing [51, 52].

Concerning autonomous driving, ISO 26262 only covers functional safety when a failure occurs but not when there is no system failure. That is why, the safety of the intended functionality (SOTIF) ISO 21448 came out [53, 54]. Some key topics to validate the software are focused on 3D Modeling and sensor buildings. The former aims to create a realistic environment while the latter consists of modeling and testing sensors among others [55]. Huang et al. detail in their research the main tendencies to validate software such as software testing, simulation testing, x-in-the-loop testing and driving test in real conditions [56]. Riedmaier et al. describe an important method to test the software: the scenario-based approach which allows individual traffic situations to be tested by using virtual simulations [57]. Other approaches such as formal verification, a function-based approach, real-world testing, shadow mode testing and traffic-simulation-based approach are used to test SOTIF. The main difference among them is that in the scenario-based and function-based approaches, a microscopic statement about the safety of the system is first made to be transferred to a macroscopic statement. The rest of the methods result directly in a macroscopic statement. There are solutions in the market which allow rapid prototype, MIL/SIL simulations, HIL simulations and real test drives [58].

Cybersecurity in the automotive industry involves three main factors to be considered such as authentication and access control, protection from external attacks and detection and incident response [59]. The factors which make the automotive security more efficient include integration of right solutions such as firewalls, protecting communications, authenticating communications and encrypting data [60, 61]. These topics are important to offer performance such as on-the-air software update and V2X communication [62, 63]. As detailed by McAfee, the scope of cybersecurity involves the distributed security architecture, hardware and software security and finally network security [62]. Standards such as ISO/SAE 21434 will help the automotive sector to implement solutions for effective compliance with cybersecurity requirements as today’s knowledge sharing is inadequate [64, 63] In this research, some topics linked to cybersecurity testing are analyzed.

2.3.2 Methods

2.3.2.1 Simulink Models

The SMs are composed of multiple complex Simulink® models and subsystems. Figure 2.9 shows an example of the internal structure of the SM linked to the NOx heating probes installed in vehicles. When the initial conditions are reached (key on, the engine rpm more than 650 rpm and the vehicle speed higher than a threshold), the engine ECU software checks whether the dew point is reached. This point is the temperature to which air must be cooled to become saturated with water vapor. Afterwards, the NOx probes start to heat until reaching the required temperature to measure NOx ppm present in the exhaust gas pipe. In this study, all models were transformed into models based on nodes (Sx) which represent different low-level system statesFootnote 1 and relations between them (Fig. 2.10). When designing test-cases, it must be determined which parts of the Simulink® model should be validated by using the HIL simulation or prototype vehicles and how inputs are tuned. Next section describes in-detail how GAs work to do this.

2.3.2.2 How GAs Work Together

Figure 2.11 depicts a pseudocode and a high-level description of the method. A model is implemented by using the Python code (Fig. 2.11) through the variable named ARCS which contains the cost and conditions to go from one state to another one. Next sections display how the conditions are specified. Once the model is made, the GAs are parametrized, and the range values of the input variables of the SM under validation and the constrained linked to the optimization problem are specified. The GA2 generates populations (inputs for the SM under validation). GA1 is used to assess and optimize the path with the lowest cost by doing operations such as mutation or crossover taking into account the population generated by GA2. The GA2 makes the population evolve in such a way that the cost calculated by GA1 is minimized.

2.3.2.3 GAs Description

-

a. GA in charge of tuning inputs

Once the model is implemented and set in the code, this generates populations. To do this, the range must be specified as well as the constrained among software variables linked to the optimization problem. The fitness function of this GA2 is the output of GA1 described later which was responsible for finding an optimal path given specific inputs. When the GAs are run, the results display the values of all inputs of the SM which cover the path requiring the minimum cost and the usage of HIL simulations.

-

b. GA in charge of choosing the most adequate means

Several key factors must be considered when deciding the most adequate means to validate the software such as the ones shown in Table 2.21 [42, 65].

All factors shown in Table 2.21 are assessed when going among states of the models (see Fig. 2.10). This process is composed of two phases:

-

Phase 1. A multidisciplinary team assesses these factors aiming at determining the cost of each path by using the process depicted in Fig. 2.12. As a result, a model with the whole cost set for each transition is obtained (Fig. 2.10).

Fig. 2.12 Factors indicated in Eq. (2.1)

-

Phase 2. This GA chooses the most adequate means to validate the SM by assessing the cost function given by Eq. (2.2):

$$ {\text{Fitness function}} = \mathop \sum \limits_{i = 1}^{i = n} S_{i} $$(2.2)where Si is the cost of reaching the Si state, \(\sum\nolimits_{i = 1}^{i = n} {S_{i} }\) is the cost linked to all transitions of a specific path. When the HIL is chosen, the fitness function is always lower than 150. Otherwise, prototype vehicles are employed as, in this case, the fitness function reaches 150 or more. The reader can check this by adding all Si values needed to reach S14.

Each path is composed of different states. The paths which contain state 17 more frequently are considered as the optimal ones to be validated by using an HIL simulation. Otherwise, it should be validated by using prototype vehicles. The test-engineer can collect important information when analyzing the states covered once the optimal path is assessed (dependency on other ECUs, feedback from other projects, etc.).

2.3.2.4 HIL Simulations

Once the GAs are parametrized and a model is built as shown in Fig. 2.10, the HIL simulation can be conducted. In addition to the cost value, the actions to be conducted on the HIL model for each transition must be coded (Fig. 2.13) as the software variables have to reach the values specified in the test-case. Several ways to set the conditions to pass from one state to another one can be used. The first entails writing the equations directly in the code, which is limited to simple SMs as fairly complex and high complex SMs involve many equations. The second option is to call the Simulink® model by using the test-case inputs to make the Simulink® model return the expected output values. In this study, the Simulink® models were transformed into dlls by following the steps described in the official Matlab® documentation. Figure 2.13 depicts the usage of dlls. They are necessary to conduct the validation process to find bugs due to SM interactions as it will be shown in the results and discussion sections.

2.3.2.5 Network and Software and Hardware Integration

This proposal validates the network and hardware and software integration by using the dlls as shown in Fig. 2.13. Once the software is coded, the software outputs must be equal or very close (if the outputs are analogical) to the values provided by the Simulink® models despite the SM interactions. This point is checked by using the dlls which allow comparing the HIL results when running a test-case with the outputs provided by dlls. The same explanation can be used for vehicles as the data acquisition can be injected into the Simulink® models, and both results can be compared.

Regarding the network, it is tested when using prototype vehicles in real conditions. Not all SMs implemented in the software exchange information with other ECUs. All these aspects are considered in Fig. 2.12 where the reader can find state S10 which assesses if the SM under validation has an impact on the network. Anyway, if an SM must be validated and prototype vehicles with all ECUs properly updated are not available (specially at the beginning of the project), HIL simulations are used considering that the frames are simulated by using a model (this situation is also considered in Fig. 2.12).

2.3.2.6 Traditional Techniques

The hereafter techniques were used in this research.

-

a. The cause-effect technique

One of the most used techniques in the automotive sector is the black-box technique [18]. The main idea behind this technique is to test software as a black box. In other words, the internal structure of the SM is not considered by the test-engineer who is focused on the software behavior. That is why this technique is also known as behavioral testing. When designing the test to be run, test engineers design test-cases and decide which means could be used according to their experience [18, 66, 67]. The cause-effect is a black-box technique widely employed in the automotive sector for several reasons (easy to automate among others). This technique is based on considering a series of conditions linked to inputs of the SM under validation, the test-engineer must check if the software runs as expected. To do this, the test-engineer performs a series of actions by using the means employed for validation (prototype vehicles or the HIL simulation) and, finally, verify the software behavior. This behavior is validated and assessed by using the outputs of the SM under validation. It must be reminded that the means used to validate it are chosen by considering the test-engineers’ experience when using this technique.

-

b. The model-based testing

It is a software testing technique consisting of deriving test-cases from a functional model which describes the functional aspects and requirements of the SM under validation. Thanks to this model, it is easier to assess the functional coverage as the number of functional states covered when validating an SM is known. When implementing it, all functional states and the transition from one state to another are indicated. In this research, Matelo® software was used to generate the functional model of SMs [68]. This software allows implementing a model easily. Regarding the activation of each transition, the conditions are set. In this study, each transition calls a Simulink® model to check the next state to be activated. Matelo® allows generating test-cases by assigning values to all variables used in transition in such a way that it tries to cover all possible transitions and paths. Finally, each state can be a model as it is the case in this research making the models extremely complex. Figure 2.14 sums up all aforementioned process explained. A test-case is generated and by using calls to Simulink® models, Matelo® determines which part of the model will be covered (Fig. 2.14 in orange). Many test-cases are generated to cover the whole model and to increase functional and code coverage.

2.3.2.7 Experimental Settings

The characteristics of SMs have an impact on three factors: the time needed to validate the software, the means used to run test-cases and the number of test-cases to be run considering the planning of the engine software development. According to the test-engineers’ experience and the technical documentation used for coding the software, the SMs were classified as simple, fairly complex and high complex SMs (Table 2.2).

Table 2.22 shows the way of generating test-cases. All techniques used the software and system requirements traced in DOORs, feedback from other projectsFootnote 2 and the Simulink® specifications as inputs. By analyzing all these input data, the test-engineers build models when using GAs and the model-based testing. Finally, test-cases are implemented automatically or manually. As described later, the test-engineers’ skills have a significant impact on the time needed to implement test-cases and to obtain a productivity gain.

2.3.3 Results

This section compares the performance among GAs and traditional techniques by using the KPIs indicated in Table 2.23.

2.3.3.1 Code Coverage

During HIL simulations, a bug is detected if the difference between the HIL results and the outputs provided by the Simulink® models does not obey Eq. (2.3).

where \({\text{HIL}}_{j}\) is the value for the output j of the SM under validation after having run a test-case by using an HIL simulation and \({\text{Simulink}}_{j}\) is the value for the output j of the SM under validation after having run a test-case by using the Simulink® model.

The coverage is assessed by using Eq. (2.4) which relates to the number of Simulink® blocks tested versus the total number of blocks presented in the specifications of the SM under validation.

Table 2.24 shows the number of blocks present in the SMs validated in this research, which is used to assess the code coverage (Table 2.25).

As the cause-effect technique does not use models, the code coverage is lower than the one obtained when using the model-based testing and GAs. Building a model in which each state is a Simulink® block allows testing the same functional state by following different branches of the Simulink® model (Fig. 2.15). The model-based testing does not allow tuning the inputs of the SM with the aim of choosing the best means (an HIL simulation or vehicles) to validate an SM contrary to GAs. In addition, this technique needs to define test-cases as inputs and expected outputs. In case of a problem with the automation process due to SM interactions, the expected outputs could be no longer valid. This problem is solved by GAs and dlls. Regarding GAs, the code coverage is the addition of the code coverage when using HIL simulation and prototype vehicles. GAs perform better as they can cover more Simulink® blocks providing that the right means are used.

Code coverage should be at least 90% to meet standards. The validation process of an engine ECU is the combination of the software validation performed by the validation team (topic considered in this research), the tuning activities and the driving tests which consist of making 6 vehicles cover 20,000 km each to test the software in real conditions. The total code and functional coverage are assessed considering these three activities. No technique can reach 100% coverage due to several reasons such as project planning constraints. As proved later, validating by choosing the wrong means increases the validation time.

2.3.3.2 Functional Coverage

Table 2.26 shows the functional states linked to the Simulink® blocks present in the SM chosen. The number of functional states can be lower than the number of Simulink® blocks as some outputs of the SM can be activated by using several paths without any impacts on the functional state of the vehicle (Fig. 2.15).

Table 2.27 shows the results obtained for each technique. These results are logical as the higher the code coverage is, the higher the functional coverage is. The standard percentage of validation (90%) is reached thanks to tuning, validation and test-driving activities.

2.3.3.3 Automation

For several reasons, the automation process is difficult to be performed when it comes to engine ECU software due to SM interactions. Firstly, reaching the values for inputs of the SM under validation is difficult as the complexity of the SM increases. Secondly, if inputs do not reach the expected values, the values of the outputs of the SMs under validation will be no longer valid (Fig. 2.16).

Test-cases can be fully automated, partially automated or can be run manually. In this research, GAs were run by using the tester-in-the-loop and fully automated options. The success rate of reaching the values indicated in the test-case is shown in Fig. 2.17.

2.3.3.4 Bugs

2.3.3.4.1 Types of Bugs

Generally, all techniques detect the same types of bugs linked to Simulink® blocks. Some examples of Simulink® blocks where a bug was found are shown in Table 2.28 and Fig. 2.18. Some types of bugs linked to multiple calculations such as temperature or gas speed estimators can only be detected when using HIL simulations combined with dlls. Figure 2.19 depicts the obtained result for a software variable output of an SM when running the software by using an HIL simulation (in red) and its expected value (in blue). The error between the red and blue lines, represents an inaccuracy regarding the calculation of the gas speed in the exhaust pipe, which impacts the amount of urea injected to treat NOx. Since this bug does not imply the presence of a functional bug unless it causes a malfunction detected by the driver, it is not detected by using the cause-effect technique or the model-based testing one. Only GAs combined with Simulink® model can detect it.

2.3.3.4.2 Number of Bugs

The results are shown in Fig. 2.20. GAs overperform the rest of the techniques used in this study because Simulink® blocks are used as shown in Fig. 2.13. Regarding the model-based testing, the fact of using models ensures better results than the cause-effect technique. Finally, the cause-effect technique performs least efficiently as no model is used. The result is that it is extremely difficult to establish both the code and functional coverage.

2.3.4 Discussion

2.3.4.1 Test-Case Formulation

Several challenges must be considered when designing test-cases.

-

a.

The engine ECU software consists of SMs composed of an important number of inputs and outputs which are usually analogical. Consequently, their values range between specific intervals. When running test-cases, it is difficult to reach values contained in the variable range. For example, a variable representing the soot present in the diesel particulate filter can take a value of 40 g.

-

b.

Considering the number of variables of SMs and their ranges, it is not possible to generate and run all test-cases which could cover the whole combination of the spectrum. In some occasions, when a variable takes a value close to its upper limit, the test-case can fail. However, if values are not close to this value, the test-case provides the expected results. That is why, at least during the validation process, the functional model must be covered with different test-cases which take different combinations.

-

c.

Constraints must be considered to avoid generating uncoherent test-cases (for example speed = 100 km/h and first gear engaged).

-

d.

When running a test-case by using automation processes, it is not possible to obtain the exact values indicated in the test-case due to SM interactions. Thus, the expected outputs specified in the test-case might be no longer valid. Therefore, the traditional formulation of test-cases based on input and expected output values cannot be used in simulations. Dlls allows solving this technical issue as depicted in Fig. 2.5. Thanks to them, it is always possible to assess Eq. (2.2) as they can provide the output values for the input ones reached during the HIL simulation. Therefore, GAs can check if software runs as expected by comparing the HIL results and the Simulink® models results.

2.3.4.2 Test-Cases Automation

Python scripts for automating the process must keep the inputs of the SM in a specific range. Otherwise, the expected output of the test-case may be no longer valid (Fig. 2.16). Regarding fairly complex SMs, as the number of variables present in SMs is high, it is recommended to use the tester-in-the-loop. High complex SMs have many functional states linked to the number of kms covered (example oil dilution rate). Consequently, reaching a functional state is not difficult and test-cases can be fully automated.

GAs allow testing most of the SMs present in the engine ECU software except for:

-

a.

Estimators. There are SMs responsible for predicting temperature and other magnitude trends of certain components, which involve many calculations. The easiest way to test these SMs is to perform data acquisition by using prototype vehicles and, then, the obtained.dat file is injected into the Simulink® model. The difference between the data acquisition and the Simulink® outputs is expected to be close to zero.

-

b.

Networks. The most important network in cars is the CAN (Controller Area Network). In these cases, the testers have to verify if frames are transmitted and received properly, how the engine ECU reacts when receiving an invalid value or an absent frame, etc. This statement can be applied to other types of networks. It is easier to validate networks by using the HIL simulations than using prototype vehicles.

-

c.

SMs which are not modeled by using Simulink®. Dlls must be used if GAs are applied. Not all SMs of the engine ECU software have a specification based on Simulink® model. Consequently, GAs cannot be applied to any SMs. However, only 7% of the SMs did not have Simulink® models.

Certain high complex SMs need to cover many kilometers to reach the specific operating point indicated in the test-case. When validating the software, GAs cannot be used as the number of generated populations is not compatible with the project planning. In these cases, the cause-effect technique is recommended to reduce the validation time. Anyway, these SMs can be validated by using GAs if the calibration dataset is modified in the same way as it is done in this study.

2.3.4.3 Means Used to Validate

Using the most adequate means to validate is an essential topic as:

-

a.

The difficulty to reach an operation point depends on the means used to validate. It is easier to use test failures on a probe by using the HIL model than by using a prototype vehicle. If the wrong means is chosen, many attempts are required to run the test-case properly.

-

b.

The chances to find more bugs than by using other techniques are increased as the validation time is reduced. Consequently, test-engineers have time to run more test-cases than other techniques. Thus, the code and functional coverage are increased. In addition, implementing a model by using the model-based testing and GAs reduces redundancies in test-cases.

-

c.

The productivity gain obtained thanks to GAs has an important impact on software quality. As shown in Fig. 2.21, if software version A is validated with some delay (weeks 17 and 18), after the specifications for software B are sent to the supplier (week 16) in charge of coding the software, the software version B is delivered with bugs found in weeks 17 and 18, which may be blocking points. Therefore, the software version B could be not usable.

-

d.

The test-engineers establish the best means according to their experience when using the model-based testing and the black-box technique. Regarding GAs, a multidisciplinary team sets the cost of the functional model.

2.3.5 Conclusions

Engine electronic control unit (ECU) software is one of the most complex software which is in charge of controlling the engine as well as other systems such as exhaust after-treatment systems. Among the main issues that test engineers can face is how to choose the best means to validate (HIL simulations or prototype vehicles) as well as design test-cases which are representative enough.

This research uses two GAs to establish the best means to validate SMs and to generate test-cases in which the expected outputs are no longer needed thanks to the usage of Simulink® models used to develop the engine ECU software with the aim of improving code and functional coverage, software bugs, test-case automation capacity and productivity. The obtained results were compared with the ones got by using traditional techniques such as the model-based testing or cause-effect ones.

The results obtained in this research show that GAs can find similar results for simple SMs and high complex ones. However, when it comes to fairly complex ones (the ones that are more present in the engine ECU software), GAs perform better than the other techniques as at least 7 more bugs were found. When it comes to functional and code coverage GAs perform better. When it comes to functional coverage, GAs improve up to 11% in fairly complex SMs and 8.4% for high complex SMs when using the cause-effect technique. When it comes to the model-based testing technique, GAs improve up to 4% in fairly complex SMs and 3% for high complex SMs. The code coverage is also improved by GAs reaching 12.8% and 7% for fairly complex and high complex SMs respectively when using the cause-effect technique. When using the model-based testing, GAs perform better up to 7.1% and 1.4% for fairly complex and high complex SMs respectively.

Another advantage of using GAs is that they can detect all types of bugs thanks to the usage of Simulink® models contrary to other techniques such as the model-based testing and the cause-effect ones.

The implementation time is compatible with an engine project planning as shown in this research.

2.4 Application of Rule-Based Expert Systems in Hardware-In-The-Loop Simulation. Case-Study: Software and Performance Validation of an Engine Control Unit

Extracted form Journal of Software: Evolution and Process. 2020, Volume 32, Issue 1. https://doi.org/10.1002/smr.2223, https://onlinelibrary.wiley.com/journal/20477481.

2.4.1 Introduction

2.4.1.1 Background

Innovative techniques to validate software are needed to reduce cost and increase software quality.

This research aims to check if two rule-based EXs combined with dlls perform better than other techniques widely employed in the automotive sector when validating the engine control unit (ECU) software by using a HIL simulation.

To perform this research fifteen SMs of different complexity were chosen to be validated in an HIL simulation by using different techniques such as the manual execution, the tester-in-the-loop, the model-based testing, a rule-based EX and the combination of two EXs to establish the code and functional coverage, the productivity gain, the number of bugs found, potential limitations of each technique and the success rate of the HIL simulation. The test-cases used are described in-depth in the method section.

The enhancement, that dlls and EXs offer, depends on the number of states in the functional model used in the EXs and the number of subintervals in which the SM inputs can be divided. A range between 6 and 16 more bugs can be detected when using two EXs. The HIL enhancement can reach 6%, 16.8% and 18% depending on the SM complexity.

2.4.1.2 Engine ECU Software

The electronic architecture of today's vehicles is extremely complex. As a result, the number of ECUs present in vehicles is increasingly high [1, 2]. This trend will continue in the next years, thanks to driving assistance systems, which are essential for autonomous cars. ECUs are composed of hardware and software whose complexity depends on the function carried out in the network. Therefore there are multiple software running simultaneously and coexisting in a commercial car [5, 35]. This fact forces manufacturers to improve the software quality and the validation processes [3]. In addition, it is not difficult to find estimates that indicate that the total number of lines of code present in the software ECUs of a vehicle can reach up to more than 100 million. In the future, these figures will even grow significantly up to 200 or 300 million in autonomous vehicles.

Powertrain control is a system in charge of transforming the driver's will into an operating point of the powertrain according to the performance established for the product (eg, consumption and emissions) [69]. The key element of the control system is the engine ECU composed of complex hardware and software. The hardware is responsible for getting information from sensors after a filtering process to reduce noise in signals. The software processes all data received and handles actuators to reach the operating point. In addition, when a vehicle is in motion, the engine ECU (hardware and software) interacts with other ECUs to ensure the proper functioning of the car. This implies that each ECU should receive the information at a specific time. Therefore, the engine ECU (hardware and software) must be validated to assure that engine is properly controlled, the interaction with the rest of the ECUs is rightly performed, and the passengers' safety is insured. Otherwise, some failures could occur and lead to the situation in which the vehicle stalls. This fact makes the most safety critical parts of the software a hard‐real‐time (HRT) system. In other words, the system is subjected to real‐time constraints in which every critical task must be executed at a specific deadline to ensure the correct operation of the system. Thus, one can deduce that the software validation process is complex and needs improvements with the aim of reducing costs, increasing productivity and reliability in the automotive sector.

This chapter is focused on the engine ECU software validation (one of the most complex software present in a vehicle) and shows solutions to the main difficulties associated with traditional software validation techniques. The solution proposed is showing that two EXs working in cooperation and combined with dynamic‐link libraries (dlls) perform better than traditional techniques such as the model‐based testing or tester‐in‐the‐loop among others.

2.4.1.3 Techniques Currently Used

The engine ECU software validation is based on HIL simulation, combined with different techniques for generating test cases. Three key stages must be considered when performing an HIL simulation: test‐case generation, test‐case execution, and validation of the execution results.

One can find different definitions for the black box concept such as “the black-box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings” [70, 71]. Among others, there are three types of techniques used when applying the black-box one:

-

a. Equivalence portioning

The inputs of the SM under validation are divided into partitions, and after having selected representative values for each partition, the test case is conducted. Then the software behavior is analyzed. The model‐based testing can be defined as the automatic generation of software test procedures, using models of system requirements and behavior. To do this, a functional model must be implemented. This technique may be considered in this research as an equivalence portioning technique in the black‐box testing. Because test cases are derived from functional models and not from the source code, the model‐based testing is usually seen as one form of the black‐box testing. The main advantage of this functional model is that all functional states and the transition from one state to another are indicated. Thanks to this, it is easier to assess the functional coverage as the number of states covered when validating an SM is known.

The EX combined with dlls consists of using an EX to assess if the software behaves as expected. The EX is built by using rules coming from the specifications and software requirements. The dlls are the Simulink model of the SM under validation that allows calculating the software outputs when performing the HIL simulation despite the SM interactions. This topic is analyzed in‐depth in this research. The authors have considered this technique as an equivalence portioning one as it is exposed in this chapter.

-

b. Boundary value analysis

Boundary values for the SM inputs are determined and the test-case obtained is performed. Then the software behavior is analyzed.

-

c. Cause-effect technique

In the automotive sector, the test engineer usually has to validate cause‐effect test cases that come from the software requirements. As a result, given a series of specific causes (conditions related to inputs), the validation process has to check the effect (software behavior). An example of a possible test case could be: “In case of an ESP frame is absent, the stop and start function must be inhibited.” The tester‐in‐the‐loop, the manual execution, or automated can be considered as cause‐effect techniques in this research.

All techniques that may be used to validate the engine ECU software have to face several issues such as the SM interactions that prevent reaching the values established in the test case, the type of bugs that can be found, and the problem of enhancing the code and functional coverage. Considering that the engine ECU software has up to 70 complex SMs, the interaction between SMs is continuously present and disturbs the validation process such as electronic noise. Consequently, given a test case, it is almost impossible to make the inputs reach the desired value. The main consequence is that the expected output set in the test case could be no longer available.

Some types of bugs are extremely difficult to detect by using HIL simulation unless a technician uses a significant amount of time to analyze the data acquisition. Figure 2.22 shows an example, the obtained result for an output for a variable of an SM when running the software in an HIL simulation (in red) and its expected value (in blue). As one can see, the results are different. This error represents an inaccuracy when it comes to calculating the gas speed in the exhaust pipe. This error could impact the amount of urea injected to treat NOx. Because this bug is not linked to a functional bug, it is impossible to detect it by using the black‐box technique. The detection of this type of bug involves checking and detailed analysis of the software code by running additional software.

Considering all aforementioned, the main limitations associated with these techniques currently used in the automotive sector when using the HIL simulation are depicted in Table 2.29. The aim of this research is to solve all these limitations by using two EXs working in cooperation combined with dlls. The fact of using two EXs allows improving the code and functional coverage and gaining a better control of the automation process, thanks to dlls. It also provides an opportunity to detect any type of bugs.

2.4.1.4 Related Works

The engine ECU software validation is based on HIL simulation. Several stages must be considered when performing an HIL simulation such as test‐case generation and test‐case execution.

A test-case consists of a set of inputs and their expected outputs that the software should provide when working properly. In an HIL simulation, a test case is run, and the obtained result is compared with the expected one to check whether the software has operated properly for this specific test case [73,74,74]. There are many different ways to generate a test case, such as assigning specific values to all inputs of the SMs under validation to cover a functional model, as exposed later in this research, or assessing the software performance when checking each software requirement [76,77,78,79,79]. The former is very difficult to implement owing to SM interactions, as it will be discussed in this paper. The aim of this method is to make the inputs reach specific values and check the outputs. The latter is widely used because the inputs of SMs do not need to reach exact values but approximate ones to check the software performance. As a result, it is more flexible.