Abstract

In recent years, the growth of social media use around the world has strongly contributed to the proliferation of misleading information and fake news on the social web. The ability of users to share any content has amplified this issue and made the automatic detection of fake news a crucial need. In this work, we focus on the Tunisian social web and address the automatic detection of fake news on Tunisian social media platforms. We propose a hybrid detection approach that combines content-based and social-based features and uses an RNN-based model. To implement the proposed approach, we conduct an exploratory study of several RNN variants: simple LSTM, Bi-LSTM, Deep LSTM and Deep Bi-LSTM. The obtained results are also compared with those of experiments we conducted using an SVM-based model.

Keywords

- Fake News Detection

- Tunisian Social Web

- Deep Learning

- Recurrent Neural Network

- LSTM

- Machine Learning

- SVM

- Natural Language Processing

- Text Mining

1 Introduction

Fake news (FN) is not a new phenomenon and already has a long history dating back to the 18th century [1], but it is clear that it has taken on greatly increasing importance over the past few years. The term Fake News, being very widely used in the 2016 US presidential elections, was selected as the international word of that year.

As simple and self-explanatory as it may seem, Fake News has no universal definition and is usually connected to several related terms and concepts, such as maliciously false news, false news, satire news, disinformation, misinformation and rumor [2]. According to Zhou and Zafarani [2], Fake News can be defined broadly as false news, focusing on the news authenticity regardless of its intention, or more narrowly as news that is intentionally false and intended to mislead readers.

Over the last few years, interest in the Fake News phenomenon has considerably increased. This is mainly due to the massive use of the Internet and, more precisely, of social networks. These applications allow users to freely share and spread any content. However, as users’ intentions are not monitored, this freedom has increasingly made these sites a source of misinformation and fake news [3]. Troude-Chastened [4] distinguishes three categories of web sources issuing fake news: satire websites, whose objective is humor; conspiratorial sites, which sometimes take the form of clickbait sites aiming to generate advertising revenue; and Fake News websites, which disseminate rumors and hoaxes of all kinds.

Since humans are considered “poor lie detectors” [5], and given the large amount of news generated on the web and spread over social networks, automatic Fake News detection has become a crucial need and an active research field. Many analysis and detection approaches have been proposed for Fake News, including fact-checking and various classification techniques.

In this work, we are mainly concerned with the case of the Tunisian social web. According to the latest statistics given in [6], the number of social media users in Tunisia reached 8.2 million in January 2021, which corresponds to 69% of the Tunisian population, among which 68.8% are active Facebook users. As a result, social networks, and Facebook in particular, have become a very popular source of news for Tunisian people. However, this has also led to the proliferation of fake accounts, fake pages and fake news on the Tunisian social web. In particular, false political information spreads very quickly and is increasingly becoming a means of discrediting opponents, spreading unfounded rumors or diverting information. As a result, some initiatives are beginning to emerge to fight fake news on the Tunisian social web. They essentially consist of a few fact-checking platforms, among which we can cite “Birrasmi.tn”Footnote 1, “BN Check”Footnote 2, “Tunisia Checknews”Footnote 3, etc. However, there is, to date, no rigorous study of the audience perception of fact-checking in the Tunisian context [7], and the actions taken against Fake News on the Tunisian social web remain limited.

As for the automatic detection of Fake News, to our knowledge no published work has yet tackled the case of Tunisian social media, whose specificities make automatic FN detection a complex and challenging task. Indeed, user-generated content shared on social media in Tunisia is informal and multilingual, may use both the Latin and the Arabic scripts for writing Arabic and dialectal content, and often includes code-switching [8].

In this work, we propose a deep learning-based approach for detecting Fake News on the Tunisian social web, using Recurrent Neural Network (RNN) models. It takes into consideration the specificities of Tunisian social web content and explores different feature combinations: content-based features (news title and text) and social-based features (users’ comments and reactions). To implement the proposed approach, we conducted an exploratory study of several RNN variants: simple Long Short-Term Memory network (LSTM), Bi-LSTM, Deep LSTM and Deep Bi-LSTM. The obtained results are also compared with those of experiments we conducted using an SVM-based model.

The work carried out is described in this paper, which is organized as follows: in Sect. 2, we provide a brief literature review, related to the issue of the automatic FN detection, focusing in particular, on the related work dealing with the Arabic language. Section 3 is dedicated to describing the proposed hybrid approach for the automatic fake news detection. The conducted experiments and the obtained results are presented and discussed in Sect. 4. Finally, our work is summarized in the conclusion section and future work is presented.

2 Related Work

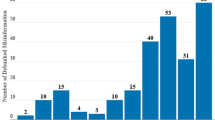

The automatic detection of fake news has attracted the interest of many researchers in the last few years. The study presented in [9] shows a significant upward trend in the number of publications dealing with rumor detection from 2015, as well as a surge in the number of publications on fake news detection from 2017. A study of the literature allows us to distinguish three main approaches to FN detection: content-based approaches, social-based approaches, and hybrid approaches combining content and social features.

Content-based FN detection approaches mainly rely on the news content features [9, 10]. They consider linguistic and visual information extracted from the news content, such as text, images or videos. Some studies investigated combining textual and non-textual content features [11]. However, in most studies, textual characteristics have been the most investigated, by exploiting NLP techniques and tools for analyzing the textual content at different language levels: lexical, syntactic, semantic and discourse [2]. In [4], FN detection models were built by combining different sets of features, such as N-grams, punctuation, psycholinguistic features, readability and syntax, and experiments were conducted using a linear SVM classifier. Linguistic features have also been frequently considered, as in [12], which experimented with an SVM model using linguistic features related to Absurdity, Humor, Grammar, Negative Affect and Punctuation.

Social-based FN detection approaches, also referred to as context-based approaches in [9, 10] or network-based approaches in [13], mainly rely on information extracted around the news, such as the news source, its propagation, the features of users producing or sharing the news, other social media users’ interactions with the news, etc. We can cite in this respect the work in [14], which showed that differences in the way messages propagate on Twitter can be helpful in analyzing news credibility. In [3], a classification of Facebook posts as hoaxes or non-hoaxes was proposed. The classification was based on users’ reactions to the news, using Logistic Regression and Boolean Label Crowdsourcing as classification techniques. The authors of [15] consider the news propagation network on social media and investigate, in particular, hierarchical news propagation networks for FN detection. They show that propagation network features, from structural, temporal and linguistic perspectives, can be exploited to detect fake news. A model for the early detection of fake news through the classification of news propagation paths was proposed in [16]. In this model, a news propagation path is represented as a multivariate time series, and a time series classifier incorporating RNN and CNN networks is used.

Several works have combined content-based and social-based features in order to improve the performance of FN detection. The authors of [17] show that RNN and CNN models trained on tweet content and social network interactions outperform lexical models. We can also mention the work in [18], which considered a combination of content signals, using a Logistic Regression model, and social signals, using Harmonic Boolean Label Crowdsourcing. In [19], a model called CSI is proposed. It uses an RNN model and combines characteristics of the news text, the user activity on the news and the source users promoting it.

It should be noted that, with regard to Arabic FN detection, recent works are beginning to emerge but remain relatively few in number. Among these works, we can cite [20], whose authors collected a corpus from YouTube for detecting rumors. They focused in particular on proven fake news concerning the death of three Arab celebrities. Machine learning classifiers (SVM, Decision Trees and Multinomial Naïve Bayes) were then proposed for detecting rumors. In order to detect check-worthy tweets on a given topic, the authors of [21] experimented with traditional ML classifiers (SVM, Logistic Regression and Random Forest) using both social and content-based features, and with a multilingual BERT (mBERT) pre-trained language model. They showed that the mBERT model achieved better performance. These models were trained on a dataset including three topics, each having 500 annotated Arabic tweets, and were tested on a dataset including twelve topics with 500 Arabic tweets. The authors of [22] addressed the problem of detecting fake news surrounding COVID-19 in Arabic tweets. They manually annotated a dataset of tweets that were collected using trending hashtags related to COVID-19 from January to August 2020. This dataset was used to train a set of machine learning classifiers (Naïve Bayes, LR, SVM, MLP, RF and XGB). The authors of [23] presented a manually annotated Arabic dataset for misinformation detection, called ArCOV19-Rumors, which covers tweets spreading claims related to COVID-19 during the period from January to April 2020. It contains 138 verified COVID-19 claims and 9.4K labeled tweets related to these claims, with their propagation networks. Results on detecting the veracity of tweets were also presented, using a set of classification models (Bi-GCN, RNN+CNN, AraBERT, MARBERT) applied to a dataset of 1108 tweets.

As we can see, the lack of Arabic FN datasets remains a difficulty to overcome and most of the examined works were confronted with a stage of building a dataset in order to be able to propose an approach of Arabic FN detection. Regarding the case of Tunisian social media in particular, we can notice that no work addressing the automatic FN detection has yet been published to date. In this work, we propose a first exploratory study that addresses this issue, considering the case of the Tunisian social web and its specificities.

3 Proposed Fake News Detection Approach

In this work, we consider the FN detection task as a binary classification aiming to predict whether a given posted news is fake or not. We propose a hybrid classification approach that combines content-based and social-based features. This approach takes into consideration the Tunisian social web specificities and explores different features combinations:

- Content-based features. We mainly consider two textual contents characterizing a news item, namely its title and its body;

- Social-based features. In our approach, we propose to consider two categories of social signals. First, users’ comments on social media about posted news, as they may carry users’ behaviors and opinions about the news and may thus be a very informative source about its veracity. Second, users’ reactions to the news post, whose effectiveness in predicting the veracity of the news we investigate. Reactions allow users to express themselves easily, and this ease of reacting may make this type of information more available than comments. In our case, we consider the six types of reactions provided by Facebook: “like”, “love”, “haha”, “wow”, “sad” and “angry”.

In order to implement our approach, we built a corpus of fake news in Arabic, extracted from Tunisian pages on Facebook. This corpus is described in the next sub-section. Using the collected data, we propose a deep learning FN detection model based on Recurrent Neural Networks, which are known to perform well on textual inputs. In particular, we adopt LSTM networks for the binary classification of Fake News. For this purpose, we conduct an exploratory study of several variants of this model: simple LSTM, Bi-LSTM, Deep LSTM and Deep Bi-LSTM. As our Fake News corpus has a limited size, our exploratory study of the LSTM-based approach also includes a comparison with a traditional machine learning model: we built an SVM-based model and compared the obtained results.

3.1 Dataset Construction

As no Tunisian fake news dataset is available, we built a dataset that we collected from Facebook, using the tool FacepagerFootnote 4 (a tool for fetching public data from social networks). The first step in our data collection was to select the pages from which posts containing fake and true news would be extracted. We considered the pages of the Mosaique FM and Jawhara FM radio stations as trustworthy. As for the pages that we considered to be sources of Fake News, we identified them from the fact-checking platforms (cited in Sect. 1), which indicate the source page that posted a news item checked as fake. We mainly collected a set of fake news (after verifying that they were fake) from these Facebook pages:

(“Laugh to tears”), (“El Mdhilla News”), “Capital News”, “Asslema Tunisie” (“Hello Tunisia”), (“Tunisians around the world”).

The posts we collected relate to different domains, such as social subjects, sports, politics and news about the COVID-19 pandemic. The obtained dataset contains 350 fake news items and 350 legitimate news items. It provides, for each posted news item:

- its textual title content,

- its textual body,

- the textual comments related to the post,

- the total number of users’ reactions,

- the number of “like” reactions,

- the number of “love” reactions,

- the number of “haha” reactions,

- the number of “wow” reactions,

- the number of “sad” reactions,

- the number of “angry” reactions.

The collected news items are written in MSA (Modern Standard Arabic); users’ comments, however, are written in Arabic, French or the Tunisian dialect. As mentioned in Sect. 1, some of them are multilingual and may contain code-switching.
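As an illustration of the record structure listed above, the following minimal sketch models one dataset entry. The class name `NewsPost`, its field names and the sample values are hypothetical and are not the authors' actual schema; the total number of reactions is derived here from the six per-type counts rather than stored separately.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The six Facebook reaction types listed in the paper.
REACTION_TYPES = ("like", "love", "haha", "wow", "sad", "angry")


@dataclass
class NewsPost:
    """One dataset entry; names are illustrative, not the authors' schema."""
    title: str
    body: str
    comments: List[str] = field(default_factory=list)
    reactions: Dict[str, int] = field(default_factory=dict)
    is_fake: bool = False

    def total_reactions(self) -> int:
        """Sum of the six per-type reaction counts."""
        return sum(self.reactions.get(r, 0) for r in REACTION_TYPES)

    def reaction_shares(self) -> List[float]:
        """Share of each reaction type, in REACTION_TYPES order."""
        total = self.total_reactions()
        if total == 0:
            return [0.0] * len(REACTION_TYPES)
        return [self.reactions.get(r, 0) / total for r in REACTION_TYPES]


# Placeholder values, not taken from the corpus.
post = NewsPost(
    title="example title",
    body="example body",
    comments=["first comment", "second comment"],
    reactions={"like": 6, "haha": 3, "angry": 1},
    is_fake=True,
)
shares = post.reaction_shares()
```

Expressing reactions as shares rather than raw counts is one possible way to make posts from pages of very different audience sizes comparable.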

3.2 Classification models

As mentioned above, we implemented the proposed approach, combining both content-based and social-based features, using several variants of LSTM neural networks for the FN binary classification. The experimented classification models are:

- LSTM model. Long Short-Term Memory networks [24] are a special kind of Recurrent Neural Network, characterized by their ability to learn long-term dependencies by remembering information for long periods. The standard LSTM architecture consists of a cell and three gates, also called “controllers”: an Input gate that decides whether the input should modify the cell value; a Forget gate that decides whether to reset the cell content; and an Output gate that decides whether the contents of the cell should influence the neuron output. These three gates act as valves driven by a sigmoid function.

- Bi-LSTM model. Bidirectional LSTM is an extension of the LSTM model in which two LSTM models are trained and combined, so that the input sequence information is learned in both directions: forward and backward. The outputs of these two LSTM layers can be combined in several ways (such as average, sum, multiplication or concatenation).

- Deep LSTM model. A deep (or stacked) LSTM network [25] is an extension of the original LSTM model. Instead of having a single hidden LSTM layer, it has multiple hidden layers, each containing multiple memory cells. The additional layers make the model deeper and add levels of abstraction over the input observations over time.

- Deep Bi-LSTM model. It is an LSTM variant based on multiple Bi-LSTM hidden layers.

- SVM model. The basic idea of Support Vector Machines [26] is to find a hyperplane separating the data to be classified, so that the data belonging to each class lie on the same side of this hyperplane. This hyperplane must maximize the margin separating it from the nearest data samples, called support vectors. SVMs are known to be well suited for textual data classification [27, 28]. The author of [28] points out that their learning capacity is independent of the dimensionality of the feature space, which makes them suitable for text classification, generally characterized by a high-dimensional input space.
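The gating mechanism and the bidirectional and stacked variants described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the authors' implementation: the helper names (`init_params`, `lstm_step`, `run_lstm`, `run_bilstm`), the random untrained weights, the layer sizes and the choice of concatenation to merge the two directions are all hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(W: Matrix, v: Vector, b: Vector) -> Vector:
    """Return W @ v + b for plain Python lists."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def init_params(input_size: int, hidden_size: int, seed: int) -> dict:
    """Random (untrained) weights for the three gates and the candidate value."""
    rng = random.Random(seed)
    cols = input_size + hidden_size
    params = {}
    for name in ("i", "f", "o", "g"):
        params["W" + name] = [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                              for _ in range(hidden_size)]
        params["b" + name] = [0.0] * hidden_size
    return params


def lstm_step(x: Vector, h: Vector, c: Vector, p: dict) -> Tuple[Vector, Vector]:
    """One time step: the gates decide what to write, keep and expose."""
    z = x + h  # concatenate current input and previous hidden state
    i = [sigmoid(a) for a in affine(p["Wi"], z, p["bi"])]    # input gate
    f = [sigmoid(a) for a in affine(p["Wf"], z, p["bf"])]    # forget gate
    o = [sigmoid(a) for a in affine(p["Wo"], z, p["bo"])]    # output gate
    g = [math.tanh(a) for a in affine(p["Wg"], z, p["bg"])]  # candidate value
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_lstm(seq: List[Vector], p: dict, hidden_size: int) -> List[Vector]:
    """Run over a sequence and return the hidden state at every step."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    outputs = []
    for x in seq:
        h, c = lstm_step(x, h, c, p)
        outputs.append(h)
    return outputs


def run_bilstm(seq: List[Vector], p_fwd: dict, p_bwd: dict,
               hidden_size: int) -> List[Vector]:
    """Bidirectional pass; the two directions are merged by concatenation."""
    fwd = run_lstm(seq, p_fwd, hidden_size)
    bwd = run_lstm(seq[::-1], p_bwd, hidden_size)[::-1]
    return [hf + hb for hf, hb in zip(fwd, bwd)]


# Toy sequence of three 2-dimensional inputs, hidden size 3.
sequence = [[0.1, 0.2], [0.3, -0.1], [-0.2, 0.4]]
layer1 = run_bilstm(sequence, init_params(2, 3, seed=0),
                    init_params(2, 3, seed=1), 3)
# A deep Bi-LSTM feeds the first layer's outputs (size 6) to a second Bi-LSTM.
layer2 = run_bilstm(layer1, init_params(6, 3, seed=2),
                    init_params(6, 3, seed=3), 3)
```

Calling `run_lstm` alone corresponds to the simple LSTM, and feeding its outputs into a second `run_lstm` call corresponds to the deep (stacked) LSTM; in practice a deep learning framework would provide trained versions of these layers.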

3.3 Text Representation

The various LSTM-based models we have implemented, incorporate a word embeddings layer, which is their first hidden layer. It encodes the input words as real-valued vectors in a high dimensional space. Word embeddings are learned from the training textual dataset, based on their surrounding words in the text. Being part of the model, this embedding layer is learned jointly with the proposed model.

As for the SVM-based model, we simply used a TF-IDF (Term Frequency-Inverse Document Frequency) vectorization of the textual data.
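The paper does not specify which TF-IDF variant or library was used. The following minimal sketch, assuming the textbook definition (term frequency normalized by document length, and idf = log(N / df)), shows how tokenized documents become sparse weighted vectors; the function name `tfidf_vectorize` and the toy tokens are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectorize(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Return one {term: weight} map per tokenized document.

    Uses tf = count / document length and idf = log(N / df). Libraries
    typically apply smoothed variants, so their numbers will differ.
    """
    n_docs = len(docs)
    doc_freq = Counter()
    for tokens in docs:
        doc_freq.update(set(tokens))  # count each term once per document

    vectors = []
    for tokens in docs:
        counts = Counter(tokens)
        length = len(tokens) or 1  # guard against empty documents
        vectors.append({
            term: (count / length) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Toy, already-tokenized documents (placeholders, not taken from the corpus).
docs = [
    ["breaking", "news", "shock"],
    ["official", "news", "report"],
]
vectors = tfidf_vectorize(docs)
```

With this unsmoothed definition, a term that occurs in every document (here "news") receives a weight of zero, while terms specific to one document receive positive weights.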

4 Experiments and Results

For all the conducted experiments, we used the dataset we collected from Facebook, as described in Sect. 3.1. In order to evaluate the effectiveness of the proposed models with different feature combinations, we randomly assigned 80% of the dataset samples to the training process and 20% to testing the models. Table 1 presents the results obtained with several textual feature combinations as input, before adding the users’ reactions, while Table 2 shows the results obtained after adding reactions. The results given in Tables 1 and 2 represent the accuracy of the implemented classification models.
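The evaluation protocol described above (a random 80/20 split, with accuracy as the metric) can be sketched as follows. The function names, the fixed seed and the synthetic samples are assumptions made for illustration; the paper does not state how the split was seeded or whether it was stratified.

```python
import random
from typing import List, Sequence, Tuple


def train_test_split(items: Sequence, test_ratio: float = 0.2,
                     seed: int = 42) -> Tuple[List, List]:
    """Shuffle a copy of the items and hold out test_ratio of them."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the reference labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# 700 synthetic (id, label) pairs standing in for the 350 fake and
# 350 legitimate posts; label 1 means fake.
samples = [(i, i % 2) for i in range(700)]
train, test = train_test_split(samples)
```

For a dataset of 700 posts, this split yields 560 training samples and 140 test samples.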

Considering the results given in Tables 1 and 2, we can observe the relevance of the content-based features, since the best results were always obtained when considering the textual post body alone or jointly with the title. For all the implemented models, adding the users’ comments to the input features did not improve the results and instead degraded them. The only exception is the experiment conducted with the LSTM model, where combining all textual features and adding users’ reactions led to an improved accuracy (reaching 0.8142, as shown in Table 2).

Similarly, considering only users’ comments has led to an accuracy not exceeding 0.6715 (obtained with the SVM model in Table 2), remaining significantly lower than that obtained by models considering the textual body and title content, which confirms the relevance of content-based features. The relatively limited performance of textual social-based features (users' comments) can be explained by the complex nature of the written user-generated comments, which are frequently multilingual and informal, since they do not follow any specific spelling rules. These comments may be written in French, Arabic, or in the Tunisian dialect and sometimes in English. Arabic comments can be written both in the Latin and the Arabic script and may include code-switching. These characteristics specific to the Tunisian social web complicate the classification task and affect the quality of learning, especially since the dataset used in our case, remains of limited size.

However, taking into consideration the second type of social-based features, namely the users’ reactions, has clearly improved the accuracy of all the proposed models. The non-textual social-based features, which are the nature and the percentage of each type of reaction, thus seem to be effective features for detecting fake news on Tunisian Facebook pages. As shown in Table 2, the best accuracies obtained when considering these features range from 0.8 to 0.9197.

As for the proposed deep learning models, we can observe from Tables 1 and 2 that the Deep Bi-LSTM model outperformed the three other LSTM-based variants, reaching an accuracy of 0.8357 when considering only content-based features (post title and body), and 0.85 when adding users’ reactions. This is consistent with the performance improvement we expected from stacking two Bi-LSTM layers in order to learn more abstract features.

However, in our experiments, the proposed deep learning models did not outperform the SVM-based machine learning model, which reached an accuracy of 0.9 when considering only content-based features, and 0.9197 when adding users’ reactions. This can be explained by the limited size of our training corpus, as deep learning algorithms need large datasets to be effective.

5 Conclusion

In this work, we proposed a first exploratory study that tackles the issue of automatically detecting fake news on the Tunisian social web, which represents a challenging task due to the specificities of the Tunisian social web, such as multilingualism and code-switching. To our knowledge, it is the first work dealing with automatic FN detection in the Tunisian context in particular. The main contributions of this work are:

- The construction of a real-world dataset containing posts that we collected from public Tunisian Facebook pages. This dataset could be a starting point for future works aiming to enrich it and use it for automatic FN detection in the Tunisian context.

- A study and evaluation of a hybrid approach combining a set of content-based and social-based features. In this context, multiple variants of LSTM networks have been explored: simple LSTM, Bi-LSTM, Deep LSTM and Deep Bi-LSTM, and an SVM-based machine learning model was also investigated.

The results of this study showed that an accuracy of over 91% can be achieved with an SVM model, and an accuracy of 85% is reached using a Deep Bi-LSTM model. They also allowed us to measure the relevance of various content-based and social-based features.

We consider this work as a starting point for more elaborate future investigations. As a first step, we plan to collect more data in order to expand and enrich our Tunisian FN corpus. Then, we plan to explore other deep learning techniques, such as BERT embeddings and classifiers. As we obtained good results with the SVM model, we also propose to investigate deep hybrid learning-based approaches that combine deep learning with machine learning classifiers.

Notes

- 1. http://birrasmi.tn/ (accessed on 29 April 2022)

- 2. http://ar.businessnews.com.tn/bncheck (accessed on 29 April 2022)

- 3. http://tunisiachecknews.com/ (accessed on 29 April 2022)

- 4.

References

Allcott, H., Gentzkow, M.: Social media and fake news in the 2016 election. J. Econ. Perspect. 31(2), 211–236 (2017)

Zhou, X., Zafarani, R.: Fake news: A survey of research, detection methods, and opportunities. arXiv preprint arXiv:1812.00315 (2018)

Tacchini, E., Ballarin, G., Della Vedova, M.L., Moret, S., De Alfaro, L.: Some like it hoax: Automated fake news detection in social networks. arXiv preprint arXiv:1704.07506 (2017)

Troude-Chastened, P.: Fake news et post-vérité. De l’extension de la propagande au Royaume-Uni, aux États-Unis et en France. Quaderni 96, 87-101 (2018)

Shu, K., Sliva, A., Wang, S., Tang, J., Liu, H.: Fake news detection on social media. ACM SIGKDD Explorations Newsl 19(1), 22–36 (2017)

Kemp, S.: Digital 2021: Tunisia. DataReportal. Retrieved from. Accessed in 2022-03-15

Zamit, F., Kooli, A., Toumi, I.: An examination of Tunisian fact-checking resources in the context of COVID-19. JCOM 19(07), A04 (2020)

Jerbi, M.A., Achour, H., Souissi, E.: Sentiment Analysis of Code-Switched Tunisian Dialect: Exploring RNN-Based Techniques. In: Smaïli, K. (ed.) Arabic Language Processing: From Theory to Practice. ICALP 2019. Communications in Computer and Information Science, vol. 1108. Springer, Cham (2019)

Bondielli, A., Marcelloni, F.: A Survey on fake news and rumour detection techniques. Inf. Sci. 497, 38–55 (2019)

Shu, K., Sliva, A., Wang, S., Tang, J., Liu, H.: Fake news detection on social media: a data mining perspective. ACM SIGKDD Explor. Newslett. 19(1), 22–36 (2017)

Parikh, S.B., Atrey, P.K.: Media-rich fake news detection: a survey. In: Proceedings of the IEEE Conference on Multimedia Information Processing and Retrieval (MIPR’18). IEEE, pp. 436-441 (2018)

Rubin, V., Conroy, N., Chen, Y., Cornwell, S.: Fake news or truth? Using satirical cues to detect potentially misleading news. In: Proceedings of the NAACL-CADD Second Workshop on Computational Approaches to Deception Detection, pp. 7–17. San Diego, California, USA (2016)

Qazvinian, V., Rosengren, E., Radev, D.R., Mei, Q.: Rumor has it: Identifying misinformation in microblogs. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1589–1599. Association for Computational Linguistics, Edinburgh, Scotland, UK (2011)

Castillo, C., Mendoza, M., Poblete, B.: Information credibility on twitter. In: Proceedings of the Twentieth International Conference on World Wide Web, pp. 675–684. ACM, Hyderabad, India (2011)

Shu, K., Mahudeswaran, D., Wang, S., Liu, H.: Hierarchical propagation networks for fake news detection: Investigation and exploitation. arXiv:1903.09196 (2019)

Liu, Y., Wu, Y.-F.B.: Early detection of fake news on social media through propagation path classification with recurrent and convolutional networks. Association for the Advancement of Artificial Intelligence (2018)

Volkova, S., Shaffer, K., Jang, J.Y., Hodas, N.: Separating facts from fiction: linguistic models to classify suspicious and trusted news posts on twitter. In: Proceedings of the Fifty Fifth Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), 2, pp. 647–653 (2017)

Della Vedova, M.L., Tacchini, E., Moret, S., Ballarin, G., DiPierro, M., de Alfaro, L.: Automatic Online Fake News Detection Combining Content and Social Signals. The 22nd Conference of Open Innovations Association (FRUCT) (2018)

Ruchansky, N., Seo, S., Liu, Y.: CSI: a hybrid deep model for fake news detection. In: Proceedings of the 26th ACM International Conference on Information and Knowledge Management (CIKM) (2017)

Alkhair, M., Meftouh, K., Smaïli, K., Othman, N.: An Arabic Corpus of Fake News: Collection, Analysis and Classification. In: Smaïli, K. (ed.) Arabic Language Processing: From Theory to Practice. ICALP 2019. Communications in Computer and Information Science, vol 1108. Springer, Cham (2019)

Hasanain, M., Elsayed, T.: bigIR at CheckThat! 2020: Multilingual BERT for Ranking Arabic Tweets by Check-worthiness (2020)

Mahlous, A.R., Al-Laith, A.: Fake news detection in arabic tweets during the COVID-19 pandemic. Int. J. Adva. Comp. Sci. Appl. 12(6) (2021)

Haouari, F., Hasanain, M., Suwaileh, R., Elsayed, T.: Arcov19-rumors: Arabic covid-19 twitter dataset for misinformation detection. In: Proceedings of the Sixth Arabic Natural Language Processing Workshop, WANLP 2021 (2021)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735-80 (1997 Nov 15)

Lundberg, S., Lee, S.: Advances in Neural Information Processing Systems 30, pp. 4768–4777. Curran Associates, Inc. (2017)

Cortes, C., Vapnik, V.: Support-Vector Networks. In: Machine Learning (1995)

Vinot, R., Grabar, N., Valette, M.: Application d’algorithmes de classification automatique pour la détection des contenus racistes sur l’Internet. In: Proceedings of the 10th annual conference on Natural Language Processing TALN, Batz-sur-Mer (2003)

Joachims, T.: Text categorization with support vector machines: In: Proceedings of the 10th European Conference on Machine Learning, Chemnitz (1998)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Haddad, Y., Achour, H. (2023). Fake News Detection in the Tunisian Social Web. In: Jallouli, R., Bach Tobji, M.A., Belkhir, M., Soares, A.M., Casais, B. (eds) Digital Economy. Emerging Technologies and Business Innovation. ICDEc 2023. Lecture Notes in Business Information Processing, vol 485. Springer, Cham. https://doi.org/10.1007/978-3-031-42788-6_23

DOI: https://doi.org/10.1007/978-3-031-42788-6_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-42787-9

Online ISBN: 978-3-031-42788-6

eBook Packages: Computer Science (R0)