Abstract

Artificial Intelligence (AI) is increasingly used in the media industry, for instance, for the automatic creation, personalization, and distribution of media content. This development raises concerns in society and the media sector itself about the responsible use of AI. This study examines how different stakeholders in media organizations perceive ethical issues in their work concerning AI development and application, and how they interpret and put them into practice. We conducted an empirical study consisting of 14 semi-structured qualitative interviews with different stakeholders in public and private media organizations, and mapped the results of the interviews onto stakeholder journeys to specify how AI applications are initiated, designed, developed, and deployed in the different media organizations. The study yields insights into the current situation and challenges regarding responsible AI practices in media organizations.

1 Introduction

Artificial Intelligence (AI) is increasingly used in the media industry [19], for instance, for the automatic creation, personalization, distribution, and archiving of media content [2, 19]. This development raises concerns in society and the media sector about the responsible use of AI. There are worries, e.g., about the creation of deep fakes [11], the spread of disinformation through algorithms [12], issues with fairness and bias in recommendation [7], and algorithms reinforcing and strengthening existing stereotypes [16]. Rapid progress in AI techniques also affects the work of journalists and media professionals. These techniques reshape editorial and decision-making routines [4, 5] as well as the relationship of media with audiences [18]. This raises the question of how to responsibly design, develop, and deploy AI in the media domain [2].

In recent years, a large number of guidelines for ethical AI have been proposed (for overviews see, e.g., [8, 10]). Based on these abstract guidelines, several ethics tools have been proposed, but the adoption rate of such tools remains low, as they still lack practical applicability in day-to-day practice [1, 6, 20]. In addition, most of these tools are not tailored to the specifics of the media domain. A notable exception is [3], which develops tools for ethical AI in the context of music recommendation.

To create practical ethics tools that address domain-specific issues and fit the needs of professionals in that particular domain, a good understanding is needed of the organizational structures, routines, and habits with respect to the development and use of AI, and of the role of ethics in those processes. Several studies have been performed in non-media industries (e.g., [13, 14, 17]). This paper describes a study into current practices around the development and deployment of AI in the media industry specifically. We conducted interviews with stakeholders working at four large national media organizations in the Netherlands. Based on the results, we identified challenges that media organizations face in applying AI in a responsible way.

2 Method

To determine the current practices around design, development, and deployment of AI in Dutch national media organizations, we conducted 14 semi-structured interviews with different stakeholders of four media organizations, with 9 interviewees working on a strategic level and 5 interviewees being involved in (AI) development. The interviews were exploratory, aiming to gain as much information as possible on, among other things, the current state of AI development in the organizations, the ethical considerations that are being made, and the challenges as experienced by the different organizations.

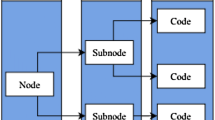

The interviews were recorded, fully transcribed, and qualitatively analyzed by iteratively and collaboratively coding the interview transcripts. We coded inductively as a way to enter the data analysis with a more complete, unbiased look at the themes throughout our data. We categorized the resulting 46 codes into 10 overall themes. Based on these results, we created stakeholder journeys for three of the four organizations, mapping how AI applications are initiated, designed, developed, deployed and monitored in the different media organizations, which stakeholders are involved, which values play a role, at which points in the process ethical issues are considered, and how (ethical) decisions are made. The stakeholder journeys were then verified by representatives of the media organizations and improved.

3 Results

3.1 Core Themes of Stakeholder Journeys

Organizational Values and Strategic Decision-Making for AI Development

Different values play a role in the different organizations: the public media organizations in our study focus on public values (e.g., independence, pluriformity), whereas the private media organization follows a company vision with (in this case) more implicit values. In all organizations, these values are regularly reviewed and shared with employees. Across both types of organizations, employees recognize the importance of these values but cannot always reproduce them. It is unclear how organizational values are taken into account in the decision-making process regarding investments in innovative (AI) projects, as there is no explicit documentation regarding ethical criteria. It is assumed that values are embedded in the culture of the organization in such a way that they are automatically included in all considerations; however, there is no explicit mechanism to evaluate (or monitor) this.

Embedding Ethical Aspects in Work Processes

The public media organizations have started composing a set of internal (and overarching) guidelines regarding Ethics and Technology. Employees are largely aware of (the processes regarding) the development of internal and external guidelines. The employees of the private media organization participated in internal training on, for example, privacy and security. In practice, however, both the guidelines and the training changed little in their work processes and behavior, and mostly increased their level of awareness and knowledge concerning these topics. The work processes in the media organizations are not yet set up to explicitly include ethical considerations for AI; e.g., the use of ethical guidelines and instruments regarding AI is not officially laid down in protocols.

Effects of AI on Employees

The participating media organizations are aware that the introduction of AI can greatly change the work of some of their employees. Mostly, the organizations expect that the new technology will make work more enjoyable, free up time for creative tasks, and reduce the time spent on monotonous tasks; however, everyone is aware that introducing AI systems requires support and coaching of employees during the transition. Attention to, knowledge of, and responsibility for ethics and AI issues are diffusely distributed over the various departments. Clear points of contact with regard to ethics and AI are also lacking. Ethical questions often end up with the head of Innovation, the Privacy Officer, or the legal department. Several employees mention that it is often challenging to make time for ethical considerations, evaluations, and reflections during their work processes.

Ethical Protocols and Instruments

Ethical instruments are not explicitly included in the decision-making and development process. Employees state that ethical guidelines are often too superficial, general and abstract. Existing instruments are only known to a limited extent within the organizations. It is also not clear which instruments are particularly suitable for which projects and at what point in the development process they should be used. A concern that some employees have is that ethical tools are time-consuming and therefore too expensive to apply structurally and that ethical checklists mainly stimulate a culture of ticking boxes and not taking ethical responsibility. As a result, they are currently not applied in the participating media organizations, which means that ethical choices are often made implicitly.

3.2 AI-related Challenges in the Organizations

Overarching Organizational Challenges

Overarching organizational challenges (applicable to all media organizations in this study) involve: 1) creating time for validation and reflection after the different phases of a project or after the entire project, 2) obtaining funding to continue projects after the initial (exploration) phase, 3) translating the abstract vision regarding Ethics and AI into more concrete guidelines and instruments, 4) developing protocols so that ethical considerations are made more explicit and sufficiently documented, 5) adding value for employees by means of ethical instruments that do not limit them in their work processes/tasks, 6) keeping processes and ethical considerations transparent (this poses a particular challenge when collaborating with external agencies, which is a regular occurrence), 7) embedding responsibilities for ethical considerations more clearly within the organizations, and 8) making non-technical employees (such as editorial staff) aware of the importance of ethical challenges surrounding AI.

Challenges in Strategy Phase

These challenges involve: 1) assessing whether AI is a solution for a (real) problem and not just a technology push, 2) supporting and stimulating the User Experience team in making ethical choices during their work processes, 3) involving the right (internal and external) stakeholders regarding ethical evaluation and the choice to continue with a project, and 4) estimating ethical impact by examining the effects and risks for (internal and external) users.

Challenges in Proof-of-Concept Phase

These challenges involve: 1) making ethical considerations measurable in order to test and validate them, and 2) supporting and stimulating the Data Science team in making ethical choices in their work.

Challenges in Development Phase

These challenges involve: 1) explicitly, consciously, and continuously testing and evaluating ethical aspects during development, 2) assessing, using, and maintaining third-party models, 3) supporting and stimulating the Development team in making ethical choices during their work processes, 4) getting the ‘right’ (sufficient, unbiased, GDPR approved, etc.) data, and 5) weighing the gathering of user data against the best fit of content recommendations.

4 Discussion and Conclusion

In this study, we found that all participating media organizations see the importance of ethical aspects during the design, development, and deployment of AI systems, and there is a strong drive to incorporate ethical decision-making. However, currently available tools and guidelines to support the design of responsible AI are not used by the participating media organizations because they are perceived to be insufficiently tailored to their needs. Furthermore, media organizations mostly believe the challenges to be technical; however, we found that (introducing) responsible AI poses large organizational challenges at different levels and departments, and that there is a lack of cooperation and communication about ethical challenges throughout the whole chain of development. These findings are in line with other work on ethical considerations in media organizations regarding AI systems [9, 15].

Limitations of this work are that we had a relatively small number of participants and that these participants work in different kinds of media organizations, public as well as commercial. Nonetheless, the participants showed a diversity in thinking, which leads us to believe that the analyses give a good insight into the current situation in (Dutch) media organizations.

References

1. Ayling, J., Chapman, A.: Putting AI ethics to work: are the tools fit for purpose? AI and Ethics 2(3), 405–429 (2022)

2. Chan-Olmsted, S.M.: A review of artificial intelligence adoptions in the media industry. Int. J. Media Manag. 21(3–4), 193–215 (2019)

3. Cramer, H., Garcia-Gathright, J., Reddy, S., Springer, A., Takeo Bouyer, R.: Translation, tracks & data: an algorithmic bias effort in practice. In: Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, pp. 1–8 (2019)

4. Diakopoulos, N.: Automating the news: How algorithms are rewriting the media. Harvard University Press (2019)

5. Diakopoulos, N.: Towards a design orientation on algorithms and automation in news production. Digit. J. 7(8), 1180–1184 (2019)

6. Dolata, M., Feuerriegel, S., Schwabe, G.: A sociotechnical view of algorithmic fairness. Inf. Syst. J. 32(4), 754–818 (2022)

7. Elahi, M., et al.: Towards responsible media recommendation. AI and Ethics, pp. 1–12 (2022)

8. Hagendorff, T.: The ethics of AI ethics: an evaluation of guidelines. Mind. Mach. 30(1), 99–120 (2020)

9. Helberger, N., van Drunen, M., Moeller, J., Vrijenhoek, S., Eskens, S.: Towards a normative perspective on journalistic AI: Embracing the messy reality of normative ideals (2022)

10. Jobin, A., Ienca, M., Vayena, E.: The global landscape of AI ethics guidelines. Nature Mach. Intell. 1(9), 389–399 (2019)

11. Karnouskos, S.: Artificial intelligence in digital media: the era of deepfakes. IEEE Trans. Technol. Society 1(3), 138–147 (2020)

12. Martens, B., Aguiar, L., Gomez-Herrera, E., Mueller-Langer, F.: The digital transformation of news media and the rise of disinformation and fake news (2018)

13. Rakova, B., Yang, J., Cramer, H., Chowdhury, R.: Where responsible AI meets reality: Practitioner perspectives on enablers for shifting organizational practices. Proc. ACM Human-Comput. Interact. 5(CSCW1), 1–23 (2021)

14. Sanderson, C., et al.: AI ethics principles in practice: Perspectives of designers and developers. arXiv preprint arXiv:2112.07467 (2021)

15. Schjøtt Hansen, A., Hartley, J.M.: Designing what’s news: an ethnography of a personalization algorithm and the data-driven (re)assembling of the news. Digit. J. 1–19 (2021)

16. Schroeder, J.E.: Reinscribing gender: social media, algorithms, bias. J. Mark. Manag. 37(3–4), 376–378 (2021)

17. Subramonyam, H., Im, J., Seifert, C., Adar, E.: Solving separation-of-concerns problems in collaborative design of human-AI systems through leaky abstractions. In: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, pp. 1–21 (2022)

18. Thurman, N., Moeller, J., Helberger, N., Trilling, D.: My friends, editors, algorithms, and I: examining audience attitudes to news selection. Digit. J. 7(4), 447–469 (2019)

19. Trattner, C., et al.: Responsible media technology and AI: challenges and research directions. AI Ethics 2(4), 585–594 (2022)

20. Wong, R.Y., Madaio, M.A., Merrill, N.: Seeing like a toolkit: How toolkits envision the work of AI ethics. arXiv preprint arXiv:2202.08792 (2022)

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this paper

Mioch, T., Stembert, N., Timmers, C., Hajri, O., Wiggers, P., Harbers, M. (2023). Exploring Responsible AI Practices in Dutch Media Organizations. In: Abdelnour Nocera, J., Kristín Lárusdóttir, M., Petrie, H., Piccinno, A., Winckler, M. (eds) Human-Computer Interaction – INTERACT 2023. INTERACT 2023. Lecture Notes in Computer Science, vol 14145. Springer, Cham. https://doi.org/10.1007/978-3-031-42293-5_58

DOI: https://doi.org/10.1007/978-3-031-42293-5_58

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-42292-8

Online ISBN: 978-3-031-42293-5
