Abstract

Virtual design studies for the dynamics of advanced mechanical structures are often preferred over experimental studies because they allow a cheap evaluation of different designs. However, for systems undergoing large deformations or systems where viscoelastic effects cannot be neglected, these studies can entail long simulation times. A promising approach to overcome this burden is model reduction, which lowers the computational cost by approximating high-dimensional models with reduced order models. For this reason, a research project was initiated within the Priority Program, whose goal is to develop reduction methods for such systems to enable faster design iterations. This article summarizes the results of this project. First, an overview of the equations of motion for geometrically nonlinear systems is given. Then different reduction techniques for these systems are summarized. The focus is on simulation-free reduction techniques that avoid costly time simulations with the full order model and on parametric approaches that can account for design changes. This also includes geometric modifications that are handled by mesh morphing techniques, which maintain the mesh topology and are therefore well suited to parametric reduction approaches. A case study illustrates the performance of the most promising approaches. References are given where the reader can find more information about the different approaches. The second part of the article deals with models containing viscoelastic materials. Here, a linear formulation for the equations of motion is considered. Different reduction bases are proposed to obtain a reduced order model. Their performance is illustrated with a plate model that contains an acoustic black hole with a viscoelastic constrained layer damper.

1 Introduction

1.1 Mechanical Systems

The basic dynamics of mechanical systems are commonly determined by the equilibrium of inertia forces \(\boldsymbol{M} \ddot{\boldsymbol{q}}(t)\), damping and internal restoring forces \(\widehat{\boldsymbol{f}}(\dot{\boldsymbol{q}}(t), \boldsymbol{q}(t))\) and external forces, i.e. loads, \(\widehat{\boldsymbol{F}}(t)\):

\[\boldsymbol{M} \ddot{\boldsymbol{q}}(t) + \widehat{\boldsymbol{f}}(\dot{\boldsymbol{q}}(t), \boldsymbol{q}(t)) = \widehat{\boldsymbol{F}}(t) \tag{1}\]

with (generalized) displacements \(\boldsymbol{q}(t) \in \mathbb {R}^N\), mass matrix \(\boldsymbol{M} \in \mathbb {R}^{N \times N}\), \(\widehat{\boldsymbol{f}}: ~ \mathbb {R}^N \times \mathbb {R}^N \rightarrow \mathbb {R}^N\) and \(\widehat{\boldsymbol{F}}(t) \in \mathbb {R}^N\).

From a system-theoretic point of view with the input-output behavior being of importance, the external forces are considered explicitly as a space- and a time-dependent part \(\widehat{\boldsymbol{F}}(t) = \boldsymbol{B} \boldsymbol{F}(t)\) where the input matrix \(\boldsymbol{B} \in \mathbb {R}^{N \times p}\) contains weights and allocations to the degrees of freedom of the time-dependent forces, i.e. the input signals \(\boldsymbol{F}(t) \in \mathbb {R}^p\) (\(p \le N\)). The corresponding system outputs are given by Eq. (4).

Additionally, the lack of knowledge about the dominating damping mechanisms frequently leads to the assumption of simple linear viscous damping \(\boldsymbol{D} \dot{\boldsymbol{q}}(t)\). Excluding gyroscopic effects allows for writing \(\widehat{\boldsymbol{f}}(\dot{\boldsymbol{q}}(t), \boldsymbol{q}(t)) = \boldsymbol{D} \dot{\boldsymbol{q}}(t) + \boldsymbol{f}(\boldsymbol{q}(t))\):

\[\boldsymbol{M} \ddot{\boldsymbol{q}}(t) + \boldsymbol{D} \dot{\boldsymbol{q}}(t) + \boldsymbol{f}(\boldsymbol{q}(t)) = \boldsymbol{B} \boldsymbol{F}(t) \tag{2}\]

with damping matrix \(\boldsymbol{D} \in \mathbb {R}^{N \times N}\) and nonlinear internal restoring forces \(\boldsymbol{f}: \mathbb {R}^N \rightarrow \mathbb {R}^N\).

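Sufficiently small displacements around an equilibrium position or initial configuration allow for considering only the linear part of the internal restoring forces \(\boldsymbol{f}(\boldsymbol{q}(t)) \approx \boldsymbol{K} \boldsymbol{q}(t)\), resulting in the well-known linear second-order representation

\[\boldsymbol{M} \ddot{\boldsymbol{q}}(t) + \boldsymbol{D} \dot{\boldsymbol{q}}(t) + \boldsymbol{K} \boldsymbol{q}(t) = \boldsymbol{B} \boldsymbol{F}(t) \tag{3}\]

with stiffness matrix \(\boldsymbol{K} \in \mathbb {R}^{N \times N}\) and

\[\boldsymbol{y}(t) = \boldsymbol{C} \boldsymbol{q}(t) \tag{4}\]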

with output matrix \(\boldsymbol{C} \in \mathbb {R}^{q \times N}\) returning the outputs of interest for the mechanical system if only displacements are considered.

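In the Laplace domain, the transfer behavior from inputs to outputs for zero initial conditions is given by

\[\boldsymbol{G}(s) = \boldsymbol{C} \left( s^2 \boldsymbol{M} + s \boldsymbol{D} + \boldsymbol{K} \right)^{-1} \boldsymbol{B} \tag{5}\]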

such that \(\boldsymbol{Y}(s) = \boldsymbol{G}(s) \boldsymbol{F}(s)\) where \(\boldsymbol{Y}(s)\) and \(\boldsymbol{F}(s)\) are the Laplace transformed outputs \(\boldsymbol{y}(t)\) and inputs \(\boldsymbol{F}(t)\), respectively.

Typically, mass (\(\boldsymbol{M}\)) and stiffness (\(\boldsymbol{K}\)) matrices are symmetric and positive (semi-)definite; \(\boldsymbol{K}\) is positive definite if the boundary conditions suppress all rigid body modes. Commonly, linear damping is realized via modal damping. A simple and popular choice is the special case of proportional or Rayleigh damping where \(\boldsymbol{D} = \alpha \boldsymbol{M} + \beta \boldsymbol{K}\) with \(\alpha , \beta \ge 0\). The damping matrix \(\boldsymbol{D}\) is then symmetric and positive (semi-)definite, like \(\boldsymbol{M}\) and \(\boldsymbol{K}\).
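To make the Rayleigh damping statement concrete, the following minimal sketch builds \(\boldsymbol{D} = \alpha \boldsymbol{M} + \beta \boldsymbol{K}\) for a toy three-degree-of-freedom chain and checks that the undamped modes diagonalize it. The matrices and the values of `alpha` and `beta` are illustrative assumptions, not data from the chapter.

```python
import numpy as np

# Toy 3-DOF spring-mass chain (hypothetical values, for illustration only).
M = np.diag([2.0, 1.0, 1.5])
K = np.array([[ 4.0, -2.0,  0.0],
              [-2.0,  4.0, -2.0],
              [ 0.0, -2.0,  2.0]])

alpha, beta = 0.1, 0.02          # Rayleigh coefficients (assumed values)
D = alpha * M + beta * K         # proportional (Rayleigh) damping matrix

# Solve K phi = omega^2 M phi via the symmetric form M^{-1/2} K M^{-1/2}
# (valid here because M is diagonal).
M_inv_sqrt = np.diag(1.0 / np.sqrt(np.diag(M)))
omega_sq, Y = np.linalg.eigh(M_inv_sqrt @ K @ M_inv_sqrt)
Phi = M_inv_sqrt @ Y             # mass-normalized modes: Phi^T M Phi = I
omega = np.sqrt(omega_sq)

# Rayleigh damping is diagonalized by the undamped modes.
D_modal = Phi.T @ D @ Phi
zeta = np.diag(D_modal) / (2.0 * omega)   # modal damping ratios
```

Each modal damping ratio then follows \(\zeta_i = \alpha / (2 \omega_i) + \beta \omega_i / 2\), which is why Rayleigh damping is a special case of modal damping.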

Further information about mechanical systems and their properties can be found e.g. in [1, 2].

1.2 Model Order Reduction

The computational effort for numerically solving systems (2) or (3) and (4) can be significantly reduced by applying reduced-order models (ROM) that accurately approximate the relevant behavior of the original full-order model (FOM). One classical option to obtain such ROMs is by applying projective model order reduction (MOR).

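The full-order displacements \(\boldsymbol{q}(t) \in \mathbb {R}^N\) are first approximated as a linear combination of reduced coordinates \(\boldsymbol{q}_{\textrm{r}}(t) \in \mathbb {R}^n\): \(\boldsymbol{q}(t) = \boldsymbol{V} \boldsymbol{q}_{\textrm{r}}(t) + \boldsymbol{e}(t)\) where \(\boldsymbol{V} \in \mathbb {R}^{N \times n}\), \(n \ll N\). Inserting this approximation in (2) or (3) and (4) leads to an overdetermined system with the residuals \(\boldsymbol{\varepsilon }(t) \in \mathbb {R}^N\)

\[\boldsymbol{M} \boldsymbol{V} \ddot{\boldsymbol{q}}_{\textrm{r}}(t) + \boldsymbol{D} \boldsymbol{V} \dot{\boldsymbol{q}}_{\textrm{r}}(t) + \boldsymbol{f}(\boldsymbol{V} \boldsymbol{q}_{\textrm{r}}(t)) = \boldsymbol{B} \boldsymbol{F}(t) + \boldsymbol{\varepsilon }(t), \qquad \boldsymbol{y}_{\textrm{r}}(t) = \boldsymbol{C} \boldsymbol{V} \boldsymbol{q}_{\textrm{r}}(t) \tag{6}\]

Additionally, the Petrov-Galerkin conditions \(\boldsymbol{W}^{\textrm{T}} \boldsymbol{\varepsilon }(t) = \boldsymbol{0}\) are enforced such that the residuals \(\boldsymbol{\varepsilon }(t)\) vanish in the subspace spanned by \(\boldsymbol{W}\). Premultiplying (6) with \(\boldsymbol{W}^{\textrm{T}} \in \mathbb {R}^{n \times N}\) leads to the fully determined system

\[\boldsymbol{M}_{\textrm{r}} \ddot{\boldsymbol{q}}_{\textrm{r}}(t) + \boldsymbol{D}_{\textrm{r}} \dot{\boldsymbol{q}}_{\textrm{r}}(t) + \boldsymbol{f}_{\textrm{r}}(\boldsymbol{q}_{\textrm{r}}(t)) = \boldsymbol{B}_{\textrm{r}} \boldsymbol{F}(t), \qquad \boldsymbol{y}_{\textrm{r}}(t) = \boldsymbol{C}_{\textrm{r}} \boldsymbol{q}_{\textrm{r}}(t) \tag{7}\]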

where the reduced matrices and operators are given by \(\left\{ \boldsymbol{M}, \boldsymbol{D}, \boldsymbol{K}\right\} _{\textrm{r}} = \boldsymbol{W}^{\textrm{T}} \left\{ \boldsymbol{M}, \boldsymbol{D}, \boldsymbol{K}\right\} \boldsymbol{V}\), \(\boldsymbol{B}_{\textrm{r}} = \boldsymbol{W}^{\textrm{T}} \boldsymbol{B}\), \(\boldsymbol{C}_{\textrm{r}} = \boldsymbol{C} \boldsymbol{V}\) and \(\boldsymbol{f}_{\textrm{r}}(\boldsymbol{q}_{\textrm{r}}(t)) = \boldsymbol{W}^{\textrm{T}} \boldsymbol{f}(\boldsymbol{V} \boldsymbol{q}_{\textrm{r}}(t))\). The initial conditions are \(\left\{ \boldsymbol{q}, \dot{\boldsymbol{q}}\right\} _{\textrm{r}}(0) = (\boldsymbol{W}^{\textrm{T}} \boldsymbol{V})^{-1} \boldsymbol{W}^{\textrm{T}} \left\{ \boldsymbol{q}_0, \dot{\boldsymbol{q}}_0\right\} \).
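The projection formulas above translate almost literally into code. The sketch below is a minimal illustration on a random toy system; the helper names `project` and `reduce_initial_state`, the system size and the random basis are assumptions made for this example only.

```python
import numpy as np

def project(M, D, K, B, C, V, W=None):
    """Return (M_r, D_r, K_r, B_r, C_r). W=None selects a Galerkin projection (W = V)."""
    W = V if W is None else W
    return W.T @ M @ V, W.T @ D @ V, W.T @ K @ V, W.T @ B, C @ V

def reduce_initial_state(q0, V, W=None):
    """Reduced initial condition (W^T V)^{-1} W^T q0."""
    W = V if W is None else W
    return np.linalg.solve(W.T @ V, W.T @ q0)

# Toy full-order system (hypothetical data).
rng = np.random.default_rng(0)
N, n = 50, 4
A = rng.standard_normal((N, N))
K = A @ A.T + N * np.eye(N)          # symmetric positive definite stiffness
M = np.eye(N)
D = 0.01 * K
B = np.zeros((N, 1)); B[-1, 0] = 1.0  # single force at the last DOF
C = B.T.copy()                        # collocated displacement output

# Any full-column-rank V works for the algebra; here a random orthonormal basis.
V, _ = np.linalg.qr(rng.standard_normal((N, n)))
M_r, D_r, K_r, B_r, C_r = project(M, D, K, B, C, V)

# An initial state that lies in span(V) is recovered exactly.
q0 = V @ np.array([1.0, -2.0, 0.5, 3.0])
q_r0 = reduce_initial_state(q0, V)
```

With the Galerkin choice \(\boldsymbol{W} = \boldsymbol{V}\), symmetry and definiteness of \(\boldsymbol{M}\), \(\boldsymbol{D}\) and \(\boldsymbol{K}\) carry over to the reduced matrices.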

The main task of any projective model order reduction technique reduces to finding suitable reduction bases \(\boldsymbol{V}, \boldsymbol{W} \in \mathbb {R}^{N \times n}\) that span appropriate subspaces \(\mathcal {V} = {\text {cspan}}(\boldsymbol{V})\) and \(\mathcal {W} = {\text {cspan}}(\boldsymbol{W})\).

Model order reduction in mechanical engineering typically aims at achieving a good approximation quality, the preservation of certain system properties and numerical efficiency. Depending on the application and the characteristic behavior of the FOM, two categories can be distinguished: initial-condition-to-state based reduction and input-output based reduction. To keep the second-order structure, so-called structure-preserving model reduction is applied. In order not to violate the principle of virtual work, the reduction should be performed by an (orthogonal) Galerkin projection where \(\boldsymbol{W} = \boldsymbol{V}\) instead of a two-sided (oblique) Petrov-Galerkin projection.

Further information about model order reduction for mechanical systems and specific algorithms can be found e.g. in [2].

In the following, Sect. 2 presents specific simulation-free model reduction approaches for mechanical systems with geometric nonlinearities which were addressed during the first phase of the DFG priority program 1897. Section 3 continues on simulation-free model reduction approaches for linear mechanical systems with partial visco-elastic material treatments focused on in the second phase. Furthermore, these methods are extended to work on parameterized systems to make the methods usable for applications such as design studies, optimization or sensitivity analyses.

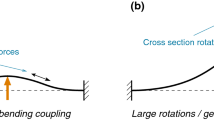

2 Geometrically Nonlinear Mechanical Systems

Model reduction for geometrically nonlinear mechanical systems must meet two challenges. First, a reduction basis must be found that is able to capture nonlinear effects originating from large displacements. Classic reduction methods from linear theory are not suitable for this kind of system. Second, a Galerkin projection with a suitable reduction basis is not sufficient to reduce computation time. The reason is that the nonlinear restoring force term must still be evaluated and assembled over all elements of the full model. Therefore, methods are needed that accelerate this evaluation. These methods are called hyperreduction.

2.1 Simulation-Free Reduction Bases

In contrast to reduction bases obtained from training data, such as the Proper Orthogonal Decomposition of displacement training sets, simulation-free reduction bases do not require time integration of full order models. They are thus much cheaper to compute.

One idea to obtain a reduction basis for geometrically nonlinear systems is to use simulation-free bases from techniques for linear systems and augment them with special vectors that are able to capture nonlinear effects. A prominent example is the combination of eigenmodes and modal derivatives. This extends the modal truncation basis, which is known from linear model reduction, with the sensitivities of the modes in the direction of the modes themselves.

First, the solutions \(\boldsymbol{\phi }_i\) to the eigenproblem

\[\left( \textbf{K}(\textbf{q} = \textbf{0}) - \omega _i^2 \textbf{M} \right) \boldsymbol{\phi }_i = \textbf{0}\]

describe the modes of the linearized system. Since \(\textbf{K}\) is a function of the displacements \(\textbf{q}\), the eigenproblem can be differentiated with respect to them. This leads to modal derivatives. However, experience has shown that neglecting the mass matrix in the computation of these derivatives results in basis vectors that lead to more accurate reduced systems. These derivatives are called static modal derivatives [3] \((\boldsymbol{\nabla }_{\boldsymbol{\phi }_{j}} \boldsymbol{\phi }_i)\) that are determined by solving

The final reduction basis

is then built by stacking some modes and some static modal derivatives into one reduction basis.
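The construction of such a basis can be sketched on a toy problem. The example below assumes the commonly used static relation \(\textbf{K}(\textbf{0}) \, \boldsymbol{\nabla }_{\boldsymbol{\phi }_{j}} \boldsymbol{\phi }_i = -(\boldsymbol{\nabla }_{\boldsymbol{\phi }_{j}} \textbf{K}) \, \boldsymbol{\phi }_i\), where \(\boldsymbol{\nabla }_{\boldsymbol{\phi }_{j}} \textbf{K}\) is the directional derivative of the tangent stiffness at \(\textbf{q} = \textbf{0}\). The spring chain with a quadratic force term, the unit mass matrix and all numerical values are hypothetical and chosen only so that the block is self-contained.

```python
import numpy as np

n_dof, c = 6, 0.5                      # toy size and quadratic spring coefficient (assumed)
# Difference operator of a clamped-free spring chain: elongations e = B_diff @ q.
B_diff = np.eye(n_dof) - np.eye(n_dof, k=-1)
K0 = B_diff.T @ B_diff                 # linear stiffness at q = 0 (unit masses assumed, M = I)

def tangent_stiffness(q):
    """Tangent stiffness K(q) of the toy force f(q) = K0 q + c * B^T (B q)^2."""
    return K0 + 2.0 * c * B_diff.T @ np.diag(B_diff @ q) @ B_diff

def dK_direction(phi, h=1e-6):
    """Directional derivative of K at q = 0 along phi (central difference)."""
    return (tangent_stiffness(h * phi) - tangent_stiffness(-h * phi)) / (2.0 * h)

# Modes of the linearized system; with M = I this is a standard eigenproblem.
omega_sq, Phi = np.linalg.eigh(K0)
n_modes = 2
Phi = Phi[:, :n_modes]                 # keep the lowest modes

# Static modal derivatives: K0 theta_ij = -(dK along phi_j) phi_i.
smds = []
for i in range(n_modes):
    for j in range(i, n_modes):        # symmetric in (i, j), so i <= j suffices
        rhs = -dK_direction(Phi[:, j]) @ Phi[:, i]
        smds.append(np.linalg.solve(K0, rhs))
Theta = np.column_stack(smds)

# Stack modes and static modal derivatives, then orthonormalize.
V, _ = np.linalg.qr(np.column_stack([Phi, Theta]))
```

For restoring forces derived from a potential, the static modal derivatives are symmetric in their two indices, which is why only the pairs with \(i \le j\) are computed here.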

The same idea can be applied to other linear reduction techniques, such as the moment matching technique. The linear reduction basis known from moment matching is computed iteratively via

Afterwards, their derivatives can be computed as follows:

where a constant mass and damping matrix according to Eq. 2 is assumed. A case study evaluating the performance of these bases is shown in [4].

2.2 Simulation-Free Hyper-Reduction

2.2.1 Polynomial Expansion

One technique that can be considered as hyperreduction is the polynomial expansion. One can show that the nonlinear force term is a polynomial of third order in the displacements if the system is set up with constitutive laws that are linear in the Green-Lagrange strain measure. The nonlinear force term can then be written as

The tensors \(\textbf{K}^{1}, \textbf{K}^{2}\) and \(\textbf{K}^{3}\) are costly to store. But they can be reduced by applying a Galerkin projection with a proper reduction basis \(\textbf{V}\) shown in the previous sections such that

where

and where we use Einstein’s summation convention, i.e., indices that appear twice are summed up.

The polynomial expressions allow a very fast evaluation of the nonlinear restoring force term [5]. However, this representation is only feasible for systems that can be described by a low dimensional reduction basis. If a reduction basis of medium or large size is required, other hyperreduction techniques are more suitable, such as the Energy Conserving Sampling and Weighting method (ECSW).
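As an illustration of the Galerkin projection of the polynomial tensors, the sketch below assumes the index form \(f_i = K^1_{ij} q_j + K^2_{ijk} q_j q_k + K^3_{ijkl} q_j q_k q_l\) and projects each tensor index with \(\textbf{V}\). The random tensors stand in for those of a real finite element model and are purely hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N, n = 8, 3
# Random stand-ins for the full-order polynomial tensors (hypothetical data).
K1 = rng.standard_normal((N, N))
K2 = rng.standard_normal((N, N, N))
K3 = rng.standard_normal((N, N, N, N))
V = np.linalg.qr(rng.standard_normal((N, n)))[0]   # some reduction basis

def f_full(q):
    """Cubic polynomial restoring force in full coordinates."""
    return (np.einsum("ij,j->i", K1, q)
            + np.einsum("ijk,j,k->i", K2, q, q)
            + np.einsum("ijkl,j,k,l->i", K3, q, q, q))

# Offline: project every tensor index with V (Einstein summation).
K1_r = np.einsum("ia,ij,jb->ab", V, K1, V)
K2_r = np.einsum("ia,ijk,jb,kc->abc", V, K2, V, V)
K3_r = np.einsum("ia,ijkl,jb,kc,ld->abcd", V, K3, V, V, V)

def f_reduced(q_r):
    """Online: evaluate the reduced force with O(n^4) operations, independent of N."""
    return (np.einsum("ab,b->a", K1_r, q_r)
            + np.einsum("abc,b,c->a", K2_r, q_r, q_r)
            + np.einsum("abcd,b,c,d->a", K3_r, q_r, q_r, q_r))

q_r = rng.standard_normal(n)
```

The storage of the cubic tensor grows with \(n^4\), which illustrates why this representation is only practical for small reduction bases.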

2.2.2 Energy Conserving Sampling and Weighting

The idea of the Energy Conserving Sampling and Weighting method (ECSW) [6] is to not evaluate all elements during the assembly of the restoring force term. Instead, it evaluates only a subset \(\tilde{E} \subset E\) of all elements and interpolates their contribution to the full restoring force term

where \(\textbf{L}_e\) describes Boolean localization matrices to map the local elemental degrees of freedom to the global degrees of freedom.

The interpolation is achieved through positive weights \(\xi _e\). These weights and the element set \(\tilde{E}\) are chosen by requesting that the virtual work of the restoring force in the direction of all reduction basis vectors is retained in the hyperreduced model for some training sets \(\textbf{q}_{r, \tau }\). This requirement can approximately be formulated as the minimization problem

where

are built up by the entries

Here, \(N_e\) and \(N_\tau \) describe the number of elements of the full order model and the number of training sets, respectively.
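The assembly of the training matrix and right-hand side can be sketched for a toy chain of nonlinear springs, where each spring plays the role of one finite element. The sketch assumes that each block row of \(\textbf{G}\) holds the projected element forces \(\textbf{V}^{\textrm{T}} \textbf{L}_e^{\textrm{T}} \textbf{f}_e\) for one training vector and that \(\textbf{b}\) is their sum over all elements; the spring law, basis and training vectors are hypothetical. A sparse non-negative least-squares solver, not shown here, would then select the element subset and its weights.

```python
import numpy as np

rng = np.random.default_rng(2)
n_elem, n = 10, 3                                  # N_e elements (= DOFs here), basis size
B_diff = np.eye(n_elem) - np.eye(n_elem, k=-1)     # row e gives the elongation of spring e
V = np.linalg.qr(rng.standard_normal((n_elem, n)))[0]
Q_r_train = 0.1 * rng.standard_normal((n, 4))      # N_tau = 4 reduced training vectors

def element_force(e, q):
    """Contribution L_e^T f_e(L_e q) of spring e, already mapped to global DOFs."""
    delta = B_diff[e] @ q
    return B_diff[e] * (delta + 5.0 * delta**3)    # assumed cubic spring law

def full_force(q):
    return sum(element_force(e, q) for e in range(n_elem))

# One block row per training vector, one column per element.
blocks = []
for l in range(Q_r_train.shape[1]):
    q = V @ Q_r_train[:, l]
    blocks.append(np.column_stack([V.T @ element_force(e, q) for e in range(n_elem)]))
G = np.vstack(blocks)                              # shape (n * N_tau, N_e)
b = G @ np.ones(n_elem)                            # unit weights reproduce the full assembly

def hyperreduced_force(q_r, xi):
    """Weighted assembly over the elements with non-zero weight only."""
    q = V @ q_r
    return sum(xi[e] * (V.T @ element_force(e, q)) for e in np.flatnonzero(xi))
```

Unit weights for all elements satisfy \(\textbf{G} \boldsymbol{\xi } = \textbf{b}\) exactly; the minimization looks for a much sparser non-negative \(\boldsymbol{\xi }\) that still satisfies it up to the chosen tolerance.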

The training sets \(\textbf{q}_{\tau _l} = \textbf{V}\textbf{q}_{\textrm{r}, \tau _l}\) can be obtained from displacement vectors of a full order solution or by the so-called Nonlinear Stochastic Krylov Training Sets method.

2.2.3 Nonlinear Stochastic Krylov Training Sets

The idea of these training sets [7] is to build a subspace

that is able to approximate the nonlinear restoring force \(\textbf{f}\). Afterwards, some random vectors \(\textbf{f}_{NSKTS}^\tau \in \mathcal {K}(\textbf{M}\textbf{K}^{-1}, \textbf{B})\) are generated and the nonlinear static problems

are solved. The solutions \(\textbf{q}_\tau ^{(k)}\) are then used as training sets for the ECSW. This procedure avoids costly time integration of the high dimensional model. However, some nonlinear static equations of full dimension must be solved.

2.3 Extension to Parametric Bases

2.3.1 Parameterization of Finite Element Models

Design studies or sensitivity analyses require a parameterization of the Finite element model. The parameterization of material data and boundary conditions is quite easy to achieve. But shape parameterization of the mesh is much harder. Classic approaches for parameter studies create a new mesh for each iteration. This is disadvantageous for our applications for two reasons: First, a mesh generation can take large amounts of computation time. Second, the mesh topology can change, which makes already computed reduction bases useless unless one applies special mapping techniques.

Therefore, we use a mesh parameterization approach that avoids both drawbacks: Mesh morphing. The mesh morphing approach just modifies the coordinates of the nodes in the mesh while maintaining the mesh connectivity.

We use radial basis functions, more precisely thin plate splines, to move interior nodes and maintain mesh quality. One example is the beam shown in Fig. 1 where a notch's position is parameterized.

Fig. 1 Mesh morphing for a cantilever beam. The beam has a notch on the bottom side. Middle: The reference mesh. Top: The notch has been morphed to the left. Bottom: The notch has been morphed to the right. The translation is done via mesh morphing such that only nodal coordinates change while the mesh topology is maintained
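A minimal two-dimensional sketch of such a morphing step is given below. It assumes the thin plate spline kernel \(\varphi (r) = r^2 \log r\) with an affine polynomial part, prescribes displacements at a few control points and interpolates them to all mesh nodes. The function names, the beam dimensions and the control points are hypothetical; a production implementation would also check element quality after morphing.

```python
import numpy as np

def tps_kernel(r):
    """Thin plate spline phi(r) = r^2 log(r), with phi(0) = 0."""
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out

def morph(nodes, ctrl, ctrl_disp):
    """Move `nodes` by the TPS interpolant of the control-point displacements."""
    m, dim = ctrl.shape
    Phi = tps_kernel(np.linalg.norm(ctrl[:, None, :] - ctrl[None, :, :], axis=2))
    P = np.hstack([np.ones((m, 1)), ctrl])                 # affine polynomial part
    A = np.block([[Phi, P], [P.T, np.zeros((dim + 1, dim + 1))]])
    rhs = np.vstack([ctrl_disp, np.zeros((dim + 1, dim))])
    coeff = np.linalg.solve(A, rhs)
    w, a = coeff[:m], coeff[m:]
    Phi_n = tps_kernel(np.linalg.norm(nodes[:, None, :] - ctrl[None, :, :], axis=2))
    disp = Phi_n @ w + np.hstack([np.ones((len(nodes), 1)), nodes]) @ a
    return nodes + disp                                    # connectivity is untouched

# Reference nodes of a 10 x 1 beam (hypothetical structured grid).
xs, ys = np.meshgrid(np.linspace(0.0, 10.0, 21), np.linspace(0.0, 1.0, 5))
nodes = np.column_stack([xs.ravel(), ys.ravel()])

# Control points: four fixed corners and three notch points that are shifted in x.
ctrl = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 1.0], [0.0, 1.0],
                 [4.0, 0.0], [5.0, 0.3], [6.0, 0.0]])
ctrl_disp = np.zeros_like(ctrl)
ctrl_disp[4:, 0] = 0.5                                     # notch position parameter
morphed_nodes = morph(nodes, ctrl, ctrl_disp)
```

Because only the coordinate array changes, element connectivity and the number of degrees of freedom stay the same for every parameter value, which is what makes reduction bases from different designs comparable.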

After parameterization, the equations of motion (2) become parametric and can be written as

Now, simulation-free reduction bases also depend on the parameters \(\boldsymbol{p}\). This implies that a reduction basis computed for a certain parameter value may not be valid for other parameter values. Some methods to overcome this problem are summarized in the following sections.

2.3.2 Basis Updating

The simplest parametric model reduction technique is to compute a new simulation-free reduction basis for each new parameter value. But since the reduction basis will not be completely different, information from previous computations can be used to obtain new reduction bases with less effort. This procedure is called basis updating. In the typical case where the reduction basis consists of modes and static modal derivatives, this can be done in two steps. First, an inverse-free preconditioned Krylov subspace method (IFPKS) is used to update the modal part of the reduction basis. Second, an iterative solver such as the preconditioned conjugate gradient method can be used to obtain the static derivative part of the reduction basis. This procedure is described in detail in [8].

2.3.3 Global Reduction Basis Through Sampling

Another idea is to sample the parameter space

and compute a simulation-free reduction basis for each sample point

Afterwards, all sampled reduction bases are stacked into one reduction basis. The reduction basis is then deflated such that nearly parallel vectors are removed from the reduction basis. The advantage is that a global reduction basis can be found very easily. But if the reduction basis space changes drastically in the parameter space of interest, the reduction basis can be of high dimension and many sample points could be necessary, which makes other approaches more suitable [9].
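The stacking and deflation step can be sketched with a singular value decomposition, which is one common way to remove nearly parallel directions. The function name `global_basis`, the relative tolerance and the three local bases are assumptions for illustration; the local bases are constructed so that they share directions.

```python
import numpy as np

def global_basis(local_bases, tol=1e-8):
    """Stack local bases and drop directions with negligible singular values."""
    stacked = np.hstack(local_bases)
    U, s, _ = np.linalg.svd(stacked, full_matrices=False)
    keep = s > tol * s[0]          # relative deflation tolerance (assumed)
    return U[:, keep]

# Three hypothetical local bases that span overlapping subspaces.
rng = np.random.default_rng(4)
a, b, c = rng.standard_normal((3, 20))
bases = [np.column_stack([a, b]),
         np.column_stack([a, c]),
         np.column_stack([b, a + c])]

V_global = global_basis(bases)     # six stacked vectors deflate to three
```

A looser tolerance yields a smaller global basis at the cost of representing each local basis only approximately.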

2.3.4 Augmentation with Parametric Sensitivities

If only a small parameter space around a certain parameter is of interest, an augmentation by parametric sensitivities is an option [10]. This idea is similar to the ideas of simulation-free reduction bases for geometric nonlinear systems. The basis is built up by stacking the simulation-free reduction basis at a certain point and their parametric derivatives into one basis:

where \(\textbf{e}_i\) is the i-th unit vector in the Euclidean space.

2.3.5 Interpolation on Manifolds

The space of reduction bases can be seen as a manifold, which enables interpolation between different bases. One option to define this manifold is the Grassmann manifold. If two reduction bases \(\textbf{V}_1\) and \(\textbf{V}_2\) at sample points \(p_1\) and \(p_2\) have been computed and a reduced model is required at a point \(\hat{p}\) between these two points, one can interpolate between the computed reduction bases. This interpolation consists of two steps: First, a singular value decomposition of

is computed. Afterwards, the interpolated reduction basis \({\hat{\textbf{V}}}\) at \(\hat{p}\) is determined by

This method works very well even for systems whose modes show high parametric dependencies [11].
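The two interpolation steps can be sketched with a commonly used geodesic formulation on the Grassmann manifold. The sketch assumes orthonormal bases, an invertible \(\textbf{V}_1^{\textrm{T}} \textbf{V}_2\), and a linear interpolation weight \(t = (\hat{p} - p_1)/(p_2 - p_1)\); the exact formulas of the chapter may differ in detail, and the random bases are hypothetical.

```python
import numpy as np

def grassmann_interpolate(V1, V2, t):
    """Basis on the geodesic from span(V1) (t = 0) to span(V2) (t = 1).

    V1 and V2 need orthonormal columns, and V1^T V2 must be invertible.
    """
    # Step 1: thin SVD of the part of V2 orthogonal to V1 (logarithmic map).
    X = (V2 - V1 @ (V1.T @ V2)) @ np.linalg.inv(V1.T @ V2)
    U, s, Zt = np.linalg.svd(X, full_matrices=False)
    theta = np.arctan(s)                       # principal angles
    # Step 2: walk a fraction t along the geodesic (exponential map).
    return V1 @ Zt.T @ np.diag(np.cos(t * theta)) + U @ np.diag(np.sin(t * theta))

rng = np.random.default_rng(5)
V1 = np.linalg.qr(rng.standard_normal((12, 3)))[0]
V2 = np.linalg.qr(V1 + 0.3 * rng.standard_normal((12, 3)))[0]

p1, p2, p_hat = 0.24, 0.26, 0.25
t = (p_hat - p1) / (p2 - p1)
V_hat = grassmann_interpolate(V1, V2, t)
```

The result has orthonormal columns for every \(t\), so it can be used directly in a Galerkin projection.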

2.4 Parametric Hyper-Reduction

Similar to the global reduction basis approach, the hyperreduction problem can also be globalized [12]. First, we compute \(\textbf{q}_{\tau }(\textbf{p}_s)\) (using NSKTS) for each sample point \(\textbf{p}_{s} \in P_{\textrm{sample}}\). Then, the quantities

are built up by the entries

where \(N_S = |P_{\textrm{sample}}|\) is the number of sample points, \(\beta \) is the number of NSKTS vectors per sample, k is the number of load increments (Eq. 25) that are computed for each NSKTS vector and \(\textbf{V}(\textbf{p}_s)\) are local reduction bases that are computed at sampling point \(\textbf{p}_s\). The matrix \(\textbf{G}_{\textrm{global}}\) and the vector \(\textbf{b}_{\textrm{global}}\) are used to compute a global set of weights and elements for the ECSW as described by Eq. (21).

2.5 Case Study

A simple case study illustrates the performance of the most promising approaches. A notched cantilever beam meshed with hexahedral elements is set up as shown in Fig. 1. The beam is parameterized by the position of the notch. The left end is fixed and a harmonic excitation force is applied at the tip. Since the beam is very slender, highly nonlinear behavior is expected for sufficiently large excitation forces.

Two approaches are compared to find a suitable reduction basis for \(p=0.25\). First, the interpolation method is tested by interpolating between two reduction bases at \(p = 0.24\) and \(p = 0.26\). Second, the parametric sensitivity approach is tested by computing a reduction basis at \(p=0.24\) and its parametric sensitivities. The tip displacement errors for the different bases are depicted in Fig. 2. One can conclude that both approaches lead to a good reduced model. The parametric sensitivity approach performs best and gives almost the accuracy of a model with direct computation of a reduction basis at \(p=0.25\).

Fig. 2 Tip displacement error for a model at \(p=0.25\) over time for different reduction bases. The parametric sensitivity approach at \(p=0.24\) (4 modes \(+\) static modal derivatives \(+\) parametric sensitivities \(=\) 28 basis vectors) gives almost the same accuracy as the directly computed reduction basis for \(p=0.25\) (6 modes \(+\) static modal derivatives \(=\) 27 basis vectors). The interpolation of the basis between \(p=0.24\) and \(p=0.26\) (6 modes \(+\) static modal derivatives \(=\) 27 basis vectors) also gives good accuracy. The reduction basis at \(p=0.24\) (6 modes \(+\) static modal derivatives) is not suitable for reduction

Furthermore, a hyperreduction with the ECSW and NSKTS is conducted with the reduction basis that performed best, namely the one from the parametric sensitivity approach. Figure 3 shows the tip displacement error for the hyperreduced model with different tolerances for the ECSW. One can see that a very tight tolerance leads to a model that is as accurate as a reduced order model without hyperreduction. A very loose tolerance, however, leads to a model that is even more accurate when compared with the full-order solution. This probably originates from a softening effect because too few integration points (i.e. selected finite elements) are used.

Table 1 lists simulation times in seconds, measured with Python's function process_time(), and speedup factors for the hyperreduced models compared to the full order model. The simulations are conducted on a machine with an Intel Xeon CPU E3-1270 v5 (3.6 GHz) and 32 GB RAM. The table also contains the number of elements that are evaluated for the nonlinear restoring force vector. For this small academic problem, the hyperreduced model with the largest tolerance reaches a speedup factor of 2.0.

3 Linear Visco-Elastic Mechanical Systems

Many materials that are used to damp structural vibrations, such as rubber-like layers placed on plate-like structures, show a material behavior that is called viscoelastic. Viscoelastic behavior is characterized by a mixture of elastic and viscous properties. These are often modeled by the Generalized Maxwell model especially if a time domain simulation is demanded. One major drawback of this model is that it introduces internal state variables that must be evaluated in each timestep. For this reason, the computational effort is drastically increased for such models and, thus, model reduction is highly desired.

3.1 Modeling Aspects

3.1.1 Generalized Maxwell Model

Figure 4 illustrates the Generalized Maxwell model that is used to model viscoelastic materials in time domain. It consists of several Maxwell elements built from linear elastic springs and viscous dashpots.

The constitutive equations

for the dashpot and the spring and the kinematic relation

lead to the equation

where \(\varepsilon _{m, \textrm{in}}\) has been replaced by \(\alpha \). We call \(\alpha \) an internal variable. This leads to two equations for the constitutive law:

When a step load in strain with amplitude \(\varepsilon _0\) is applied to a Maxwell element, its response is

where we use the definition \(\theta _m := \frac{\eta _m }{ E_m}\). The full response of all Maxwell elements is the sum

Therefore, the constitutive equation can also be expressed by a Duhamel integral

An extension to the three dimensional case is easy to achieve by introducing a split into volumetric and deviatoric parts and using an internal variable for each coordinate of the strain tensors [13, 14].
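The relaxation behavior described above can be checked numerically. The sketch assumes a parallel long-time spring `E_inf` in addition to the Maxwell branches and the internal-variable evolution \(\theta _m \dot{\alpha }_m = \varepsilon - \alpha _m\) with branch stress \(E_m (\varepsilon - \alpha _m)\); the parameter values and function names are hypothetical.

```python
import math

def relaxation_stress(t, eps0, E_inf, branches):
    """Analytic stress after a strain step eps0 at t = 0.

    branches is a list of (E_m, eta_m) pairs; theta_m = eta_m / E_m.
    """
    return eps0 * (E_inf + sum(E * math.exp(-t * E / eta) for E, eta in branches))

def step_internal_variable(alpha, eps, dt, theta):
    """Exact update of theta * d(alpha)/dt = eps - alpha for constant eps over dt."""
    return eps + (alpha - eps) * math.exp(-dt / theta)

# Hypothetical material data: long-time modulus and two Maxwell branches.
eps0, E_inf = 0.01, 2.0
branches = [(5.0, 1.0), (3.0, 10.0)]          # (E_m, eta_m)

# Time stepping with internal variables, as a time-domain FE code would do it.
dt, n_steps = 0.05, 40
alphas = [0.0 for _ in branches]
for _ in range(n_steps):
    alphas = [step_internal_variable(a, eps0, dt, eta / E)
              for a, (E, eta) in zip(alphas, branches)]

sigma_numeric = E_inf * eps0 + sum(E * (eps0 - a) for a, (E, _) in zip(alphas, branches))
sigma_analytic = relaxation_stress(n_steps * dt, eps0, E_inf, branches)
```

Every branch adds one internal variable per strain component and integration point, which is the source of the additional states that the later sections aim to condense or reduce.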

3.1.2 Explicit Form

The explicit state form contains the internal variables in its system state vector \(\textbf{x}= [\textbf{u}, {\boldsymbol{\alpha }}]\). This allows model reduction techniques for linear systems to be applied because this representation leads to a system

that is linear.

The matrices \(\textbf{M}_{uu}, \textbf{D}_{uu}, \textbf{K}_{uu}\) are similar to those from classic Finite Element models containing linear materials. The matrices \(\textbf{D}_{\alpha \alpha }\) and \(\textbf{K}_{\alpha \alpha }\) are fully diagonal. The coupling between the internal states \({\boldsymbol{\alpha }}\) and the displacements \(\textbf{u}\) happens in the stiffness matrix through the blocks \(\textbf{K}_{u\alpha }\) and \(\textbf{K}_{\alpha u}\).

3.2 Model Reduction via Decoupling into Subsystems

In a first naive approach, classical model order reduction approaches, like moment matching or modal truncation, are applied to the fully coupled system (42). With this approach, different physical quantities, namely displacements \(\boldsymbol{u}\) and partial stresses (internal variables) \(\boldsymbol{\alpha }\), are mixed in the reduced coordinates \(\boldsymbol{x}_{\textrm{r}}\), thereby losing their physical meaning and limiting the reduction process.

To avoid those limitations, displacement variables and internal partial stress variables are treated separately. For this, the visco-elastic structural system (42) \(\mathcal {S}\) is treated as a coupled, i.e. closed-loop, system: The purely elastic structural subsystem \(\mathcal {S}_{1}\) with \(N_u\) degrees of freedom \(\boldsymbol{u}\) is coupled to the viscous subsystem \(\mathcal {S}_{2}\) with \(N_{\alpha }\) degrees of freedom \(\boldsymbol{\alpha }\) via the interface equations \(\mathcal {I}\)

With regard to model reduction, this way both systems can be treated separately. Thus, for each subsystem, well established first- or second-order methods like first-/second-order moment matching, modal truncation with complex eigenvectors, second-order balanced truncation and others can be applied. The reduced and re-coupled system will have the dimension \(n = n_{1} + n_{2}\). Additionally, the interface equations are also reduced, i.e. (internal) in- and outputs are reduced.

3.2.1 Second-Order Moment Matching

The transfer functions (5) of the full and reduced systems are represented by Taylor series around the shift \(s_0 \in \mathbb {C}\):

where \(\boldsymbol{M}_{s_0,i}\) and \(\boldsymbol{M}_{\textrm{r},s_0,i}\) \(\forall i = 0, \ldots , \infty \) are called the moments of the full and reduced system, respectively.

The basic ansatz consists in making a specified number of moments match around \(s_0\):

This can implicitly and numerically efficiently be achieved by using second-order Krylov subspaces as reduction bases [15]. The necessary Krylov subspace is defined as

where \(\boldsymbol{P}_0 = \boldsymbol{V}\), \(\boldsymbol{P}_1 = \boldsymbol{M}_1 \boldsymbol{V}\) and \(\boldsymbol{P}_i = \boldsymbol{M}_1 \boldsymbol{P}_{i - 1} + \boldsymbol{M}_2 \boldsymbol{P}_{i - 2}\).

In general with one-sided moment matching \(q_0\) moments can be matched if \(\textbf{V}\) is chosen such that it includes the input Krylov subspace:

where \(\boldsymbol{K}_{s_0} = s_0^2 \boldsymbol{M} + s_0 \boldsymbol{D} + \boldsymbol{K}\) and \(\boldsymbol{D}_{s_0} = 2 s_0 \boldsymbol{M} + \boldsymbol{D}\).

To achieve moment matching around different shifts \((s_0, q_0)\), \((s_1, q_1)\), ..., the corresponding Krylov subspaces simply need to be combined into one reduction basis.
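A minimal sketch of one-sided second-order moment matching at a single real shift is given below. It assumes the recursion \(\boldsymbol{X}_0 = \boldsymbol{K}_{s_0}^{-1} \boldsymbol{B}\), \(\boldsymbol{X}_i = -\boldsymbol{K}_{s_0}^{-1} (\boldsymbol{D}_{s_0} \boldsymbol{X}_{i-1} + \boldsymbol{M} \boldsymbol{X}_{i-2})\), which follows from expanding \((s^2 \boldsymbol{M} + s \boldsymbol{D} + \boldsymbol{K})^{-1} \boldsymbol{B}\) around \(s_0\). The function name, the toy chain model and the choice \(s_0 = 1\), \(q_0 = 3\) are illustrative assumptions.

```python
import numpy as np

def moment_vectors(M, D, K, B, s0, q0):
    """Taylor coefficients X_i of (s^2 M + s D + K)^{-1} B around s0, i = 0..q0-1."""
    K_s0 = s0**2 * M + s0 * D + K
    D_s0 = 2.0 * s0 * M + D
    X_prev, X = np.zeros_like(B), np.linalg.solve(K_s0, B)
    vectors = [X]
    for _ in range(q0 - 1):
        X_prev, X = X, -np.linalg.solve(K_s0, D_s0 @ X + M @ X_prev)
        vectors.append(X)
    return vectors

# Toy second-order system: fixed-fixed spring chain (hypothetical data).
N, s0, q0 = 30, 1.0, 3
K = 2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)
M = np.eye(N)
D = 0.1 * M + 0.05 * K
B = np.zeros((N, 1)); B[0, 0] = 1.0
C = np.zeros((1, N)); C[0, 2] = 1.0

# Reduction basis: orthonormalized input Krylov vectors, then Galerkin projection.
V, _ = np.linalg.qr(np.hstack(moment_vectors(M, D, K, B, s0, q0)))
M_r, D_r, K_r = V.T @ M @ V, V.T @ D @ V, V.T @ K @ V
B_r, C_r = V.T @ B, C @ V

moments_full = [C @ X for X in moment_vectors(M, D, K, B, s0, q0)]
moments_red = [C_r @ X for X in moment_vectors(M_r, D_r, K_r, B_r, s0, q0)]
```

Comparing `moments_full` and `moments_red` shows that the first \(q_0\) moments of the reduced model coincide with those of the full model although the full moments are never formed explicitly during the reduction.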

3.2.2 Reduction of Coupling Blocks

The coupling blocks \(\boldsymbol{K}_{u\alpha }\) and \(\boldsymbol{K}_{\alpha u}\) are reduced via a singular value decomposition in which only the dominant singular values are retained:

Thus, internal in- and output matrices for both subsystems can be defined

and included in the moment matching process for each subsystem guaranteeing moment matching for the fully re-coupled system [16].

3.3 Schur Complement

The diagonality of \(\textbf{D}_{\alpha \alpha }\) and \(\textbf{K}_{\alpha \alpha }\) can be exploited to condense the internal states in the equations of motion (42). The equations of motion in frequency domain

consist of two blocks of rows. The second block can be transformed

such that the states \(\textbf{A}\) can be inserted into the first block. This results in

This procedure is a Schur complement of the dynamic stiffness matrix. It can be computed very cheaply because \((\textbf{K}_{\alpha \alpha } + \textrm{i}\Omega \textbf{D}_{\alpha \alpha })\) is diagonal, so its inverse only requires elementwise divisions. The same procedure can also be carried out in the time domain if a certain time integration scheme is applied, but we stick to the frequency domain for simplicity.

The Schur complement is an exact procedure, i.e. it produces no procedural error. It reduces the number of degrees of freedom to the number of displacement degrees of freedom. This can be a large reduction, especially if large regions of the model are considered viscoelastic or if viscoelastic materials have many Maxwell elements.
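The condensation can be sketched for a small system. The block structure assumed here is \((-\Omega ^2 \textbf{M}_{uu} + \textrm{i}\Omega \textbf{D}_{uu} + \textbf{K}_{uu}) \textbf{U} + \textbf{K}_{u\alpha } \textbf{A} = \textbf{F}\) together with \(\textbf{K}_{\alpha u} \textbf{U} + (\textbf{K}_{\alpha \alpha } + \textrm{i}\Omega \textbf{D}_{\alpha \alpha }) \textbf{A} = \textbf{0}\), which matches the description in the text but is reconstructed rather than quoted. All matrices are random stand-ins built from hypothetical Maxwell branches.

```python
import numpy as np

rng = np.random.default_rng(3)
n_u, n_a = 4, 6
M_uu = np.eye(n_u)
A0 = rng.standard_normal((n_u, n_u))
K_el = A0 @ A0.T + n_u * np.eye(n_u)     # long-time elastic stiffness
D_uu = 0.01 * K_el
Bm = rng.standard_normal((n_a, n_u))     # maps displacements to branch strains
E = rng.uniform(1.0, 5.0, n_a)           # branch stiffnesses (diagonal of K_aa)
eta = rng.uniform(0.1, 1.0, n_a)         # dashpot viscosities (diagonal of D_aa)

K_uu = K_el + Bm.T @ (E[:, None] * Bm)   # stiffness with all dashpots blocked
K_ua = -Bm.T * E                         # coupling block, shape (n_u, n_a)
K_au = K_ua.T

def k_schur(Omega):
    """K_uu - K_ua (K_aa + i Omega D_aa)^{-1} K_au using elementwise division."""
    return K_uu - (K_ua / (E + 1j * Omega * eta)) @ K_au

Omega = 2.5
F = rng.standard_normal(n_u)

# Condensed solve: only displacement DOFs remain.
Z = -Omega**2 * M_uu + 1j * Omega * D_uu + k_schur(Omega)
U_schur = np.linalg.solve(Z, F)

# Reference: full coupled system with displacements and internal states.
Z_full = np.block([[-Omega**2 * M_uu + 1j * Omega * D_uu + K_uu, K_ua],
                   [K_au, np.diag(E + 1j * Omega * eta)]])
U_full = np.linalg.solve(Z_full, np.concatenate([F, np.zeros(n_a)]))[:n_u]
```

In this toy model `k_schur(0.0)` returns the long-time elastic stiffness `K_el`, whereas `K_uu` corresponds to blocked dashpots, which mirrors the two modal bases compared in the following subsection.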

3.3.1 Modal Reduction

The Schur complement is a good starting point to reduce the degrees of freedom of the equations of motion, but we want to go further. One idea is to apply a modal reduction after the application of the Schur complement. One can use the eigenvectors of the eigenvalue problem

and stack these modes into a reduction basis such that

The reduced system can then be expressed as

However, we will see that these modes are not a good choice for the reduction. The reason is that these modes are the modes of an elastic system where all dampers of the Maxwell elements are blocked (\({\boldsymbol{\alpha }}=\boldsymbol{0}\)). This results in a system that is too stiff and leads to eigenfrequencies that are too high in the reduced system. A better approach is to use the eigenmodes of the elastic system where no Maxwell element is active, i.e. only the long-time elastic behavior is considered (\(\dot{{\boldsymbol{\alpha }}}= \boldsymbol{0}\)). These modes are computed by solving the eigenproblem

An extension to this idea is the augmentation by the static response to loads that are generated by the imaginary part of the stiffness matrix when the structure is deformed according to a mode [17, 18]. The reduction basis then reads

with \(\textbf{K}_\infty = \textbf{K}_{\textrm{schur}}(\textrm{i} 0)\).

3.4 Numerical Example: Plate with Acoustic Black Hole and Visco-Elastic Constrained Layer Damping

The proposed methods are illustrated by a model of an aluminium plate that contains a so-called acoustic black hole (ABH). ABHs are regions where the plate thickness is decreased with a special shape function. According to the theory, bending waves traveling through the plate slow down when the plate thickness decreases. Therefore, the waves stay longer in regions with decreased thickness and can be damped more effectively in these regions.

The proposed model, that is taken from [19], is depicted in Fig. 5. It consists of an aluminium plate with a circular ABH and a constrained layer damper treatment placed in the ABH region. The constrained layer damper treatment consists of a viscoelastic rubber-like layer and a constrained layer made of CFRP. The Finite Element model has 14,769 displacement degrees of freedom \(\textbf{u}\) and 14,280 internal states \({\boldsymbol{\alpha }}\).

Figure 6 shows a part of the frequency response function evaluated at a point on the plate for different reduction methods. When modes \(\phi ^0\) are used for reduction, one can see that the solution is similar to a full solution where viscoelasticity is neglected, i.e. the system \(\{ \textbf{M}_{uu}, \textbf{D}_{uu}, \textbf{K}_{uu} \}\). The reason is that the modes are computed for a very stiff constrained layer damper (all dashpots of Maxwell elements are blocked) and they do not activate the viscoelastic layer. A modal reduction with the modes \(\phi ^\infty \) gives better results. But modes computed with \(\phi ^\infty \) and the augmentation according to Eq. 58 gives best results. However, these perform worse in the higher frequency domain if the dimension of the reduction basis is equal for all methods (this is not illustrated in the Figure). Due to the augmentation only half of the modes \(\phi ^\infty \) can be used to keep the same dimension. The moment matching approach is not able to approximate the frequency response fir a comparable reduced dimension of 50. However, moment matching can approximate the FRF very well if a dimension of about 300 is used which is also shown in [19].

Fig. 6 Frequency response function computed with different reduced order models compared to the full order model. The Schur complement gives exact results. Modal reduction with the modes \(\phi ^0\) approximates a full solution in which viscoelasticity is neglected. The reduced order model with \(\phi ^\infty \) gives good results, while the augmentation according to Eq. (58) gives the best accuracy for reduction bases of dimension 50. The moment matching approach needs a higher number of basis vectors to achieve good accuracy
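Why a Schur complement reproduces the full solution exactly can be seen on a small block system. The sketch below assumes a generic frequency-domain form in which the displacements \(\textbf{u}\) and the internal states \({\boldsymbol{\alpha }}\) are coupled through a block `K_ua` and the internal states follow first-order dynamics; this structure, the random toy matrices and all function names are assumptions for illustration and are not the chapter's equations of motion.

```python
import numpy as np

rng = np.random.default_rng(0)
n_u, n_a = 6, 4  # toy sizes only


def random_spd(n):
    """Random symmetric positive definite matrix used as toy data."""
    A = rng.standard_normal((n, n))
    return A @ A.T + n * np.eye(n)


# Toy blocks; the coupling K_ua and the first-order form of the
# internal-state equation are assumptions made for this illustration.
M_uu, D_uu, K_uu = np.eye(n_u), 0.01 * random_spd(n_u), random_spd(n_u)
K_ua = 0.1 * rng.standard_normal((n_u, n_a))
K_aa, D_aa = random_spd(n_a), 0.1 * random_spd(n_a)
f = rng.standard_normal(n_u)


def dynamic_blocks(omega):
    """Frequency-domain blocks acting on displacements and internal states."""
    Z_uu = K_uu + 1j * omega * D_uu - omega**2 * M_uu
    Z_aa = K_aa + 1j * omega * D_aa
    return Z_uu, Z_aa


def solve_coupled(omega):
    """Solve for [u, alpha] together and return the displacement part u."""
    Z_uu, Z_aa = dynamic_blocks(omega)
    Z = np.block([[Z_uu, K_ua], [K_ua.T, Z_aa]])
    rhs = np.concatenate([f, np.zeros(n_a)])
    return np.linalg.solve(Z, rhs)[:n_u]


def solve_condensed(omega):
    """Eliminate alpha with the Schur complement, then solve for u only."""
    Z_uu, Z_aa = dynamic_blocks(omega)
    S = Z_uu - K_ua @ np.linalg.solve(Z_aa, K_ua.T)
    return np.linalg.solve(S, f)


for omega in (0.5, 2.0, 5.0):
    diff = np.linalg.norm(solve_coupled(omega) - solve_condensed(omega))
    print(f"omega = {omega}: |u_coupled - u_condensed| = {diff:.1e}")
```

The condensation is an exact block elimination, so no approximation error is introduced. The price is that the condensed matrix depends on the frequency and has to be formed anew for every evaluation point.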

4 Conclusion and Outlook

Providing accurate simulation models to support design decisions in early product development phases is a challenge. In particular, models that contain viscoelastic materials or undergo large deformations can lead to long computation times. It is desirable to reduce these times in order to accelerate simulations in the concept phase. A promising way to achieve this goal is model reduction.

We have shown different methods to reduce Finite Element models of mechanical structures undergoing large deformations. The challenge here is to find reduction bases that capture nonlinear effects and parametric dependencies. Furthermore, hyperreduction must be applied to accelerate the evaluation of the nonlinear restoring force term. A cantilever beam case study illustrates the potential of the methods. In addition, we have described how viscoelasticity is modeled in Finite Element models. The equations of motion contain many internal states, which act as additional system states and can increase the system dimension drastically. Several reduction bases are proposed to reduce these models. A case study on a plate with an acoustic black hole illustrates their performance. Further research is necessary to apply the parametric methods developed for the geometrically nonlinear reduced order models to viscoelastic systems as well. Hyperreduction methods are also a potential candidate for reducing the cost of evaluating the internal states in viscoelastic models.

References

Géradin, M., Rixen, D.J.: Mechanical Vibrations: Theory and Application to Structural Dynamics. John Wiley & Sons (2014)

Benner, P., et al.: Model Order Reduction. Applications, vol. 3. De Gruyter (2021)

Slaats, P., de Jongh, J., Sauren, A.: Model reduction tools for nonlinear structural dynamics. Int. J. Solids Struct. 38(10), 2131–2147 (2001)

Lerch, C., Meyer, C.H.: Modellordnungsreduktion für parametrische nichtlineare mechanische Systeme mittels erweiterter simulationsfreier Basen und Hyperreduktion–6. In: Lohmann, B., Roppenecker, G. (eds.) Methoden und Anwendungen der Regelungstechnik, pp. 67–86. Shaker Verlag, 1st edn. (2017)

Lohmann, B., Bechtold, T., Eberhard, P., Fehr, J., Rixen, D.J., Cruz Varona, M., Lerch, C., Yuan, C.D., Rudnyi, E.B., Fröhlich, B., Holzwarth, P., Grunert, D., Meyer, C.H., Rutzmoser, J.B.: Model Order Reduction in Mechanical Engineering. Model Order Reduction–Applications, De Gruyter, vol. 3, pp. 33–74 (2020)

Farhat, C., Chapman, T., Avery, P.: Structure-preserving, stability, and accuracy properties of the energy-conserving sampling and weighting method for the hyper reduction of nonlinear finite element dynamic models. Int. J. Numeric. Methods Eng. 102(5), 1077–1110 (2015)

Rutzmoser, J.B., Rixen, D.J.: A lean and efficient snapshot generation technique for the Hyper-Reduction of nonlinear structural dynamics. Comput. Methods Appl. Mech. Eng. 325, 330–349 (2017)

Meyer, C.H., Lerch, C., Karamooz Mahdiabadi, M., Rixen, D.: Efficient basis updating for parametric nonlinear model order reduction. PAMM 18(1) (2018)

Meyer, C.H., Rixen, D.: Global proper orthogonal decomposition for parametric model reduction of geometrically nonlinear structures. PAMM 19(1) (2019)

Rothe, M.: Parametrische Basen für nichtlineare Modellreduktion von mechanischen Finite Elemente Modellen. Technische Universität München, Bachelorarbeit (2018)

Meyer, C.H., Rixen, D.J.: Simulation-free reduction basis interpolation to reduce parametrized dynamic models of geometrically non-linear structures. In: Kerschen, G., Brake, M.R., Renson, L. (eds.) Nonlinear Structures & Systems. Conference Proceedings of the Society for Experimental Mechanics Series, vol. 1. Springer, Cham (2021)

Meyer, C.H., Rixen, D.J.: Simulation-lean training-sets for hyper-reduction of parametric geometric non-linear structures. In: Proceedings of the International Conference on Structural Dynamics, EURODYN, vol. 1, pp. 80–92. Athens, Greece (2020)

Rust, W.: Nichtlineare Finite-Elemente-Berechnungen: Kontakt, Kinematik, Material, p. 156. Springer (2016)

Wriggers, P.: Nichtlineare Finite-Element-Methoden, pp. 66–67. Springer (2001)

Salimbahrami, B., Lohmann, B.: Order reduction of large scale second-order systems using Krylov subspace methods. Linear Algebra Appl. 415, 385–405 (2006)

Lutowska, A.: Model order reduction for coupled systems using low-rank approximations. Technische Universiteit Eindhoven (2012)

Rouleau, L., Deü, J.-F., Legay, A.: A comparison of model reduction techniques based on modal projection for structures with frequency-dependent damping. Mech. Syst. Signal Process. 90, 110–125 (2017)

Plouin, A., Balmès, E.: Steel/viscoelastic/steel sandwich shells computational methods and experimental validations. In: International Modal Analysis Conference, pp. 384–390 (2000)

Meyer, C.H., Lerch, C., Rothe, S., Hoffmann, S., Langer, S.C., Lohmann, B., Rixen, D.J.: Study on model reduction for position optimization of acoustic black holes. In: PAMM 21 (2021). (Submitted for publication)

Acknowledgements

Funding: This research was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—314987946.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Lerch, C., Meyer, C., Rixen, D.J., Lohmann, B. (2024). Simulation-Free Model Reduction Approaches for Geometric-Nonlinear and Linear-Visco-Elastic Mechanical Systems. In: Eberhard, P. (eds) Calm, Smooth and Smart. Lecture Notes in Applied and Computational Mechanics, vol 102. Springer, Cham. https://doi.org/10.1007/978-3-031-36143-2_10

DOI: https://doi.org/10.1007/978-3-031-36143-2_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-36142-5

Online ISBN: 978-3-031-36143-2

eBook Packages: Engineering, Engineering (R0)