Abstract

This paper describes an ongoing study that investigates how the haptic channel can be effectively exploited by visually impaired users in a mobile app for the preliminary exploration of an indoor environment, namely a shopping mall. Our goal is to use haptics to convey knowledge of how points of interest (POIs) are distributed within the physical space while also providing information about the function of each POI, so that users can perceive how functional areas are distributed in the environment “at a glance”. Shopping malls are typical indoor environments in which orientation aids are highly appreciated by customers and many different functional areas coexist. We identified seven typical categories of POIs that can be encountered in a mall and associated a different vibration pattern with each. To validate our approach, we designed and developed an Android-based prototype for preliminary testing. The prototype was periodically debugged with the help of two experienced visually impaired users, who gave us valuable advice throughout the development process. We describe how the app was conceived, the issues that emerged during its development and the positive outcomes of a very early testing stage. Finally, we show that the proposed approach is promising and worthy of further investigation.



Keywords

- Haptic feedback

- User interfaces

- Accessibility

- Mobile applications

- Orientation and mobility

- Indoor exploration

- Cognitive maps

1 Introduction

Mobile navigation applications have grown increasingly popular in recent years and are now considered essential utilities on every smartphone. Positioning services such as those provided by Google Maps [1] or OpenStreetMap [2] are widely adopted by developers to build applications for the Internet of Things (IoT) and Smart City environments. Navigation apps have proven to be effective assistive solutions for persons with visual impairments, helping them achieve greater social inclusion and autonomy [3]. While visiting an indoor or outdoor environment, visually impaired users can rely on a navigation app for real-time information about their current position, route planning and accessibility warnings, so that they can reach a specific place in the safest and most effective way. An overview of indoor navigation aids for persons with visual impairments is provided in [13].

In this paper, we investigate digital maps meant to provide visually impaired users with a “mental overview” of an environment before they physically access it. A typical use case is planning a visit to a mall to make a series of purchases. A shopping mall is a very complex building in which it is important to distinguish specific functional areas, such as ATMs, information centers, bars and, of course, the different types of shops. Malls nowadays usually provide visitors with digital maps, through their website or a dedicated mobile app; however, as far as we know, these aids are not accessible to visually impaired users.

A cognitive (or mental) map is a representation of the spatial environment that the human brain builds as a memory aid to support future actions, and it is leveraged during spatial navigation. A neurological insight into this process is provided by Epstein et al. [4], who highlight that cognitive mapping involves three basic elements: spatial coding, landmark anchoring and route planning. An effective cognitive map allows subjects to localize and orient themselves in space in relation to landmarks and to plan a route to a given point in the environment. Building a cognitive map thus implies developing the skills to understand the structure and function of an environment and to describe its organization and relations with other physical spaces [3]. Since it is a process of gaining awareness of a physical environment, it is useful as a training phase before accessing an unfamiliar place. An app that helps form a mental map, therefore, should not be understood as a tool that guides the user step by step in real time, but rather as a tool to be used in advance to facilitate a visit and plan an itinerary through a complex environment.

Hardcopy tactile maps are commonly used to support the development of cognitive maps in visually impaired users [5, 6]. However, the haptic channel has been shown to be as effective as tactile feedback [7], especially when combined with the audio channel [5].

Traditionally, haptics has been used to provide information about dangerous spots, obstacles, crossroads and other physical barriers [3]. Several studies on wearable systems use the haptic channel to convey movement instructions. Bharadwaj et al. [8] investigated the use of a hip-worn vibrating belt to convey turn signals while walking along an unknown route, and their findings suggest that haptics is an effective notification channel for navigation aids in noisy environments.

Papadopoulos et al. [5] investigated how different modalities of map exploration affect the spatial knowledge acquired by users. Three modalities were considered: a verbal description of the physical environment, an audio-tactile map rendered via a touchpad, and an audio-haptic map in which haptic feedback was provided through a commercial portable haptic device. During exploration, different feedback was associated with streets, POIs and dangerous locations to help users distinguish among them, and a soundscape was associated with intersections. The results highlight how tactile and haptic feedback, combined with the audio channel, can improve the mental representation of physical space compared to systems that rely on audio feedback alone.

As tablets and smartphones have become increasingly powerful and versatile, several studies have explored the possibility of using digital counterparts of tactile maps, obtained by exploiting mobile devices’ haptic, audio and text-to-speech capabilities and taking advantage of the assistive technologies provided by the mobile devices’ operating systems.

Palani et al. [9] developed an experimental digital map, based on the haptic and audio channels, for forming a cognitive spatial representation. The authors performed a series of tests with sighted and visually impaired users to assess the validity of the digital map with respect to traditional mapping methods (visual and hardcopy-vibration). Their study shows that a digital vibro-audio map provides performance equivalent to that of traditional mapping methods for both categories of users. Analogous results were achieved in [10], where the authors investigated the learning and wayfinding performance of visually impaired users equipped with digital maps for the preliminary exploration of unknown indoor environments. In both studies, vibrations are used to help users acquire knowledge of the physical space, but little or no attention is paid to conveying information about POIs.

The use of vibration patterns to convey information through the haptic channel was explored in [11] to delimit logical partitions of the screen with different functions, while in [12] González-Cañete et al. showed that associating different vibration patterns with haptic icons improves both the learning of the icons’ locations on a touchscreen and the icon recognition rate, for sighted and visually impaired users alike.

Our study investigates the potential of vibration patterns to convey information about the position and function of a POI, thus speeding up the learning of a cognitive map. The novelty of our approach lies in using the haptic channel to provide not only spatial cognition but also an overview of the functional areas of the environment. This paper describes the preliminary phase of our study, in which an Android application for testing purposes was designed and developed with the help of two visually impaired users. Our reference use case was “planning a visit to an unknown mall”; however, the approach can be generalized to any indoor environment or even to outdoor ones.

2 The Test Application

Our test application provides users with a simple audio-vibration map of a shopping mall. We identified seven functional categories of POIs that are typical of the target environment and associated each category with a different vibration pattern.

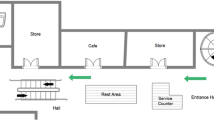

The map consists of two overlapping layers, i.e. two PNG images, one of which is hidden from the end user. The hidden layer is responsible for haptic and audio rendering, while the other is used for visualization: it is a black-and-white representation of the environment containing the POIs and their descriptive labels (i.e. short descriptions). The hidden layer is made up of a set of colored areas, each corresponding to a POI, as shown in Fig. 1.
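Because the hidden layer is never drawn, a touch point must first be translated from view coordinates into pixel coordinates of the hidden PNG before its color can be read. The platform-neutral Python sketch below rests on assumptions not stated in the paper: both layers have identical pixel dimensions and are stretched over the same view rectangle, and `LayerGeometry` is a hypothetical name. On Android, the color at the resulting pixel could then be read with `Bitmap.getPixel(x, y)`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LayerGeometry:
    """Hypothetical description of how the two map layers are drawn.

    Assumption: both PNG layers share the same pixel size and are stretched
    over the same on-screen rectangle, so one transform serves both layers.
    """
    view_width: float   # on-screen width of the map view (screen pixels)
    view_height: float  # on-screen height of the map view (screen pixels)
    image_width: int    # width of each PNG layer (image pixels)
    image_height: int   # height of each PNG layer (image pixels)

    def to_image_coords(self, touch_x: float, touch_y: float) -> Optional[Tuple[int, int]]:
        """Map a touch point on the view to a pixel of the hidden layer.

        Returns None when the touch falls outside the map view.
        """
        if not (0 <= touch_x < self.view_width and 0 <= touch_y < self.view_height):
            return None
        # Scale view coordinates to image coordinates; clamp to guard against
        # floating-point rounding at the right and bottom edges.
        px = min(int(touch_x * self.image_width / self.view_width), self.image_width - 1)
        py = min(int(touch_y * self.image_height / self.view_height), self.image_height - 1)
        return px, py


geometry = LayerGeometry(view_width=1080, view_height=1920, image_width=540, image_height=960)
print(geometry.to_image_coords(100, 200))  # (50, 100)
```

Keeping the hidden layer at a lower resolution than the screen, as in this example, would be harmless as long as the colored POI areas are large compared with one image pixel.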

We adopted RGB color encoding to identify POIs and their categories. The color scheme of our application was defined so that each POI category corresponds to a fixed pair of Red and Green levels, while the Blue component identifies each individual POI and is associated with a descriptive label, e.g. the name of a shop. Since the Blue component takes 256 values (0–255), each category can include up to 256 different POIs.
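The encoding can be checked with simple arithmetic: with 8-bit channels, the Red and Green levels give 256 × 256 = 65,536 possible category codes, far more than the seven categories needed, and the Blue level gives 256 POIs per category. The sketch below decodes a packed 0xAARRGGBB pixel, the format returned by Android's `Bitmap.getPixel`. The (Red, Green) pairs, category names and labels are hypothetical, since the paper does not list the actual color scheme.

```python
from typing import Dict, Optional, Tuple

# Hypothetical category table: each POI category owns a fixed (Red, Green)
# pair. The pairs actually used by the app are not reported in the paper.
# (0, 0) is left unused so that a black or transparent background never
# matches a category.
CATEGORY_BY_RED_GREEN: Dict[Tuple[int, int], str] = {
    (200, 0): "shop",
    (0, 200): "atm",
    (200, 200): "stairs",
}

# Hypothetical descriptive labels keyed by (category, Blue level).
LABELS: Dict[Tuple[str, int], str] = {
    ("shop", 5): "Bookshop",
    ("stairs", 0): "Stairs to the first floor",
}


def decode_pixel(argb: int) -> Optional[Tuple[str, int]]:
    """Split a packed 0xAARRGGBB pixel into (category, Blue level).

    Also works for the negative signed ints a Java/Kotlin getPixel call
    returns, because Python's & on negative ints uses two's-complement bits.
    Returns None when the (Red, Green) pair does not denote a POI category.
    """
    red = (argb >> 16) & 0xFF
    green = (argb >> 8) & 0xFF
    blue = argb & 0xFF
    category = CATEGORY_BY_RED_GREEN.get((red, green))
    if category is None:
        return None
    return category, blue


poi = decode_pixel(0xFFC80005)  # Red 0xC8 = 200, Green 0, Blue 5
print(poi, LABELS.get(poi))     # ('shop', 5) Bookshop
```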

Figure 2 shows a code snippet of the application in which POI categories and the related vibration patterns are declared. As required by Android, vibration patterns are encoded as arrays of long integers, which are passed as arguments to the functions of the operating system’s vibration service. Table 1 describes the patterns associated with the different categories in an intuitive form: a dash denotes a 100 ms pause, and the terms “short”, “long” and “longer” denote 100, 200 and 300 ms vibrations, respectively.
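The Table 1 notation maps mechanically onto Android's timing arrays, which alternate off and on durations starting with an initial off delay; such an array can be passed to `VibrationEffect.createWaveform(long[] timings, int repeat)` with `repeat = -1` for a single playback. The sketch below performs that conversion under the assumption that tokens are written space-separated; the example strings are hypothetical, because Table 1 itself is not reproduced here, and this is not the code shown in Figure 2.

```python
from typing import List

# Durations taken from the description of Table 1 (milliseconds).
VIBRATION_MS = {"short": 100, "long": 200, "longer": 300}
PAUSE_MS = 100  # one dash ("-") in the Table 1 notation


def to_timings(notation: str) -> List[int]:
    """Convert a space-separated pattern such as "short - long" into an
    Android-style timings array [delay, on, off, on, off, ...] in ms.

    Index 0 is the delay before the first vibration; odd indices are
    vibration ("on") durations and even indices are pause ("off") durations.
    """
    timings = [0]  # no initial delay unless the pattern starts with a dash
    for token in notation.split():
        last_slot_is_off = len(timings) % 2 == 1
        if token == "-":
            if last_slot_is_off:
                timings[-1] += PAUSE_MS  # consecutive dashes lengthen the pause
            else:
                timings.append(PAUSE_MS)
        elif token in VIBRATION_MS:
            if not last_slot_is_off:
                raise ValueError("vibrations must be separated by at least one '-'")
            timings.append(VIBRATION_MS[token])
        else:
            raise ValueError(f"unknown token: {token!r}")
    return timings


print(to_timings("short - long"))  # [0, 100, 100, 200]
```

Rejecting two adjacent vibrations without a dash is a design choice: on the device they would merge into one longer buzz, which would likely be confused with a different pattern.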

As users swipe a finger across the smartphone screen, the app checks the color of the hidden layer at the underlying coordinates. Whenever the Red and Green components correspond to a POI category, the matching vibration pattern is triggered; if the user then lifts their finger, the Blue component is used to retrieve the matching descriptive label, which is sent to the text-to-speech (TTS) engine to be announced.
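The swipe-to-feel, lift-to-hear interaction can be sketched as a small controller with one handler for finger movement and one for finger lift, which on Android would correspond to `MotionEvent.ACTION_MOVE` and `MotionEvent.ACTION_UP`. In this hypothetical Python model, `lookup`, `vibrate` and `speak` are injected stand-ins for the hidden-layer color lookup, the vibration service and the TTS engine. Vibrating only when the finger enters a new POI area is our assumption; the paper does not say how repeated move events inside one area are handled.

```python
from typing import Callable, Dict, Optional, Tuple

Poi = Tuple[str, int]  # (category, Blue level)


class MapExplorer:
    """Hypothetical controller for the swipe-to-feel, lift-to-hear interaction.

    `lookup(x, y)` returns the POI under a touch point or None; `vibrate`
    and `speak` stand in for the platform vibration and TTS services.
    """

    def __init__(self,
                 lookup: Callable[[int, int], Optional[Poi]],
                 labels: Dict[Poi, str],
                 vibrate: Callable[[str], None],
                 speak: Callable[[str], None]) -> None:
        self._lookup = lookup
        self._labels = labels
        self._vibrate = vibrate
        self._speak = speak
        self._current: Optional[Poi] = None  # POI currently under the finger

    def on_move(self, x: int, y: int) -> None:
        poi = self._lookup(x, y)
        # Assumption: vibrate once when the finger enters a POI area, rather
        # than on every move event received while it stays inside that area.
        if poi is not None and poi != self._current:
            self._vibrate(poi[0])  # the pattern depends only on the category
        self._current = poi

    def on_up(self, x: int, y: int) -> None:
        poi = self._lookup(x, y)
        self._current = None
        # Lifting the finger over a POI announces its descriptive label.
        if poi is not None and poi in self._labels:
            self._speak(self._labels[poi])
```

Injecting the platform services as callables keeps the interaction logic testable without a device, e.g. by passing `list.append` to record what would have been vibrated or spoken.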

We strove to design vibration patterns that make POI categories as distinguishable as possible while keeping intrusiveness low. Preliminary user tests showed that, after an initial training phase, POIs were successfully recognized through the associated vibrations; however, a concern arose that the cognitive load might become too heavy in certain conditions (e.g. in narrow areas containing many different kinds of POIs) or for certain categories of users, such as the elderly. We therefore introduced a filtering function that lets users choose which POI categories they want to be notified of. Figure 3 shows a screenshot of the filtering function.
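A per-category filter only needs a set of enabled categories that the exploration loop consults before vibrating or announcing. The hypothetical `CategoryFilter` below reproduces the behavior described above when `always_on` is empty; a non-empty `always_on` models the users' later request, reported in Sect. 2.1, that safety-related categories such as stairs can never be hidden. Category names are illustrative only.

```python
from typing import FrozenSet, Iterable, Set


class CategoryFilter:
    """Sketch of a per-category notification filter.

    With the default empty `always_on`, every category can be switched off.
    Passing a non-empty set models the request that safety-related
    categories (e.g. stairs) can never be hidden.
    """

    def __init__(self, categories: Iterable[str],
                 always_on: Iterable[str] = ()) -> None:
        self._all: FrozenSet[str] = frozenset(categories)
        self._always_on: FrozenSet[str] = frozenset(always_on) & self._all
        self._enabled: Set[str] = set(self._all)  # all categories on by default

    def set_enabled(self, category: str, enabled: bool) -> bool:
        """Enable or disable a category; return False if the change is refused."""
        if category not in self._all:
            raise KeyError(category)
        if not enabled and category in self._always_on:
            return False  # safety-related categories stay visible
        if enabled:
            self._enabled.add(category)
        else:
            self._enabled.discard(category)
        return True

    def allows(self, category: str) -> bool:
        """True if hovering a POI of this category should produce feedback."""
        return category in self._enabled
```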

Users were asked to evaluate three alternative feedback modalities: audio only, vibration only, and audio and vibration. Table 2 compares the behavior of the app when a POI is hovered over in each of the three modalities. In every modality, lifting the finger over a POI triggers the announcement of the POI’s short description.

Figure 4 shows a screenshot of the app, with the three buttons for switching between modalities in the upper right corner and the button for filtering POI categories at the bottom. To enable visually impaired users to switch easily between interaction modalities without the aid of a screen reader, we associated a “toggle mode” function with the double-tap gesture. For this preliminary version of the app, we did not consider Android’s default screen reader (TalkBack), since known bugs posed development issues that went beyond the scope of our study.
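The double-tap “toggle mode” function can be modeled as a cycle over the three modalities, advanced whenever two taps arrive close enough in time. On Android, the timing would normally be delegated to `GestureDetector` and its `OnDoubleTapListener.onDoubleTap(MotionEvent)` callback; the hand-rolled detector below only makes the logic explicit. The cycling order and the 300 ms threshold are hypothetical, and the per-mode behavior summarized in Table 2 is not modeled.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    VIBRATION = "vibration only"
    AUDIO = "audio only"
    AUDIO_AND_VIBRATION = "audio and vibration"


CYCLE = [Mode.VIBRATION, Mode.AUDIO, Mode.AUDIO_AND_VIBRATION]  # hypothetical order


class ModeToggler:
    """Cycle through interaction modes on each double tap.

    `double_tap_ms` is a hypothetical threshold: two taps no more than this
    many milliseconds apart count as a double tap.
    """

    def __init__(self, double_tap_ms: int = 300, start: Mode = Mode.VIBRATION) -> None:
        self.mode = start
        self._window = double_tap_ms
        self._last_tap: Optional[int] = None

    def on_tap(self, t_ms: int) -> bool:
        """Register a tap at time t_ms; return True if it completed a double tap."""
        if self._last_tap is not None and t_ms - self._last_tap <= self._window:
            self._last_tap = None  # a third tap starts a new pair
            self.mode = CYCLE[(CYCLE.index(self.mode) + 1) % len(CYCLE)]
            return True
        self._last_tap = t_ms
        return False
```

After a mode change, the app would presumably announce the new mode, since a visually impaired user cannot see which button is highlighted.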

Due to the COVID-19 pandemic, we were not able to carry out extensive structured tests at the time of writing, and the sessions for preliminary evaluation and debugging were held remotely via Skype instead of in person as planned. Two experienced visually impaired users (i.e. users accustomed to using a smartphone as a daily aid to their independence) installed the app on their devices; unfortunately, remote testing made it impossible to involve users who were less skilled in using a smartphone. Users were asked to explore the same map of the ground floor of a shopping mall, unknown to both of them, interacting with the app in each of the three modalities.

2.1 Preliminary Evaluation

The app was developed on a HUAWEI MediaPad T5 tablet with a 10.1″ display, running Android 8.0. Accessibility and usability debugging sessions were held remotely on a TCL 20S and a Google Pixel 3, both running Android 11, with 6.67″ and 6.3″ displays, respectively.

Overall, we found that, by exploring the map through the application, users were able to get an idea of the arrangement of the POIs and had no difficulty recalling the location of specific POIs, as well as the total number of shops, entrances and stairs. They also successfully found a given shop on the map. The map was correctly rendered on their devices and the vibration patterns were correctly perceived, whereas problems were occasionally noticed with the TTS hints: our users reported that audio hints were often missing, so they had to explore the area surrounding a POI two or three times before getting an audio response. This behavior was probably due to differences between TTS engines, but we could not reproduce it, so further investigation is needed.

The “audio and vibration” mode was considered “overwhelming”. Users stated that they would rather not use it as the “standard” interaction modality, but only in a preliminary phase, while the correspondences between vibration patterns and POI categories are being learnt. As for audio feedback, users suggested that it may be worth trying less intrusive, non-verbal “audio icons” associated with POI categories, and that the stereo audio channels could be exploited to play such icons so as to indicate the direction of the related POIs. The POI category filter was also highly appreciated; however, users stressed that POIs related to obstacles or potentially dangerous structural elements (such as stairs) should always be shown on the map, and that users should not be able to filter them out.
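One way to realize the suggested stereo audio icons is standard equal-power panning, a technique we swap in here for illustration since the paper does not specify one. The sketch assumes that “direction” means the horizontal offset of the POI from a reference point (for instance the finger), normalized by a hypothetical `half_width`; the resulting gains could be passed as the left and right volumes of Android's `SoundPool.play(soundID, leftVolume, rightVolume, priority, loop, rate)`.

```python
import math
from typing import Tuple


def pan_gains(poi_x: float, ref_x: float, half_width: float) -> Tuple[float, float]:
    """Equal-power (left, right) gains for an audio icon.

    The horizontal offset of the POI from the reference point is normalized
    to a pan value in [-1, 1] (-1 = fully left, +1 = fully right), then mapped
    onto a quarter circle so that left**2 + right**2 == 1 at every position.
    """
    pan = max(-1.0, min(1.0, (poi_x - ref_x) / half_width))
    angle = (pan + 1.0) * math.pi / 4.0  # 0 (fully left) .. pi/2 (fully right)
    return math.cos(angle), math.sin(angle)
```

Equal-power rather than linear panning keeps the perceived loudness roughly constant as an icon moves across the stereo field, so loudness does not get mistaken for distance.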

Finally, a “zoom and pagination” feature was requested for a later version of the app, since vibration hints related to nearby POIs in narrow areas occasionally generated confusion and required repeated explorations. For the map under test, this had a fairly limited impact, but for more complex maps, e.g. of urban environments, a zoom function that could also divide the space into separately navigable areas was considered very helpful.
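A possible shape for the requested feature, not yet implemented in the app, is to split the hidden layer into a grid of pages, each filling the whole view, so that nearby POIs are spread over a larger area of the screen. In the hypothetical `PagedMap` below, a page of a `cols` × `rows` grid is magnified `cols` times horizontally and `rows` times vertically, and touches on the current page are mapped back to pixels of the full hidden layer so that the same color lookup can be reused.

```python
from typing import Tuple


class PagedMap:
    """Hypothetical model of the requested "zoom and pagination" feature.

    The hidden layer is split into a cols x rows grid of pages; one page at a
    time fills the view, i.e. it is magnified cols times horizontally and
    rows times vertically compared with the whole-map view.
    """

    def __init__(self, image_w: int, image_h: int, cols: int, rows: int,
                 view_w: float, view_h: float) -> None:
        self.image_w, self.image_h = image_w, image_h
        self.cols, self.rows = cols, rows
        self.tile_w = image_w / cols  # page width in hidden-layer pixels
        self.tile_h = image_h / rows  # page height in hidden-layer pixels
        self.view_w, self.view_h = view_w, view_h
        self.col = self.row = 0  # page currently shown

    def move(self, dcol: int, drow: int) -> bool:
        """Switch to a neighboring page; return False at the map border."""
        col, row = self.col + dcol, self.row + drow
        if 0 <= col < self.cols and 0 <= row < self.rows:
            self.col, self.row = col, row
            return True
        return False

    def to_image_coords(self, touch_x: float, touch_y: float) -> Tuple[int, int]:
        """Map a touch on the current page to a pixel of the whole hidden layer."""
        x = (self.col + touch_x / self.view_w) * self.tile_w
        y = (self.row + touch_y / self.view_h) * self.tile_h
        return min(int(x), self.image_w - 1), min(int(y), self.image_h - 1)
```

Returning False at the border gives the app a natural hook for telling the user, by voice or a distinct vibration, that no further page exists in that direction.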

3 Open Issues and Future Work

Besides the bugs reported by our users, which are strictly platform-dependent, the major open issues we must address before releasing the app for testing to a wide audience are those related to the “zoom and pagination” feature. Integration with TalkBack, although not essential for evaluating the validity of our approach, will also be implemented to make it easier to run tests with a larger number of users.

Regarding the use of the haptic channel, further ad hoc trials will be carried out, focusing on specific aspects such as the maximum number of patterns that can be used at the same time and the most effective pause and vibration configurations. In particular, we will analyze correlations between the users’ demographic data and their preferred patterns. The need to hold the tests remotely prevented us from involving users less experienced with digital technologies; in upcoming tests, we will also involve this crucial category of users. Finally, the extension of the presented approach to outdoor maps provided by services such as OpenStreetMap is already the subject of an ongoing study.
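The design space for such trials can be estimated with simple counting. Using only the Table 1 vocabulary (three vibration lengths separated by a single 100 ms pause), there are 3^n sequences of n pulses, i.e. 3 + 9 + 27 = 39 candidates with up to three pulses before any constraint on duration. The sketch below enumerates the candidates that fit a maximum total duration; `max_total_ms` is a hypothetical intrusiveness budget, not a value from the study.

```python
from itertools import product
from typing import List, Tuple

VIBRATIONS = {"short": 100, "long": 200, "longer": 300}  # legend of Table 1 (ms)
PAUSE_MS = 100  # one dash between consecutive pulses


def candidate_patterns(max_pulses: int, max_total_ms: int) -> List[Tuple[str, ...]]:
    """List pulse sequences (single-dash pauses between pulses) whose total
    duration stays within max_total_ms, from shortest sequences to longest."""
    found = []
    for n in range(1, max_pulses + 1):
        for pulses in product(VIBRATIONS, repeat=n):  # iterates the dict's keys
            total = sum(VIBRATIONS[p] for p in pulses) + PAUSE_MS * (n - 1)
            if total <= max_total_ms:
                found.append(pulses)
    return found
```

For example, a 300 ms budget admits only the three single pulses plus “short - short”, which shows how quickly a tight intrusiveness limit shrinks the set of usable patterns.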

4 Conclusions

We have presented a novel approach that exploits the haptic channel to help users with visual impairments build mental representations of a physical space, focusing on mobile applications as a means of building cognitive maps. A mobile application for exploring a shopping mall was developed for testing purposes and evaluated by two visually impaired users. Vibration patterns were used to indicate the positions and functions of distinct areas in the shopping mall, together with more traditional audio cues, and different interaction modalities were compared. Overall, these early results suggest that our approach is sound and promising. Of course, more extensive testing is needed, involving many more users and more refined functionalities. To this end, we have highlighted the problems that are still open and how we intend to solve them. Finally, we have described possible ways to exploit the potential of our study in the near future.

References

[1] Google Maps website: https://developers.google.com/maps?hl=en

[2] OpenStreetMap website: https://www.openstreetmap.org/about

[3] Khan, A., Khusro, S.: An insight into smartphone-based assistive solutions for visually impaired and blind people: issues, challenges and opportunities. Univ. Access Inf. Soc. 20(2), 265–298 (2020). https://doi.org/10.1007/s10209-020-00733-8

[4] Epstein, R.A., Patai, E.Z., Julian, J.B., Spiers, H.J.: The cognitive map in humans: spatial navigation and beyond. Nat. Neurosci. 20, 1504–1513 (2017)

[5] Papadopoulos, K., Koustriava, E., Koukourikos, P., et al.: Comparison of three orientation and mobility aids for individuals with blindness: verbal description, audio-tactile map and audio-haptic map. Assist. Technol. 29, 1–7 (2017)

[6] Espinosa, M.A., Ochaita, E.: Using tactile maps to improve the practical spatial knowledge of adults who are blind. J. Vis. Impairment Blindness 92, 338–345 (1998)

[7] Saket, B., Prasojo, C., Huang, Y., Zhao, S.: Designing an effective vibration-based notification interface for mobile phones. In: Proceedings of the 2013 Conference on Computer Supported Cooperative Work (2013)

[8] Bharadwaj, A., Shaw, S., Goldreich, D.: Comparing tactile to auditory guidance for blind individuals. Front. Hum. Neurosci. 13 (2019)

[9] Palani, H.P., Fink, P.D., Giudice, N.A.: Comparing map learning between touchscreen-based visual and haptic displays: a behavioral evaluation with blind and sighted users. Multimodal Technol. Interact. 6(1) (2021)

[10] Giudice, N.A., Guenther, B.A., Jensen, N.A., Haase, K.N.: Cognitive mapping without vision: comparing wayfinding performance after learning from digital touchscreen-based multimodal maps vs. embossed tactile overlays. Front. Hum. Neurosci. 14 (2020)

[11] Buzzi, M., Buzzi, M., Leporini, B., Paratore, M.T.: Vibro-tactile enrichment improves blind user interaction with mobile touchscreens. In: Proceedings of the 14th IFIP Conference on Human-Computer Interaction, Cape Town, South Africa, 2–6 September 2013

[12] González-Cañete, F.J., López-Rodríguez, J.L., Galdón, P.M., Estrella, A.D.: Improving the screen exploration of smartphones using haptic icons for visually impaired users. Sensors 21 (2021)

[13] Ryu, H., Kim, T., Li, K.: Indoor navigation map for visually impaired people. ISA 2014 (2014)

Acknowledgments

This work was funded by the Italian Ministry of Research through the research projects of national interest (PRIN) TIGHT (Tactile InteGration for Humans and arTificial systems).


Copyright information

© 2023 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

About this paper

Cite this paper

Paratore, M.T., Leporini, B. (2023). Haptic-Based Cognitive Mapping to Support Shopping Malls Exploration. In: Pires, I.M., Zdravevski, E., Garcia, N.C. (eds) Smart Objects and Technologies for Social Goods. GOODTECHS 2022. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 476. Springer, Cham. https://doi.org/10.1007/978-3-031-28813-5_4


DOI: https://doi.org/10.1007/978-3-031-28813-5_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-28812-8

Online ISBN: 978-3-031-28813-5

eBook Packages: Computer Science, Computer Science (R0)