Abstract

In this note, we prove self-standardized central limit theorems (CLTs) for trimmed subordinated subordinators. We shall see that there are two ways to trim a subordinated subordinator. One way leads to CLTs for the usual trimmed subordinator and a second way to a closely related subordinated trimmed subordinator and CLTs for it.


1 Introduction

Ipsen et al. [3] and Mason [7] have proved under general conditions that a trimmed subordinator satisfies a self-standardized central limit theorem (CLT). One of their basic tools was a classic representation for subordinators (e.g., Rosiński [9]). Ipsen et al. [3] used conditional characteristic function methods to prove their CLT, whereas Mason [7] applied a powerful normal approximation result for standardized infinitely divisible random variables by Zaitsev [12]. In this note, we shall examine self-standardized CLTs for trimmed subordinated subordinators. It turns out that there are two ways to trim a subordinated subordinator. One way leads to CLTs for the usual trimmed subordinator treated in [3] and [7], and a second way to a closely related subordinated trimmed subordinator and CLTs for it.

We begin by describing our setup and establishing some basic notation. Let \(V=\left ( V\left ( t\right ) ,t\geq 0\right ) \) and \(X=\left ( X\left ( t\right ) ,t\geq 0\right ) \) be independent zero-drift subordinators with Lévy measures \(\Lambda _{V}\) and \(\Lambda _{X}\) on \(\mathbb {R}^{+}=\left ( 0,\infty \right ) \), respectively, with tail functions \(\overline {\Lambda }_{V}(x)=\Lambda _{V}((x,\infty ))\) and \(\overline {\Lambda }_{X}(x)=\Lambda _{X}((x,\infty ))\), defined for x > 0, satisfying

For u > 0, let \(\varphi _{V}(u)=\sup \{x:\overline {\Lambda }_{V}(x)>u\},\) where \(\sup \varnothing :=0\). In the same way, define \(\varphi _{X}\).
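
As a small numerical illustration of this definition, the following Python sketch evaluates the generalized inverse by bisection for a user-supplied nonincreasing tail function. The tail \(x\mapsto x^{-\alpha }/\Gamma (1-\alpha )\) used in the usage lines is an assumed example (the α-stable tail under the normalization \(E\exp (-\theta V(1))=\exp (-\theta ^{\alpha })\)), and the names `phi`, `stable_tail`, and `stable_phi_closed_form` are hypothetical helpers, not notation from the text.

```python
import math

def phi(tail, u, lo=1e-12, hi=1e12, iters=200):
    """Approximate sup{x > 0 : tail(x) > u} for a nonincreasing tail function.

    Returns 0.0 when no x in the search window satisfies tail(x) > u,
    matching the convention sup(empty set) = 0.
    """
    if tail(lo) <= u:          # the set {x : tail(x) > u} is empty on [lo, hi]
        return 0.0
    if tail(hi) > u:           # the supremum lies beyond the search window
        return hi
    for _ in range(iters):     # invariant: tail(lo) > u >= tail(hi)
        mid = math.sqrt(lo * hi)   # bisect on the logarithmic scale
        if tail(mid) > u:
            lo = mid
        else:
            hi = mid
    return lo

def stable_tail(alpha):
    """Assumed example tail: x -> x**(-alpha) / Gamma(1 - alpha)."""
    g = math.gamma(1.0 - alpha)
    return lambda x: x ** (-alpha) / g

def stable_phi_closed_form(alpha, u):
    """Closed-form inverse of the assumed tail: (u * Gamma(1 - alpha))**(-1/alpha)."""
    return (u * math.gamma(1.0 - alpha)) ** (-1.0 / alpha)

alpha, u = 0.5, 2.0
print(phi(stable_tail(alpha), u), stable_phi_closed_form(alpha, u))
```

For a tail that is bounded at zero, such as \(x\mapsto e^{-x}\), the routine returns 0 for every u at or above the total mass, which is the \(\sup \varnothing :=0\) convention at work.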

Remark 1

Observe that we always have

Moreover, whenever \(\overline {\Lambda }_{V}(0+)=\infty \), we have

For details, see Remark 1 of Mason [7]. The same statement holds for \(\varphi _{X}\).

Recall that the Lévy measure \(\Lambda _{V}\) of a subordinator V satisfies

The subordinator V has Laplace transform defined for t ≥ 0 by

where

which can be written after a change of variable to

In the same way, we define the Laplace transform of X.
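
For orientation, the standard Lévy–Khintchine form of the Laplace transform of a zero-drift subordinator can be sketched in the present notation as follows. The symbol \(\Phi _{V}\) for the Laplace exponent is a label chosen here and may differ from the notation of the original displays; the second equality is the change of variable \(x=\varphi _{V}(u)\).

$$\displaystyle \begin{aligned} E\exp \left( -\theta V\left( t\right) \right) &=\exp \left( -t\Phi _{V}\left( \theta \right) \right) ,\quad \theta \geq 0,\\ \Phi _{V}\left( \theta \right) &=\int _{0}^{\infty }\left( 1-e^{-\theta x}\right) \Lambda _{V}\left( dx\right) =\int _{0}^{\infty }\left( 1-e^{-\theta \varphi _{V}\left( u\right) }\right) du. \end{aligned}$$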

Consider the subordinated subordinator process

$$\displaystyle W=\left( W\left( t\right) :=V\left( X\left( t\right) \right) ,\ t\geq 0\right) . \tag{2}$$

Applying Theorem 30.1 and Theorem 30.4 of Sato [11], we get that the process W is a zero-drift subordinator with Lévy measure \(\Lambda _{W}\) defined for Borel subsets B of \(\left ( 0,\infty \right ) \) by

with Lévy tail function

Remark 2

Notice that (1) implies

To see this, we have by (3) that

Now \(\overline {\Lambda }_{V}\left ( 0+\right ) =\infty \) implies that for all y > 0, \(P\left \{ V\left ( y\right ) \in \left ( 0,\infty \right ) \right \} =1.\) Hence by the monotone convergence theorem,

For later use, we note that W has Laplace transform defined for t ≥ 0 by

where

Definition 30.2 of Sato [11] calls the transformation of V into W given by \(W\left ( t\right ) =V\left ( X\left ( t\right ) \right ) \) subordination by the subordinator X, which is sometimes called the directing process.
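
To make the subordination step concrete, here is a sketch of the formulas that Theorem 30.1 of Sato [11] yields when the directing process has zero drift, written in the notation of this section. It is a reading aid under two assumptions: that condition (1) is \(\overline {\Lambda }_{V}(0+)=\overline {\Lambda }_{X}(0+)=\infty \), and that \(\Phi _{V}\) and \(\Phi _{X}\) (labels introduced here) denote the Laplace exponents of V and X.

$$\displaystyle \begin{aligned} \Lambda _{W}\left( B\right) &=\int _{0}^{\infty }P\left\{ V\left( y\right) \in B\right\} \Lambda _{X}\left( dy\right) ,\\ \overline{\Lambda }_{W}\left( x\right) &=\int _{0}^{\infty }P\left\{ V\left( y\right) \in \left( x,\infty \right) \right\} \Lambda _{X}\left( dy\right) ,\\ \overline{\Lambda }_{W}\left( 0+\right) &=\int _{0}^{\infty }P\left\{ V\left( y\right) \in \left( 0,\infty \right) \right\} \Lambda _{X}\left( dy\right) =\overline{\Lambda }_{X}\left( 0+\right) =\infty ,\\ E\exp \left( -\theta W\left( t\right) \right) &=E\exp \left( -X\left( t\right) \Phi _{V}\left( \theta \right) \right) =\exp \left( -t\Phi _{X}\left( \Phi _{V}\left( \theta \right) \right) \right) . \end{aligned}$$

The third line uses monotone convergence together with \(P\left \{ V\left ( y\right ) \in \left ( 0,\infty \right ) \right \} =1\) for all y > 0, and the fourth line follows by conditioning on \(X\left ( t\right ) \).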

2 Two Methods of Trimming W

In order to talk about trimming W, we must first discuss the ordered jump sequences of V, X, and W. For any t > 0, denote by \(m_{V}^{\left ( 1\right ) }(t)\geq m_{V}^{\left ( 2\right ) }(t)\geq \cdots \) the ordered jump sequence of V on the interval \(\left [ 0,t\right ] \). Let \(\omega _{1},\omega _{2},\dots \) be i.i.d. exponential random variables with parameter 1, and for each n ≥ 1, let \(\Gamma _{n}=\omega _{1}+\dots +\omega _{n}\). It is well-known that for each t > 0,

and hence for each t > 0,

See, for instance, equation (1.3) in Ipsen et al. [3] and the references therein. It can also be inferred from a general representation for subordinators due to Rosiński [9].
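
The series representation can be explored numerically. The Python sketch below assumes that the representation takes the familiar form in which the r-th largest jump of V on \(\left [ 0,t\right ] \) is distributed as \(\varphi _{V}(\Gamma _{r}/t)\), jointly in r, and \(V(t)\) as the sum of these terms. It uses the assumed stable example \(\varphi (u)=(u\Gamma (1-\alpha ))^{-1/\alpha }\) and truncates the series at `n_terms`; all function names are hypothetical.

```python
import math
import random

def gamma_arrivals(n, rng):
    """Return [Gamma_1, ..., Gamma_n]: partial sums of i.i.d. Exp(1) variables."""
    out, total = [], 0.0
    for _ in range(n):
        total += rng.expovariate(1.0)
        out.append(total)
    return out

def stable_phi(alpha):
    """Assumed example: phi(u) = (u * Gamma(1 - alpha))**(-1/alpha)."""
    g = math.gamma(1.0 - alpha)
    return lambda u: (u * g) ** (-1.0 / alpha)

def ordered_jumps(phi, t, n_terms, rng):
    """Approximate the n_terms largest jumps on [0, t] as phi(Gamma_r / t)."""
    return [phi(g / t) for g in gamma_arrivals(n_terms, rng)]

def trimmed_sum(jumps, k):
    """Value of the truncated series with the k largest jumps removed."""
    return sum(jumps[k:])

rng = random.Random(0)
jumps = ordered_jumps(stable_phi(0.5), t=2.0, n_terms=1000, rng=rng)
print(jumps[:3], trimmed_sum(jumps, 0), trimmed_sum(jumps, 3))
```

Because \(\varphi \) is nonincreasing and the \(\Gamma _{r}\) are increasing, the list comes out already sorted from largest to smallest, so trimming amounts to dropping a prefix.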

In the same way, we define for each t > 0, \(\left ( m_{X}^{\left ( r\right ) }(t)\right ) _{r\geq 1}\) and \(\left ( m_{W}^{\left ( r\right ) }(t)\right ) _{r\geq 1}\), and we see that the analogs of the distributional identity (4) hold with \(m_{V}^{\left ( r\right ) }\) and \(\varphi _{V}\) replaced by \(m_{X}^{\left ( r\right ) }\) and \(\varphi _{X}\), respectively, \(m_{W}^{\left ( r\right ) }\) and \(\varphi _{W}\). Recalling (2), observe that for all t > 0,

From (6) and the version of (4) with \(m_{V}^{\left ( r\right ) }\) and \(\varphi _{V}\) replaced by \(m_{W}^{\left ( r\right ) }\) and \(\varphi _{W}\), we have for each t > 0

Let V, X and \(\left ( \Gamma _{r}\right ) _{r\geq 1}\) be independent. In particular, V is independent of

Next consider for each t > 0

Note that conditioned on \(X\left ( t\right ) =y\)

Therefore, using (4), we get for each t > 0

and thus by (5),

Here are two methods of trimming \(W(t)=V\left ( X(t)\right ) \).

Method I

For each t > 0, trim \(W(t)=V\left ( X(t)\right ) \) based on the ordered jumps of V on the interval \(\left ( 0,X\left ( t\right ) \right ] .\) In this case, for each t > 0 and k ≥ 1, define the kth trimmed version of \(V(X\left ( t\right ) )\)

which we will call the subordinated trimmed subordinator process. We note that

Method II

For each t > 0, trim W(t) based on the ordered jumps of W on the interval \(\left ( 0,t\right ] .\) In this case, for each t > 0 and k ≥ 1, define the kth trimmed version of W(t)

Remark 3

Notice that in method I trimming, for each t > 0, we treat \(V(X\left ( t\right ) )\) as the subordinator V randomly evaluated at \(X\left ( t\right ) \), whereas in method II trimming we consider \(W=V(X)\) as the subordinator that results when the subordinator V is randomly time changed by the subordinator X.

Remark 4

Though for each t > 0, \(V(X\left ( t\right ) )=W(t) \), typically we cannot conclude that for each t > 0 and k ≥ 1

This is because it is not necessarily true that

See the example in Appendix 1.
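
The difference between the two ordered jump sequences can also be seen in a small simulation. The Python sketch below anticipates the stable setting of Appendix 1 and rests on assumptions that are not spelled out at this point in the text: V and X are independent stable subordinators with indices `a1` and `a2`, normalized so that \(E\exp (-\theta V_{\alpha }(1))=\exp (-\theta ^{\alpha })\); the method I jumps are taken to be \(c(\alpha _{1})(\Gamma _{r}/X(t))^{-1/\alpha _{1}}\) and the method II jumps \(c(\alpha _{1}\alpha _{2})(\Gamma _{r}/t)^{-1/(\alpha _{1}\alpha _{2})}\), with \(c(\alpha )=\Gamma ^{-1/\alpha }(1-\alpha )\). Stable variables are generated by Kanter's representation, and all function names are hypothetical.

```python
import math
import random

def positive_stable(alpha, rng):
    """Kanter's representation of S with E exp(-theta*S) = exp(-theta**alpha)."""
    u = rng.uniform(0.0, math.pi)
    e = rng.expovariate(1.0)
    a = math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
    b = (math.sin((1.0 - alpha) * u) / e) ** ((1.0 - alpha) / alpha)
    return a * b

def c(alpha):
    """Assumed scale constant: Gamma(1 - alpha)**(-1/alpha)."""
    return math.gamma(1.0 - alpha) ** (-1.0 / alpha)

def two_largest_method_I(a1, a2, t, rng):
    """Two largest jumps of V_{a1} on (0, X(t)], with X(t) = V_{a2}(t)."""
    x = t ** (1.0 / a2) * positive_stable(a2, rng)
    g1 = rng.expovariate(1.0)
    g2 = g1 + rng.expovariate(1.0)
    return [c(a1) * (g / x) ** (-1.0 / a1) for g in (g1, g2)]

def two_largest_method_II(a1, a2, t, rng):
    """Two largest jumps of the (a1*a2)-stable subordinator W on (0, t]."""
    a = a1 * a2
    g1 = rng.expovariate(1.0)
    g2 = g1 + rng.expovariate(1.0)
    return [c(a) * (g / t) ** (-1.0 / a) for g in (g1, g2)]

def freq_second_at_most_half(draw, n, rng):
    """Empirical frequency of {second largest jump <= half of the largest}."""
    hits = 0
    for _ in range(n):
        m1, m2 = draw(rng)
        hits += (m2 <= 0.5 * m1)
    return hits / n

rng = random.Random(3)
a1, a2, t = 0.8, 0.5, 1.0
f1 = freq_second_at_most_half(lambda r: two_largest_method_I(a1, a2, t, r), 10000, rng)
f2 = freq_second_at_most_half(lambda r: two_largest_method_II(a1, a2, t, r), 10000, rng)
print(f1, 2 ** (-a1))          # method I frequency next to 2**(-a1)
print(f2, 2 ** (-a1 * a2))     # method II frequency next to 2**(-a1*a2)
```

Under these assumptions the ratio of the second largest to the largest jump is \((\Gamma _{1}/\Gamma _{2})^{1/\alpha _{1}}\) for method I and \((\Gamma _{1}/\Gamma _{2})^{1/(\alpha _{1}\alpha _{2})}\) for method II. Since \(\Gamma _{1}/\Gamma _{2}\) is uniform on (0, 1), the two frequencies estimate \(2^{-\alpha _{1}}\) and \(2^{-\alpha _{1}\alpha _{2}}\), which differ whenever \(\alpha _{2}<1\).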

3 Self-Standardized CLTs for W

3.1 Self-Standardized CLTs for Method I Trimming

Set \(V^{\left ( 0\right ) }(t):=V(t)\), and for any integer k ≥ 1, consider the trimmed subordinator

which on account of (4) says for any integer k ≥ 0 and t > 0

Let T be a strictly positive random variable independent of

Clearly, by (4), (7), and (8), we have for any integer k ≥ 0

Set for any y > 0

We see by Remark 1 that (1) implies that

Throughout this note, Z denotes a standard normal random variable. We shall need the following formal extension of Theorem 1 of Mason [7]. Its proof is nearly identical to the proof of the Mason [7] version: one simply replaces the sequence of positive constants \(\left \{ t_{n}\right \} _{n\geq 1}\) in the proof of Theorem 1 of Mason [7] by \(\left \{ T_{n}\right \} _{n\geq 1}\). The proof of Theorem 1 of Mason [7] is based on a special case of Theorem 1.2 of Zaitsev [12], which we state in the digression below. Here is our self-standardized CLT for method I trimmed subordinated subordinators.

Theorem 1

Assume that \(\overline {\Lambda }_{V}(0+)=\infty \). For any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) and sequence of strictly positive random variables \(\left \{ T_{n}\right \} _{n\geq 1}\) independent of \(\left ( \Gamma _{k}\right ) _{k\geq 1}\) satisfying

we have uniformly in x, as \(n\rightarrow \infty \),

which implies as \(n\rightarrow \infty \)

The remainder of this subsection will be devoted to examining a couple of special cases of the following example of Theorem 1.

Example

For each 0 < α < 1, let \(V_{\alpha }=\left ( V_{\alpha }\left ( t\right ) ,t\geq 0\right ) \) be an α-stable process with Laplace transform defined for θ > 0 by

where

(See Example 24.12 of Sato [11].) Note that for \(V_{\alpha }\),

We record that for each t > 0

For any t > 0, denote by \(m_{\alpha }^{\left ( 1\right ) }\left ( t\right ) \geq m_{\alpha }^{\left ( 2\right ) }\left ( t\right ) \geq \dots \) the ordered jump sequence of \(V_{\alpha }\) on the interval \(\left [ 0,t\right ] \). Consider the kth trimmed version of \(V_{\alpha }\left ( t\right ) \) defined for each integer k ≥ 1

which for each t > 0

In this example, for ease of notation, write for each 0 < α < 1 and y > 0, \(\mu _{V_{\alpha }}\left ( y\right ) =\mu _{\alpha }\left ( y\right ) \) and \(\sigma _{V_{\alpha }}^{2}\left ( y\right ) =\sigma _{\alpha }^{2}\left ( y\right ) \). With this notation, we get that

and

From (13), we have that for any k ≥ 1 and T > 0

Notice that

Clearly by (15) for any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) converging to infinity and sequence of strictly positive random variables \(\left \{ T_{n}\right \} _{n\geq 1}\) independent of \(\left ( \Gamma _{k}\right ) _{k\geq 1}\),

Hence, by rewriting (9) in the above notation, we have by Theorem 1 that as \(n\rightarrow \infty \)
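
A Monte Carlo sanity check of this self-standardized limit can be sketched in Python. The sketch rests on assumptions not taken verbatim from the text: \(\varphi (u)=c(\alpha )u^{-1/\alpha }\) with \(c(\alpha )=\Gamma ^{-1/\alpha }(1-\alpha )\), the centering and scaling functions are \(\mu (y)=\int _{y}^{\infty }\varphi (u)du\) and \(\sigma ^{2}(y)=\int _{y}^{\infty }\varphi ^{2}(u)du\) as in Mason [7], T is taken to be a constant, and the series is cut at `n_terms` with the discarded tail replaced by its conditional mean. The function name is hypothetical.

```python
import math
import random

def standardized_trimmed_stable(alpha, k, T, n_terms, rng):
    """One draw of (V^(k)(T) - T*mu(G_k/T)) / (sqrt(T)*sigma(G_k/T)).

    Assumes phi(u) = c*u**(-1/alpha) with c = Gamma(1-alpha)**(-1/alpha), and
    mu, sigma**2 equal to the integrals of phi, phi**2 over (y, infinity).
    Requires 1 <= k < n_terms.
    """
    c = math.gamma(1.0 - alpha) ** (-1.0 / alpha)
    mu = lambda y: c * alpha / (1.0 - alpha) * y ** (1.0 - 1.0 / alpha)
    sigma = lambda y: c * math.sqrt(alpha / (2.0 - alpha)) * y ** (0.5 - 1.0 / alpha)

    g, gk, partial = 0.0, None, 0.0
    for i in range(1, n_terms + 1):
        g += rng.expovariate(1.0)                      # g is now Gamma_i
        if i == k:
            gk = g                                     # remember Gamma_k
        elif i > k:
            partial += c * (g / T) ** (-1.0 / alpha)   # phi(Gamma_i / T)
    # Terms beyond n_terms are replaced by their conditional mean T*mu(Gamma_n/T).
    centered = partial + T * mu(g / T) - T * mu(gk / T)
    return centered / (math.sqrt(T) * sigma(gk / T))

rng = random.Random(1)
draws = [standardized_trimmed_stable(0.5, k=20, T=3.0, n_terms=2000, rng=rng)
         for _ in range(1000)]
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / (len(draws) - 1)
print(round(mean, 3), round(var, 3))
```

Under these assumptions the sample mean and variance should be close to 0 and 1. Changing `T` should leave the distribution of the draws unchanged, which reflects the fact that the self-standardized quantity in the stable case does not depend on the time parameter.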

Digression

To make the presentation of our Example more self-contained, we shall show in this digression how a special case of Theorem 1.2 of Zaitsev [12] can be used to give a direct proof of (16).

It is pointed out in Mason [7] that Theorem 1.2 of Zaitsev [12] implies the following normal approximation. Let Y be an infinitely divisible mean 0 and variance 1 random variable with Lévy measure Λ, and let Z be a standard normal random variable. Assume that the support of Λ is contained in a closed interval \(\left [ -\tau ,\tau \right ] \) with τ > 0; then for universal positive constants \(C_{1}\) and \(C_{2}\), for any λ > 0 and all \(x\in \mathbb {R}\)

We shall show how to derive (16) from (17). Note that

where \(\left ( \Gamma _{i}^{\prime }\right ) _{i\geq 1}\overset {\mathrm {D}} {=}\left ( \Gamma _{i}\right ) _{i\geq 1}\) and is independent of \(\left ( \Gamma _{i}\right ) _{i\geq 1}\). Let \(Y_{\alpha }=\left ( Y_{\alpha }\left ( y\right ) ,y\geq 0\right ) \) be the subordinator with Laplace transform defined for each y > 0 and θ ≥ 0, by

Observe that the Lévy measure \(\Lambda _{\alpha }\) of \(Y_{\alpha }\) has Lévy tail function on \(\left ( 0,\infty \right ) \)

with φ function

Thus from (5), for each y > 0,

Also, we find by differentiating the Laplace transform of \(Y_{\alpha }\left ( y\right ) \) that for each y > 0

and hence,

is a mean 0 and variance 1 infinitely divisible random variable whose Lévy measure has support contained in the closed interval \(\left [ -\tau \left ( y\right ) ,\tau \left ( y\right ) \right ] \), where

Thus by (17), for universal positive constants \(C_{1}\) and \(C_{2}\), for any λ > 0 and all \(x\in \mathbb {R}\),

Clearly, since \(\left ( \Gamma _{i}^{\prime }\right ) _{i\geq 1}\overset {\mathrm {D}}{=}\left ( \Gamma _{i}\right ) _{i\geq 1}\) and \(\left ( \Gamma _{i}^{\prime }\right ) _{i\geq 1}\) is independent of \(\left ( \Gamma _{k_{n}}\right ) _{n\geq 1}\), we conclude by (22) and (21) that

Now by the arbitrary choice of λ > 0, we get from (23) that uniformly in x, as \(k_{n}\rightarrow \infty \),

This implies as \(n\rightarrow \infty \)

Since the identity (14) holds for any k ≥ 1 and T > 0, (16) follows from (18) and (24). Of course, there are other ways to establish (24). For instance, (24) can be shown to be a consequence of Anscombe’s Theorem for Lévy processes. For details, see Appendix 2.

Remark 5

For any 0 < α < 1 and k ≥ 1, the random variable \(Y_{\alpha }\left ( \Gamma _{k}\right ) \) has Laplace transform

It turns out that for any t > 0

where \(V_{\alpha }^{\left ( k\right ) }\left ( t\right ) \) and \(m_{\alpha }^{\left ( k\right ) }\left ( t\right ) \) are as in (12). See Theorem 1.1 (i) of Kevei and Mason [6]. Also refer to page 1979 of Ipsen et al. [4].

Next we give two special cases of our example, which we shall return to in the next subsection when we discuss self-standardized CLTs for method II trimming.

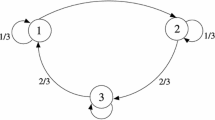

Special Case 1: Subordination of Two Independent Stable Subordinators

For \(0<\alpha _{1},\alpha _{2}<1\), let \(V_{\alpha _{1}}\), respectively \(V_{\alpha _{2}}\), be an \(\alpha _{1}\)-stable process, respectively an \(\alpha _{2}\)-stable process, with a Laplace transform of the form (10). Assume that \(V_{\alpha _{1}}\) and \(V_{\alpha _{2}}\) are independent. Set for t ≥ 0

and

One finds that for each t ≥ 0

Moreover, W is a stationary independent increment process, and for each t ≥ 0 and θ ≥ 0,

This says that W is the \(\alpha _{1}\alpha _{2}\)-stable subordinator \(V_{\alpha _{1}\alpha _{2}}\) with Laplace transform (25). (See Example 30.5 on page 202 of Sato [11].) Thus for each t ≥ 0 and θ ≥ 0,

Therefore, with \(c\left ( \alpha _{1}\alpha _{2}\right ) =\frac {1} {\Gamma ^{1/\left ( \alpha _{1}\alpha _{2}\right ) }\left ( 1-\alpha _{1} \alpha _{2}\right ) }\), we get

which by (11), (25), and (26) for each fixed t > 0 is

Here we get that for any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) converging to infinity and sequence of positive constants \(\left \{ s_{n}\right \} _{n\geq 1}\), by setting \(T_{n} =V_{\alpha _{2}}\left ( s_{n}\right ) ,\) for n ≥ 1, we have by (16) that as \(n\rightarrow \infty \)
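
The composition property behind Special Case 1 can be checked by simulation. The Python sketch below assumes the normalization \(E\exp (-\theta V_{\alpha }(t))=\exp (-t\theta ^{\alpha })\) for the Laplace transform (10), generates positive stable variables by Kanter's representation, and compares the empirical Laplace transform of \(V_{\alpha _{1}}(V_{\alpha _{2}}(t))\) with \(\exp (-t\theta ^{\alpha _{1}\alpha _{2}})\). The function names and parameter values are hypothetical.

```python
import math
import random

def positive_stable(alpha, rng):
    """Kanter's representation of S with E exp(-theta*S) = exp(-theta**alpha)."""
    u = rng.uniform(0.0, math.pi)
    e = rng.expovariate(1.0)
    a = math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
    b = (math.sin((1.0 - alpha) * u) / e) ** ((1.0 - alpha) / alpha)
    return a * b

def stable_at(alpha, t, rng):
    """V_alpha(t) via self-similarity: t**(1/alpha) * V_alpha(1) in law."""
    return t ** (1.0 / alpha) * positive_stable(alpha, rng)

def subordinated_stable(a1, a2, t, rng):
    """One draw of W(t) = V_{a1}(V_{a2}(t)) with independent components."""
    return stable_at(a1, stable_at(a2, t, rng), rng)

def empirical_laplace(sample, theta):
    """Sample average of exp(-theta * w)."""
    return sum(math.exp(-theta * w) for w in sample) / len(sample)

rng = random.Random(2)
a1, a2, t, theta = 0.7, 0.6, 1.5, 0.8
sample = [subordinated_stable(a1, a2, t, rng) for _ in range(20000)]
est = empirical_laplace(sample, theta)
exact = math.exp(-t * theta ** (a1 * a2))
print(est, exact)
```

The two printed numbers should agree up to Monte Carlo error, consistent with W being an \(\alpha _{1}\alpha _{2}\)-stable subordinator under the assumed normalization.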

Special Case 2: Mittag-Leffler Process

For each 0 < α < 1, let \(V_{\alpha }\) be the α-stable process with Laplace transform (10). Now independent of \(V_{\alpha }\), let \(X=\left ( X\left ( s\right ) ,s\geq 0\right ) \) be the standard Gamma process, i.e., X is a zero-drift subordinator with density for each s > 0

mean and variance

and Laplace transform for θ ≥ 0

which after a little computation is

Notice that X has Lévy density

(See Applebaum [1] pages 54–55.)
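
For reference, the textbook facts about the standard Gamma process alluded to here can be sketched as follows. They are stated in the present notation as a reading aid and are not a transcription of the original displays; the symbols \(f_{X\left ( s\right ) }\) and \(\rho \) for the density and the Lévy density are labels chosen here.

$$\displaystyle \begin{aligned} f_{X\left( s\right) }\left( x\right) &=\frac{x^{s-1}e^{-x}}{\Gamma \left( s\right) },\quad x>0,\\ EX\left( s\right) &=\mathrm{Var}\,X\left( s\right) =s,\\ E\exp \left( -\theta X\left( s\right) \right) &=\left( 1+\theta \right) ^{-s}=\exp \left( -s\int _{0}^{\infty }\left( 1-e^{-\theta x}\right) x^{-1}e^{-x}dx\right) ,\\ \rho \left( x\right) &=x^{-1}e^{-x},\quad x>0. \end{aligned}$$

The integral in the third line is a Frullani integral equal to \(\log \left ( 1+\theta \right ) \).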

Consider the subordinated process

Applying Theorem 30.1 and Theorem 30.4 of Sato [11], we see that W is a zero-drift subordinator with Laplace transform

It has Lévy measure \(\Lambda _{W}\) defined for Borel subsets B of \(\left ( 0,\infty \right ) \), by

In particular, it has Lévy tail function

For later use, we note that

Such a process W is called the Mittag-Leffler process. See, e.g., Pillai [8].
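
A simulation sketch of the Mittag-Leffler process at a fixed time may help fix ideas. The Python code below assumes the normalization \(E\exp (-\theta V_{\alpha }(t))=\exp (-t\theta ^{\alpha })\), under which conditioning on \(X\left ( s\right ) \) gives \(E\exp (-\theta W(s))=(1+\theta ^{\alpha })^{-s}\). It draws \(X\left ( s\right ) \) from a Gamma law with shape s and scale 1 and uses Kanter's representation for the stable variable; the function names and parameter values are hypothetical.

```python
import math
import random

def positive_stable(alpha, rng):
    """Kanter's representation of S with E exp(-theta*S) = exp(-theta**alpha)."""
    u = rng.uniform(0.0, math.pi)
    e = rng.expovariate(1.0)
    a = math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
    b = (math.sin((1.0 - alpha) * u) / e) ** ((1.0 - alpha) / alpha)
    return a * b

def mittag_leffler_draw(alpha, s, rng):
    """One draw of W(s) = V_alpha(X(s)) with X a standard Gamma process."""
    x = rng.gammavariate(s, 1.0)                              # X(s): shape s, scale 1
    return x ** (1.0 / alpha) * positive_stable(alpha, rng)   # V_alpha(x) in law

rng = random.Random(4)
alpha, s, theta = 0.6, 2.0, 1.3
sample = [mittag_leffler_draw(alpha, s, rng) for _ in range(20000)]
est = sum(math.exp(-theta * w) for w in sample) / len(sample)
exact = (1.0 + theta ** alpha) ** (-s)
print(est, exact)
```

Agreement of the two printed values up to Monte Carlo error is what one would expect if the assumed Laplace transform is the right one.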

By Theorem 4.3 of Pillai [8] for each s > 0, the exact distribution function \(F_{\alpha ,s}(x)\) of \(W\left ( s\right ) \) is for x ≥ 0

which says that for each s > 0 and x ≥ 0

In this special case, for any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) converging to infinity and sequence of positive constants \(\left \{ s_{n}\right \} _{n\geq 1}\), by setting \(T_{n}=X\left ( s_{n}\right ) ,\) for n ≥ 1, we get by (16) that as \(n\rightarrow \infty \)

3.2 Self-Standardized CLTs for Method II Trimming

Let W be a subordinator of the form (2). Set for any y > 0

We see by Remarks 1 and 2 that (1) implies that

For the reader's convenience, we state here a version of Theorem 1 of Mason [7], phrased as a self-standardized CLT for the method II trimmed subordinated subordinator W.

Theorem 2

Assume that \(\overline {\Lambda }_{W}(0+)=\infty \). For any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) and sequence of positive constants \(\left \{ t_{n}\right \} _{n\geq 1}\) satisfying

we have uniformly in x, as \(n\rightarrow \infty \),

which implies as \(n\rightarrow \infty \)

Remark 6

Theorem 1 of Mason [7] contains the added assumption that \(k_{n}\rightarrow \infty \), as \(n\rightarrow \infty \). An examination of its proof shows that this assumption is unnecessary. Also we note in passing that Theorem 1 implies Theorem 2.

For the convenience of the reader, we state the following results. Corollary 1 is from Mason [7]. The proof of Corollary 2 follows that of Corollary 1 after some obvious changes of notation.

Corollary 1

Assume that \(W\left ( t\right ) \), t ≥ 0, is a subordinator with drift 0, whose Lévy tail function \(\overline {\Lambda }_{W}\) is regularly varying at zero with index \(-\alpha \), where 0 < α < 1. For any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) converging to infinity and sequence of positive constants \(\left \{ t_{n}\right \} _{n\geq 1}\) satisfying \(k_{n}/t_{n}\rightarrow \infty \), we have, as \(n\rightarrow \infty \),

Corollary 2

Assume that \(W\left ( t\right ) \), t ≥ 0, is a subordinator with drift 0, whose Lévy tail function \(\overline {\Lambda }_{W}\) is regularly varying at infinity with index \(-\alpha \), where 0 < α < 1. For any sequence of positive integers \(\left \{ k_{n}\right \} _{n\geq 1}\) converging to infinity and sequence of positive constants \(\left \{ t_{n}\right \} _{n\geq 1}\) satisfying \(k_{n}/t_{n}\rightarrow 0\), as \(n\rightarrow \infty \), we have (27).

The subordinated subordinator introduced in Special Case 1 above satisfies the conditions of Corollary 1, and the subordinated subordinator in Special Case 2 above fulfills the conditions of Corollary 2. Consider the two cases.

- To see this, notice that in Special Case 1, by (25) necessarily W has Lévy tail function on \(\left ( 0,\infty \right ) \)

  $$\displaystyle \begin{aligned} \overline{\Lambda}_{W}(y)=\Gamma\left( 1-\alpha_{1}\alpha_{2}\right) y^{-\alpha_{1}\alpha_{2}}{\mathbf{1}}_{\left\{ y>0\right\} }, \end{aligned}$$

  for \(0<\alpha _{1},\alpha _{2}<1\), which is regularly varying at zero with index \(-\alpha \), where \(0<\alpha =\alpha _{1}\alpha _{2}<1\). In this case, from Corollary 1, we get (27) as long as \(k_{n}\rightarrow \infty \) and \(k_{n}/t_{n}\rightarrow \infty \), as \(n\rightarrow \infty .\)

- In Special Case 2, observe that \(W=V_{\alpha }\left ( X\right ) ,\) with 0 < α < 1, where \(V_{\alpha }=\left ( V_{\alpha }\left ( t\right ) ,t\geq 0\right ) \) is an α-stable process with Laplace transform (10), \(X=\left ( X\left ( s\right ) ,s\geq 0\right ) \) is a standard Gamma process, and \(V_{\alpha }\) and X are independent. The process \(r^{-1/\alpha }W\left ( r\right ) \) has Laplace transform \(\left ( 1+\theta ^{\alpha }/r\right ) ^{-r}\), for θ ≥ 0, which converges to \(\exp \left ( -\theta ^{\alpha }\right ) \) as \(r\rightarrow \infty \). This implies that for all t > 0

  $$\displaystyle \begin{aligned} r^{-1/\alpha}W\left( rt\right) \overset{\mathrm{D}}{\rightarrow}V_{\alpha }\left( t\right) ,\text{ as }r\rightarrow\infty\text{.} \end{aligned}$$

  By part (ii) of Theorem 15.14 of Kallenberg [5] and (10), for all x > 0

  $$\displaystyle \begin{aligned} r\overline{\Lambda}_{W}\left( r^{1/\alpha}x\right) \rightarrow\Gamma\left( 1-\alpha\right) x^{-\alpha},\text{ as }r\rightarrow \infty\text{.} \end{aligned}$$

  This implies that W has a Lévy tail function \(\overline {\Lambda }_{W}(y)\) on \(\left ( 0,\infty \right ) \), which is regularly varying at infinity with index \(-\alpha \), 0 < α < 1. In this case, by Corollary 2, we can conclude (27) as long as \(k_{n}\rightarrow \infty \) and \(k_{n}/t_{n}\rightarrow 0\), as \(n\rightarrow \infty .\)

4 Appendix 1

Recall the notation of Special Case 1. Let \(V_{\alpha _{1}}\), \(V_{\alpha _{2}}\), and \(\left ( \Gamma _{k}\right ) _{k\geq 1}\) be independent and \(W=V_{\alpha _{1}}\left ( V_{\alpha _{2}}\right ) \). For any t > 0, let \(m_{V_{\alpha _{1}}}^{\left ( 1\right ) }(V_{\alpha _{2}}\left ( t\right ) )\geq m_{V_{\alpha _{1}}}^{\left ( 2\right ) }(V_{\alpha _{2}}\left ( t\right ) )\geq \cdots \) denote the ordered jumps of \(V_{\alpha _{1}}\) on the interval \(\left [ 0,V_{\alpha _{2}}\left ( t\right ) \right ] \). They satisfy

Let \(m_{W}^{\left ( 1\right ) }(t)\geq m_{W}^{\left ( 2\right ) }(t)\geq \cdots \) denote the ordered jumps of W on the interval \(\left [ 0,t\right ] \). In this case, for each t > 0

Observe that for all t > 0

Note that though (28) holds, \(\left ( m_{\alpha _{1}}^{\left ( k\right ) }(V_{\alpha _{2}}\left ( t\right ) )\right ) _{k\geq 1}\) is not equal in distribution to \(\left ( m_{W}^{\left ( k\right ) }(t)\right ) _{k\geq 1}\). To see this, notice that

whereas

Obviously, the sequences (29) and (30) are not equal in distribution and thus

5 Appendix 2

A straightforward modification of the proof of Theorem 1 of Rényi [10] gives the following Anscombe’s theorem for Lévy processes.

Theorem A

Let \(X=\left ( X\left ( t\right ) ,t\geq 0\right ) \) be a mean zero Lévy process with \(EX^{2}\left ( t\right ) =t\) for t ≥ 0, and let \(\eta =\left ( \eta \left ( t\right ) ,t>0\right ) \) be a random process such that \(\eta \left ( t\right ) >0\) for all t > 0 and, for some c > 0, \(\eta \left ( t\right ) /t\overset {\mathrm {P}}{\rightarrow }c\), as \(t\rightarrow \infty \). Then

A version of Anscombe’s theorem is given in Gut [2]. See his Theorem 3.1. In our notation, his Theorem 3.1 requires that \(\left \{ \eta \left ( t\right ) ,t\geq 0\right \} \) be a family of stopping times.

Example A

Let \(Y_{\alpha }=\left ( Y_{\alpha }\left ( y\right ) ,y\geq 0\right ) \) be the Lévy process with Laplace transform (19) and mean and variance functions (20). We see that

defines a mean zero Lévy process with \(EX^{2}\left ( y\right ) =y \) for y ≥ 0. Now let \(\eta =\left ( \eta \left ( t\right ) , t\geq 0\right ) \) be a standard Gamma process independent of X. Notice that \(\eta \left ( t\right ) /t\overset {\mathrm {P}}{\rightarrow }1\), as \(t\rightarrow \infty \). Applying Theorem A, we get as \(t\rightarrow \infty \),

In particular, since for each integer k ≥ 1, \(\eta \left ( k\right ) \overset {\mathrm {D}}{=}\Gamma _{k}\), this implies that (24) holds for any sequence of positive integers \(\left ( k_{n}\right ) _{n\geq 1}\) converging to infinity as \(n\rightarrow \infty \), i.e.,

References

1. Applebaum, D.: Lévy Processes and Stochastic Calculus, 2nd edn. Cambridge Studies in Advanced Mathematics, vol. 116. Cambridge University Press, Cambridge (2009)

2. Gut, A.: Stopped Lévy processes with applications to first passage times. Statist. Probab. Lett. 28, 345–352 (1996)

3. Ipsen, Y., Maller, R., Resnick, S.: Trimmed Lévy processes and their extremal components. Stochastic Process. Appl. 130, 2228–2249 (2020)

4. Ipsen, Y., Maller, R., Shemehsavar, S.: Limiting distributions of generalised Poisson-Dirichlet distributions based on negative binomial processes. J. Theoret. Probab. 33, 1974–2000 (2020)

5. Kallenberg, O.: Foundations of Modern Probability, 2nd edn. Probability and its Applications (New York). Springer-Verlag, New York (2002)

6. Kevei, P., Mason, D.M.: The limit distribution of ratios of jumps and sums of jumps of subordinators. ALEA Lat. Am. J. Probab. Math. Stat. 11, 631–642 (2014)

7. Mason, D.M.: Self-standardized central limit theorems for trimmed Lévy processes. J. Theoret. Probab. 34, 2117–2144 (2021)

8. Pillai, R.N.: On Mittag-Leffler functions and related distributions. Ann. Inst. Statist. Math. 42, 157–161 (1990)

9. Rosiński, J.: Series representations of Lévy processes from the perspective of point processes. In: Lévy Processes, pp. 401–415. Birkhäuser Boston, Boston, MA (2001)

10. Rényi, A.: On the asymptotic distribution of the sum of a random number of independent random variables. Acta Math. Acad. Sci. Hungar. 8, 193–199 (1957)

11. Sato, K.: Lévy Processes and Infinitely Divisible Distributions. Cambridge Univ. Press, Cambridge (2005)

12. Zaitsev, A.Yu.: On the Gaussian approximation of convolutions under multidimensional analogues of S. N. Bernstein’s inequality conditions. Probab. Theory Related Fields 74, 535–566 (1987)

Acknowledgements

The author thanks Ross Maller for some useful email discussions that helped him to clarify the two methods of trimming discussed in this chapter. He is also grateful to Péter Kevei and the referee for their careful readings of the manuscript and valuable comments.


© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG


Mason, D.M. (2023). A Note on Central Limit Theorems for Trimmed Subordinated Subordinators. In: Adamczak, R., Gozlan, N., Lounici, K., Madiman, M. (eds) High Dimensional Probability IX. Progress in Probability, vol 80. Birkhäuser, Cham. https://doi.org/10.1007/978-3-031-26979-0_9


Publisher Name: Birkhäuser, Cham

Print ISBN: 978-3-031-26978-3

Online ISBN: 978-3-031-26979-0
