Abstract

We discuss optimal bounds on the Rényi entropies in terms of the Fisher information. In Information Theory, such relations are also known as entropic isoperimetric inequalities.


1 Introduction

The entropic isoperimetric inequality asserts that

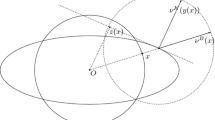

for any random vector X in \({\mathbb R}^n\) with a smooth density. Here

$$N(X) = \exp\Big\{-\frac{2}{n}\int p(x)\log p(x)\,dx\Big\}, \qquad I(X) = \int \frac{|\nabla p(x)|^2}{p(x)}\,dx$$

denote the Shannon entropy power and the Fisher information of X with density p, respectively (with integration with respect to Lebesgue measure dx on \({\mathbb R}^n\), which may be restricted to the supporting set supp(p) = {x : p(x) > 0}).

This inequality was discovered by Stam [15], who treated it in dimension one. It is known to hold in any dimension, and the standard normal distribution on \({\mathbb R}^n\) plays an extremal role in it. Later on, Costa and Cover [6] pointed out a remarkable analogy between (1.1) and the classical isoperimetric inequality relating the surface area of an arbitrary body A in \({\mathbb R}^n\) to its volume \(\mathrm{vol}_n(A)\). The terminology “isoperimetric inequality for entropies” goes back to Dembo, Costa, and Thomas [8].

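As Rényi entropies have recently become the focus of numerous investigations, it is natural to explore more general relations of the form

$$N_\alpha(X)\, I(X) \geq c_{\alpha,n} \tag{1.2}$$

for the functional

$$N_\alpha(X) = \Big(\int p(x)^\alpha\,dx\Big)^{-\frac{2}{n(\alpha-1)}}, \qquad \alpha \in (0,1)\cup(1,\infty).$$

It is desirable to derive (1.2) with optimal constants \(c_{\alpha,n}\) independent of the density p, where α ∈ [0, ∞] is a parameter called the order of the Rényi entropy power \(N_\alpha(X)\). Another representation

$$N_\alpha(X) = \Big(\int p(x)^{\alpha-1}\,p(x)\,dx\Big)^{-\frac{2}{n(\alpha-1)}}$$

shows that \(N_\alpha\) is non-increasing in α (the power means \((\int p^{\alpha-1}\,p\,dx)^{1/(\alpha-1)}\) with respect to the probability measure p(x)dx are non-decreasing in α). By monotonicity, this allows one to define the Rényi entropy power at the two extreme values as

$$N_0(X) = \mathrm{vol}_n(\mathrm{supp}(p))^{\frac{2}{n}}, \qquad N_\infty(X) = \|p\|_\infty^{-\frac{2}{n}}, \tag{1.4}$$

where \(\|p\|_\infty = \operatorname{ess\,sup}_x p(x)\). By the standard convention, one also puts \(N_1(X) = \lim_{\alpha \downarrow 1} N_\alpha(X)\), which returns the usual Shannon entropy power \(N_1(X) = N(X)\) under mild moment assumptions (such as \(N_\alpha(X) > 0\) for some α > 1).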

Returning to (1.1)–(1.2), the following two natural questions arise.

Question 1

Given n, for which range \({\mathfrak A}_n\) of the values of α does (1.2) hold with some positive constant?

Question 2

What is the value of the optimal constant cα,n and can the extremizers in (1.2) be described?

The entropic isoperimetric inequality (1.1) answers both questions for the order α = 1 with the optimal constant \(c_{1,n} = 2\pi e\, n\). As for the general order, let us first stress that, by the monotonicity of \(N_\alpha\) with respect to α, the function \(\alpha \mapsto c_{\alpha,n}\) is also non-increasing. Hence, the range in Question 1 necessarily takes the form \({\mathfrak A}_n = [0,\alpha _n)\) or \({\mathfrak A}_n = [0,\alpha _n]\) for some critical value \(\alpha_n \in [0,\infty]\). The next assertion specifies these values.

Theorem 1.1

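We have \(\alpha_1 = \alpha_2 = \infty\) and \(\alpha_n = \frac{n}{n-2}\) for n ≥ 3. More precisely,

$${\mathfrak A}_1 = [0,\infty], \qquad {\mathfrak A}_2 = [0,\infty), \qquad {\mathfrak A}_n = \Big[0,\frac{n}{n-2}\Big] \quad (n \geq 3).$$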

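Thus, in the one dimensional case there is no restriction on α (the range is full). In fact, this already follows from the elementary sub-optimal inequality

$$N_\infty(X)\, I(X) \geq 1, \tag{1.5}$$

implying that \(c_{\alpha,1} \geq 1\) for all α. To see this, assume that I(X) is finite, so that X has a (locally) absolutely continuous density p, which is therefore differentiable almost everywhere. Since p is non-negative, any point \(y \in {\mathbb R}\) such that p(y) = 0 is a local minimum, and necessarily p′(y) = 0 (as long as p is differentiable at y). Hence, applying the Cauchy inequality, we have

$$\int_{-\infty}^{\infty} |p'(x)|\,dx = \int_{\{p>0\}} \frac{|p'(x)|}{\sqrt{p(x)}}\,\sqrt{p(x)}\,dx \leq \Big(\int_{\{p>0\}} \frac{p'(x)^2}{p(x)}\,dx\Big)^{\frac{1}{2}} \Big(\int p(x)\,dx\Big)^{\frac{1}{2}} = \sqrt{I(X)}.$$

It follows that p has a total variation not exceeding \(\sqrt {I(X)}\). Since p is integrable and of bounded variation, p(x) → 0 as x → −∞, so that \(p(x) \leq \int_{-\infty}^x |p'(y)|\,dy \leq \sqrt {I(X)}\) for every \(x \in {\mathbb R}\). This amounts to (1.5) according to (1.4) for n = 1.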
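As a quick numerical sanity check of the two steps above (\(\int |p'| \leq \sqrt{I(X)}\) and \(p \leq \sqrt{I(X)}\)), the following Python sketch evaluates the Fisher information, the total variation of p, and sup p for two smooth test densities of our own choosing (standard Gaussian and standard logistic). The helper `simpson` and the truncation of the real line to [−30, 30] are illustrative assumptions, not part of the argument.

```python
import math


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


# Illustrative test densities (our choice) together with their scores p'/p.
def gauss(x):
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


def gauss_score(x):
    return -x


def logistic(x):
    # e^{-x} / (1 + e^{-x})^2 written as 1 / (4 cosh^2(x/2))
    return 1.0 / (4.0 * math.cosh(x / 2) ** 2)


def logistic_score(x):
    return -math.tanh(x / 2)


def check_bounds(p, score, L=30.0):
    """Return (I, int |p'|, sup p) for a density p that peaks at the origin."""
    fisher = simpson(lambda x: score(x) ** 2 * p(x), -L, L)  # int p'^2 / p
    total_variation = simpson(lambda x: abs(score(x)) * p(x), -L, L)  # int |p'|
    return fisher, total_variation, p(0.0)


for name, p, score in [("gauss", gauss, gauss_score), ("logistic", logistic, logistic_score)]:
    I, tv, sup_p = check_bounds(p, score)
    print(f"{name}: I={I:.4f}, int|p'|={tv:.4f}, sqrt(I)={math.sqrt(I):.4f}, sup p={sup_p:.4f}")
```

For both densities the two bounds hold with room to spare. For these unimodal examples the total variation equals 2 sup p, which is consistent with the sharper constant \(c_{\infty,1} = 4\) obtained in Theorem 3.1.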

Turning to Question 2, we will see that the optimal constants \(c_{\alpha,1}\) together with the extremizers in (1.2) may be explicitly described in the one dimensional case for every α using the results due to Nagy [13]. Since the translation of these results into information-theoretic language is somewhat technical, we discuss this case in detail in the next three sections (Sects. 2, 3, and 4). Let us only mention here that

$$4 = c_{\infty,1} \leq c_{\alpha,1} \leq c_{0,1} = 4\pi^2,$$

where the inequalities are sharp for α = ∞ and α = 0, respectively, with extremizers

$$p(x) = \frac{1}{2}\,e^{-|x|} \qquad \text{and} \qquad p(x) = \frac{2}{\pi}\,\cos^2(x)\,1_{[-\frac{\pi}{2},\frac{\pi}{2}]}(x).$$

The situation in higher dimensions is more complicated, and only partial answers to Question 2 will be given here. In any case, to explore the behavior of the constants \(c_{\alpha,n}\), one should distinguish between the dimensions n = 2 and n ≥ 3 (as is also suggested by Theorem 1.1). In the latter case, these constants can be shown to satisfy

where the left inequality is sharp and corresponds to the critical order \(\alpha = \frac {n}{n-2}\). With respect to the growing dimension, these constants are asymptotically 2πen + O(1), which exhibits nearly the same behavior as for the order α = 1. Somewhat surprisingly, however, the extremizers for the critical order exist only for n ≥ 5, and they are described as densities of (generalized) Cauchy distributions on \({\mathbb R}^n\). We discuss these issues in Sect. 7, while Sect. 6 deals with dimension n = 2, where some description of the constants \(c_{\alpha,2}\) will be given for the range \(\alpha \in [\frac {1}{2},\infty )\).

We end this introduction by giving an equivalent formulation of the isoperimetric inequalities (1.2) in terms of functional inequalities of Sobolev type. As was noticed by Carlen [5], in the classical case α = 1, (1.1) is equivalent to the logarithmic Sobolev inequality of Gross [9], cf. also [4]. However, when α ≠ 1, a different class of inequalities is involved. Namely, using the substitution \(p=f^2/\int f^2\) (here and in the sequel integrals are understood with respect to the Lebesgue measure on \({\mathbb R}^n\)), we have

$$N_\alpha(X) = \Big(\int |f|^{2\alpha}\Big)^{-\frac{2}{n(\alpha-1)}} \Big(\int f^2\Big)^{\frac{2\alpha}{n(\alpha-1)}}$$

and

$$I(X) = 4\,\frac{\int |\nabla f|^2}{\int f^2}.$$

Therefore (provided that f is square integrable), (1.2) can be equivalently reformulated as the homogeneous analytic inequality

$$\Big(\int |f|^{2\alpha}\Big)^{\frac{2}{n(\alpha-1)}} \Big(\int f^2\Big)^{1-\frac{2\alpha}{n(\alpha-1)}} \leq \frac{4}{c_{\alpha,n}} \int |\nabla f|^2, \tag{1.6}$$

where we can assume that f is smooth and has gradient ∇f (however, when speaking about extremizers, the function f should be allowed to belong to the Sobolev class \(W_1^2({\mathbb R}^n)\)). Inequalities of this type were introduced by Moser [11, 12] in the following form:

$$\int |f|^{2+\frac{4}{n}} \leq B_n \int |\nabla f|^2 \Big(\int f^2\Big)^{\frac{2}{n}}. \tag{1.7}$$

More precisely, (1.7) corresponds to (1.6) for the specific choice \(\alpha =1+\frac {2}{n}\), with \(B_n = 4/c_{1+\frac{2}{n},n}\). Here, the one dimensional case is covered by Nagy’s paper with the optimal factor \(B_1=\frac {4}{\pi ^2}\). This corresponds to α = 3 and n = 1, and therefore \(c_{3,1} = \pi^2\), which complements the picture depicted above. To the best of our knowledge, the best constants \(B_n\) for n ≥ 2 are not known. However, using the Euclidean log-Sobolev inequality and the optimal Sobolev inequality, Beckner [2] proved that asymptotically \(B_n \sim \frac {2}{\pi e n}\).
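To see the correspondence \(B_1 = 4/\pi^2 \Leftrightarrow c_{3,1} = \pi^2\) concretely: for n = 1 and α = 3, the substitution \(p = f^2/\int f^2\) turns \(N_3(X) I(X) \geq \pi^2\) into \(\int f^6 \leq \frac{4}{\pi^2}\int f'^2 (\int f^2)^2\). The Python sketch below (standard library only) evaluates the ratio \(\int f^6 / (\int f'^2 (\int f^2)^2)\) for \(f = \cosh(x)^{-1/2}\), the square root of the α = 3 extremizer of Theorem 3.1 (ii), and for a Gaussian comparison function; the quadrature helper and the cut-off L = 60 are our own choices.

```python
import math


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def moser_ratio(f, df, L=60.0):
    """int f^6 / (int f'^2 * (int f^2)^2), the n = 1 quantity bounded by B_1."""
    int_f6 = simpson(lambda x: f(x) ** 6, -L, L)
    int_df2 = simpson(lambda x: df(x) ** 2, -L, L)
    int_f2 = simpson(lambda x: f(x) ** 2, -L, L)
    return int_f6 / (int_df2 * int_f2 ** 2)


# f = cosh(x)^(-1/2): square root of the alpha = 3 extremizer, up to a constant factor
def f(x):
    return 1.0 / math.sqrt(math.cosh(x))


def df(x):
    return -0.5 * math.tanh(x) / math.sqrt(math.cosh(x))


# Gaussian comparison function (not an extremizer)
def g(x):
    return math.exp(-x * x / 2)


def dg(x):
    return -x * math.exp(-x * x / 2)


print(moser_ratio(f, df), 4 / math.pi ** 2)
print(moser_ratio(g, dg))
```

The first ratio attains the bound \(4/\pi^2\) (by hand: \(\int f^6 = \pi/2\), \(\int f'^2 = \pi/8\), \(\int f^2 = \pi\)), while the Gaussian stays strictly below it.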

Both Moser’s inequality (1.7) and (1.6) with a certain range of α enter the general framework of the Gagliardo–Nirenberg inequalities

$$\|f\|_r \leq C\, \|\nabla f\|_q^{\theta}\, \|f\|_s^{1-\theta} \tag{1.8}$$

with a constant C not depending on f, where 1 ≤ q, r, s ≤ ∞, 0 ≤ θ ≤ 1, and \(\frac {1}{r} = \theta \, (\frac {1}{q} - \frac {1}{n}) +(1-\theta )\,\frac {1}{s}\). We will use known results on Gagliardo–Nirenberg inequalities to derive information on (1.2).

In the sequel, we denote by \(\|f\|_r = (\int |f|^{r})^{\frac {1}{r}}\) the \(L^r\)-norm of f with respect to the Lebesgue measure on \({\mathbb R}^n\) (and use this functional also in the case 0 < r < 1).

2 Nagy’s Theorem

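In the next three sections we focus on dimension n = 1, in which case the entropic isoperimetric inequality (1.2) takes the form

$$N_\alpha(X)\, I(X) \geq c_{\alpha,1} \tag{2.1}$$

for the Rényi entropy power

$$N_\alpha(X) = \Big(\int_{-\infty}^\infty p(x)^\alpha\,dx\Big)^{-\frac{2}{\alpha-1}}$$

and the Fisher information

$$I(X) = \int_{-\infty}^\infty \frac{p'(x)^2}{p(x)}\,dx.$$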

In dimension one, our basic functional space is the collection of all (locally) absolutely continuous functions on the real line whose derivatives are understood in the Radon–Nikodym sense. We already know that (2.1) holds for all α ∈ [0, ∞].

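According to (1.6), the family (2.1) now takes the form

$$\Big(\int |f|^{2\alpha}\Big)^{\frac{2}{\alpha-1}} \leq \frac{4}{c_{\alpha,1}} \int f'^2 \Big(\int f^2\Big)^{\frac{\alpha+1}{\alpha-1}} \tag{2.2}$$

when α > 1, and

$$\Big(\int f^2\Big)^{\frac{1+\alpha}{1-\alpha}} \leq \frac{4}{c_{\alpha,1}} \int f'^2 \Big(\int |f|^{2\alpha}\Big)^{\frac{2}{1-\alpha}} \tag{2.3}$$

when α ∈ (0, 1).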

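In fact, these two families of inequalities can be seen as sub-families of the following one, studied by Nagy [13],

$$\int |f|^{\beta+\gamma} \leq D \Big(\int |f'|^p\Big)^{\frac{\beta}{pq}} \Big(\int |f|^\gamma\Big)^{1+\frac{\beta(p-1)}{pq}},$$

with

$$\beta > 0, \qquad \gamma > 0, \qquad p \geq 1, \qquad q = 1 + \frac{p-1}{p}\,\gamma, \tag{2.4}$$

and some constants \(D = D_{\gamma,\beta,p}\) depending only on γ, β, and p. For such parameters, introduce the functions \(y_{p,\gamma} = y_{p,\gamma}(t)\) defined for t ≥ 0 by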

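To involve the parameter β, define additionally \(y_{p,\gamma,\beta}\) implicitly as follows. Put \(y_{p,\gamma,\beta}(t) = u\), 0 ≤ u ≤ 1, with

$$\int_u^1 \frac{ds}{s^{\gamma/p}\,(1-s^\beta)^{1/p}} = t, \qquad t \geq 0,$$

if p ≤ γ. If p > γ, then \(y_{p,\gamma,\beta}(t) = u\), 0 ≤ u ≤ 1, is the solution of the above equation for

$$0 \leq t \leq t_0 = \int_0^1 \frac{ds}{s^{\gamma/p}\,(1-s^\beta)^{1/p}},$$

and \(y_{p,\gamma,\beta}(t) = 0\) for all t > t_0. With these notations, Nagy established the following result.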

Theorem 2.1 ([13])

Under the constraint (2.4), for any (locally) absolutely continuous function \(f:{\mathbb R} \rightarrow {\mathbb R}\),

(i) 
$$\|f\|_\infty \leq \Big(\frac{q}{2}\Big)^{\frac{1}{q}} \Big( \int |f'|^p \Big)^{\frac{1}{pq}} \Big( \int |f|^\gamma \Big)^{\frac{p-1}{pq}}. \tag{2.5}$$
Moreover, the extremizers take the form \(f(x) = a\,y_{p,\gamma}(|bx + c|)\) with constants a, b, c (b ≠ 0).

(ii) 
$$\int |f|^{\beta+\gamma} \leq \left(\frac{q}{2}\,H\Big(\frac{q}{\beta} , \frac{p-1}{p}\Big) \right)^{\frac{\beta}{q}} \Big( \int |f'|^p \Big)^{\frac{\beta}{pq}} \Big( \int |f|^\gamma \Big)^{1+\frac{\beta(p-1)}{pq}}, \tag{2.6}$$
where
$$H(u,v)=\frac{\Gamma(1+u+v)}{\Gamma(1+u)\,\Gamma(1+v)} \, \Big( \frac{u}{u+v} \Big)^u \Big( \frac{v}{u+v} \Big)^v, \quad u,v \geq 0.$$
Moreover, the extremizers take the form \(f(x) = a\,y_{p,\gamma,\beta}(|bx + c|)\) with constants a, b, c (b ≠ 0).

Here, Γ denotes the classical Gamma function, and we use the convention that H(u, 0) = H(0, v) = 1 for u, v ≥ 0. It was mentioned by Nagy that H is monotone in each variable. Moreover, since \(H(u,1) = (1 + \frac {1}{u})^{-u}\) lies between \(\frac {1}{e}\) and 1, one has \(1 > H(u,v) > (1 + \frac {1}{u})^{-u} > \frac {1}{e}\) for all u > 0 and 0 < v < 1. This gives a two-sided bound

3 One Dimensional Isoperimetric Inequalities for Entropies

The inequalities (2.2) and (2.3) correspond to (2.6) with parameters

$$p = \gamma = q = 2, \qquad \beta = 2(\alpha-1)$$

and

$$p = 2, \qquad \beta = 2(1-\alpha), \qquad \gamma = 2\alpha, \qquad q = 1+\alpha,$$

respectively. Hence, as a corollary of Theorem 2.1, we get the following statement, which solves Question 2 when n = 1. Note that, by Theorem 2.1, the extremal distributions (their densities p) in (2.1) are uniquely determined up to non-degenerate affine transformations of the real line. So it suffices to indicate just one specific extremizer for each admissible collection of the parameters. Recall the definition of the optimal constants \(c_{\alpha,1}\) from (2.1).

Theorem 3.1

(i) In the case α = ∞, we have
$$c_{\infty,1} = 4.$$
Moreover, the density \(p(x)=\frac {1}{2}\,e^{-|x|}\) \((x \in {\mathbb R})\) of the two-sided exponential distribution represents an extremizer in (2.1).

(ii) In the case 1 < α < ∞, we have
$$c_{\alpha,1} = \frac{2 \pi}{\alpha-1}\,\Big( \frac{2}{\alpha+1}\Big)^{\frac{\alpha-3}{\alpha-1}} \bigg( \frac{\Gamma(\frac{1}{\alpha-1})} {\Gamma( \frac{\alpha+1}{2(\alpha-1)})} \bigg)^2.$$
Moreover, the density \(p(x)=a\cosh (x)^{-\frac {2}{\alpha -1}}\) with the normalization constant \(a =\frac {1}{\sqrt {\pi }} \frac {\Gamma (\frac {\alpha +1}{2(\alpha -1)})}{\Gamma (\frac {1}{\alpha -1})}\) represents an extremizer in (2.1).

(iii) In the case 0 < α < 1, we have
$$c_{\alpha,1} = \frac{2 \pi}{1-\alpha} \, \Big( \frac{2}{1+\alpha}\Big)^{\frac{1+\alpha}{1-\alpha}} \bigg( \frac{\Gamma(\frac{1+\alpha}{2(1-\alpha)})}{\Gamma(\frac{1}{1-\alpha})}\bigg)^2.$$
Moreover, the density \(p(x)=a\cos (x)^{\frac {2}{1-\alpha }} \,1_{[-\frac {\pi }{2},\frac {\pi }{2}]}(x)\) with the normalization constant \(a =\frac {1}{\sqrt {\pi }} \frac {\Gamma (\frac {2 - \alpha }{1 - \alpha })}{\Gamma (\frac {3 - \alpha }{2(1 - \alpha )})}\) represents an extremizer in (2.1).
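The closed-form constants of Theorem 3.1 are easy to evaluate. A minimal Python sketch follows; the helper `c_alpha_1` is our own, and it works with `math.lgamma` to avoid overflow. At α = 0 it simply evaluates the formula of item (iii), which gives its limiting value, and at α = 1 it returns Stam's constant 2πe; both conventions are assumptions of the sketch.

```python
import math


def c_alpha_1(alpha):
    """Optimal constant c_{alpha,1} in N_alpha(X) I(X) >= c_{alpha,1} (Theorem 3.1).

    alpha = 0 evaluates the formula of item (iii) (its limit as alpha -> 0),
    alpha = 1 returns Stam's constant 2*pi*e.
    """
    if alpha == math.inf:
        return 4.0
    if alpha == 1:
        return 2 * math.pi * math.e
    if alpha > 1:  # item (ii)
        u = 1 / (alpha - 1)
        w = (alpha + 1) / (2 * (alpha - 1))
        return (2 * math.pi / (alpha - 1)
                * (2 / (alpha + 1)) ** ((alpha - 3) / (alpha - 1))
                * math.exp(2 * (math.lgamma(u) - math.lgamma(w))))
    if 0 <= alpha < 1:  # item (iii)
        u = (1 + alpha) / (2 * (1 - alpha))
        w = 1 / (1 - alpha)
        return (2 * math.pi / (1 - alpha)
                * (2 / (1 + alpha)) ** ((1 + alpha) / (1 - alpha))
                * math.exp(2 * (math.lgamma(u) - math.lgamma(w))))
    raise ValueError("alpha must lie in [0, inf]")


for a in [0, 0.5, 1, 2, 3, 10, math.inf]:
    print(a, c_alpha_1(a))
```

Evaluating it on a grid reproduces the special values listed in Sect. 4 (for instance 12 at α = 2 and π² at α = 3) and shows the expected monotone decrease in α.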

To prove the theorem, we need a simple technical lemma.

Lemma 3.2

(i) Given a > 0 and t ≥ 0, the (unique) solution y ∈ (0, 1] to the equation \(\int _y^1 \frac {ds}{s \sqrt {1-s^a}} = t\) is given by
$$y= \Big[\cosh\Big( \frac{at}{2} \Big)\Big]^{-\frac{2}{a}}.$$

(ii) Given a, b > 0 and \(c \in {\mathbb R}\), we have
$$\int_{-\infty}^\infty \cosh(|bx+c|)^{-a}\,dx = \frac{\sqrt{\pi}}{b}\, \frac{\Gamma(\frac{a}{2})}{\Gamma(\frac{a+1}{2})}.$$

(iii) Given a ∈ (0, 1) and u ∈ [0, 1], we have
$$\int_{u}^1 \frac{ds}{s^{a} \sqrt{1-s^{2(1-a)}}} = \frac{1}{1-a}\,\arccos(u^{1-a}).$$

Remark 3.3

Since \(\Gamma (\frac {a+1}{2}) = \Gamma (m+\frac {1}{2})= \frac {(2m)!}{4^m m!}\sqrt {\pi }\) for a = 2m with an integer m ≥ 1, for such particular values of a we have

$$\int_{-\infty}^\infty \cosh(|bx+c|)^{-2m}\,dx = \frac{4^m\,(m-1)!\,m!}{b\,(2m)!}.$$

Proof of Lemma 3.2

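Changing the variable \(u=\sqrt {1-s^a}\), we have

$$\int_y^1 \frac{ds}{s\sqrt{1-s^a}} = \frac{2}{a}\int_0^{\sqrt{1-y^a}} \frac{du}{1-u^2} = \frac{2}{a}\,\operatorname{artanh}\big(\sqrt{1-y^a}\big).$$

Inverting this equality, that is, solving \(\sqrt{1-y^a} = \tanh(\frac{at}{2})\), leads to the desired result of item (i).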

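For item (ii) we use the symmetry of the \(\cosh \)-function together with the change of variables u = bx + c and then \(t=\sinh (u)^2\) to get

$$\int_{-\infty}^\infty \cosh(|bx+c|)^{-a}\,dx = \frac{2}{b}\int_0^\infty \cosh(u)^{-a}\,du = \frac{1}{b}\int_0^\infty t^{-\frac{1}{2}}\,(1+t)^{-\frac{a+1}{2}}\,dt.$$

To obtain the result, we perform a final change of variables \(v=\frac {1}{1+t}\). This turns the last integral into

$$\frac{1}{b}\int_0^1 (1-v)^{-\frac{1}{2}}\,v^{\frac{a}{2}-1}\,dv = \frac{1}{b}\,B\Big(\frac{1}{2},\frac{a}{2}\Big) = \frac{\sqrt{\pi}}{b}\,\frac{\Gamma(\frac{a}{2})}{\Gamma(\frac{a+1}{2})},$$

where we used the beta function \(B(x,y) = \int _0^1 (1-v)^{x-1}v^{y-1}\,dv = \frac {\Gamma (x)\Gamma (y)}{\Gamma (x+y)}\), x, y > 0.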

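Finally, in item (iii), the change of variables \(s^{1-a} = \cos\varphi\) leads to

$$\int_u^1 \frac{ds}{s^a\sqrt{1-s^{2(1-a)}}} = \frac{1}{1-a}\int_0^{\arccos(u^{1-a})} d\varphi = \frac{1}{1-a}\,\arccos(u^{1-a}).$$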

□
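Each item of Lemma 3.2 can also be checked numerically. In the Python sketch below (our own test harness, not part of the proof), items (i) and (iii) are verified through their differentiated forms, \(y' = -y\sqrt{1-y^a}\) with y(0) = 1 and \(F'(u) = -u^{-a}(1-u^{2(1-a)})^{-1/2}\) with F(1) = 0, using central differences, while item (ii) is compared with Simpson quadrature on a truncated interval.

```python
import math


def simpson(f, a, b, n=40000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def residual_i(a, t, h=1e-5):
    """Item (i): y(t) = cosh(a t / 2)^(-2/a) should satisfy y' = -y sqrt(1 - y^a)."""
    def y(s):
        return math.cosh(a * s / 2) ** (-2 / a)
    dy = (y(t + h) - y(t - h)) / (2 * h)
    return dy + y(t) * math.sqrt(1 - y(t) ** a)


def gap_ii(a, b, c, L=60.0):
    """Item (ii): quadrature minus sqrt(pi)/b * Gamma(a/2) / Gamma((a+1)/2)."""
    lo, hi = (-c - L) / b, (-c + L) / b  # the range where |b x + c| <= L
    numeric = simpson(lambda x: math.cosh(abs(b * x + c)) ** (-a), lo, hi)
    closed = math.sqrt(math.pi) / b * math.exp(math.lgamma(a / 2) - math.lgamma((a + 1) / 2))
    return numeric - closed


def residual_iii(a, u, h=1e-6):
    """Item (iii): F(u) = arccos(u^(1-a)) / (1-a) should have F'(u) = -1/(u^a sqrt(1 - u^(2(1-a))))."""
    def F(v):
        return math.acos(v ** (1 - a)) / (1 - a)
    dF = (F(u + h) - F(u - h)) / (2 * h)
    return dF + 1 / (u ** a * math.sqrt(1 - u ** (2 * (1 - a))))


for a in (0.5, 2.0):
    print("(i)", a, residual_i(a, 0.7))
print("(ii)", gap_ii(1.0, 2.5, 0.3))
print("(iii)", residual_iii(0.5, 0.5))
```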

Proof of Theorem 3.1

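When α = ∞ as in case (i), (2.2) with \(\int f^2 =1\) becomes (in the limit as α → ∞)

$$\|f\|_\infty^4 \leq \frac{4}{c_{\infty,1}} \int f'^2.$$

This corresponds to (2.5) with parameters p = q = γ = 2. Therefore, item (i) of Theorem 2.1 applies and leads to

$$\frac{4}{c_{\infty,1}} = \Big(\frac{q}{2}\Big)^{\frac{4}{q}} = 1,$$

that is, \(c_{\infty,1} = 4\). Moreover, the extremizers in (2.5) are given by

$$f(x) = a\,e^{-|bx+c|}, \qquad a, c \in {\mathbb R},\ b \neq 0.$$

Finally, the extremizers in (2.1) are of the form \(p=f^2/\int f^2\) with f an extremizer in (2.5). The desired result then follows after a change of variables.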

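Next, let us turn to case (ii), where 1 < α < ∞. Here (2.1) is equivalent to (2.2) and corresponds to (2.6) with p = γ = q = 2 and β = 2(α − 1). Therefore, by Theorem 2.1, \((\frac {4}{c_{\alpha ,1}})^{\frac {\alpha -1}{2}} = H(\frac {1}{\alpha -1},\frac {1}{2})^{\alpha -1}\), so that

$$c_{\alpha,1} = \frac{4}{H(\frac{1}{\alpha-1},\frac{1}{2})^2} = \frac{2\pi}{\alpha-1}\,\Big(\frac{2}{\alpha+1}\Big)^{\frac{\alpha-3}{\alpha-1}} \bigg(\frac{\Gamma(\frac{1}{\alpha-1})}{\Gamma(\frac{\alpha+1}{2(\alpha-1)})}\bigg)^2,$$

where we used the identities \(\Gamma (3/2)=\sqrt {\pi }/2\) and Γ(1 + z) = z Γ(z). This leads to the desired expression for \(c_{\alpha,1}\).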

As for extremizers, item (ii) of Theorem 2.1 applies and asserts that the equality cases in (2.2) are reached, up to numerical factors, for functions f(x) = y(|bx + c|), with b ≠ 0, \(c \in {\mathbb R}\), and \(y \colon [0,\infty ) \to \mathbb {R}\) defined implicitly for t ∈ [0, ∞) by y(t) = u, 0 ≤ u ≤ 1, with

$$\int_u^1 \frac{ds}{s\sqrt{1-s^{2(\alpha-1)}}} = t.$$

Now, item (i) of Lemma 3.2 with a = 2(α − 1) provides the solution \(y(t)=(\cosh ((\alpha -1)\,t))^{-\frac {1}{\alpha -1}}\). Therefore, the extremizers in (2.2) are reached, up to numerical factors, for functions of the form

$$f(x) = \cosh\big((\alpha-1)(bx+c)\big)^{-\frac{1}{\alpha-1}}, \qquad b \neq 0,\ c \in {\mathbb R}.$$

Similarly to case (i), the extremizers in (2.1) are of the form \(p=f^2/\int f^2\) with f an extremizer in (2.2). Therefore, by item (ii) of Lemma 3.2, with some b > 0 and \(c \in {\mathbb R}\),

$$p(x) = \frac{b}{\sqrt{\pi}}\,\frac{\Gamma(\frac{\alpha+1}{2(\alpha-1)})}{\Gamma(\frac{1}{\alpha-1})}\,\cosh(bx+c)^{-\frac{2}{\alpha-1}},$$

as announced.

Finally, let us turn to item (iii), when α ∈ (0, 1). As already mentioned, (2.1) is equivalent to (2.3) and therefore corresponds to (2.6) with p = 2, β = 2(1 − α), γ = 2α, and q = 1 + α. An application of Theorem 2.1 leads to the desired conclusion after some algebra (which we leave to the reader) concerning the explicit value of \(c_{\alpha,1}\). In addition, the extremizers are of the form \(p(x) = a\,y^2(|bx + c|)\), with a a normalization constant, b ≠ 0, and \(c \in {\mathbb R}\). Here y = y(t) is defined implicitly by the equation

$$\int_y^1 \frac{ds}{s^\alpha\sqrt{1-s^{2(1-\alpha)}}} = t$$

for \(t \leq t_0 = \int _0^1\frac {1}{s^\alpha \sqrt {1-s^{2(1-\alpha )}}} ds\) and y(t) = 0 for t > t_0. Item (iii) of Lemma 3.2 asserts that \(t_0 = \frac{\pi}{2(1-\alpha)}\) and

$$y(t) = \cos\big((1-\alpha)\,t\big)^{\frac{1}{1-\alpha}}, \qquad 0 \leq t \leq \frac{\pi}{2(1-\alpha)}.$$

This leads to the desired conclusion. □
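The algebra left to the reader in item (iii) can be cross-checked numerically. Raising (2.6) with p = 2, β = 2(1 − α), γ = 2α, q = 1 + α to the power (1 + α)/(1 − α) and comparing with (2.3) gives \(4/c_{\alpha,1} = \big(\frac{1+\alpha}{2}\,H(\frac{1+\alpha}{2(1-\alpha)}, \frac{1}{2})\big)^2\); this identity is our own bookkeeping. The Python sketch below compares it, together with the relation \(c_{\alpha,1} = 4\,H(\frac{1}{\alpha-1},\frac12)^{-2}\) used in item (ii), against the closed forms of Theorem 3.1, and also checks the bounds on H stated after Theorem 2.1.

```python
import math


def H(u, v):
    """Nagy's function H(u, v), with the convention H(u, 0) = H(0, v) = 1."""
    if u == 0 or v == 0:
        return 1.0
    return math.exp(math.lgamma(1 + u + v) - math.lgamma(1 + u) - math.lgamma(1 + v)
                    + u * math.log(u / (u + v)) + v * math.log(v / (u + v)))


def c_closed(alpha):
    """Closed forms of Theorem 3.1, items (ii) (alpha > 1) and (iii) (0 < alpha < 1)."""
    if alpha > 1:
        return (2 * math.pi / (alpha - 1) * (2 / (alpha + 1)) ** ((alpha - 3) / (alpha - 1))
                * math.exp(2 * (math.lgamma(1 / (alpha - 1))
                                - math.lgamma((alpha + 1) / (2 * (alpha - 1))))))
    return (2 * math.pi / (1 - alpha) * (2 / (1 + alpha)) ** ((1 + alpha) / (1 - alpha))
            * math.exp(2 * (math.lgamma((1 + alpha) / (2 * (1 - alpha)))
                            - math.lgamma(1 / (1 - alpha)))))


def c_from_nagy(alpha):
    """Constant read off from (2.6) with the parameters listed at the start of Sect. 3."""
    if alpha > 1:
        # p = gamma = q = 2, beta = 2(alpha - 1)
        return 4 / H(1 / (alpha - 1), 0.5) ** 2
    # p = 2, beta = 2(1 - alpha), gamma = 2 alpha, q = 1 + alpha
    q, beta = 1 + alpha, 2 * (1 - alpha)
    return 4 / (q / 2 * H(q / beta, 0.5)) ** 2


for a in (0.2, 0.5, 2.0, 3.0):
    print(a, c_from_nagy(a), c_closed(a))
```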

4 Special Orders

As an illustration, here we briefly mention some explicit values of \(c_{\alpha,1}\) and extremizers for specific values of the parameter α in the one dimensional entropic isoperimetric inequality

$$N_\alpha(X)\, I(X) \geq c_{\alpha,1}.$$

The order α = 0

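The limit as α → 0 in item (iii) of Theorem 3.1 leads to the optimal constant

$$c_{0,1} = 4\pi^2.$$

Since all explicit expressions are continuous with respect to α, the limits as α → 0 of the extremizers in (2.1) represent extremizers in (2.1) for α = 0. Therefore, the densities

$$p(x) = \frac{2b}{\pi}\,\cos^2(bx+c)\,1_{[-\frac{\pi}{2},\frac{\pi}{2}]}(bx+c), \qquad b > 0,\ c \in {\mathbb R},$$

are extremizers in (2.1) with α = 0.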

The order \(\alpha =\frac {1}{2}\)

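Direct computation leads to \(c_{\frac {1}{2},1}=(4/3)^3\pi ^2\). Moreover, the extremizers in (2.1) are of the form

$$p(x) = \frac{8b}{3\pi}\,\cos^4(bx+c)\,1_{[-\frac{\pi}{2},\frac{\pi}{2}]}(bx+c), \qquad b > 0,\ c \in {\mathbb R}.$$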

The order α = 1

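This case corresponds to Stam’s isoperimetric inequality for entropies. Here \(c_{1,1} = 2\pi e\), and, using the Stirling formula, one may notice that indeed

$$\lim_{\alpha \to 1} c_{\alpha,1} = 2\pi e.$$

Moreover, Gaussian densities can be obtained (up to normalization) from the extremizers \(p(x)=\cosh (bx+c)^{-\frac {2}{\alpha -1}}\) with \(b=b'\sqrt {\alpha -1}\), \(c=c'\sqrt {\alpha -1}\) in the limit as α ↓ 1. (The limit α ↑ 1 of the extremizers \(\cos(bx+c)^{\frac{2}{1-\alpha}}\) from item (iii) of Theorem 3.1, with \(b = b'\sqrt{1-\alpha}\), \(c = c'\sqrt{1-\alpha}\), leads to the same conclusion.)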
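The Gaussian limit can be observed numerically. The sketch below takes b′ = 1, c′ = 0 (our choice), writes ε = |α − 1|, and measures the largest deviation on [−4, 4] of the unnormalized profiles \(\cosh(\sqrt{\varepsilon}\,x)^{-2/\varepsilon}\) (α ↓ 1, item (ii) of Theorem 3.1) and \(\cos(\sqrt{\varepsilon}\,x)^{2/\varepsilon}\) (α ↑ 1, item (iii)) from \(e^{-x^2}\). A Taylor expansion of log cosh and log cos suggests a deviation of order ε.

```python
import math


def cosh_profile(x, eps):
    # alpha = 1 + eps: cosh(sqrt(eps) x)^(-2/eps), computed through logarithms
    return math.exp(-(2 / eps) * math.log(math.cosh(math.sqrt(eps) * x)))


def cos_profile(x, eps):
    # alpha = 1 - eps: cos(sqrt(eps) x)^(2/eps) on |sqrt(eps) x| < pi/2, zero outside
    y = math.sqrt(eps) * x
    if abs(y) >= math.pi / 2:
        return 0.0
    return math.exp((2 / eps) * math.log(math.cos(y)))


def max_gap(profile, eps, L=4.0, m=801):
    """Largest deviation from exp(-x^2) on a uniform grid of [-L, L]."""
    xs = [-L + 2 * L * k / (m - 1) for k in range(m)]
    return max(abs(profile(x, eps) - math.exp(-x * x)) for x in xs)


for eps in (0.1, 0.01, 0.001):
    print(eps, max_gap(cosh_profile, eps), max_gap(cos_profile, eps))
```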

The order α = 2

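A direct computation leads to \(c_{2,1} = 12\) with extremizers of the form

$$p(x) = \frac{b}{2}\,\cosh(bx+c)^{-2}, \qquad b > 0,\ c \in {\mathbb R}.$$

In this case, the entropic isoperimetric inequality may equivalently be stated in terms of the Fourier transform \(\hat {p}(t) =\int e^{itx}p(x)\,dx\), \(t \in {\mathbb R}\), of the density p. Indeed, thanks to Plancherel’s identity, we have

$$N_2(X) = \Big(\int p(x)^2\,dx\Big)^{-2} = \Big(\frac{1}{2\pi}\int |\hat p(t)|^2\,dt\Big)^{-2}.$$

Therefore, the (optimal) isoperimetric inequality for entropies yields the relation

$$\int |\hat p(t)|^2\,dt \leq \pi\,\sqrt{\frac{I(X)}{3}},$$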

which is a global estimate on the L2-norm of \(\hat {p}\). In [18], Zhang derived the following pointwise estimate: If the random variable X with density p has finite Fisher information I(X), then (see also [3] for an alternative proof)

The latter leads to some bounds on c2,1, namely

Hence \(N_2(X)I(X) \geq 4\), which should be compared with the optimal bound \(N_2(X)I(X) \geq 12\).
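For the α = 2 extremizer \(p(x) = \frac{1}{2}\cosh(x)^{-2}\) (item (ii) of Theorem 3.1 with b = 1, c = 0), the relevant quantities are explicit: \(\int p^2 = 1/3\), I(X) = 4/3, so \(N_2(X)I(X) = 12\), and \(\hat p(t) = \frac{\pi t/2}{\sinh(\pi t/2)}\). The Python sketch below checks these values by quadrature, verifies Plancherel's identity \(\int |\hat p|^2 = 2\pi \int p^2\), and compares the closed form of \(\hat p\) with a direct cosine transform at one frequency (t = 1.3, an arbitrary choice).

```python
import math


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def p(x):
    # alpha = 2 extremizer with b = 1, c = 0
    return 0.5 / math.cosh(x) ** 2


def score(x):
    return -2.0 * math.tanh(x)  # p'(x) / p(x)


def p_hat(t):
    # closed form of int e^{itx} p(x) dx; its value at t = 0 is 1
    if t == 0:
        return 1.0
    return (math.pi * t / 2) / math.sinh(math.pi * t / 2)


L = 40.0
int_p2 = simpson(lambda x: p(x) ** 2, -L, L)
fisher = simpson(lambda x: score(x) ** 2 * p(x), -L, L)
N2 = int_p2 ** -2
int_phat2 = simpson(lambda t: p_hat(t) ** 2, -L, L)
# p is even, so its Fourier transform reduces to a cosine transform
direct = simpson(lambda x: math.cos(1.3 * x) * p(x), -L, L)
print(N2 * fisher, int_phat2, 2 * math.pi * int_p2, direct, p_hat(1.3))
```

Since this p is an extremizer, the relation \(\int |\hat p|^2 \leq \pi\sqrt{I(X)/3}\) holds with equality here (both sides equal 2π/3).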

The order α = 3

Then \(c_{3,1} = \pi^2\), and the extremizers are of the form

$$p(x) = \frac{b}{\pi}\,\cosh(bx+c)^{-1}, \qquad b > 0,\ c \in {\mathbb R}.$$

The order α = ∞

From Theorem 3.1, \(c_{\infty,1} = 4\), and the extremizers are of the form

$$p(x) = \frac{b}{2}\,e^{-|bx+c|}, \qquad b > 0,\ c \in {\mathbb R}.$$

5 Fisher Information in Higher Dimensions

In order to perform the transition from the entropic isoperimetric inequality (1.2) to a Gagliardo–Nirenberg inequality such as (1.8) via the change of functions \(p = f^2/\int f^2\) and back, and to justify the correspondence of the constants in the two types of inequalities, let us briefly fix some definitions and recall some approximation properties of the Fisher information. This is needed because, in general, f in (1.8) need not be square integrable, in which case p is not defined as a probability density.

The Fisher information of a random vector X in \({\mathbb R}^n\) with density p may be defined by means of the formula

$$I(X) = 4\int |\nabla \sqrt{p(x)}|^2\,dx.$$

This functional is well-defined and finite if and only if \(f = \sqrt {p}\) belongs to the Sobolev space \(W_1^2({\mathbb R}^n)\). There is the following characterization: A function f belongs to \(W_1^2({\mathbb R}^n)\), if and only if it belongs to \(L^2({\mathbb R}^n)\) and

In this case, there is a unique vector-function g = (g1, …, gn) on \({\mathbb R}^n\) with components in \(L^2({\mathbb R}^n)\), called a weak gradient of f and denoted g = ∇f, with the property that

As usual, \(C_0^\infty ({\mathbb R}^n)\) denotes the class of all \(C^\infty\)-smooth, compactly supported functions on \({\mathbb R}^n\). Equivalently, there is a representative \(\bar f\) of f which is absolutely continuous on almost all lines parallel to the coordinate axes and whose partial derivatives \(\partial _{x_k} \bar f\) belong to \(L^2({\mathbb R}^n)\). In particular, \(g_k(x) = \partial _{x_k} \bar f(x)\) for almost all \(x \in {\mathbb R}^n\) (cf. [19], Theorems 2.1.6 and 2.1.4).

Applied to \(f = \sqrt {p}\) with a probability density p on \({\mathbb R}^n\), the property that \(f \in W_1^2({\mathbb R}^n)\) ensures that p has a representative \(\bar p\) which is absolutely continuous on almost all lines parallel to the coordinate axes and such that the functions \(\partial _{x_k} \bar p/\sqrt {p}\) belong to \(L^2({\mathbb R}^n)\). Moreover,

Note that \(W_1^2({\mathbb R}^n)\) is a Banach space for the norm defined by

We use the notation Nα(X) = Nα(p) when a random vector X has density p.

Proposition 5.1

Given a (probability) density p on \({\mathbb R}^n\) such that I(p) is finite, there exists a sequence of densities \(p_k \in C_0^\infty ({\mathbb R}^n)\) satisfying as \(k \rightarrow \infty \)

(a) \(I(p_k) \rightarrow I(p)\), and

(b) \(N_\alpha (p_k) \rightarrow N_\alpha (p)\) for any α ∈ (0, ∞), α ≠ 1.

Proof

Let us recall two standard approximation arguments. Fix a non-negative function \(\omega \in C_0^\infty ({\mathbb R}^n)\) supported in the closed unit ball \(\bar B_n(0,1) = \{x \in {\mathbb R}^n: |x| \leq 1\}\) and such that \(\int \omega = 1\), and put \(\omega_\varepsilon(x) = \varepsilon^{-n}\omega(x/\varepsilon)\) for ε > 0. Given a locally integrable function f on \({\mathbb R}^n\), one defines its regularization (mollification) as the convolution

$$f_\varepsilon(x) = (f * \omega_\varepsilon)(x) = \int f(x-y)\,\omega_\varepsilon(y)\,dy.$$

It belongs to \(C^\infty ({\mathbb R}^n)\), has gradient \(\nabla f_\varepsilon = f * \nabla\omega_\varepsilon\), and is non-negative when f is non-negative. From the definition it follows that, if \(f \in L^2({\mathbb R}^n)\), then

$$\|f_\varepsilon - f\|_2 \rightarrow 0 \quad (\varepsilon \rightarrow 0).$$

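Moreover, if \(f \in W_1^2({\mathbb R}^n)\), then, by (5.2)–(5.3), we have \(\nabla f_\varepsilon = \nabla f * \omega_\varepsilon\). Hence

$$\|\nabla f_\varepsilon - \nabla f\|_2 = \|\nabla f * \omega_\varepsilon - \nabla f\|_2 \rightarrow 0 \quad (\varepsilon \rightarrow 0),$$

so that

$$\|f_\varepsilon - f\|_{W_1^2} \rightarrow 0 \quad (\varepsilon \rightarrow 0).$$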

Thus, \(C^\infty ({\mathbb R}^n) \cap W_1^2({\mathbb R}^n)\) is dense in \(W_1^2({\mathbb R}^n)\).

To obtain (a), define \(f = \sqrt {p}\). Given \(\delta \in (0,\frac {1}{2})\), choose ε > 0 such that \(\|f_\varepsilon - f\|{ }_{W_1^2} < \delta \). Let us take a non-negative function \(w \in C_0^\infty ({\mathbb R}^n)\) with w(0) = 1 and consider a sequence

These functions belong to \(C_0^\infty ({\mathbb R}^n)\), and by the Lebesgue dominated convergence theorem, \(u_l \rightarrow f_\varepsilon \) in \(W_1^2({\mathbb R}^n)\) as \(l \rightarrow \infty \). Hence

for some u = ul, which implies

and thus \(\|u\|{ }_2 > \frac {1}{2}\). As a result, the normalized function \(\tilde f = u/\|u\|{ }_2\) satisfies

where we used \(\| f\|{ }_{W_1^2} \geq \| f\|{ }_2 = 1\). This gives

and hence

Here \(\|f\|{ }_{W_1^2}^2 = 1 + I(p)\) and

Eventually, the probability density \(\tilde p = \tilde f^2\) satisfies

With \(\delta = \delta _k \rightarrow 0\), we therefore obtain a sequence \(p_k = \tilde p\) such that \(I(p_k) \rightarrow I(p)\) as \(k \rightarrow \infty \), thus proving (a).

Let us see that similar functions pk may be used in (b) when

which corresponds to the case where \(N_\alpha(p) = 0\) for α > 1 and \(N_\alpha(p) = \infty\) for 0 < α < 1. Returning to the previously defined functions \(u_l\), we observe that \(\|u_l\|_{2\alpha } \rightarrow \|f_\varepsilon \|_{2\alpha }\) as \(l \rightarrow \infty \). Hence, it is sufficient to check that \(\|f_\varepsilon \|_{2\alpha } \rightarrow \|f\|_{2\alpha } = \infty \) for some sequence \(\varepsilon = \varepsilon _k \rightarrow 0\). Indeed, since \(\|f\|_2 = 1\), the function f is locally integrable, implying that \(f_\varepsilon (x) \rightarrow f(x)\) as \(\varepsilon \rightarrow 0\) for almost all points \(x \in {\mathbb R}^n\). This follows from (5.2) and the Lebesgue differentiation theorem, which yields

Hence, by Fatou’s lemma, \(\|f\|{ }_{2\alpha } \leq \liminf _{\varepsilon \rightarrow 0} \|f_\varepsilon \|{ }_{2\alpha }\), and we are done.

Now, let us turn to the basic case where \(\int p(x)^\alpha \, dx < \infty \), α ∈ (0, ∞). To prove (b), we borrow arguments from the proof of Theorem 2.3.2 in [19]. Consider a partition \(\{w_i\}_{i=0}^\infty \) of unity of \({\mathbb R}^n\) subordinate to the covering \(G_i = B_n(0,i+1)\setminus \bar B_n(0,i-1)\), in which Bn(0, −1) = Bn(0, 0) = ∅. Every function wi is supposed to be in \(C_0^\infty ({\mathbb R}^n)\) with a support lying in Gi, to be non-negative, and all of them satisfy

As before, let \(f = \sqrt {p}\). Given \(0 < \delta < \frac {1}{2}\), for each i ≥ 0 choose εi > 0 small enough such that \((w_i f)_{\varepsilon _i}\) is still supported in Gi and

The latter is possible due to the property (5.3) applied to wif.

By the integrability assumption on p, we have ∥wif∥2α < ∞, implying

as long as 2α ≥ 1. Since \(f \in L^2({\mathbb R}^n)\), we similarly have \(\|(w_i f)_\varepsilon - w_i f\|{ }_2 \rightarrow 0\). The latter implies that (5.8) holds in the case 2α < 1 as well, since wif is supported on a bounded set. Therefore, in addition to (5.7), we may require that

Now, by (5.6), \(f(x) = \sum _{i=0}^\infty w_i(x) f(x)\), where the series contains only finitely many non-zero terms. More precisely,

Similarly, for the function \(u(x) = \sum _{i=0}^\infty (w_i(x) f(x))_{\varepsilon _i}\), we have

This equality shows that u is non-negative and belongs to the class \(C_0^\infty ({\mathbb R}^n)\). In addition, by (5.7),

Hence

and repeating the arguments from the previous step, we arrive at the bound (5.5) for the density \(\tilde p = \tilde f^2\) with \(\tilde f = u/\|u\|{ }_2\).

Next, if \(\alpha \geq \frac {1}{2}\), by the triangle inequality in L2α, from (5.9) we also get ∥u − f∥2α < δ, so

If \(\alpha < \frac {1}{2}\), then, applying the inequality \((a_1 + \dots + a_N)^{2\alpha } \leq a_1^{2\alpha } + \dots + a_N^{2\alpha }\) (ak ≥ 0), from (5.9) we deduce that

This yields

and therefore, by Jensen’s inequality,

In view of (5.10), inequalities similar to (5.11)–(5.12) also hold for the function \(\tilde f = u/\|u\|_2\) in place of u. Applying this with \(\delta = \delta _k \rightarrow 0\), we obtain a sequence \(\tilde f_k\) such that the probability densities \(\tilde p_k = \tilde f_k^2\) satisfy (a)–(b) for any α ≠ 1. □

Corollary 5.2

For any α > 0, α ≠ 1, the infimum

may be restricted to the class of compactly supported, \(C^\infty\)-smooth densities p on \({\mathbb R}^n\) with finite Fisher information.

6 Two Dimensional Isoperimetric Inequalities for Entropies

In this section we deal with dimension n = 2. As will be clarified, the entropic isoperimetric inequality

$$N_\alpha(X)\, I(X) \geq c_{\alpha,2} \tag{6.1}$$

holds true for any α ∈ [0, ∞) with a positive constant \(c_{\alpha,2}\) and does not hold for α = ∞, which answers Question 1 of the introduction. In addition, we will give a certain description of the optimal constants \(c_{\alpha,2}\) in (6.1) for the range \(\alpha \in [\frac {1}{2},\infty )\), thus partially answering Question 2.

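When n = 2, the family of inequalities (1.6) now takes the form

$$\|f\|_{2\alpha} \leq \Big(\frac{4}{c_{\alpha,2}}\Big)^{\frac{\theta}{2}} \|\nabla f\|_2^{\theta}\, \|f\|_2^{1-\theta} \tag{6.2}$$

with \(\theta =\frac {\alpha -1}{\alpha }\) when α > 1, and

$$\|f\|_2 \leq \Big(\frac{4}{c_{\alpha,2}}\Big)^{\frac{\theta}{2}} \|\nabla f\|_2^{\theta}\, \|f\|_{2\alpha}^{1-\theta} \tag{6.3}$$

with θ = 1 − α when α ∈ (0, 1).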

Both inequalities enter the framework of Gagliardo–Nirenberg’s inequality (1.8). The best constants and extremizers in (1.8) are not known for all admissible parameters. The most recent paper on this topic is due to Liu and Wang [10] (see references therein and historical comments). The case q = s = 2 in (1.8) that corresponds to (6.2) with r = 2α goes back to Weinstein [17] who related the best constants to the solutions of non-linear Schrödinger equations.

We now present the part of the results of [10] that is useful for us. Since we will use them for any dimension n ≥ 2, the next statement is not restricted to the case n = 2. Also, since all the inequalities of interest for us involve only the L²-norm of the gradient, we may restrict ourselves to q = 2 for simplicity, when (1.8) becomes

$$\|f\|_r \leq C\, \|\nabla f\|_2^{\theta}\, \|f\|_s^{1-\theta} \tag{6.4}$$

with parameters satisfying 1 ≤ r, s ≤ ∞, 0 ≤ θ ≤ 1, and \(\frac {1}{r} = \theta (\frac {1}{2} - \frac {1}{n}) + (1-\theta )\,\frac {1}{s}\). This inequality may be restricted to the class of all smooth, compactly supported functions f ≥ 0 on \({\mathbb R}^n\). Once (6.4) holds in \(C_0^\infty ({\mathbb R}^n)\), this inequality is extended by regularization and density arguments to the Sobolev space of functions \(f \in L^s({\mathbb R}^n)\) such that \(|\nabla f| \in L^2({\mathbb R}^n)\) (the gradients in this space are understood in a weak sense).

The next statement relates the optimal constant in (6.4) to the solutions of the non-linear ordinary differential equation

$$u''(t) + \frac{n-1}{t}\,u'(t) + u(t)^{r-1} = u(t)^{s-1} \tag{6.5}$$

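on the positive half-axis. Put

$$\sigma = \frac{n+2}{n-2} \quad \text{for } n \geq 3, \qquad \sigma = \infty \quad \text{for } n = 2.$$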

We denote by |x| the Euclidean norm of a vector \(x \in {\mathbb R}^n\).

Theorem 6.1 ([10])

In the range 1 ≤ s < σ, s < r < σ + 1,

where the functions ur,s = ur,s(t) are defined for t ≥ 0 as follows.

(i) If s < 2, then \(u_{r,s}\) is the unique positive decreasing solution to the equation (6.5) in 0 < t < T (for some T), satisfying u′(0) = 0, u(T) = u′(T) = 0, and u(t) = 0 for all t ≥ T.

(ii) If s ≥ 2, then \(u_{r,s}\) is the unique positive decreasing solution to (6.5) in t > 0, satisfying u′(0) = 0 and \(\lim _{t \rightarrow \infty } u(t)=0\).

Moreover, the extremizers in (6.4) exist and have the form f(x) = aur,s(|bx + c|) with \(a \in {\mathbb R}\), b ≠ 0, \(c \in {\mathbb R}^n\).

Note that (6.2) corresponds to Gagliardo–Nirenberg’s inequality (6.4) with s = 2, r = 2α, and \(\theta =\frac {\alpha -1}{\alpha }\) for α > 1, while (6.3) with \(\alpha \in [\frac {1}{2},1)\) corresponds to (6.4) with r = 2, s = 2α, and θ = 1 − α. Applying Corollary 5.2, we therefore conclude that

Together with Liu–Wang’s theorem, we immediately get the following corollary, where we put as before

Corollary 6.2

(i) For any α > 1, we have
$$c_{\alpha,2}= 4 (\alpha-1)\, \alpha^{-\frac{1}{\alpha-1}} M_2,$$
where \(M_2\) is defined for the unique positive decreasing solution u(t) on (0, ∞) to the equation \(u''(t)+\frac {u'(t)}{t}+u(t)^{2\alpha -1}=u(t)\) with u′(0) = 0 and \(\lim _{t \rightarrow \infty } u(t)=0\).

(ii) For any \(\alpha \in [\frac {1}{2},1)\), we have
$$c_{\alpha,2}= 4 (1-\alpha)\, \alpha^{\frac{\alpha}{1-\alpha}} M_{2\alpha},$$
where \(M_{2\alpha}\) is defined for the unique positive decreasing solution u(t) to \(u''(t)+\frac {1}{t}u'(t)+u(t)=u(t)^{2\alpha -1}\) in 0 < t < T with u′(0) = 0, u(T) = u′(T) = 0, and u(t) = 0 for all t ≥ T.

In both cases the extremizers in (6.1) are densities of the form \(p(x)=\frac {b^2}{M}u^2(|bx+c|)\), \(x \in \mathbb {R}^2\), with b > 0 and \(c \in {\mathbb R}^2\).

So far, we have seen that (6.1) holds for any α ∈ [1∕2, ∞). Since, as observed in the introduction, \(\alpha \mapsto c_{\alpha,n}\) is non-increasing, (6.1) also holds for α < 1∕2 and therefore for any α ∈ [0, ∞). Note that the case α = 1, which is formally not contained in the results above, is the classical isoperimetric inequality for entropies (1.1). Let us now explain why (6.1) cannot hold for α = ∞. The functional form of (6.1) for α = ∞ should be the limit case of (6.2) as \(\alpha \rightarrow \infty \), when it becomes

$$\|f\|_\infty^2 \leq D \int |\nabla f|^2 \tag{6.6}$$

with \(D = 4/c_{\infty,2}\). To see that (6.6) cannot hold with any constant D, we reproduce Example 1.1.1 in [14]. Let, for \(x \in \mathbb {R}^2\),

Then, passing to radial coordinates, we have

while f is not bounded. In fact, (6.6) is also violated for a sequence of smooth bounded approximations of f.

7 Isoperimetric Inequalities for Entropies in Dimension n = 3 and Higher

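One observes two different behaviors, for n = 3, 4 and for n ≥ 5, in the entropic isoperimetric inequality

$$N_\alpha(X)\, I(X) \geq c_{\alpha,n}. \tag{7.1}$$

Let us rewrite the inequality (1.6) separately for the three natural regions, namely as

$$\|f\|_{2\alpha} \leq \Big(\frac{4}{c_{\alpha,n}}\Big)^{\frac{\theta}{2}} \|\nabla f\|_2^{\theta}\, \|f\|_2^{1-\theta} \tag{7.2}$$

with \(\theta =\frac {n(\alpha -1)}{2\alpha }\) when \(1 < \alpha \leq \frac {n}{n-2}\),

$$\|f\|_{2\alpha}^{\theta}\, \|f\|_2^{1-\theta} \leq \Big(\frac{4}{c_{\alpha,n}}\Big)^{\frac{1}{2}} \|\nabla f\|_2 \tag{7.3}$$

with \(\theta =\frac {2\alpha }{n(\alpha -1)}\) when \(\alpha > \frac {n}{n-2}\) (observe that θ ∈ (0, 1) in this case), and finally

$$\|f\|_2 \leq \Big(\frac{4}{c_{\alpha,n}}\Big)^{\frac{\theta}{2}} \|\nabla f\|_2^{\theta}\, \|f\|_{2\alpha}^{1-\theta} \tag{7.4}$$

with \(\theta =\frac {n(1-\alpha )}{\alpha (2-n)+n}\) when α ∈ (0, 1).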
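The exponents θ in (7.2) and (7.4) are exactly those for which the scaling relation \(\frac1r = \theta(\frac12 - \frac1n) + (1-\theta)\frac1s\) of (1.8) with q = 2 holds, with (r, s) = (2α, 2) and (r, s) = (2, 2α), respectively; for n = 2 the same formulas reduce to the values of θ in (6.2) and (6.3). The Python sketch below verifies this in exact rational arithmetic with `fractions.Fraction`, and also checks that θ reaches 1 at α = n/(n − 2) and that the exponent in (7.3) lies in (0, 1). The helper names are hypothetical.

```python
from fractions import Fraction as Fr


def gn_defect(theta, r, s, n):
    """1/r - [theta (1/2 - 1/n) + (1 - theta)/s]; zero iff the exponents balance (q = 2)."""
    return Fr(1) / r - (theta * (Fr(1, 2) - Fr(1, n)) + (1 - theta) / s)


def theta_72(n, a):
    # (6.2) for n = 2 and (7.2) for n >= 3, with r = 2a, s = 2
    return n * (a - 1) / (2 * a)


def theta_74(n, a):
    # (6.3) for n = 2 and (7.4) for n >= 3, with r = 2, s = 2a
    return n * (1 - a) / (a * (2 - n) + n)


def theta_73(n, a):
    # exponent in (7.3), meaningful for a > n/(n-2)
    return 2 * a / (n * (a - 1))


for n in (2, 3, 5):
    a = Fr(3, 2)
    print(n, theta_72(n, a), gn_defect(theta_72(n, a), 2 * a, 2, n))
```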

Both (7.2) and (7.4) enter the framework of the Gagliardo–Nirenberg inequality (1.8). As for (7.3), we will show that such an inequality cannot hold. To that aim, we need to introduce the limiting case θ = 1 in (7.2), which corresponds to \(\alpha =\frac {n}{n-2}\). It amounts to the classical Sobolev inequality

$$\|f\|_{\frac{2n}{n-2}} \leq S_n\, \|\nabla f\|_2, \tag{7.5}$$

which is known to hold true with the best constant

$$S_n = \frac{1}{\sqrt{\pi n(n-2)}}\,\Big(\frac{\Gamma(n)}{\Gamma(\frac{n}{2})}\Big)^{\frac{1}{n}}.$$

Moreover, the only extremizers in (7.5) have the form

$$f(x) = \frac{a}{(1+b|x-x_0|^2)^{\frac{n-2}{2}}}, \qquad a \in {\mathbb R},\ b > 0,\ x_0 \in {\mathbb R}^n$$

(sometimes called the Barenblatt profile), see [1, 7, 16]. If \(f \in L^2({\mathbb R}^n)\) and \(|\nabla f| \in L^2({\mathbb R}^n)\), then, by (7.3), we would have \(f \in L^p({\mathbb R}^n)\) with \(p = 2\alpha > \frac {2n}{n-2}\), which contradicts the Sobolev embeddings (the space \(W_1^2({\mathbb R}^n)\) is not contained in \(L^p({\mathbb R}^n)\) for \(p > \frac{2n}{n-2}\)). Therefore (7.3) cannot be true, so that (7.1) holds only for \(\alpha \in [0,\frac {n}{n-2}]\).
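Assuming the classical Aubin–Talenti value of the best constant in (7.5), namely \((\pi n(n-2))^{-1/2}(\Gamma(n)/\Gamma(n/2))^{1/n}\), the substitution \(p = f^2/\int f^2\) gives \(N_\alpha(X) I(X) = 4\|\nabla f\|_2^2/\|f\|_{2n/(n-2)}^2\) at α = n/(n − 2), which suggests \(c_{\frac{n}{n-2},n} = 4\pi n(n-2)(\Gamma(\frac n2)/\Gamma(n))^{2/n}\). This identification is our reading of (7.5) combined with the density argument of Corollary 5.2. The Python sketch below evaluates this quantity and its distance to 2πen.

```python
import math


def c_critical(n):
    """4 / S_n^2 with the Aubin-Talenti constant S_n (assumed value), for n >= 3:
    4 * pi * n * (n - 2) * (Gamma(n/2) / Gamma(n))^(2/n)."""
    return 4 * math.pi * n * (n - 2) * math.exp((2 / n) * (math.lgamma(n / 2) - math.lgamma(n)))


for n in (3, 4, 5, 10, 100, 10_000):
    print(n, c_critical(n), c_critical(n) - 2 * math.pi * math.e * n)

# Candidate limit of c_critical(n) - 2*pi*e*n, from a Stirling expansion
print(-2 * math.pi * math.e * (2 - math.log(2)))
```

The difference stays bounded (the Stirling expansion suggests it tends to −2πe(2 − log 2) ≈ −22.3), in line with the asymptotics 2πen + O(1) mentioned in the introduction.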

As for the value of the best constant cα,n in (7.1) and the form of the extremizers, we need to use again Theorem 6.1 which can, however, be applied only for n ≤ 5. As in Corollary 6.2, we adopt the notation

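for a function u satisfying the non-linear ordinary differential equation

$$u''(t) + \frac{n-1}{t}\,u'(t) + u(t)^{2\alpha-1} = u(t) \tag{7.7}$$

or (in a different scenario)

$$u''(t) + \frac{n-1}{t}\,u'(t) + u(t) = u(t)^{2\alpha-1}. \tag{7.8}$$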

Corollary 7.2

Let 3 ≤ n ≤ 5.

-

(i)

For any \(1 < \alpha < \frac {n}{n-2}\), we have

$$\displaystyle \begin{aligned} c_{\alpha,n} = \frac{2n(\alpha-1)}{\alpha}\, \Big( \frac{2\alpha}{\alpha(2-n)+n} \Big)^{\frac{n(\alpha-1)-2}{n(\alpha-1)}}\, M_2^{\frac{2}{n}}, \end{aligned}$$where M2 is defined for the unique positive decreasing solution u(t) to (7.7) on (0, ∞) with u′(0) = 0 and \(\lim _{t \rightarrow \infty } u(t)=0\).

(ii) For any \(\alpha \in [\frac {1}{2},1)\),

$$\displaystyle \begin{aligned} c_{\alpha,n}= 4\, \frac{n(1-\alpha)}{\alpha(2-n)+n}\, \Big( \frac{2\alpha}{\alpha(2-n)+n}\Big)^{\frac{2\alpha}{n(1-\alpha)}}\, M_{2\alpha}^{\frac{2}{n}} \end{aligned}$$

where \(M_{2\alpha}\) is defined in terms of the unique positive decreasing solution u(t) to (7.8) with u′(0) = 0, u(T) = u′(T) = 0 for some T > 0, and u(t) = 0 for all t ≥ T.

In both cases, the extremizers in (7.1) are densities of the form \(p(x)=\frac {b}{M}u^2(|bx+c|)\), \(x \in {\mathbb R}^n\), with b > 0 and \(c \in {\mathbb R}^n\).

For the critical value \(\alpha = \frac {n}{n-2}\), the picture is more complete, but different.

Corollary 7.3

Let n ≥ 3 and \(\alpha = \frac {n}{n-2}\). Then

(i) For n = 3 and n = 4, (7.1) has no extremizers, i.e., there is no density p for which equality holds in (7.1) with the optimal constant.

(ii) For n ≥ 5, extremizers in (7.1) exist and have the form

$$\displaystyle \begin{aligned} p(x)=\frac{a}{(1+b|x - x_0|{}^2)^{n-2}}, \quad a,b>0, \ x_0 \in {\mathbb R}^n.{} \end{aligned} $$(7.9)

Remark 7.4

Recall that \(c_{1,n} = 2\pi e n\). Using the Stirling formula, it is easy to see that, for \(\alpha = \frac {n}{n-2}\),

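In particular, since \(c_{\alpha,n}\) is non-increasing in α (as \(N_\alpha\) is), we have \(c_{\alpha,n} \geq 2\pi e n - c_0\) for all \(0 \leq \alpha \leq \frac {n}{n-2}\) with some absolute constant \(c_0 > 0\). To get a similar upper bound, it suffices to test (7.1) with α = 0 on specific probability distributions, because \(c_{\alpha,n} \leq c_{0,n}\) for all α. In this case, the inequality becomes

$$\displaystyle \begin{aligned} \mathrm{vol}_n(\mathrm{supp}(p))^{2/n}\, I(X) \geq c_{0,n}. {} \end{aligned} $$(7.10)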

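Suppose that the random vector \(X = (X_1, \dots, X_n)\) in \({\mathbb R}^n\) has independent components, each with density \(w(s) = \frac {2}{\pi }\,\cos ^2(s)\), \(|s| \leq \frac {\pi }{2}\). As already mentioned in Sect. 4, this one-dimensional probability distribution is extremal in the entropic isoperimetric inequality (1.2) for α = 0. The random vector X has density

$$\displaystyle \begin{aligned} p(x) = \Big(\frac{2}{\pi}\Big)^n \prod_{k=1}^n \cos^2(x_k), \quad x = (x_1,\dots,x_n) \in \Big[-\frac{\pi}{2}, \frac{\pi}{2}\Big]^n, \end{aligned}$$

so that, since Fisher information is additive over independent components,

$$\displaystyle \begin{aligned} N_0(X) = \mathrm{vol}_n(\mathrm{supp}(p))^{2/n} = \pi^2, \qquad I(X) = n \int_{-\pi/2}^{\pi/2} \frac{w'(s)^2}{w(s)}\,ds = n\, \frac{8}{\pi}\int_{-\pi/2}^{\pi/2} \sin^2 s\, ds = 4n. \end{aligned}$$

Therefore, from (7.10) we may conclude that \(c_{0,n} \leq 4\pi^2 n\).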
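For the product density built from \(w(s) = \frac{2}{\pi}\cos^2 s\), the support is the cube \([-\frac{\pi}{2}, \frac{\pi}{2}]^n\) of volume \(\pi^n\), so \(N_0(X) = \pi^2\), and \(I(X) = n\,I(w)\) with \(I(w) = 4\). The Python sketch below checks these two numbers numerically with a midpoint rule (our choice of quadrature; the helper names are hypothetical).

```python
import math


def w(s):
    """Density (2/π) cos^2 s on [-π/2, π/2]."""
    return 2 / math.pi * math.cos(s) ** 2


def w_prime(s):
    """Derivative of w inside the support."""
    return -4 / math.pi * math.cos(s) * math.sin(s)


def fisher_1d(steps=20_000):
    """Midpoint-rule approximation of I(w) = ∫ w'(s)^2 / w(s) ds over (-π/2, π/2)."""
    a, b = -math.pi / 2, math.pi / 2
    h = (b - a) / steps
    total = 0.0
    for k in range(steps):
        s = a + (k + 0.5) * h  # midpoints never hit the zeros of w at ±π/2
        total += w_prime(s) ** 2 / w(s)
    return total * h


def n0_times_fisher(n, steps=20_000):
    """N_0(X) I(X) for X with independent coordinates of density w.

    N_0(X) = vol_n(supp p)^(2/n) with supp p = [-π/2, π/2]^n of volume π^n,
    and Fisher information adds up over independent coordinates.
    """
    n0 = (math.pi ** n) ** (2 / n)
    return n0 * n * fisher_1d(steps)
```

Since \(w'(s)^2/w(s) = \frac{8}{\pi}\sin^2 s\) is a trigonometric polynomial over a full period, the midpoint rule is essentially exact here, and `n0_times_fisher(n)` reproduces \(4\pi^2 n\).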

Proof of Corollaries 7.2 – 7.3

The first corollary is obtained by a straightforward application of Theorem 6.1 with

when \(1 < \alpha < \frac {n}{n-2}\), and with

when α ∈ (0, 1). Details are left to the reader.

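For the second corollary, we first observe that (7.2) can be recast for n ≥ 3 and \(\alpha = \frac {n}{n-2}\) as

$$\displaystyle \begin{aligned} \|f\|_{\frac{2n}{n-2}}^2 \leq \frac{4}{c_{\alpha,n}}\, \|\nabla f\|_2^2. {} \end{aligned} $$(7.11)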

Therefore \(\frac {4}{c_{\alpha ,n}}=S_n^2\), from which the explicit value of \(c_{\alpha,n}\) follows (recalling Corollary 5.2).
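Assuming the classical Aubin–Talenti value \(S_n = (\pi n(n-2))^{-1/2}\,(\Gamma(n)/\Gamma(n/2))^{1/n}\), the relation \(4/c_{\alpha,n} = S_n^2\) gives \(c_{n/(n-2),n} = 4\pi n(n-2)\,(\Gamma(n/2)/\Gamma(n))^{2/n}\). The Python sketch below (helper names hypothetical) evaluates this with log-gamma to avoid overflow and compares it with \(c_{1,n} = 2\pi e n\), which is the comparison made in Remark 7.4.

```python
import math


def sobolev_constant(n):
    """Aubin-Talenti constant S_n in ||f||_{2n/(n-2)} <= S_n ||∇f||_2, n >= 3 (assumed closed form)."""
    log_ratio = math.lgamma(n) - math.lgamma(n / 2)  # log(Γ(n) / Γ(n/2))
    return math.exp(log_ratio / n) / math.sqrt(math.pi * n * (n - 2))


def critical_constant(n):
    """c_{α,n} at α = n/(n-2), via the relation 4 / c_{α,n} = S_n^2."""
    return 4 / sobolev_constant(n) ** 2


def gap_to_shannon(n):
    """c_{n/(n-2),n} - c_{1,n}, where c_{1,n} = 2πen."""
    return critical_constant(n) - 2 * math.pi * math.e * n
```

For n = 3 this gives \(12(\pi/2)^{4/3} \approx 21.91\). By our own Stirling computation, `gap_to_shannon(n)` tends to \(-2\pi e(2 - \log 2) \approx -22.32\) as n grows, which is consistent with the bound \(c_{\alpha,n} \geq 2\pi e n - c_0\) in Remark 7.4.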

Now, in order to analyze the question of extremizers in (7.1), suppose that equality holds in it for some (probability) density p on \({\mathbb R}^n\). In particular, we should assume that the function \(f = \sqrt {p}\) belongs to \(W_1^2({\mathbb R}^n)\). Rewriting (7.1) in terms of f, we then obtain an equality in (7.11), which is the same as (7.5). As mentioned earlier, this implies that f must be of the form (7.6), thus leading to (7.9). However, whether or not this function p is integrable depends on the dimension. Using polar coordinates, one immediately realizes that

$$\displaystyle \begin{aligned} \int_{{\mathbb R}^n} \frac{dx}{(1+b|x - x_0|{}^2)^{n-2}} \end{aligned}$$

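is finite if and only if \(\int _1^\infty \frac {r^{n-1}}{r^{2(n-2)}}\,dr = \int _1^\infty \frac {dr}{r^{n-3}}\) is finite, since the integrand is bounded near \(x_0\). The latter integral converges if and only if n − 3 > 1, that is, n ≥ 5. □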
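The integrability threshold can be made quantitative. For n ≥ 5, the total mass of the profile in (7.9) has a closed form via the Beta function, which fixes a in terms of b. The Python sketch below (helper names hypothetical) evaluates it and cross-checks it by quadrature after the substitution r = tan t, under which the radial integrand becomes \(\sin^{n-1}t\,\cos^{n-5}t\). The exponent n − 5 shows once more that the integral is finite exactly when n ≥ 5.

```python
import math


def profile_mass(n, b=1.0):
    """Integral over R^n of (1 + b|x - x0|^2)^(-(n-2)); math.inf when it diverges.

    In polar coordinates the integrand is r^(n-1) (1 + b r^2)^(-(n-2)) ~ r^(-(n-3))
    at infinity, which is integrable iff n >= 5. For s > n/2 one has
    ∫ (1 + |x|^2)^(-s) dx = π^(n/2) Γ(s - n/2) / Γ(s); here s = n - 2,
    and the dilation x -> sqrt(b) x contributes the factor b^(-n/2).
    """
    if n < 5:
        return math.inf
    return math.pi ** (n / 2) * math.gamma(n / 2 - 2) / math.gamma(n - 2) * b ** (-n / 2)


def profile_mass_quadrature(n, steps=20_000):
    """Cross-check for b = 1 and n >= 5: substituting r = tan t turns the radial
    integral into |S^(n-1)| ∫_0^(π/2) sin^(n-1) t cos^(n-5) t dt (midpoint rule)."""
    sphere = 2 * math.pi ** (n / 2) / math.gamma(n / 2)  # surface area of S^(n-1)
    h = (math.pi / 2) / steps
    total = sum(math.sin((k + 0.5) * h) ** (n - 1) * math.cos((k + 0.5) * h) ** (n - 5)
                for k in range(steps))
    return sphere * total * h
```

For n = 5 and b = 1 the mass is \(\pi^3/2\), so the normalizing constant in (7.9) would be \(a = 2/\pi^3\); for n = 3, 4 no choice of a > 0 normalizes the profile, in line with Corollary 7.3(i).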

References

[1] T. Aubin, Problèmes isopérimétriques et espaces de Sobolev. J. Differ. Geom. 11, 573–598 (1976)

[2] W. Beckner, Asymptotic estimates for Gagliardo-Nirenberg embedding constants. Potential Anal. 17, 253–266 (2002)

[3] S.G. Bobkov, G.P. Chistyakov, F. Götze, Fisher information and the central limit theorem. Probab. Theory Relat. Fields 159(1–2), 1–59 (2014)

[4] S.G. Bobkov, N. Gozlan, C. Roberto, P.-M. Samson, Bounds on the deficit in the logarithmic Sobolev inequality. J. Funct. Anal. 267(11), 4110–4138 (2014)

[5] E.A. Carlen, Superadditivity of Fisher’s information and logarithmic Sobolev inequalities. J. Funct. Anal. 101(1), 194–211 (1991)

[6] M.H.M. Costa, T.M. Cover, On the similarity of the entropy power inequality and the Brunn-Minkowski inequality. IEEE Trans. Inform. Theory 30, 837–839 (1984)

[7] M. Del Pino, J. Dolbeault, Best constants for Gagliardo-Nirenberg inequalities and applications to nonlinear diffusions. J. Math. Pures Appl. (9) 81(9), 847–875 (2002)

[8] A. Dembo, T.M. Cover, J. Thomas, Information theoretic inequalities. IEEE Trans. Inform. Theory 37(6), 1501–1518 (1991)

[9] L. Gross, Logarithmic Sobolev inequalities. Am. J. Math. 97, 1061–1083 (1975)

[10] J.-G. Liu, J. Wang, On the best constant for Gagliardo-Nirenberg interpolation inequalities. Preprint (2017). Available at http://arxiv.org/abs/1712.10208v1

[11] J. Moser, On Harnack’s theorem for elliptic differential equations. Commun. Pure Appl. Math. 14, 577–591 (1961)

[12] J. Moser, A Harnack inequality for parabolic differential equations. Commun. Pure Appl. Math. 17, 101–134 (1964); correction in 20, 231–236 (1967)

[13] B. Nagy, Über Integralungleichungen zwischen einer Funktion und ihrer Ableitung. Acta Univ. Szeged. Sect. Sci. Math. 10, 64–74 (1941)

[14] L. Saloff-Coste, Aspects of Sobolev-Type Inequalities, vol. 289 (Cambridge University Press, Cambridge/New York, 2002)

[15] A.J. Stam, Some inequalities satisfied by the quantities of information of Fisher and Shannon. Inform. Control 2, 101–112 (1959)

[16] G. Talenti, Best constant in Sobolev inequality. Ann. Mat. Pura Appl. 110, 353–372 (1976)

[17] M.I. Weinstein, Nonlinear Schrödinger equations and sharp interpolation estimates. Commun. Math. Phys. 87, 567–576 (1983)

[18] Z. Zhang, Inequalities for characteristic functions involving Fisher information. C. R. Math. Acad. Sci. Paris 344(5), 327–330 (2007)

[19] W.P. Ziemer, Weakly Differentiable Functions. Sobolev Spaces and Functions of Bounded Variation. Graduate Texts in Mathematics, vol. 120 (Springer, New York, 1989), xvi+308pp

Acknowledgements

Research of the first author was partially supported by the NSF grant DMS-2154001. Research of the second author was partially supported by the grants ANR-15-CE40-0020-03 – LSD – Large Stochastic Dynamics, ANR 11-LBX-0023-01 – Labex MME-DII, and the Fondation Simone et Cino Del Duca in France. This research has been conducted within the FP2M federation (CNRS FR 2036).


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Bobkov, S.G., Roberto, C. (2023). Entropic Isoperimetric Inequalities. In: Adamczak, R., Gozlan, N., Lounici, K., Madiman, M. (eds) High Dimensional Probability IX. Progress in Probability, vol 80. Birkhäuser, Cham. https://doi.org/10.1007/978-3-031-26979-0_3


DOI: https://doi.org/10.1007/978-3-031-26979-0_3


Publisher Name: Birkhäuser, Cham

Print ISBN: 978-3-031-26978-3

Online ISBN: 978-3-031-26979-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)