Abstract

Owing to advances in machine learning and visual action recognition, Handwritten Digit Recognition (HDR) has become important to many research groups and increasingly relevant to society. Because Arabic digits are more complex than English digits, new recognition models continue to be developed rapidly. Convolutional Neural Networks (CNNs) have the advantage of being able to extract and use feature data. A CNN consists of several layers: each layer performs a specific operation and passes its output to the next layer. In this work, image-processing operations were used as convolutional layers in the first layer to build different CNNs. Apart from the input and output layers, which perform small matrix operations, all neurons in a layer generally perform the same routine arithmetic computations. The machine learning methods were trained and evaluated on the MNIST database, and the results were further improved using an enhancement method.




1 Introduction

Compared with other artificial neural networks (ANNs), a Convolutional Neural Network (CNN) can conveniently extract and use feature vectors to recognise two-dimensional shapes with high accuracy and a high degree of invariance to translation, scaling, and other distortions. Early work in this area used CNN layers to recognise digits and characters [1]. CNN technology is simple and therefore easy to implement. The MNIST dataset can be used for training and recognition; it is intended primarily for classifying the ten digit classes. In total, 85,000 images are available for training and validation, and each digit is represented as a 32 × 32 greyscale image [2]. The pixel values are fed to the CNN input layer, and the hidden layers that follow usually contain two sets of convolutional layers. Their output is then passed to fully connected layers, and a softmax classifier assigns the digit labels. Researchers can implement this classifier with tools such as Python, OpenCV, Django, or TensorFlow [3].

The proposed CNN framework aims to achieve high accuracy while reducing operational uncertainty and cost. To identify the best learning parameters for configuring a CNN, the investigators reviewed existing HDR work and the mathematical foundations of neural networks. A hybrid set of mathematical and geometric features has been used to capture both local and global characteristics of the digit samples [4]; that method uses a genetic algorithm to select the best features and a nearest-neighbour classifier to evaluate robustness on the handwritten digit dataset. For the recognition of isolated handwritten words, [5] proposed a deep CNN that is effective at extracting useful visual features from an image. That approach was evaluated on two handwriting datasets (IAM and RIMES) across several experiments to determine the model's optimal parameters [6].
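The data flow described above (input layer, two convolution and pooling stages, fully connected layers, softmax output) can be traced as a sequence of feature-map shapes. The sketch below is illustrative only: the 32 × 32 input, 5 × 5 "valid" convolutions, 2 × 2 pooling and the channel counts 6 and 16 are LeNet-5-style assumptions, not values taken from this chapter.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(input_size: int = 32, channels=(6, 16), kernel: int = 5, pool: int = 2):
    """Return (stage, channels, height, width) for each stage and the flattened feature size."""
    size, ch = input_size, 1
    shapes = [("input", ch, size, size)]
    for i, out_ch in enumerate(channels, start=1):
        size, ch = conv_out(size, kernel), out_ch   # 'valid' convolution, stride 1
        shapes.append((f"conv{i}", ch, size, size))
        size = conv_out(size, pool, stride=pool)    # non-overlapping pooling
        shapes.append((f"pool{i}", ch, size, size))
    return shapes, ch * size * size                 # length of the vector fed to the FC layers


if __name__ == "__main__":
    stages, flat = trace_shapes()
    for stage in stages:
        print(stage)
    print("features passed to the fully connected layers:", flat)
```

With the default values the last stage is ('pool2', 16, 5, 5) and 400 features reach the fully connected layers; calling `trace_shapes(64)` for the 64 × 64 inputs used later gives 16 × 13 × 13 = 2,704 features under the same assumptions.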

1.1 Abbreviations and Acronyms

- CNN – Convolutional Neural Network
- NN – Neural Network

1.1.1 Units

- Fully Connected Multi-layer Neural Network: A fully connected multi-layer network trained on the MNIST training set can achieve an error rate below 4.42% on the validation set with one or more hidden layers [7]. Because such a network operates directly on the spatial domain of the image data, it requires very high-dimensional weight matrices. This design is questionable because such networks have more than 200,000 parameters, which becomes impractical for complex problems with large datasets [8].

- Data Sets: This problem concerns the classification of individual handwritten characters. The MNIST database provided the training examples. This research created a database of 50,000 training samples and 20,000 test samples extracted from census responses. The original images are size-normalised to 64 × 64 pixels and contain grey levels as a result of the anti-aliasing applied during normalisation [9].

Pixel values are scaled so that the background (white) corresponds to −0.1 and the foreground (black) corresponds to 1.275; as a result, the mean input is roughly 0 and the variance roughly 1. The target outputs are 15 hand-designed grey images of 15 × 8 pixels. In this case, however, only binary values were used [10]: the background and foreground levels (−1) produce binary images. These images were designed to provide sufficiently distinct features to discriminate each class.
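The linear rescaling described above can be sketched as follows. It assumes 8-bit input in which 0 is background and 255 is full ink (the chapter does not state the raw encoding) and uses the background and foreground levels quoted in the text; whether the mean and variance actually come out near 0 and 1 depends on how much of each image is background.

```python
def scale_pixel(intensity: int, background: float = -0.1, foreground: float = 1.275) -> float:
    """Map an 8-bit ink intensity (0 = background, 255 = full ink) linearly onto [background, foreground]."""
    if not 0 <= intensity <= 255:
        raise ValueError("intensity must be in 0..255")
    return background + (foreground - background) * intensity / 255


def scale_image(image):
    """Rescale every pixel of a 2-D image given as a list of rows."""
    return [[scale_pixel(p) for p in row] for row in image]


def mean_and_variance(image):
    """Population mean and variance of all pixel values in a 2-D image."""
    values = [v for row in image for v in row]
    mean = sum(values) / len(values)
    return mean, sum((v - mean) ** 2 for v in values) / len(values)


if __name__ == "__main__":
    toy = [[0, 0, 0, 0], [0, 255, 255, 0], [0, 255, 255, 0], [0, 0, 0, 0]]  # made-up 4 x 4 "digit"
    print(mean_and_variance(scale_image(toy)))
```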

2 Related Works

HSD recognition has seen significant improvements. Several papers have reported research on the classification of new handwritten numerals, characters, and English words [11]. A three-layer Deep Belief Network (DBN) trained with a greedy algorithm was evaluated on the MNIST dataset, with a reported accuracy of 98.75%. To improve recurrent neural networks (RNNs) for unconstrained handwriting recognition, dropout (randomly deactivating units during training) was adapted; this significantly improved RNN performance, reducing both the Character Error Rate (CER) and the Word Error Rate (WER) [12]. Experiments showed that a very high degree of accuracy can be attained using deep learning (DL): a CNN built with Keras and Theano reached 98.72% [13], and a CNN implemented in TensorFlow achieved an even better result of 99.70%. Although these methods are more complex than standard ML algorithms, the gain in accuracy is evident.

Another study focuses on the pre-processing methods used for character recognition across different classes of images, ranging from simple handwritten documents to text on colourful, cluttered backgrounds of widely varying complexity. It describes specific pre-processing techniques, including skew detection and correction, contrast stretching, binarisation, noise removal, normalisation and segmentation, and morphological processing [14]. It was concluded that an image can be processed completely only by combining these techniques rather than relying on a single pre-processing approach; however, even after all of them were applied, the pre-processing module could not achieve complete accuracy. CNNs can also be used to recognise English characters. In that work, features are derived from the thresholded image and its Fourier descriptors, and each character is recognised by analysing its template and comparing its features. Experiments were conducted to determine the number of hidden-layer nodes that gives the network's best performance, and an accuracy of 94.13% was reported for handwritten English alphabets on a small test set [15].
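Fourier descriptors of a character boundary, as used in the study just cited, can be sketched as follows. This is a minimal sketch, assuming the boundary has already been traced (for example from the thresholded image) into an ordered list of points, and using a common normalisation (drop the DC term, divide by the first harmonic, keep magnitudes); the cited work's exact descriptor definition is not given here.

```python
import cmath
import math


def fourier_descriptors(contour, k=4):
    """Return k Fourier descriptors of a closed contour, invariant to translation, scale,
    rotation and starting point.

    contour: (x, y) boundary points listed in order around the shape.
    """
    z = [complex(x, y) for x, y in contour]          # boundary as a complex signal
    n = len(z)
    if n <= k:
        raise ValueError("contour must have more points than requested descriptors")
    # Naive discrete Fourier transform (O(n^2), fine for short contours).
    coeffs = [sum(z[t] * cmath.exp(-2j * math.pi * u * t / n) for t in range(n)) for u in range(n)]
    ref = abs(coeffs[1])
    if ref == 0:
        raise ValueError("first harmonic is zero; cannot normalise scale")
    # Skipping coeffs[0] removes translation, dividing by |coeffs[1]| removes scale,
    # and keeping magnitudes only removes rotation and the choice of starting point.
    return [abs(c) / ref for c in coeffs[1:k + 1]]
```

Because the first descriptor is always 1 after normalisation, the informative values start from the second one; these values could then be fed to a classifier as features.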

3 Proposed CNN Image Classification

Image classification is not a simple problem, and it can be approached with various methods. In recent years, many ML systems have been implemented effectively. In this work, we therefore propose a CNN to classify and evaluate our handwritten digits. The design of a CNN strongly affects both its effectiveness and its cost, so after carefully considering these constraints we built a streamlined CNN for our implementation. The key elements of our CNN for HDR are as follows. All patterns are pre-processed before they are fed to the network [16,17,18]. In our experimental tests, the CNN is built for inputs of 64 × 64 pixels, so all images were brought to this size before being fed to the model. The deep model then extracts features from the prepared images. Following recent work, a simple CNN is used throughout the experiments to extract discriminative features on which the final classification decision is based [54,55,56]. The last layer is a softmax layer, which converts the outputs of the final CNN layer into class probabilities [19, 20].
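The final softmax layer mentioned above can be sketched as follows. It assumes the preceding layer produces ten raw scores, one per digit 0–9; the scores in the usage example are invented for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax: converts raw class scores into probabilities that sum to 1."""
    m = max(scores)                               # shifting by the maximum avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(scores):
    """Return (predicted digit, its probability) from ten scores ordered by digit 0-9."""
    if len(scores) != 10:
        raise ValueError("expected exactly one score per digit 0-9")
    probs = softmax(scores)
    digit = max(range(10), key=probs.__getitem__)
    return digit, probs[digit]


if __name__ == "__main__":
    made_up_scores = [0.1, 0.3, 2.5, 0.0, -1.2, 0.4, 0.2, 1.1, 0.9, -0.5]  # illustrative only
    print(predict_digit(made_up_scores))
```

For the made-up scores the predicted digit is 2, the class with the largest raw score; softmax preserves the ordering of the scores while turning them into probabilities.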

3.1 PReLU

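A Parametric Rectified Linear Unit (PReLU) is a leaky rectified unit whose slope for negative inputs is learned rather than fixed. Formally:

$$
f(B_i) = \begin{cases} B_i, & B_i > 0 \\ A_i B_i, & B_i \le 0 \end{cases}
$$

where $B_i$ is the input to the activation on channel $i$ and $A_i$ is the learnable coefficient that controls the negative slope.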
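The piecewise definition of PReLU translates directly into code. In this sketch the slope A_i is passed in as a number, whereas in a trained network it is a learned parameter; one slope per channel is an assumption about how the parameters are shared here.

```python
def prelu(x: float, a: float) -> float:
    """PReLU: identity for positive inputs, slope `a` (learned during training) otherwise."""
    return x if x > 0 else a * x


def prelu_channels(feature_maps, slopes):
    """Apply PReLU to a list of 2-D feature maps, using one slope A_i per channel i."""
    if len(feature_maps) != len(slopes):
        raise ValueError("need one slope per channel")
    return [[[prelu(v, a) for v in row] for row in fmap] for fmap, a in zip(feature_maps, slopes)]
```

Setting the slope to 0 recovers the ordinary ReLU, and a small fixed slope gives a leaky ReLU; PReLU differs only in that the slope is trained.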

- Feature extraction: LR image
- CNN layer 1: 56 filters of size 1 × 5 × 5 (feature extraction)
- Activation function: ReLU
- Result: 56 feature maps
- Parameters: 1 × 5 × 5 × 56
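Each of the 56 filters in layer 1 slides over the single-channel input image and produces one feature map. The pure-Python sketch below assumes stride 1 and no padding (neither is stated in the text) and, like most CNN libraries, computes cross-correlation; the activation would be applied to each output afterwards.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Single-channel 'valid' 2-D convolution (cross-correlation), stride 1, no padding."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = bias
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]   # weighted sum over the window
            row.append(acc)
        out.append(row)
    return out


def feature_extraction(image, kernels):
    """Apply each kernel to the image, giving one feature map per filter (56 in layer 1)."""
    return [conv2d_valid(image, k) for k in kernels]
```

Under these assumptions a 64 × 64 input and a 5 × 5 filter give a 60 × 60 feature map.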

3.2 Shrinking

Reduces the size of the feature vectors (and thus the number of parameters) by using fewer filters than the feature-extraction layer.

- Conv. layer 2: shrinking, 12 filters of size 56 × 2 × 2
- Activation function: PReLU
- Result: 12 feature maps
- Parameters: 56 × 2 × 2 × 12
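The saving that motivates the shrinking layer (together with the matching expanding layer of Section 3.4) can be checked with simple arithmetic on the layer specifications in this section. The comparison with four mapping layers operating directly on all 56 channels is hypothetical, and only weights are counted (no biases or PReLU slopes).

```python
def conv_weights(in_channels: int, kernel_h: int, kernel_w: int, filters: int) -> int:
    """Number of weights in one convolution layer (biases excluded)."""
    return in_channels * kernel_h * kernel_w * filters


def mapping_weights(channels: int, layers: int = 4, kernel: int = 4) -> int:
    """Weights in a stack of mapping layers that keep the channel count unchanged."""
    return layers * conv_weights(channels, kernel, kernel, channels)


if __name__ == "__main__":
    shrink_expand = conv_weights(56, 2, 2, 12) + conv_weights(12, 2, 2, 56)
    print("mapping on 12 maps:", mapping_weights(12), "+ shrink/expand:", shrink_expand)
    print("mapping on 56 maps (hypothetical):", mapping_weights(56))
```

Mapping on the 12 shrunk maps costs 9,216 weights plus 5,376 for the shrinking and expanding layers, against 200,704 weights if the four mapping layers worked on all 56 maps.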

3.3 Non-linear Mapping

Maps the LR image features to HR patch features. This step is performed by several mapping layers that are relatively small compared with those of SRCNN (Fig. 1).

- Conv. layers 3–6: mapping, 4 × 12 filters of size 12 × 4 × 4
- Activation function: PReLU
- Result: HR feature maps
- Parameters: 4 × 12 × 4 × 4 × 12

3.4 Expanding

Increases the dimensionality of the feature vectors again. This layer performs the reverse of the shrinking layer, so that the HR image can be produced more reliably.

- Conv. layer 7: expanding, 56 filters of size 12 × 2 × 2
- Activation function: PReLU
- Result: 56 feature maps
- Parameters: 12 × 2 × 2 × 56

3.5 Deconvolution

Produces the HR image from the HR features.

- DeConv layer 8: deconvolution, one filter of size 56 × 8 × 9
- Activation function: PReLU
- Output: 1 feature map (the HR image)
- Parameters: 56 × 8 × 9 × 1
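The deconvolution (transposed convolution) layer upsamples by scattering each input value, weighted by the filter, into a larger output. The 1-D sketch below shows the mechanism and the usual output-size formula; stride and padding are not given in the text, so they are left as parameters.

```python
def deconv_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one axis of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def transposed_conv1d(signal, kernel, stride=1):
    """Naive 1-D transposed convolution without padding: every input value adds a
    scaled copy of the kernel to the output, starting at position i * stride."""
    out = [0.0] * deconv_out_size(len(signal), len(kernel), stride)
    for i, value in enumerate(signal):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out
```

With stride 2, an input of length 2 becomes length 4. For the 56 × 8 × 9 filter of layer 8, the same scattering would be applied along both spatial axes and summed over the 56 input channels.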

A down-sampling layer may also be used; it is usually one of the hidden layers (Fig. 2).
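Multiplying out the weight-count expressions given for layers 1 to 8 gives the total number of filter weights, which can be compared with the more than 200,000 parameters quoted in Section 1.1.1 for fully connected networks. In this sketch biases and PReLU slopes are ignored, and the 784–256–10 fully connected network (28 × 28 input, one hidden layer of 256 units) is a hypothetical example, not a configuration from the text.

```python
from math import prod

# Weight-count factors listed for layers 1-8 in Section 3 (biases and PReLU slopes excluded).
LAYER_WEIGHTS = {
    "feature extraction": (1, 5, 5, 56),
    "shrinking": (56, 2, 2, 12),
    "mapping (layers 3-6)": (4, 12, 4, 4, 12),
    "expanding": (12, 2, 2, 56),
    "deconvolution": (56, 8, 9, 1),
}


def cnn_weights(layers=LAYER_WEIGHTS) -> int:
    """Total number of filter weights in the convolutional stack."""
    return sum(prod(shape) for shape in layers.values())


def dense_params(sizes) -> int:
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    for name, shape in LAYER_WEIGHTS.items():
        print(f"{name:22s} {prod(shape):6d}")
    print("convolutional stack:", cnn_weights())
    # Hypothetical fully connected comparison: 28 x 28 input, 256 hidden units, 10 outputs.
    print("784-256-10 dense network:", dense_params([784, 256, 10]))
```

Under these assumptions the convolutional stack has 20,024 weights, whereas the hypothetical fully connected network has 203,530 parameters, in line with the 200,000-plus figure cited for such networks.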

4 Mathematical Model

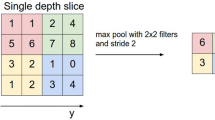

4.1 Subsampling Layer

The sub-sampling layer applies a down-sampling operation to its input maps. The number of maps does not change in this layer: if there are N input maps, there are exactly N output maps [21,22,23,24,25,26,27,28,29]. The sub-sampling operation reduces the size of each feature map according to the size of the mask [30,31,32,33,34]. The operation used in this investigation is given in Eq. (1).

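$$
x_j^{\ell} = f\left(\beta_j^{\ell}\,\operatorname{down}\left(x_j^{\ell-1}\right) + b_j^{\ell}\right) \qquad (1)
$$

where down(·) is a sub-sampling function, and $\beta_j^{\ell}$ and $b_j^{\ell}$ are the multiplicative and additive biases of output map $j$ in layer $\ell$. Typically, down(·) sums (or averages) the values in each distinct n × n block of the input map [35,36,37,38,39,40,41], so the output map is n times smaller along both spatial dimensions. Finally, the output maps are passed through a linear or non-linear activation function f [42,43,44,45,46,47].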
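The sub-sampling operation can be sketched as follows, assuming the common form x_j = f(β_j · down(x_j) + b_j), with one multiplicative bias β_j and one additive bias b_j per map, and a down(·) that sums each non-overlapping n × n block (so β_j = 1/n² gives average pooling). The function names are illustrative.

```python
def down(feature_map, n):
    """Sum over each distinct n x n block, so the map becomes n times smaller on both axes."""
    h, w = len(feature_map), len(feature_map[0])
    if h % n or w % n:
        raise ValueError("map height and width must be divisible by n")
    return [
        [sum(feature_map[r + i][c + j] for i in range(n) for j in range(n)) for c in range(0, w, n)]
        for r in range(0, h, n)
    ]


def subsample(feature_maps, n, betas, biases, f=lambda v: v):
    """Per map j: f(beta_j * down(x_j) + b_j). N input maps always give N output maps."""
    if not len(feature_maps) == len(betas) == len(biases):
        raise ValueError("need one beta and one bias per map")
    return [
        [[f(beta * v + b) for v in row] for row in down(x, n)]
        for x, beta, b in zip(feature_maps, betas, biases)
    ]
```

Passing a non-linear `f` (for example a sigmoid or the PReLU of Section 3.1) gives the non-linear variant; the default identity gives the linear one.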

5 Result and Discussion

In this case, the pattern x is the digital image of a handwritten digit and the category y is its label, 0–9. We use a dataset of 1500 greyscale images of 64 × 64 pixels, split into 1200 images for training and 300 for testing. Each 64 × 64 pattern x is reshaped into a 4096-dimensional vector [48,49,50,51,52,53]. We then apply linear discriminant analysis (LDA), modelling each class with a 4096-dimensional Gaussian distribution (Figs. 3, 4, 5, 6, 7, 8 and 9).
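The evaluation set-up described above (flattening each 64 × 64 image into a 4096-dimensional vector, a 1200/300 split, and LDA with Gaussian class models) can be sketched with NumPy as follows. This is a minimal sketch, not the authors' code: the random split, the shared-covariance form of LDA and the small ridge term added to the covariance are assumptions.

```python
import numpy as np


def flatten_images(images):
    """Reshape an (n, 64, 64) stack of greyscale images into (n, 4096) pattern vectors x."""
    images = np.asarray(images, dtype=float)
    return images.reshape(len(images), -1)


def split_dataset(X, y, n_train=1200, seed=0):
    """Random train/test split (1200/300 for the 1500-image dataset)."""
    order = np.random.default_rng(seed).permutation(len(X))
    train, test = order[:n_train], order[n_train:]
    return X[train], y[train], X[test], y[test]


def fit_lda(X, y, ridge=1e-3):
    """LDA: one Gaussian per class y, all sharing a pooled covariance matrix."""
    classes = np.unique(y)
    means = np.array([X[y == k].mean(axis=0) for k in classes])
    priors = np.array([np.mean(y == k) for k in classes])
    centred = X - means[np.searchsorted(classes, y)]
    cov = centred.T @ centred / (len(X) - len(classes))
    # 4096 dimensions with only 1200 training images make the pooled covariance singular,
    # so a small ridge term is added before solving.
    cov += ridge * np.eye(X.shape[1])
    weights = np.linalg.solve(cov, means.T)                      # columns: Sigma^{-1} mu_k
    offsets = -0.5 * np.sum(means.T * weights, axis=0) + np.log(priors)
    return classes, weights, offsets


def predict_lda(model, X):
    """Assign each pattern to the class with the largest linear discriminant score."""
    classes, weights, offsets = model
    return classes[np.argmax(X @ weights + offsets, axis=1)]
```

With the real data, `flatten_images` gives a 1500 × 4096 matrix, and fitting requires solving a 4096 × 4096 linear system, which NumPy handles but which is memory-intensive.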

6 Conclusion

The proposed Handwritten Digit Recognition approach shows that conventional neural network training can achieve comparatively low error rates, not far from leading results obtained with deep Convolutional Neural Networks. CNNs have the advantage of being able to extract and use feature data. The significance of this research lies in identifying the CNN model features that give the most accurate assessment on the MNIST dataset. The error rates of the different methods are as follows: (a) Random Forest classifier, 1.32%; (b) K-Nearest Neighbours, 4.34%; (c) Support Vector Machine, 4.134%; (d) Convolutional Neural Networks, 5.28%; (e) TensorFlow is suitable and provides a maximum performance of 100%, similar to OpenCV.

References

Ahlawat, S., Rishi, R.: A genetic algorithm-based feature selection for handwritten digit recognition. Recent Pat. Comput. Sci., 12, 304–316, (2019)

Aparna, K.H., Subramanian, V., Kasirajan, M., Prakash, G.V., Chakravarthy, V.S., Madhvanath, S.: Online Handwriting Recognition for Tamil. Proceedings of the 9th International Workshop on Frontiers in Handwriting Recognition, 438–443, (2004).

Ahlawat, S., Choudhary, A., Nayyar, A., Singh, S., Yoon, B.: Improved Handwritten Digit Recognition Using Convolutional Neural Networks (CNN). Sensors, 20, 3344, (2020).

Boufenar, C., Kerboua, A., Batouche, M.: Investigation on deep learning for off-line handwritten Arabic character recognition. Cogn. Syst. Res., 50, 180–195, (2018).

Chen, L.C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: Deep Lab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell., 40, 834–848, (2018).

Devi, R.K., Elizabeth Rani, G.: A Comparative Study on Handwritten Digit Recognizer using Machine Learning Technique. IEEE International Conference on Clean Energy and Energy Efficient Electronics Circuit for Sustainable Development, 1–5, (2019).

Elanwar, R., Qin, W., Betke, M.: Making scanned Arabic documents machine-accessible using an ensemble of SVM classifiers. Int. J. Document Anal. Recognit., 21 (1–2), 59–75, (2020).

Garg, N.K., Kaur, D.L., Kumar, D.M.: Segmentation of handwritten Hindi text, Int. J. Comput. Appl., 1 (4), 22–26, (2010).

Liang, T., Xu, X., Xiao, P.: A new image classification method based on modified condensed nearest neighbor and convolutional neural networks. Pattern Recognit. Lett., 94, 105–111, (2017).

Ptucha, R., Such, F., Pillai, S., Brokler, F., Singh, V., Hutkowski, P.: Intelligent character recognition using fully convolutional neural networks. Pattern Recognit., 88, 604–613, (2019).

Sarkhel, R., Das, N., Das, A., Kundu, M., Nasipuri, M.: A multi-scale deep quad tree-based feature extraction method for the recognition of isolated handwritten characters of popular indic scripts. Pattern Recognit., 71, 78–93, (2017).

Shi, B., Bai, X., Yao, C.: An End-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell., 39, 2298–2304, (2017).

Sueiras, J., Ruiz, V., Sanchez, A., Velez, J.F.: Offline continuous handwriting recognition using sequence to sequence neural networks. Neurocomputing, 289, 119–128, (2018).

Vashist, P.C., Pandey, A., Tripathi, A.: A Comparative Study of Handwriting Recognition Techniques. International Conference on Computation, Automation and Knowledge Management, 456–461, (2020).

Wu, Y.C., Yin, F., Liu, C.L.: Improving handwritten Chinese text recognition using neural network language models and convolutional neural network shape models. Pattern Recognit., 65, 251–264, (2017).

S. Sudhakar and S. Chenthur Pandian “Secure packet encryption and key exchange system in mobile ad hoc network”, Journal of Computer Science, vol. 8, no. 6, pp. 908–912, (2012).

S. Sudhakar and S. Chenthur Pandian, “Hybrid cluster-based geographical routing protocol to mitigate malicious nodes in mobile ad hoc network”, International Journal of Ad Hoc and Ubiquitous Computing, vol. 21 no. 4, pp. 224–236, (2016).

A. U. Priyadarshni and S. Sudhakar, “Cluster-based certificate revocation by cluster head in mobile ad-hoc network”, International Journal of Applied Engineering Research, vol. 10, no. 20, pp. 16014–16018, (2015).

S. Sudhakar and S. Chenthur Pandian, “Investigation of attribute aided data aggregation over dynamic routing in wireless sensor,” Journal of Engineering Science and Technology, vol. 10, no. 11, pp. 1465–1476, (2015).

T. A. Al-asadi and A. J. Obaid, “Object Based Image Retrieval Using Enhanced SURF,” Asian Journal of Information Technology, vol. 15, no. 16, pp. 2756–2762, (2016).

C. Meshram, R. W. Ibrahim, A. J. Obaid, S. G. Meshram, A. Meshram and A. M. Abd El-Latif, “Fractional chaotic maps based short signature scheme under human-centered IoT environments,” Journal of Advanced Research, (2020).

A. J. Obaid, K. A. Alghurabi, S. A. K. Albermany and S. Sharma, “Improving Extreme Learning Machine Accuracy Utilizing Genetic Algorithm for Intrusion Detection Purposes,” in Advances in Intelligent Systems and Computing, Springer, Singapore, pp. 171–177 (2021).

Ishaq, A., Sadiq, S., Umer, M., Ullah, S., Mirjalili, S., Rupapara, V., & Nappi, M. Improving the Prediction of Heart Failure Patients’ Survival Using SMOTE and Effective Data Mining Techniques. IEEE Access, 9, 39707–39716 (2021).

Surinder Singh and Hardeep Singh Saini “Security approaches for data aggregation in Wireless Sensor Networks against Sybil Attack,” 2018 Second International Conference on Inventive Communication and Computational Technologies, pp. 190–193, Coimbatore, India (2018).

Rustam, F., Khalid, M., Aslam, W., Rupapara, V., Mehmood, A., & Choi, G. S. A performance comparison of supervised machine learning models for Covid-19 tweets sentiment analysis. PLOS ONE, 16(2), e0245909 (2021).

Surinder Singh and Hardeep Singh Saini “Security Techniques for Wormhole Attack in Wireless Sensor Network”, International Journal of Engineering & Technology, Vol. 7, No. 2.23, 59–62 (2018).

Yousaf, A., Umer, M., Sadiq, S., Ullah, S., Mirjalili, S., Rupapara, V., & Nappi, M. Emotion Recognition by Textual Tweets Classification Using Voting Classifier. IEEE Access, 9, 6286–6295 (2021).

Surinder Singh and Hardeep Singh Saini, “Learning-Based Security Technique for Selective Forwarding Attack in Clustered WSN”, Wireless Pers Commun 118, 789–814 (2021).

Sadiq, S., Umer, M., Ullah, S., Mirjalili, S., Rupapara, V., & NAPPI, M. Discrepancy detection between actual user reviews and numeric ratings of Google App store using deep learning. Expert Systems with Applications, 115111 (2021).

A.K. Gupta, Y. K. Chauhan, and T Maity, “Experimental investigations and comparison of various MPPT techniques for photovoltaic system,” Sādhanā, Vol. 43, no. 8, pp. 1–15, (2018).

A. Jain, A. K. Gahlot, R. Dwivedi, A. Kumar, and S. K. Sharma, “Fat Tree NoC Design and Synthesis,” in Intelligent Communication, Control and Devices, Springer, 2018, pp. 1749–1756 (2018).

A.K. Gupta, “Sun Irradiance Trappers for Solar PV Module to Operate on Maximum Power: An Experimental Study,” Turkish Journal of Computer and Mathematics Education, Vol. 12, no. 5, pp. 1112–1121, (2021).

N. R. Misra, S. Kumar, and A. Jain, “A Review on E-waste: Fostering the Need for Green Electronics,” in 2021 International Conference on Computing, Communication, and Intelligent Systems, 2021, pp. 1032–1036 (2021).

A.K. Gupta, Y.K Chauhan, and T Maity and R Nanda, “Study of Solar PV Panel Under Partial Vacuum Conditions: A Step Towards Performance Improvement,” IETE Journal of Research, pp. 1–8, (2020).

A. Jain, A. K. AlokGahlot, and S. K. S. RakeshDwivedi, “Design and FPGA Performance Analysis of 2D and 3D Router in Mesh NoC,” Int. J. Control Theory Appl., pp. 0974–5572, (2017).

A.K. Gupta, Y.K Chauhan, and T Maity, “A new gamma scaling maximum power point tracking method for solar photovoltaic panel Feeding energy storage system,” IETE Journal of Research, vol. 67, no. 1, pp. 1–21, (2018).

Joshi M., Agarwal A.K., Gupta B. Fractal Image Compression and Its Techniques: A Review. In: Ray K., Sharma T., Rawat S., Saini R., Bandyopadhyay A. (eds) Soft Computing: Theories and Applications. Advances in Intelligent Systems and Computing, vol 742. Springer, Singapore (2019).

Agarwal, A. Implementation of Cylomatrix complexity matrix. Journal of Nature Inspired Computing, 1 (2013).

A. K. Gupta et al., “Effect of Various Incremental Conductance MPPT Methods on the Charging of Battery Load Feed by Solar Panel,” in IEEE Access, vol. 9, pp. 90977–90988, (2021).

Saleem A., Agarwal A.K. Analysis and Design of Secure Web Services. In: Pant M., Deep K., Bansal J., Nagar A., Das K. (eds) Proceedings of Fifth International Conference on Soft Computing for Problem Solving. Advances in Intelligent Systems and Computing, vol 437. Springer, Singapore (2016).

N. Gupta and A. K. Agarwal, “Object Identification using Super Sonic Sensor: Arduino Object Radar,” 2018 International Conference on System Modeling & Advancement in Research Trends, pp. 92–96 (2018).

S. Shukla, A. Lakhmani and A. K. Agarwal, “A review on integrating ICT based education system in rural areas in India,” 2016 International Conference System Modeling & Advancement in Research Trends, 2016, pp. 256–259 (2016).

Agarwal A.K., Rani L., Tiwari R.G., Sharma T., Sarangi P.K. Honey Encryption: Fortification Beyond the Brute-Force Impediment. In: Manik G., Kalia S., Sahoo S.K., Sharma T.K., Verma O.P. (eds) Advances in Mechanical Engineering. Lecture Notes in Mechanical Engineering. Springer, Singapore (2021).

Khullar V, Singh HP, Agarwal AK. Spoken buddy for individuals with autism spectrum disorder. Asian J Psychiatr; 62 102712 (2021).

Hassan, M.I., Fouda, M.A., Hammad, K.M. and Hasaballah, A.I. Effects of midgut bacteria and two protease inhibitors on the transmission of Wuchereria bancrofti by the mosquito vector, Culex pipiens. Journal of the Egyptian Society of Parasitology. 43(2): 547–553 (2013).

Surinder Singh and Hardeep Singh Saini, “Detection Techniques for Selective Forwarding Attack in Wireless Sensor Networks”, International Journal of Recent Technology and Engineering, Vol. 7, Issue-6S, 380–383 (2019).

Fouda, M.A., Hassan, M.I., Hammad, K.M. and Hasaballah, A.I. Effects of midgut bacteria and two protease inhibitors on the reproductive potential and midgut enzymes of Culex pipiens infected with Wuchereria bancrofti. Journal of the Egyptian Society of Parasitology. 43(2): 537–546 (2013).

Surinder Singh and Hardeep Singh Saini “Security for Internet of Thing (IoT) based Wireless Sensor Networks”, Journal of Advanced Research in Dynamical and Control Systems, 06-Special Issue, 1591–1596 (2018).

Hasaballah, A.I. Toxicity of some plant extracts against vector of lymphatic filariasis, Culex pipiens. Journal of the Egyptian Society of Parasitology. 45(1): 183–192 (2015).

J. Kubiczek and B. Hadasik, “Challenges in Reporting the COVID-19 Spread and its Presentation to the Society,” J. Data and Information Quality, vol. 13, no. 4, pp. 1–7, Dec. (2021).

Hasaballah, A.I. Impact of gamma irradiation on the development and reproduction of Culex pipiens. International journal of radiation biology. 94(9): 844–849 (2018).

Sajja, G. S., Rane, K. P., Phasinam, K., Kassanuk, T., Okoronkwo, E., & Prabhu, P. Towards applicability of blockchain in agriculture sector. Materials Today: Proceedings (2021).

Pallathadka, H., Mustafa, M., Sanchez, D. T., Sekhar Sajja, G., Gour, S., & Naved, M. Impact of machine learning on management, healthcare and agriculture. Materials Today: Proceedings (2021).

Guna Sekhar Sajja, Malik Mustafa, R. Ponnusamy, Shokhjakhon Abdufattokhov, Murugesan G., P. Prabhu. Machine Learning Algorithms in Intrusion Detection and Classification. Annals of the Romanian Society for Cell Biology, 25(6), 12211–12219 (2021).

Arcinas, Myla & Sajja, Guna & Asif, Shazia & Gour, Sanjeev & Okoronkwo, Ethelbert & Naved, Mohd. Role of Data Mining in Education For Improving Students Performance For Social Change. Turkish Journal of Physiotherapy and Rehabilitation. 32. 6519 (2021).

Hasaballah, A.I. Impact of paternal transmission of gamma radiation on reproduction, oogenesis, and spermatogenesis of the housefly, Musca domestica L. International Journal of Radiation Biology. 97(3): 376–385 (2021).


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Deol Gosu, J.S., Subramaniam, B., Nachimuthu, S., Shivasankaran, K., Subburaj, A., Sengan, S. (2023). Comparative Analysis of Handwritten Digit Recognition Investigation Using Deep Learning Model. In: Agarwal, P., Khanna, K., Elngar, A.A., Obaid, A.J., Polkowski, Z. (eds) Artificial Intelligence for Smart Healthcare. EAI/Springer Innovations in Communication and Computing. Springer, Cham. https://doi.org/10.1007/978-3-031-23602-0_4

DOI: https://doi.org/10.1007/978-3-031-23602-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-23601-3

Online ISBN: 978-3-031-23602-0

eBook Packages: Engineering, Engineering (R0)