Abstract

Invariant coordinate (or component) selection (ICS) is a multivariate statistical method introduced by Tyler et al. (J R Stat Soc Ser B (Stat Methodol) 71(3):549–592, 2009) and based on the simultaneous diagonalization of two scatter matrices. A model-based approach of ICS, called invariant coordinate analysis, has already been adapted for compositional data in Muehlmann et al. (Independent component analysis for compositional data. In Daouia, A, Ruiz-Gazen A (eds) Advances in Contemporary Statistics and Econometrics: Festschrift in Honor of Christine Thomas-Agnan. Springer, New York, pp. 525–545, 2021). In a model-free context, ICS is also helpful at identifying outliers Nordhausen and Ruiz-Gazen (J Multivar Anal 188:104844, 2022). We propose to develop a version of ICS for outlier detection in compositional data. This version is first introduced in coordinate space for a specific choice of isometric log-ratio coordinate system associated to a contrast matrix and follows the outlier detection procedure proposed by Archimbaud et al. (Comput Stat Data Anal 128:184–199, 2018a). We then show that the procedure is independent of the choice of contrast matrix and can be defined directly in the simplex. To do so, we establish some properties of the set of matrices satisfying the zero-sum property and introduce a simplex definition of the Mahalanobis distance and the one-step M-estimators class of scatter matrices. We also need to define the family of elliptical distributions in the simplex. We then show how to interpret the results directly in the simplex using two artificial datasets and a real dataset of market shares in the automobile industry.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Anomaly detection

- Elliptical distribution

- Log-ratio transformation

- Market share data

- Scatter matrix

- Ternary diagram

1 Introduction

Compositional data are by nature multivariate. Indeed, vectors with positive components are considered as compositional data when the interest lies in the relative information between their components: this last fact implies that they can be represented by a unique element in a simplex by dividing the components by their sum. Classical statistical techniques need to be adapted to deal with these constraints (positivity, sum equal to one). A common approach consists in transforming the data using the centered log-ratio (clr) or the isometric log-ratio (ilr) transformations (see Egozcue et al. 2011), and in applying standard techniques in this coordinate space. Filzmoser et al. (2012) propose to use the ilr transformation and detect outliers with the usual or the robust version of the Mahalanobis distance. Because of the affine invariance property of the Mahalanobis distance, the authors notice that the identified outliers do not depend on the choice of the ilr transformation. Moreover, they propose some graphical tools in coordinate space based on robust principal component analysis (PCA) and biplots representation in order to interpret the outliers. Their interpretation is only done in coordinate space. This is also the case for Filzmoser et al. (2014) who propose tools based on pairwise Mahalanobis distances for detecting local outliers in data that are compositional and spatial at the same time. In the present work, we consider adapting the invariant coordinate selection (ICS) technique for outlier detection to compositional data. ICS is a multivariate statistical method based on the joint diagonalization of two scatter matrices and aimed at detecting interesting features in multivariate datasets such as outliers or clusters (see, e.g., Tyler et al. 2009 and Archimbaud et al. 2018a). Compared to the Mahalanobis distance criterion, ICS includes a dimension reduction step. Compared to PCA, the components of ICS are invariant under affine transformations. We first propose to introduce ICS in coordinate space using an ilr transformation. Following Archimbaud et al. (2018a), we focus on the case of a small proportion of outliers and use the invariant components associated with the largest eigenvalues of the joint diagonalization of two particular scatter matrices. As with the Mahalanobis distance, the identification of outliers with ICS does not depend on the choice of the ilr transformation (see also Muehlmann et al. 2021). In order to go beyond coordinate space and interpret the outliers in the simplex, we introduce new algebra tools and define eigen-elements of endomorphisms of the simplex. We also introduce a class of one-step M-scatter estimators and elliptical distributions in the simplex. Thanks to these tools, we are able to write a reconstruction formula of the data in the simplex decomposing the data in a proper way for outlier identification and interpretation using ternary diagrams. In Sect. 2, we recall some facts about the ICS method and its application to outlier detection. Section 3 is a reminder about compositional data analysis. In Sect. 4, we develop some tools necessary for Sect. 5. First come some properties of the algebra of D × D matrices with the zero-sum property: in particular, their rank, their inverses, and their eigen-elements. Then Sect. 4.2 defines one-step M-scatter functionals for simplex-valued random variables together with an adapted version of Mahalanobis distance. Finally, Sect. 4.3 introduces the family of elliptical distributions in the simplex. Section 5 first introduces ICS in coordinate space and then reformulates ICS directly in the simplex. In Sect. 5.3, we present a formula for reconstructing the data from ICS in coordinate space and in the simplex. Section 6 is dedicated to three applications, with two toy datasets (with small and large dimensions) and a real marketing application from the automobile industry.

2 Reminder About ICS and Outlier Detection

Invariant coordinate (or component) selection is a multivariate statistical method based on the simultaneous diagonalization of two scatter matrices. As detailed in Nordhausen and Ruiz-Gazen (2022), the method belongs to a large family of multivariate statistical methods and is useful in particular for outlier detection as described below.

2.1 Scatter Matrices

The family of scatter matrices generalizes the notion of covariance matrix (see Nordhausen and Tyler 2015; Tyler et al. 2009, among others), and it has the following functional definition. For a p-dimensional vector X with distribution function FX, a functional S(FX), also denoted by S(X), is called a scatter functional if it is a p × p symmetric positive-definite and affine equivariant matrix. We recall that an affine equivariant matrix S(X) is such that

where T denotes the transpose operator, A is any full rank p × p matrix, and b any p-vector.

For a p-variate dataset Xn = (x1, …, xn)T, the empirical version S(Xn) of a scatter functional is the scatter functional S(Fn), where Fn is the empirical distribution function. Thus, a scatter matrix estimator is a p × p symmetric positive-definite and affine equivariant matrix such that

where A is any full rank p × p matrix, b any p-vector, and 1n an n-dimensional vector of ones.

There exist many scatter matrices as detailed for example in Tyler et al. (2009). The most well-known scatter matrix is the covariance matrix. As many other scatter matrices, the covariance involves the mean that is an affine equivariant location estimator. We recall that an affine equivariant location estimator T is such that:

for the functional version, and

for the empirical version where A is any full rank p × p matrix and b any p-vector.

A general class of scatter matrices is the class of one-step M-estimators with a functional defined by

where w is a non-negative and continuous weight function and

is the square Mahalanobis distance with E(X) the expectation of X and COV(X) its covariance matrix. The sample version of one-step M-estimators is

where \(\bar {\mathbf x}_n=1/n \sum _{i=1}^n \mathbf x_i\) is the empirical mean and

is the empirical version of the square Mahalanobis distance.

Note that the covariance matrix COV is obtained with w(d) = 1, while the fourth-moment-based estimator COV4 is obtained with w(d) = d∕(p + 2). COV4 is widely used in the blind source separation literature (see, e.g., Nordhausen and Virta 2019; Theis and Inouye 2006) but also in the context of outlier detection (see Archimbaud et al. 2018a).

For elliptical distributions with second moments, scatter functionals are all proportional to the covariance matrix (see, e.g., Bilodeau and Brenner 2008). We recall that an elliptical distribution is obtained as an affine transformation of a spherical distribution that is a distribution invariant by orthogonal transformation. Multivariate normal and Student distributions belong to this family of distributions.

2.2 ICS Principle

Let S1(X) and S2(X) be two scatter functionals. ICS consists of the simultaneous diagonalization of S1(X) and S2(X). If the random vector X follows an elliptical distribution, the two scatter matrices will be proportional, and the result will be useless. However, as mentioned in Tyler et al. (2009), comparing two different scatter functionals may help revealing interesting departures from an elliptical distribution. This is the case in particular for anomaly detection. The method searches for a p × p matrix H(X) and a diagonal matrix Λ(X) so that

where Ip denotes the p × p identity matrix. The matrix Λ(X) contains the eigenvalues of S1(X)−1S2(X) in decreasing order, while the columns of the matrix H(X) = (h1, …, hp) contain the corresponding eigenvectors so that

These eigenvalues and eigenvectors can also be derived through the spectral decomposition of the following symmetric matrix:

with U(X) a p × p orthogonal matrix and the same eigenvalues in the diagonal matrix Λ(X). We have

and

Tyler et al. (2009) give an interesting interpretation of the eigenvalues λ1, …, λp in terms of kurtosis. Using the optimality property of eigen-elements, it is easy to see that h1 maximizes the ratio:

over all possible vectors h in \(\mathbb {R}^p\) and that λ1 is equal to the maximum ratio. This ratio of two scale measures can be viewed as a generalized measure of kurtosis, and λ1 can thus be interpreted as a maximum kurtosis. The other eigenvalues and eigenvectors can be defined in a similar way by maximizing the same ratio over vectors h that verify additional orthogonality conditions (see Tyler et al. (2009) for details).

Using any affine equivariant location estimator T(X), the ICS scores Z = (z1, …, zp)T are defined by

or equivalently by zk =< hk, X −T(X) > where < ., . > denotes the standard scalar product. The scores define the affine invariant coordinates or components. The square Euclidian norm of these coordinates is given by

The last expression is a generalization of the Mahalanobis distance (1) of X with the location parameter T(X) (instead of E(X)) and with respect to the scatter matrix S1(X) (instead of COV(X)). In the special case where T(X) = E(X) and S1(X) = COV(X), we have

The empirical version of ICS consists of the joint diagonalization of a scatter pair of estimators S1(Xn) and S2(Xn). For a p × p matrix H(Xn) and a diagonal matrix Λ(Xn), we have

Using any affine equivariant location estimator T(Xn), the ICS scores are given by

and are affine invariant. As in (4), if \(T(\mathbf X_n)=\bar {\mathbf x}_n\) and S1(Xn) = COV(Xn), we have

2.3 ICS for Outlier Detection

As already stated in Tyler et al. (2009), one possible application of ICS is outlier detection. The Mahalanobis distance is a well-known tool to detect outliers (see Rousseeuw and Van Zomeren 1990), but it does not offer the possibility of dimension reduction. ICS gives the possibility of selecting components that are helpful in detecting anomalies (see Archimbaud et al. (2018a) for details). In the case of a small proportion of outliers, the theoretical properties of ICS (see Archimbaud et al. (2018a) for details) lead us to only focus on the invariant components associated with the largest kurtosis and thus the largest eigenvalues. In this context, Archimbaud et al. (2018a) show that the scatter pair S1(X) = COV(X) and S2(X) = COV4(X) is not only simple and fast to compute but also effective in detecting outliers when compared to other pairs that involve robust scatter estimators. Archimbaud et al. (2018a) propose different automatic procedures for invariant components selection based on hypothesis testing. In short, the idea is to test sequentially the normality of each of the invariant components using some classical tests such as the D’Agostino test and to select the first k components that reject the Gaussian assumption. After selecting k invariant components among p, the last step of the procedure is the outlier identification. Let us consider the empirical version of ICS. For each observation i = 1, …, n, the square “ICS distance” is the square Euclidian norm in the invariant coordinate system accounting for the k first coordinates:

where \(z_i^j\) denotes the jth value of the score zi. In Archimbaud et al. (2018a), an observation is flagged as an outlier when its ICS distance using k components is larger than a cutoff based on Monte Carlo simulations from the standard Gaussian distribution. Given a data dimension, a scatter pair, and a number k of selected components, many Gaussian samples are generated, and the ICS distances are computed. A cutoff is derived for a fixed level γ as the mean of the (1 − γ)-quantiles of these distances over the replications. The whole ICS procedure for outlier detection is available in the R package ICSOutlier described in Archimbaud et al. (2018b) and used in Sect. 6 below.

3 Reminder About Compositional Data Analysis

A D composition u is a vector of D parts (or shares) of some whole that carries relative information. There exists a unique representation of this vector in the unit simplex space

For any vector \(\mathbf {w} \in {{\mathbb R}^{+D}},\) its representer in the simplex is obtained by the closure operation

The following operations endow the unit simplex with a vector space structure:

-

1.

⊕ is the perturbation operation, corresponding to the addition in \({\mathbb R}^D\):

$$\displaystyle \begin{aligned} \text{For} \quad \mathbf{u},\mathbf{v} \in {\mathbf{S}}^D, \mathbf{u} \oplus \mathbf{v} = \mathcal{C}(u_1v_1,\ldots,u_Dv_D). \end{aligned}$$ -

2.

⊙ is the power operation, corresponding to the scalar multiplication in \({\mathbb R}^D\):

$$\displaystyle \begin{aligned} \text{For} \quad \lambda \in {\mathbb R}, \mathbf{u} \in {\mathbf{S}}^D \quad \lambda \odot \mathbf{u} = \mathcal{C}(u_1^\lambda,\ldots,u_D^\lambda). \end{aligned}$$

The subtraction operation can be naturally defined by \(\mathbf {u} \ominus \mathbf {v} = \mathcal {C}(u_1/v_1,\ldots , u_D/v_D)\). Compositional data analysis uses log-ratio transformations such as the centered log-ratio (clr) and the isometric log-ratio (ilr) transformations. The clr vector components specify the relative dominance of each compositional part over the whole composition, see for example Filzmoser et al. (2018). Formally, the clr transformation of a vector u ∈SD is defined by

where \( {\mathbf {G}}_D = {\mathbf {I}}_D - \frac {1}{D}{\mathbf {1}}_{D}{{\mathbf {1}}_{D}}^T\), ID is a  identity matrix, and 1D is the D-vector of ones and where the logarithm of u ∈SD is understood componentwise.

identity matrix, and 1D is the D-vector of ones and where the logarithm of u ∈SD is understood componentwise.

For a vector u in the orthogonal space \({\mathbf {1}}_D^\perp \) (orthogonality with respect to the standard scalar product of \({\mathbb R}^D\)), the inverse clr transformation is defined by

The simplex \(\mathcal {S}^D\) of dimension D − 1 can be equipped with the Aitchison scalar product

where the right-hand side scalar product is the standard scalar product in \(\mathbb {R}^D\).

The clr coordinates sum up to zero inducing a degeneracy. For this reason, the class of isometric log-ratio coordinates has been introduced providing orthonormal and non-singular coordinates. For any given orthonormal basis (e1, ⋯ , eD−1) of SD, orthonormality being understood with respect to the Aitchison scalar product here, one can define a so-called contrast matrix V of dimension D × (D − 1) (e.g. Pawlowsky-Glahn et al. 2015) given by V = clr(e1, ⋯ , eD−1), where clr is understood columnwise. To each such matrix,  is associated an isometric log-ratio transformation by

is associated an isometric log-ratio transformation by

The inverse transformation, for any vector u∗ of \({\mathbb R}^{D-1},\) is given by

The link between the ilr and clr transformations is clr(u) = VilrV(u).

4 Multivariate Tools for Compositional Data

For working with scatter matrices for compositional data, we are going to need some algebra tools concerning matrices of endomorphisms in the simplex.

4.1 Algebra of Endomorphisms of the Simplex and Eigendecomposition

Let \(\mathcal {A}\) be the set of D × D matrices such that A1D = 0D and AT1D = 0D, where 0D denotes the D-dimensional column vector of zeros: this condition is called the zero-sum property. Pawlowsky-Glahn et al. (2015) define endomorphisms of the simplex using the ilr transformation and prove that they can be associated to a matrix belonging to \(\mathcal {A}\), see Property 4.16 and pages 55–58. The linearity here refers to the vector space structure of the simplex based on the perturbation and powering operations. Let us introduce an equivalent formulation based on the clr transformation: for u ∈ SD and \(\mathbf A \in \mathcal {A}\), endomorphisms of the simplex are defined by maps \(\mathbf {u} \mapsto \mathbf A \boxdot \mathbf u := \text{clr}^{-1}(\mathbf A\text{clr}(u)).\)

The composition of endomorphisms corresponds to the ordinary matrix product since it is clear that \(\mathbf {A} \boxdot (\mathbf {B} \boxdot \mathbf {u}) = \mathbf {AB} \boxdot \mathbf {u}\), and therefore, \(\mathcal {A}\) is an algebra with neutral element GD. We are now going to extend the definition of the ilr transformation to matrices of \(\mathcal {A}\).

Theorem 1

LetVbe a D × (D − 1) contrast matrix, and letPVbe the D × D block matrix\([\mathbf {V} \; \frac {1}{\sqrt {D}}{\mathbf {1}}_D].\)For a D × D matrix\(\mathbf {A} \in \mathcal {A},\)the (D − 1) × (D − 1) matrixA∗ := ilrV(A) = VTAVis such that\(\mathbf {A} =\mathit{\text{ilr}}^{-1}_V({\mathbf {A}}^*)= \mathbf {V}{\mathbf {A}}^*{\mathbf {V}}^T= {\mathbf {P}}_V \begin {pmatrix} \mathbf A^* & \mathbf 0_{D-1} \\\mathbf 0_{D-1}^T & 0\end {pmatrix} {\mathbf {P}}_V^T\)and satisfies the following properties:

-

1.

The rank ofAis equal to the rank of ilrV(A).

-

2.

If ilrV(A) is invertible, thenAis invertible in\(\mathcal {A}\), and we have the following expressions for its\(\mathcal A\)-inverse

$$\displaystyle \begin{aligned} \mathbf A^{-1} = (\mathbf A + \frac{1}{D} {\mathbf{1}}_{D}{\mathbf{1}}_{D}^T)^{-1} - \frac{1}{D} {\mathbf{1}}_{D}{\mathbf{1}}_{D}^T = \mathbf V(\mathbf V^T\mathbf A\mathbf V)^{-1}\mathbf V^T= \mathbf P_V \begin{pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end{pmatrix} \mathbf P_V^T. \end{aligned}$$ -

3.

ilrV(AB) = ilrV(A)ilrV(B). IfAis invertible, then ilrV(A−1) = (ilrV(A))−1. If (ilrV(A))1∕2exists, then ilrV(A1∕2) = (ilrV(A))1∕2.

Note that a matrix A of the algebra \(\mathcal {A}\) is never invertible in the space of matrices in the classical sense. But it may be invertible in the sense of the algebra, and its \(\mathcal A\)-inverse then coincides with the Moore–Penrose pseudo-inverse of A in the usual sense. The matrix ilrV(A) is simply the matrix corresponding to A in coordinate space when the coordinates are defined by ilrV. We also extend the definition of the clr transformations to matrices.

Theorem 2

For a D × D matrixB, let us define its clr transformation by

We then have the following properties:

-

1.

If\(\mathbf {A} \in \mathcal {A},\)then clr(A) = A.

-

2.

If \(\mathbf {B} \notin \mathcal {A},\) then \( \mathit{\text{clr}}(\mathbf {B}) \in \mathcal {A}\) and for any \(\mathbf {x} \in \mathcal {S}^D\)

$$\displaystyle \begin{aligned} \mathbf{B} \boxdot \mathbf{x} := \mathit{\text{clr}}^{-1}(\mathit{\text{clr}}(\mathbf{B})\mathit{\text{clr}}(\mathbf{x})) = \mathit{\text{clr}}(\mathbf{B}) \boxdot \mathbf{x}. \end{aligned} $$(6) -

3.

If\(\mathbf {B} \notin \mathcal {A},\)then the unique element\(\mathbf {A} \in \mathcal {A}\)such that ilrV(A) = ilrV(B) isA = clr(B).

-

4.

For any contrast matrixVand any\(\mathbf {A} \in \mathcal {A}\), we have clr(A) = VilrV(A)VT.

Note that the matrix product \(\boxdot \) can be defined even when the matrix B does not belong to \(\mathcal {A}\), but in that case it is not linear. Note also that the ilr and clr transformations preserve symmetry.

The next proposition links the eigen-elements of A to those of ilr(A). Let us first define the notion of \(\mathcal {A}\)-diagonalizable for a matrix of \(\mathcal {A}.\)

Definition 1

A matrix \(\mathbf {A} \in \mathcal {A}\) is said \(\mathcal {A}\)-diagonalizable if there exists a basis e1, …, eD−1 of \(\mathcal {S}^D\) and D − 1 reals λj (j = 1, …, D − 1) such that

We will say that ej is an \(\mathcal {A}\)-eigenvector of A. It is clear that clr(ej) is then an eigenvector of clr(A) = A and that for any contrast matrix V, ilrV(ej) is an eigenvector of ilrV(A). Note that 1D is an eigenvector of \(\mathcal {A}\) associated to the eigenvalue 0. It is natural to say that a matrix \(\mathbf {A}\in \mathcal {A}\) is diagonal in a given basis e1, …, eD−1 of \(\mathcal {S}^D\) if Eq. (7) is satisfied for these vectors.

Theorem 3

LetVbe a D × (D − 1) contrast matrix. For a D × D matrix\(\mathbf {A} \in \mathcal {A},\)we have the following properties:

-

1.

If\(\mathbf e_j^* \in \mathbb {R}^{D-1}\)is an eigenvector of ilrV(A), then\(\mathbf e_j =\mathit{\text{ilr}}^{-1}(\mathbf e_j^*) \in \mathcal {S}^D\)is an\(\mathcal {A}\)-eigenvector ofAand\(\mathbf w_j=\mathit{\text{clr}}(\mathbf e_j) \in \mathbb {R}^D\)an eigenvector ofA.

-

2.

The set of eigenvalues ofAcontains the eigenvalue 0. The other D − 1 eigenvalues ofAcoincide with the eigenvalues of ilrV(A) for any contrast matrixV.

-

3.

ilrV(A) is diagonalizable if and only ifAis diagonalizable, and if and only ifAis\(\mathcal {A}\)-diagonalizable.

All symmetric matrices in \(\mathcal {A}\) are \(\mathcal {A}\)-diagonalizable. Note that the vectors \(\mathbf e_j = \text{clr}^{-1}(\mathbf e_j^*)\) are independent of the contrast matrix V. Let A be a symmetric matrix of \(\mathcal {A}\). Since the vector 1D is an eigenvector of A, A cannot be diagonal in the canonical basis of \(\mathbb {R}^D\), but it can be diagonal in a basis obtained by completing \({\mathbf {w}}_D=\frac {1}{D}{\mathbf {1}}_D\) with D − 1 orthogonal eigenvectors in \({\mathbf {1}}_D^\perp \), say w1, …, wD−1. Then \({\mathbf {e}}_j=\text{clr}^{-1}({\mathbf {w}}_j)\in \mathcal {S}^D\) (j = 1, …, D − 1) is an orthonormal basis of \(\mathcal {S}^D\) for the Aitchison metric since < ei, ej >A =< wi, wj >E = δij, where δij = 1 if i = j and 0 otherwise, and these vectors are \(\mathcal {A}\)-eigenvectors of A. If W = [w1…, wD−1] is the corresponding contrast matrix, then \(\text{ilr}_W(\mathbf {A})_{ij}={\mathbf {w}}_i^T\mathbf {A}{\mathbf {w}}_j= \lambda _j {\mathbf {w}}_i^T{\mathbf {w}}_j=\lambda _i \delta _{ij}\), which shows that ilrW(A) is the (D − 1) × (D − 1) diagonal matrix Λ with the λi as diagonal elements. Then using Theorem 1, we can write that \(\mathbf {A} = {\mathbf {P}}_W \begin {pmatrix} \boldsymbol {\Lambda } & \mathbf 0_{D-1} \\\ \mathbf 0_{D-1}^T & 0\end {pmatrix} {\mathbf {P}}_W^T\)showing that A is similar to the diagonal matrix \(\begin {pmatrix} \boldsymbol {\Lambda } & \mathbf 0_{D-1} \\\ \mathbf 0_{D-1}^T& 0\end {pmatrix}.\) This last result gives us the general form of diagonal matrices of \(\mathcal {A}\) with the corresponding spectral representation \(\mathbf {A} = \sum _{i=1}^{D-1}\lambda _i {\mathbf {w}}_i{\mathbf {w}}_i^T.\)

4.2 One-Step M-Scatter Functionals of a Compositional Random Vector

For a simplex-valued random vector X (see Pawlowsky-Glahn et al. 2015), let us recall the following definition of expectation:

and the following definition of the (clr-)covariance matrix COV⊕X (see Aitchison 1982) given by the D × D matrix

Note that, by Theorem 2, we can see that COV⊕X is also equal to clr−1(COV(clr(X))). Using the same principles, let us now introduce a simplex adapted definition of the square Mahalanobis distance as being the square Mahalanobis distance in the usual sense of the clr coordinates of X

In the same line, let us define the following one-step M-scatter matrix of a simplex-valued random vector as the corresponding scatter of its clr coordinates

For w(d) = d∕(D + 2), we get the fourth-moment-based scatter matrix \({\mathbf {COV}}_4^\oplus \mathbf {X}\):

All these characteristics can also be expressed using the ilr coordinates associated to any contrast matrix V by the following formulas:

and thus

and similarly

Note that the scatter functionals \({\mathbf {COV}}_w^\oplus \mathbf {X}\) belong to the algebra \(\mathcal {A}\), and thus we also have

Given a sample of size n, the empirical versions of the previous scatter matrices can be derived easily.

4.3 Elliptical Distribution in the Simplex

Nguyen (2019) introduces the Student distribution in the simplex with an application to political economy. Mateu-Figueras et al. (2021) review some distributions in the simplex including the multivariate Student distribution. We define a new family of elliptical distributions with second moment in the simplex. A random vector X with values in \(\mathcal {S}^D\) is said to follow an elliptical distribution if any of its ilr coordinates follows an elliptical distribution with second moment in \(\mathbb {R}^{D-1}.\) This definition makes sense due to the following theorem.

Theorem 4

Given two contrast matricesVandW, if\({\mathbf X}_V^* = \mathit{\text{ilr}}_V(\mathbf X)\)follows an elliptical distribution with parameters\(\boldsymbol \mu _V^*=\mathbf E(\mathbf X_V^*)\)and\(\boldsymbol \Sigma _V^*={\mathbf {COV}}(\mathbf X_V^*)\), then\(\mathbf X_W^* = \mathit{\text{ilr}}_W(\mathbf X)\)follows an elliptical distribution with parameters\((\boldsymbol \mu _W^*,\boldsymbol \Sigma _W^*)\)with

From this theorem, we can say that μclr = Vμ∗ = E(clr(X)) is an invariant that characterizes the location parameter of the elliptical distribution in clr coordinate space, and μ = clr−1(μclr) = E⊕(X) is an invariant that characterizes the location parameter in the simplex. Moreover, \(\boldsymbol {\Sigma } = \mathbf V \boldsymbol {\Sigma }^*_V \mathbf V^T = \text{ilr}_V^{-1}(\boldsymbol {\Sigma }_V^*)= \mathbf {COV}^{\oplus }\mathbf {X}\) is an invariant that characterizes the scatter matrix in the simplex. Similarly, \(\mathbf Q= \mathbf V{\boldsymbol {\Sigma }_V^*}^{-1}\mathbf V^T = \text{ilr}_V^{-1}({\boldsymbol {\Sigma }^*_{\boldsymbol {V}}}^{-1})\) is an invariant that characterizes the precision matrix of this distribution in the simplex. As in Pawlowsky-Glahn et al. (2015), it is easy to write the density of this distribution with respect to Lebesgue measure in coordinate space as well as with respect to the Aitchison measure in the simplex. As in Comas-Cufí et al. (2016), we can extend this definition to a mixture of elliptical distributions.

5 ICS for Compositional Data

5.1 ICS in Coordinate Space

With the definitions introduced in Sect. 4.2, we can now define ICS for a compositional random vector X. For a given choice of contrast matrix V, let X∗ = ilrV(X). In the ilr coordinate space, ICS consists of the joint diagonalization of two scatter matrices S1(X∗) and S2(X∗). Following Archimbaud et al. (2018a), let us focus on S1(X∗) = COV(X∗) and S2(X∗) = COV4(X∗). From Eq. (3) in Sect. 2.2, we can derive the affine invariant coordinates by diagonalizing the (D − 1) × (D − 1) symmetric matrix

Let λ1 ≥… ≥ λD−1 be the eigenvalues of L∗ in descending order, and Λ be the (D − 1) × (D − 1) diagonal matrix with the vector of eigenvalues on its diagonal. Let \({\mathbf {u}}^*_k\), k ranging from 1 to D − 1, be the D − 1 corresponding eigenvectors of L∗ and \({\mathbf {U}}^* = [{\mathbf {u}}^*_1 \ldots {\mathbf {u}}^*_{D-1}]\) be the matrix whose columns are these eigenvectors. By construction, the matrix U∗ is orthogonal (with respect to the standard scalar product in \({\mathbb R}^{D-1}\)). We have for all k = 1, …, D − 1:

If we denote by \(\mathbf h_k^*\), k = 1, …, D − 1 the column vectors of H∗ = COV(X∗)−1∕2U∗, we have

Equations (8) and (9) correspond to the joint diagonalization of COV(X∗) and COV4(X∗). As for Eq. (2), we also have

The scores or invariant coordinates of X∗ are given by

or equivalently by \(z_k^*=<\mathbf h_k^*,{\mathbf {X}}^* - {\mathbf E}{\mathbf {X}}^*>\), k = 1, …, D − 1.

5.2 ICS in the Simplex

Let us now use Sect. 4 to obtain a formulation of the previous results back in the simplex. This presentation of ICS involves elements (scatter matrices, eigenvalues, and eigenvectors) that are independent of the particular choice of contrast matrix, thus justifying this approach. Let us denote by L the following matrix:

By Theorem 1, we have that

and by Theorem 3, we have that, for k = 1, …, D,

where \(\mathbf u_k= \text{ilr}_{V}^{-1}(\mathbf u_k^*)\) for k = 1, …, D − 1, and \({\mathbf {u}}_D = \mathbf 1_D/\sqrt {D}\) corresponding to λD = 0. We have < uk, ul >A = δkl, for k, l = 1, …, D. The vectors uk are the \(\mathcal {A}\)-eigenvectors of L. We can write the following spectral representation of L:

If we denote by \(\mathbf h_k=\text{ilr}_{V}^{-1}(\mathbf h_k^*)= ({\mathbf {COV}}^\oplus \mathbf {X})^{-1/2} \boxdot \mathbf u_k\), k = 1, …, D, we get

and

The scores \(\mathbf Z^*=(z_1^*,\ldots ,z_{D-1})\) defined by (10) do not depend on the contrast matrix as already mentioned in Muehlmann et al. (2021) and are given by

This equation shows that the scores can be used for outlier detection independently of the contrast matrix.

5.3 Reconstruction Formula

From (10), it is easy to derive the reconstruction formula in coordinate space:

Let \(\mathbf a_k^*\) denote the column vectors of the matrix (H∗T)−1 = COV(X∗)1∕2U∗ for k = 1, …, D − 1. Let us define the scalar product with respect to the metric COV(X∗)−1 by

Equation (8) shows that the vectors \(\mathbf a_k^*\), k = 1, …, D − 1, are orthonormal in the sense of this scalar product since the equation can be rewritten as

This orthogonality implies that the reconstruction formula can also be obtained by

The scalar products \(< \mathbf a_k^*, \mathbf X^*-{\mathbf E}{\mathbf {X}}^*>_{ {\mathbf {COV}}({\mathbf {X}}^*)^{-1}}\), k = 1, …, D − 1, are the coordinates of the (D − 1) vector:

Using (14), this vector can be written:

and thus

where \((z_1^*,\ldots ,z_{D-1}^*)\) denote the coordinates of Z∗.

Combining with (16), we get the final reconstruction formula in coordinate space

Applying \(\text{ilr}_{V}^{-1}\) to Eq. (18), we get the following simplex version of the reconstruction formula

where

The vectors ak are related to the \(\mathcal {A}\)-eigenvectors uk of L by (20). They generate simplex lines called ICS axes that are the sets of vectors α ⊙ak, for \(\alpha \in \mathbb {R}.\) In the next section, we use the empirical version of the reconstruction formula (19) in order to plot the projection of the data on the vector a1 in some ternary diagrams in situations where the number of selected invariant coordinates is one.

We can also write (19) in terms of the vectors hk:

6 Examples of Application

We first consider two artificial datasets following a mixture of two normal distributions with 10% of observations that differ from the 90% constituting the main bulk of the data. The dimension is D = 3 for the first example and D = 20 for the second one. The contrast matrices we use for the ilr transformations in this section are triangular Helmert matrices corresponding to the original ilr transformation defined by Egozcue et al. (2003).

6.1 Toy Examples

For the first example, the contrast matrix is given by \(\mathbf V^T=\begin {pmatrix} \frac {1}{\sqrt {2}} & -\frac {1}{\sqrt {2}} & 0\\ -\frac {1}{2}\sqrt {\frac {2}{3}} & -\frac {1}{2}\sqrt {\frac {2}{3}} & \sqrt {\frac {2}{3}} \end {pmatrix}.\)

In this toy example, n = 100 observations are generated in the ilr space with D − 1 = 2 dimensions from a mixture of two Gaussian distributions. The mean and the covariance matrix of the first 90% of the observations (sample 1) are, respectively,

while the mean vector and the covariance matrix of the remaining 10% (sample 2) are

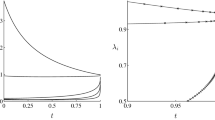

Figure 1 on the left (resp., in the middle) shows the dataset in the simplex \(\mathcal {S}^3\) (resp., in the ilr space). The points in cyan (resp., magenta) belong to sample 1 (resp., sample 2), and we can see that component x2 has higher values in sample 2 than in sample 1, to the detriment of x1 and x3. We perform the ICS method in the ilr space using the ICSOutlier package (Archimbaud et al. 2018b). The eigenvalues are 1.57 and 0.81, and the D’Agostino test for normality leads to the selection of a single invariant component. Note that this test is based on the ICS scores and thus does not depend on the ilr transformation (see Archimbaud et al. 2018a, for more details). Figure 1 on the right reports the ICS distances as in Eq. (5) for each observation. The horizontal line represents a cutoff value based on Monte Carlo simulations and a 90% quantile. The choice of the quantile order can be done with respect to the expected percentage of outliers in the data. The ICS distances and the cutoff are also independent of the ilr transformation since they depend on the ICS scores only. Figure 1 on the right allows us to identify outliers represented by filled circles. On this example, all 10 observations from sample 2 are identified as outliers, whereas only 1 out of the 90 observations from sample 1 is incorrectly identified (at the limit of the cutoff value).

The two vectors generating the ICS axes (dashed lines in Fig. 2) are equal to \(\mathbf a_1^*=(0.31,-0.1)\) and \(\mathbf a_2^*=(0.12,0.22)\) in the ilr space and a1 = (0.27, 0.43, 0.30) and a2 = (0.28, 0.33, 0.39) in the simplex space. To better understand the role of the ICS components and how they discriminate the observations, we represent in Fig. 2 the projections of the observations on the first ICS axis (left plots) and the second ICS axis (right plots) in the ilr space (top plots) and in the simplex space (bottom plots). The first ICS axis allows to discriminate the observations with a high value of x2 relatively to the other shares and results in a good discrimination of the two groups. On the contrary, the second axis that seems to separate observations with high values of x1 against observations with high values of x3 does not allow to discriminate the two groups.

Finally, using the cutoff value, we represent in gray in Fig. 3 the zones or areas of the ilr space (left plot) and of the simplex (right plot) where the observations are considered as outliers. This confirms that the observations with a large or a small value of x2 relatively to the other shares are in the outlying zone.

For the second toy example, we generate a higher dimensional dataset with D = 20, using two multivariate Gaussian distributions. The first sample is of size n1 = 90 with

and the second sample is of size n2 = 10 with

When D > 3, several options can be used for representing compositional data. One possibility is to plot ternary diagrams using sub-compositions as described in van den Boogaart and Tolosana-Delgado (2008). An alternative is to plot a ternary diagram with x1, x2 and the sum of the remaining parts x3 + … + xD. Another possibility is to replace the sum of the remaining parts by their geometric mean. If D > 3 is not too large, these sub-ternary diagrams can be gathered in a square matrix of dimension D(D − 1)∕2.

In order to identify the outliers, we implement the ICS method using ICSOutlier in coordinate space. The procedure selects only the first invariant component. The left plot of Fig. 4 displays the ICS distances and the cutoff value as an horizontal line to identify outliers. This plot is the same for all ilr transformations. 9 observations out of 10 are detected as outliers in sample 2, while none of the observations from sample 1 are identified as outliers. The symbols for the points are as in Fig. 1.

The right plot represents several sub-ternary diagrams, but not all of them because of the large dimension D = 20. The selected ternary diagrams plot two parts among x1 to x5 against the geometric mean of the rest denoted by ∗. However, the diagrams that are not shown are very similar to the ones that focus on x3, x4, and x5 (see the rows and columns 3, 4, and 5 on the matrix plot). Observations with the cross (resp., circle) symbol belong to sample 1 (resp., sample 2). The sub-ternary diagrams confirm that x1 and x2 are the composition parts playing a role in explaining the outlyingness of the red points. In fact, the observations of sample 1 are clearly visible and separated from the other group when considering the diagram with the x1 and x2 components and the geometric mean of the other parts. On the contrary, when looking at the ternary diagrams that do not take x1 and x2 separately from the other parts, the outliers are not distinct from the other observations.

We represent in Fig. 5 the sub-ternary diagram (x1, x2, ∗) (where ∗ represents the geometric mean of the rest), with small circles in cyan (resp., magenta) for sample 1 (resp., sample 2). The vector a1 is plotted together with the ICS axis represented by a dashed line. We see that the data projected on the first ICS axis are clearly discriminated by high values of x2 relatively to x1.

6.2 Market Shares Example

This market share dataset has been simulated from a model fitted on the real European cars market in 2015 and is available in Barreiro et al. (2022). The plot on the top of Fig. 6 represents the shares in the French automobile market of 5 segments (D = 5), from January 2003 to August 2015, denoted by A, B, C, D, and E (European cars market segments, from the cheapest cars to the most powerful and luxury ones). We perform the ICS method in the ilr space and represent in the bottom of Fig. 6 the ICS distances for each observation. The normality test of the ICS procedure reveals that only the first component is important for outlier identification. The cutoff value is based on the quantile of order 97.5%. All the identified outliers are concentrated in a time interval between September 2008 and May 2009. It turns out that during this period, the global automobile market was undergoing a crisis with worldwide sales significantly down and political solutions have been provided such as the scrapping bonus at the end of 2008.

As before, in Fig. 7, we represent the matrix of sub-ternary diagrams with detected outliers in red. The ternary diagram vertices consist of two selected parts, and the third part indicated by ∗ corresponds to the geometric mean of the remaining parts. It seems that among all ternary diagrams, the ones including segment A are the best possible in order to identify the outliers. More precisely, the sub-ternary diagram that includes segments A, D, and the others separates the most the two groups. Thus, we plot in Fig. 8 the data in the sub-ternary diagram (A, D, ∗). We also represent the vector a1, the ICS axis, and the projections of the data on this axis.

The time points that are detected as outlying correspond to observations with high values of segment A, compared to more normal values of D and low values of the geometric mean of B, C and E. This interpretation is confirmed when looking at the top plots of Fig. 6.

7 Conclusion

The present contribution extends ICS for outlier detection to the context of compositional data. As for standard data, ICS with the scatter pair COV and COV4 is a powerful tool to detect a small proportion of outliers. The definition of ICS in coordinate space is straightforward, and the identification of outliers does not depend on the choice of the isometric log-ratio transformation. The definition of ICS in the simplex is more challenging, and some algebra tools have been introduced to tackle the problem. Using a reconstruction formula, ICS axes can be plotted on ternary diagrams that help interpreting the outliers. Further interpretation tools are work in progress. Among the perspectives, we can mention the extension of ICS to compositional functional data (see Rieser and Filzmoser (2022) and Archimbaud et al. (2022)). Some supplementary material is available on https://github.com/tibo31/ics_coda in order to permit the reproducibility of the empirical analyses contained in the present paper.

References

Aitchison, J. (1982). The statistical analysis of compositional data. Journal of the Royal Statistical Society: Series B (Methodological), 44(2), 139–160.

Archimbaud, A., Boulfani, F., Gendre, X., Nordhausen, K., Ruiz-Gazen, A., & Virta, J. (2022). ICS for multivariate functional anomaly detection with applications to predictive maintenance and quality control. Econometrics and Statistics, In press.

Archimbaud, A., Nordhausen, K., & Ruiz-Gazen, A. (2018a). ICS for multivariate outlier detection with application to quality control. Computational Statistics & Data Analysis, 128, 184–199.

Archimbaud, A., Nordhausen, K., & Ruiz-Gazen, A. (2018b). ICSOutlier: Unsupervised outlier detection for low-dimensional contamination structure. The R Journal, 10(1), 234–250.

Barreiro, I. R., Laurent, T., & Thomas-Agnan, C. (2022). Regression models involving compositional variables. R package, https://github.com/tibo31/codareg.

Bilodeau, M., & Brenner, D. (2008). Theory of Multivariate Statistics. New York: Springer.

Comas-Cufí, M., Martín-Fernández, J. A., & Mateu-Figueras, G. (2016). Log-ratio methods in mixture models for compositional data sets. Sort, 1, 349–374.

Egozcue, J., Pawlowsky-Glahn, V., Mateu-Figueras, G., & Barceló-Vidal, C. (2003). Isometric logratio transformations for compositional data analysis. Mathematical Geology, 35(3), 279–300.

Egozcue, J. J., Barceló-Vidal, C., Martín-Fernández, J. A., Jarauta-Bragulat, E., Díaz-Barrero, J. L., & Mateu-Figueras, G. (2011). Elements of simplicial linear algebra and geometry. In V. Pawlowsky-Glahn, & A. Buccianti (Eds.), Compositional data analysis, chapter 11 (pp. 139–157). New York: Wiley.

Filzmoser, P., Hron, K., & Reimann, C. (2012). Interpretation of multivariate outliers for compositional data. Computers & Geosciences, 39, 77–85.

Filzmoser, P., Hron, K., & Templ, M. (2018). Applied compositional data analysis: With worked examples in R. Berlin: Springer.

Filzmoser, P., Ruiz-Gazen, A., & Thomas-Agnan, C. (2014). Identification of local multivariate outliers. Statistical Papers, 55(1), 29–47.

Mateu-Figueras, G., Monti, G. S., & Egozcue, J. (2021). Distributions on the simplex revisited. In Advances in Compositional Data Analysis (pp. 61–82). Berlin: Springer.

Muehlmann, C., Fačevicová, K., Gardlo, A., Janečková, H., & Nordhausen, K. (2021). Independent component analysis for compositional data. In A. Daouia, & A. Ruiz-Gazen (Eds.), Advances in Contemporary Statistics and Econometrics: Festschrift in Honor of Christine Thomas-Agnan (pp. 525–545). New York: Springer.

Nguyen, T. H. A. (2019). Contribution to the statistical analysis of compositional data with an application to political economy. PhD thesis, TSE, University Toulouse 1 Capitole.

Nordhausen, K. & Ruiz-Gazen, A. (2022). On the usage of joint diagonalization in multivariate statistics. Journal of Multivariate Analysis, 188, 104844.

Nordhausen, K. & Tyler, D. E. (2015). A cautionary note on robust covariance plug-in methods. Biometrika, 102(3), 573–588.

Nordhausen, K. & Virta, J. (2019). An overview of properties and extensions of FOBI. Knowledge-Based Systems, 173, 113–116.

Pawlowsky-Glahn, V., Egozcue, J. J., & Tolosana-Delgado, R. (2015). Modelling and Analysis of Compositional Data. New York: Wiley.

Rieser, C. & Filzmoser, P. (2022). Outlier detection for pandemic-related data using compositional functional data analysis. In Pandemics: Insurance and Social Protection (pp. 251–266). Cham: Springer.

Rousseeuw, P. J. & Van Zomeren, B. C. (1990). Unmasking multivariate outliers and leverage points. Journal of the American Statistical Association, 85(411), 633–639.

Theis, F. J. & Inouye, Y. (2006). On the use of joint diagonalization in blind signal processing. In IEEE International Symposium on Circuits and Systems (pp. 3589–3593). New York: IEEE.

Tyler, D. E., Critchley, F., Dümbgen, L., & Oja, H. (2009). Invariant co-ordinate selection. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 71(3), 549–592.

van den Boogaart, K. G. & Tolosana-Delgado, R. (2008). “Compositions”: A unified R package to analyze compositional data. Computers & Geosciences, 34(4), 320–338.

Acknowledgements

The authors would like to thank Pr. Dr. David Tyler for all his work in multivariate data analysis, which is an immense source of inspiration. We would like to thank also the editors and two reviewers for their suggestions and comments that helped us to improve the paper. We acknowledge funding from the French National Research Agency (ANR) under the Investments for the Future (Investissements d’Avenir) program, grant ANR-17-EURE-0010.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

Proof of Theorem 1

-

1.

Let PV be the D × D block matrix \([\mathbf V \, \frac {1}{\sqrt {D}}{\mathbf {1}}_D].\) Then \(\mathbf P_V^T\mathbf P_V= {\mathbf {I}}_D\) and \(\mathbf P_V\mathbf P_V^T= \mathbf V\mathbf V^T + \frac {1}{D}{\mathbf {1}}_D{\mathbf {1}}_D^T = {\mathbf {I}}_D\); therefore, PV is invertible, and its inverse is equal to \(\mathbf P_V^T\). If A = VA∗VT for a (D − 1) × (D − 1) matrix A∗, then \(\mathbf A = \mathbf P_V \begin {pmatrix} \mathbf A^* & \mathbf 0_{D-1} \\ \mathbf 0_{D-1}^T & 0\end {pmatrix} \mathbf P_V^T= \mathbf P_V \begin {pmatrix} \mathbf A^* & \mathbf 0_{D-1} \\\mathbf 0_{D-1}^T & 0\end {pmatrix} \mathbf P_V^{-1}\); therefore, A is similar to A∗ and their rank is equal.

-

2.

If A∗ is invertible, by the previous property, A = VA∗VT is also invertible. Then, let us first prove that \((\mathbf A + \frac {1}{D} {\mathbf {1}}_{D}{\mathbf {1}}_{D}^T)\) is invertible. We can write

$$\displaystyle \begin{aligned} \mathbf P_V \begin{pmatrix} \mathbf A^* & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end{pmatrix} \mathbf P_V^T= \mathbf A + \frac{1}{D} {\mathbf{1}}_{D}{\mathbf{1}}_{D}^T. \end{aligned}$$The rank of the central matrix is D; therefore, \( \mathbf A + \frac {1}{D}{\mathbf {1}}_{D}{\mathbf {1}}_{D}^T\) is invertible, and its inverse is given by

$$\displaystyle \begin{aligned} \left(\mathbf P_V \begin{pmatrix} \mathbf A^* & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end{pmatrix} \mathbf P_V^T\right) ^{-1} &= \left(\mathbf P_V \begin{pmatrix} \mathbf A^* & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end{pmatrix} \mathbf P_V^{-1}\right) ^{-1}\\ &= \mathbf P_V \begin{pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T& 1\end{pmatrix}\mathbf P_V^T. \end{aligned} $$Then let us check that the inverse of A in \(\mathcal {A}\) is given by \(\mathbf P_V \begin {pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end {pmatrix} \mathbf P_V^T.\) Indeed

\(\mathbf P_V \begin {pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end {pmatrix} \mathbf P_V^T\mathbf A=\mathbf P_V \begin {pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end {pmatrix}\mathbf P_V^T\mathbf P_V \begin {pmatrix} {\mathbf A^*} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end {pmatrix}\mathbf P_V^T=\mathbf V \mathbf V^T=\mathbf G_D.\) Same for the other direction. Since \(\mathbf P_V \begin {pmatrix} \mathbf 0_{D-1}\mathbf 0_{D-1}^T & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end {pmatrix} \mathbf P_V^T = \frac {1}{D} {\mathbf {1}}_{D}{\mathbf {1}}_{D}^T,\) we have

$$\displaystyle \begin{aligned} \mathbf A^{-1} &= \mathbf P_V \begin{pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 0\end{pmatrix} \mathbf P_V^T\\ &= \mathbf P_V \begin{pmatrix} {\mathbf A^*}^{-1} & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end{pmatrix} \mathbf P_V^T - \mathbf P_V \begin{pmatrix}\mathbf 0_{D-1}\mathbf 0_{D-1}^T & \mathbf 0_{D-1}\\ \mathbf 0_{D-1}^T & 1\end{pmatrix} \mathbf P_V^T \end{aligned} $$and thus \(\mathbf A^{-1}= (\mathbf A + \frac {1}{D} {\mathbf {1}}_{D}{\mathbf {1}}_{D}^T)^{-1} - \frac {1}{D} {\mathbf {1}}_{D}{\mathbf {1}}_{D}^T\). An alternative formula is

$$\displaystyle \begin{aligned} \mathbf A^{-1}= \mathbf V(\mathbf V^T \mathbf A \mathbf V)^{-1}\mathbf V^T. \end{aligned}$$ -

3.

ilrV(A)ilrV(B) = VTAVVTBV = VTABV = ilrV(AB). If A is invertible, then ilrV(A−1) = VTV(VTAV)−1VTV = (VTAV)−1 = (ilrV(A))−1. If (ilrV(A))1∕2 exists, let us define \(\mathbf A^{1/2}=\text{ilr}^{-1}\left ((\text{ilr}_V(\mathbf A))^{1/2}\right )= \mathbf V (\text{ilr}_V(\mathbf A))^{1/2} \mathbf V^T\). We have A1∕2A1∕2 = V(ilrV(A))1∕2VTV(ilrV(A))1∕2VT = VilrV(A)VT = A.

Proof of Theorem 2

-

1.

1 is a clear consequence of the fact that GD is the neutral element of \(\mathcal {A}. \)

-

2.

It is clear that \( \text{clr}( \mathbf {B}) \text{clr}(\mathbf {x}) \in {\mathbf {1}}_D^\perp ;\) hence, by definition, \( \text{clr}^{-1}(\text{clr}(\mathbf {B})\text{clr}(\mathbf {x})) = \text{clr}(\mathbf {B}) \boxdot \mathbf {x}\).

-

3.

If VTBV = VTAV, then multiplying on the left by V and on the right by VT and using the fact that VVT = GD, we get GDBGD = GDAGD, and hence, clr(A) = clr(B). Then if \(\mathbf {A} \in \mathcal {A}\), then clr(A) = A = clr(B).

-

4.

By Theorem 1, we have A = VilrV(A)VT and A = clr(A) by 1.

Proof of Theorem 3

A∗ is diagonalizable if there exists a basis \(\mathbf v^*_1, \ldots , \mathbf v^*_{D-1}\) of \({\mathbb R}^{D-1}\) and D − 1 real values λj such that \(\mathbf A^* \mathbf v^*_j = \lambda _j \mathbf v^*_j.\) Then let \({\mathbf {e}}_j= \text{ilr}_{V}^{-1}(\mathbf v^*_j);\) we get by applying ilr−1: \(\mathbf A \boxdot {\mathbf {e}}_j= \text{ilr}_{V}^{-1}(\lambda _j \mathbf v^*_j)= \lambda _j \odot \text{ilr}_{V}^{-1}(\mathbf v^*_j)= \lambda _j \odot {\mathbf {e}}_j\) so that ej is an \(\mathcal A\)-eigenvector of A. Now applying the clr transformation, we also get that if wj := clr(ej), then Aclr(ej) = λjclr(ej) so that Awj = λjwj showing that wj is an eigenvector of A. \(\mathbf 1_D/\sqrt {D}\) is an eigenvector of A associated to the eigenvalue 0 when \(\mathbf A \in \mathcal {A}\), and this completes the basis in \({\mathbb R}^D\) since the vectors wj belong to \({\mathbf 1}_D^\perp \), j = 1, …, D − 1.

Proof of Theorem 4

The density of the elliptical distribution of \({\mathbf {X}}_V^* = \text{ilr}_{V}(\mathbf {X})\) is a function of \(R= (\text{ilr}_{V}(\mathbf {X})- \boldsymbol \mu _V^*)^T{\boldsymbol \Sigma _V^*}^{-1}(\text{ilr}_{V}(\mathbf {X})- \boldsymbol \mu _V^*).\) Since ilrV(X) = VTclr(X), an alternative formulation for R is

Now if we let \(\boldsymbol \mu _W^* = \mathbf W^T \mathbf V\boldsymbol \mu _V^*,\) we have \(\mathbf W \boldsymbol \mu _W^* = \mathbf V\boldsymbol \mu _V^*.\) Similarly, let \(\boldsymbol \Sigma _W^* = \mathbf W^T \mathbf V \boldsymbol \Sigma _V^* \mathbf V^T \mathbf W,\) and we have \(\mathbf W\boldsymbol \Sigma _W^*\mathbf W^T=\mathbf V\boldsymbol \Sigma _V^*\mathbf V^T. \) Therefore, substituting this expression in R, we see that R is invariant to the specification of the contrast matrix, and going backward, we can rewrite \(R= (\text{ilr}_{W}(\mathbf {X})- \boldsymbol \mu _W^*)^T{\boldsymbol \Sigma _W^*}^{-1}(\text{ilr}_{W}(\mathbf {X})- \boldsymbol \mu _W^*)\), which shows that ilrV(X) follows an elliptical distribution with parameters \(\boldsymbol \mu _W^*\) and \(\boldsymbol \Sigma _W^*.\) Now using the properties of contrast matrices VVT = GD and VTV = ID−1, we have

which proves the last part of the theorem.

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ruiz-Gazen, A., Thomas-Agnan, C., Laurent, T., Mondon, C. (2023). Detecting Outliers in Compositional Data Using Invariant Coordinate Selection. In: Yi, M., Nordhausen, K. (eds) Robust and Multivariate Statistical Methods. Springer, Cham. https://doi.org/10.1007/978-3-031-22687-8_10

Download citation

DOI: https://doi.org/10.1007/978-3-031-22687-8_10

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22686-1

Online ISBN: 978-3-031-22687-8

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)