Abstract

Recently, numerous authoring tools for Augmented Reality (AR) have been proposed, in both industry and academia, with the aim to enable non-expert users, without programming skills, to scaffold educational AR activities. This is a promising authoring approach that can democratize AR for learning. However, there is no systematic analysis of these emerging tools regarding what AR features and modalities they offer (RQ1). Furthermore, little is known as to how these emerging tools support teachers’ needs (RQ2). Following a two-fold approach, we first analyzed a corpus of 21 authoring tools from industry and academia and formulated a comprehensive design space with four dimensions: (1) authoring workflow, (2) AR modality, (3) AR use, and (4) content and user management. We then analyzed two workshops with 19 teachers to understand their needs for AR activities and how existing tools support them. Ultimately, we discuss how our work can support researchers and designers of educational AR authoring tools.

1 Introduction

Augmented Reality (AR) is now used by thousands of users for formal and non-formal learning and training [5]. Because AR offers an immersive medium for representing and interacting with content [14], it is increasingly put forward to support active and experiential learning in many disciplines, including art, science, technology, engineering, mathematics, and medicine [2, 9]. AR engages users by combining real and virtual worlds [13] and by creating authentic experiences through motion, sight, touch, sound, and haptics, which is essential for multisensory learning [15]. Further, AR feels meaningful to users because it transforms the real world into a playground with a prominent game factor [10]. Research has advocated that educators should integrate AR in their curricula to create sensory-rich and engaging activities, increase dwell time, and facilitate comprehension of content and phenomena [3].

However, recent reviews have underlined that creating AR experiences that fit pedagogical needs remains a salient challenge because of (i) the complexity of authoring AR and (ii) the lack of evidence-based methods for integrating AR in everyday classrooms [1, 5, 16]. Currently, authoring AR experiences requires significant technical knowledge and skills [12]. The vast majority of AR applications are created using advanced programming and complex toolkits, such as Unity 3D, Unreal Engine, Vuforia, ARCore, and Three.js, to name a few. The programming approach has two main limitations. First, it is only accessible to a small group of people with advanced programming skills. Second, toolkits have limited “built-in” support for helping teachers use AR to its full pedagogical capacity [5, 6]. Such limitations make it harder for teachers to harness this new learning medium in their everyday classrooms.

To lower the barriers to creating AR experiences in educational settings, recent research and industry have been empowering non-expert end-users to create AR applications with authoring tools. Such tools offer user-friendly interactions, such as taking a photo of an object (e.g., poster, book cover, drawing, QRCode) and adding augmentations (e.g., texts, images, 3D models). This AR activity can then be saved and shared with other users who will be able to view the augmentations via their devices (e.g., phones, tablets, glasses) (see Fig. 1-(b)-T8,T17).

Recently, many authoring tools have emerged in both academia and industry. Each tool has unique design elements, affordances, features and modalities. The significant differences between existing tools make it difficult for researchers and designers to grasp a holistic view of the rapidly growing AR authoring research and practice. The few studies that have analyzed authoring tools [5, 11, 12] (i) focused mostly on technical aspects, (ii) covered tools that require programming (i.e., toolkits), and (iii) included non-educational tools. Therefore, we still lack a characterization of existing educational AR authoring tools that do not require programming. With this in view, we aim to address two research questions:

- RQ1: What AR features and modalities do emerging educational AR authoring tools offer, mainly tools that are suitable for non-expert users?

- RQ2: How do emerging authoring tools support teachers’ needs?

In the following, we first present previous studies on AR authoring tools. Then, in Sect. 3, we present our method to fill the gap. In Sect. 4, we propose the first design space of educational AR authoring tools. In the field of Human-Computer Interaction, the term “design space” is a conceptual metaphor for knowledge that “enables us to investigate how a design solution emerges” [7]. Design spaces aim at formulating a comprehensive view of the design dimensions and options underlying an area of interest. We distilled our design space from the analysis of 21 recent authoring tools from industry and academia. In addition, we conducted two workshops with 19 teachers to identify their needs and how existing tools might support them, presented in Sect. 5. Finally, in Sect. 6, we show how our work can provide insights, as well as practical guidance, for researchers, educators and technology designers to engage with the design and use of AR authoring tools.

2 Background and Related Work

Recently, a dozen systematic reviews have been conducted to reveal trends, benefits, and challenges of educational AR [1, 2, 5, 6, 8, 9, 13]. For example, Radu [13] analyzed 26 studies that compare AR to non-AR learning. Garzón et al. [6] reviewed the impact of pedagogical factors on AR learning, such as approaches, intervention duration and environment of use. Ibáñez and Delgado-Kloos [9] reviewed AR literature in STEM fields and characterized AR applications, instructional processes, research approaches and reported problems. However, these systematic reviews were silent on authoring tools and their underlying design considerations and functionalities.

Very few studies reviewed design aspects underlying AR authoring tools. Nebeling and Speicher [12] classified existing authoring tools relevant to rapid prototyping of AR/VR experiences in terms of four main categories: screens, interaction (use of camera), 3D content, and 3D games. Mota et al. [11] discussed authoring tools under the lens of two main themes: the authoring paradigms (stand-alone, plug-in) and deployment strategies (platform-specific, platform-independent). Dengel et al. [5] reviewed 26 AR toolkits that are mostly cited in scientific research. However, the authors found only five authoring tools that do not require programming. While the aforementioned studies provided insights into the design and use of AR authoring tools, they (i) focused mainly on technical aspects of AR, (ii) covered programming toolkits, and (iii) included non-educational ones. In addition, to the best of our knowledge, no study has yet analyzed authoring tools from industry, even though a vast majority of teachers might use commercial tools because they are advertised.

Yet, systematic reviews have raised several design challenges, such as usability difficulties, a lack of ways to customize the experiences, inadequacy of the technology for teachers, difficulty in designing experiences, expensive technology, and a lack of design principles for AR [5, 6, 9, 13]. A further study of the functionalities offered by AR authoring tools and their adequacy for teachers’ needs seems necessary. Inspired by design space analyses [e.g., 7, 16], we conduct this type of analysis to (i) identify design dimensions and options of educational AR authoring tools that do not require programming, from both academia and industry, and (ii) link them to the AR activities that teachers aspire to.

3 Method

We conduct this work in the context of a design-based research project that involves end-users (teachers and learners). To tackle our two research questions, we followed four main steps:

1) Defining AR Authoring Tools: We established a working definition as a frame of reference to build a corpus of tools for our analysis. We define an “AR authoring tool” as a tool that enables non-expert users to scaffold AR experiences without the need for programming code [5, 11, 12].

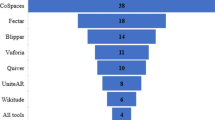

2) Building the Corpus: We aimed to build a representative corpus of the most recent tools. We searched bibliographic hubs (i.e., ACM Digital Library, Google Scholar, Science Direct, Springer) using the keywords “education”, “learning”, “authoring tool”, and “augmented reality”, and identified 9 tools from academia. The inclusion criteria were: recent research papers (published after 2019) and active research projects. Similarly, we searched for tools from industry in Google search and identified 12 tools (five of them were also cited in the systematic review by Dengel et al. [5]). We looked for tools and papers with varying modalities to capture the extent of variability of the design space.

3) Analyzing Authoring Tools (RQ1): We followed a thematic design space analysis [4, 7]. We tested the 21 tools, read their papers and documentation, and watched videos of them. We met several times to discuss and iteratively formulate the dimensions and options of the design space by following the six steps of thematic analysis [4]. Figure 1 summarizes the results of the coding.

Fig. 1. (a) Overview of the design space and how the 21 authoring tools span its dimensions (we highlight in green the essential options that emerged from the teachers study). (b) Overview of the 21 analyzed authoring tools. (c) The seven AR activities that we identified from the teachers study and how existing tools enable creating these activities (“\(\bullet \)”: full support by a tool, “—”: limited support). We provide supplementary materials on Google Drive with references for the corpus: https://bit.ly/3B2Rvxl.

4) Analyzing Teachers’ Needs (RQ2): We recruited 19 teachers via a partnership with CANOPE, a public network that offers professional training for teachers in France. The teachers came from various disciplines and had diverse profiles: Gender \((women=8, men=11)\), Teaching_Years \((min=2, max=40)\), School_Level \((elementary=5, middle=11, high=2, university=1)\). They also reported varying levels of technology use in classrooms: AR_Use \(26.3\%\) and Smartphone_Use \(63.2\%\). We conducted two 3-hour co-design sessions where teachers paper-prototyped AR activities they wanted to use.
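As a quick consistency check on the participant description, the short sketch below recomputes the reported figures. The counts per gender and school level come from the text; the absolute numbers behind the two percentages (5 and 12 teachers) are not stated in the paper and are inferred here under the assumption that both percentages are shares of all 19 participants.

```python
# Participant counts as reported in the text.
TOTAL_TEACHERS = 19
gender = {"women": 8, "men": 11}
school_level = {"elementary": 5, "middle": 11, "high": 2, "university": 1}


def share(count: int, total: int = TOTAL_TEACHERS) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Each breakdown should account for all 19 participants.
assert sum(gender.values()) == TOTAL_TEACHERS
assert sum(school_level.values()) == TOTAL_TEACHERS

# Assumption: both percentages are shares of all 19 teachers, so the
# underlying counts below are inferred, not stated in the text.
ar_users = 5
smartphone_users = 12
print(share(ar_users), share(smartphone_users))  # prints: 26.3 63.2
```

Under that assumption, roughly 5 of the 19 teachers had used AR in class and 12 had used smartphones.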

4 Design Space of AR Authoring Tools

We identified four main design dimensions of AR content authoring: (1) authoring workflow, (2) AR modality, (3) AR use and (4) content and user management. Each dimension identifies categories and options [4, 7]. Figure 1 summarizes the results of our design space analysis (also online as supplementary materials: https://bit.ly/3B2Rvxl).

4.1 Dimension 1: Authoring Workflow

The authoring workflow involves production style, content sources, collaboration and platform.

Production Style: Shelf selection provides users with pre-made experiences that cannot be customized. Template editing allows users to scaffold experiences based on customizable templates. Visual editing allows users to produce AR experiences using user-friendly interactions, such as drag-and-drop and configuration menus.

Content Sources: Local files allow users to import files from their devices, such as images, 3D models and videos. Photo taking, audio and video recording allow users to take photos, record video/audio directly from their device. Embedded assets provide users with pre-made assets and resources (e.g., 3D models).

Collaboration: Enables a/synchronous collaborative authoring.

Platform: Mobiles allow authoring AR experiences using mobile devices (i.e., native apps or mobile browsers). Desktops allow authoring AR using browsers.

4.2 Dimension 2: AR Modality

The AR modality involves four main categories, namely, object tracking, object augmentation, interaction, and navigation.

Object Tracking: Single marker allows users to track a single image in a scene. Multiple markers allows users to track two or more images in a scene. Location allows users to track real-world coordinates (GPS). Marker-less allows users to track flat surfaces in a scene (e.g., floor, wall, table) in order to project augmentations.

Object Augmentation: Texts provide general information. Legends provide information about specific elements of objects. Drawings support free-form writings and annotations of objects. Images show pictorial information on objects. Videos & Audios associate visual and auditory media to objects. 3D models illustrate objects in 3D format. Finally, modals provide interactive details and contextual information, such as pop-up information sheets.

Interaction: 3D rotation allows users to rotate AR objects in three-dimensional space. Zoom allows users to change the scale and explore AR objects in more detail. Drag allows users to change AR objects’ positions. Click allows users to change an augmentation’s state or transition to another state in an AR experience. Haptic provides users with kinesthetic feedback through the sense of touch (e.g., vibrations). Animation allows users to explore animated content.

Navigation: Coordinated-views involves multiple views showing information that is both simultaneous and separate in an AR experience. For example, in Fig. 1-(b)-T1, when a user clicks on the left view (menu) it updates the model view in the center. Multi-scene allows users to navigate in multiple scenes (e.g., Fig. 1-(b)-T3).

4.3 Dimension 3: AR Use

We identified five categories in relation to how end-users use AR: device type, usage, content collection, connectivity, and language.

Device Type: Hand-held allows users to view augmentations, either using desktop browsers or mobile apps. Head-held allows users to view content using AR headsets/glasses. Screens allow users to view content outside AR/VR, e.g., in browsers.

Usage: In contrast to individual, collaborative usage simultaneously engages a group of users interacting with AR objects and with one another.

Content Collection: Screenshot taking and screen recording allow users to capture the camera’s field of view, such as 3D models projected in the real world, mainly to document moments of AR experiences (e.g., Fig. 1-(b)-T6).

Connectivity: In contrast to offline, online tools require the internet to use AR.

Language: In contrast to single, multiple languages offer content in several languages.

4.4 Dimension 4: Content and User Management

Content and user management relates to handling content access. We identified three main categories: sharing, administration, and licensing.

Sharing: Public publish allows publishing AR activities in open access. Links allow generating clickable links that can be given to students. Codes allow generating simple access codes. QRCodes allow generating scannable QRCodes.

Administration: User access involves granting/revoking access to users, for example, removing an access link or code. User analytics and monitoring provides teachers with analytics on how users interacted with AR experiences.

Licensing: Involves providing free or paid content.
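One way to make the design space operational is to encode it as a nested data structure. The sketch below is only an illustration: the dimension, category, and option names are transcribed from the prose of Sect. 4 (Fig. 1 may list them slightly differently), while the helper functions `all_options` and `unknown_options` are hypothetical names introduced here.

```python
from typing import Dict, Iterable, List, Set

# Dimension -> category -> options, transcribed from the text of Sect. 4.
DESIGN_SPACE: Dict[str, Dict[str, List[str]]] = {
    "authoring workflow": {
        "production style": ["shelf selection", "template editing",
                             "visual editing"],
        "content sources": ["local files", "photo taking", "audio recording",
                            "video recording", "embedded assets"],
        "collaboration": ["collaborative authoring"],
        "platform": ["mobile", "desktop"],
    },
    "AR modality": {
        "object tracking": ["single marker", "multiple markers", "location",
                            "marker-less"],
        "object augmentation": ["texts", "legends", "drawings", "images",
                                "videos & audios", "3D models", "modals"],
        "interaction": ["3D rotation", "zoom", "drag", "click", "haptic",
                        "animation"],
        "navigation": ["coordinated views", "multi-scene"],
    },
    "AR use": {
        "device type": ["hand-held", "head-held", "screen"],
        "usage": ["individual", "collaborative"],
        "content collection": ["screenshot taking", "screen recording"],
        "connectivity": ["offline", "online"],
        "language": ["single", "multiple"],
    },
    "content and user management": {
        "sharing": ["public publish", "links", "codes", "QRCodes"],
        "administration": ["user access", "user analytics and monitoring"],
        "licensing": ["free", "paid"],
    },
}


def all_options(space: Dict[str, Dict[str, List[str]]]) -> Set[str]:
    """Flatten the design space into the set of all option names."""
    return {opt for cats in space.values()
            for opts in cats.values() for opt in opts}


def unknown_options(profile: Iterable[str]) -> Set[str]:
    """Return options in a tool profile that the design space does not define."""
    return set(profile) - all_options(DESIGN_SPACE)


# Usage: validate a (hypothetical) tool profile before coding it.
print(unknown_options({"single marker", "3D models", "hologram"}))
# prints: {'hologram'}
```

Encoding the space this way makes it straightforward to describe each tool as a set of options and to catch coding mistakes early.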

5 Teachers’ Needs: AR Functionalities and Activities

A) Elicitation and ideation: We conducted two co-design sessions. They started with a 30-minute elicitation phase, during which the 19 teachers explored 11 AR educational applications (see Note 1). We then asked them to paper-prototype (to remove technical constraints) one or more AR activities for their course. We provided them with a toolkit (large paper worksheet, markers, tablet screens printed out on paper) and guidelines to help them describe (i) the context in which the AR activity is used, (ii) the objects they wanted to augment, (iii) the augmentations they wanted to add, and (iv) the interactions they wanted their students to have access to via the tablet’s screen. After the paper-prototyping, we asked the participants to present their prototypes to the group. We videotaped the workshops. We collected the recordings and 24 worksheets. The collected data is being published as a data paper. Three authors analyzed the recordings and the worksheets to identify teachers’ needs.

B) Teachers’ Needs and AR activities: We identified five types of pedagogical AR activities and two ways of grouping these activities. The most common type of activity (18 out of 24) is image augmentation, which allows teachers to add media resources to an image. The teachers wanted to add various types of resources, such as text, images, videos, 3D models, and audio, as well as modals to open multimodal information sheets or external links. A primary school teacher, for example, wants to augment the pages of a book with an audio recording of her saying specific vocabulary (e.g., “The reindeer has four hooves and 2 antlers”) and an image of the real animals so kids can compare it to the book illustrations. Another teacher wants to augment the ID photos of his high-school students with the 3D models they created in technology class. The second type of activity is image annotation, in which teachers want to associate information (e.g., legends) with specific points of an object. For example, a teacher wants to indicate the names of specific areas on a photo of a theater (e.g., stage, balconies). The third type of activity is image validation. Teachers want to create activities that students can complete on their own by using AR to automatically validate whether the chosen image is correct. For example, a middle school science teacher wants to ask students to assemble the pieces of a map correctly. Another wants students to identify a specific part of a machine (e.g., motor) by scanning QRCodes on it. The fourth type of activity is image association. For example, a primary school teacher wants children to practice recognizing the same letter written in capital and lowercase form. The fifth type of activity is superposition of layers on an image. For example, a university geology teacher wants students to be able to activate or deactivate layers showing various types of rock on a photo of a mountain. Finally, teachers want to group activities into a learning cluster (unordered activities) or into a learning path (ordered activities).

6 Discussion

A) Possibilities and limitations of existing AR authoring tools: Looking at the four dimensions of the design space (Fig. 1-(a)), it is clear that existing authoring tools provide many possibilities. Platforms vary from pre-made content (e.g., Lifeliqe) to pre-made templates (e.g., Assemblrworld) and visual editing (e.g., Grib3d, AWE). Most platforms provide ways to blend different media types in AR experiences, which can support experiential learning and a deeper understanding of complex topics [9]. Different media can provide multiple perspectives and make abstract concepts more concrete and engaging [3, 14]. Furthermore, interaction and navigation modalities are also provided and might allow users to interact with content and control what information is presented in a scene and how. Such modalities can aid learning because users explore and learn information from different perspectives by changing display parameters, using their kinesthetic sense and physically enacting concepts and phenomena (motor activity), which can support cognition [13, 15].

However, there are limitations. Shelf selection and template editing platforms provide limited customization, which corroborates previous findings [13]. In addition, some authoring tools enable users to create and import content directly from devices’ sensors, which can be important because it reduces the burden of using external tools, but they provide very limited editing functionalities. Also, only six tools provide collaborative authoring, even though collaboration can be important for educators and learners to collectively create and share AR activities [3]. Similarly, only six tools allow users to engage in AR collaboratively, even though a meta-analysis [6] found that collaborative AR has the highest impact on learners. Another important aspect that is lacking in most analyzed tools (16/21) is learning analytics (e.g., dashboards [18]), which can provide teachers with feedback about learners’ experience, such as emotional state, progression and engagement.

B) How do existing authoring tools support teachers’ needs? The functionalities wanted by teachers, highlighted in green in Fig. 1-(a), are only partly covered by the existing authoring tools. In particular, eight tools seem to cover the functionalities required to create the image augmentation activity (see Fig. 1-(c)). Two tools (Assemblrworld and Meta-AR-App) can partially create image annotations because they only allow text-based annotations, whereas teachers wanted to add rich-text annotations. While all the marker-based tools can recognize an image and show augmentations, none of them fully supports image validation since teachers wanted to customize the augmentations that show the outcome of a validation. Image association requires tracking multiple markers, which only a few tools support. In addition, even if these tools detect multiple markers, they do not provide ways to show an augmentation when multiple markers are simultaneously present in a scene. Lifeliqe provides coordinated views to navigate in layers of 3D models, which is similar to image superposition. However, the content is limited to 3D models and is not customizable (shelf selection), and the coordinated views are only possible in screen mode (browser), not in AR. Assemblrworld allows creating multi-scenes without a specific order, which can support learning clusters. Similarly, Cospace allows creating multi-scenes with a specific order, which can support learning paths. However, the tool is marker-less, so teachers cannot add markers for the activities. In general, most of these tools are commercial and offer mainly limited free accounts, which is a notable limitation because teachers highlighted that it would be difficult for them to secure funding. Only five tools provide activities that can be done offline, even though teachers highlighted that their schools have limited or no internet access. In addition, the tablets they use do not have access to mobile data. This makes it impossible to access online outdoor AR activities, which are shown to have a positive impact on AR learning [6]. Teachers also wanted to record audio for their AR activities, but most tools allow video recording and not audio. Also, most teachers raised the need for modals, e.g., interactive menus and buttons that show contextual multimodal information, yet only three tools support modals.
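The comparison between teachers’ activities and tool capabilities can be approximated as a set-coverage check. The sketch below is a simplification, not the authors’ coding procedure: the options required per activity are our reading of Sect. 5 and 6, the two tool profiles (`tool_a`, `tool_b`) are hypothetical and do not reproduce the data of Fig. 1, and the “full/limited” labels only mimic the legend of Fig. 1-(c), where ratings were assigned qualitatively.

```python
from typing import Dict, Set

# Assumption: options each activity needs, loosely derived from the text.
ACTIVITY_NEEDS: Dict[str, Set[str]] = {
    "image augmentation": {"single marker", "images", "3D models", "modals"},
    "image association": {"multiple markers"},
    "learning path": {"single marker", "multi-scene"},
}

# Hypothetical tool profiles for illustration; NOT the coded data of Fig. 1.
TOOLS: Dict[str, Set[str]] = {
    "tool_a": {"single marker", "images", "3D models", "modals"},
    "tool_b": {"marker-less", "multi-scene", "3D models"},
}


def support(tool_options: Set[str], needs: Set[str]) -> str:
    """Classify coverage of an activity as 'full', 'limited', or 'none'."""
    covered = needs & tool_options
    if covered == needs:
        return "full"
    return "limited" if covered else "none"


for tool, options in sorted(TOOLS.items()):
    for activity, needs in sorted(ACTIVITY_NEEDS.items()):
        print(f"{tool:7s} {activity:20s} {support(options, needs)}")
```

In this toy example, the marker-less `tool_b` only reaches limited support for learning paths because it offers multi-scene navigation but no marker tracking, which mirrors the kind of gap discussed above.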

C) Recommendations on how to design educational AR activities: While existing tools provide several modalities, we found little guidance on how to design effective educational AR activities beyond technical tutorials. Based on the literature, we provide three recommendations. First, we recommend that designers incorporate pedagogical approaches, such as collaborative learning, project-based learning, inquiry-based learning, situated learning, and multimedia learning, to support educators in creating pedagogy-based AR activities [6]. Second, we recommend that designers engage with teachers to uncover pedagogical activities to support [e.g., 17]. For example, our teacher study revealed seven activities. Authoring tools can provide scaffolds for such activities. Finally, because authoring tools target non-expert users (not professional designers), it seems important to accompany such tools with guidance on how best to design AR content. Guidance might cover multimedia, contiguity, coherence, modality, personalization, and signaling principles [16].

D) Implications for design and research: Our work can be useful for future research in four main ways. First, our design space synthesizes important dimensions and options of authoring AR activities. This work can inform researchers and designers about emerging interactive authoring technologies for AR from industry and academia. Second, we provide a characterization of teachers’ AR activities, which can inform researchers and designers about teachers’ pedagogical needs for AR in ecological settings. Third, as highlighted above, existing tools provide little support for teachers’ AR activities, and the proposed design space can help in setting up an authoring tool that leverages ideas from existing tools to support pedagogically driven AR activities. Finally, future studies can use our work as a framework to design comparative studies that investigate the impact of different modalities of AR applications [1].

E) Limitation: We recognize that a keyword-based search could omit papers or tools that might be relevant to our work. However, our goal was not to find all the tools and papers fitting our definition but to build a representative corpus for analysis. The teachers who participated in the co-design workshops were self-selected, which might represent self-motivated teachers. Furthermore, we did not cover the gamified factors of educational AR authoring tools as well as immersive AR, which might require another study.

7 Conclusion

We analyzed 21 educational authoring tools from academia and industry and formulated a comprehensive design space of four dimensions: (1) authoring workflow, (2) AR modality, (3) AR use, and (4) content and user management. In addition, we analyzed two co-design workshops with 19 teachers and uncovered seven AR activities to support teachers. While existing tools provide a wide range of modalities, they provide limited support for authentic and pedagogical activities. We hope our work provides design-based insights and practical guidance to educators, researchers, and technology designers to inform the design and use of educational AR authoring tools.

Notes

- 1. Foxar, SpacecraftAR, Voyage AR, DEVAR, ARLOOPA, AnatomyAR, ARC, Le Chaudron Magique, SPART, Mountain Peak AR, SkyView Free. These were not part of our corpus because they are applications, not authoring tools.

References

1. Akçayır, M., Akçayır, G.: Advantages and challenges associated with augmented reality for education: a systematic review of the literature. Educ. Res. Rev. 20, 1–11 (2017)

2. Arici, F., Yildirim, P., Caliklar, Ş., Yilmaz, R.M.: Research trends in the use of augmented reality in science education: content and bibliometric mapping analysis. Comput. Educ. 142, 103647 (2019)

3. Billinghurst, M., Duenser, A.: Augmented reality in the classroom. Computer 45(7), 56–63 (2012)

4. Braun, V., Clarke, V.: Using thematic analysis in psychology. Qual. Res. Psychol. 3(2), 77–101 (2006)

5. Dengel, A., Iqbal, M.Z., Grafe, S., Mangina, E.: A review on augmented reality authoring toolkits for education. Front. Virtual Reality 3 (2022)

6. Garzón, J., Kinshuk, Baldiris, S., Gutiérrez, J., Pavón, J.: How do pedagogical approaches affect the impact of augmented reality on education? A meta-analysis and research synthesis. Educ. Res. Rev. 31, 100334 (2020)

7. Halskov, K., Lundqvist, C.: Filtering and informing the design space: towards design-space thinking. ACM Trans. Comput.-Hum. Interact. 28(1), 1–28 (2021)

8. Hincapie, M., Diaz, C., Valencia, A., Contero, M., Güemes-Castorena, D.: Educational applications of augmented reality: a bibliometric study. Comput. Electr. Eng. 93, 107289 (2021)

9. Ibáñez, M.-B., Delgado-Kloos, C.: Augmented reality for STEM learning: a systematic review. Comput. Educ. 123, 109–123 (2018)

10. Laato, S., Rauti, S., Islam, A.N., Sutinen, E.: Why playing augmented reality games feels meaningful to players? The roles of imagination and social experience. Comput. Hum. Behav. 121, 106816 (2021)

11. Mota, R.C., Roberto, R.A., Teichrieb, V.: [POSTER] Authoring tools in augmented reality: an analysis and classification of content design tools. In: IEEE International Symposium on Mixed and Augmented Reality 2015, pp. 164–167 (2015)

12. Nebeling, M., Speicher, M.: The trouble with augmented reality/virtual reality authoring tools. In: 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 333–337 (2018)

13. Radu, I.: Augmented reality in education: a meta-review and cross-media analysis. Pers. Ubiquit. Comput. 18(6), 1533–1543 (2014)

14. Roopa, D., Prabha, R., Senthil, G.: Revolutionizing education system with interactive augmented reality for quality education. Mater. Today: Proc. 46, 3860–3863 (2021)

15. Shams, L., Seitz, A.R.: Benefits of multisensory learning. Trends Cogn. Sci. 12(11), 411–417 (2008)

16. Yang, K., Zhou, X., Radu, I.: XR-Ed framework: designing instruction-driven and learner-centered extended reality systems for education. arXiv:2010.13779 [cs] (2020)

17. Ez-Zaouia, M.: Teacher-centered dashboards design process. In: 2nd International Workshop on eXplainable Learning Analytics, Companion Proceedings of the 10th International Conference on Learning Analytics & Knowledge LAK20 (2020)

18. Ez-Zaouia, M., Tabard, A., Lavoué, E.: EMODASH: a dashboard supporting retrospective awareness of emotions in online learning. Int. J. Hum.-Comput. Stud. 139, 102411 (2020)

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ez-zaouia, M., Marfisi-Schottman, I., Oueslati, M., Mercier, C., Karoui, A., George, S. (2022). A Design Space of Educational Authoring Tools for Augmented Reality. In: Kiili, K., Antti, K., de Rosa, F., Dindar, M., Kickmeier-Rust, M., Bellotti, F. (eds) Games and Learning Alliance. GALA 2022. Lecture Notes in Computer Science, vol 13647. Springer, Cham. https://doi.org/10.1007/978-3-031-22124-8_25

DOI: https://doi.org/10.1007/978-3-031-22124-8_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-22123-1

Online ISBN: 978-3-031-22124-8

eBook Packages: Computer Science, Computer Science (R0)