Abstract

Semantic segmentation of LiDAR point clouds is an important task in autonomous driving. However, training deep models via conventional supervised methods requires large datasets, which are costly to label. Label-efficient segmentation approaches are therefore critical for scaling a model to new operational domains and for improving performance on rare cases. While most prior works focus on indoor scenes, we are among the first to propose a label-efficient semantic segmentation pipeline for outdoor scenes with LiDAR point clouds. Our method co-designs an efficient labeling process with semi/weakly supervised learning and is applicable to nearly any 3D semantic segmentation backbone. Specifically, we leverage geometry patterns in outdoor scenes to perform a heuristic pre-segmentation that reduces manual labeling, and we jointly design the learning targets with the labeling process. In the learning step, we leverage prototype learning to obtain more descriptive point embeddings and use multi-scan distillation to exploit richer semantics from temporally aggregated point clouds, boosting the performance of single-scan models. Evaluated on the SemanticKITTI and nuScenes datasets, our proposed method outperforms existing label-efficient methods. With extremely limited human annotations (e.g., 0.1% point labels), it is even highly competitive with the fully supervised counterpart trained on 100% of the labels.

M. Liu—Work done during internship at Waymo LLC.

1 Introduction

Light detection and ranging (LiDAR) sensors have become a necessity for most autonomous vehicles. They capture more precise depth measurements and are more robust against various lighting conditions compared to visual cameras. Semantic segmentation for LiDAR point clouds is an indispensable technology as it provides fine-grained scene understanding, complementary to object detection. For example, semantic segmentation helps self-driving cars distinguish drivable and non-drivable road surfaces and reason about their functionalities, like parking areas and sidewalks, which is beyond the scope of modern object detectors.

Fig. 1. We compare LESS with Cylinder3D [68] (our fully-supervised counterpart), ContrastiveSceneContext [23], SQN [24], OneThingOneClick [33], and ReDAL [55] on the SemanticKITTI [4] and nuScenes [5] validation sets. The ratio between labels used and all points is listed below each bar. Please note that all competing label-efficient methods mainly focus on indoor settings and are not specially designed for outdoor LiDAR segmentation.

Based on large-scale public driving-scene datasets [4, 5], several LiDAR semantic segmentation approaches have recently been developed [9, 50, 59, 62, 68]. Typically, these methods require fully labeled point clouds during training. Since a LiDAR sensor may perceive millions of points per second, exhaustively labeling all points is extremely laborious and time-consuming. Moreover, it may fail to scale when we extend the operational domain (e.g., various cities and weather conditions) and seek to cover more rare cases. Therefore, to scale up the system, it is critical to have label-efficient approaches for LiDAR semantic segmentation, whose goal is to minimize the quantity of human annotations while still achieving high performance.

While there are some prior works studying label-efficient semantic segmentation, they mostly focus on indoor scenes [3, 11] or 3D object parts [6], which are quite different in point cloud appearance and object type distribution, compared to the outdoor driving scenes (e.g., significant variances in point density, extremely unbalanced point counts between common types, like ground and vehicles, and less common ones, such as cyclists and pedestrians). Besides, most prior explorations tend to address the problem from two independent perspectives, which may be less effective in our outdoor setting. Specifically, one perspective is improving labeling efficiency, where the methods resort to active learning [34, 47, 55], weak labels [44, 54], and 2D supervision [53] to reduce labeling efforts. The other perspective focuses on training, where the efforts assume the partial labels are given and design semi/weakly supervised learning algorithms to exploit the limited labels and strive for better performance [20, 33, 34, 44, 60, 61, 66].

This paper proposes a novel framework, label-efficient semantic segmentation (LESS), for LiDAR point clouds captured by self-driving cars. Different from prior works, our method co-designs the labeling process and the model learning. Our co-design is based on two principles: 1) the labeling step is designed to provide bare minimum supervision, which is suitable for state-of-the-art semi/weakly supervised segmentation methods; 2) the model training step can tap into the labeling policy as a prior and deduce more learning targets. The proposed method can fit in a straightforward way with most state-of-the-art LiDAR segmentation backbones without introducing any network architectural change or extra computational complexity when deployed onboard. Our approach is suitable for effectively labeling and learning from scratch. It is also highly compatible with mining long-tail instances, where, in practice, we mainly want to identify and annotate rare cases based on trained models.

Specifically, we leverage the observation that outdoor-scene objects are often well-separated once the ground points are isolated, and design a heuristic approach to pre-segment an outdoor scene into a set of connected components. The component proposals are of high purity (i.e., only contain one or a few classes) and cover most of the points. Then, instead of meticulously labeling all points, the annotators are only required to label one point per class for each component. In the model learning process, we train the backbone segmentation network with the sparse labels directly annotated by humans as well as the derived labels based on component proposals. To encourage a more descriptive embedding space, we employ contrastive prototype learning [18, 29, 33, 48, 63], which increases intra-class similarity and inter-class separation. We also leverage a multi-scan teacher model to exploit richer semantics within the temporally fused point clouds and distill the knowledge to boost the performance of the single-scan model.

We evaluate the proposed method on two large-scale autonomous driving datasets, SemanticKITTI [4] and nuScenes [5]. We show that our method significantly outperforms existing label-efficient methods (see Fig. 1). With extremely limited human annotations, such as 0.1% labeled points, the approach achieves highly competitive performance compared to the fully supervised counterpart, demonstrating the potential of practical deployment.

In summary, our contribution mainly includes:

- Analyze how label-efficient segmentation of outdoor LiDAR point clouds differs from the indoor settings, and show that the unbalanced category distribution is one of the main challenges.

- Leverage the unique geometric structure of LiDAR point clouds and design a heuristic algorithm to pre-segment input points into high-purity connected components. A customized labeling policy is then proposed to exploit the components with tailored labels and losses.

- Adapt beneficial components into label-efficient LiDAR segmentation and carefully design a network-agnostic pipeline that achieves on-par performance with the fully supervised counterpart.

- Evaluate the proposed pipeline on two large-scale autonomous driving datasets and extensively ablate each module.

2 Related Work

2.1 Segmentation Networks for LiDAR Point Clouds

In contrast to indoor-scene point clouds, outdoor LiDAR point clouds’ large scale, varying density, and sparsity require the segmentation networks to be more efficient. Many works project the 3D point clouds from spherical view [2, 10, 12, 27, 30, 39, 43, 58] (i.e., range images) or bird’s-eye-view [45, 65] onto 2D images, or try to fuse different views [1, 19, 31]. There are also some works directly consuming point clouds [8, 14, 25, 52]. They aim to structure the irregular data more efficiently. Zhu et al. [68] employ the voxel-based representation and alleviate the high computational burden by leveraging cylindrical partition and sparse asymmetrical convolution. Recent works also try to fuse the point and voxel representations [9, 50, 62, 64], and even with range images [59]. All of these works can serve as the backbone network in our label-efficient framework.

2.2 Label-Efficient 3D Semantic Segmentation

Label-efficient 3D semantic segmentation has recently received lots of attention [17]. Previous explorations are mainly two-fold: labeling and training.

As for labeling, several approaches seek active learning [34, 47, 55], which iteratively selects and requests points to be labeled during the network training. Hou et al. [23] utilize features from unsupervised pre-training to choose points for labeling. Wang et al. [53] project the point clouds to 2D and leverage 2D supervision signals. Some works utilize scene-level or sub-cloud-level weak labels [44, 54]. There are also several approaches using rule-based heuristics or handcrafted features to help annotation [20, 37, 51].

As for training, Xie et al. [23, 57] utilize contrastive learning for unsupervised pre-training. Some approaches employ self-training to generate pseudo-labels [33, 44, 60]. Lots of works use Conditional Random Fields (CRFs) [20, 33, 34, 61] or random walk [66] to propagate labels. Moreover, there are also works that utilize prototype learning [33, 66], siamese learning [44, 61], temporal constraints [36], smoothness constraints [44, 47], attention [54, 66], cross task consistency [44], and synthetic data [56] to help training.

However, most recent works mainly focus on indoor scenes [3, 11] or 3D object parts [6], while outdoor scenarios are largely under-explored.

3 Method

In this section, we present our LESS framework. Since existing label-efficient segmentation works typically address domains other than autonomous driving, we first conduct a pilot study to understand the challenges in this novel setting and introduce motivations behind LESS (Sect. 3.1). After briefly going over our LESS framework (Sect. 3.2), we dive into the details of each part (Sects. 3.3 to 3.6).

3.1 Pilot Study: What Should We Pay Attention To?

Previous works [23, 33, 44, 47, 53, 54, 61, 66] on label-efficient 3D semantic segmentation mainly focused on indoor datasets, such as ScanNet-v2 [11] and S3DIS [3]. In these datasets, input points are sampled from high-quality reconstructed meshes and are thus densely and uniformly distributed. Also, objects in indoor scenarios typically share similar sizes and have a relatively balanced class distribution. However, in outdoor settings, input point clouds demonstrate substantially higher complexity due to the varying point density and the ubiquitous occlusions throughout the scene. Moreover, in outdoor driving scenes, the sample distribution across different categories is highly unbalanced due to factors including occurrence frequency and object size. Table 1 shows the point distribution over two autonomous driving datasets, where the numbers of road points are 1,197 and 2,242 times larger than those of bicycle points, respectively. The extremely unbalanced distribution adds extra difficulty for label-efficient segmentation, whose goal is to only label a tiny portion of points (Fig. 2).

Fig. 2. Pilot study: performances (IoU) of the most common and rarest categories. Models are trained with 100% of labels and 0.1% of labels (in terms of points or scans) on SemanticKITTI [4].

We conduct a pilot study to further examine this challenge. Specifically, we train a state-of-the-art semantic segmentation network, Cylinder3D [68], on the SemanticKITTI dataset with three intuitive setups: (a) \(100\%\) labels, (b) randomly annotating 0.1% points per scan, and (c) randomly selecting 0.1% scans and annotating all points for the selected scans. The results are shown in Fig. 2. Without any special efforts, “0.1% random points” can already achieve a mean IoU of 48.0%, compared to 65.9% by the fully supervised version. On common categories, such as car, road, building, and vegetation, the performances of the “0.1% label” models are close to the fully supervised model. However, on the underrepresented categories, such as bicycle, person, and motorcycle, we observe substantial performance gaps compared to the fully supervised model. These categories tend to have small sizes, appear less frequently, and are thus more vulnerable when reducing the annotation budget. However, they are still critical for many applications such as autonomous driving. Moreover, we find that “0.1% random points” outperforms “0.1% random scans” by a large margin, mainly due to its label diversity.

These observations inspire us to rethink the existing paradigm of label-efficient segmentation. While prior works typically focus on either efficient labeling or improving training approaches, we argue that it can be more effective to address the problem by co-designing both. By integrating the two parts, we may cover more underrepresented instances with a limited labeling budget, and exploit the labeling efforts more effectively during network training.

3.2 Overview

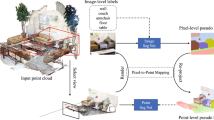

Fig. 3. Overview of our LESS pipeline. (a) We first utilize a heuristic algorithm to pre-segment each LiDAR sequence into a set of connected components. (b) Examples of the proposed components. Different colors indicate different components. For clear visualization, components of ground points are not shown. (c) Human annotators only need to coarsely label each component. Each color denotes a proposed component, and each click icon indicates a labeled point. Only sparse labels are directly annotated by humans. (d) We then train the network to digest various labels and utilize multi-scan distillation to exploit richer semantics in the temporally fused point clouds. (Color figure online)

Our LESS framework integrates pre-segmentation, labeling, and network training. It can work with most existing LiDAR segmentation backbones without changing their network architectures or inference latency. As shown in Fig. 3, our pipeline takes raw LiDAR sequences as input. It first employs a heuristic method to partition the point clouds into a set of high-purity components (Sect. 3.3). Instead of exhaustively labeling all points, annotators only need to quickly label a few points for each component proposal (e.g., one point label for each class that appears). Besides the human-annotated sparse labels, we derive other types of labels so as to train the network with more context information (Sect. 3.4). During the network training, we employ contrastive prototype learning to realize a more descriptive embedding space (Sect. 3.5). We also boost the single-scan model by distilling the knowledge from a multi-scan teacher, which exploits richer semantics within the temporally fused point clouds (Sect. 3.6).

3.3 Pre-segmentation

We design a heuristic pre-segmentation to subdivide the point cloud into a collection of components. Each resulting component proposal is of high purity, containing only one or a few categories, which allows annotators to coarsely label all the proposals, i.e., one point label per class (Sect. 3.4). In this way, we can derive dense supervision by disseminating the sparse point-wise annotations to the whole components. Since modern networks can learn the semantics of homogeneous neighborhoods from sparse annotations, spending much of the annotation budget on large objects may be futile. Our component-wise coarse annotation is agnostic to the object size, which benefits underrepresented small objects.

For indoor scenarios, many prior works [20, 33, 47] leverage surface normals and color information to generate super voxels and assume that the points within each super voxel share the same category. These approaches, however, might not generalize to outdoor LiDAR point clouds, where the surface can be noisy and color information is not available. Since the homogeneity assumption rarely holds in this setting, we instead lift this constraint and allow each component to contain more than one category.

Unlike indoor scenarios, objects in outdoor scans are often well-separated after detecting and isolating the ground points. Inspired by this observation, we design an intuitive approach to pre-segment each LiDAR sequence, which includes four steps:

(a) Fuse overlapping scans. We first split a LiDAR sequence into sub-sequences, each containing t consecutive scans. We then fuse the scans of each sub-sequence based on the provided ego-poses. In this way, we can label the same instance across overlapping scans with one click.

(b) Detect ground points. While the ground surface may not be flat at the full-scene scale, we assume that for each local region (e.g., 5 m \(\times \) 5 m), the ground points can be fitted by a plane. We thus partition the whole scene into a uniform grid according to the xy coordinates, and then employ the RANSAC algorithm [15] to detect the ground points for each local cell. Since the ground points may belong to different categories (e.g., parking zone, sidewalk, and road), we regard the ground points from each local cell as a single component instead of merging all of them. We allow a single ground component to contain multiple classes, and one point per class will be labeled later.

(c) Construct connected components. After detecting and isolating the ground points, the remaining objects are often well-separated. We build a graph G, where each node represents a point. We connect every pair of points (u, v) in the graph whose Euclidean distance is smaller than a threshold \(\tau \). We then divide the points into groups by calculating the connected components of the graph G. Due to the non-uniform point density distribution of the LiDAR point clouds, it is hard to use a fixed threshold across different ranges. We thus propose an adaptive threshold \(\tau (u,v) = \max (r_u, r_v)\times d\) to compensate for the varying density, where \(r_u\) and \(r_v\) are the distances between the points and the sensor centers, and d is a pre-defined hyper-parameter.

(d) Subdivide large components. After step (c), there usually exist some connected components covering an enormous area (e.g., buildings and vegetation), which are prone to include some small objects. To keep each component of high purity and facilitate network training, we subdivide oversized components to ensure each component is bounded within a fixed size. Also, we ignore small components with only a few points, which tend to be noisy and can lead to excessive component proposals.
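
Step (c) can be illustrated with a minimal sketch, assuming a single sensor center at the origin and a brute-force pair search. The function name `connected_components` and the value `d=0.02` are hypothetical choices for illustration and are not taken from our implementation; a practical pipeline would replace the quadratic loop with a spatial index.

```python
import math

def connected_components(points, sensor_center=(0.0, 0.0, 0.0), d=0.02):
    """Group points linked by edges shorter than tau(u, v) = max(r_u, r_v) * d.

    points: list of (x, y, z) tuples with ground points already removed.
    d: hypothetical value of the pre-defined hyper-parameter.
    Returns one component id per point.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Range of every point, i.e., its distance to the sensor center.
    ranges = [math.dist(p, sensor_center) for p in points]

    # Brute-force O(n^2) pair check, kept simple for illustration.
    for u in range(n):
        for v in range(u + 1, n):
            tau = max(ranges[u], ranges[v]) * d  # adaptive threshold
            if math.dist(points[u], points[v]) < tau:
                root_u, root_v = find(u), find(v)
                if root_u != root_v:
                    parent[root_v] = root_u

    # Relabel union-find roots as consecutive component ids.
    relabel = {}
    return [relabel.setdefault(find(i), len(relabel)) for i in range(n)]
```

With this threshold, two points 0.5 m apart are merged at a range of 50 m but kept separate at a range of 5 m, which is the intended compensation for the lower point density far from the sensor.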

In practice, we find our pre-segmentation generates a small number of components for each sequence. The component proposals cover most of the points, and each component tends to have high purity. These open up the possibility of quickly bootstrapping the labeling from scratch. Moreover, unlike other methods [20, 33, 47] relying on various handcrafted features, our method only utilizes the simple geometrical connectivity, allowing it to generalize to various scenarios without tuning lots of hyper-parameters. Please refer to Sect. 4.4 for statistics of the pre-segmentation results and the supplementary material for more details.

3.4 Annotation Policy and Training Labels

Instead of meticulously labeling every point, we propose to coarsely annotate the component proposals. Specifically, for each component proposal, an annotator needs to first skim through the component and then label only one point for each identified category. Figure 3(c) illustrates an example where the pre-segmentation yields three components colored in red, blue, and green, respectively. Because the blue component only has traffic-sign points, the annotator only needs to randomly select one point to label. The green component is similar, as it only contains road points. In the red component, there is a bicycle lying against a traffic sign, and the annotator needs to select one point for each class to label. By coarsely labeling all components, we are unlikely to miss any underrepresented instances, as the proposed components cover the majority of points. Moreover, the number of components is orders of magnitude smaller than that of points, and our coarse annotation policy frees annotators from carefully labeling instance boundaries (required in the labeling process used to build the SemanticKITTI [4] dataset). We are thus able to reduce manual labeling costs.

Based on the component proposals, we can obtain three types of labels.

Sparse labels: points directly labeled by annotators. Although only a tiny subset of points are labeled, sparse labels provide the most accurate and diverse supervision.

Weak labels: classes that appear in each component. Weak labels are derived based on human-annotated sparse labels within each component. In the example of Fig. 3(c), all red points can only be either bicycles or traffic signs. We disseminate weak labels from each component to the points therein. The multi-category weak labels provide weak but dense supervision and cover most points.

Propagated labels: for the pure components (i.e., only one category appears), we can propagate the label to the entire component. Given the effectiveness of our pre-segmentation approach, the propagated labels also cover a wide range of points. However, since some categories may be easier to separate and thus prone to form pure components, the distribution of the propagated labels may be biased and less diverse than that of the sparse labels.
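
The derivation of weak and propagated labels from the sparse annotations can be sketched as follows. This is a simplified illustration rather than our implementation: the function name `derive_labels` and the data layout (one component id per point, and a dictionary from annotated point index to class) are assumptions made for the example.

```python
def derive_labels(component_ids, sparse_labels):
    """Derive weak and propagated labels from sparse annotations.

    component_ids: component_ids[i] is the component of point i.
    sparse_labels: {point index: class} for the human-annotated points.
    Returns (weak, propagated), both with one entry per point.
    """
    # Collect the classes annotated inside each component.
    classes_in_component = {}
    for idx, cls in sparse_labels.items():
        classes_in_component.setdefault(component_ids[idx], set()).add(cls)

    weak, propagated = [], []
    for comp in component_ids:
        classes = classes_in_component.get(comp, set())
        # Weak label: all classes that appear in the point's component.
        weak.append(set(classes))
        # Propagated label: defined only for pure (single-class) components.
        propagated.append(next(iter(classes)) if len(classes) == 1 else None)
    return weak, propagated
```

For a component annotated with both bicycle and traffic sign, every point receives the weak label {bicycle, traffic sign} and no propagated label; for a component annotated only with road, every point receives the propagated label road.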

We formulate a joint loss function by exploiting the three types of labels: \( \mathcal {L} = \mathcal {L}_{\text {sparse}} + \mathcal {L}_{\text {propagated}} + \mathcal {L}_{\text {weak}} \), where \(\mathcal {L}_{\text {sparse}}\) and \(\mathcal {L}_{\text {propagated}}\) are weighted cross-entropy losses with respect to the sparse labels and propagated labels, respectively. We utilize the inverse square root of label frequency [35, 38, 69] as category weights to emphasize underrepresented categories. Here, we calculate a cross-entropy loss for each label type separately, because propagated labels significantly outnumber sparse labels while sparse labels provide more diverse supervision.
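
As a minimal sketch of the category weights and the weighted cross-entropy, consider the code below. The function names are hypothetical, and normalizing the loss by the sum of the weights of the labeled points is an assumption borrowed from common deep-learning frameworks, not a detail stated above.

```python
import math

def inverse_sqrt_weights(label_counts):
    """Weight of class c is 1 / sqrt(frequency of c among the given labels)."""
    total = sum(label_counts.values())
    return {c: 1.0 / math.sqrt(n / total) for c, n in label_counts.items() if n > 0}

def weighted_cross_entropy(probs, labels, weights):
    """Weighted cross-entropy over the labeled points only.

    probs[i][c]: predicted probability of class c for point i.
    labels[i]: class of point i, or None if the point has no label of this type.
    """
    loss, norm = 0.0, 0.0
    for p, y in zip(probs, labels):
        if y is None:
            continue  # points without this label type do not contribute
        loss += -weights[y] * math.log(p[y])
        norm += weights[y]
    # Assumed normalization: divide by the total weight of the labeled points.
    return loss / norm if norm > 0 else 0.0
```

Calling `weighted_cross_entropy` once with the sparse labels and once with the propagated labels, each with its own label mask, yields the two separate terms \(\mathcal {L}_{\text {sparse}}\) and \(\mathcal {L}_{\text {propagated}}\), so the far more numerous propagated labels do not drown out the sparse ones.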

Denote the weak labels as binary masks \(l_{ij}\) for point i and category j, where \(l_{ij} = 1\) when point i belongs to a component that contains category j. We exploit the multi-category weak labels by penalizing the impossible predictions:

\[ \mathcal {L}_{\text {weak}} = -\frac{1}{n} \sum _{i=1}^{n} \log \Big (1 - \sum _{j:\, l_{ij}=0} p_{ij}\Big ), \]

where \(p_{ij}\) is the predicted probability of point i for category j, and n is the number of points. Prior approaches [44, 54] aggregate per-point predictions into component-level predictions and then utilize the multiple-instance learning loss (MIL) [41, 42] to supervise the learning. Here, we only penalize the negative predictions without encouraging the positive ones. This is because our network takes a single-scan point cloud as input, but the labels are collected and derived over the temporally fused point clouds. Hence, a positive instance may not always appear in each individual scan, due to occlusions or limited sensor coverage.
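
The weak-label penalty can be sketched as below, assuming the loss takes the form \(-\frac{1}{n}\sum _i \log (1 - \sum _{j: l_{ij}=0} p_{ij})\), i.e., the negative log of the probability mass that a point assigns to the classes allowed in its component. The function name `weak_loss` and the clamping constant `eps` are illustrative assumptions.

```python
import math

def weak_loss(probs, allowed, eps=1e-12):
    """Penalize probability mass on classes that cannot occur in a component.

    probs[i][j]: predicted probability of class j for point i.
    allowed[i][j]: weak-label mask l_ij (1 if class j appears in the
                   component of point i, 0 otherwise).
    eps: hypothetical clamp that keeps the logarithm finite.
    """
    total = 0.0
    for p, mask in zip(probs, allowed):
        # Probability mass assigned to the impossible classes (l_ij = 0).
        impossible = sum(pj for pj, lj in zip(p, mask) if lj == 0)
        total += -math.log(max(1.0 - impossible, eps))
    return total / len(probs)
```

The loss is zero whenever all probability mass lies on allowed classes, regardless of how it is split among them, which matches the choice of not encouraging any particular positive class.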

3.5 Contrastive Prototype Learning

Besides its great success in self-supervised representation learning [7, 21, 40], contrastive learning has also shown effectiveness in supervised learning and few-shot learning [18, 26, 46, 49]. It can overcome shortcomings of the cross-entropy loss, such as poor margins [13, 26, 32, 63], and construct a more descriptive embedding space. Following [18, 29, 33, 48, 63], we exploit the limited annotations by learning distinctive class prototypes (i.e., class centroids in the feature space). Without pre-training, a contrastive prototype loss \(\mathcal {L}_{\text {proto}}\) is added to the joint loss of Sect. 3.4 as an auxiliary loss. Due to the limited annotations and unbalanced label distribution, only using samples within each batch to determine class prototypes may lead to unstable results. Inspired by the idea of momentum contrast [21], we instead learn the class prototypes \(\textbf{P}_{c}\) by using a moving average over iterations:

\[ \textbf{P}_{c} \leftarrow m \textbf{P}_{c} + (1 - m) \frac{1}{n_c} \sum _{y_i = c} {\text {stopgrad}}\big (h(f(x_i))\big ), \]

where \(f(x_i)\) is the embedding of point \(x_i\), h is a linear projection head with vector normalization, \({\text {stopgrad}}\) denotes the stop gradient operation, \(y_i\) is the label of \(x_i\), \(n_c\) is the number of points with label c in a batch, and m is a momentum coefficient. In the beginning, \(\textbf{P}_{c}\) are initialized randomly.
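
A minimal sketch of the moving-average prototype update is given below, assuming the update \(\textbf{P}_{c} \leftarrow m \textbf{P}_{c} + (1 - m)\) times the batch mean of the projected embeddings with label c. The function name and the default `m=0.99` are hypothetical. Because the sketch operates on plain numbers without automatic differentiation, the stop-gradient is implicit.

```python
def update_prototypes(prototypes, embeddings, labels, m=0.99):
    """Momentum update of class prototypes from one batch.

    prototypes: {class: vector}, updated in place and returned.
    embeddings[i]: projected, normalized embedding h(f(x_i)) as a list of floats.
    labels[i]: class of point i, or None for unlabeled points.
    m: momentum coefficient (hypothetical default).
    """
    sums, counts = {}, {}
    for z, y in zip(embeddings, labels):
        if y is None:
            continue
        acc = sums.setdefault(y, [0.0] * len(z))
        for k, zk in enumerate(z):
            acc[k] += zk
        counts[y] = counts.get(y, 0) + 1

    for c, acc in sums.items():
        batch_mean = [a / counts[c] for a in acc]  # (1 / n_c) * sum over y_i = c
        prototypes[c] = [m * p + (1.0 - m) * q
                         for p, q in zip(prototypes[c], batch_mean)]
    # Classes absent from the batch keep their previous prototype.
    return prototypes
```

Keeping the prototype of an absent class unchanged is what makes the estimate stable for rare categories, which may not appear in every batch.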

The prototype loss \(\mathcal {L}_{\text {proto}}\) is calculated for the points with sparse labels and propagated labels within each batch:

\[ \mathcal {L}_{\text {proto}} = -\frac{1}{n} \sum _{i=1}^{n} w_{y_i} \log \frac{\exp \big (h(f(x_i)) \cdot \textbf{P}_{y_i} / \tau \big )}{\sum _{c} \exp \big (h(f(x_i)) \cdot \textbf{P}_{c} / \tau \big )}, \]

where \(h(f(x_i)) \cdot \textbf{P}_{y_i}\) indicates the cosine similarity between the projected embedding and the prototype, \(\tau \) is a temperature hyper-parameter, n is the number of points, and \(w_{y_i}\) is the inverse square root weight of category \(y_i\). \(\mathcal {L}_{\text {proto}}\) aims to learn a better embedding space by increasing intra-class compactness and inter-class separability.
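
The prototype loss can be sketched as a weighted softmax cross-entropy over the similarities to all class prototypes, divided by the temperature. The code below is an illustration under that assumption; the function name and the default `tau=0.1` are hypothetical, and the embeddings and prototypes are assumed to be normalized so that the dot product equals the cosine similarity.

```python
import math

def prototype_loss(embeddings, labels, prototypes, weights, tau=0.1):
    """Weighted contrastive loss between embeddings and class prototypes.

    embeddings[i]: projected, normalized embedding h(f(x_i)).
    labels[i]: sparse or propagated label of point i, or None.
    prototypes: {class: vector}; weights: {class: category weight}.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    total, n = 0.0, 0
    for z, y in zip(embeddings, labels):
        if y is None:
            continue
        logits = {c: dot(z, p) / tau for c, p in prototypes.items()}
        # Log-sum-exp with max subtraction for numerical stability.
        mx = max(logits.values())
        log_denom = mx + math.log(sum(math.exp(v - mx) for v in logits.values()))
        total += -weights[y] * (logits[y] - log_denom)
        n += 1
    return total / n if n else 0.0
```

An embedding aligned with the prototype of its own class yields a small loss, while one aligned with another prototype yields a large loss, which pulls points toward their class centroid and pushes them away from the others.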

3.6 Multi-scan Distillation

We aim to learn a segmentation network that takes a single LiDAR scan as input and can be deployed in real-time onboard applications. During our label-efficient training, we can train a multi-scan network as a teacher model. It applies temporal fusion of multiple scans and takes the densified point cloud as input, compensating for the sparsity and incompleteness within a single scan. The teacher model is thus expected to exploit the richer semantics and perform better than a single-scan model. In particular, it may improve the performance for the underrepresented categories, which tend to be small and sparse. After that, we distill the knowledge from the multi-scan teacher model to boost the performance of the single-scan student model.

Specifically, for a scan at time t, we fuse the point clouds of neighboring scans at time \(\{t + i\varDelta ; i \in [-2, 2]\}\) (\(\varDelta \) is a time interval) using the ego-poses of the LiDAR sensor. To enable a large batch size, we use voxel subsampling [67] to normalize the fused point cloud to a fixed size. Labels are then fused accordingly. Besides the spatial coordinates, we also concatenate an additional channel indicating the time index i of each point. The teacher model is trained using the loss functions introduced in Sects. 3.4 and 3.5.
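
The temporal fusion can be sketched as follows, assuming each ego-pose is given as a 4x4 rigid sensor-to-world matrix. The helper names are hypothetical, the scans passed in are assumed to be the already selected neighbors, and the voxel subsampling step is omitted for brevity.

```python
def invert_rigid(T):
    """Invert a 4x4 rigid transform [R | t] as [R^T | -R^T t]."""
    R_t = [[T[c][r] for c in range(3)] for r in range(3)]
    t = [T[r][3] for r in range(3)]
    new_t = [-sum(R_t[r][k] * t[k] for k in range(3)) for r in range(3)]
    return [R_t[r] + [new_t[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def apply(T, p):
    """Apply a 4x4 rigid transform to a 3D point."""
    return tuple(sum(T[r][k] * p[k] for k in range(3)) + T[r][3] for r in range(3))

def fuse_scans(scans, poses, center):
    """Fuse neighboring scans into the frame of the scan at index `center`.

    scans[k]: list of (x, y, z) points in the sensor frame of scan k.
    poses[k]: 4x4 sensor-to-world transform of scan k (assumed convention).
    Returns (x, y, z, i) tuples, where i is the relative time index.
    """
    world_to_center = invert_rigid(poses[center])
    fused = []
    for k, (scan, pose) in enumerate(zip(scans, poses)):
        for p in scan:
            # Sensor frame k -> world -> sensor frame of the center scan.
            q = apply(world_to_center, apply(pose, p))
            fused.append(q + (k - center,))  # extra channel: time index
    return fused
```

A static object observed from two vehicle positions lands at the same fused coordinates, and the appended time index lets the network tell the contributing scans apart.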

The student model shares the same backbone network and is first trained from scratch in the same way as the teacher model except for the single-scan input. We then fine-tune it by incorporating an additional distillation loss \(\mathcal {L}_{\text {dis}}\). Specifically, following [22], we match student predictions with the soft pseudo-labels generated by the teacher model via a cross-entropy loss:

\[ \mathcal {L}_{\text {dis}} = -\frac{T^2}{n} \sum _{i=1}^{n} \sum _{c} \frac{\exp (u_{ic}/T)}{\sum _{c'} \exp (u_{ic'}/T)} \log \frac{\exp (v_{ic}/T)}{\sum _{c'} \exp (v_{ic'}/T)}, \]

where \(u_{ic}\) and \(v_{ic}\) are the predicted logits for point i and category c by the teacher and student models respectively, n is the number of points, and T is a temperature hyper-parameter. A higher temperature is typically used so that the probability distribution across classes is smoother, and the distillation is thus encouraged to match the negative logits, which also contain rich information. The cross-entropy is multiplied by \(T^2\) to align the magnitudes of the gradients with the other losses [22].
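
The distillation loss can be sketched as the cross-entropy between temperature-softened teacher and student distributions, scaled by \(T^2\). The function names and the default `T=4.0` are hypothetical choices for illustration.

```python
import math

def softmax(logits, T):
    """Temperature-scaled softmax with max subtraction for stability."""
    mx = max(logits)
    exps = [math.exp((x - mx) / T) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def distillation_loss(teacher_logits, student_logits, T=4.0):
    """Cross-entropy between softened teacher and student predictions.

    teacher_logits[i][c], student_logits[i][c]: logits u_ic and v_ic.
    T: temperature (hypothetical default).
    """
    total = 0.0
    for u, v in zip(teacher_logits, student_logits):
        p_teacher = softmax(u, T)  # soft pseudo-label
        p_student = softmax(v, T)
        total += -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))
    # Scale by T^2 so gradient magnitudes stay comparable to other losses.
    return T * T * total / len(teacher_logits)
```

For a fixed teacher, the loss is minimized when the student reproduces the teacher's softened distribution, including the relative ordering of the non-maximal classes.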

Please note that the idea of multi-scan distillation may only be beneficial for our label-efficient LiDAR segmentation setting. For the fully supervised setting, all labels are already available and accurate, and there is no need to leverage the pseudo labels. For the indoor setting, all points are sampled from high-quality reconstructed meshes, and there is no need for a multi-scan teacher model.

4 Experiments

We employ Cylinder3D [68], a recent state-of-the-art method for LiDAR semantic segmentation, as our backbone network. We utilize ground truth labels to mimic the obtained human annotations, and no extra noise is added. Please refer to the supplementary material for more implementation and training details.

We evaluate the proposed method on two large-scale autonomous driving datasets, SemanticKITTI [4] and nuScenes [5]. SemanticKITTI [4] is collected in Germany with 64-beam LiDAR sensors. Both the sensor capture frequency and the annotation frequency are 10 Hz. It contains 10 training sequences (19k scans), 1 validation sequence (4k scans), and 11 testing sequences (20k scans). 19 classes are used for segmentation. nuScenes [5] is collected in Boston and Singapore with 32-beam LiDAR sensors. Although the sensor capture frequency is 20 Hz, the annotation frequency is 2 Hz. It contains 700 training sequences (28k scans), 150 validation sequences (6k scans), and 150 testing sequences (6k scans). 16 classes are used for segmentation. For both datasets, we follow the official guidance [4, 5] to use mean intersection-over-union (mIoU) as the evaluation metric.
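
For reference, the mIoU metric can be sketched as below. This is a simplified illustration, not the official evaluation code: it treats classes as integer ids, operates on flat prediction and ground-truth lists, and skips classes that occur in neither list, which is an assumption made for the example.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over the classes that occur.

    pred, target: per-point class ids (integers in [0, num_classes)).
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(inter / union)
    return sum(ious) / len(ious)
```

Because every class contributes equally to the mean regardless of its point count, errors on rare categories such as bicycle lower mIoU as much as errors on road, which is why the metric is sensitive to the underrepresented classes discussed in Sect. 3.1.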

4.1 Comparison on SemanticKITTI

We compare the proposed method with both label-efficient [23, 24, 33, 55] and fully supervised [1, 12, 16, 27, 31, 50, 58, 65, 68] methods. Please note that all competing label-efficient methods mainly focus on indoor settings and are not specially designed for outdoor LiDAR segmentation. Among them, ContrastiveSC [23] employs contrastive learning as unsupervised pre-training and uses the learned features for active labeling, ReDAL [55] also employs active labeling, OneThingOneClick [33] proposes a self-training approach and iteratively propagates the labels, and SQN [24] presents a network that leverages the similarity between neighboring points. We report the results on the validation set. Since ContrastiveSC [23] and OneThingOneClick [33] are only tested on indoor datasets in the original papers, we adapt the source code published by the authors and train their models on SemanticKITTI [4]. For other methods, the results are obtained either from the literature or from correspondence with the authors.

Fig. 4. Qualitative examples on the SemanticKITTI [4] (first row) and nuScenes [5] (second row) validation sets. Please zoom in for the details. Red rectangles highlight the wrong predictions. Our results are similar to the fully supervised counterpart, while ContrastiveSceneContext [23] produces worse results on underrepresented categories (see persons and bicycles). Please note that points in the two datasets, which differ in density, are drawn with different point sizes for better visualization. (Color figure online)

Table 2 lists the results, where our method outperforms existing label-efficient methods by a large margin. With only 0.1% sparse labels (as defined in Sect. 3.4), it even matches the performance of the fully supervised baseline Cylinder3D [68], which demonstrates the potential of deployment into real applications. By checking the breakdown results, we find that the differences between methods mainly come from the underrepresented categories, such as bicycle, motorcycle, person, and bicyclist. Existing label-efficient methods, which are mainly designed for indoor settings, suffer a lot from the highly unbalanced sample distribution, while our method is remarkably competitive in those underrepresented classes. See Fig. 4 for further demonstration. OneThingOneClick [33] fails to produce decent results, which is partially due to its pure super-voxel assumption that does not always hold in outdoor scenes. As for the 0.01% annotations setting, the performance of SQN [24] drops drastically to 38.3%, whereas our proposed method can still achieve a high mIoU of 61.0%. For completeness, we also re-train Cylinder3D [68] with our proposed prototype learning and multi-scan distillation. We find that the two strategies provide marginal gain in the fully-supervised setting, where all labels are available and accurate.

4.2 Comparison on nuScenes

We also compare the proposed method with existing approaches on the nuScenes [5] dataset and report the results on the validation set. Since the author-released model of Cylinder3D [68] utilizes SemanticKITTI for pre-training, here, we report its result based on training the model from scratch for a fair comparison.

For other fully-supervised methods [9, 31, 50, 59], the results are obtained either from the literature or from correspondence with the authors. Since no prior label-efficient work is tested on the nuScenes [5] dataset, we adapt the source code published by the authors to train ContrastiveSceneContext [23] from scratch.

Note that points in the nuScenes dataset are much sparser than those in SemanticKITTI. In nuScenes, only 2 scans per second are labeled, while in SemanticKITTI, 10 scans per second are labeled. Because the sensors differ (32-beam vs. 64-beam), the number of points per scan in nuScenes is also much smaller (26k vs. 120k). See the right inset for a comparison of the two datasets (fused points for 0.5 s). Considering the sparsity of the original ground truth labels, here we report the 0.2% and 0.9% annotation settings.
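To make the density gap concrete, the short calculation below multiplies the labeled scan rate by the approximate points per scan quoted above. The dictionary layout and function name are assumptions made for illustration; only the four numbers come from the text.

```python
# Approximate figures quoted in the text (points per scan are rounded).
DATASETS = {
    "nuScenes": {"labeled_scans_per_s": 2, "points_per_scan": 26_000},
    "SemanticKITTI": {"labeled_scans_per_s": 10, "points_per_scan": 120_000},
}


def labeled_points_per_second(stats):
    """Labeled points produced per second of driving."""
    return stats["labeled_scans_per_s"] * stats["points_per_scan"]


nuscenes_rate = labeled_points_per_second(DATASETS["nuScenes"])
kitti_rate = labeled_points_per_second(DATASETS["SemanticKITTI"])
density_ratio = kitti_rate / nuscenes_rate
```

Under these rounded figures, SemanticKITTI yields about 1.2M labeled points per second against about 52k for nuScenes, a ratio of roughly 23.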

Table 3 shows the results, where our proposed method outperforms ContrastiveSceneContext [23] by a large margin. With only 0.2% sparse labels, our result is also highly competitive with the fully-supervised counterpart [68].

4.3 Ablation Study

Table 4 shows the ablation study of each component. The first row is the result of training with 0.1% random point labels. By incorporating the pre-segmentation, we spend the limited annotation budget on more underrepresented instances, thereby significantly increasing mIoU from 48.1% to 59.3%. Derived from the component proposals, weak labels and propagated labels complement the human-annotated sparse labels and provide dense supervision. Compared to multi-category weak labels, propagated labels provide more accurate supervision and thus lead to a slightly higher gain. Both contrastive prototype learning and multi-scan distillation further boost the performance and finally close the gap between LESS and the fully-supervised counterpart in terms of mIoU.

4.4 Analysis of Pre-segmentation and Labeling

By leveraging the unique geometric structure and a careful design, our pre-segmentation works well for outdoor LiDAR point clouds. Table 5 summarizes some statistics of the pre-segmentation and labeling results. For both datasets, fewer than \(10\%\) of the components contain more than two categories, which validates that our pre-segmentation generates high-purity components. The high “coverage of propagated labels” indicates that we can deduce a good amount of “free” supervision from the pure components. The low “coverage of sparse labels” shows that annotators indeed only need to label a tiny portion of points, thus reducing human effort. The “coverage of weak labels” confirms that the proposed components can faithfully cover most points. Furthermore, the consistent results across two distinct datasets suggest that our method generalizes well in practice.
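The purity statistics discussed above can be sketched as follows. This is an illustrative computation only: it assumes each component is given as the list of ground-truth labels of its points, that a "pure" component is one with a single category, and that coverage is measured as a fraction of points. The exact definitions behind Table 5 may differ, and the function name is hypothetical.

```python
def component_stats(components):
    """Summarize the purity of a pre-segmentation.

    components: list of components, each a list of ground-truth labels
    (one label per point in that component).
    """
    total_points = sum(len(c) for c in components)
    # Points in single-category components could receive a propagated label.
    pure_points = sum(len(c) for c in components if len(set(c)) == 1)
    # Components mixing more than two categories are the impure tail.
    mixed = sum(1 for c in components if len(set(c)) > 2)
    return {
        "frac_components_gt2_categories": mixed / len(components),
        "propagated_coverage": pure_points / total_points,
    }


toy_components = [
    ["road"] * 6,
    ["car"] * 3,
    ["road", "sidewalk", "road"],
    ["person", "bicycle", "road", "person"],
]
toy_stats = component_stats(toy_components)
```

In the toy input, one of four components mixes more than two categories, and 9 of 16 points lie in pure components.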

Table 6 compares different annotation policies (i.e., how to use the labeling budget). The first two baselines are introduced in Sect. 3.1. “Active labeling” utilizes the features from contrastive pre-training to actively select points [23]. “Uniform grid partition” uniformly divides the fused point clouds into a grid according to the xy coordinates and treats each cell as a component. “Geometric partition” extracts handcrafted geometric features and solves a minimal partition problem [28, 68]. All policies are trained with the same backbone, Cylinder3D [68]. The first three methods employ no pre-segmentation and are trained with \(\mathcal {L}_{\text {sparse}}\) only. The other approaches utilize our labeling policy (i.e., one label per class for each component) and are trained with the additional \(\mathcal {L}_{\text {propagated}}\), \(\mathcal {L}_{\text {weak}}\), and \(\mathcal {L}_{\text {proto}}\) losses. As a result, their performance is much higher than that of the first three methods. We also report the number of labels and the IoU for an underrepresented category. Our policy leads to more useful supervision and higher IoUs for underrepresented categories.
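As an illustration of the “uniform grid partition” baseline combined with the one-label-per-class policy, the sketch below bins points by their xy coordinates and then simulates an annotator who labels one point per class in each cell. The cell size, the function names, and the choice of the first encountered point per class are assumptions for this example; the text does not specify them.

```python
import math
from collections import defaultdict


def uniform_grid_partition(points, cell_size):
    """Group point indices by xy grid cell; each cell acts as one component.

    points: list of (x, y, z) tuples. cell_size is a hypothetical parameter.
    """
    cells = defaultdict(list)
    for idx, (x, y, _z) in enumerate(points):
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        cells[key].append(idx)
    return dict(cells)


def one_label_per_class(component_indices, labels):
    """Simulated annotation: keep the first point of each class in a component.

    Returns a mapping from class name to the index of the labeled point.
    """
    picked = {}
    for idx in component_indices:
        picked.setdefault(labels[idx], idx)
    return picked


toy_points = [(0.2, 0.3, 0.0), (0.8, 0.1, 0.1), (1.5, 0.2, 0.0), (-0.4, 0.9, 0.3)]
toy_labels = ["road", "car", "road", "person"]
toy_cells = uniform_grid_partition(toy_points, cell_size=1.0)
toy_sparse = {cell: one_label_per_class(idxs, toy_labels) for cell, idxs in toy_cells.items()}
num_sparse_labels = sum(len(p) for p in toy_sparse.values())
```

A grid cell ignores object boundaries, so cells tend to mix categories more often than geometry-aware components, which is consistent with the lower numbers the text reports for this baseline.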

4.5 Analysis of Multi-scan Distillation

Table 7 and Fig. 5 show the results of multi-scan distillation. The teacher model exploits the densified point clouds via temporal fusion and thus performs better than the single-scan model (even compared to the fully supervised single-scan model). Through knowledge distillation from the teacher model, the student model improves substantially on the underrepresented classes and matches the fully supervised model in mIoU.
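The idea of distilling a multi-scan teacher into a single-scan student can be sketched with the standard soft-target formulation of knowledge distillation [22]. This is not necessarily the exact loss used in this work: the temperature value, the function names, and the assumption that teacher and student logits are already aligned per point of the current scan are all illustrative, and the temperature-squared scaling sometimes applied to this loss is omitted.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over one point's class logits."""
    scaled = [v / temperature for v in logits]
    peak = max(scaled)
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Mean KL(teacher || student) over points, using softened predictions.

    teacher_logits / student_logits: lists of per-point logit lists, where
    entry i of both lists refers to the same point of the current scan.
    """
    total = 0.0
    for t_logits, s_logits in zip(teacher_logits, student_logits):
        p = softmax(t_logits, temperature)
        q = softmax(s_logits, temperature)
        total += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return total / len(teacher_logits)


teacher = [[4.0, 1.0, 0.0], [0.5, 3.0, 0.2]]
student_same = [[4.0, 1.0, 0.0], [0.5, 3.0, 0.2]]
student_off = [[1.0, 1.0, 1.0], [3.0, 0.5, 0.2]]
loss_same = distillation_loss(teacher, student_same)
loss_off = distillation_loss(teacher, student_off)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pushes the single-scan model toward the predictions made with temporally fused input.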

5 Conclusion and Future Work

We study label-efficient LiDAR point cloud semantic segmentation and propose a pipeline that co-designs the labeling and the model learning and can work with most 3D segmentation backbones. We show that our method can achieve highly competitive performance with a bare minimum of human annotations.

We have shown that LESS is an effective approach for bootstrapping labeling and learning from scratch. In addition, LESS is well suited to efficiently improving an already performant model. Given the predictions of an existing model, annotators can use the proposed pipeline to pick and label high-value component proposals, such as underrepresented classes, long-tail instances, and the classes with the most failures. We leave this for future exploration.

References

Alnaggar, Y.A., Afifi, M., Amer, K., ElHelw, M.: Multi projection fusion for real-time semantic segmentation of 3D lidar point clouds. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 1800–1809 (2021)

Alonso, I., Riazuelo, L., Montesano, L., Murillo, A.C.: 3D-MiniNet: learning a 2D representation from point clouds for fast and efficient 3D LIDAR semantic segmentation. IEEE Rob. Autom. Lett. 5(4), 5432–5439 (2020)

Armeni, I., Sax, S., Zamir, A.R., Savarese, S.: Joint 2D–3D-semantic data for indoor scene understanding. arXiv preprint arXiv:1702.01105 (2017)

Behley, J., et al.: SemanticKITTI: a dataset for semantic scene understanding of lidar sequences. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 9297–9307 (2019)

Caesar, H., et al.: nuScenes: a multimodal dataset for autonomous driving. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 11621–11631 (2020)

Chang, A.X., et al.: ShapeNet: an information-rich 3D model repository. arXiv preprint arXiv:1512.03012 (2015)

Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. In: Proceedings of the International Conference on Machine Learning (ICML), pp. 1597–1607. PMLR (2020)

Cheng, M., Hui, L., Xie, J., Yang, J., Kong, H.: Cascaded non-local neural network for point cloud semantic segmentation. In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 8447–8452. IEEE (2020)

Cheng, R., Razani, R., Taghavi, E., Li, E., Liu, B.: AF2-S3Net: attentive feature fusion with adaptive feature selection for sparse semantic segmentation network. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 12547–12556 (2021)

Cortinhal, T., Tzelepis, G., Erdal Aksoy, E.: SalsaNext: fast, uncertainty-aware semantic segmentation of LiDAR point clouds. In: Bebis, G., et al. (eds.) ISVC 2020. LNCS, vol. 12510, pp. 207–222. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-64559-5_16

Dai, A., Chang, A.X., Savva, M., Halber, M., Funkhouser, T., Nießner, M.: ScanNet: richly-annotated 3D reconstructions of indoor scenes. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5828–5839 (2017)

Duerr, F., Pfaller, M., Weigel, H., Beyerer, J.: LiDAR-based recurrent 3D semantic segmentation with temporal memory alignment. In: Proceedings of the International Conference on 3D Vision (3DV), pp. 781–790. IEEE (2020)

Elsayed, G.F., Krishnan, D., Mobahi, H., Regan, K., Bengio, S.: Large margin deep networks for classification. In: Advances in Neural Information Processing Systems (NeurIPS) (2018)

Fang, Y., Xu, C., Cui, Z., Zong, Y., Yang, J.: Spatial transformer point convolution. arXiv preprint arXiv:2009.01427 (2020)

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

Gan, L., Zhang, R., Grizzle, J.W., Eustice, R.M., Ghaffari, M.: Bayesian spatial kernel smoothing for scalable dense semantic mapping. IEEE Rob. Autom. Lett. 5(2), 790–797 (2020)

Gao, B., Pan, Y., Li, C., Geng, S., Zhao, H.: Are we hungry for 3D lidar data for semantic segmentation? arXiv preprint arXiv:2006.04307 (2020)

Gao, Y., Fei, N., Liu, G., Lu, Z., Xiang, T., Huang, S.: Contrastive prototype learning with augmented embeddings for few-shot learning. arXiv preprint arXiv:2101.09499 (2021)

Gerdzhev, M., Razani, R., Taghavi, E., Bingbing, L.: TORNADO-Net: mulTiview tOtal vaRiatioN semantic segmentAtion with diamond inceptiOn module. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pp. 9543–9549. IEEE (2021)

Guinard, S., Landrieu, L.: Weakly supervised segmentation-aided classification of urban scenes from 3D LiDAR point clouds. In: ISPRS Workshop 2017 (2017)

He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9729–9738 (2020)

Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. In: Advances in Neural Information Processing Systems (NeurIPS) (2015)

Hou, J., Graham, B., Nießner, M., Xie, S.: Exploring data-efficient 3D scene understanding with contrastive scene contexts. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 15587–15597 (2021)

Hu, Q., et al.: SQN: weakly-supervised semantic segmentation of large-scale 3D point clouds with 1000x fewer labels. arXiv preprint arXiv:2104.04891 (2021)

Hu, Q., et al.: RandLA-Net: efficient semantic segmentation of large-scale point clouds. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 11108–11117 (2020)

Khosla, P., et al.: Supervised contrastive learning. In: Advances in Neural Information Processing Systems (NeurIPS) (2020)

Kochanov, D., Nejadasl, F.K., Booij, O.: KPRNet: improving projection-based lidar semantic segmentation. arXiv preprint arXiv:2007.12668 (2020)

Landrieu, L., Simonovsky, M.: Large-scale point cloud semantic segmentation with superpoint graphs. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4558–4567 (2018)

Li, J., Zhou, P., Xiong, C., Hoi, S.C.: Prototypical contrastive learning of unsupervised representations. In: Proceedings of the International Conference on Learning Representations (ICLR) (2020)

Li, S., Chen, X., Liu, Y., Dai, D., Stachniss, C., Gall, J.: Multi-scale interaction for real-time lidar data segmentation on an embedded platform. arXiv preprint arXiv:2008.09162 (2020)

Liong, V.E., Nguyen, T.N.T., Widjaja, S., Sharma, D., Chong, Z.J.: AMVNet: assertion-based multi-view fusion network for lidar semantic segmentation. arXiv preprint arXiv:2012.04934 (2020)

Liu, W., Wen, Y., Yu, Z., Yang, M.: Large-margin softmax loss for convolutional neural networks. In: Proceedings of the International Conference on Machine Learning (ICML), vol. 2, p. 7 (2016)

Liu, Z., Qi, X., Fu, C.W.: One thing one click: a self-training approach for weakly supervised 3D semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1726–1736 (2021)

Luo, H., et al.: Semantic labeling of mobile lidar point clouds via active learning and higher order MRF. IEEE Trans. Geosci. Remote Sens. 56(7), 3631–3644 (2018)

Mahajan, D., et al.: Exploring the limits of weakly supervised pretraining. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11206, pp. 185–201. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01216-8_12

Mei, J., Gao, B., Xu, D., Yao, W., Zhao, X., Zhao, H.: Semantic segmentation of 3D lidar data in dynamic scene using semi-supervised learning. IEEE Trans. Intell. Transp. Syst. 21(6), 2496–2509 (2019)

Mei, J., Zhao, H.: Incorporating human domain knowledge in 3-D LiDAR-based semantic segmentation. IEEE Trans. Intell. Veh. 5(2), 178–187 (2019)

Mikolov, T., Sutskever, I., Chen, K., Corrado, G.S., Dean, J.: Distributed representations of words and phrases and their compositionality. In: Advances in Neural Information Processing Systems (NeurIPS), pp. 3111–3119 (2013)

Milioto, A., Vizzo, I., Behley, J., Stachniss, C.: RangeNet++: fast and accurate lidar semantic segmentation. In: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4213–4220. IEEE (2019)

Oord, A.V.D., Li, Y., Vinyals, O.: Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748 (2018)

Pathak, D., Shelhamer, E., Long, J., Darrell, T.: Fully convolutional multi-class multiple instance learning. arXiv preprint arXiv:1412.7144 (2014)

Pinheiro, P.O., Collobert, R.: From image-level to pixel-level labeling with convolutional networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1713–1721 (2015)

Razani, R., Cheng, R., Taghavi, E., Bingbing, L.: Lite-HDSeg: LiDAR semantic segmentation using lite harmonic dense convolutions. arXiv preprint arXiv:2103.08852 (2021)

Ren, Z., Misra, I., Schwing, A.G., Girdhar, R.: 3D spatial recognition without spatially labeled 3D. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 13204–13213 (2021)

Rist, C.B., Schmidt, D., Enzweiler, M., Gavrila, D.M.: SCSSNet: learning spatially-conditioned scene segmentation on LiDAR point clouds. In: 2020 IEEE Intelligent Vehicles Symposium (IV), pp. 1086–1093. IEEE (2020)

Schroff, F., Kalenichenko, D., Philbin, J.: FaceNet: a unified embedding for face recognition and clustering. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 815–823 (2015)

Shi, X., Xu, X., Chen, K., Cai, L., Foo, C.S., Jia, K.: Label-efficient point cloud semantic segmentation: an active learning approach. arXiv preprint arXiv:2101.06931 (2021)

Snell, J., Swersky, K., Zemel, R.S.: Prototypical networks for few-shot learning. In: Advances in Neural Information Processing Systems (NeurIPS) (2017)

Sohn, K.: Improved deep metric learning with multi-class N-pair loss objective. In: Advances in Neural Information Processing Systems (NeurIPS), pp. 1857–1865 (2016)

Tang, H., et al.: Searching efficient 3D architectures with sparse point-voxel convolution. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12373, pp. 685–702. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58604-1_41

Thomas, H., Agro, B., Gridseth, M., Zhang, J., Barfoot, T.D.: Self-supervised learning of lidar segmentation for autonomous indoor navigation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pp. 14047–14053. IEEE (2021)

Thomas, H., Qi, C.R., Deschaud, J.E., Marcotegui, B., Goulette, F., Guibas, L.J.: KPConv: flexible and deformable convolution for point clouds. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 6411–6420 (2019)

Wang, H., Rong, X., Yang, L., Feng, J., Xiao, J., Tian, Y.: Weakly supervised semantic segmentation in 3D graph-structured point clouds of wild scenes. arXiv preprint arXiv:2004.12498 (2020)

Wei, J., Lin, G., Yap, K.H., Hung, T.Y., Xie, L.: Multi-path region mining for weakly supervised 3D semantic segmentation on point clouds. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4384–4393 (2020)

Wu, T.H., et al.: ReDAL: region-based and diversity-aware active learning for point cloud semantic segmentation. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 15510–15519 (2021)

Xiao, A., Huang, J., Guan, D., Zhan, F., Lu, S.: SynLiDAR: learning from synthetic LiDAR sequential point cloud for semantic segmentation. arXiv preprint arXiv:2107.05399 (2021)

Xie, S., Gu, J., Guo, D., Qi, C.R., Guibas, L., Litany, O.: PointContrast: unsupervised pre-training for 3D point cloud understanding. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12348, pp. 574–591. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58580-8_34

Xu, C., et al.: SqueezeSegV3: spatially-adaptive convolution for efficient point-cloud segmentation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12373, pp. 1–19. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58604-1_1

Xu, J., Zhang, R., Dou, J., Zhu, Y., Sun, J., Pu, S.: RpvNet: a deep and efficient range-point-voxel fusion network for LiDAR point cloud segmentation. arXiv preprint arXiv:2103.12978 (2021)

Xu, K., Yao, Y., Murasaki, K., Ando, S., Sagata, A.: Semantic segmentation of sparsely annotated 3D point clouds by pseudo-labelling. In: Proceedings of the International Conference on 3D Vision (3DV), pp. 463–471. IEEE (2019)

Xu, X., Lee, G.H.: Weakly supervised semantic point cloud segmentation: towards 10x fewer labels. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 13706–13715 (2020)

Yan, X., et al.: Sparse single sweep LiDAR point cloud segmentation via learning contextual shape priors from scene completion. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI) (2020)

Yang, H.M., Zhang, X.Y., Yin, F., Liu, C.L.: Robust classification with convolutional prototype learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3474–3482 (2018)

Zhang, F., Fang, J., Wah, B., Torr, P.: Deep FusionNet for point cloud semantic segmentation. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12369, pp. 644–663. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58586-0_38

Zhang, Y., et al.: PolarNet: an improved grid representation for online lidar point clouds semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9601–9610 (2020)

Zhao, N., Chua, T.S., Lee, G.H.: Few-shot 3D point cloud semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8873–8882 (2021)

Zhou, Y., Tuzel, O.: VoxelNet: end-to-end learning for point cloud based 3D object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Zhu, X., et al.: Cylindrical and asymmetrical 3D convolution networks for LiDAR segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9939–9948 (2021)

Zou, Y., Weinacker, H., Koch, B.: Towards urban scene semantic segmentation with deep learning from LiDAR point clouds: a case study in Baden-Württemberg, Germany. Remote Sens. 13(16), 3220 (2021)

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, M., Zhou, Y., Qi, C.R., Gong, B., Su, H., Anguelov, D. (2022). LESS: Label-Efficient Semantic Segmentation for LiDAR Point Clouds. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13699. Springer, Cham. https://doi.org/10.1007/978-3-031-19842-7_5

DOI: https://doi.org/10.1007/978-3-031-19842-7_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19841-0

Online ISBN: 978-3-031-19842-7

eBook Packages: Computer Science, Computer Science (R0)