Abstract

Natural language sentence matching is the task of comparing two sentences and identifying the relationship between them. It has a wide range of applications in natural language processing, such as reading comprehension and question answering systems. The mainstream approach computes the interaction between the representations of the two sentences through an attention mechanism, which extracts the semantic information shared by the sentence pair well. However, such methods fail to capture deep semantic information and to fuse the semantic information of the sentences effectively. To solve this problem, we propose a sentence matching method based on deep interaction and fusion. We first use pre-trained GloVe word vectors and character-level word vectors to obtain word embedding representations of the two sentences. In the encoding layer, we use a bidirectional LSTM to encode the sentence pair. In the interaction layer, we first perform an initial fusion of the sentence pair to obtain low-level semantic information; at the same time, we use the bi-directional attention from machine reading comprehension models together with self-attention to obtain high-level semantic information. We then use a heuristic fusion function to fuse the low-level and high-level semantic information into the final semantic representation, and finally use a neural network to predict the result. We evaluate our model on two tasks: recognizing textual entailment, on the SNLI dataset, and paraphrase recognition, on the Quora dataset. The experimental results show that the proposed model can effectively fuse different kinds of semantic information, which verifies its effectiveness on sentence matching tasks.



1 Introduction

Natural language sentence matching is the task of comparing two sentences and identifying the relationship between them. It is a fundamental technique for a variety of tasks. For example, in the paraphrase recognition task, it is used to determine whether two sentences are paraphrases of each other. In the textual entailment recognition task, it is used to determine whether a hypothesis sentence can be inferred from a premise sentence.

Recognizing Textual Entailment (RTE), proposed by Dagan [6], studies the relationship between premises and hypotheses, which mainly includes entailment, contradiction, and neutrality. The main methods for recognizing textual entailment include similarity-based methods [15], rule-based methods [11], and alignment feature-based machine learning methods [18]. However, these methods do not perform well because they do not extract the semantic information of the sentences well. In recent years, deep learning-based methods have been effective in semantic modeling, achieving good results in many NLP tasks [12, 13, 23]. On the task of recognizing textual entailment, deep learning-based methods have therefore outperformed earlier approaches and become the dominant approach. For example, Bowman et al. used recursive neural networks to model premises and hypotheses, which have the advantage of making full use of syntactic information [2]. They were then the first to apply LSTM sentence models to RTE, encoding premises and hypotheses with an LSTM to obtain sentence vectors [3]. Wang et al. proposed the mLSTM model on this basis, which incorporates attention weights into the hidden states of the LSTM so that the model focuses on the parts of the premise and the hypothesis that match semantically. The experimental results showed that the method achieved good results on the SNLI dataset [20].

Paraphrase recognition, also called paraphrase detection, is the task of determining whether two texts have the same meaning. If they do, they are called a paraphrase pair. Traditional paraphrase recognition methods focus on text features, but they suffer from problems such as low accuracy. Deep learning-based paraphrase recognition methods have therefore become a hot research topic. They fall mainly into two types. 1) Methods that compute word vectors with neural networks and then compute the distance between the vectors to determine whether the texts form a paraphrase pair. For example, Huang et al. used an improved EMD method to calculate the semantic distance between vectors and obtain the paraphrase relationship [7]. 2) Methods that directly determine whether a text pair is a paraphrase pair with a neural network model, which is essentially binary classification. Wang et al. proposed the BIMPM model, which first encodes sentence pairs with a bidirectional LSTM and then matches the encoding results from multiple perspectives in both directions [21]. Chen et al. proposed the ESIM model, which uses a two-layer bidirectional LSTM and a self-attention mechanism for encoding, then extracts features through an average pooling layer and a maximum pooling layer, and finally performs classification [5].

The models mentioned above have achieved good results on specific tasks, but most of them have difficulty extracting deep semantic information and effectively fusing the extracted semantic information. In this paper, we propose a sentence matching model based on deep interaction and fusion. We use bi-directional attention and self-attention to obtain high-level semantic information. Then, we use a heuristic fusion function to fuse the low-level and high-level semantic information to obtain the final semantic information. We conducted experiments on the SNLI dataset for the recognizing textual entailment task and on the Quora dataset for the paraphrase recognition task. The results show that the accuracy of the proposed model is 87.1% on the SNLI test set and 86.8% on the Quora test set. Our contributions can be summarized as follows:

- We propose a sentence matching model based on deep interaction and fusion. It introduces the bidirectional attention mechanism into the sentence matching task for the first time.

- We propose a heuristic fusion function that learns the fusion weights through a neural network to achieve deep fusion.

- We evaluate our model on two different tasks and validate its effectiveness.

2 BIDAF Model Based on Bi-directional Attention Flow

In the task of extractive machine reading comprehension, Seo et al. first proposed BIDAF (Bi-Directional Attention Flow), a model that computes attention in both the question-to-article and the article-to-question direction [16]. Its structure is shown in Fig. 1.

The model mainly consists of an embedding layer, a contextual encoder layer, an attention flow layer, a modeling layer, and an output layer. After character-level word embedding and pre-trained GloVe word embedding, the contextual representations X and Y of the article and the question are obtained by a bidirectional LSTM, respectively. The bi-directional attention flow between them is then computed as follows:

a) Computing the similarity matrix between the article and the question, as shown in Eq. 1.

$$K_{tj}=W^T\left[ X_{:t};Y_{:j};X_{:t}\odot Y_{:j} \right] \tag{1}$$

where \(K_{tj}\) is the similarity of the t-th article word to the j-th question word, \(X_{:t}\) is the t-th column vector of X, \(Y_{:j}\) is the j-th column vector of Y, and W is a trainable weight vector.

b) Computing the article-to-question attention. First, each row of the similarity matrix is normalized with softmax, and then the weighted sum of the question vectors is calculated to obtain the article-to-question attention, as shown in Eq. 2.

$$\begin{aligned} x_t&=\mathrm{softmax}\left( K_{t:} \right) \\ \hat{Y}_{:t}&=\sum _j{x_{tj}Y_{:j}} \end{aligned} \tag{2}$$

c) Computing the question-to-article attention (query-to-context, Q2C, in [16]). This attention signifies which article words have the closest similarity to one of the question words and are hence critical for answering the question. We obtain the attention weights on the article words by \(y=\mathrm{softmax}(\max _{col}(K))\in R^T\), where the maximum function \(\max _{col}\) is performed across the column. The attended article vector is then \(\hat{x}=\sum _t{y_tX_{:t}}\). This vector is the weighted sum of the most important words in the article with respect to the question. \(\hat{x}\) is tiled T times across the column, thus giving \(\hat{X}\in R^{2d*T}\).

d) Fusing the bidirectional attention flows. The attention flows obtained above are concatenated to obtain the new representation, as shown in Eq. 3.

$$L_{:t}=\left[ X_{:t};\hat{Y}_{:t};X_{:t}\odot \hat{Y}_{:t};X_{:t}\odot \hat{X}_{:t} \right] \tag{3}$$
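Steps a) to d) can be sketched in a few lines of NumPy. This is only an illustrative sketch of Eqs. 1 to 3, not the implementation of [16]: the function name `bidaf_attention`, the splitting of the weight vector into three parts, and the random toy inputs in the usage note are assumptions made for the example.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax along the given axis.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def bidaf_attention(X, Y, w):
    """X: (2d, T) article, Y: (2d, J) question, w: (6d,) weight vector (Eq. 1)."""
    two_d, T = X.shape
    # Eq. 1: K[t, j] = w^T [X_t; Y_j; X_t * Y_j], computed without building
    # the concatenated vector for every (t, j) pair.
    w_x, w_y, w_xy = w[:two_d], w[two_d:2 * two_d], w[2 * two_d:]
    K = (w_x @ X)[:, None] + (w_y @ Y)[None, :] + (X * w_xy[:, None]).T @ Y  # (T, J)
    # Eq. 2: article-to-question attention, softmax over question words.
    A = softmax(K, axis=1)                    # (T, J)
    Y_hat = Y @ A.T                           # (2d, T)
    # Step c): question-to-article attention, max over question words first.
    y = softmax(K.max(axis=1))                # (T,)
    x_hat = X @ y                             # (2d,)
    X_hat = np.tile(x_hat[:, None], (1, T))   # (2d, T)
    # Eq. 3: concatenate the four blocks along the feature axis.
    L = np.concatenate([X, Y_hat, X * Y_hat, X * X_hat], axis=0)  # (8d, T)
    return K, L
```

With a toy article of T = 5 words and a question of J = 3 words in a 4-dimensional space, `bidaf_attention(X, Y, w)` returns a 5 x 3 similarity matrix and a 16 x 5 fused representation.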

We build on this work by treating the sentence pairs in a natural language sentence matching task as the articles and questions of reading comprehension. We use bi-directional attention and self-attention to obtain high-level semantic information. Then, we use a heuristic fusion function to fuse the low-level and high-level semantic information to obtain the final semantic information.

3 Method

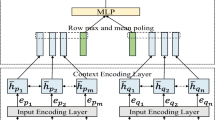

In this section, we describe our model in detail. As shown in Fig. 2, our model mainly consists of an embedding layer, a contextual encoder layer, an interaction layer, a fusion layer, and an output layer.

3.1 Embedding Layer

The purpose of the embedding layer is to map the input sentence A and sentence B into word vectors. The traditional mapping method is one-hot encoding. However, it is memory-intensive and inefficient, so we use pre-trained word vectors for word embedding. These word vectors are kept fixed during training.

Since the text contains out-of-vocabulary words, we also use character-level word embedding. Each word is treated as a sequence of characters, and we run an LSTM over this sequence to obtain a character-level word vector. This effectively handles out-of-vocabulary words.

Let the pre-trained word vector of word h be \({h_w}\) and its character-level word vector be \({h_c}\). We concatenate the two vectors and pass them through a two-layer highway network [25] to obtain the word vector representation of word h: \(h = [{h_w};{h_c}] \in {R^{{d_1} + {d_2}}}\), where \({d_1}\) is the dimension of the GloVe word embedding and \({d_2}\) is the dimension of the character-level word embedding. Finally, we obtain the word embedding matrix \(X \in {R^{n\mathrm{{*}}({d_1} + {d_2})}}\) for sentence A and the word embedding matrix \(Y \in {R^{m*({d_1} + {d_2})}}\) for sentence B, where n and m are the numbers of words in sentence A and sentence B.
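The concatenation and the highway network can be sketched as follows. The paper does not spell out the highway equations, so this sketch assumes the common form \(y = t \odot g(x) + (1 - t) \odot x\) with a sigmoid transform gate t and a ReLU candidate g; the names `highway_layer` and `embed_word` and all parameter shapes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def highway_layer(x, W_h, b_h, W_t, b_t):
    # Assumed highway form: the gate t mixes a transformed candidate with the input.
    candidate = np.maximum(0.0, x @ W_h + b_h)   # ReLU candidate, an assumption
    t = sigmoid(x @ W_t + b_t)                   # transform gate in (0, 1)
    return t * candidate + (1.0 - t) * x

def embed_word(h_w, h_c, layers):
    """h_w: (d1,) pre-trained vector, h_c: (d2,) character-level vector.
    layers: list of (W_h, b_h, W_t, b_t) tuples, one per highway layer."""
    h = np.concatenate([h_w, h_c])               # (d1 + d2,)
    for params in layers:
        h = highway_layer(h, *params)
    return h
```

Because the gate interpolates between the candidate and the input, the output keeps the dimension \(d_1 + d_2\), and a strongly negative gate bias makes each layer pass its input through almost unchanged.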

3.2 Contextual Encoder Layer

The purpose of the contextual encoder layer is to fully exploit the contextual features of the sentences. We encode each sentence with a bidirectional LSTM, which captures context in both directions. We thus obtain the representations \(H \in {R^{2d*n}}\) of sentence A and \(P \in {R^{2d*m}}\) of sentence B, where d is the hidden layer dimension.

3.3 Interaction Layer

The purpose of the interaction layer is to extract the effective features between sentences. In this module, we can obtain low-level semantic information and high-level semantic information.

Low-Level Semantic Information. This module performs an initial fusion of the two sentences to obtain the low-level semantic information. We first calculate the similarity matrix S of the context-encoded representations H and P, which is shown in Eq. 4.

where \({{S}_{ij}}\) denotes the similarity between the i-th word of H and the j-th word of P, \({{W}_{s}}\) is a trainable weight matrix, h is the i-th column of H, and p is the j-th column of P. Then, we calculate the low-level semantic information V of A and B, which is shown in Eq. 5.

High-Level Semantic Information. The purpose of this module is to mine the deep semantics of the text and generate high-level semantic information. In this module, we first calculate the bidirectional attention between H and P, that is, the attention of \(H\rightarrow P\) and \(P\rightarrow H\). It is calculated as follows.

\(H\rightarrow P\): This attention describes which words in the sentence P are most relevant to H. First, each row of the similarity matrix is normalized to get the attention weights, and then the new text representation \(Q\in {{R}^{2d*n}}\) is obtained as the weighted sum of the columns of P, as shown in Eq. 6.

where \({q_{:t}}\) is the t-th column of Q.

\(P\rightarrow H\): This attention indicates which words in H are most similar to P. First, the maximum across the columns of the similarity matrix \(\boldsymbol{S}\) is taken to obtain the attention weights, then the weighted sum of H is tiled over n time steps to obtain \(C\in {{R}^{2d*n}}\), as shown in Eq. 7.

After obtaining the attention matrix Q of \(H\rightarrow P\) and the attention matrix C of \(P\rightarrow H\), we splice the attention in these two directions by a multilayer perceptron. Finally, we get the spliced contextual representation G, which is calculated as shown in Eq. 8.

Then, we calculate its self-attention [19], which is calculated as shown in Eq. 9.

Finally, we pass the above semantic information Z through a bi-directional LSTM to obtain high-level semantic information U.
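For the self-attention step, the text only cites [19]. A minimal sketch, assuming the scaled dot-product form of [19], is given below; the projection matrices `W_q`, `W_k`, `W_v`, the single attention head, and the row-per-word layout (the transpose of the column-per-word layout used in the text) are assumptions for illustration, not details confirmed by the paper.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax along the given axis.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(G, W_q, W_k, W_v):
    """G: (n, d_model), one row per word. Returns Z: (n, d_v) and the weights."""
    Q, K, V = G @ W_q, G @ W_k, G @ W_v
    # Every word attends to every word of the same sequence.
    scores = Q @ K.T / np.sqrt(K.shape[1])    # (n, n), scaled by sqrt(d_k)
    weights = softmax(scores, axis=1)         # each row sums to 1
    return weights @ V, weights
```

Each row of `weights` is a distribution over the n positions of the same sentence, so the output for a word is a convex combination of the value vectors of all words.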

3.4 Fusion Layer

The purpose of the fusion layer is to fuse the low-level semantic information V and the high-level semantic information U. We propose a heuristic fusion function that learns the fusion weights through a neural network to achieve deep fusion. We fuse V and U to obtain the text representation \(L=fusion(U, V)\in {{R}^{n*2d}}\), where the fusion function is defined as shown in Eq. 10:

where \({{W}_{1}}\) and \({{W}_{2}}\) are weight matrices, and g is a gating mechanism to control the weight of the intermediate vectors in the output vector. In this paper, x refers to U and y refers to V.
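One plausible shape for such a gated fusion is sketched below. It is an assumption, not the paper's Eq. 10: the sketch builds the heuristic features \([x; y; x\odot y; x-y]\), derives a candidate vector with \({{W}_{1}}\) and a gate g with \({{W}_{2}}\), and lets g decide how much of the candidate replaces x. Biases are omitted and the feature set is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fusion(x, y, W1, W2):
    """x, y: (n, 2d) representations; W1, W2: (8d, 2d). Returns (n, 2d)."""
    # Heuristic matching features (assumed): concatenation, product, difference.
    feats = np.concatenate([x, y, x * y, x - y], axis=1)   # (n, 8d)
    candidate = np.tanh(feats @ W1)                        # intermediate vector
    g = sigmoid(feats @ W2)                                # gate in (0, 1)
    # The gate controls the weight of the intermediate vector in the output.
    return g * candidate + (1.0 - g) * x
```

Calling `fusion(U, V, W1, W2)` with U and V of shape (n, 2d) returns a matrix of the same shape, which matches the stated dimension of L.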

3.5 Output Layer

The purpose of the output layer is to output the results. In this paper, we use a linear layer to get the results of sentence matching. The process is shown in Eq. 11.

where both W and b are trainable parameters. Z is the vector after splicing its first and last vectors.
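The output step can be illustrated with a short sketch. It assumes that the first and last vectors are the first and last time steps of the fused representation L, and that a softmax follows the linear layer; neither detail is confirmed by the text, and the function name `predict` is hypothetical.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D vector of logits.
    e = np.exp(z - z.max())
    return e / e.sum()

def predict(L, W, b):
    """L: (n, 2d) fused representation; W: (K, 4d); b: (K,). Returns (K,) probabilities."""
    z = np.concatenate([L[0], L[-1]])   # first and last time steps, (4d,)
    logits = W @ z + b                  # linear layer
    return softmax(logits)              # assumed normalization over the K classes
```

For SNLI, K would be 3 (entailment, contradiction, neutral), and for Quora K would be 2 (duplicate or non-duplicate).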

4 Experimental Results and Analysis

In this section, we validate our model on two datasets from two tasks. We first present some details of the model implementation, and secondly, we show the experimental results on the dataset. Finally, we analyze the experimental results.

4.1 Experimental Details

Loss Function. In this paper, we use the cross-entropy loss function, as shown in Eq. 12.

where N is the number of samples, K is the total number of categories and \({{\hat{y}}^{(i, k)}}\) is the true label of the i-th sample.
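As a worked illustration, the standard cross-entropy over N samples and K categories can be computed as below. Averaging over N and the small constant added inside the logarithm are assumptions of this sketch; `probs` and `labels` are hypothetical names for the predicted distributions and the integer class labels.

```python
import numpy as np

def cross_entropy(probs, labels):
    """probs: (N, K) predicted class probabilities; labels: (N,) integer classes."""
    N, K = probs.shape
    one_hot = np.eye(K)[labels]            # true labels as one-hot rows, (N, K)
    # Sum over categories, average over samples; 1e-12 guards against log(0).
    return -np.sum(one_hot * np.log(probs + 1e-12)) / N
```

A uniform prediction over three classes gives a loss of about log 3, while a prediction that puts all probability on the true class gives a loss close to zero.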

Dataset. In this paper, we use the natural language inference dataset SNLI and the paraphrase recognition dataset Quora to validate our model. The SNLI dataset contains 570K manually labeled, class-balanced sentence pairs. The Quora question pair dataset contains over 400K question pairs, each with a binary annotation, with 1 denoting a duplicate and 0 a non-duplicate. The statistics of the SNLI and Quora data are shown in Table 1.

Parameter Settings. The experiments are conducted on an RTX 5000 graphics card with 16 GB of video memory. The operating system is Ubuntu 20.04, the development language is Python 3.7, and the deep learning framework is PyTorch 1.8.

During model training, 300-dimensional GloVe word vectors are used for word embedding, and the maximum sentence length is set to 300 and 50 words on the SNLI and Quora datasets, respectively. The specific hyperparameter settings are shown in Table 2.

4.2 Experimental Results and Analysis

We compare the results of our deep interaction and fusion model on the SNLI dataset with other published models, using accuracy as the evaluation metric. The results are shown in Table 3. As can be seen from Table 3, our model achieves an accuracy of 0.871 on the SNLI dataset, which is higher than the other listed models. It improves on the LSTM by 0.065 and on the Star-Transformer model by 0.004.

We also conduct experiments on the Quora dataset, again with accuracy as the evaluation metric. The results are shown in Table 4. As can be seen from Table 4, the accuracy of our method on the test set is 0.868, an improvement of 0.054 over the traditional LSTM model and of 0.004 over the enhanced sequential inference model ESIM. These results are competitive with current popular deep learning methods. Our model achieves relatively good results on both tasks, which illustrates its effectiveness.

4.3 Ablation Experiments

To explore the role played by each module, we conduct ablation experiments on the SNLI dataset. In the setting without the fusion function, the low-level semantic information is directly concatenated with the high-level semantic information. The experimental results are shown in Table 5.

We first verify the effectiveness of character embedding. When we remove the character embedding, the accuracy drops by 1.5 percentage points, showing that character embedding plays an important role in the performance of the model.

In addition, we verify the effectiveness of the semantic information and fusion modules. When we remove the low-level semantic information and the high-level semantic information from the original model, the accuracy drops by 1.2 and 7.6 percentage points, respectively. When we remove the fusion function, the accuracy drops by about 1.0 percentage point. This shows that both kinds of semantic information and the fusion function improve the accuracy of the model, with the high-level semantic information contributing the most.

Finally, we verify the effectiveness of each attention mechanism. We remove the attention from P to H, the attention from H to P, and the self-attention module in turn. The accuracy decreases by 2.5, 0.9, and 1.3 percentage points, respectively. This shows that all of the attention mechanisms improve the performance of the model, with the P to H attention contributing the most.

The ablation experiments show that each component of our model plays an important role, especially the high-level semantic information module and the P to H attention module, which have a greater impact on the performance of the model. Meanwhile, the character embedding and fusion function also play an important role in our model.

5 Conclusion

We investigate natural language sentence matching methods and propose an effective deep interaction and fusion model for sentence matching. Our model first uses the bi-directional attention from machine reading comprehension models together with self-attention to obtain high-level semantic information. Then, we use a heuristic fusion function to fuse the semantic information obtained. Finally, we use a linear layer to produce the sentence matching result. We conducted experiments on the SNLI and Quora datasets. The experimental results show that the proposed model achieves good results on both tasks. We find that the proposed interaction module and fusion module play the dominant role and have a great impact on the model. However, our model is not as powerful as pre-trained models in terms of feature extraction, and it lacks external knowledge. Future work will focus on two points: 1) using more powerful feature extractors, such as the BERT pre-trained model, as text feature extractors; 2) introducing external knowledge. For example, WordNet, an external knowledge base, contains many sets of synonyms; for each input word, its synonyms can be retrieved from WordNet and embedded in the word vector representation of the word to further improve the performance of the model.

References

Borges, L., Martins, B., Calado, P.: Combining similarity features and deep representation learning for stance detection in the context of checking fake news. J. Data Inf. Qual. (JDIQ) 11(3), 1–26 (2019)

Bowman, S., Potts, C., Manning, C.D.: Recursive neural networks can learn logical semantics. In: Proceedings of the 3rd Workshop on Continuous Vector Space Models and their Compositionality, pp. 12–21 (2015)

Bowman, S.R., Angeli, G., Potts, C., Manning, C.D.: A large annotated corpus for learning natural language inference. In: Conference on Empirical Methods in Natural Language Processing, EMNLP 2015, pp. 632–642. Association for Computational Linguistics (ACL) (2015)

Bowman, S.R., Gupta, R., Gauthier, J., Manning, C.D., Rastogi, A., Potts, C.: A fast unified model for parsing and sentence understanding. In: 54th Annual Meeting of the Association for Computational Linguistics, ACL 2016, pp. 1466–1477. Association for Computational Linguistics (ACL) (2016)

Chen, Q., Zhu, X., Ling, Z.H., Wei, S., Jiang, H., Inkpen, D.: Enhanced LSTM for natural language inference. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1657–1668 (2017)

Dagan, I., Glickman, O.: Probabilistic textual entailment: generic applied modeling of language variability. Learn. Methods Text Underst. Min. 2004, 26–29 (2004)

Dong-hong, H.: Convolutional network-based semantic similarity model of sentences. J. South China Univ. Technol. (Nat. Sci.) 45(3), 68–75 (2017)

Guo, Q., Qiu, X., Liu, P., Shao, Y., Xue, X., Zhang, Z.: Star-transformer. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 1315–1325 (2019)

Han, K., et al.: Delta: a deep learning based language technology platform. arXiv preprint arXiv:1908.01853 (2019)

He, H., Lin, J.: Pairwise word interaction modeling with deep neural networks for semantic similarity measurement. In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 937–948 (2016)

Hu, C., Wu, C., Yang, Y.: Extended S-LSTM based textual entailment recognition. J. Comput. Res. Dev. 57(7), 1481–1489 (2020)

Jin, J., Zhao, Y., Cui, R.: Research on multi-granularity ensemble learning based on Korean. In: The 2nd International Conference on Computing and Data Science, pp. 1–6 (2021)

Li, F., Zhao, Y., Yang, F., Cui, R.: Incorporating translation quality estimation into Chinese-Korean neural machine translation. In: Li, S., et al. (eds.) CCL 2021. LNCS (LNAI), vol. 12869, pp. 45–57. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-84186-7_4

Mu, N., Yao, Z., Gholami, A., Keutzer, K., Mahoney, M.: Parameter re-initialization through cyclical batch size schedules. arXiv preprint arXiv:1812.01216 (2018)

Ren, H., Sheng, Y., Feng, W.: Recognizing textual entailment based on knowledge topic models. J. Chin. Inf. Process. 29(6), 119–127 (2015)

Seo, M., Kembhavi, A., Farhadi, A., Hajishirzi, H.: Bidirectional attention flow for machine comprehension. arXiv preprint arXiv:1611.01603 (2016)

Shen, D., et al.: Baseline needs more love: on simple word-embedding-based models and associated pooling mechanisms. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 440–450 (2018)

Sultan, M.A., Bethard, S., Sumner, T.: Feature-rich two-stage logistic regression for monolingual alignment. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pp. 949–959 (2015)

Vaswani, A., et al.: Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 6000–6010 (2017)

Wang, S., Jiang, J.: Learning natural language inference with LSTM. In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 1442–1451 (2016)

Wang, Z., Hamza, W., Florian, R.: Bilateral multi-perspective matching for natural language sentences. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence, pp. 4144–4150 (2017)

Yang, D., Ke, X., Yu, Q.: A question similarity calculation method based on RCNN. J. Comput. Eng. Sci. 43(6), 1076–1080 (2021)

Yang, F., Zhao, Y., Cui, R.: Recognition method of important words in Korean text based on reinforcement learning. In: Sun, M., Li, S., Zhang, Y., Liu, Y., He, S., Rao, G. (eds.) CCL 2020. LNCS (LNAI), vol. 12522, pp. 261–272. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-63031-7_19

Zhao, Q., Du, Y., Lu, T.: Algorithm of text similarity analysis based on capsule-BIGRU. J. Comput. Eng. Appl. 57(15), 171–177 (2021)

Zilly, J.G., Srivastava, R.K., Koutník, J., Schmidhuber, J.: Recurrent highway networks. In: International Conference on Machine Learning, pp. 4189–4198. PMLR (2017)

Acknowledgements

This work is supported by the National Natural Science Foundation of China [grant number 62162062], the State Language Commission of China under Grant No. YB135-76, the scientific research project for building world top discipline of Foreign Languages and Literatures of Yanbian University under Grant No. 18YLPY13, the Doctor Starting Grants of Yanbian University [2020-16], and the school-enterprise cooperation project of Yanbian University [2020-15].


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jiang, K., Zhao, Y., Cui, R. (2022). DIFM: An Effective Deep Interaction and Fusion Model for Sentence Matching. In: Sun, M., et al. Chinese Computational Linguistics. CCL 2022. Lecture Notes in Computer Science(), vol 13603. Springer, Cham. https://doi.org/10.1007/978-3-031-18315-7_2


DOI: https://doi.org/10.1007/978-3-031-18315-7_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-18314-0

Online ISBN: 978-3-031-18315-7

eBook Packages: Computer Science, Computer Science (R0)