Abstract

The Rado graph, also known as the random graph G(∞, p), is a classical limit object for finite graphs. We study natural ball walks as a way of understanding the geometry of this graph. For the walk started at i, we show that order \(\log _2^*i\) steps are sufficient, and for infinitely many i, necessary for convergence to stationarity. The proof involves an application of Hardy’s inequality for trees.

Dedicated to our friend and coauthor Harold Widom.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

Mathematics Subject Classification (2020)

1 Introduction

The Rado graph R is a natural limit of the set of all finite graphs (Fraissé limit, see Sect. 2.1). In Rado’s construction, the vertex set is \(\mathbb {N} = \{0,1,2,\ldots \}\). There is an undirected edge from i to j if i < j and the i th binary digit of j is a one (where the 0th digit is the first digit from the right). Thus, 0 is connected to all odd numbers, 1 is connected to 0 and all j which are 2 or 3 (mod 4) and so on. There are many alternative constructions. For p ∈ (0, 1), connecting i and j with probability p gives the Erdős–Rényi graph G(∞, p), which is (almost surely) isomorphic to R. Further constructions are in Sect. 2.1.

Let \((Q(j))_{0\leqslant j<\infty }\) be a positive probability on \(\mathbb {N}\) (so, Q(j) > 0 for all j, and \(\sum _{j=0}^\infty Q(j)=1\)). We study a ‘ball walk’ on R generated by Q: from \(i\in \mathbb {N}\), pick j ∈ N(i) with probability proportional to Q(j), where N(i) = {j : j ∼ i} is the set of neighbors of i in R. Thus, the probability of moving from i to j in one step is

As explained below, this walk is connected, aperiodic and reversible, with stationary distribution

where Z is the normalizing constant.

It is natural to study the mixing time—the rate of convergence to stationarity. The following result shows that convergence is extremely rapid. Starting at \(i\in \mathbb {N}\), order \(\log _2^* i\) steps suffice, and for infinitely many i, are needed.

Theorem 1

Let Q(j) = 2−(j+1), \(0\leqslant j<\infty \) . For K(i, j) and π defined at (1) and (2) on the Rado graph R,

-

1.

For universal A, B > 0, we have for all \(i\in \mathbb {N}\), \(\ell \geqslant 1\),

$$\displaystyle \begin{aligned} \|K_i^\ell - \pi\| \leqslant A e^{\log_2^*i} e^{-B\ell}. \end{aligned}$$ -

2.

For universal C > 0, if \(2^{(k)} = 2^{2^{\cdot ^{\cdot ^{\cdot ^{2}}}}}\) is the tower of 2’s of height k,

$$\displaystyle \begin{aligned} \|K^\ell_{2^{(k)}} - \pi\| \geqslant C \end{aligned}$$for all \(\ell \leqslant k\) . Here \(\|K_i^\ell - \pi \| = \frac {1}{2}\sum _{j=0}^\infty |K^\ell (i,j) - \pi (j)|\) is the total variation distance and \(\log _2^* i \) is the number of times log2 needs to be applied, starting from i, to get a result \(\leqslant 1\).

The proofs allow for some variation in the measure Q. They also work for the G(∞, p) model of R, though some modification is needed since then K and π are random.

Theorem 1 answers a question in Diaconis and Malliaris [8], who proved the lower bound. Most Markov chains on countable graphs restrict attention to locally finite graphs [25]. For Cayley graphs, Bendikov and Saloff-Coste [1] begin the study of more general transitions and point out how few tools are available. See also [12, 20]. Studying the geometry of a space (here R) by studying the properties of the Laplacian (here I − K) is a classical pursuit (“Can you hear the shape of a drum?”)—see [16].

Section 2 gives background on the Rado graph, Markov chains, ball walks, and Hardy’s inequalities. Section 3 gives preliminaries on the behavior of the neighborhoods of the G(∞, p) model. The lower bound in Theorem 1 is proved in Sect. 4. Both Sects. 3 and 4 give insight into the geometry of R. The upper bound in Theorem 1 is proved by proving that the Markov chain K has a spectral gap. Usually, a spectral gap alone does not give sharp rates of convergence. Here, for any start i, we show the chain is in a neighborhood of 0 after order \(\log _2^* i\) steps. Then the spectral gap shows convergence in a bounded number of further steps. This argument works for both models of R. It is given in Sect. 5.

The spectral gap for the G(∞, p) model is proved in Sect. 6 using a version of Cheeger’s inequality for trees. For Rado’s binary model, the spectral gap is proved by a novel version of Hardy’s inequality for trees in Sect. 7. This is the first probabilistic application of this technique, which we hope will be useful more generally. There are two appendices containing technical details for the needed versions of Cheeger’s and Hardy’s inequalities.

2 Background on R, Markov Chains, and Hardy’s Inequalities

2.1 The Rado Graph

A definitive survey on the Rado graph (with full proofs) is in Peter Cameron’s fine article [6]. We have also found the Wikipedia entry on the Rado graph and Cameron’s follow-up paper [7] useful.

In Rado’s model, the graph R has vertex set \(\mathbb {N} = \{0,1,2,\ldots \}\) and an undirected edge from i to j if i < j and the i th digit of j is a one. There are many other constructions. The vertex set can be taken as the prime numbers that are 1 (mod 4) with an edge from p to q if the Legendre symbol \((\frac {p}{q}) = 1\). In [8], the graph appears as an induced subgraph of the commuting graph of the group U(∞, q)—infinite upper-triangular matrices with ones on the diagonal and entries in \(\mathbb {F}_q\). The vertices are points of U(∞, q). There is an edge from x to y if and only if the commutator x −1 y −1 xy is zero. The infinite Erdős–Rényi graphs G(∞, p) are almost surely isomorphic to R for all p, 0 < p < 1.

The graph R has a host of fascinating properties:

-

It is stable in the sense that deleting any finite number of vertices or edges yields an isomorphic graph. So does taking the complement.

-

It contains all finite or countable graphs as induced subgraphs. Thus, the (countable) empty graph and complete graphs both appear as induced subgraphs.

-

The diameter of R is two—consider any \(i\ne j \in \mathbb {N}\) and let k be a binary number with ones in positions i and j and zero elsewhere. Then i ∼ k ∼ j.

-

Each vertex is connected to “half” of the other vertices: 0 is connected to all the odd vertices, 1 to 0 and all numbers congruent to 2 or 3 (mod 4), and so on.

-

R is highly symmetric: Any automorphism between two induced subgraphs can be extended to all of R (this is called homogeneity). The automorphism group has the cardinality of the continuum.

-

R is the “limit” if the collection of all finite graphs (Fraissé limit). Let us spell this out. A relational structure is a set with a finite collection of relations (we are working in first order logic without constants or functions). For example, \(\mathbb {Q}\) with x < y is a relational structure. A graph is a set with one symmetric relation. The idea of a “relational sub-structure” clearly makes sense. A class \(\mathcal {C}\) of structures has the amalgamation property if for any \(A, B_1, B_2\in \mathcal {C}\) with embeddings

and

and  , there exists \(C\in \mathcal {C}\) and embeddings

, there exists \(C\in \mathcal {C}\) and embeddings  and

and  such that g

1

f

1 = g

2

f

2. A countable relational structure M is homogeneous if any isomorphism between finite substructures can be extended to an automorphism of M. Graphs and \(\mathbb {Q}\) are homogeneous relational structures. A class \(\mathcal {C}\) has the joint embedding property if for any \(A,B \in \mathcal {C}\) there is a \(C \in \mathcal {C}\) so that A and B are embeddable in C.

such that g

1

f

1 = g

2

f

2. A countable relational structure M is homogeneous if any isomorphism between finite substructures can be extended to an automorphism of M. Graphs and \(\mathbb {Q}\) are homogeneous relational structures. A class \(\mathcal {C}\) has the joint embedding property if for any \(A,B \in \mathcal {C}\) there is a \(C \in \mathcal {C}\) so that A and B are embeddable in C.Theorem 2 (Fraissé)

Let \(\mathcal {C}\) be a countable class of finite structures with the joint embedding property and closed under ‘induced’ isomorphism with amalgamation. Then there exists a unique countable homogeneous \(\mathcal {M}\) with \(\mathcal {C}\) as induced substructures.

The rationals \(\mathbb {Q}\) are the Fraissé limit of finite ordered sets. The Rado graph R is the Fraissé limit of finite graphs. We have (several times!) been told “for a model theorist, the Rado graph is just as interesting as the rationals”.

There are many further, fascinating properties of R; see [6].

2.2 Markov Chains

A transition matrix K(i, j), \(0\leqslant i,j<\infty \), \(K(i,j)\geqslant 0\), \(\sum _{j=0}^\infty K(i,j)=1\) for all i, \(0\leqslant i<\infty \), generates a Markov chain through its powers

A probability distribution π(i), \(0\leqslant i<\infty \), is reversible for K if

Example

With definitions (1), (2) on the Rado graph, if i ∼ j,

(Both sides are zero if i≁j.)

In the above example, we think of K(i, j) as a ‘ball walk’: From i, pick a neighbor j with probability proportional to Q(j) and move to j. We initially found the neat reversible measure surprising. Indeed, we and a generation of others thought that ball walks would have Q as a stationary distribution. Yuval Peres points out that, given a probability Q(j) on the vertices, assigning symmetric weight Q(i)Q(j) to i ∼ j gives this K for the weighted local walk. A double ball walk—“from i, choose a neighbor j with probability proportional to Q(j), and from j, choose a neighbor k with probability proportional to Q(k)∕Q(N(k))”—results in a reversible Markov chain with Q as reversing measure. Note that these double ball walks don’t require knowledge of normalizing constants. All of this suggests ball walks as reasonable objects to study.

Reversibility (3) shows that π is a stationary distribution for K:

In our setting, since the Rado graph has diameter 2, the walk is connected. It is easy to see that it is aperiodic. Thus, the π in (2) is the unique stationary distribution. Now, the fundamental theorem of Markov chain theory shows, for every starting state i, K ℓ(i, j) → π(j) as ℓ →∞, and indeed,

Reversible Markov chains have real spectrum. Say that (K, π) has a spectral gap if there is A > 0 such that for every f ∈ ℓ 2(π),

where \(\overline {f} = \sum _{i=0}^\infty f(i)\pi (i)\). (Then the gap is at least 1∕A.) For chains with a spectral gap, for any i,

Background on Markov chains, particularly rates of convergence, can be found in the readable book of Levin and Peres [19]. For the analytic part of the theory, particularly (4) and (5), and many refinements, we recommend [23].

There has been a healthy development in Markov chain circles around the theme ‘How does a Markov chain on a random graph behave?’. One motivation being, ‘What does a typical convergence rate look like?’. The graphs can be restricted in various natural ways (Cayley graphs, regular graphs of fixed degree or fixed average degree, etc.). A survey of by now classical work is Hildebrand’s survey of ‘random-random walks’ [14]. Recent work by Bordenave and coauthors can be found from [4, 5]. For sparse Erdős–Rényi graphs, there is remarkable work on the walk restricted to the giant component. See [22], [11] and [3].

It is worth contrasting these works with the present efforts. The above results pick a neighbor uniformly at random. In the present paper, the ball walk drives the walk back towards zero. The papers above are all on finite graphs. The Markov chain of Theorem 1 makes perfect sense on finite graphs. The statements and proofs go through (with small changes) to show that order \(\log _2^* i\) steps are necessary and sufficient. (For the uniform walk on G(n, 1∕2), a bounded number of steps suffice from most initial states, but there are states from which \(\log _2^*n \) steps are needed.)

2.3 Hardy’s Inequalities

A key part of the proof of Theorem 1 applies Hardy’s inequalities for trees to prove a Poincaré inequality (Cf. (4)) and hence a bound on the spectral gap. Despite a large expository literature, Hardy’s inequalities remain little known among probabilists. Our application can be read without this expository section but we hope that some readers find it useful. Extensive further references, trying to bridge the gap between probabilists and analysts, is in [17].

Start with a discrete form of Hardy’s original inequality [13, pp. 239–243]. This says that if \(a_n\geqslant 0\), A n = a 1 + ⋯ + a n, then

and the constant 4 is sharp. Analysts say that “the Hardy operator taking {a n} to {A n∕n} is bounded from ℓ 2 to ℓ 2”. Later writers showed how to put weights in. If μ(n) and ν(n) are positive functions, one aims for

for an explicit A depending on μ(n) and ν(n). If μ(n) = 1∕n 2 and ν(n) = 1, this gives the original Hardy inequality. To make the transition to a probabilistic application, take a(n) = g(n) − g(n − 1) for g in ℓ 2. The inequality becomes

Consider a ‘birth and death chain’ which transits from j to j + 1 with probability b(j) and from j to j − 1 with probability d(j). Suppose that this has stationary distribution μ(j) and that ∑j g(j)μ(j) = 0. Set ν(j) = μ(j)d(j). Then (6) becomes (following simple manipulations)

with K(j, k) the transition matrix of the birth and death chain. This gives a Poincaré inequality and spectral gap estimate. A crucial ingredient for applying this program is that the constant A must be explicit and manageable. For birth-death chains, this is indeed the case. See [21] or the applications in [9]. The transition from (6) to (7) leans on the one-dimensional setup of birth-death chains. While there is work on Hardy’s inequalities in higher dimensions, it is much more complex; in particular, useful forms of good constants A seem out of reach. In [21], Miclo has shown that for a general Markov transition matrix K(i, j), a spanning tree in the graph underlying K can be found. There is a useful version of Hardy’s inequality for trees due to Evans, Harris and Pick [10]. This is the approach developed in Sect. 7 below which gives further background and details.

Is approximation by trees good enough? There is some hope that the best tree is good enough (see [2]). In the present application, the tree chosen gives the needed result.

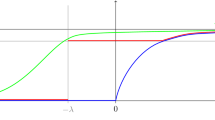

2.4 The \(\log ^*\) Function

Take any a > 1. The following is a careful definition of \(\log _a^*x\) for \(x\geqslant 0\). First, an easy verification shows that the map \(x\mapsto (\log x)/x\) on (0, ∞) is unimodal, with a unique maximum at x = e (where its value is 1∕e), and decaying to −∞ as x → 0 and to 0 as x →∞. Thus, if a > e 1∕e, then for any x > 0,

Since loga is a continuous map, this shows that if we start with any x > 0, iterative applications of loga will eventually lead to a point in (0, a) (because there are no fixed points of loga above that, by the above inequality), and then another application of loga will yield a negative number. This allows us to define \(\log _a^*x\) as the minimum number of applications of loga, starting from x, that gets us a nonpositive result.

If \(a \leqslant e^{1/e}\), the situation is a bit more complicated. Here, \(\log a \leqslant 1/e\), which is the maximum value of the unimodal map \(x\mapsto (\log x)/x\). This implies that there exist exactly two points \(0 < y_a\leqslant x_a\) that are fixed points of loga (with y a = x a if a = e 1∕e). Moreover, loga x < x if x∉[y a, x a], and \(\log _a x\geqslant x\) if x ∈ [y a, x a]. Thus, the previous definition does not work. Instead, we define \(\log _a^*x\) to be the minimum number of applications of loga, starting from x, that leads us to a result \(\leqslant x_a\). In both cases, defining \(\log _a^*0=0\) is consistent with the conventions. Note that \(\log _a^* x\geqslant 0\) for all \(x\geqslant 0\).

3 The Geometry of the Random Model

Throughout this section the graph is G(∞, 1∕2) — an Erdős–Rényi graph on \(\mathbb {N}=\{0,1,2,\ldots \}\) with probability 1∕2 for each possible edge. From here on, we will use the notation \(\mathbb {N}_+\) to denote the set {1, 2, …} of strictly positive integers. Let Q(x) = 2−(x+1) for \(x \in \mathbb {N}\). The transition matrix

and its stationary distribution π(x) = Z −1 Q(x)Q(N(x)) are thus random variables. Note that N(x), the neighborhood of x, is random. The main result of this section shows that this graph, with vertices weighted by Q(x), has its geometry controlled by a tree rooted at 0. This tree will appear in both lower and upper bounds on the mixing time for the random model.

To describe things, let \(p(x)=\min N(x)\) (p is for ‘parent’, not to be confused with the edge probability p in G(∞, p)). We need some preliminaries about p(x).

Lemma 1

Let \(\mathcal {B}\) be the event that for all \(x\in \mathbb {N}_+\) , p(x) < x. Then we have that \(\mathbb {P}(\mathcal {B})\geqslant 1/4\).

Proof

Denote  , and for any \(e\in \overline E\), consider

, and for any \(e\in \overline E\), consider  , where E is the set of edges in G(∞, 1∕2), so that \((B_e)_{e\in \overline E}\) is a family of independent Bernoulli variables of parameter 1∕2.

, where E is the set of edges in G(∞, 1∕2), so that \((B_e)_{e\in \overline E}\) is a family of independent Bernoulli variables of parameter 1∕2.

For \(x\in \mathbb {N}_+\), define A x the event that x is not linked in \(\mathcal {G}\) to a smaller vertex. Namely, we have formally

where  . Note that the family \((A_x)_{x\in \mathbb {N}_+}\) is independent, and in particular, its events are pairwise independent. We are thus in position to apply Kounias–Hunter–Worsley bounds [15, 18, 26] (see also the survey [24]), to see that for any \(n\in \mathbb {N}_+\),

. Note that the family \((A_x)_{x\in \mathbb {N}_+}\) is independent, and in particular, its events are pairwise independent. We are thus in position to apply Kounias–Hunter–Worsley bounds [15, 18, 26] (see also the survey [24]), to see that for any \(n\in \mathbb {N}_+\),

where we used that \( \mathbb {P}(A_1)\geqslant \mathbb {P}(A_2)\geqslant \cdots \geqslant \mathbb {P}(A_n)\), which holds because

We deduce that

Letting n tends to infinity, we get \( \mathbb {P}\!\left (\bigcup _{x\in \mathbb {N}_+} A_x\right )\leqslant \frac 34\). To conclude, note that \( \mathcal {B}^{\mathrm {c}}=\bigcup _{x\in \mathbb {N}_+} A_x\). □

Remark 1

Assume that instead of 1∕2, the edges of \(\overline E\) belong to E with probability p ∈ (0, 1) (still independently), the corresponding notions receive p in index. The above computations show \( \mathbb {P}_p(\mathcal {B})\geqslant 1- (2-3p +p^2)\wedge 1\), so that \(\mathbb {P}_p(\mathcal {B})\) goes to 1 as p goes to 1, but this bounds provides no information for \(p\in (0,(3-\sqrt {5})/2]\).

In fact the above observation shows that the Kounias–Hunter–Worsley bound is not optimal, at least for small p > 0. So let us give another computation of \(\mathbb {P}_p(\mathcal {B})\):

Lemma 2

Consider the situation described in Remark 1 , with p ∈ (0, 1). We have

where p(n) is the number of partitions of n. In particular \(\mathbb {P}(\mathcal {B})>0\) for all p ∈ (0, 1).

Proof

Indeed, we have \( \mathcal {B}=\bigcap _{x\in \mathbb {N}_+} A_x^{\mathrm {c}}\), so that by independence of the A x, for \(x\in \mathbb {N}_+\),

Let \(\mathcal {N}\) be the set of sequences of integers \((n_l)_{l\in \mathbb {N}_+}\) with all but finitely many elements equal to zero. Applying the distributive law to the above expression, we have

where p(n) is the number of ways to write n as \(\sum _{x\in \mathbb {N}_+} xn_x\), with \((n_l)_{l\in \mathbb {N}_+}\in \mathcal {N}\). □

Consider the set of edges

and the corresponding graph  . Under \(\mathcal {B}\), it is clear that \(\mathcal {T}\) is a tree. But this is always true:

. Under \(\mathcal {B}\), it is clear that \(\mathcal {T}\) is a tree. But this is always true:

Lemma 3

The graph \(\mathcal {T}\) is a tree.

Proof

The argument is by contradiction. Assume that \(\mathcal {T}\) contains a cycle, say \((x_l)_{l\in \mathbb {Z}_n}\) with \(n\geqslant 3\). Let us direct the a priori unoriented edges {x l, x l+1}, for \(l\in \mathbb {Z}_n\), by putting an arrow from x l to x l+1 (respectively from x l+1 to x l) if p(x l) = x l+1 (resp. p(x l+1) = x l). Note that we either have

because otherwise there would exist \(l\in \mathbb {Z}_n\) with two arrows exiting from x l, a contradiction. Up to reindexing \((x_l)_{l\in \mathbb {Z}_n}\) as \((x_{-l})_{l\in \mathbb {Z}_n}\), we can assume that (8) holds.

Fix some \(l\in \mathbb {Z}_n\). Since p(x l) = x l+1, we have x l ∈ N(x l+1), so \(x_{l+2}=p(x_{l+1})\leqslant x_l\). Due to the fact that x l ≠ x l+2 (recall that \(n\geqslant 3\)), we get x l+2 < x l. Starting from x 0 and iterating this relation (in a minimal way, n∕2 times if n is even, or n times if n is odd), we obtain a contradiction: x 0 < x 0. Thus, \(\mathcal {T}\) must be a tree. □

Let us come back to the case where p = 1∕2. The following result gives an idea of how far p(x) is from x, for \(x\in \mathbb {N}_+\).

Lemma 4

Almost surely, there exist only finitely many \(x\in \mathbb {N}_+\) such that p(x) > 2log2(1 + x). In particular, a.s. there exists a (random) finite \(C\geqslant 2\) such that

Proof

The first assertion follows from the Borel–Cantelli lemma, as follows. For any \(x\in \mathbb {N}_+\), consider the event

Denoting ⌊⋅⌋ the the integer part, we compute

Having shown that a.s. there exists only a finite number of integers \(x\in \mathbb {N}_+\) satisfying p(x) > 2log2(1 + x), denote these points as x

1, …, x

N, with \(N\in \mathbb {N}\). To get the second assertion, it is sufficient to take  , with the convention that

, with the convention that  if N = 0. □

if N = 0. □

4 The Lower Bound

The lower bound in Theorem 1, showing that order \(\log _2^*i\) steps are necessary for infinitely many i is proved in [8] for the binary model of the Rado graph and we refer there for the proof. A different argument is needed for the G(∞, 1∕2) model. This section gives the details (see Theorem 3 below).

Let μ be the stationary distribution of our random walk on G(∞, 1∕2) (with Q(j) = 2−(j+1), as in Theorem 1), given a realization of the graph. Note that μ is random. For each \(x\in \mathbb {N}\), let τ x be the mixing time of the walk starting from x, that is, the smallest n such that the law of the walk at time n, starting from x, has total variation distance \(\leqslant 1/4\) from μ. Note that the τ x’s are also random.

Theorem 3

Let τ x be as above. Then with probability one,

Equivalently, with probability one, given any ε > 0, \(\tau _{x} \geqslant (1-\varepsilon )\log _{16}^* x\) for infinitely many x.

We need the following lemma.

Lemma 5

With probability one, there is an infinite sequence \(x_0< x_1<x_2<\cdots \in \mathbb {N}\) such that:

-

1.

For each i, x i+1 is connected to x i by an edge, but not connected by an edge to any other number in {0, 1, …, 2x i − 1}.

-

2.

For each i, \(2^{3x_i}\leqslant x_{i+1} \leqslant 2^{3x_i+1}-1\).

Proof

Define a sequence y 0, y 1, y 2, … inductively as follows. Let y 0 be an arbitrary element of \(\mathbb {N}\). For each i, let y i+1 be the smallest element in \(\{2^{3y_i}, 2^{3y_i}+1,\ldots ,2^{3y_i+1}-1\}\) that has an edge to y i, but to no other number in {0, 1, …, 2y i − 1}. If there exists no such number, then the process stops. Let A i be the event that y i exists. Note that A 0 ⊇ A 1 ⊇ A 2 ⊇⋯.

Let F(x) := 23x, G(x) := 23x+1 − 1, a 0 = b 0 = y 0, and for each \(i\geqslant 1\), let

Since \(2^{3y_i} \leqslant y_{i+1}\leqslant 2^{3y_i+1}-1\) for each i, it follows by induction that \(a_i\leqslant y_i\leqslant b_i\) for each i (if y i exists). Now fix some \(i\geqslant 1\). Since the event A i−1 is determined by y 1, …, y i−1, and these random variables can take only finitely many values (by the above paragraph), we can write A i−1 as a finite union of events of the form {y 1 = c 1, …, y i−1 = c i−1}, where \(c_1< c_2 <\cdots < c_{i-1}\in \mathbb {N}\).

Now note that for any c

1 < ⋯ < c

i−1, the event  happens if and only if {y

1 = c

1, …, y

i−1 = c

i−1} happens and there is some \(y\in \{2^{3c_{i-1}}, 2^{3c_{i-1}}+1,\ldots ,2^{3c_{i-1}+1}-1\}\) that has an edge to c

i−1, but to no other number in {0, …, 2c

i−1 − 1}. The event {y

1 = c

1, …, y

i−1 = c

i−1} is in \(\mathcal {F}_{c_{i-1}}\), where \(\mathcal {F}_x\) denotes the σ-algebra generated by the edges between all numbers in {0, …, x}. On the other hand, on the event {y

1 = c

1, …, y

i−1 = c

i−1}, it is not hard to see that

happens if and only if {y

1 = c

1, …, y

i−1 = c

i−1} happens and there is some \(y\in \{2^{3c_{i-1}}, 2^{3c_{i-1}}+1,\ldots ,2^{3c_{i-1}+1}-1\}\) that has an edge to c

i−1, but to no other number in {0, …, 2c

i−1 − 1}. The event {y

1 = c

1, …, y

i−1 = c

i−1} is in \(\mathcal {F}_{c_{i-1}}\), where \(\mathcal {F}_x\) denotes the σ-algebra generated by the edges between all numbers in {0, …, x}. On the other hand, on the event {y

1 = c

1, …, y

i−1 = c

i−1}, it is not hard to see that

Thus,

where in the last step we used the inequality \(0\leqslant 1-x\leqslant e^{-x}\) (which holds for all x ∈ [0, 1]). Note that the term inside the parentheses on the right side is an increasing function of c

i−1, and the maximum possible value of y

i−1 is b

i−1. Thus, summing both sides over all values of c

1, …, c

i−1 such that {y

1 = c

1, …, y

i−1 = c

i−1}⊆ A

i−1, we get  . Proceeding inductively, this gives

. Proceeding inductively, this gives

Taking i →∞, we get \(\mathbb {P}(B)\geqslant \prod _{k=0}^\infty (1-e^{-2^{b_k}})\), where \(B := \bigcap _{k=1}^\infty A_k\). Now recall that the event B, as well as the numbers b 0, b 1, …, are dependent on our choice of y 0. To emphasize this dependence, let us write them as B(y 0) and b k(y 0). Then by the above inequality,

where B(y 0)c denotes the complement of B(y 0). Due to the extremely rapid growth of b k(y 0) as k →∞, and the fact that b 0(y 0) = y 0, it is not hard to see that the right side is finite. Therefore, by the Borel–Cantelli lemma, B(y 0)c happens for only finitely many y 0 with probability one. In particular, with probability one, B(y 0) happens for some y 0. This completes the proof. □

Proof (Of Theorem 3)

Fix a realization of G(∞, 1∕2). Let x be so large that μ([x, ∞)) < 1∕10, and \( \prod _{k=1}^\infty (1-2^{-a_k(x)+1})\geqslant {9}/{10}\).

Let x 0, x 1, x 2, … be a sequence having the properties listed in Lemma 5 (which exists with probability one, by the lemma). Discarding some initial values if necessary, let us assume that x 0 > x. By the listed properties, it is obvious that x i →∞ as i →∞. Thus, to prove Theorem 3, it suffices to prove that

We will now deduce this from the properties of the sequence.

Suppose that our random walk starts from x i for some \(i\geqslant 1\). Since x i connects to x i−1 by an edge, but not to any other number in {0, …, 2x i−1 − 1}, we see that the probability of the walk landing up at x i−1 in the next step is at least

Proceeding by induction, this shows that the chance that the walk lands up at x 0 at step i is at least \( \prod _{k=1}^i(1- 2^{-x_k + 1}) \). Let μ i be the law of walk at step i (starting from x i, and conditional on the fixed realization of our random graph). Then by the above deduction and the facts that x 0 > x and \(x_k \geqslant a_k(x_0)\geqslant a_k(x)\), we have

By our choice of x, the last expression is bounded below by 9∕10. But μ([x, ∞)) < 1∕10. Thus, the total variation distance between μ i and μ is at least 8∕10. In particular, \(\tau _{x_i} > i\). Now, \(x_i \leqslant 2^{3x_{i-1} + 1}-1 \leqslant 16^{x_{i-1}}\), which shows that \(\log _{16}^*x_i \leqslant \log _{16}^* x_{i-1} + 1\). Proceeding inductively, we get \( \log _{16}^*x_i \leqslant i + \log _{16}^* x_0 \). Thus, \(\tau _{x_i} > \log _{16}^* x_i - \log _{16}^* x_0\). This proves (9). □

5 The Upper Bound (Assuming a Spectral Gap)

This section gives the upper bound for both the binary and random model of the Rado graph. Indeed, the proof works for a somewhat general class of graphs and more general base measures Q. The argument assumes that we have a spectral gap estimate. These are proved below in Sects. 6 and 7. We give this part of the argument first because, as with earlier sections, it gives a useful picture of the random graph.

Take any undirected graph on the nonnegative integers, with the property:

Let \(\{X_n\}_{n\geqslant 0}\) be the Markov chain on this graph, which, starting at state i, jumps to a neighbor j with probability proportional to Q(j) = 2−(j+1). The following is the main result of this section.

Theorem 4

Let K be the transition kernel of the Markov chain defined above. Suppose that K has a spectral gap. Let μ be the stationary distribution of the chain, and let a := e 1∕C . Then for any \(i\in \mathbb {N}\) and any \(\ell \geqslant 1\),

where C 1 and C 2 are positive constants that depend only the properties of the chain (and not on i or ℓ).

By Lemma 4, G(∞, 1∕2) satisfies the property (10) with probability one, for some C that may depend on the realization of the graph. The Rado graph also satisfies property (10), with \(K = 1/\log 2\). Thus, the random walk starting from j mixes in time \(\log _2^* j\) on the Rado graph, provided that it has a spectral gap. For G(∞, 1∕2), assuming that the walk has a spectral gap, the mixing time starting from j is \(\log _a^* j\), where a depends on the realization of the graph. The spectral gap for G(∞, 1∕2) will be proved in Sect. 6, and the spectral gap for the Rado graph will be established in Sect. 7. Therefore, this proves Theorem 1 and also the analogous result for G(∞, 1∕2).

Proof (Of Theorem 4)

Note that a > 1. Let \(Z_n := \log _a^* X_n\). We claim that there is some j 0 sufficiently large, and some positive constant c, such that

where \(\mathcal {F}_n\) is the σ-algebra generated by X 0, …, X n. (The proof is given below.) This implies that if we define the stopping time \(S := \min \{n\geqslant 0: X_n\leqslant j_0\}\), then \(\{e^{Z_{S\wedge n} + c(S\wedge n)}\}_{n\geqslant 0}\) is a supermartingale with respect to the filtration \(\{\mathcal {F}_{n}\}_{n\geqslant 0}\) (see details below). Moreover, it is nonnegative. Thus, if we start from the deterministic initial condition X 0 = j, then for any n,

But \(Z_{S\wedge n}\geqslant 0\). Thus, \(\mathbb {E}(e^{c(S\wedge n)}|X_0=j)\leqslant e^{\log _a^* j}\). Taking n →∞ and applying the monotone convergence theorem, we get

Now take any \(j\geqslant 1\) and \(n\geqslant 1\). Let μ be the stationary distribution, and let μ j,n be the law of X n when X 0 = j. Take any A ⊆{0, 1, …}. Then for any \(m\leqslant n\),

But

and

Thus, |μ j,n(A) − μ(A)| can be bounded above by

Now, if our Markov chain has a spectral gap, there exist constants C 1 and C 2 depending only on j 0 and the spectral gap, such that

for all \(0\leqslant i\leqslant m\) and \(0\leqslant l\leqslant j_0\). Using this bound and the bound (12) on \(\mathbb {E}(e^{cS}|X_0=j)\) obtained above, we get

Taking m = ⌈n∕2⌉, we get the desired result. □

Proof (Of inequality (11))

It suffices to take n = 0. Suppose that X 0 = j for some \(j\geqslant 1\). By assumption, there is a neighbor k of j such that \(k\leqslant K\log j = \log _a j\). Assuming that j is sufficiently large (depending on K), we have that for any \(l\leqslant k\),

Also, \(\log _a^*l \leqslant \log _a^* j\) for any \(l\leqslant j\). Thus,

Now for any \(l \geqslant k\),

Thus,

which is less than 1∕4 if j is sufficiently large (since \(k\leqslant \log _a^*j\)). Next, let L be the set of all l > k that are connected to j. Then

Since the map x↦x∕(2−k + x) is increasing, this shows that

Combining, we get that for sufficiently large j,

□

Proof (Of the Supermartingale Property)

Note that

The events {S = i} are \(\mathcal {F}_{n}\)-measurable for all \(0\leqslant i\leqslant n\), and so is the event {S > n}. Moreover, Z 0, …, Z n are also \(\mathcal {F}_{n}\)-measurable. Thus, the above expression shows that

But if S > n, then Z n > j 0, and therefore by (11),

Thus,

□

6 Spectral Gap for the Random Model

Our next goal is to show that the random reversible couple (K, π) admits a spectral gap. The arguments make use of the ideas and notation of Sect. 3. In particular, recall the event \(\mathcal {B} = \{p(x) < x \ \ \forall \ x\in \mathbb {N}_+\}\) from Lemma 1 and the random tree \(\mathcal {T}\) with edge set F from Lemma 3. The argument uses a version of Cheeger’s inequality for trees which is further developed in Appendix 1.

Proposition 1

On \(\mathcal {B}\) , there exists a random constant Λ > 0 such that

where in the r.h.s. \(\mathcal {E}\) is the Dirichlet form defined by

Taking into account that for any f ∈ L 2(π), the variance π[(f − π[f])2] of f with respect to π is bounded above by π[(f − f(0))2], the previous result is an immediate consequence of the following existence of positive first Dirichlet eigenvalue under \(\mathcal {B}\).

Proposition 2

On \(\mathcal {B}\) , there exists a random constant Λ > 0 such that

The proof of Proposition 2 is based on the pruning of \(\mathcal {G}\) into \(\mathcal {T}\) and then resorting to Cheeger’s inequalities for trees. More precisely, let us introduce the following notations. Define the Markov kernel \(K_{\mathcal {T}}\) as

Note that this kernel is reversible with respect to π. The corresponding Dirichlet form is given, for any f ∈ L 2(π), by

It will be convenient to work with  , where Z is the normalizing constant of π, as in equation (2). Define a nonnegative measure μ on \(\mathbb {N}_+\) as

, where Z is the normalizing constant of π, as in equation (2). Define a nonnegative measure μ on \(\mathbb {N}_+\) as

Proposition 3

On \(\mathcal {B}\) , there exists λ > 0 such that

This result immediately implies Proposition 2. Indeed, due on one hand to the inclusion  and on the other hand to the nature of Q, we have

and on the other hand to the nature of Q, we have

Thus for any f ∈ L 2(μ),

and thus, Proposition 2 holds with  .

.

The proof of Proposition 3 is based on a Dirichlet-variant of the Cheeger inequality (which is in fact slightly simpler than the classical one, see Appendix 1). For any \(A\subset \mathbb {N}_+\), define  . Endow \(\overline E\) with the measure ν induced by, for any \( \{x,y\}\in \overline E\),

. Endow \(\overline E\) with the measure ν induced by, for any \( \{x,y\}\in \overline E\),

Define the Dirichlet–Cheeger constant

where  . The proof of the traditional Markovian Cheeger’s inequality given in the lectures by Saloff-Coste [23] implies directly that the best constant λ in Proposition 3 satisfies \( \lambda \geqslant {\iota ^2}/{2}\). Thus it remains to check:

. The proof of the traditional Markovian Cheeger’s inequality given in the lectures by Saloff-Coste [23] implies directly that the best constant λ in Proposition 3 satisfies \( \lambda \geqslant {\iota ^2}/{2}\). Thus it remains to check:

Proposition 4

On \(\mathcal {B}\) , we have \(\iota \geqslant 1/2\) and in particular ι > 0.

Proof

Take any nonempty \(A\in \mathcal {A}\) and decompose it into its connected components with respect to \(\mathcal {T}\): \( A=\bigsqcup _{i\in \mathcal {I}} A_i\), where the index set \(\mathcal {I}\) is at most denumerable. Note that

where the second identity holds because there are no edges in F connecting two different A i’s. Thus, it follows that \( \iota = \inf _{A\in \widetilde {\mathcal {A}}}{\nu (\partial A)}/{\mu (A)}\), where \(\widetilde {\mathcal {A}}\) is the set of subsets of \(\mathcal {A}\) which are \(\mathcal {T}\)-connected.

Consider \(A\in \widetilde {\mathcal {A}}\), it has a smallest element \(a\in \mathbb {N}_+\) (since 0∉A). Let T a be the subtree of descendants of A in \(\mathcal {T}\) (i.e., the set of vertices from \(\mathbb {N}_+\) whose non-self-intersecting path to 0 passes through a). We have A ⊂ T a, and ∂A ⊃{a, p(a)} = ∂T a, and it follows that \( {\nu (\partial A)}/{\mu (A)}\geqslant {\nu (\partial T_a)}/{\mu (T_a)}\). We deduce that

On \(\mathcal {B}\), we have for any \(a\in \mathbb {N}_+\), on the one hand

and on the other hand

We get μ(T a) equals

It follows that \( \iota \geqslant 1/2\). □

Lemma 4 can now be used to see that the ball Markov chain on the random graph has a.s. a spectral gap. Indeed, we deduce from Lemma 4 that there exists a (random) vertex \(x_0\in \mathbb {N}\) such that for any x > x 0, p(x) < x. Consider

It follows that for any a > x 1, we have, for all ∀ x ∈ T a, p(x) < x. (To see this, take any path a 0, a 1, … in T a, starting at a 0 = a, so that p(a i) = a i−1 for each i. Let k be the first index such that \(a_{k} \geqslant a_{k+1}\), assuming that there exists such a k. Then \(a_{k+1}\leqslant x_0\), and so \(a_k = p(a_{k+1})\leqslant x_1\). But this is impossible, since \(a_0\leqslant a_k\) and a 0 > x 1.) In particular, we see that (17) and (18) hold for a > x 1. As a consequence,

By the finiteness of  , we also have

, we also have  . So, finally,

. So, finally,

which shows that G(∞, 1∕2) has a spectral gap a.s.

7 Spectral Gap for the Rado Graph

This section proves the needed spectral gap for the Rado graph. Here the graph has vertex set \(\mathbb {N}\) and an edge from i to j if i is less than j and the ith bit of j is a one. We treat carefully the case of a more general base measure, Q(x) = (1 − δ)δ x. As delta tends to 1, sampling from this Q is a better surrogate for “pick a neighboring vertex uniformly”. Since the normalization doesn’t enter, throughout take Q(x) = δ x. The heart of the argument is a discrete version of Hardy’s inequality for trees. This is developed below with full details in Appendix 2.

Consider the transition kernel K reversible with respect to π and associated to the measure Q given by  for all \(x\in \mathbb {N}\), where δ ∈ (0, 1) (instead of δ = 1∕2 as in the introduction, up to the normalization). Recall that

for all \(x\in \mathbb {N}\), where δ ∈ (0, 1) (instead of δ = 1∕2 as in the introduction, up to the normalization). Recall that

where N(x) is the set of neighbors of x induced by K and where Z > 0 is the normalizing constant. Here is the equivalent of Proposition 3:

Proposition 5

We have

This bound will be proved via Hardy’s inequalities. If we resort to Dirichlet–Cheeger, we rather get

To see the advantage of Proposition 5, let δ come closer and closer to 1, namely, approach the problematic case of “pick a neighbor uniformly at random”. In this situation, the r.h.s. of the bound of Proposition 5 is of order

which is better than (19) as δ goes to 1 −.

Here we present the Hardy’s inequalities method to get Proposition 5 announced above. Our goal is to show that K admits a positive first Dirichlet eigenvalue:

Proposition 6

There exists Λ > 0 depending on δ ∈ (0, 1) such that

It follows that the reversible couple (K, π) admits a spectral gap bounded below by Λ given above. Indeed, it is an immediate consequence of the fact that for any f ∈ L 2(π), the variance of f with respect to π is bounded above by π[(f − f(0))2].

The proof of Proposition 6 is based on a pruning of K and Hardy’s inequalities for trees. Consider the set of unoriented edges induced by K:  (in particular, E does not contain the self-edges or singletons). For any \(x\in \mathbb {N}_+\), let p(x) the smallest bit equal to 1 in the binary expansion of x, i.e.,

(in particular, E does not contain the self-edges or singletons). For any \(x\in \mathbb {N}_+\), let p(x) the smallest bit equal to 1 in the binary expansion of x, i.e.,

Define the subset F of E by

and the function ν on F via

To any f ∈ L 2(π), associate the function (df)2 on F given by

Finally, consider the (non-negative) measure μ defined on \(\mathbb {N}_+\) via

Then we have:

Proposition 7

There exists λ > 0 depending on δ ∈ (0, 1) such that

This result implies Proposition 6. Indeed, note that by the definition of Q,

Thus, for any f ∈ L 2(μ),

namely Proposition 6 holds with  .

.

Note that \(\mathbb {N}\) endowed with the set of non-oriented edges F has the structure of a tree. We interpret 0 as its root, so that for any \(x\in \mathbb {N}_+\), p(x) is the parent of x. Note that for any \(x\in \mathbb {N}\), the children of x are exactly the numbers y2x, where y is an odd number. We will denote h(x) the height of x with respect to the root 0 (thus, the odd numbers are exactly the elements of \(\mathbb {N}\) whose height is equal to 1).

According to [21] (see also Evans, Harris and Pick [10]), the best constant λ in Proposition 7, say λ 0, can be estimated up to a factor 16 via Hardy’s inequalities for trees, see (23) below. To describe them we need several notations.

Let \(\mathcal {T}\) the set of subsets T of \(\mathbb {N}_+\) satisfying the following conditions

-

T is non-empty and connected (with respect to F),

-

T does not contain 0,

-

there exists \(M\geqslant 1\) such that \(h(x)\leqslant M\) for all x ∈ T,

-

if x ∈ T has a child in T, then all children of x belong to T.

Note that any \(T\in \mathcal {T}\) admits a closest element to 0, call it m(T). Note that m(T) ≠ 0. When T is not reduced to the singleton {m(T)}, then T ∖{m(T)} has a denumerable infinity of connected components which are indexed by the children of m(T). Since these children are exactly the y2m(T), where \(y\in \mathcal {I}\), the set of odd numbers, call \(T_{y2^{m(T)}}\) the connected component of T ∖{m(T)} associated to y2m(T). Note that \(T_{y2^{m(T)}}\in \mathcal {T}\). We extend ν as a functional on \(\mathcal {T}\), via the iteration

-

when T is the singleton {m(T)}, we take

,

, -

when T is not a singleton, decompose T as \(\{m(T)\}\sqcup \bigsqcup _{y\in \mathcal {I}}T_{y2^{m(T)}}\), then ν is defined as

$$\displaystyle \begin{aligned} \begin{array}{rcl} {} \frac 1{\nu(T)}& =&\displaystyle \frac 1{\nu(\{m(T)\})}+\frac 1{\sum_{y\in\mathcal{I}} \nu(T_{y2^{m(T)}})}.\end{array} \end{aligned} $$(22)

For \(x\in \mathbb {N}_+\), let S x be the set of vertices \(y\in \mathbb {N}_+\) whose path to 0 passes through x. For any \(T\in \mathcal {T}\) we associate the subset

where L(T) is the set of leaves of T, namely the x ∈ T having no children in T. Equivalently, T ∗ is the set of all descendants of the leaves of T, themselves included.

Consider \(\mathcal {S}\subset \mathcal {T}\) the set of \(T\in \mathcal {T}\) which are such that m(T) is an odd number. Finally, define

We are interested in this quantity because of the Hardy inequalities:

where recall that λ 0 is the best constant in Proposition 7. (In [21], only finite trees were considered, the extension to infinite trees is given in Appendix 2). So, to prove Proposition 7, it is sufficient to show that A is finite. To investigate A, we need some further definitions. For any \(x\in \mathbb {N}_+\), let

A finite path from 0 in the direction to infinity is a finite sequence  of elements of \(\mathbb {N}_+\) such that z

0 = 0 and p(z

n) = z

n−1 for any

of elements of \(\mathbb {N}_+\) such that z

0 = 0 and p(z

n) = z

n−1 for any  . On such a path z, we define the quantity

. On such a path z, we define the quantity

The following technical result is crucial for our purpose of showing that A is finite.

Lemma 6

For any finite path from 0 in the direction to infinity

, we have

\(B(z)\leqslant C\)

, where

, we have

\(B(z)\leqslant C\)

, where

.

.

Proof

Note that for any  , h(z

n) = n. Furthermore, for any \(x\in \mathbb {N}_+\), we have \(h(x)\leqslant x\) and we get h(p(z

n)) = h(z

n) − 1 = n − 1, so that \(p(z_n)\geqslant n-1\). Writing \(z_n=y_n2^{p(z_n)}\), for some odd number y

n, it follows that

, h(z

n) = n. Furthermore, for any \(x\in \mathbb {N}_+\), we have \(h(x)\leqslant x\) and we get h(p(z

n)) = h(z

n) − 1 = n − 1, so that \(p(z_n)\geqslant n-1\). Writing \(z_n=y_n2^{p(z_n)}\), for some odd number y

n, it follows that

The desired result follows at once. □

We need two ingredients about ratios μ(T ∗)∕ν(T). Here is the first one.

Lemma 7

For any \(T\in \mathcal {T}\) which is a singleton, we have \( \frac {\mu (T^*)}{\nu (T)}\leqslant \frac {1}{1-\delta } \).

Proof

When T is the singleton {m(T)}, on the one hand we have

On the other hand, T ∗ is the subtree growing from m(T), namely the subtree containing all the descendants of m(T). Note two properties of T ∗:

and we further have \(p(y)\geqslant m(T)\) for any y ∈ T ∗∖{m(T)}. It follows that

Thus, we get \( {\mu (T^*)}/{\nu (T)}\leqslant \frac 1{1-\delta }\). □

For the second ingredient, we need some further definitions. The length ℓ(T) of \(T\in \mathcal {T}\) is given by  , and for any \(l\in \mathbb {N}\), we define

, and for any \(l\in \mathbb {N}\), we define

Lemma 8

For any \(l\in \mathbb {N}\) , we have \( \sup _{T\in \mathcal {T}_l}\frac {\mu (T^*)}{\nu (T)}<+\infty \).

Proof

We will prove the finiteness by induction over \(l\in \mathbb {N}\). First, note that \(\mathcal {T}_0\) is the set of singletons, and so Lemma 7 implies that \( \sup _{T\in \mathcal {T}_0}\frac {\mu (T^*)}{\nu (T)}\leqslant \frac 1{1-\delta }\). Next, assume that the supremum is finite for some \(l\in \mathbb {N}\) and let us show that it is also finite for l + 1.

Consider \(T\in \mathcal {T}_{l+1}\), with ℓ(T) = l + 1; in particular, T is not a singleton. Decompose T as \(\{m(T)\}\sqcup \bigsqcup _{y\in \mathcal {I}}T_{y2^{m(T)}}\) and recall the relation (22). Since \( T^*=\bigsqcup _{y\in \mathcal {I}}T_{y2^{m(T)}}^*\), it follows that

Consider the first term on the right. Given \(y\in \mathcal {I}\), the smallest possible element of \(T_{y2^{m(T)}}^*\) is y2m(T), and we have for any \(x\in T_{y2^{m(T)}}^*\),

Thus we have the equivalent of (24):

Following the computation (25), we get

where the inequality is strict, because in (27) we cannot have equality for all \(x\in \bigsqcup _{y\in \mathcal {I}}T_{y2^{m(T)}}^*\). It follows that

where C is the constant introduced in Lemma 6. Since for any \(y\in \mathcal {I}\), we have \(T_{y2^{m(T)}}\in \mathcal {T}_l\), we deduce the desired result from the induction hypothesis. □

We are now ready to prove Proposition 7.

Proof (Of Proposition 7)

Fix some \(T\in \mathcal {S}\), we are going to show that \({\mu (T^*)}/{\nu (T)}\leqslant {1+C}/{(1-\delta )}\), where C is the constant introduced in Lemma 6. Due to Lemma 7, this bound is clear if T is a singleton. When T is not the singleton {m(T)}, decompose T as \(\{m(T)\}\sqcup \bigsqcup _{y\in \mathcal {I}}T_{y2^{m(T)}}\) and let us come back to (26). Denote  and

and

which is positive according to (28). Coming back to (26), we have shown

where \(z_2\in \{y2^{m(T)}\,:\, y\in \mathcal {I}\}\) is such that

To get the existence of z 2, we used that the supremum is finite, as ensured by Lemma 8.

By iterating this procedure, define a finite path from 0 in the direction to infinity  , such that for any

, such that for any  ,

,

and \(T_{z_N}\) is a singleton. We have \(N\leqslant \max \{h(x)\,:\, x\in T\}\). We deduce that

as desired. □

To get an explicit bound in terms of δ, it remains to investigate the quantity C.

Lemma 9

We have

Proof

Consider  . Elementary computations show that

. Elementary computations show that

so we get

Since we have for any \( l\in \mathbb {N}\), \( 2^{2^l}-l\geqslant 0\), we deduce

It is not difficult to check that for any \( l\geqslant 1\), \(2^{2^l}-l\geqslant \frac 12 2^{2^l}\), so that

The announced result follows from the fact

□

The following observations show that Q needs to be at least decaying exponentially for the Hardy inequality approach to work.

Remark 2

-

(a)

In view of the expression of π, it is natural to try to replace (20) by

But then in Lemma 7, where we want the ratios μ(T ∗)∕ν(T) to be bounded above for singletons T, we end up with the fact that

$$\displaystyle \begin{aligned} \frac{Q(N(m(T)))}{Q(p(m(T)))}=\frac{\mu(T)}{\nu(T)}\leqslant \frac{\mu(T^*)}{\nu(T)} \end{aligned}$$must be bounded above for singletons T. Namely an extension of (21) must hold: there exists a constant c > 0 such that

$$\displaystyle \begin{aligned} \begin{array}{rcl} {} \forall\ x\in\mathbb{N}_+,\qquad Q(N(x))& \leqslant &\displaystyle c Q(p(x)).\end{array} \end{aligned} $$(29)Writing x = y2p, with \(y\in \mathcal {I}\) and \(p\in \mathbb {N}\), we must have \( Q(N(y2^p))\leqslant c Q(p)\). Take y = 1 + 2 + 4 + ⋯ + 2l, then we get that p, p + 1, …, p + l all belong to Q(N(y2p)), so that \( Q(\{p, p+1, \ldots , p+l\})\leqslant c Q(p)\), and letting l go to infinity, it follows that

, namely, Q has exponential tails.

, namely, Q has exponential tails. -

(b)

Other subtrees of the graph generated by K could have been considered. It amounts to choose the parent of any \(x\in \mathbb {N}_+\). But among all possible choices of such a neighbor, the one with most weight is p(x), at least if Q is decreasing. In view of the requirement (29), it looks like the best possible choice.

-

(c)

If one is only interested in Proposition 7 with μ defined by (20), then many more probability measures Q can be considered, in particular any polynomial probability of the form

, for any \(x\in \mathbb {N}\), where ζ is the Riemann function and l > 1.

, for any \(x\in \mathbb {N}\), where ζ is the Riemann function and l > 1.

References

A. Bendikov, L. Saloff-Coste, Random walks on some countable groups, in Groups, Graphs and Random Walks (2011), pp. 77–103

I. Benjamini, O. Schramm, Every graph with a positive Cheeger constant contains a tree with a positive Cheeger constant. Geom. Funct. Anal. 7(3), 403–419 (1997)

N. Berestycki, E. Lubetzky, Y. Peres, A. Sly, Random walks on the random graph. Ann. Probab. 46(1), 456–490 (2018)

C. Bordenave, P. Caputo, D. Chafaï, Spectrum of large random reversible Markov chains: heavy-tailed weights on the complete graph. Ann. Probab. 39(4), 1544–1590 (2011)

C. Bordenave, P. Caputo, J. Salez, Random walk on sparse random digraphs. Probab. Theory Related Fields 170(3–4), 933–960 (2018)

P.J. Cameron, The random graph, in The Mathematics of Paul Erdős II (1997), 333–351

P.J. Cameron, The random graph revisited, in European Congress of Mathematics (Birkhäuser, Basel, 2001), pp. 267–274

P. Diaconis, M. Malliaris, Complexity and randomness in the Heisenberg groups (and beyond) (2021). arXiv:2107.02923v2

P. Diaconis, P.M. Wood, Random doubly stochastic tridiagonal matrices. Random Struct. Algor. 42(4), 403–437 (2013)

W.D. Evans, D.J. Harris, L. Pick, Weighted Hardy and Poincaré inequalities on trees. J. Lond. Math. Soc. (2) 52(1), 121–136 (1995)

N. Fountoulakis, B.A. Reed, The evolution of the mixing rate of a simple random walk on the giant component of a random graph. Random Struct. Algor. 33(1), 68–86 (2008)

A. Georgakopoulos, J. Haslegrave, Percolation on an infinitely generated group. Combin. Probab. Comput. 29(4), 587–615 (2020)

G.H. Hardy, J.E. Littlewood, G. Pólya, Inequalities, 2nd edn. (Cambridge University Press, Cambridge, 1952)

M. Hildebrand, A survey of results on random random walks on finite groups. Probab. Surv. 2, 33–63 (2005)

D. Hunter, An upper bound for the probability of a union. J. Appl. Probab. 13, 597–603 (1976)

J. Jorgenson, S. Lang, The ubiquitous heat kernel, in Mathematics Unlimited—2001 and Beyond (Springer, Berlin, Heidelberg, 2001), pp. 655–683

C.A.J. Klaassen, J.A. Wellner, Hardy’s inequality and its descendants: a probability approach. Electron. J. Probab. 26, 1–34 (2021)

E.G. Kounias, Bounds for the probability of a union, with applications. Ann. Math. Stat. 39, 2154–2158 (1968)

D.A. Levin, Y. Peres, Markov Chains and Mixing Times (American Mathematical Society, Providence, 2017)

S. Martineau, Locally infinite graphs and symmetries. Grad. J. Math. 2(2), 42–50 (2017)

L. Miclo, Relations entre isopérimétrie et trou spectral pour les chaînes de Markov finies. Probab. Theory Related Fields 114(4), 431–485 (1999)

A. Nachmias, Y. Peres, Critical random graphs: diameter and mixing time. Ann. Probab. 36(4), 1267–1286 (2008)

L. Saloff-Coste, Lectures on finite Markov chains, in Lectures on Probability Theory and Statistics (Saint-Flour, 1996). Lecture Notes in Mathematics, vol. 1665 (Springer, Berlin, 1997), pp. 301–413

Wikipedia contributors. Pairwise independence - Wikipedia, the free encyclopedia (2021). https://en.wikipedia.org/wiki/Pairwise_independence [Online: Accessed 18 September 2021]

W. Woess, Random Walks on Infinite Graphs and Groups (Cambridge University Press, Cambridge, 2000)

K.J. Worsley, An improved Bonferroni inequality and applications. Biometrika 69, 297–302 (1982)

Acknowledgements

We thank Peter Cameron, Maryanthe Malliaris, Sebastien Martineau, Yuval Peres, and Laurent Saloff-Coste for their help. S. C.’s research was partially supported by NSF grants DMS-1855484 and DMS-2113242. P. D.’s research was partially suppported by NSF grant DMS-1954042. L. M. acknowledges funding from ANR grant ANR-17-EUR-0010.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendices

Appendix 1: Dirichlet–Cheeger Inequalities

We begin by showing the Dirichlet–Cheeger inequality that we have been using in the previous sections. It is a direct extension (even simplification) of the proof of the Cheeger inequality given in Saloff-Coste [23]. We end this appendix by proving that it is in general not possible to compare linearly the Dirichlet–Cheeger constant of an absorbed Markov chain with the largest Dirichlet–Cheeger constant induced on a spanning subtree.

Let us work in continuous time. Consider L a sub-Markovian generator on a finite set V . Namely,  , whose off-diagonal entries are non-negative and whose row sums are non-positive. Assume that L is irreducible and reversible with respect to a probability π on V .

, whose off-diagonal entries are non-negative and whose row sums are non-positive. Assume that L is irreducible and reversible with respect to a probability π on V .

Let λ(L) be the smallest eigenvalue of − L (often called the Dirichlet eigenvalue). The variational formula for eigenvalues shows that

The Dirichlet–Cheeger constant ι(L) is defined similarly, except that only indicator functions are considered in the minimum:

Here is the Dirichlet–Cheeger inequality:

Theorem 5

Assuming L ≠ 0, we have

where

.

.

When L is Markovian, the above inequalities are trivial and reduce to ι(L) = λ(L) = 0. Indeed, it is sufficient to consider  and A = V respectively in the r.h.s. of (30) and (31). Thus there is no harm in supposing furthermore that L is strictly sub-Markovian: at least one of the row sums is negative. To bring this situation back to a Markovian setting, it is usual to extend V into

and A = V respectively in the r.h.s. of (30) and (31). Thus there is no harm in supposing furthermore that L is strictly sub-Markovian: at least one of the row sums is negative. To bring this situation back to a Markovian setting, it is usual to extend V into  where 0∉V is a new point. Then one introduces the extended Markov generator \(\overline L\) on \(\overline V\) via

where 0∉V is a new point. Then one introduces the extended Markov generator \(\overline L\) on \(\overline V\) via

Note that the point 0 is absorbing for the Markov processes associated to \(\overline L\).

It is convenient to give another expression for ι(L). Consider the set of edges  . We define a measure μ on \(\overline E\):

. We define a measure μ on \(\overline E\):

(Note that the reversibility assumption was used to ensure that the first line is well-defined.) Extend any \(f\in \mathbb {R}^V\) into the function \(\overline f\) on \(\overline V\) by making it vanish at 0 and define

With these definitions we can check that

These notations enable to see (31) as a L 1 version of (30):

Proposition 8

We have

Proof

Restricting the minimum in the r.h.s. to indicator functions, we recover the r.h.s. of (31). It is thus sufficient to show that for any given \(f\in \mathbb {R}^V\setminus \{0\}\),

Note that \(\vert d\overline f \vert (e)\geqslant \vert d \vert \overline f\vert \vert (e)\) for any \( e\in \overline E\), so without lost of generality, we can assume \(f\geqslant 0\). For any \(t\geqslant 0\), consider the set F t and its indicator function given by

Note that

so that by integration,

Furthermore, we have

where for any A ⊂ V , we define

Note that for any such A, we have  , so that

, so that

showing (32). □

Proof (Of Theorem 5)

Given \(g\in \mathbb {R}^V\), let f = g 2. By Proposition 8, we compute

Thus, we have

which gives the desired lower bound for λ(L). The upper bound is immediate. □

The unoriented graph associated to L is  where

where  . Consider \(\mathbb {T}\), the set of all subtrees of \(\overline G\), and for any \(T\in \mathbb {T}\), consider the sub-Markovian generator L

T on V associated to T via

. Consider \(\mathbb {T}\), the set of all subtrees of \(\overline G\), and for any \(T\in \mathbb {T}\), consider the sub-Markovian generator L

T on V associated to T via

where x, y ∈ V and \(\overline E(T)\) is the set of (unoriented) edges of T.

Note that L T is also reversible with respect to π (it is irreducible if and only if 0 belongs to a unique edge of \(\overline E(T)\)). Denote μ T the corresponding measure on \(\overline E\). It is clear that \(\mu _T\leqslant \mu \), so we get \( \iota (L_T)\leqslant \iota (L)\). In the spirit of Benjamini and Schramm [2], we may wonder if conversely, ι(L) could be bounded above in terms of \(\max _{T\in \mathbb {T}}\iota (L_T)\). A linear comparison is not possible:

Proposition 9

It does not exist a universal constant χ > 0 such that for any L as above, \( \chi \iota (L)\leqslant \max _{T\in \mathbb {T}}\iota (L_T)\).

Proof

Let us construct a family \((L^{(n)})_{n\in \mathbb {N}_+}\) of sub-Markovian generators such that

For any \(n\in \mathbb {N}_+\), the state space V

(n) of L

(n) is  (more generally, all notions associated to L

(n) will marked by the exponent (n)). Denote

(more generally, all notions associated to L

(n) will marked by the exponent (n)). Denote  and

and  . We take

. We take

where x, y ∈ V (n), and 𝜖 > 0, that will depend on n, is such that n𝜖 < 1∕2.

Recall that 0 is the cemetery point added to V (n), we have

Note that π (n) is the uniform probability on V (n). Let us show that

Consider any ∅ ≠ A ⊂ V

(n), and decompose A = A

0 ⊔ A

1, with  and

and  . Denote

. Denote  and

and  . We have that ∂A is given by

. We have that ∂A is given by

and thus \( \mu ^{(n)}(\partial A)=\frac 1{2n}(\epsilon (a_0(n-a_1)+a_1(n-a_0))+a_0)\). It follows that

Taking into account that 1 − 2𝜖a

1 > 0, the r.h.s. is minimized with respect to  when a

0 = 0 and we then get (independently of a

1), μ

(n)(∂A)∕π

(n)(A) = n𝜖. We deduce (34).

when a

0 = 0 and we then get (independently of a

1), μ

(n)(∂A)∕π

(n)(A) = n𝜖. We deduce (34).

Consider any \(T\in \mathbb {T}^{(n)}\) and let us check that

Observe there exists \(x\in V^{(n)}_1\) such that there is a unique \(y\in V^{(n)}_0\) with {x, y} being an edge of T. Indeed, put on the edges of T the orientation toward the root 0. Thus from any vertex \(x\in V_1^{(n)}\) there is a unique exiting edge (but it is possible there are several incoming edges). Necessarily, there is a vertex in \(V^{(n)}_0\) whose edge exits to 0. So there are at most n − 1 vertices from \(V^{(n)}_0\) whose exit edge points toward \(V^{(n)}_1\). In particular, there is at least one vertex from \(V^{(n)}_1\) which is not pointed out by a vertex from \(V^{(n)}_0\). We can take x to be this vertex from \(V^{(n)}_1\) and \(y\in V^{(n)}_0\) is the vertex pointed out by the oriented edge exiting from x.

Considering the singleton {x}, we get

implying (35) (a little more work would prove that an equality holds there). As a consequence, we see that \( \max _{T\in \mathbb {T}^{(n)}}\iota (L^{(n)}_T)\leqslant \epsilon \). Taking for instance  to fulfill the condition n𝜖 < 1∕2, we obtain \( \frac {\max _{T\in \mathbb {T}^{(n)}}\iota (L^{(n)}_T)}{\iota (L^{(n)})}\leqslant \frac 1{n}\), and (33) follows. □

to fulfill the condition n𝜖 < 1∕2, we obtain \( \frac {\max _{T\in \mathbb {T}^{(n)}}\iota (L^{(n)}_T)}{\iota (L^{(n)})}\leqslant \frac 1{n}\), and (33) follows. □

Appendix 2: Hardy’s Inequalities

Our goal here is to extend the validity of Hardy’s inequalities on finite trees to general denumerable trees, without assumption of local finiteness. We begin by recalling the Hardy’s inequalities on finite trees. Consider \(\mathcal {T}=(\overline V, \overline E,0)\) a finite tree rooted in 0, whose vertex and (undirected) edge sets are \(\overline V\) and \(\overline E\). Denote  , for each x ∈ V , the parent p(x) of x is the neighbor of x in the direction of 0. The other neighbors of x are called the children of x and their set is written C(x). For x = 0, by convention C(0) is the set of neighbors of 0. Let be given two positive measures μ, ν defined on V . Consider c(μ, ν) the best constant \(c\geqslant 0\) in the inequality

, for each x ∈ V , the parent p(x) of x is the neighbor of x in the direction of 0. The other neighbors of x are called the children of x and their set is written C(x). For x = 0, by convention C(0) is the set of neighbors of 0. Let be given two positive measures μ, ν defined on V . Consider c(μ, ν) the best constant \(c\geqslant 0\) in the inequality

where f was extended to 0 via  .

.

According to [21] (see also Evans, Harris and Pick [10]), c(μ, ν) can be estimated up to a factor 16 via Hardy’s inequalities for trees, see (39) below. To describe them we need several notations.

Let \(\mathbb {T}\) the set of subsets T of V satisfying the following conditions

-

T is non-empty and connected (in \(\mathcal {T}\)),

-

T does not contain 0,

-

if x ∈ T has a child in T, then all children of x belong to T.

Note that any \(T\in \mathbb {T}\) admits a closest element to 0, call it m(T), we have m(T) ≠ 0. When T is not reduced to the singleton {m(T)}, the connected components of T ∖{m(T)} are indexed by the set of the children of m(T), namely C(m(T)). For y ∈ C(m(T)), denote by T y the connected component of T ∖{m(T)} containing y. Note that \(T_{y}\in \mathbb {T}\).

We extend ν as a functional on \(\mathbb {T}\), via the iteration

-

when T is the singleton {m(T)}, we take

,

, -

when T is not a singleton, decompose T as {m(T)}⊔⊔y ∈ C(m(T)) T y, then ν satisfies

$$\displaystyle \begin{aligned} \begin{array}{rcl} {} \frac 1{\nu(T)}& =&\displaystyle \frac 1{\nu(m(T))}+\frac 1{\sum_{y\in C(m(T))} \nu(T_{y})}.\end{array} \end{aligned} $$(37)

For x ∈ V , let S x be the set of vertices y ∈ V whose path to 0 pass through x. For any \(T\in \mathbb {T}\) we associate the subset

where L(T) is the set of leaves of T, namely the x ∈ T having no children in T. Equivalently, T ∗ is the set of all descendants of the leaves of T, themselves included.

Consider \(\mathbb {S}\subset \mathbb {T}\), the set of \(T\in \mathbb {T}\) which are such that m(T) is a child of 0. Finally, define

We are interested in this quantity because of the Hardy inequality:

Our goal here is to extend this inequality to the situation where V is denumerable and where μ and ν are two positive measures on V , with ∑x ∈ V μ(x) < +∞.

Remark 3

Without lost of generality, we can assume 0 has only one child, because what happens on different S x and S y, where both x and y are children of 0, can be treated separately.

More precisely, while V is now (denumerable) infinite, we first assume that the height of  is finite (implying that \(\mathcal {T}\) cannot be locally finite). Recall that the height h(x) of a vertex \(x\in \overline V\) is the smallest number of edges linking x to 0. The assumption that \(\sup _{x\in \overline V} h(x)<+\infty \) has the advantage that the iteration (37) enables us to compute ν on \(\mathbb {T}\), starting from the highest vertices from an element of \(\mathbb {T}\). Then b(μ, ν) is defined exactly as in (38), except the maximum has to be replaced by a supremum. Extend c(μ, ν) as the minimal constant \(c\geqslant 0\) such that (36) is satisfied, with the possibility that c(μ, ν) = +∞ when there is no such c. Note that in (36), the space \(\mathbb {R}^V\) can be reduced and replaced by \(\mathcal {B}(V)\), the space of bounded mappings on V :

is finite (implying that \(\mathcal {T}\) cannot be locally finite). Recall that the height h(x) of a vertex \(x\in \overline V\) is the smallest number of edges linking x to 0. The assumption that \(\sup _{x\in \overline V} h(x)<+\infty \) has the advantage that the iteration (37) enables us to compute ν on \(\mathbb {T}\), starting from the highest vertices from an element of \(\mathbb {T}\). Then b(μ, ν) is defined exactly as in (38), except the maximum has to be replaced by a supremum. Extend c(μ, ν) as the minimal constant \(c\geqslant 0\) such that (36) is satisfied, with the possibility that c(μ, ν) = +∞ when there is no such c. Note that in (36), the space \(\mathbb {R}^V\) can be reduced and replaced by \(\mathcal {B}(V)\), the space of bounded mappings on V :

Lemma 10

We have

Proof

Denote \(\widetilde c(\mu ,\nu )\) the above r.h.s. A priori we have \(c(\mu ,\nu )\geqslant \widetilde c(\mu ,\nu )\). To prove the reverse bound, consider any \(f\in \mathbb {R}^V\) and consider for M > 0,  . Note that

. Note that

(This a general property of Dirichlet forms and comes from the 1-Lipschitzianity of the mapping \(\mathbb {R}\ni r\mapsto (r\wedge M)\vee (-M)\).) Since \(f_M\in \mathcal {B}(V)\), we have

Letting M go to infinity, we get at the limit by monotonous convergence

Since this is true for all \(f\in \mathbb {R}^V\), we deduce that \(c(\mu ,\nu )\leqslant \widetilde c(\mu ,\nu )\). □

Consider \((x_n)_{n\in \mathbb {N}_+}\) an exhaustive sequence of \(\overline V\), with x

0 = 0 and such that for any \(n\in \mathbb {N}_+\),  is connected. We denote \(\mathcal {T}_n\) the tree rooted on 0 induced by \(\mathcal {T}\) on \(\overline V_n\) and as above,

is connected. We denote \(\mathcal {T}_n\) the tree rooted on 0 induced by \(\mathcal {T}\) on \(\overline V_n\) and as above,  . For any \(n\in \mathbb {N}_+\) and x ∈ V

n, introduce the set

. For any \(n\in \mathbb {N}_+\) and x ∈ V

n, introduce the set

In words, this is the set of elements of V whose path to 0 first enters V n at x.

From now on, we assume that 0 has only one child, taking into account Remark 3. It follows that

Let μ n and ν n be the measures defined on V n via

The advantage of the μ n and ν n is that they brought us back to the finite situation while enabling to approximate c(μ, ν):

Proposition 10

We have limn→∞ c(μ n, ν n) = c(μ, ν).

Proof

We first check that the limit exists. For \(n\in \mathbb {N}_+\), consider the sigma-field \(\mathcal {F}_n\) generated by the partition (40). To each \(\mathcal {F}_n\)-measurable function f, associate the function f n defined on V n by

This function determines f, since for any x ∈ V n and any y ∈ R n(x), f(y) = f n(x). Furthermore, we have:

It follows that

where \(\mathcal {B}(\mathcal {F}_n)\) is the set of \(\mathcal {F}_n\)-measurable functions, which are necessarily bounded, i.e., belong to \(\mathcal {B}(V)\). Since for any \(n\in \mathbb {N}_+\) we have \(\mathcal {F}_n\subset \mathcal {F}_{n+1}\), we get that the sequence \((c(\mu _n,\nu _n))_{n\in \mathbb {N}_+}\) is non-decreasing and, taking into account Lemma 10, that

To get the reverse bound, first assume that c(μ, ν) < +∞. For given 𝜖 > 0, find a function \(f\in \mathcal {B}(V)\) with

Consider π the normalization of μ into a probability measure and let f n be the conditional expectation of f with respect to π and to the sigma-field \(\mathcal {F}_n\). Note that the f n are uniformly bounded by \(\left \Vert f\right \Vert { }_{\infty }\). Thus by the bounded martingale convergence theorem and since π gives a positive weight to any point of V , we have

From Fatou’s lemma, we deduce

By another application of the bounded martingale convergence theorem, we get

so that

It follows that \( \lim _{n\rightarrow \infty } c(\mu _n,\nu _n)\geqslant c(\mu ,\nu )-\epsilon \), and since 𝜖 > 0 can be chosen arbitrary small,

It remains to deal with the case where c(μ, ν) = +∞. Then for any M > 0, we can find a function \(f\in \mathcal {B}(V)\) with

By the above arguments, we end up with \( \lim _{n\rightarrow \infty } c(\mu _n,\nu _n)\geqslant M\), and since M can be arbitrary large, limn→∞ c(μ n, ν n) = +∞ = c(μ, ν). □

Our next goal is to show the same result holds for b(μ, ν). We need some additional notations. The integer \(n\in \mathbb {N}_+\) being fixed, denote \(\mathbb {T}_n\) and \(\mathbb {S}_n\) the sets \(\mathbb {T}\) and \(\mathbb {S}\) associated to \(\mathcal {T}_n\). The functional ν n is extended to \(\mathbb {T}_n\) via the iteration (37) understood in \(\mathcal {T}_n\). To any \(T\in \mathbb {T}_n\), associate T n the minimal element of \(\mathbb {T}\) containing T. It is obtained in the following way: to any x ∈ T, if x has a child in T, then add all the children of x in V , and otherwise do not add any other points.

Lemma 11

We have the comparisons

where T ∗ is understood in \(\mathcal {T}_n\) (and \(T_n^*\) in \(\mathcal {T}\) ).

Proof

The first bound is proven by iteration on the height of \(T\in \mathbb {T}_n\).

-

If this height is zero, then T is a singleton and T n is the same singleton, so that ν n(T) = ν(T n).

-

If the height h(T) of T is at least equal to 1, decompose

$$\displaystyle \begin{aligned} \begin{array}{rcl} T& =&\displaystyle \{m_n(T)\}\sqcup \bigsqcup_{y\in C_n(m_n(T))}T_{n,y}\end{array} \end{aligned} $$where m n(⋅), C n(⋅) and T n,⋅ are the notions corresponding to m(⋅), C(⋅) and T ⋅ in \(\mathcal {T}_n\).

Note that T and T n have the same height and decompose

$$\displaystyle \begin{aligned} \begin{array}{rcl} T_n& =&\displaystyle \{m( T_n)\}\sqcup \bigsqcup_{z\in C(m( T_n))} T_{n,z}.\end{array} \end{aligned} $$On the one hand, we have m(T n) = m n(T) and C n(m n(T)) ⊂ C(m n(T)) and on the other hand, we have for any y ∈ C n(m n(T)), \( \nu _n(T_y)\geqslant \nu ( (T_y)_n) =\nu ( T_{n,y})\), due to the iteration assumption and to the fact that the common height of T y and (T y)n is at most equal to h(T) − 1. The equality (T y)n = T n,y is due to the fact that T n,y is obtained by the same completion of T y as the one presented for T just above the statement of Lemma 11, and thus coincides with (T y)n. It follows that

$$\displaystyle \begin{aligned} \begin{array}{rcl} \frac 1{\nu_n(T)}& =&\displaystyle \frac 1{\nu_n(m_n(T))}+\frac 1{\sum_{y\in C_n(m_n(T))} \nu_n(T_{y})}\\& =&\displaystyle \!\!\frac 1{\nu(m( T_n))}\!+\!\frac 1{\sum_{y\in C_n(m_n(T))} \nu_n(T_{y})} \! \leq\! \frac 1{\nu(m( T_n))}\! +\! \frac 1{\sum_{y\in C_n(m_n(T))} \nu(T_{n,y})}\\ & \leqslant &\displaystyle \frac 1{\nu(m( T_n))}+\frac 1{\sum_{y\in C(m( T_n))} \nu(T_{n,y})} =\frac 1{\nu( T_n)}, \end{array} \end{aligned} $$establishing the wanted bound \(\nu _n(T)\geqslant \nu ( T_n)\). We now come to the second bound of the above lemma. By definition, we have

$$\displaystyle \begin{aligned} \begin{array}{rcl} T^*& =&\displaystyle \sqcup_{x\in L_n(T)}S_{n,y},\end{array} \end{aligned} $$where L n(T) is the set of leaves of T in \(\mathcal {T}_n\) and S n,y is the subtree rooted in y in \(\mathcal {T}_n\).

Note that L n(T) ⊂ L(T n) and by definition of μ n, we have

It follows that

□

Let \(\widetilde {\mathbb {S}}_n\) be the image of \(\mathbb {S}_n\) under the mapping \(\mathbb {S}_n\ni T\mapsto T_n\in \mathbb {S}\). Since \(\mathbb {S}_n\ni T\mapsto T_n\in \widetilde {\mathbb {S}}_n\) is a bijection, we get from Lemma 11,

so that

Let us show more precisely:

Proposition 11

We have limn→∞ b(μ n, ν n) = b(μ, ν).

Proof

According to (41), it remains to show that

Consider \(T\in \mathbb {S}\) such that the ration μ(T ∗)∕ν(T) serves to approximate b(μ, ν), namely up to an arbitrary small 𝜖 > 0 if b(μ, ν) < +∞ or is an arbitrary large quantity if b(μ, ν) = +∞. Define

Arguing as at the end of the proof of Proposition 10, we will deduce (42) from

where (T (n))∗ is understood in \(\mathcal {T}_n\). This convergence will be the consequence of

For (43), note that

and as we have seen at the end of the proof of Lemma 11,

Thus (43) follows by dominated convergence (since μ(V ) < +∞), from

To show the latter convergences, consider two cases:

-

If x ∈ L(T), then we will have x ∈ L n(T (n)) as soon as x ∈ V n.

-

If x ∈ T ∖ L(T), then we will have x∉L n(T (n)) as soon as V n contains one of the children of x in T.

We now come to (44), and more generally let us prove by iteration over their height, that for any \(\widetilde T\in \mathbb {T}\) and \(\widetilde T\subset T\), we have

i.e., the limit is non-decreasing. Indeed, if \(\widetilde T\) has height 0, it is a singleton {x}, we have  as soon as x belongs to V

n, insuring (45).

as soon as x belongs to V

n, insuring (45).

Assume that \(\widetilde T\) has height a \(h\geqslant 1\) and that (45) holds for any \(\widetilde T\) whose height is at most equal to h − 1. Write as usual

Assume that n is large enough so that  and in particular \(m(\widetilde T)\in V_n\) and

and in particular \(m(\widetilde T)\in V_n\) and  . Thus we also have

. Thus we also have

On the one hand, the set \(C_n(m(\widetilde T))\) is non-decreasing and its limit is \(C(m(\widetilde T))\), and on the other hand, due to the induction hypothesis, we have for any \(y\in C(m(\widetilde T))\),

By monotone convergence, we get

which leads to (45), via (46) and (47). This ends the proof of (42). □

The conjunction of Propositions 10 and 11 leads to the validity of (39), when V is denumerable with \(\mathcal {T}\) of finite height.

Let us now remove the assumption of finite height. The arguments are very similar to the previous one, except that the definition of b(μ, ν) has to be modified (μ and ν are still positive measures on V , with μ of finite total mass). More precisely, for any \(M\in \mathbb {N}_+\), consider  . Define on V

M the measure ν

M as the restriction to V

M of ν and μ

M via

. Define on V

M the measure ν

M as the restriction to V

M of ν and μ

M via

By definition, we take

This limit exists and the convergence is monotone, since he have for any \( M\in \mathbb {N}_+\), \(b(\mu _M,\nu _M)=\max _{T\in \mathbb {S}_M} \frac {\mu (T^*)}{\nu (T)}\), where  . Note that a direct definition of b(μ, ν) via the iteration (37) is not possible: we could not start from leaves that are singletons.

. Note that a direct definition of b(μ, ν) via the iteration (37) is not possible: we could not start from leaves that are singletons.

By definition, c(μ, ν) is the best constant in (36). It also satisfies  , as can be seen by adapting the proof of Proposition 10. We conclude that (39) holds by passing at the limit in

, as can be seen by adapting the proof of Proposition 10. We conclude that (39) holds by passing at the limit in

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Chatterjee, S., Diaconis, P., Miclo, L. (2022). A Random Walk on the Rado Graph. In: Basor, E., Böttcher, A., Ehrhardt, T., Tracy, C.A. (eds) Toeplitz Operators and Random Matrices. Operator Theory: Advances and Applications, vol 289. Birkhäuser, Cham. https://doi.org/10.1007/978-3-031-13851-5_13

Download citation

DOI: https://doi.org/10.1007/978-3-031-13851-5_13

Published:

Publisher Name: Birkhäuser, Cham

Print ISBN: 978-3-031-13850-8

Online ISBN: 978-3-031-13851-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)

and

and  , there exists

, there exists  and

and  such that g

1

f

1 = g

2

f

2. A countable relational structure M is homogeneous if any isomorphism between finite substructures can be extended to an automorphism of M. Graphs and

such that g

1

f

1 = g

2

f

2. A countable relational structure M is homogeneous if any isomorphism between finite substructures can be extended to an automorphism of M. Graphs and  ,

,

, namely, Q has exponential tails.

, namely, Q has exponential tails. , for any

, for any  ,

,