Abstract

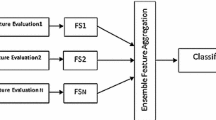

Stability is an important factor in selecting feature subsets, yet it has received comparatively little emphasis in the literature. Stability analysis of an algorithm determines how reproducible its findings are. Ensemble approaches are becoming increasingly prominent in predictive analytics because of their accuracy and stability, and the trade-off between accuracy and stability on high-dimensional data remains a significant research problem. The purpose of this research is to investigate the stability of ensemble feature selection and to use that information to improve system accuracy on high-dimensional datasets. We conducted a stability analysis of the ensemble feature selection approaches ChS-R (Chi-square with RReliefF) and SU-R (symmetric uncertainty with RReliefF) using the Jaccard similarity index. The ensemble approaches were found to be more stable than individual feature selection methods such as SU and ChS on high-dimensional datasets. The average stability of the SU-R and ChS-R ensemble approaches is 56.03% and 50.71%, respectively, and the accuracy improvement achieved is 4% to 5%.
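The abstract does not spell out the exact stability protocol (how many runs, how the data were perturbed, or the subset size), so the following is only a minimal sketch under one common assumption: stability is the mean pairwise Jaccard index, |A ∩ B| / |A ∪ B|, over the feature subsets selected in repeated runs, reported as a percentage. The function names `jaccard` and `jaccard_stability` and the example subsets are hypothetical illustrations, not the authors' code or data.

```python
from itertools import combinations
from typing import Sequence, Set


def jaccard(a: Set[int], b: Set[int]) -> float:
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two feature subsets (hypothetical helper)."""
    union = a | b
    if not union:
        return 1.0  # convention assumed here: two empty subsets count as identical
    return len(a & b) / len(union)


def jaccard_stability(subsets: Sequence[Set[int]]) -> float:
    """Average Jaccard similarity over all unordered pairs of selected subsets.

    Assumption: each subset holds the feature indices chosen in one run
    (e.g. on a different resample of the training data).
    """
    if len(subsets) < 2:
        raise ValueError("need at least two feature subsets")
    pairs = list(combinations(subsets, 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Made-up feature indices selected in three hypothetical runs.
    runs = [{0, 1, 2, 5}, {0, 1, 3, 5}, {0, 1, 2, 4}]
    print(f"stability = {jaccard_stability(runs):.2%}")  # stability = 51.11%
```

Here the three pairwise scores are 3/5, 3/5 and 2/6, giving a mean of 23/45. A value of 100% would mean every run selected exactly the same features. Other stability measures exist (for example Kuncheva's index, which corrects for chance overlap), so treat this as one possible reading of "Jaccard-based stability" rather than the paper's definitive procedure.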

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Sumant, A.S., Patil, D. (2022). Stability Investigation of Ensemble Feature Selection for High Dimensional Data Analytics. In: Chen, J.IZ., Tavares, J.M.R.S., Shi, F. (eds) Third International Conference on Image Processing and Capsule Networks. ICIPCN 2022. Lecture Notes in Networks and Systems, vol 514. Springer, Cham. https://doi.org/10.1007/978-3-031-12413-6_63

DOI: https://doi.org/10.1007/978-3-031-12413-6_63

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-12412-9

Online ISBN: 978-3-031-12413-6

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)