Abstract

This study aims to examine massive open online course (MOOC) students’ experiences with a natural language processing-based Q&A chatbot. Following the definition of ‘inclusive learning’ in MOOCs derived from the Universal Design for Learning approach, this study first compares students’ behavioral intentions before and after using the chatbot. Next, it investigates students’ levels in several other learning experience domains after using the chatbot, including teaching presence, cognitive presence, social presence, enjoyment, and perceived ease of use. After examining whether students’ learning experiences in these domains differ, this study investigates how age, gender, region, and native language influence students’ learning experiences with the chatbot. Lastly, but most importantly, it explores how demographic factors influence students’ perceptions of chatbot interactions. If such influences are found, the study will focus on demographic factors that negatively affect particular groups of students in order to examine how a Q&A chatbot can be improved for inclusive learning in MOOCs.


1 Introduction

1.1 Background

To address common problems with Q&A webpages, such as text heaviness and cognitive overload, massive open online course (MOOC) providers are becoming interested in natural language processing-based chatbots that respond more promptly to individual queries. Chatbots are software programs that communicate with users through natural language interaction interfaces [11, 12, 13]. Although chatbots cannot correctly answer every possible question, especially in the initial adoption phase, their dialogue-based, human-like responses feel more immediate and customized than webpages. However, given the exceptionally diverse MOOC student population, providing an inclusive learning environment is extremely important. MOOC providers should therefore ensure that, whenever they make technological changes such as adopting chatbots, no demographic factor creates inequitable learning experiences for particular groups of students. A previous study with a small sample examined whether the learning experience of using an FAQ chatbot differed from that of using an FAQ webpage [6]. The results indicated significant differences between the two interfaces in perceived barriers (higher for the chatbot group) and in intention to use the assigned interface (lower for the chatbot group). However, the two groups reported equivalent levels of Q&A quality and enjoyment. Among the demographic factors investigated, region and native language were significantly influential in creating disparate experiences. Most importantly, the study yielded implications regarding the need for a chatbot interface and ways to improve students’ Q&A experience with an FAQ chatbot.

Building on these results, this study will examine students’ learning experiences with an enhanced Q&A chatbot using a larger sample. Specifically, following the definition of ‘inclusive learning’ in MOOCs [6] derived from the Universal Design for Learning (UDL) approach, this study compares students’ behavioral intentions before and after using the chatbot. Next, it investigates students’ levels in several other learning experience domains after using the chatbot, drawn from the Community of Inquiry framework [4] and the Technology Acceptance Model [3]: teaching presence, cognitive presence, social presence, enjoyment, perceived ease of use, and others. After examining whether students’ learning experiences in these domains differ, this study investigates how age, gender, region, and native language influence students’ learning experiences with the chatbot. Lastly, but most importantly, it explores how demographic factors influence students’ perceptions of chatbot interactions. If such influences are found, the study will focus on demographic factors that negatively affect particular groups of students in order to examine how a Q&A chatbot can be improved for inclusive learning in MOOCs.

Notably, this study will first further investigate the meaning of inclusiveness from the UDL perspective. The resulting conceptualization will be used in two ways: to evaluate students’ experiences with the chatbot and to identify better chatbot response design strategies that support students’ equitable learning in MOOCs.
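The paper does not state which test will be used for the pre/post comparison of behavioral intentions. As a minimal sketch, assuming each student rates behavioral intention on the same numeric scale before and after using the chatbot, a paired-samples t statistic can be computed as follows; the function name `paired_t` and the example ratings are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def paired_t(before: Sequence[float], after: Sequence[float]) -> tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom.

    Index i is one student: before[i] is the pre-usage behavioral intention
    score and after[i] is the post-usage score on the same (assumed) scale.
    A positive t means scores rose after using the chatbot.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    sd = stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical 5-point ratings from five students.
before = [3, 4, 2, 5, 3]
after = [4, 4, 3, 5, 4]
t, df = paired_t(before, after)
print(f"t({df}) = {t:.3f}")  # prints t(4) = 2.449
```

`scipy.stats.ttest_rel(after, before)` returns the same statistic together with a p-value. If the ratings are treated as ordinal Likert data, the Wilcoxon signed-rank test (`scipy.stats.wilcoxon`) is a common nonparametric alternative.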

1.2 Research Questions

What demographic factors are influential in creating different learning experiences with a Q&A chatbot in massive open online courses, and what measures can be taken to mitigate possible negative learning experiences by changing chatbot response designs to promote an equitable learning environment?

Detailed Research Questions.

The three detailed research questions are as follows:

- What is the relationship between students’ demographic factors (i.e., age, gender, region, and native language) and their learning experience domains (e.g., teaching presence, cognitive presence, social presence, enjoyment, and perceived ease of use) with a Q&A chatbot in massive open online courses?
- What is the structural relationship among students’ learning experience domains? Does it differ among the demographic groups investigated in the study?
- From a phenomenological perspective, what are the lived learning experiences with the chatbot of students who possess demographic factor(s) identified as negatively influencing their interaction with it? How do they want the chatbot to respond to their questions?
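For the first detailed research question, one common way to relate a categorical demographic factor to a continuous domain score is a one-way ANOVA. The paper does not commit to this test, so the sketch below is only an illustration; the function name `one_way_anova_f`, the group labels, and the scores are hypothetical.

```python
from statistics import mean
from typing import Mapping, Sequence


def one_way_anova_f(groups: Mapping[str, Sequence[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` maps each demographic category (e.g., a native-language group)
    to the post-usage scores of its students on one learning experience domain.
    """
    samples = [list(s) for s in groups.values() if len(s) > 0]
    k = len(samples)
    n = sum(len(s) for s in samples)
    if k < 2 or n <= k:
        raise ValueError("need at least two non-empty groups and more scores than groups")
    grand_mean = mean(x for s in samples for x in s)
    group_means = [mean(s) for s in samples]
    # Between-group variation: how far each group mean lies from the grand mean.
    ss_between = sum(len(s) * (m - grand_mean) ** 2 for s, m in zip(samples, group_means))
    # Within-group variation: how far each score lies from its own group mean.
    ss_within = sum((x - m) ** 2 for s, m in zip(samples, group_means) for x in s)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical perceived-ease-of-use scores by native-language group.
scores = {
    "English": [4.5, 4.0, 4.2, 3.8],
    "Other": [3.1, 3.6, 2.9, 3.4],
}
f, df_b, df_w = one_way_anova_f(scores)
print(f"F({df_b}, {df_w}) = {f:.2f}")
```

`scipy.stats.f_oneway(*scores.values())` yields the same F together with a p-value. For Likert-type scores, a rank-based alternative such as `scipy.stats.kruskal` may be more appropriate.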

2 Methods

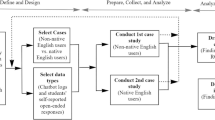

This explanatory sequential mixed methods study has two phases: a user testing-oriented survey and interviews. For the survey phase, I will enhance the FAQ chatbot developed in the previous studies [6, 7] into a learning-assistant Q&A chatbot through two measures. First, a topic analysis will be conducted with Latent Dirichlet Allocation, an unsupervised machine learning modeling technique, applied to the relevant online forum content of the research site’s courses from the past three years. The extracted topics will be compared with the existing training set on the Dialogflow platform, and the new topics and their content will form a new training set. Second, the old training set will be refined, and the language of both the old and new training sets will be adjusted, based on the findings from the previous studies. In summary, the chatbot’s knowledge base will be enhanced by a new training set built from the forum posts accumulated in the research site’s learning management system in recent years, and the way the chatbot presents its responses will be changed based on the two previous studies, which offered implications for promoting students’ equitable learning experiences.
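The paper names Latent Dirichlet Allocation but not a particular implementation. The following is a minimal, self-contained sketch of LDA fitted by collapsed Gibbs sampling, assuming the forum posts have already been tokenized and stripped of stop words; the function names, the hyperparameters (`alpha`, `beta`, `iters`), and the toy posts are hypothetical. In practice, a library such as gensim or scikit-learn’s `LatentDirichletAllocation` (which uses variational inference rather than Gibbs sampling) would likely be used instead.

```python
import random
from typing import List, Tuple


def lda_gibbs(
    docs: List[List[str]],
    num_topics: int,
    alpha: float = 0.1,
    beta: float = 0.01,
    iters: int = 200,
    seed: int = 0,
) -> Tuple[List[str], List[List[float]]]:
    """Fit LDA by collapsed Gibbs sampling.

    Returns (vocab, topic_word), where topic_word[k][w] estimates the
    probability of vocab[w] under topic k.
    """
    rng = random.Random(seed)
    vocab = sorted({w for d in docs for w in d})
    index = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    n_dk = [[0] * K for _ in docs]       # tokens in doc d assigned to topic k
    n_kw = [[0] * V for _ in range(K)]   # times word w is assigned to topic k
    n_k = [0] * K                        # total tokens assigned to topic k
    z: List[List[int]] = []              # topic assignment of every token
    for d, doc in enumerate(docs):
        z.append([])
        for word in doc:
            k = rng.randrange(K)  # random initial assignment
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][index[word]] += 1
            n_k[k] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, word in enumerate(doc):
                w, k = index[word], z[d][i]
                # Take the current token out of the counts.
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # Unnormalized full conditional p(z_i = t | all other assignments).
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][w] + beta) / (n_k[t] + V * beta)
                    for t in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                # Put the token back under its newly sampled topic.
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1
    # Estimate of each topic's word distribution from the final sample.
    topic_word = [
        [(n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in range(V)]
        for k in range(K)
    ]
    return vocab, topic_word


def top_words(vocab: List[str], topic_word: List[List[float]], n: int = 3) -> List[List[str]]:
    """The n highest-probability words of each topic."""
    return [
        [vocab[w] for w in sorted(range(len(vocab)), key=lambda w: -row[w])[:n]]
        for row in topic_word
    ]


# Hypothetical, already-tokenized forum posts.
posts = [
    ["certificate", "download", "certificate", "pdf"],
    ["deadline", "quiz", "deadline", "extension"],
    ["certificate", "pdf", "email"],
    ["quiz", "extension", "grade"],
]
vocab, phi = lda_gibbs(posts, num_topics=2)
for k, words in enumerate(top_words(vocab, phi)):
    print(k, words)
```

The top words of each fitted topic could then be inspected and compared with the intents in the existing Dialogflow training set to decide which topics are new.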

Once the chatbot on the Dialogflow platform is sufficiently trained on the new and refined training sets, it will be deployed on a website where study participants can interact with it while completing the user testing-oriented survey. The survey questions will fall into five categories, in the following order: initial behavioral intentions, user testing, post-usage learning experience domain levels, expectations/challenges, and demographics. The demographic questions and some of the post-usage learning experience domain questions are designed to collect quantitative data; the remaining learning experience domain questions and the expectations/challenges questions will collect qualitative data. Appendix A shows the tentative learning experience domains with the chatbot examined in the user testing-oriented survey.

In the interview phase, phenomenological interviews will be conducted with a small group of students. Participants will be selected through purposeful sampling informed by the quantitative analysis of the survey data, in order to explore possible inequitable learning experiences influenced by students’ demographic factors while interacting with the chatbot. Students’ self-reported elaborations on the challenges they experienced will also be used to examine whether any particular positioning influenced their perceptions of the chatbot. Appendix B includes some examples of interview questions and prompts.
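The criteria for purposeful sampling are left to the preliminary quantitative analyses. As one hypothetical illustration, interview candidates could be drawn from demographic groups whose mean domain score falls notably below the overall mean. The `Respondent` fields, the `margin` threshold, and the cap of 10 interviewees are assumptions; in the study itself, the flagging rule would rest on the inferential results rather than on a raw mean gap.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Set, Tuple


@dataclass
class Respondent:
    student_id: str
    group: str       # hypothetical field, e.g., native-language group
    score: float     # one post-usage domain score, e.g., perceived ease of use
    consented: bool  # agreed to be contacted for an interview


def select_interviewees(
    respondents: List[Respondent], margin: float = 0.5, max_n: int = 10
) -> Tuple[Set[str], List[str]]:
    """Flag groups scoring more than `margin` below the overall mean, then
    return up to `max_n` consenting students from those groups, lowest first."""
    overall = mean(r.score for r in respondents)
    by_group: Dict[str, List[float]] = {}
    for r in respondents:
        by_group.setdefault(r.group, []).append(r.score)
    flagged = {g for g, s in by_group.items() if mean(s) < overall - margin}
    pool = [r for r in respondents if r.group in flagged and r.consented]
    pool.sort(key=lambda r: r.score)
    return flagged, [r.student_id for r in pool[:max_n]]


# Hypothetical survey results.
survey = [
    Respondent("s1", "English", 4.5, True),
    Respondent("s2", "English", 4.0, True),
    Respondent("s3", "Other", 2.0, True),
    Respondent("s4", "Other", 3.0, False),
    Respondent("s5", "Other", 2.5, True),
]
flagged, invitees = select_interviewees(survey)
print(flagged, invitees)  # {'Other'} ['s3', 's5']
```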

The recruited research site is located in the Southwestern U.S. and provides MOOCs for journalists’ professional development. The site launches an average of 15 courses per year, attracting students from 160 countries, and enrollment per course has ranged from 1,000 to 5,000 over the past three years. The course(s) in this study will be delivered in English, will each last four weeks, and will run in 2022 and 2023. After approval from an institutional review board is obtained, active students who make progress in the courses during the designated period will be invited to participate in this study. The study aims to recruit more than 100 students to attain adequate statistical power for the quantitative analysis of the survey data. Approximately 10 students will be recruited for interviews, with recruitment criteria determined by the preliminary quantitative data analyses.
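The paper does not report the effect size, significance level, or target power behind the goal of more than 100 survey participants. As a rough illustration only, the normal-approximation sample size for a two-sided paired comparison (such as the pre/post behavioral intention comparison) can be computed as below; the function name and the values of `d`, `alpha`, and `power` are assumptions.

```python
import math
from statistics import NormalDist


def paired_sample_size(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of complete pre/post pairs for a two-sided paired test.

    Normal approximation: n = ((z_{1 - alpha/2} + z_{power}) / d) ** 2, where d
    is the standardized mean difference of the paired scores (Cohen's d_z).
    It slightly underestimates the exact t-based sample size when n is small.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    z = NormalDist()  # standard normal distribution
    n = ((z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / d) ** 2
    return math.ceil(n)


for d in (0.2, 0.3, 0.5):
    print(d, paired_sample_size(d))  # prints 0.2 197, then 0.3 88, then 0.5 32
```

Under these illustrative assumptions, about 88 complete pairs would detect a small-to-medium effect (d = 0.3) with 80% power at α = .05, while d = 0.5 would need about 32. Dedicated tools such as G*Power compute the exact t-based values.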

3 Significance of the Study

According to a generally accepted value proposition of MOOCs, MOOCs should support everyone willing to learn through open access and the flexible features of affordable courses [2]. Because MOOC students often report that they are not sufficiently guided by instructors, course providers, or peers on how to succeed in their course activities [cf. 1, 8, 10], providing a Q&A chatbot could improve students’ learning experience by adding another tool for student support. More importantly, investigating students’ learning experiences with new technologies such as chatbots in MOOCs requires attention to multiple aspects: learning theory, the technology-enhanced learning environment, and technology acceptance. Given that a theory-agnostic research approach has arguably been pervasive in the MOOC research community because of its multidisciplinary nature [5, 9], this study will contribute to the field by identifying, on concrete theoretical grounds, which aspects to consider for inclusive learning when adopting new technologies in MOOCs. Moreover, the findings will suggest critical chatbot response design points that better serve diverse student needs in MOOCs.

References

1. Bouchet, F., Labarthe, H., Bachelet, R., Yacef, K.: Who wants to chat on a MOOC? Lessons from a peer recommender system. In: Delgado Kloos, C., Jermann, P., Pérez-Sanagustín, M., Seaton, D.T., White, S. (eds.) Digital Education: Out to the World and Back to the Campus, pp. 150–159. Springer International Publishing, Cham (2017)

2. Coughlan, T., Lister, K., Seale, J., Scanlon, E., Weller, M.: Accessible inclusive learning: foundations. In: Ferguson, R., Jones, A., Scanlon, E. (eds.) Educational Visions: Lessons from 40 Years of Innovation, pp. 51–73. Ubiquity Press, London (2019)

3. Davis, F.D.: Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340 (1989). https://doi.org/10.2307/249008

4. Garrison, D.R.: E-Learning in the 21st Century: A Community of Inquiry Framework for Research and Practice. Routledge, New York (2016)

5. Gasevic, D., Kovanovic, V., Joksimovic, S., Siemens, G.: Where is research on massive open online courses headed? A data analysis of the MOOC research initiative. Int. Rev. Res. Open Distrib. Learn. 15 (2014). https://doi.org/10.19173/irrodl.v15i5.1954

6. Han, S., Lee, M.K.: FAQ chatbot and inclusive learning in massive open online courses. Comput. Educ. 179 (2022). https://doi.org/10.1016/j.compedu.2021.104395. Article no. 104395

7. Han, S., Liu, M., Pan, Z., Cai, Y., Shao, P.: Making FAQ chatbots more inclusive: an examination of non-native English users’ interactions with new technology in massive open online courses. Revision submitted (n.d.)

8. Kasch, J., van Rosmalen, P., Kalz, M.: Educational scalability in MOOCs: analysing instructional designs to find best practices. Comput. Educ. 161 (2021). https://doi.org/10.1016/j.compedu.2020.104054. Article no. 104054

9. Kovanović, V., et al.: Exploring communities of inquiry in massive open online courses. Comput. Educ. 119, 44–58 (2018). https://doi.org/10.1016/j.compedu.2017.11.010

10. Liu, M., Zou, W., Shi, Y., Pan, Z., Li, C.: What do participants think of today’s MOOCs: an updated look at the benefits and challenges of MOOCs designed for working professionals. J. Comput. High. Educ. 32(2), 307–329 (2019). https://doi.org/10.1007/s12528-019-09234-x

11. Rubin, V.L., Chen, Y., Thorimbert, L.M.: Artificially intelligent conversational agents in libraries. Libr. Hi Tech 28, 496–522 (2010). https://doi.org/10.1108/07378831011096196

12. Shawar, B.A., Atwell, E.S.: Chatbots: are they really useful? J. Lang. Technol. Comput. Linguist. 22, 29–49 (2007)

13. Wambsganss, T., Winkler, R., Söllner, M., Leimeister, J.M.: A conversational agent to improve response quality in course evaluations. In: Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, pp. 1–9. ACM (2020)


Appendices

Appendix A

Tentative students’ learning experience domains with the chatbot.

Appendix B

All interviews will be structured as open-ended conversations about participants’ lived learning experiences with the chatbot, and I will let participant responses shape the follow-up questions. Some examples of questions and prompts that I will use are as follows:

1. What was it like to use the chatbot?
2. Can you walk me through some of your thoughts about the chatbot’s responses that you just received?
3. Can you tell me more about what you said when you described […]?
4. What thoughts stand out to you as you see the [erroneous] response here?
5. Earlier you mentioned that you were experiencing […]. Are you noticing the same thing here?
6. Some people describe […] when they see this kind of response. Are you noticing something similar? Different? In what way?
7. Can you tell me more about this response that you received here? Do you find that it helps you or interferes with what you are seeking?
8. Can you describe any feelings that arose as you used the chatbot?
9. Did you notice that the chatbot cannot respond to this kind of question? Do you think there should be a response to this kind of question?
10. I am going to observe you while you use the chatbot in this session. During the observation, I noticed […]; did you realize that you were doing […]? Can you help me understand more about […]?
11. Is there anything that you’d like to share about your experiences with the chatbot?


Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Han, S., Liu, M. (2022). Developing an Inclusive Q&A Chatbot in Massive Open Online Courses. In: Rodrigo, M.M., Matsuda, N., Cristea, A.I., Dimitrova, V. (eds) Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium. AIED 2022. Lecture Notes in Computer Science, vol 13356. Springer, Cham. https://doi.org/10.1007/978-3-031-11647-6_2


DOI: https://doi.org/10.1007/978-3-031-11647-6_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11646-9

Online ISBN: 978-3-031-11647-6

eBook Packages: Computer Science, Computer Science (R0)