Abstract

In this chapter, the authors summarize the main articles published in recent years on neuroradiology and artificial intelligence (AI), highlighting the main concepts from each one and the knowledge built over the years. Since its beginning, neuroradiology has been a field of radiology that develops alongside technological innovation. It has embraced new imaging techniques such as computed tomography and magnetic resonance imaging, which have been used to develop AI algorithms since 2010. Today, many AI models are already available on the market, making the everyday work of neuroradiologists more productive and bringing new information (especially quantitative data) to reports. By discussing these topics in the following pages, the authors hope to give a glimpse of the main applications of AI in neuroradiology, the main tasks it could aid, what is already available on the market, and the main challenges and opportunities in the near future.

1 Introduction

One approach to understanding the future of neuroradiology is to look at the past, see what has already happened, and compare it with the predictions made at the time. From this information, it is possible to attempt new predictions about the future.

A PubMed search for articles with the words “neuroradiology” and “future” in the title or abstract returned almost 200 results, from which the most interesting articles were included in this chapter. The first article in the list is the 1976 Neuroradiology editorial [1]. The author, G. Salamon, showed great enthusiasm for the future, especially regarding the new imaging technique called computed tomography (CT). Godfrey Hounsfield built the first CT scanner in 1971 and performed the first imaging of a 41-year-old patient with a brain tumor. Needless to say, this new technology changed the day-to-day lives of medical doctors, improving patient quality of life and survival in many cases.

Another article, “Neuroradiology: Past, Present, Future,” published in Radiology in 1990 by JM Taveras [2], takes us back in time. He reviewed all eras of neuroradiology: the first period of development (from 1918 to 1939, when the first international conference on cranial radiology occurred), the second period of development (from 1939 to 1972, with the introduction of computed tomography), and the modern period from 1973 to 1989 (remember that Taveras wrote the article in 1990), when magnetic resonance imaging (MRI) began to be introduced. With the incorporation of new invasive neuroimaging, diagnostic, and therapeutic methods, the subfield of interventional neuroradiology was established.

Another fascinating article is “Neuroradiology Back to the Future: Brain Imaging,” published in AJNR in 2012 [3]. Hoeffner et al. reviewed the entire path of neuroradiology up to 2012, showing how the specialty has always been connected to the emergence, adaptation, and incorporation of new technologies. In their conclusion, the authors state, “It is impossible to know (but exciting to contemplate) what developments will occur in neuroradiology in the next 100 years. Hopefully, progress will continue to lead us to increasingly less invasive, safer, faster, and more specific techniques that result in earlier diagnosis and treatment with a positive impact on patient outcome.”

If we compare the content of these articles with the current state of neuroradiology, we can see that they were correct in most of their predictions. However, some of these predictions do not match what actually happened.

One reason that may explain this is the concept of knowns and unknowns, addressed in a famous 2002 speech by Donald Rumsfeld, then US Secretary of Defense. When we form our discourse, we base it on our previous knowledge of the world, and there are many things that we do not know and therefore do not consider in our predictions, which can make them entirely wrong in the future. The framework popularized by that speech, as it is commonly presented, distinguishes four categories: (1) Known Knowns: we know of their existence and understand them; (2) Known Unknowns: we know of their existence, but do not understand them; (3) Unknown Knowns: we do not know of their existence, but understand them; and (4) Unknown Unknowns: we do not know of their existence, nor do we understand them.

In the 2014 TextOre article “Analytics, Knowns, and Unknowns” [4], the authors argued that it is precisely in these “Unknown Unknowns” that analyzing large datasets (Big Data) with artificial intelligence (machine learning and deep learning) may help us discover new relationships and hierarchies and make predictions based on them, which would not be possible without these tools.

Therefore, if artificial intelligence may help us better understand our world, why is there so much fear about it? Whenever a new technology appears, it follows a pattern called the hype cycle, which shows how the visibility of the technology behaves over time after its appearance. Initially, there is a peak of inflated expectations, when the new technology is expected to solve all problems. Next comes a trough of disillusionment, when we get frustrated because the new technology does not provide the answers or functionalities previously imagined. After that, there is a slope of enlightenment, when people begin to realize the actual applications of the new technology and the activities in which it will really make a difference. Finally, there is a plateau of productivity, in which the new technology has a well-defined role in the day-to-day life of the professionals who use it.

Magnetic resonance imaging (MRI) is a good example. Initially, MRI was expected to solve all medical problems related to diagnostic imaging, since it would be so detailed that it would give doctors the correct diagnosis and allow them to treat their patients correctly. Moreover, its images would be so easy to interpret that radiology would cease to exist as a specialty. We now know that this did not occur. On the contrary, several subspecialties in radiology have arisen precisely because of the complexity of interpreting MRI images.

As we can see by comparing Gartner’s 2020 hype cycle with the 2021 hype cycle [5], most artificial intelligence techniques (natural language processing, machine learning, and deep learning) are descending into the trough of disillusionment. Some experts predicted that these technologies would reach the plateau of productivity within two to five years, which is very encouraging.

Therefore, if we use the past to understand what led to the present state of neuroradiology and to predict its future, relying on the “Known Knowns” and discarding the fear caused by inflated expectations about new artificial intelligence (AI) technologies, it is plausible that in the coming years AI algorithms will have practical applicability in the daily routine of neuroradiologists. Neuroradiologists will then be able to make better use of their working time, whether to contact the patient or the requesting physician, and to expand their capabilities by adopting these tools in their daily tasks. This will bring important new information to reports (such as quantitative data and biomarkers), making them more efficient and accurate for patients.

In this way, radiologists will be able to better understand the “Unknown Unknowns” present in neuroradiology, growing, updating, improving, and expanding their abilities through these new technologies, and delivering more precise medicine with a positive impact for patients.

2 AI in Neuroradiology

2.1 Overview of Articles and Main CNS Subjects

A review of articles published in recent years was carried out using the terms “artificial intelligence,” “machine learning,” “deep learning,” and “neuroradiology” in the PubMed search tool. Sixty-one published papers were found, with only one published in 1996, one in 2017, and the others from 2018 onwards.

In addition to its historical value, the 1996 publication [6] describes the use of computer-aided diagnosis (CAD) to support the work of neuroradiologists. The software offered an interactive interface in which the radiologist entered data from CT and MRI images. From the entered data, the system provided a list of diagnostic hypotheses using a decision tree.

From 1996 to the present day, computing power and processing speed have increased significantly, and it has become possible to create and implement increasingly complex artificial neural networks.

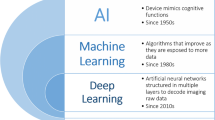

For readers who want to understand this subject in more depth, especially machine learning and deep learning, an article published in AJNR in 2018 offers an excellent summary of all areas of artificial intelligence [7]. Chapter ‘Introduction Chapter: AI and Big Data for Intelligent Health: Promise and Potential’ of this book can also be reviewed.

Another interesting article reviewed manuscripts published between 2014 and 2018 [8], discussing the 10 main areas of neuroradiology in which research used machine learning techniques. These include Alzheimer’s disease, mild cognitive impairment, brain tumors, schizophrenia, depression, Parkinson’s disease, attention deficit/hyperactivity disorder, autism spectrum disorder, epilepsy, multiple sclerosis, stroke, and traumatic brain injury. The main limitation reported in this study was the size of the study datasets, which were mostly small, with a sample (n) between 120 and 200 patients.

A 2020 systematic review published in Radiology: Artificial Intelligence [9] showed that dataset size remains one of the main limitations observed in published articles on applications of AI in neuroradiology. In this study, most (80%) of the reviewed articles had datasets smaller than 1000, and 34% used a dataset smaller than 100. These numbers limit the generalizability of the AI models used in these studies: the quality and size of the datasets significantly influence the results presented, which may not reflect the performance of the algorithm when it is tested on datasets from other institutions. Quantitative evaluation methods also varied widely among the studies, making it difficult to interpret and compare these papers, even for readers familiar with machine learning. Another problem was the lack of description of the methodology for implementing the algorithms, which may render them irreproducible. Finally, few studies provided clinical validation for their models, which limits their implementation outside the setting in which they were developed. This subject will be addressed more extensively when we discuss the review paper by Olthof et al. [10], which evaluates algorithms that are already on the market.

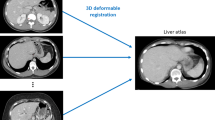

Among the other articles, many presented applications of automatic segmentation with classification, especially for tumors [11]. One of these articles, published by Rauschecker et al. [12], drew much attention for its excellent performance in providing differential diagnoses (involving 19 neurological pathologies, some common and some rare). This AI system uses deep learning techniques to analyze quantitative data extracted from images (using atlas-based registration and segmentation). These imaging features were combined with five clinical features using Bayesian inference to produce differential diagnoses ranked by probability. The algorithm performed similarly to a neuroradiologist, and its performance was better than that of general radiologists, neuroradiology residents, and general radiology residents. The dataset size was smaller than 100 (82 and 96 patients in the training and test sets, respectively), which may limit its generalizability and reduce the performance of this algorithm “in the real world.” Nevertheless, this level of accuracy in both common and rare diseases had never been demonstrated before. This article is one of the many works that cause uneasiness in the reader and rekindle doubt about the possibility of replacing neuroradiologists with AI.
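The idea of combining imaging and clinical features through Bayesian inference to rank differential diagnoses can be illustrated with a minimal sketch. It is not the implementation of Rauschecker et al. [12]: it assumes a naive Bayes model (features treated as conditionally independent given the diagnosis), and every diagnosis name, feature name, and probability below is hypothetical and chosen only for illustration.

```python
import math


def rank_differential(priors, likelihoods, findings):
    """Rank diagnoses by posterior probability given binary findings.

    priors:      {diagnosis: P(diagnosis)}
    likelihoods: {diagnosis: {feature: P(feature present | diagnosis)}}
    findings:    {feature: True or False}
    Assumption: features are conditionally independent given the diagnosis
    (naive Bayes), and all probabilities lie strictly between 0 and 1.
    """
    log_scores = {}
    for dx, prior in priors.items():
        log_p = math.log(prior)
        for feature, present in findings.items():
            p = likelihoods[dx][feature]
            log_p += math.log(p if present else 1.0 - p)
        log_scores[dx] = log_p

    # Normalize in log space so that the posteriors sum to 1.
    max_log = max(log_scores.values())
    unnormalized = {dx: math.exp(s - max_log) for dx, s in log_scores.items()}
    total = sum(unnormalized.values())
    posterior = {dx: v / total for dx, v in unnormalized.items()}
    return sorted(posterior.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: two imaging features and one clinical feature.
example_priors = {"glioma": 0.4, "abscess": 0.2, "multiple_sclerosis": 0.4}
example_likelihoods = {
    "glioma": {"enhancement": 0.9, "restricted_diffusion": 0.3, "immunocompromised": 0.1},
    "abscess": {"enhancement": 0.9, "restricted_diffusion": 0.9, "immunocompromised": 0.5},
    "multiple_sclerosis": {"enhancement": 0.3, "restricted_diffusion": 0.2, "immunocompromised": 0.1},
}
example_findings = {"enhancement": True, "restricted_diffusion": True, "immunocompromised": True}

for diagnosis, probability in rank_differential(example_priors, example_likelihoods, example_findings):
    print(f"{diagnosis}: {probability:.2f}")
```

The output is a probability-ranked list, which is the form in which such a system would present its differential diagnosis to the reader of the study.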

2.2 A Systematic Review of Applications Already Available

Olthof et al. [10] put our minds at ease: they carried out a systematic technographic review (that is, an analysis of technological development) of the possible uses of AI in neuroradiology, evaluating the algorithms available on the market and assessing their potential impact on the work of neuroradiologists. The purpose was to answer two questions: whether and how AI will influence the daily practice of neuroradiologists. The review identified all software offered on the market from 2017 to 2019, collected structured information about each product, and grouped the potential impacts into supporting, extending, and replacing neuroradiologists’ tasks. The authors identified 37 applications from 27 different companies that together offered 111 functionalities.

For the most part, these functionalities supported neuroradiologists’ activities, such as detecting and interpreting imaging findings, or extended their tasks, for example through algorithms that identify additional information in imaging examinations (such as quantitative information on pathological findings). Only a small group of applications sought to replace tasks, such as warning about a large-vessel occlusion in intracranial CT angiography.

Another important point addressed by the authors was the scientific validation of AI products, which is usually limited, even when they are approved by regulatory agencies (FDA clearance in the US and CE marking in the European Economic Area). More than half of the software products (68%) received regulatory approval from at least one of these entities. However, approximately 50% of the evaluated software did not provide information about its scientific validation. Consequently, it is not possible to determine the actual clinical impact of these tools.

Another important point highlighted in this work is that knowing the strengths and weaknesses of the application in use is crucial for improving quality, ensuring safety, and understanding artifacts related to already known mechanisms. However, no specific information about the technical details of the algorithms or the training and validation data is available in the general information on these software websites. These data are essential for analyzing the reliability and applicability of these algorithms. Without them, the AI tool becomes a black box that is hard to interpret.

As for the imaging examinations on which the algorithms were based, half used MRI and half used CT. Most algorithms target only one pathology, and the most common pathologies are mild cognitive impairment and dementia, including Alzheimer’s disease (7 applications; 19%), multiple sclerosis (4 applications; 11%), tumors (4 applications; 11%), traumatic brain injury (3 applications; 8%), Parkinson’s disease (2 applications; 5%), and intracranial aneurysm (1 application; 3%). In all three regulatory approval groups (FDA, CE, and others), ischemic stroke, intracranial hemorrhage, and dementia were more frequent than the other categories. An example of an AI algorithm for Alzheimer’s disease is shown in Fig. 1.

The functionalities presented are divided into the following categories (shown in Fig. 2):

1. Quantitative information about pathology (13 functionalities, 12%): measures the characteristics of pathological findings, as in the case of an algorithm that marks the location of a hemorrhagic stroke and calculates the volume of the hematoma to aid in diagnosis and determine the patient’s prognosis.

2. Marking of regions of interest or change detection (38 functionalities, 34%): visually marks the abnormal finding, as in the case of algorithms that automatically assess the presence of large-vessel occlusion in intracranial CTA.

3. Classification, diagnosis, or probability of outcome (19 functionalities, 17%): interprets the imaging findings and provides a standardized diagnosis or classification, as in the case of algorithms that evaluate ischemic infarcts and provide the ASPECTS.

4. Report preparation (15 functionalities, 14%): organizes the diagnostic findings into a report, for example by providing a comparative analysis with individuals of the same age group.

5. Automatic derivation of brain biomarkers (12 functionalities, 11%): compares quantitative information derived from normal or pathological findings with a reference group, for example comparing hippocampal volume with population curves to aid in the diagnosis of Alzheimer’s disease.

6. Workflow and triage organization (12 functionalities, 11%): improves the effectiveness of the diagnostic process, for example by flagging abnormal examinations that need to be reported as a priority.

7. Anatomical segmentation (2 functionalities, 2%): segments anatomical areas, as in the case of algorithms that calculate the volume of brain regions.
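Two of the functionalities listed above, quantifying a pathological finding and deriving a biomarker by comparison with a reference population, reduce to simple arithmetic once a segmentation mask exists. The sketch below is illustrative only and is not taken from any of the reviewed products: the function names are hypothetical, the mask is a plain nested list (in practice it would usually be an image array), and the reference mean and standard deviation are made-up values standing in for a normative curve at the patient's age.

```python
from math import prod


def lesion_volume_ml(mask, voxel_spacing_mm):
    """Volume in millilitres of the nonzero voxels of a 3D binary mask.

    mask:             nested list indexed as mask[slice][row][column]
    voxel_spacing_mm: voxel size along each axis, in millimetres
    """
    n_voxels = sum(1 for plane in mask for row in plane for voxel in row if voxel)
    voxel_volume_mm3 = prod(voxel_spacing_mm)
    return n_voxels * voxel_volume_mm3 / 1000.0  # 1 mL = 1000 mm^3


def z_score(value, reference_mean, reference_sd):
    """Number of standard deviations between a measurement and a reference mean."""
    return (value - reference_mean) / reference_sd


# Hypothetical hematoma mask: a 4 x 4 x 5 block of voxels inside a 10 x 10 x 10 grid.
hematoma_mask = [
    [[1 if (2 <= x < 6 and 2 <= y < 6 and 2 <= z < 7) else 0 for x in range(10)]
     for y in range(10)]
    for z in range(10)
]
print(lesion_volume_ml(hematoma_mask, (5.0, 0.5, 0.5)))

# Hypothetical hippocampal volume (mL) compared with made-up age-matched norms.
print(z_score(2.8, reference_mean=3.4, reference_sd=0.3))
```

A strongly negative z-score would indicate a volume well below the reference population, which is the kind of quantitative statement such tools add to a report.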

Most of the algorithms’ functionalities (39 applications; 54%) “support” radiologists in performing their current tasks. Some other applications “extend” the radiologists’ work by providing quantitative information that could not be extracted without these algorithms (23 applications; 32%). Only a few algorithms (10 applications; 14%) offered functions that “replace” specific tasks. A typical example of substitution is the preparation of a report (with schematic reports filled in with information). In both approved and not yet approved software, the most frequent category is “support,” followed by “extend” and “replace.”

The most numerous functionalities are directly related to the core of the radiologist’s business: finding and interpreting abnormalities, and making the correct diagnosis. The daily workflow of a neuroradiologist involves the tasks of information, indication, decision support, verification, acquisition, post-processing (of the imaging modality itself and within the PACS), prioritization, detection, segmentation, and quantification (of anatomical and pathological findings), interpretation, reporting, communication of imaging findings, reporting of critical findings, case discussion, peer review, and quality control. In general, AI will partially affect many tasks within this workflow, but others may not be changed.

According to this study [10], the main functionalities of the available software support (in detection and interpretation) and extend (with quantitative and biomarker information) the neuroradiologist’s tasks. The few algorithms that can replace physicians do so only for a limited set of tasks, such as reporting and analyzing the examination of a stroke patient. The authors concluded that AI is already a reality and is currently available in clinical practice. However, none of the applications can replace the profession as a whole, although they can substitute for some specific tasks.

Therefore, AI algorithms could be used in:

- Prioritizing studies in the PACS worklist based on the presence of pathology.

- Workflow optimization.

- Quantification of anatomical structures and comparison with a control group based on age and biomarker derivation.

- Automated pathology detection and segmentation.

- Automated classification of pathology based on specific guidelines and criteria.

- Imaging screening, longitudinal analysis, and follow-up of lesions (e.g., tumors and multiple sclerosis).
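The first item in the list above, prioritizing the worklist based on the presence of pathology, can be sketched as a simple sort. This is only an illustration under stated assumptions: the `Study` fields, the flag labels, and their ordering are hypothetical and do not correspond to any PACS interface or commercial product.

```python
from dataclasses import dataclass


@dataclass
class Study:
    accession: str        # hypothetical study identifier
    minutes_waiting: int  # time since the examination was acquired
    ai_flag: str          # hypothetical AI output: "critical", "abnormal", or "none"


# Lower rank means the study is read earlier (assumed ordering).
FLAG_RANK = {"critical": 0, "abnormal": 1, "none": 2}


def prioritize(worklist):
    """Order studies by AI flag first, then by longest waiting time."""
    return sorted(worklist, key=lambda s: (FLAG_RANK[s.ai_flag], -s.minutes_waiting))


worklist = [
    Study("A", 90, "none"),
    Study("B", 5, "critical"),
    Study("C", 30, "abnormal"),
    Study("D", 20, "critical"),
]
for study in prioritize(worklist):
    print(study.accession, study.ai_flag, study.minutes_waiting)
```

Using waiting time as the tiebreaker keeps unflagged studies from being delayed indefinitely behind one another, although a real deployment would need an explicit policy for how long a low-priority study may wait.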

This list shows that neuroradiology will not be the same in the near future with the use of AI facilitating daily work.

2.3 Main Review Articles Published

In addition to this technographic review, several other review articles have been published in the past two years.

Lui et al. [13] presented the current status and future directions of AI in neuroradiology, with graphs showing the increasing number of publications, articles, and meeting posters and abstracts on the subject. They also discussed the most promising clinical applications of AI in neuroradiology, citing many examples from the literature: classification of abnormalities (e.g., urgent findings such as hemorrhage, infarct, and mass effect); detection of lesions (e.g., metastasis); prediction of outcomes (e.g., final stroke volume, tumor type, and prognosis); post-processing tools (e.g., brain tumor volume quantification); image reconstruction (e.g., fast MRI and low-dose CT); image enhancement (e.g., noise reduction and super-resolution); and workflow (e.g., automating protocol choice and optimizing scanner efficiency).

Kaka et al. [14] focused on the main tasks: hemorrhage detection, stroke imaging, intracranial aneurysm screening, multiple sclerosis imaging, neuro-oncology, head and tumor imaging, and spine imaging. Duong et al. [15] divided the main applications into worklist prioritization, lesion detection, anatomic segmentation and volumetry, patient safety and quality improvement, precision medical education, and multimodal integration (in multiple sclerosis, epilepsy, and neurodegenerative disease), discussing many machine learning articles in each section. Kitamura et al. [16] also illustrated some applications of AI in neuroradiology and reviewed the machine learning challenges related to neuroradiology.

There are also more specific reviews, such as the two stroke imaging reviews by Yedavalli et al. [17] and Soun et al. [18], which describe the AI algorithms available for stroke imaging and summarize the literature on AI applications for acute stroke triage, surveillance, and prediction, using different methods such as CT angiography and MRI. An example of a possible application of AI in acute stroke is shown in Fig. 3.

There are also many articles related to neuro-oncology, including those on the use of radiomics and radiogenomics, which will be discussed further in Chap. 12. One recent review is dedicated to neuroradiologists, “Radiomics, machine learning, and artificial intelligence-what the neuroradiologist needs to know” [19], and explains the main principles (as shown in Fig. 4), uses, and biases related to these techniques.

3 Conclusion

The conclusion of that older article we cited [6], dated 1996, is nevertheless still very current: “The computer can function in one of two roles: as an independent interpreter that will analyze images with little or no input from the radiologist; or as an accessory interpreter that would act as an auxiliary brain for the radiologist.

Computers could assist radiologists, and radiologists could act as an eye for the computer rather than being displaced by it. This relationship represents ideal collaboration between both parties, complementing strengths and weaknesses.” This is the path that we should walk hand-in-hand.

To conclude, we use the words of the July 2019 editorial of the Journal of Neuroradiology [20], in which the authors make an almost poetic observation: “Artificial intelligence and neuroradiology cannot coexist side-by-side; they must be brought together to advance knowledge. Artificial intelligence must be a human-driven activity that shapes but does not replace the future of neuroradiology and neuroradiologists by extending our human skills to provide the best possible medical care.” With the use of AI in neuroradiology, we aim to deliver better medicine, with precision and a positive clinical impact on the lives of our patients.

Perspectives:

Neuroradiology was created and has developed alongside technological development. It could not be different with the assimilation of AI algorithms into the daily routine of neuroradiological tasks. In the near future, AI applications will help to improve neuroradiology reports and complement them with new information, delivering more accurate and personalized medicine for patients.

Core messages:

The use of AI applications in neuroradiology is already a reality. Neuroradiologists therefore need to understand how AI algorithms are built, the main biases and problems they need to be aware of, and the main improvements and additional information that these tools can add to reports to help diagnose and better treat neurological diseases.

Short expert opinion:

We believe that, in 30 years, AI algorithms will improve neuroradiologists’ performance and reports, helping them spend less time on laborious, repetitive tasks and more time on important ones, such as adding volumetric, biometric, and quantitative information to reports, with a positive impact on patients’ diagnosis, prognosis, and treatment.

References

1. Salamon G (1976) Editorial: situation and future of neuroradiology. Neuroradiology 11(1):1–2. https://doi.org/10.1007/BF00327250. PMID: 778653

2. Taveras JM (1990) Diamond Jubilee lecture. Neuroradiology: past, present, future. Radiology 175(3):593–602. https://doi.org/10.1148/radiology.175.3.2188291. PMID: 2188291

3. Hoeffner EG, Mukherji SK, Srinivasan A, Quint DJ (2012) Neuroradiology back to the future: brain imaging. AJNR Am J Neuroradiol 33(1):5–11. https://doi.org/10.3174/ajnr.A2936. Epub 2011 Dec 8. PMID: 22158930; PMCID: PMC7966158

4. TextOre Article, https://www.textore.net/blog/2018/4/23/analytics-knowns-and-unknowns

5. Gartner Hype Cycle, https://www.gartner.com/en/articles/the-4-trends-that-prevail-on-the-gartner-hype-cycle-for-ai-2021

6. Rasuli P, Rasouli F, Hammond DI, Amiri F (1996) An artificial intelligence program for the radiologic diagnosis of brain lesions. Radiographics 16(5):1207–1213. https://doi.org/10.1148/radiographics.16.5.8888400. PMID: 8888400

7. Zaharchuk G, Gong E, Wintermark M, Rubin D, Langlotz CP (2018) Deep learning in neuroradiology. AJNR Am J Neuroradiol 39(10):1776–1784. https://doi.org/10.3174/ajnr.A5543

8. Sakai K, Yamada K (2019) Machine learning studies on major brain diseases: 5-year trends of 2014–2018. Jpn J Radiol 37(1):34–72. https://doi.org/10.1007/s11604-018-0794-4

9. Yao AD, Cheng DL, Pan I, Kitamura F (2020) Deep learning in neuroradiology: a systematic review of current algorithms and approaches for the new wave of imaging technology. Radiol Artif Intel. https://doi.org/10.1148/ryai.2020190026

10. Olthof AW, van Ooijen PMA, Rezazade Mehrizi MH (2020) Promises of artificial intelligence in neuroradiology: a systematic technographic review [published online ahead of print, 2020 Apr 22]. Neuroradiology. https://doi.org/10.1007/s00234-020-02424-w

11. Mzoughi H, Njeh I, Wali A et al (2020) Deep multi-scale 3D convolutional neural network (CNN) for MRI gliomas brain tumor classification [published online ahead of print, 2020 May 21]. J Digit Imaging. https://doi.org/10.1007/s10278-020-00347-9

12. Rauschecker AM, Rudie JD, Xie L et al (2020) Artificial intelligence system approaching neuroradiologist-level differential diagnosis accuracy at brain MRI. Radiology 295(3):626–637. https://doi.org/10.1148/radiol.2020190283

13. Lui YW, Chang PD, Zaharchuk G, Barboriak DP, Flanders AE, Wintermark M, Hess CP, Filippi CG (2020) Artificial intelligence in neuroradiology: current status and future directions. AJNR Am J Neuroradiol 41(8):E52–E59. https://doi.org/10.3174/ajnr.A6681. Epub 2020 Jul 30. PMID: 32732276; PMCID: PMC7658873

14. Kaka H, Zhang E, Khan N (2021) Artificial intelligence and deep learning in neuroradiology: exploring the new frontier. Can Assoc Radiol J 72(1):35–44. https://doi.org/10.1177/0846537120954293. Epub 2020 Sep 18. PMID: 32946272

15. Duong MT, Rauschecker AM, Mohan S (2020) Diverse applications of artificial intelligence in neuroradiology. Neuroimaging Clin N Am 30(4):505–516. https://doi.org/10.1016/j.nic.2020.07.003. Epub 2020 Sep 17. PMID: 33039000; PMCID: PMC8530432

16. Kitamura FC, Pan I, Ferraciolli SF, Yeom KW, Abdala N (2021) Clinical artificial intelligence applications in radiology: neuro. Radiol Clin North Am 59(6):1003–1012. https://doi.org/10.1016/j.rcl.2021.07.002. PMID: 34689869

17. Yedavalli VS, Tong E, Martin D, Yeom KW, Forkert ND (2021) Artificial intelligence in stroke imaging: current and future perspectives. Clin Imaging 69:246–254. https://doi.org/10.1016/j.clinimag.2020.09.005. Epub 2020 Sep 21. PMID: 32980785

18. Soun JE, Chow DS, Nagamine M, Takhtawala RS, Filippi CG, Yu W, Chang PD (2021) Artificial intelligence and acute stroke imaging. AJNR Am J Neuroradiol 42(1):2–11. https://doi.org/10.3174/ajnr.A6883. Epub 2020 Nov 26. PMID: 33243898; PMCID: PMC7814792

19. Wagner MW, Namdar K, Biswas A, Monah S, Khalvati F, Ertl-Wagner BB (2021) Radiomics, machine learning, and artificial intelligence-what the neuroradiologist needs to know. Neuroradiology 63(12):1957–1967. https://doi.org/10.1007/s00234-021-02813-9. Epub 2021 Sep 18. PMID: 34537858; PMCID: PMC844969

20. Attyé A, Ognard J, Rousseau F, Ben SD (2019) Artificial neuroradiology: between human and artificial networks of neurons? J Neuroradiol 46(5):279–280. https://doi.org/10.1016/j.neurad.2019.07.001

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ferraciolli, S.F., Mota, A.L., Ayres, A.S., Polsin, L.L.M., Kitamura, F., da Costa Leite, C. (2022). Neuroradiology: Current Status and Future Prospects. In: Sakly, H., Yeom, K., Halabi, S., Said, M., Seekins, J., Tagina, M. (eds) Trends of Artificial Intelligence and Big Data for E-Health. Integrated Science, vol 9. Springer, Cham. https://doi.org/10.1007/978-3-031-11199-0_4

DOI: https://doi.org/10.1007/978-3-031-11199-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11198-3

Online ISBN: 978-3-031-11199-0

eBook Packages: Biomedical and Life Sciences (R0)