Abstract

Feature selection (FS) has proven its importance as a preprocessing for improving classification performance. The success of FS methods depends on extracting all the possible relations among features to estimate their informative amount well. Fuzzy information measures are powerful solutions that extract the different feature relations without information loss. However, estimating fuzzy information measures consumes high resources such as space and time. To reduce the high cost of these resources, this paper proposes a novel method to generate FS based on fuzzy information measures using descriptive statistics data (DS) instead of the original data (OD). The main assumption behind this is that the descriptive statistics of features can hold the same relations as the original features. Over 15 benchmark datasets, the effectiveness of using DS has been evaluated on five FS methods according to the classification performance and feature selection cost.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Nowadays, classification systems can be founded in many real-world problems of different domains such as medical, software engineering, and industrial domain [3, 10]. In real-world problems, classification data may contain a lot of features, but not all the features are significant [22]. The bad effect of irrelevant and redundant features reduces the classification performance and increases the computational cost of classification systems [21]. FS is an effective preprocessing on classification data to select the most informative feature subset by keeping only the relevant features and filtering out the undesirable features [2].

The existing FS methods can be defined as one of three types [1, 6]: filter, wrapper, and embedded. Our study focus on the filter type due to its advantage over other types as simplicity of usage, efficiency in the computational cost, and independence of classifiers [1, 15].

Information measures are popular and widely used in the filter type [21]. Estimating these measures requires discretizing the continuous features with the risk of information loss [23]. To avoid this risk, fuzzy information measures have been introduced as an extension of information measures, by mapping each feature into a fuzzy relation matrix (FRM). The matrix size expands with increasing the length of features. Thus, estimating the fuzzy information measures consumes high computational cost in the space and time resources [18]. To reduce the high cost of these resources, this paper proposes a novel method to generate FS based on fuzzy information measures using descriptive statistics data (DS) instead of the original data (OD). The main assumption behind this is that the descriptive statistics of features can hold the same relations as the original features.

In this paper, the remaining sections are designed as follows: Sect. 2 introduces the related work. Section 3 presents the proposed method. The design of the experiment is described in Sect. 4 while the results are analyzed in Sect. 5. Finally, Sect. 6 introduces the conclusion of this paper.

2 Related Work

According to the structure of FRM, FS methods based on fuzzy information measures can be divided into two categories: FS based on feature-vector relationship and FS based on feature-feature relationship. However, both categories require high computational cost for mapping the original features into a FRM to avoid the discretization process.

In the category of FS based on feature-vector, Luukka et al. introduced FS method based on fuzzy entropy, called FES, to estimate the informative amount of each feature [12]. FES depends on the relationship between the feature and its ideal vector to map the feature into a FRM. Ideal vector is a user-defined set of samples that represents the class information as possible [12, 18]. The highest informative feature (lowest entropy) is suggested for selection while the lowest informative feature (highest entropy) is suggested for denying. An improved version of FES is proposed, called FSAE [11]. FSAE depends on an additional scaling factor to consider the distance among ideal vectors with the aim to adjust the informative level of each ideal vector. Shen et al. [19] conducted a comparison among the different components of FES method to study the effect of the combination among the different components. The main limitation of the previous methods is that no consideration for important feature relations such as redundancy and complementarity.

In the second category, Hu et al. proposed FS method based on fuzzy entropy to deal with the heterogeneous data [8]. In [7], Hu et al. used a positive region of data to improve the original method. However, these methods still suffer from denying important feature relations such as redundancy and complementarity. To overcome this drawback, Yu et al. proposed FS methods based on fuzzy mutual information, called FMI-mRMR [23]. In [20], Tsai et al. conducted a detailed comparison between FS methods based on mutual and fuzzy mutual information. The experimental results confirm the outperformance of FS method based on fuzzy mutual information in terms of feature stability and classification accuracy. Salem et al., in [16], proposed an ensemble FS method, called FFS-RRD, which depends on fuzzy information and fuzzy rough measures to extract the different feature relations. In [17], Salem et al. proposed a new FS method based on fuzzy joint mutual information, called FJMI, to extract the different relations based on the joint discriminative ability, in contrast to the traditional methods which depend on the individual discriminative ability.

3 Proposed Method

Most of the current FS methods depend on the original features. In this paper, we propose using descriptive statistics to summarize the feature information and reduce the computational cost of FS methods.

3.1 Fuzzy Relation Matrix Based on the Original Data

In the following, we illustrate the FRM structure based on the original data according to the methods of feature-feature relationship and feature-vector relationship.

Feature-Feature Relationship: Suppose \(F=\{x_1, x_2, ..., x_m\}\) is a feature of m samples. The FRM between the feature and itself will be as follows:

where \(s_{i*j} \in [0,1]\) is the similarity degree between \(x_i\) and \(x_j\), where \(i,j \in \{1,2,\dots ,m\}\).

Feature-Vector Relationship: Suppose \(F=\{x_1, x_2, ..., x_m\}\) is a feature of m samples and \(V=\{y_1, y_2, ..., y_t\}\) is an ideal vector of t samples. The FRM between the feature and its ideal vector will be as follows:

where \(s_{i*j} \in [0,1]\) is the similarity degree between \(x_i\) and \(y_j\), where \(i \in \{1,2,\dots ,m\}\) and \(j \in \{1,2,\dots ,t\}\).

3.2 Descriptive Statistics

Descriptive statistics (DS) is a set of measures that describe the structure of data [4, 9]. DS has two main types of measures: central tendency and dispersion (variation). Central tendency is a single measurement that describes the set of samples via their average, midpoint, and most frequently sample. Measures of dispersion (variation) describe how much the samples vary or are close to the central tendency. In this study, we use well-known statistics measures such as minimum (min), maximum (max), mean, median, mode, and standard deviation (Std). The basic definitions of the six measures are as follows:

-

Mean (or arithmetic mean) is the average value of a set of samples.

-

Median is the midpoint value of an ordered set of samples.

-

Mode is the most frequently occurring sample in the set of samples.

-

Minimum (Min) is the lowest value in a set of samples.

-

Maximum (Max) is the highest value in a set of samples.

-

Standard deviation (Std) is a spread measure that describes how much each sample varies or is close to the mean of the set of samples.

3.3 FS Based on Descriptive Statistics

Traditional FS methods of fuzzy information measures depend on the FRM to represent the feature structure as possible. However, generating the FRM is expensive in the space and time cost. To overcome the cost limitations, we suggest generating the FRM by the descriptive statistics data instead of original data. Based on DS, the values of six statistics measures can represent the samples of feature with respect to the class label. In this way, we can reduce the size of FRM as well as cost. Figure 1 shows the main process of FS based on DS. Firstly, we calculate the descriptive statistics of the original data. Then, we apply the FS method on DS. The indexes of the selected features are used to return the selected features from the original data.

The main procedure for generating a new dataset based on DS is described in Algorithm 1. The input of the algorithm is a dataset D, which consists of a set of features F and class label C. Firstly, we initialize the output dataset Newdata as an empty list (line 1). Then, we divide the dataset into subsets of data Fsubset, according to the class label (lines 2–3). Line 4 defines an empty list Fnew to store the descriptive statistics for each feature \(f_i\) in Fsubset. The descriptive statistics of \(f_i\) are calculated, stored in Dstat, and added to Fnew list as shown in lines (5–9). After that, the class label is added to Fnew (line 10). Each Fnew of class \(c_i\) is appended to the final output Newdata. Finally, a Newdata is returned in line 13.

4 Experimental Design

The main phases of the experimental framework (data preparation, feature selection, and evaluation) are designed as shown in Fig. 2.

4.1 Data Preparation

To justify the effectiveness and efficiency of using DS, the experiment was conducted on 15 benchmark datasets collected fromFootnote 1\(^,\)Footnote 2. Table 1 shows the main properties of the used datasets. In this phase, the output is the original data (OD) and a new data of descriptive statistics (DS).

4.2 Feature Selection

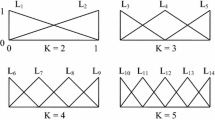

To confirm the effectiveness of using DS, five FS methods (with 50% threshold of ranked features) have been used in the experimental comparison. FS methods is divided into two categories: feature-vector methods (FES [12] and FSAE [11]) with time complexity O(mtn) and feature-feature methods (FJMI [17], FMI-mRMR [23], and FFS-RRD [16]) with time complexity \(O(dnm^2)\), where m is the number of samples in the feature, t is the number of samples in the ideal vector, n is the total number of features, and d is the number of selected information.

4.3 Evaluation

The evaluation of our experiment depends on two parts: classification performance and feature selection cost.

Classification Performance: In the experiment, three well-known classifiers are used to verify the improvement of classification performance as Naive Bayes (NB) [5], k-Nearest Neighbors (KNN, K = 3) [13], and Decision Tree (DT) [13]. The main measures of classification performance are:

-

1- Accuracy: is the percentage of the correctly predicted instances.

-

2- F-measure: is the harmonic average of the classification precision and recall.

-

3- Area under the ROC curve (AUC): AUC is the size of the area under the ROC curve. ROC is a curve graph that represents the relation between the true positive rate and the false positive rate.

Feature Selection Cost: In this paper, the experiments were conducted in a computer system with Ryzen 7 4800H (2.9 GHz) CPU and 16 GB RAM.

-

4- Space cost: the space cost is defined by the size of the FRM. The matrix size of each feature with OD is \(m*t\) for feature-vector methods while \(m^2\) for feature-feature methods, where m is the number of samples in the feature and t is the number of samples in the ideal vector. For DS, the matrix size of each feature is \((6*h)*t\) for feature-vector methods while \((6*h)^2\) for feature-feature methods, where h is the number of classes. The reduced percentage of the FRM was also computed to show the reduction size that DS achieved compared to OD as [14]:

$$\begin{aligned} MR (\%)=100-\frac{w1}{w2}*100 \end{aligned}$$(3)where w1 is the relation matrix size of the feature with DS and w2 is the relation matrix size with OD.

-

5- Runtime cost: the execution time of the FS methods represents the runtime cost. The reduced percentage of time was also computed to show the reduction time that DS achieved compared to OD as [14]:

$$\begin{aligned} TR (\%)=100-\frac{r1}{r2}*100 \end{aligned}$$(4)where r1 is the runtime of DS and r2 is the runtime of OD.

5 Results and Analysis

5.1 Accuracy

The accuracy results of NB obtained by the different FS methods are shown in Table 2. Using DS improved the average accuracy of all FS methods with OD. FES with DS (for simplicity, FES (DS)) improved FES (OD) by 0.2%. Similarly, DS improved the average accuracy of FSAE, FJMI, FMI-mRMR, and FFS-RRD with OD by 0.5%, 5.9%, 0.3%, and 3.2%, respectively.

Table 3 shows the accuracy results of KNN obtained by the different FS methods. Among five methods, DS improved the average accuracy of three methods FJMI, FMI-mRMR, and FFS-RRD with OD by 2.8%, 1.2%, and 0.2%, respectively. For methods of FES and FSAE, OS improved the average accuracy of the used methods with DS by 0.6% and 0.3%, respectively.

The accuracy results of DT obtained by the different FS methods are shown in Table 4. DS improved the average accuracy of FES, FSAE, FJMI, FMI-mRMR, and FFS-RRD with OD by 0.3%, 0.1%, 2.3%, 0.4%, and 1.9%, respectively.

5.2 F-measure

Figure 3 shows the F-measure results of the three classifiers obtained by the FS methods. According to NB, DS improved the average F-measure of FES, FSAE, FJMI, FMI-mRMR, and FFS-RRD by 1.1%, 0.3%, 5%, 0.7%, and 1%. Using KNN, FES(OD), FSAE(OD), and FFS-RRD(OD) have more average F-measure than the same methods with DS by 0.8%, 0.1%, and 0.9%, respectively. FJMI(DS) and FMI-mRMR(DS) outperformed the original methods by 3.8% and 1.4%, respectively. For DT, DS improved the average F-measure of all used FS methods except FES. In FES, using OD has more F-measure by 0.5%. The remaining methods FSAE(DS), FJMI(DS), FMI-mRMR(DS), and FFS-RRD(DS) achieved more average F-measure compared to the original methods by 0.6%, 1.5%, 0.5%, and 1.9%, respectively.

5.3 AUC

Figure 4 shows the AUC results of the used classifiers obtained by the FS methods. In FES, FS methods outperformed on NB with DS by 0.5% while outperformed on DT with OD by 0.2%. FES achieved the same result with DS and OD. Respectively, methods of FSAE, FJMI, and FMI-mRMR have been improved with DS by 1.5%, 1.9%, and 0.1% using NB, 0.1%, 4.1%, and 1.5% using KNN, and 1.9%, 2.8%, and 0.8% using DT. In FFS-RRD, the AUC was better with OD by 0.6%, 1.1%, and 0.9% using NB, KNN, and DT, respectively.

5.4 Space Cost

Table 5 reports the relation matrix size of the feature in each dataset with OD and DS. It also shows the reduction percentage of matrix size (MR) induced by DS. It is obvious that FS methods with DS have a smaller matrix size than the same methods with OD. The reduction range induced by DS is from 52% to 99.84% using FES and FSAE while from 76.96% to around 100% using the remaining methods.

5.5 Runtime Cost

Table 6 reports the runtime efficiency on the FS methods with OD and DS. It also shows the reduction percentage of time (TR) induced by DS. It is obvious that FS methods with DS have a smaller runtime than the same methods with OD. The reduction range induced by DS is from 83.9% to 99.99% using FES, 9.65% to 89.37% using FSAE, 1.36% to 76.22% using FJMI, 63.19% to 99.99% using FMI-mRMR, and 62.03% to 99.99% using FFS-RRD.

Overall, It is obvious that FS methods with DS achieved the best classification performance in most cases. It justifies that summarizing the feature information by DS helps to define the feature information better. Moreover, DS reduced the size of FRM on each feature. This is because DS maps the feature into a smaller size of samples. As a result, the cardinal value of the feature based on DS is usually less than the cardinal value of the feature based on OD. This is also the same reason of why FS methods with DS have a smaller runtime than the same methods with OD.

6 Conclusion

Fuzzy information measures are powerful solutions for developing effective FS methods. However, the estimation cost of these measures is relative to the size of input data where increasing the former depends on increasing the latter. In this paper, we have introduced a novel method to reduce the high cost of FS methods based on fuzzy information measures. To achieve that, we generated descriptive statistics data (DS) from the original data (OD) to reduce the input data of FS methods. Consequently, the cost of FS methods based on fuzzy information measures has been reduced. The effectiveness of using DS has been evaluated on five FS methods. The experimental results confirm reducing the cost of FS methods and improving the classification performance in most cases. In future work, we plan to extend our study to cover more DS measures with the aim to highlight the importance of using DS for enhancing the FS process.

References

Bolón-Canedo, V., Sánchez-Maroño, N., Alonso-Betanzos, A.: Feature selection for high-dimensional data. Progr. Artif. Intell. 5(2), 65–75 (2016). https://doi.org/10.1007/s13748-015-0080-y

Bommert, A., Sun, X., Bischl, B., Rahnenführer, J., Lang, M.: Benchmark for filter methods for feature selection in high-dimensional classification data. Comput. Stat. Data Anal. 143, 106839 (2020)

Cateni, S., Colla, V., Vannucci, M.: A method for resampling imbalanced datasets in binary classification tasks for real-world problems. Neurocomputing 135, 32–41 (2014)

Cavallaro, M., Fidell, L.: Basic descriptive statistics: commonly encountered terms and examples. Am. J. EEG Technol. 34(3), 138–152 (1994)

Cheng, J., Greiner, R.: Comparing bayesian network classifiers. arXiv preprint arXiv:1301.6684 (2013)

Guyon, I., Gunn, S., Nikravesh, M., Zadeh, L.A.: Feature Extraction: Foundations and Applications, vol. 207. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-35488-8

Hu, Q., Xie, Z., Yu, D.: Hybrid attribute reduction based on a novel fuzzy-rough model and information granulation. Pattern Recogn. 40(12), 3509–3521 (2007)

Hu, Q., Yu, D., Xie, Z.: Information-preserving hybrid data reduction based on fuzzy-rough techniques. Pattern Recogn. Lett. 27(5), 414–423 (2006)

Kaur, P., Stoltzfus, J., Yellapu, V., et al.: Descriptive statistics. Int. J. Acad. Medi. 4(1), 60 (2018)

Laradji, I.H., Alshayeb, M., Ghouti, L.: Software defect prediction using ensemble learning on selected features. Inf. Softw. Technol. 58, 388–402 (2015)

Lohrmann, C., Luukka, P., Jablonska-Sabuka, M., Kauranne, T.: A combination of fuzzy similarity measures and fuzzy entropy measures for supervised feature selection. Exp. Syst. Appl. 110, 216–236 (2018)

Luukka, P.: Feature selection using fuzzy entropy measures with similarity classifier. Exp. Syst. Appl. 38(4), 4600–4607 (2011)

Patrick, E.A., Fischer, F.P.: A generalized k-nearest neighbor rule. Inf. Control 16(2), 128–152 (1970)

Raza, M.S., Qamar, U.: Feature selection using rough set-based direct dependency calculation by avoiding the positive region. Int. J. Approx. Reason. 92, 175–197 (2018)

Saeys, Y., Inza, I., Larrañaga, P.: A review of feature selection techniques in bioinformatics. bioinformatics 23(19), 2507–2517 (2007)

Salem, O.A., Liu, F., Chen, Y.P.P., Chen, X.: Ensemble fuzzy feature selection based on relevancy, redundancy, and dependency criteria. Entropy 22(7), 757 (2020)

Salem, O.A., Liu, F., Chen, Y.P.P., Chen, X.: Feature selection and threshold method based on fuzzy joint mutual information. Int. J. Approx. Reason. 132, 107–126 (2021)

Salem, O.A., Liu, F., Chen, Y.P.P., Hamed, A., Chen, X.: Fuzzy joint mutual information feature selection based on ideal vector. Exp. Syst. Appl. 193, 116453 (2022)

Shen, Z., Chen, X., Garibaldi, J.: Performance optimization of a fuzzy entropy based feature selection and classification framework. In: 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pp. 1361–1367. IEEE (2018)

Tsai, Y.S., Yang, U.C., Chung, I.F., Huang, C.D.: A comparison of mutual and fuzzy-mutual information-based feature selection strategies. In: 2013 IEEE International Conference on, Fuzzy Systems (FUZZ), pp. 1–6. IEEE (2013)

Vergara, J.R., Estévez, P.A.: A review of feature selection methods based on mutual information. Neural Comput. Appl. 24(1), 175–186 (2013). https://doi.org/10.1007/s00521-013-1368-0

Xue, B., Zhang, M., Browne, W.N., Yao, X.: A survey on evolutionary computation approaches to feature selection. IEEE Trans. Evol. Comput. 20(4), 606–626 (2015)

Yu, D., An, S., Hu, Q.: Fuzzy mutual information based min-redundancy and max-relevance heterogeneous feature selection. Int. J. Comput. Intell. Syst. 4(4), 619–633 (2011)

Acknowledgement

This research has been supported by the National Natural Science Foundation of China (62172309).

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Salem, O.A.M., Liu, H., Liu, F., Chen, YP.P., Chen, X. (2022). Fuzzy Information Measures Feature Selection Using Descriptive Statistics Data. In: Memmi, G., Yang, B., Kong, L., Zhang, T., Qiu, M. (eds) Knowledge Science, Engineering and Management. KSEM 2022. Lecture Notes in Computer Science(), vol 13370. Springer, Cham. https://doi.org/10.1007/978-3-031-10989-8_7

Download citation

DOI: https://doi.org/10.1007/978-3-031-10989-8_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10988-1

Online ISBN: 978-3-031-10989-8

eBook Packages: Computer ScienceComputer Science (R0)