Abstract

In this paper, we study several profile estimation methods for the generalized semiparametric varying-coefficient additive model for longitudinal data that utilize the within-subject correlations. The model is flexible: it allows time-varying effects for some covariates and constant effects for others, and different link functions can be chosen so that both discrete and continuous longitudinal responses can be analyzed. We investigate the profile generalized estimating equation (GEE) approaches and the profile quadratic inference function (QIF) approach, in which the time-varying effects are estimated by local linear smoothing. Several approaches that incorporate the within-subject correlations are investigated, including the quasi-likelihood (QL), the minimum generalized variance (MGV), the quadratic inference function, and the weighted least squares (WLS). The proposed estimation procedures can accommodate flexible sampling schemes. These methods provide a unified approach that works well for discrete as well as continuous longitudinal responses. The finite sample performances of these methods are examined through Monte Carlo simulations under various correlation structures for both discrete and continuous longitudinal responses. The simulation results show that utilizing the within-subject correlations improves efficiency over the working independence approach, and they compare the performances of the different approaches.


Keywords

- Discrete and continuous longitudinal responses

- Generalized estimating equations

- Generalized semiparametric varying-coefficient additive model

- Local linear smoothing

- Quadratic inference function

- Quasi-likelihood approach

- Within-subject correlation

1 Introduction

Repeated measurements on the same individuals over time are common in medical and public health research. In AIDS clinical trials, for example, the viral load and CD4 cell counts, which are considered surrogate endpoints for HIV disease progression and HIV transmission to others, are measured repeatedly over the course of the study for trial participants. Repeated measurements during longitudinal follow-up often display temporal effects and are correlated. We investigate several estimation methods for analyzing longitudinal data under the generalized semiparametric varying-coefficient additive model by incorporating the within-subject correlations.

Suppose that there is a random sample of n subjects. For the ith subject, let \(Y_i(t)\) be the response at time t, and let \(X_i(t)\) and \(Z_i(t)\) be possibly time-dependent covariates of dimensions p + 1 and q, respectively, over the time interval [0, τ], where τ is the end of follow-up. Let \(\mu_i(t) = E\{Y_i(t)\mid X_i(t), Z_i(t)\}\) be the conditional expectation of \(Y_i(t)\) given \(X_i(t)\) and \(Z_i(t)\) at time t. The generalized semiparametric regression model postulates that

$$g\{\mu_i(t)\} = \alpha^T(t)X_i(t) + \beta^TZ_i(t), \tag{1}$$

for 0 ≤ t ≤ τ, where g(⋅) is a known link function, α(t) is a (p + 1)-dimensional vector of unspecified functions, and β is a q-dimensional vector of unknown parameters. The superscript T denotes the transpose of a vector or matrix. When the link function g(⋅) is the identity function, model (1) is known as the semiparametric additive model. When the link function is the natural logarithm and \(X_i(t) = 1\), model (1) is known as the proportional means model. Setting the first component of \(X_i(t)\) to 1 gives a nonparametric baseline function. Under model (1), the effects of some covariates are constant while others are time-varying. Model (1) is more flexible than the parametric regression model, in which all regression coefficients are time-independent, and more desirable for model building than the nonparametric regression model, in which every covariate effect is an unspecified function of time. Different link functions can be selected to provide a rich family of models for longitudinal data, so that both categorical and continuous longitudinal responses can be modeled. For example, the identity and logarithm link functions can be used for continuous responses, while the logit link function can be used for binary responses.
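As a small illustration of how the link function enters model (1), the sketch below evaluates \(\mu_i(t) = g^{-1}\{\alpha^T(t)X_i(t) + \beta^TZ_i(t)\}\) under the identity, log, and logit links. The function name `conditional_mean` and the coefficient functions and covariate values in the example are hypothetical choices made for illustration. They are not part of the method.

```python
import numpy as np

# Inverse links mu = g^{-1}(eta) for the links discussed in the text.
INVERSE_LINKS = {
    "identity": lambda eta: eta,
    "log": np.exp,                                    # log link: mu = exp(eta) > 0
    "logit": lambda eta: 1.0 / (1.0 + np.exp(-eta)),  # logit link: mu in (0, 1)
}


def conditional_mean(t, x, z, alpha, beta, link="identity"):
    """Evaluate mu(t) = g^{-1}{alpha(t)^T x + beta^T z} under model (1).

    alpha: callable returning the (p+1)-vector alpha(t)
    beta:  q-vector of constant effects
    """
    eta = np.dot(alpha(t), x) + np.dot(beta, z)  # linear predictor
    return INVERSE_LINKS[link](eta)


# Hypothetical coefficient functions and covariate values.
alpha = lambda t: np.array([np.sqrt(t), np.sin(t)])
beta = np.array([0.5, 1.0])
x = np.array([1.0, 0.3])  # first component 1 gives a nonparametric baseline
z = np.array([0.2, 0.0])
for link in INVERSE_LINKS:
    print(link, conditional_mean(1.0, x, z, alpha, beta, link))
```

The linear predictor is the same for every link. Only the final transformation changes, which is why one estimation framework can cover both discrete and continuous responses.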

The semiparametric additive model for longitudinal data has been studied extensively for decades. Existing approaches include nonparametric kernel smoothing by Hoover et al. (1998), joint modeling of longitudinal responses and sampling times by Martinussen and Scheike (1999) and Lin and Ying (2001), backfitting by Zeger and Diggle (1994) and Wu and Liang (2004), and profile kernel smoothing by Sun and Wu (2005). Fan and Li (2004) considered the profile local linear approach and joint modeling for partially linear models. Hu et al. (2004) showed that, for partially linear models, backfitting is less efficient than the profile kernel method. Sun et al. (2013) investigated the generalized semiparametric additive model (1) using the local linear profile estimation method. These estimation and inference procedures are derived without accounting for the within-subject correlations of the longitudinal responses, an approach known as working independence. Estimation methods under working independence are valid and yield asymptotically unbiased estimators.

Correlation among repeated measurements on the same subject often exists in longitudinal or clustered data, and incorporating such within-subject correlation into the estimation procedure can improve efficiency. Liang and Zeger (1986) introduced the idea of using a working correlation matrix with a small set of nuisance parameters to avoid specifying the correlation between measurements within a cluster. When \(X_i(t)\equiv 1\), Severini and Staniswalis (1994) and Lin and Carroll (2001a,b) estimated α(t) by the kernel method, ignoring the within-subject correlation, while estimating β by weighted least squares, accounting for the within-subject correlation. Chen and Jin (2006) studied the method of generalized estimating equations by modeling the within-cluster correlation. Using a piecewise local polynomial approximation of α(t), they showed that the weighted least squares estimator of β achieves semiparametric efficiency. Fan et al. (2007) proposed a profile local linear approach that imposes a correlation structure on the longitudinal data for improved efficiency. They also proposed two methods for estimating the within-subject correlation: maximizing the quasi-likelihood (QL) and minimizing the generalized variance of the estimator of β (MGV). Following the generalized method of moments of Hansen (1982), Qu et al. (2000) proposed the quadratic inference function (QIF) method, which represents the inverse of the working correlation matrix by a linear combination of basis matrices. Song et al. (2009), Madsen et al. (2011), and Tang et al. (2019) studied a mean-correlation parametric regression method for a family of discrete longitudinal responses. They assumed that the marginal distributions of the longitudinal responses follow an exponential family distribution and that the joint distribution of the discrete responses from the same subject is modeled by a copula. These approaches do not allow for time-varying covariate effects.

Semiparametric statistical modeling of discrete longitudinal responses beyond the marginal approach has been understudied. We investigate several profile estimation methods for the generalized semiparametric varying-coefficient additive model (1) that incorporate the within-subject correlations, including the profile generalized estimating equation (GEE) approaches and the quadratic inference function approach. These methods provide a unified approach that works well for discrete as well as continuous longitudinal responses. Different methods for estimating the within-subject correlations, such as the QL and MGV methods and a newly proposed profile weighted least squares (WLS) approach, fall under the umbrella of profile GEE approaches. The performances of these methods are examined through extensive simulation studies under a variety of models and within-subject correlation structures.

The rest of the paper is organized as follows. The profile GEE estimation using fixed working covariance matrices is presented in Sect. 2.1, and the methods for estimating the correlations are described in Sect. 2.2. An alternative profile estimation of model (1) via the quadratic inference function is proposed in Sect. 2.3. The computational algorithms of the proposed procedures are summarized in Sect. 2.4, and the choices of kernel function, bandwidth, and link function are discussed in Sect. 2.5. Section 3 presents simulation studies that evaluate the finite sample performances of the different methods. Results for continuous longitudinal responses are presented in Sect. 3.1, and results for discrete longitudinal responses are given in Sect. 3.2. Some concluding remarks are given in Sect. 4.

2 Profile GEE Estimation Procedures

This section presents several profile estimation methods for the generalized semiparametric varying-coefficient additive model (1) by incorporating the within-subject correlations and the approaches for estimating the within-subject correlations. Choices of kernel function, bandwidth, and link function are also discussed.

2.1 Model Estimation Using Fixed Working Covariance Matrices

Suppose that the longitudinal response \(Y_i(t)\) and the possibly time-dependent covariates \(X_i(t)\) and \(Z_i(t)\) are observed at the sampling times \(T_{i1}< T_{i2}< \cdots <T_{iJ_i}\), where \(J_i\) is the total number of observations on the ith subject. Let \(Y_{ij} = Y_i(T_{ij})\), \(X_{ij} = X_i(T_{ij})\) and \(Z_{ij} = Z_i(T_{ij})\). Let \(Y_i=(Y_{i1},\cdots ,Y_{iJ_i})^T\) be the vector of responses for individual i. Similarly, define \(X_i=(X_{i1},\cdots ,X_{iJ_i})^T\), \(Z_i=(Z_{i1},\cdots ,Z_{iJ_i})^T\) and \(T_i=(T_{i1},\cdots ,T_{iJ_i})\). The sampling times \(\{T_{ij}, j = 1,\ldots,J_i\}\) vary among individuals under random designs, while they do not depend on i under fixed designs. We propose a kernel-assisted profile method that estimates the nonparametric functions α(t) and the parametric coefficients β under model (1) while taking the within-subject correlations into consideration.

For given β, let \(\alpha (t)=\alpha (t_0)+\dot \alpha (t_0)(t-t_0)+O((t-t_0)^2)\) be the first-order Taylor expansion of α(⋅) for \(t \in {\mathcal {N}}_{t_0}\), a neighborhood of \(t_0\), where \(\dot \alpha (t_0)\) is the derivative of α(t) at \(t = t_0\). Denote \({\alpha }^*(t_0)=({\alpha }^T(t_0),\dot { \alpha }^T(t_0))^T\) and \({X}^*_i(t,t-t_0)=(1,t-t_0)^T\otimes {X}_i(t)\), where ⊗ is the Kronecker product. This ordering matches that of \(\alpha^*(t_0)\), so that \(\alpha^{*T}(t_0)X^*_i(t,t-t_0) = \alpha^T(t_0)X_i(t) + (t-t_0)\dot\alpha^T(t_0)X_i(t)\). Then for \(t \in {\mathcal {N}}_{t_0}\), model (1) can be approximated by

$$g\{\mu_i(t)\} \approx \alpha^{*T}(t_0)X^*_i(t,t-t_0) + \beta^TZ_i(t). \tag{2}$$

Let \({X}^*_{ij}(t_0)=(1,T_{ij}-t_0)^T\otimes {X}_{ij}\), \(j = 1,\ldots,J_i\). The approximated conditional expectation of \(Y_{ij}\) for \(T_{ij}\in {\mathcal {N}}_{t_0}\) is given by \(\mu ^*_{ij} (t_0)= {\mu }\{{\alpha }^{*T}(t_0) {X}^*_{ij}(t_0)+{\beta }^TZ_{ij}\}\), where \(\mu(\cdot) = g^{-1}(\cdot)\). Denote \(\dot {\mu }_{ij}^*(t_0)= \dot {\mu }\{{\alpha }^{*T}(t_0) {X}^*_{ij}(t_0)+{\beta }^TZ_{ij}\}\), where \(\dot {\mu }(\cdot )\) is the first derivative of μ(⋅). Let \(\mu _i^*(t_0)=(\mu ^*_{i1}(t_0),\cdots ,\mu ^*_{iJ_i}(t_0))^T\). Let \(X^*_{i}(t_0)\) denote the \(2(p + 1)\times J_i\) matrix whose jth column is \({X}^*_{ij}(t_0)\), \(j = 1,\ldots,J_i\).

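Let K(⋅) be a nonnegative kernel function and \(h = h_n > 0\) a bandwidth parameter. Let \(K_{ih}(t_0) = \mathrm{diag}\{K_h(T_{ij} - t_0), j = 1,\ldots,J_i\}\) be the \(J_i\times J_i\) diagonal matrix with \(K_h(T_{ij} - t_0)\), \(j = 1,\ldots,J_i\), on the diagonal and zeros elsewhere, where \(K_h(\cdot) = K(\cdot/h)/h\). At each \(t_0\) and for fixed β, we consider the following local linear estimating function for \(\alpha^*(t_0)\):

$$U_\alpha(\alpha^*;\beta,t_0) = n^{-1}\sum_{i=1}^n X^*_i(t_0)\,\Delta^*_i(t_0)\,K^{1/2}_{ih}(t_0)\,V^{-1}_{1i}\,K^{1/2}_{ih}(t_0)\,\{Y_i - \mu^*_i(t_0)\}, \tag{3}$$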

where \(\Delta _i^*(t_0)=\mathrm{diag}\{\dot {\mu }_{ij}^* (t_0), j=1,\ldots ,J_i\}\) and \(V^{-1}_{1i}\) is the inverse of the working covariance matrix for estimating \(\alpha^*(t_0)\). The solution to the equation \(U_\alpha(\alpha^*;\beta,t_0) = 0\) is denoted by \(\tilde {{\alpha }}^*(t_0,\beta )\), and its first p + 1 components are denoted by \(\tilde {\alpha }(t_0,\beta )\).
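For the identity link with \(V_{1i} = I\), \(\Delta^*_i(t_0) = I\), and the equation \(U_\alpha(\alpha^*;\beta,t_0) = 0\) becomes a kernel-weighted least squares problem with a closed-form solution. The sketch below assumes these two simplifications and the Epanechnikov kernel, and it pools the observations of all subjects into arrays. Each design row is stacked as \((X_{ij}^T, (T_{ij}-t_0)X_{ij}^T)\), so the first p + 1 entries of the solution estimate \(\alpha(t_0)\) and the remaining entries estimate \(\dot\alpha(t_0)\). The function names are hypothetical.

```python
import numpy as np


def epanechnikov(u):
    """Epanechnikov kernel K(u) = 0.75 (1 - u^2)_+."""
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)


def local_linear_alpha_star(t0, T, X, Z, Y, beta, h):
    """Root of U_alpha(alpha*; beta, t0) = 0 for the identity link with V_1i = I.

    T: (N,) pooled sampling times; X: (N, p+1); Z: (N, q); Y: (N,).
    Returns alpha*(t0) = (alpha(t0)^T, alpha_dot(t0)^T)^T of length 2(p+1).
    """
    d = T - t0
    Xstar = np.hstack([X, d[:, None] * X])  # rows (X_ij^T, (T_ij - t0) X_ij^T)
    w = epanechnikov(d / h) / h              # K_h(T_ij - t0)
    resid = Y - Z @ beta                     # remove the parametric part for fixed beta
    # Identity link: Delta* = I, so the estimating equation is linear in alpha*.
    lhs = Xstar.T @ (w[:, None] * Xstar)
    rhs = Xstar.T @ (w * resid)
    return np.linalg.solve(lhs, rhs)


# Noise-free check with linear alpha(t) = (1 + 2t, -0.5t), which local linear fits reproduce.
rng = np.random.default_rng(0)
N = 400
T = rng.uniform(0.0, 5.0, N)
X = np.column_stack([np.ones(N), rng.normal(size=N)])
Z = rng.normal(size=(N, 2))
beta = np.array([0.5, 1.0])
Y = (1 + 2 * T) + (-0.5 * T) * X[:, 1] + Z @ beta
a_star = local_linear_alpha_star(2.0, T, X, Z, Y, beta, h=1.0)
print(a_star)  # approximately [5, -1, 2, -0.5]: alpha(2), then its derivative
```

For a nonidentity link, the same equation is nonlinear in \(\alpha^*\) and would be solved iteratively (e.g., by Newton–Raphson).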

Let \(\tilde \mu _{ij}(\beta )=\mu \{\tilde \alpha ^T(T_{ij}, \beta )X_{ij}+\beta ^TZ_{ij}\}\) and \(\tilde \mu _i(\beta )=(\tilde \mu _{i1}(\beta ),\ldots ,\tilde \mu _{iJ_i}(\beta ))^T\). The profile weighted least squares estimator \(\hat \beta \) is obtained by minimizing the following profile least squares function:

$$\ell_\beta(\beta) = \sum_{i=1}^n \{Y_i - \tilde\mu_i(\beta)\}^TV^{-1}_{2i}\{Y_i - \tilde\mu_i(\beta)\}, \tag{4}$$

where \(V^{-1}_{2i}\) is the inverse of the working covariance matrix for estimating β.

Let \(A_{ij}={\partial \tilde {\alpha }^T(T_{ij},\beta )}/{\partial \beta }\) be the derivative of \(\tilde {\alpha }^T(T_{ij},\beta )\) with respect to β. It is a \(q\times(p+1)\) matrix whose kth row is the partial derivative of \(\tilde {\alpha }^T(T_{ij},\beta )\) with respect to the kth component \(\beta_k\) of β, \(1\le k\le q\). Let \(\frac {\partial \tilde {\alpha }^T(T_{i},\beta )}{\partial \beta } =\big ( \frac {\partial \tilde {\alpha }^T(T_{i1},\beta )}{\partial \beta },\cdots ,\frac {\partial \tilde {\alpha }^T(T_{iJ_i},\beta )}{\partial \beta }\big )\), and let \(\tilde X_i=\mathrm{diag}\big \{X_{ij}, j=1,\ldots ,J_i\big \}\) be the \(J_i(p+1)\times J_i\) block-diagonal matrix whose jth diagonal block is \(X_{ij}\). Then \(\frac {\partial \tilde {\alpha }^T(T_{i},\beta )}{\partial \beta } \tilde X_i =\big ( \frac {\partial \tilde {\alpha }^T(T_{i1},\beta )}{\partial \beta }X_{i1},\cdots ,\frac {\partial \tilde {\alpha }^T(T_{iJ_i},\beta ) }{\partial \beta }X_{iJ_i}\big )\) is a \(q\times J_i\) matrix.

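Setting the derivative of \(\ell_\beta(\beta)\) with respect to β to zero gives the estimating equation \(U_\beta(\beta) = 0\) with the score function

$$U_\beta(\beta) = \sum_{i=1}^n\Big\{\frac{\partial\tilde\alpha^T(T_i,\beta)}{\partial\beta}\tilde X_i + \tilde Z_i\Big\}\tilde\Delta_iV^{-1}_{2i}\{Y_i - \tilde\mu_i(\beta)\}, \tag{5}$$

where \(\tilde \Delta _i =\mathrm{diag}\{\dot {\tilde \mu }_{ij}, j=1,\ldots ,J_i\}\), \(\dot {\tilde \mu }_{ij} = \dot {\mu }\{\tilde \alpha ^T(T_{ij}, \beta )X_{ij}+\beta ^TZ_{ij}\}\), and \(\tilde Z_i= \big (Z_{i1},\cdots ,Z_{iJ_i}\big )\) is a \(q\times J_i\) matrix.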

For given working covariance matrices \(V_{1i}\) and \(V_{2i}\), the profile GEE estimator \(\hat \beta \) of β is obtained by solving the estimating equation \(U_\beta(\beta) = 0\). The profile GEE estimator of α(t) is given by \(\hat \alpha (t)=\tilde {\alpha }(t,\hat \beta )\).

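Note that \(\partial\tilde\alpha^T(t,\beta)/\partial\beta\) consists of the first p + 1 columns of \(\partial\tilde\alpha^{*T}(t,\beta)/\partial\beta\). Next we show that \(\partial\tilde\alpha^{*T}(t,\beta)/\partial\beta\) can be expressed in terms of the partial derivatives of \(U_\alpha(\alpha^*;\beta,t)\) at \(\alpha^*=\tilde {\alpha }^*(t,\beta)\). Specifically, since \(U_\alpha (\tilde {\alpha }^*(t,\beta );\beta ,t)\equiv {\mathbf {0}}_{2(p+1)}\) by (3), differentiating this identity with respect to β shows that \(\tilde {\alpha }^*(t,\beta )\) satisfies

$$\frac{\partial\tilde\alpha^{*T}(t,\beta)}{\partial\beta}H^T_{\alpha}(t,\beta) + H^T_{\beta}(t,\beta) = \mathbf{0}_{q\times 2(p+1)}.$$

Therefore,

$$\frac{\partial\tilde\alpha^{*T}(t,\beta)}{\partial\beta} = -H^T_{\beta}(t,\beta)\{H^T_{\alpha}(t,\beta)\}^{-1},$$

where

$$H_{\alpha}(t,\beta) = \frac{\partial U_\alpha(\alpha^*;\beta,t)}{\partial\alpha^{*T}}\Big|_{\alpha^*=\tilde\alpha^*(t,\beta)}$$

is the \(2(p+1)\times 2(p+1)\) Jacobian with respect to \(\alpha^*\), and

$$H_{\beta}(t,\beta) = \frac{\partial U_\alpha(\alpha^*;\beta,t)}{\partial\beta^{T}}\Big|_{\alpha^*=\tilde\alpha^*(t,\beta)}$$

is the \(2(p+1)\times q\) Jacobian with respect to β.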

Under the identity link in model (1), \(\tilde {\alpha }^*(t,\beta )\) and \(\hat \beta \) can be solved explicitly as the roots of the score functions (3) and (5), respectively. When there are no explicit solutions, the Newton–Raphson iterative algorithm can be used to solve the equations. The estimation procedure iteratively updates estimates of the nonparametric component \(\tilde \alpha ^*(t,\beta )\) and the parametric component \(\hat \beta \) until convergence. We denote the first p + 1 components of the convergent \(\tilde \alpha ^*(t,\beta )\) as \(\hat \alpha (t)\).
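To illustrate the explicit solution under the identity link, the sketch below treats the special case \(X_{ij}\equiv 1\) (a nonparametric baseline only) with \(V_{1i} = I\). In this case \(\tilde\alpha(T_{ij},\beta) = s_{ij}^T(Y - Z\beta)\) for a local linear smoother row \(s_{ij}\) that does not depend on β, so \(\partial\tilde\alpha(T_{ij},\beta)/\partial\beta = -Z^Ts_{ij}\). The score equation \(U_\beta(\beta) = 0\) then becomes a generalized least squares problem in the smoothed quantities \((I-S)Y\) and \((I-S)Z\). The function names, the Epanechnikov kernel, and the data layout (observations pooled and ordered by subject) are assumptions of this sketch.

```python
import numpy as np


def epanechnikov(u):
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)


def smoother_matrix(T, h):
    """S with alpha_tilde(T_k) = S[k] @ y: local linear fits at every pooled time."""
    N = len(T)
    S = np.empty((N, N))
    for k, t0 in enumerate(T):
        d = T - t0
        w = epanechnikov(d / h) / h
        D = np.column_stack([np.ones(N), d])   # local design (1, T - t0)
        M = D.T @ (w[:, None] * D)
        S[k] = np.linalg.solve(M, D.T * w)[0]  # row that returns the local level alpha(t0)
    return S


def profile_gee_identity(T, Z, Y, subject, V2_inv, h):
    """Profile estimator of beta with identity link, X_ij = 1 and V_1i = I.

    Observations are pooled and ordered by subject (and by time within subject);
    V2_inv maps each subject id to the inverse working covariance of its responses.
    """
    S = smoother_matrix(T, h)
    I_minus_S = np.eye(len(T)) - S
    Yc, Zc = I_minus_S @ Y, I_minus_S @ Z       # (I - S) Y and (I - S) Z
    q = Z.shape[1]
    lhs, rhs = np.zeros((q, q)), np.zeros(q)
    for i in np.unique(subject):
        idx = subject == i
        W = V2_inv[i]
        lhs += Zc[idx].T @ W @ Zc[idx]
        rhs += Zc[idx].T @ W @ Yc[idx]
    beta_hat = np.linalg.solve(lhs, rhs)        # root of U_beta(beta) = 0
    alpha_hat = S @ (Y - Z @ beta_hat)          # alpha_hat(T_ij) = alpha_tilde(T_ij, beta_hat)
    return beta_hat, alpha_hat


# Noise-free check: a linear baseline is reproduced by the smoother, so beta is recovered.
rng = np.random.default_rng(1)
n, J = 60, 5
subject = np.repeat(np.arange(n), J)
T = np.sort(rng.uniform(0.0, 10.0, (n, J)), axis=1).ravel()
Z = rng.normal(size=(n * J, 2))
beta_true = np.array([1.0, 2.0])
Y = 0.5 + 0.3 * T + Z @ beta_true
V2_inv = {i: np.eye(J) for i in range(n)}      # working independence, for illustration
beta_hat, alpha_hat = profile_gee_identity(T, Z, Y, subject, V2_inv, h=2.0)
print(beta_hat)
```

Replacing the identity blocks in `V2_inv` by the inverses of \(\hat A_iR_i(\hat\theta)\hat A_i\) gives a correlated working covariance without changing the rest of the code.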

Let \(\dot {\hat {\mu }}_{ij}=\dot \mu \{\hat {\alpha }^T(T_{ij}){X}_{ij}+\hat\beta ^T{Z}_{ij}\}\) and \(\hat \Delta _i=\mathrm{diag}\{\dot {\hat {\mu }}_{ij}\}\). Define \(\hat E_{11}(t)=n^{-1}\sum _{i=1}^{n} X_{i}\hat \Delta _i K^{1/2}_{ih}(t)V^{-1}_{1i} K^{1/2}_{ih}(t)\hat \Delta _i X_{i}^T\) and \(\hat E_{12}(t)= n^{-1}\sum _{i=1}^{n} X_{i}\hat \Delta _i K^{1/2}_{ih}(t)V^{-1}_{1i} K^{1/2}_{ih}(t)\hat \Delta _i Z_{i}^T\). Let \(\hat B_{ij}=-\hat E^T_{12}(T_{ij})\hat E^{-1}_{11}(T_{ij})X_{ij}+Z_{ij}\) and \(\hat B_i=(\hat B_{i1},\cdots ,\hat B_{iJ_i})^T\). Following the derivations in Fan et al. (2007), we estimate the variance of \(\hat \beta \) by \( \hat P^{-1} \hat D \hat P^{-1}\) for given covariance matrices \(V_{1i}\) and \(V_{2i}\), where

and

2.2 Estimation of the Within-Subject Covariance Matrix

The conditional within-subject correlation of the longitudinal responses \(Y_i(\cdot)\) at times s, t ∈ [0, τ] can be measured by the Pearson correlation coefficient \(\rho_i(s,t) = \mathrm{Corr}\{Y_i(s), Y_i(t)\mid X_i(\cdot), Z_i(\cdot)\} = \mathrm{Cov}\{Y_i(s), Y_i(t)\mid X_i(\cdot), Z_i(\cdot)\}/\{\sigma_i(s)\sigma_i(t)\}\), where \(\sigma_i(t)\) is the conditional standard deviation of \(Y_i(t)\) given \(X_i(t)\) and \(Z_i(t)\), 0 ≤ t ≤ τ. For simplicity, we assume that neither \(\sigma_i(t)\) nor \(\rho_i(s,t)\) depends on the covariates \(X_i(\cdot)\) and \(Z_i(\cdot)\), and we write σ(t) and ρ(s, t) in place of \(\sigma_i(t)\) and \(\rho_i(s,t)\), respectively. In practice, the correlation structure ρ(s, t) is often unknown or complex, and a working correlation is employed by assuming a correlation model for ρ(s, t). Working independence corresponds to assuming ρ(s, t) = 0 for s ≠ t. Other commonly used correlation models include the following:

- the compound symmetry or exchangeable structure (Exchangeable), with ρ(s, t) = θ, |θ| < 1;
- a generalized first-order autoregressive structure (AR(1)), with \(\rho(s,t) = \theta^{|s-t|}\), 0 < θ < 1, which generalizes the AR(1) model in time series to allow unequally spaced times;
- a generalized first-order autoregressive moving-average structure (ARMA(1,1)), with \(\rho(s,t) = \gamma\theta^{|s-t|}\) for s ≠ t, where |γ| < 1 and 0 < θ < 1.

Fan et al. (2007) considered more complex correlation structures by embedding the working correlation into a collection of correlation families \(\rho_0(s,t,\theta_0),\ldots,\rho_m(s,t,\theta_m)\):

$$\rho(s,t,\theta) = b_0\rho_0(s,t,\theta_0) + b_1\rho_1(s,t,\theta_1) + \cdots + b_m\rho_m(s,t,\theta_m),$$

where \(\theta = (\theta_0, b_0, \theta_1, b_1, \ldots, b_m, \theta_m)\) and \(b_0 + \cdots + b_m = 1\) with all \(b_i \ge 0\).

Let \(\rho_k(s,t,\theta)\), θ ∈ Θ, be the working correlation function for \(Y_i(t)\), 0 ≤ t ≤ τ, for k = 1, 2. We decompose the working covariance matrix \(V_{ki}\) of \((Y_{i1},\cdots ,Y_{iJ_i})\) as

$$V_{ki} = A_iR_{ki}(\theta)A_i, \tag{10}$$

where \(A_i = \mathrm{diag}\{\sigma(T_{ij}), j = 1,\ldots,J_i\}\), and \(R_{ki}(\theta)\) is the working correlation matrix of \((Y_{i1},\cdots ,Y_{iJ_i})\) under the working correlation model \(\rho_k(s,t,\theta)\) for k = 1, 2.

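Examples of the correlation matrices \(R_i(\theta)\) of \((Y_{i1},\cdots ,Y_{iJ_i})\) at the measurement times \(t_1,\ldots,t_4\) (\(J_i = 4\)) are shown below for the Exchangeable, AR(1), and ARMA(1,1) correlations. The ARMA(1,1) correlation is written as \(\gamma\theta^{|s-t|}\) for s ≠ t, and \(d_{jk} = |t_j - t_k|\):

$$R_i^{\rm Exch}(\theta)=\begin{pmatrix}1&\theta&\theta&\theta\\ \theta&1&\theta&\theta\\ \theta&\theta&1&\theta\\ \theta&\theta&\theta&1\end{pmatrix},$$

$$R_i^{\rm AR(1)}(\theta)=\begin{pmatrix}1&\theta^{d_{12}}&\theta^{d_{13}}&\theta^{d_{14}}\\ \theta^{d_{12}}&1&\theta^{d_{23}}&\theta^{d_{24}}\\ \theta^{d_{13}}&\theta^{d_{23}}&1&\theta^{d_{34}}\\ \theta^{d_{14}}&\theta^{d_{24}}&\theta^{d_{34}}&1\end{pmatrix},$$

$$R_i^{\rm ARMA(1,1)}(\gamma,\theta)=\begin{pmatrix}1&\gamma\theta^{d_{12}}&\gamma\theta^{d_{13}}&\gamma\theta^{d_{14}}\\ \gamma\theta^{d_{12}}&1&\gamma\theta^{d_{23}}&\gamma\theta^{d_{24}}\\ \gamma\theta^{d_{13}}&\gamma\theta^{d_{23}}&1&\gamma\theta^{d_{34}}\\ \gamma\theta^{d_{14}}&\gamma\theta^{d_{24}}&\gamma\theta^{d_{34}}&1\end{pmatrix}.$$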
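The following sketch builds these working correlation matrices for arbitrary (possibly unequally spaced) measurement times. It also forms a convex mixture of correlation families and assembles the working covariance \(A_iR_i(\theta)A_i\). The function names are hypothetical, and the ARMA(1,1) form uses a unit diagonal with \(\gamma\theta^{|s-t|}\) off the diagonal.

```python
import numpy as np


def exchangeable(times, theta):
    """1 on the diagonal, theta elsewhere."""
    J = len(times)
    return (1.0 - theta) * np.eye(J) + theta * np.ones((J, J))


def ar1(times, theta):
    """theta^{|t_j - t_k|}; allows unequally spaced times."""
    d = np.abs(np.subtract.outer(times, times))
    return theta ** d


def arma11(times, gamma, theta):
    """1 on the diagonal, gamma * theta^{|t_j - t_k|} off the diagonal."""
    R = gamma * ar1(times, theta)
    np.fill_diagonal(R, 1.0)
    return R


def mixture(corr_mats, weights):
    """Convex combination b_0 R_0 + ... + b_m R_m of correlation matrices."""
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must be nonnegative and sum to 1")
    return sum(b * R for b, R in zip(weights, corr_mats))


def working_covariance(sigma, R):
    """V = A R A with A = diag(sigma(T_i1), ..., sigma(T_iJ))."""
    A = np.diag(sigma)
    return A @ R @ A


times = np.array([0.0, 1.2, 2.0, 3.7])          # unequally spaced, J_i = 4
sigma = np.sqrt(0.5 * np.exp(times / 12))        # example marginal standard deviations
V = working_covariance(sigma, arma11(times, 0.85, 0.9))
print(np.round(V, 3))
```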

GEE estimators of the regression coefficients are consistent even when the true correlation matrix does not belong to the class of working correlation matrices, and they are efficient when the working correlation is correctly specified (Liang and Zeger 1986). Lin and Carroll (2000) showed that the most efficient estimation of the nonparametric component α(t) can be achieved by ignoring the within-subject correlation. However, more efficient estimation of the parametric component β is obtained by letting \(V_{2i}\) in (5) be the true covariance matrix of \(Y_i\), so that \(V^{-1}_{2i}\) is its inverse; see Lin and Carroll (2001a,b), Wang et al. (2005), and Fan et al. (2007). Thus we set \(R_{1i}(\theta)\) to be the identity matrix and focus on approaches for estimating \(A_i\) and \(R_{2i}(\theta)\). For convenience, we write ρ(s, t, θ) for \(\rho_2(s,t,\theta)\) and \(R_i(\theta)\) for \(R_{2i}(\theta)\).

2.2.1 Estimation of Marginal Variance

Let \(\hat \alpha _0(t)\) and \(\hat \beta _0\) be the estimators of α(t) and β obtained as in Sect. 2.1 by setting \(V_{ki}\) to the identity matrix for k = 1, 2 (working independence). Define the residuals \(\hat r_{ij}=Y_{ij}-\hat \mu _{ij}\), where \(\hat \mu _{ij}=g^{-1}\{\hat \alpha _0^T(T_{ij}){X}_{ij}+\hat \beta _0^TZ_{ij}\}\). Following Fan et al. (2007), when the response \(Y_i(t)\) is continuous we estimate its marginal variance by kernel smoothing:

where \(K^*_h(\cdot )=K^*(\cdot /h)/h\), \(K^*(\cdot)\) is a nonnegative kernel function, and \(h = h_n > 0\) is a bandwidth parameter.
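The following is a sketch of this kernel smoothing step. It assumes a Nadaraya–Watson form, that is, a kernel-weighted average of the squared residuals \(\hat r_{ij}^2\) around t, pooled over all subjects, with the Epanechnikov kernel for \(K^*\). Both choices are assumptions of the sketch, and the function name is hypothetical.

```python
import numpy as np


def epanechnikov(u):
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)


def marginal_variance(t, T, r, h, kernel=epanechnikov):
    """Kernel-weighted average of squared residuals around each time in t.

    Assumed Nadaraya-Watson form:
        sigma2(t) = sum_ij K*_h(T_ij - t) r_ij^2 / sum_ij K*_h(T_ij - t),
    with T and r the pooled sampling times and residuals of all subjects.
    """
    t = np.atleast_1d(t)
    W = kernel((T[None, :] - t[:, None]) / h) / h  # K*_h(T_ij - t), one row per t
    return (W @ r**2) / W.sum(axis=1)


# Residuals with variance 0.5 exp(t/12), as in simulation setting (C1).
rng = np.random.default_rng(2)
T = rng.uniform(0.0, 12.0, 20_000)
r = rng.normal(size=T.size) * np.sqrt(0.5 * np.exp(T / 12))
print(marginal_variance([3.0, 6.0, 9.0], T, r, h=1.0))
```

The estimated \(\hat\sigma(T_{ij})\), the square roots of these values, fill the diagonal of \(\hat A_i\).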

When the response \(Y_i(t)\) is discrete, the variance estimator can take a different form that reflects the particular distribution family. For example, \(\hat r_{ij}^2\) is replaced by \(\hat \mu _{ij}(1-\hat \mu _{ij})\) if \(Y_i(t)\) is a Bernoulli random variable, and by \(\hat \mu _{ij}\) if \(Y_i(t)\) is a Poisson random variable. We refer to Liang and Zeger (1986) for the relationship between the variance and the model parameters when the marginal distribution of \(Y_i(t)\) belongs to an exponential family.
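For discrete responses, the squared residuals are replaced by the variance implied by the fitted mean. Below is a minimal helper for the two families mentioned above; the name `model_based_variance` is hypothetical.

```python
import numpy as np


def model_based_variance(mu, family):
    """Variance implied by the fitted mean, used in place of r_ij^2 for discrete Y."""
    mu = np.asarray(mu, dtype=float)
    if family == "bernoulli":
        return mu * (1.0 - mu)   # Var(Y) = mu (1 - mu)
    if family == "poisson":
        return mu.copy()         # Var(Y) = mu
    raise ValueError(f"unsupported family: {family}")


print(model_based_variance([0.5, 0.2], "bernoulli"))
```

These values then enter the kernel smoother in the same position as \(\hat r_{ij}^2\).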

2.2.2 Estimation of Correlation Coefficients

We study different approaches to estimating the parameter θ of the correlation matrix R(θ). Two of them, the quasi-likelihood approach and the minimum generalized variance approach, are adapted from Fan et al. (2007), who proposed them for model (1) with the identity link function. We also propose a minimum weighted least squares approach to estimating θ.

The QL estimation of θ is obtained by maximizing the quasi-likelihood function:

where \(R_i(\theta)\) and \(\hat A_i= \mathrm{diag}\{\hat \sigma (T_{ij}), j=1,\ldots ,J_i\}\) are defined as in Eq. (10), and \(\hat r_i=(\hat r_{i1},\ldots , \hat r_{iJ_i})^T\), with \(\hat r_{ij}\) the residuals defined above, is the estimator of the error vector \(\epsilon_i\).
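The following is a minimal sketch of QL estimation of θ. It assumes the Gaussian-form quasi-likelihood \(\sum_i\{-\tfrac12\log|R_i(\theta)| - \tfrac12\hat e_i^TR_i^{-1}(\theta)\hat e_i\}\) with standardized residuals \(\hat e_i = \hat A_i^{-1}\hat r_i\), which is an assumption of this sketch. It maximizes this quantity over a grid for an AR(1) working correlation and checks the result on simulated AR(1) residuals. The grid search, the function names, and the simulation design are illustrative choices.

```python
import numpy as np


def ar1(times, theta):
    return theta ** np.abs(np.subtract.outer(times, times))


def quasi_likelihood(theta, residuals, sigmas, times, corr=ar1):
    """Assumed QL: sum_i -0.5 log|R_i| - 0.5 e_i^T R_i^{-1} e_i with e_i = A_i^{-1} r_i."""
    total = 0.0
    for r, s, t in zip(residuals, sigmas, times):
        e = r / s                                # standardized residuals
        R = corr(t, theta)
        _, logdet = np.linalg.slogdet(R)
        total += -0.5 * logdet - 0.5 * e @ np.linalg.solve(R, e)
    return total


def estimate_theta_ql(residuals, sigmas, times, grid=np.linspace(0.01, 0.99, 99)):
    """Grid-search maximizer of the quasi-likelihood over theta."""
    values = [quasi_likelihood(th, residuals, sigmas, times) for th in grid]
    return grid[int(np.argmax(values))]


# Simulated check: AR(1) residuals with theta = 0.6 at times 0, 1, ..., 5.
rng = np.random.default_rng(3)
t_obs = np.arange(6.0)
L = np.linalg.cholesky(ar1(t_obs, 0.6))
residuals = [L @ rng.standard_normal(6) for _ in range(500)]
sigmas = [np.ones(6)] * 500
times = [t_obs] * 500
theta_hat = estimate_theta_ql(residuals, sigmas, times)
print(theta_hat)
```

A one-dimensional grid keeps the sketch transparent. With several correlation parameters, a numerical optimizer would be the natural replacement.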

Let \(\Sigma _{\hat \beta }({\hat \sigma }^2, \theta )\) be the estimated covariance matrix of \(\hat \beta \) under the working correlation model ρ(s, t, θ). It depends on the estimated marginal variance \({\hat \sigma }^2\) and the correlation parameter vector θ. We define the generalized variance of \(\hat \beta \) as the determinant \(\vert \Sigma _{\hat \beta }({\hat \sigma }^2, \theta )\vert \). By Dempster (1969, Section 3.5), for any positive constant c, the volume of the ellipsoid \(\{\beta : (\hat \beta -\beta )^T\Sigma ^{-1}_{\hat \beta }({\hat \sigma }^2, \theta )(\hat \beta -\beta ) <c\}\) equals \(\pi ^{q/2} c^{q/2} \vert \Sigma _{\hat \beta }({\hat \sigma }^2, \theta )\vert ^{1/2} \big /\Gamma (\frac {q}{2}+1)\), where Γ(⋅) is the gamma function. Hence minimizing the volume of this confidence ellipsoid over θ ∈ Θ is equivalent to minimizing \(\vert \Sigma _{\hat \beta }({\hat \sigma }^2, \theta )\vert \) over θ ∈ Θ. The minimizer also does not depend on c, which can be viewed as a constant associated with a confidence level. The MGV estimator of θ proposed by Fan et al. (2007) minimizes the generalized variance of \(\hat \beta \):

$$\hat\theta_{\rm MGV} = \arg\min_{\theta\in\Theta}\vert\Sigma_{\hat\beta}(\hat\sigma^2,\theta)\vert.$$
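The ellipsoid-volume argument can be checked numerically. The sketch below computes the volume formula above and selects, among candidate values of θ, the one whose covariance estimate has the smallest determinant. The candidate covariance matrices are made up for illustration. In practice, each would be the estimated covariance \(\hat P^{-1}\hat D\hat P^{-1}\) of \(\hat\beta\) evaluated at that θ. The function names are hypothetical.

```python
import math

import numpy as np


def ellipsoid_volume(Sigma, c):
    """Volume of {b : b^T Sigma^{-1} b < c} in q dimensions."""
    q = Sigma.shape[0]
    _, logdet = np.linalg.slogdet(Sigma)
    return math.pi ** (q / 2) * c ** (q / 2) * math.exp(0.5 * logdet) / math.gamma(q / 2 + 1)


def mgv_select(cov_by_theta):
    """Candidate theta whose covariance estimate of beta_hat has the smallest determinant."""
    return min(cov_by_theta, key=lambda th: np.linalg.det(cov_by_theta[th]))


# Hypothetical covariance estimates of beta_hat at three candidate values of theta.
candidates = {
    0.3: np.array([[1.0, 0.2], [0.2, 2.0]]),
    0.6: np.array([[0.9, 0.1], [0.1, 1.6]]),
    0.9: np.array([[1.1, 0.0], [0.0, 1.5]]),
}
print(mgv_select(candidates))
```

Because c enters only through the factor \(c^{q/2}\), the ratio of volumes for two candidates is the same for every c, so the minimizer does not depend on c.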

Following the idea of the quasi-likelihood approach of Fan et al. (2007), we also estimate θ by minimizing the weighted least squares criterion:

2.3 Profile Estimation via Quadratic Inference Function

Qu et al. (2000) proposed the method of quadratic inference functions, which does not involve direct estimation of the correlation parameter. The idea is to represent the inverse of the working correlation matrix by a linear combination of basis matrices:

$$R^{-1}(\theta) = a_1M_1 + a_2M_2 + \cdots + a_KM_K,$$

where \(M_1\) is the identity matrix, \(M_2,\ldots,M_K\) are symmetric basis matrices, and \(a_1,\ldots,a_K\) are constant coefficients. The representation applies to many commonly used working correlations (Qu et al. 2000). For example, if the correlation structure is exchangeable, then R(θ) has 1's on the diagonal and θ's everywhere off the diagonal. Its inverse can then be written as \(R^{-1} = a_1M_1 + a_2M_2\), where \(M_1\) is the identity matrix and \(M_2\) has 0 on the diagonal and 1 off the diagonal. For the AR(1) correlation with \(\rho(s,t) = \theta^{|s-t|}\) and equally spaced measurement times, the inverse \(R^{-1}\) of a J × J correlation matrix can be written as a linear combination of three basis matrices. Here \(M_1\) is the identity matrix, \(M_2\) has 1 on the first sub- and superdiagonals and 0 elsewhere, and \(M_3\) has 1 at the corners (1, 1) and (J, J) and 0 elsewhere.
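The basis representations can be verified numerically. The sketch builds the exchangeable and AR(1) bases (the latter for equally spaced times) and recovers the coefficients \(a_k\) by least squares. For AR(1), they should equal \((1+\theta^2, -\theta, -\theta^2)/(1-\theta^2)\). The function names are hypothetical.

```python
import numpy as np


def qif_basis(J, structure):
    """Basis matrices M_1, ..., M_K for the inverse working correlation."""
    I = np.eye(J)
    if structure == "exchangeable":
        return [I, np.ones((J, J)) - I]           # M_2: 0 on diagonal, 1 off diagonal
    if structure == "ar1":
        M2 = np.eye(J, k=1) + np.eye(J, k=-1)     # 1 on the first sub/superdiagonals
        M3 = np.zeros((J, J))
        M3[0, 0] = M3[-1, -1] = 1.0               # 1 at the corners (1,1) and (J,J)
        return [I, M2, M3]
    raise ValueError(f"unknown structure: {structure}")


def basis_coefficients(R_inv, basis):
    """Least-squares a_k in R_inv = sum_k a_k M_k (exact when representable)."""
    B = np.column_stack([M.ravel() for M in basis])
    a, *_ = np.linalg.lstsq(B, R_inv.ravel(), rcond=None)
    return a


J, theta = 5, 0.6
R_ar1 = theta ** np.abs(np.subtract.outer(np.arange(J), np.arange(J)))  # equally spaced
basis = qif_basis(J, "ar1")
a = basis_coefficients(np.linalg.inv(R_ar1), basis)
print(a)  # (1 + theta^2, -theta, -theta^2) / (1 - theta^2)
```

With unequally spaced times, the AR(1) inverse is still tridiagonal, but its entries vary along the diagonal, so a fixed three-matrix basis with constant coefficients no longer represents it exactly.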

Applying the QIF approach, we propose an alternative profile estimation of model (1). We replace the GEE estimator of β that solves \(U_\beta(\beta) = 0\) in Sect. 2.1 by the estimator that minimizes the quadratic inference function, while keeping \(\tilde {\alpha }(t,\beta )\) defined as the root of (3). Following the QIF idea, we define the “extended score” function:

The quadratic inference function is defined as \(Q_n(\beta )=g_n^T(\beta ) C_n^{-1}(\beta ) g_n(\beta )\), where \(C_n(\beta )=(1/n^2)\sum _{i=1}^n g_i(\beta ) g_i^T(\beta )\). The profile QIF estimator is the minimizer of \(Q_n(\beta)\):

$$\hat\beta = \arg\min_{\beta}Q_n(\beta).$$
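The following is a minimal sketch of evaluating \(Q_n(\beta)\) from the per-subject extended scores \(g_i(\beta)\). It assumes \(g_n(\beta) = n^{-1}\sum_i g_i(\beta)\), as in Qu et al. (2000). Computing \(g_i(\beta)\) itself requires the profile quantities above and is left abstract here. The function name is hypothetical.

```python
import numpy as np


def qif(g_list):
    """Q_n = g_n^T C_n^{-1} g_n with g_n = (1/n) sum_i g_i and C_n = (1/n^2) sum_i g_i g_i^T."""
    G = np.asarray(g_list, dtype=float)  # shape (n, K*q): one extended score per subject
    n = G.shape[0]
    g_n = G.mean(axis=0)
    C_n = G.T @ G / n**2
    return float(g_n @ np.linalg.solve(C_n, g_n))


print(qif([[1.0], [3.0]]))  # g_n = 2, C_n = 2.5, so Q_n = 1.6
```

In the profile QIF procedure, each row would be \(g_i(\beta)\) evaluated at the current β, and \(Q_n\) would be minimized numerically over β. Note that \(Q_n\) is unchanged when all \(g_i\) are rescaled by a common constant.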

Following the derivations of the asymptotic properties in Qu et al. (2000), we estimate the variance of the QIF estimator \(\hat \beta \) by \(\{ {\dot g}_n (\hat \beta ) C_n^{-1}(\hat \beta ) {\dot g}_n^T(\hat \beta )\}^{-1}\), where \(\dot g_n(\beta) = \partial g_n^T(\beta)/\partial\beta\) is the derivative of the extended score with respect to β.

2.4 Computational Algorithms

The iterative algorithms of the procedures using the QL, MGV, WLS, and QIF approaches for estimating α(t) and β under model (1) are outlined in the following.

1. Calculate the estimates of α(t) and β using the working independence approach, and use them as the initial estimates \(\hat \alpha ^{\{0\}}(t)\) and \(\hat \beta ^{\{0\}}\).

2. Given the mth-step estimates \(\hat \alpha ^{\{m\}}(t)\) and \(\hat \beta ^{\{m\}}\), calculate the residuals \(\hat r_{ij}=Y_{ij}- g^{-1}\{ (\hat \alpha ^{\{m\}}(T_{ij}))^T{X}_{ij}+ (\hat \beta ^{\{m\}})^T Z_{ij}\}\), and obtain the matrix \(\hat A_i^{\{m\}}\), whose diagonal elements are estimated by (11).

3. For the QL, MGV, and WLS approaches, obtain the estimate \(\hat \theta ^{\{m\}}\) of the correlation parameter using the chosen method described in Sect. 2.2.2, and set \(\hat V_{2i}^{\{m\}}=\hat A_i^{\{m\}} R_i \big (\hat \theta ^{\{m\}} \big ) \hat A_i^{\{m\}}\) as in (10). Then update the estimate of β to \(\hat \beta ^{\{m+1\}}\) by solving (5), and update the estimate of α(t) to \(\hat \alpha ^{\{m+1\}}(t)=\tilde \alpha (t, \hat \beta ^{\{m+1\}})\).

4. For the QIF approach, update the estimate of β to \(\hat \beta ^{\{m+1\}}\) by minimizing \(Q_n(\beta )=g_n^T(\beta ) C_n^{-1}(\beta ) g_n(\beta )\), where \(\hat A_i\) in \(g_n(\beta)\) is replaced by \(\hat A_i^{\{m\}}\). Then update the estimate of α(t) to \(\hat \alpha ^{\{m+1\}}(t)=\tilde \alpha (t, \hat \beta ^{\{m+1\}})\).

5. Repeat steps 2 to 4 (applying step 3 or step 4 according to the chosen approach) until convergence, which is usually achieved within a few iterations.
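The control flow of steps 1 to 5 can be sketched as a small driver loop. Each callable argument stands for a hypothetical user-supplied routine for the corresponding step (variance estimation, correlation estimation, and the β update by solving (5) or minimizing \(Q_n\)). The names, the signatures, and the stopping rule (maximum absolute change in β below a tolerance) are assumptions of this sketch. Given the returned β, \(\hat\alpha(t) = \tilde\alpha(t,\hat\beta)\) follows from the local linear step.

```python
import numpy as np


def iterate_profile(initial_beta, estimate_scale, estimate_corr, update_beta,
                    tol=1e-6, max_iter=50):
    """Alternate (A_i, theta) estimation and beta updates until beta stabilizes.

    initial_beta:   working-independence estimate (step 1)
    estimate_scale: beta -> A_hat (step 2: residuals and marginal SDs)
    estimate_corr:  (beta, A_hat) -> theta_hat (step 3; may return None for QIF)
    update_beta:    (beta, A_hat, theta_hat) -> next beta (step 3 or step 4)
    Returns the final beta and the number of iterations used.
    """
    beta = np.asarray(initial_beta, dtype=float)
    for m in range(1, max_iter + 1):
        A_hat = estimate_scale(beta)
        theta_hat = estimate_corr(beta, A_hat)
        beta_new = np.asarray(update_beta(beta, A_hat, theta_hat), dtype=float)
        if np.max(np.abs(beta_new - beta)) < tol:   # step 5: stop at convergence
            return beta_new, m
        beta = beta_new
    return beta, max_iter


# Toy callables: the beta update is a contraction with fixed point (2, 2).
beta_fp, iters = iterate_profile(
    np.zeros(2),
    estimate_scale=lambda b: 1.0,
    estimate_corr=lambda b, A: 0.0,
    update_beta=lambda b, A, th: 0.5 * b + 1.0,
)
print(beta_fp, iters)
```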

2.5 Choices of Kernel Function, Bandwidth, and Link Function

We employ local linear techniques to estimate the nonparametric time-varying effects α(t). The kernel function gives greater weight to observations with sampling times near t than to those farther away. In kernel density estimation, the Epanechnikov kernel function \(K(x)=\frac {3}{4}(1-x^2)_+\) is asymptotically optimal, with the smallest asymptotic mean integrated squared error among probability density functions. Silverman (1986, p. 43) showed that the efficiency varies little across kernel functions. Relative to the optimal Epanechnikov kernel, the asymptotic efficiency of the Tukey (biweight) kernel \(K(x)=\frac {15}{16}(1-x^2)_+^2\) is 99%, that of the Gaussian kernel is 95%, and that of the rectangular kernel is about 93%. We expect the choice of kernel function to have little effect on the performance of the proposed estimators for model (1) as well. Compact support is commonly assumed for technical simplicity; this assumption can be relaxed to include the Gaussian kernel (Silverman 1986, p. 38).
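The efficiency figures quoted from Silverman (1986) can be reproduced numerically. The sketch below assumes Silverman's efficiency measure relative to the Epanechnikov kernel, \(\mathrm{eff}(K) = \frac{3}{5\sqrt5}\{\int u^2K(u)\,du\}^{-1/2}\{\int K(u)^2\,du\}^{-1}\). It evaluates the integrals with a trapezoidal rule on each kernel's support, truncated to [-12, 12] for the Gaussian kernel. The function names are hypothetical.

```python
import math

import numpy as np


def trapezoid(f, a, b, n=200_001):
    """Composite trapezoidal rule for f on [a, b]."""
    x = np.linspace(a, b, n)
    y = f(x)
    return (b - a) / (n - 1) * (y.sum() - 0.5 * (y[0] + y[-1]))


# Each kernel is evaluated only on its (effective) support [a, b].
KERNELS = {
    "epanechnikov": (lambda u: 0.75 * (1 - u**2), -1.0, 1.0),
    "biweight": (lambda u: 15 / 16 * (1 - u**2) ** 2, -1.0, 1.0),
    "gaussian": (lambda u: np.exp(-u**2 / 2) / math.sqrt(2 * math.pi), -12.0, 12.0),
    "rectangular": (lambda u: np.full_like(u, 0.5), -1.0, 1.0),
}


def efficiency(name):
    """Asymptotic efficiency of a kernel relative to the Epanechnikov kernel."""
    K, a, b = KERNELS[name]
    mu2 = trapezoid(lambda u: u**2 * K(u), a, b)  # second moment of K
    RK = trapezoid(lambda u: K(u) ** 2, a, b)     # roughness: integral of K^2
    return 3 / (5 * math.sqrt(5)) / (math.sqrt(mu2) * RK)


for name in KERNELS:
    print(f"{name:12s} {efficiency(name):.4f}")
```

The printed values are close to 1.0000, 0.9939, 0.9512, and 0.9295, matching the 99%, 95%, and 93% figures above.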

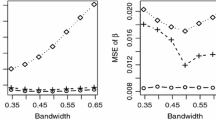

The bandwidth, on the other hand, is much more of a concern. Cross-validation is widely used to choose the bandwidth, and Rice and Silverman (1991) suggested a leave-one-subject-out cross-validation approach. We recommend the K-fold cross-validation bandwidth selection considered by Sun et al. (2013) for the marginal estimation approach under the generalized semiparametric regression model (1). Specifically, the subjects are divided into K groups of approximately equal size. Let \(D_k\) denote the kth subgroup of the data; then the kth prediction error is given by

for k = 1, …, K, where \(\hat \alpha _{(-k)}(t)\) and \(\hat \beta _{(-k)}\) are the estimators of α(t) and β based on the data without the subgroup \(D_k\), and \([t_1,t_2]\subset(0,\tau)\). The subinterval \([t_1,t_2]\) is used to avoid possible instability in estimating α(t) near the boundary; in practice, it can be taken close to [0, τ]. The data-driven bandwidth selected by K-fold cross-validation is the h that minimizes the total prediction error \(PE(h)=\sum _{k=1}^K PE_k(h)\). K-fold cross-validation thus provides a practical tool for locating an appropriate bandwidth.
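The following is a minimal sketch of subject-level K-fold cross-validation. It assumes that \(PE_k(h)\) is the squared prediction error summed over the held-out subjects' observations with \(T_{ij}\in[t_1,t_2]\). To stay short, it replaces the full fit of model (1) by a local linear regression of Y on T alone (no covariates, identity link); this is a simplification, not the paper's estimator. The function names and the simulated data are hypothetical.

```python
import numpy as np


def epanechnikov(u):
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)


def local_linear_predict(t_new, T, Y, h):
    """Local linear fit of Y on T, evaluated at each point of t_new."""
    out = np.empty(len(t_new))
    for k, t0 in enumerate(t_new):
        d = T - t0
        w = epanechnikov(d / h)
        D = np.column_stack([np.ones_like(d), d])
        coef = np.linalg.lstsq(D.T @ (w[:, None] * D), D.T @ (w * Y), rcond=None)[0]
        out[k] = coef[0]
    return out


def kfold_cv_bandwidth(T, Y, subject, grid, K=10, window=(0.0, np.inf), seed=0):
    """Choose h minimizing PE(h) = sum_k PE_k(h), splitting by subject (not by observation)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(np.unique(subject)), K)  # D_1, ..., D_K
    in_window = (T >= window[0]) & (T <= window[1])                  # restrict to [t1, t2]
    pe = []
    for h in grid:
        total = 0.0
        for fold in folds:
            test = np.isin(subject, fold)
            pred = local_linear_predict(T[test & in_window], T[~test], Y[~test], h)
            total += np.sum((Y[test & in_window] - pred) ** 2)
        pe.append(total)
    return grid[int(np.argmin(pe))], np.array(pe)


rng = np.random.default_rng(5)
n, J = 100, 5
subject = np.repeat(np.arange(n), J)
T = rng.uniform(0.0, 10.0, n * J)
Y = np.sin(T) + 0.3 * rng.standard_normal(n * J)
grid = np.array([0.3, 0.6, 1.0, 2.0, 4.0])
h_cv, pe = kfold_cv_bandwidth(T, Y, subject, grid, K=10, window=(0.5, 9.5))
print(h_cv, pe)
```

Splitting by subject rather than by observation keeps all repeated measurements of a held-out subject out of the training fit, so within-subject correlation does not make the prediction error look optimistically small.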

The proposed estimation procedure applies to a wide class of link functions under model (1), so a link function must be selected for each data application. The choice may be clear for some applications based on prior knowledge, but more often one needs to choose the link function that gives the “best fit” to the data. One criterion, proposed by Sun et al. (2013), assesses the model fit by the regression deviation defined as

where \(h_{cv}\) is the bandwidth selected by the K-fold cross-validation method described above for the given link function g(⋅), and \(\hat \alpha _g(t)\) and \(\hat \beta _g\) are the estimators of α(t) and β under model (1) with bandwidth \(h_{cv}\). In practice, the link function g(⋅) can be selected to minimize the regression deviation. The model fit should be further examined with model assessment tools such as residual plots and formal goodness-of-fit tests.

3 Simulation Studies

In this section, we conduct simulation studies to assess the performances of the profile estimation methods using the QL, MGV, WLS, and QIF approaches presented in Sect. 2. The studies cover various models for longitudinal responses, different within-subject correlation structures, and different models for the measurement times. For convenience, we refer to the resulting profile estimators as the QL, MGV, WLS, and QIF estimators. Section 3.1 presents a study of model (1) for continuous longitudinal responses, and Sect. 3.2 shows the performances of these approaches for discrete longitudinal responses.

3.1 Continuous Longitudinal Responses

We study the performances of the proposed methods for continuous longitudinal responses under model (1) with the identity link function: \(Y_i(t) = \alpha^T(t)X_i(t) + \beta^TZ_i(t) + \epsilon_i(t)\). We consider two simulation settings. In the first setting (C1), the true correlation structure of the longitudinal responses is ARMA(1,1), and the measurement times are independent of the covariates. In the second setting (C2), the true correlation structure is Exchangeable, and the measurement times depend on the covariates.

Simulation Setting (C1)

Similar to Fan et al. (2007), for each subject i, we consider covariates \(X_i(t) = (X_{1i}(t), X_{2i}(t))^T\) and \(Z_i(t) = (Z_{1i}(t), Z_{2i})^T\). Here \(X_{1i}(t) = 1\), and \((X_{2i}(t), Z_{1i}(t))\) are time-varying covariates that follow a bivariate normal distribution with mean 0, variance 1, and correlation coefficient 0.5 at each time t. \(Z_{2i}\) is a time-independent Bernoulli covariate with success probability 0.5. We take \(\alpha (t) =(\sqrt {t/12}, \sin (2\pi t/12))^T\) and \(\beta = (1, 2)^T\). The error \(\epsilon_i(t)\) is a Gaussian process with mean 0, time-varying variance \(\sigma ^2(t)=0.5\exp (t/12)\), and the ARMA(1,1) correlation structure, i.e., \(\mathrm{Corr}(Y_i(s), Y_i(t)) = \gamma\rho^{|t-s|}\) for s ≠ t. We take (γ, ρ) = (0.85, 0.9) and (0.85, 0.6) for strong and moderate correlation, respectively. All subjects have the same scheduled measurement times {0, 1, 2, …, 12}, but each scheduled time except time 0 is skipped with probability 0.2. A random perturbation generated from the uniform distribution on [0, 1] is added to each non-skipped scheduled time. Each subject has approximately 7 to 13 observations, with an average of 11.
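A sketch of a data generator for setting (C1) is given below. One assumption is labeled explicitly: \((X_{2i}(t), Z_{1i}(t))\) is drawn independently at each observed time, since the text specifies only the distribution at each t. The perturbation is also added to time 0, which is never skipped. The function name and the output layout (one dictionary per subject) are hypothetical.

```python
import numpy as np


def simulate_c1(n, gamma=0.85, rho=0.9, seed=0):
    """Generate one data set under setting (C1); returns a list of per-subject dicts."""
    rng = np.random.default_rng(seed)
    beta = np.array([1.0, 2.0])
    data = []
    for _ in range(n):
        sched = np.arange(13.0)                                       # scheduled 0, 1, ..., 12
        keep = np.concatenate([[True], rng.uniform(size=12) >= 0.2])  # time 0 never skipped
        T = sched[keep] + rng.uniform(0.0, 1.0, keep.sum())           # U[0, 1] perturbation
        J = len(T)
        # Assumption: (X_2i(t), Z_1i(t)) drawn independently at each observed time.
        XZ = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.5], [0.5, 1.0]], size=J)
        X = np.column_stack([np.ones(J), XZ[:, 0]])
        Z = np.column_stack([XZ[:, 1], np.full(J, rng.binomial(1, 0.5))])  # Z_2i fixed per subject
        alpha = np.column_stack([np.sqrt(T / 12), np.sin(2 * np.pi * T / 12)])
        # ARMA(1,1) errors: correlation gamma * rho^|t - s| off the diagonal, variance 0.5 exp(t/12).
        R = gamma * rho ** np.abs(np.subtract.outer(T, T))
        np.fill_diagonal(R, 1.0)
        sd = np.sqrt(0.5 * np.exp(T / 12))
        eps = np.linalg.cholesky(sd[:, None] * R * sd[None, :]) @ rng.standard_normal(J)
        Y = np.sum(alpha * X, axis=1) + Z @ beta + eps
        data.append({"T": T, "X": X, "Z": Z, "Y": Y})
    return data


data = simulate_c1(200, gamma=0.85, rho=0.9, seed=1)
print(np.mean([len(d["T"]) for d in data]))  # average number of observations per subject
```

The expected number of observations per subject is \(1 + 12\times 0.8 = 10.6\), consistent with the average of about 11 stated above.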

Simulation Setting (C2)

Similar to Sun et al. (2013), for each subject i, we let \(Z_{1i}(t)\) be a time-varying covariate from a uniform distribution on [0, 1], \(Z_{2i}\) a time-independent Bernoulli random variable with success probability 0.5, \(X_{1i} = 1\), and \(X_{2i}(t)\) a time-varying Bernoulli random variable with success probability 0.5 at each time t. Let \(\alpha(t) = (0.5\sqrt{t}, 0.5\sin(2t))^T\) and \(\beta = (0.5, 1)^T\). The error \(\epsilon_i(t)\) has a normal distribution with mean \(\phi_i\) and variance \(\nu^2\), where \(\phi_i\) is a random variable from N(0, 1). Thus \(\epsilon_i(t)\) has an Exchangeable correlation structure with correlation coefficient \(\theta = 1/(1+\nu^2)\), that is, θ = 0.8 for ν = 0.5 and θ = 0.5 for ν = 1. The measurement times \(T_{ij}\) for each subject i follow a Poisson process with intensity \(h_i(t) = 0.6\exp(0.7Z_{2i})\) for \(0 \le t \le \tau\), with τ = 3.5. The censoring times \(C_i\) are generated from a uniform distribution on [1.5, 8]. There are approximately three observations per subject on [0, τ], and about 30% of subjects are censored before τ = 3.5.
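
The exchangeable correlation in setting (C2) follows from the shared random effect: \(\mathrm{Var}\{\epsilon_i(t)\} = 1 + \nu^2\) and \(\mathrm{Cov}\{\epsilon_i(s), \epsilon_i(t)\} = \mathrm{Var}(\phi_i) = 1\). The Python sketch below encodes this and simulates the measurement-time process. The function names are hypothetical; the sketch assumes each subject is observed on \([0, \min(\tau, C_i)]\) with \(C_i\) independent of \(Z_{2i}\), and, since \(h_i(t)\) does not depend on t, it generates the Poisson process as a Poisson number of sorted uniform times.

```python
import numpy as np

def exchangeable_corr(nu):
    # eps_ij = phi_i + e_ij, phi_i ~ N(0, 1), e_ij ~ N(0, nu^2):
    # Var = 1 + nu^2 and Cov (same subject) = 1, so Corr = 1 / (1 + nu^2).
    return 1.0 / (1.0 + nu ** 2)

def simulate_c2_subject(rng, nu=0.5, tau=3.5):
    """Simulate one subject under (C2); returns (t, y, censored_before_tau)."""
    z2 = float(rng.random() < 0.5)            # time-independent Bernoulli(0.5)
    c = rng.uniform(1.5, 8.0)                 # censoring time C_i
    end = min(tau, c)                         # observation window [0, min(tau, C_i)]
    rate = 0.6 * np.exp(0.7 * z2)             # intensity h_i(t), constant in t
    # Homogeneous Poisson process on [0, end]: Poisson count, then sorted uniforms.
    m = rng.poisson(rate * end)
    t = np.sort(rng.uniform(0.0, end, size=m))
    z1 = rng.uniform(0.0, 1.0, size=m)        # time-varying Uniform[0, 1]
    x2 = (rng.random(m) < 0.5).astype(float)  # time-varying Bernoulli(0.5)
    phi = rng.normal()                        # subject-level random effect
    eps = phi + nu * rng.normal(size=m)
    # alpha(t) = (0.5 sqrt(t), 0.5 sin(2t))^T, beta = (0.5, 1)^T, X1 = 1.
    y = 0.5 * np.sqrt(t) + 0.5 * np.sin(2 * t) * x2 + 0.5 * z1 + 1.0 * z2 + eps
    return t, y, c < tau
```

For this design the expected number of observations per subject is \(E(h_i)\,E\{\min(\tau, C_i)\} \approx 0.904 \times 3.19 \approx 2.89\), and the censoring proportion is \(P(C_i < 3.5) = 2/6.5 \approx 0.31\), in line with the figures stated above.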

The performance of the profile GEE estimators that use the QL, MGV, and WLS approaches to estimate the correlation parameter θ, as well as that of the profile estimators via the QIF approach, is examined under settings (C1) and (C2). We let \(V_{1i}\) be the identity matrix for all the estimators, while different correlation structures are assumed for \(V_{2i}\). The working independence (WI) estimator is obtained by letting \(V^{-1}_{2i}\) be the identity matrix. The Epanechnikov kernel function \(K(x) = \frac{3}{4}(1-x^2)_+\) is used in the study.
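
The Epanechnikov kernel enters the local linear step through the weights \(K_h(u) = K(u/h)/h\). As a minimal sketch under working independence, the following Python code fits a single curve by local linear weighted least squares at a point \(t_0\), approximating the curve by a line in a neighborhood of \(t_0\). The function names are hypothetical, and the sketch does not reproduce the profile estimator with \(V_{1i}\) and \(V_{2i}\).

```python
import numpy as np

def epanechnikov(x):
    # K(x) = (3/4)(1 - x^2)_+ ; integrates to 1 over [-1, 1].
    return 0.75 * np.clip(1.0 - x ** 2, 0.0, None)

def local_linear(t0, t, y, h):
    """Local linear estimate of a curve and its slope at t0.

    Pools all observations (t, y) and weights them by K_h(t - t0)."""
    w = epanechnikov((t - t0) / h) / h
    design = np.column_stack([np.ones_like(t), t - t0])  # columns: 1, (t - t0)
    wd = design * w[:, None]
    # Weighted least squares: solve (D^T W D) c = D^T W y.
    coef = np.linalg.solve(design.T @ wd, wd.T @ y)
    return coef[0], coef[1]                              # level and slope at t0
```

Because the fit is locally linear, a curve that is exactly linear is recovered without bias for any bandwidth that keeps at least two distinct time points in the kernel window.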

The simulation results for estimating β under setting (C1) with strong and moderate ARMA(1,1) correlations are shown in Tables 1 and 2, respectively. The simulation results for estimating β under setting (C2) with strong and moderate Exchangeable correlations are shown in Tables 3 and 4, respectively. The tables summarize the estimation bias (Bias), the sample standard error of the estimates (SEE), the sample mean of the estimated standard errors (ESE), and the empirical coverage probability of the 95% confidence intervals (CP) for n = 200. Each entry of the tables is calculated from 1000 repetitions. The bandwidth for each table is selected by 10-fold cross-validation on a single simulated data set, choosing the h in [0.7, 1.3] that minimizes the total prediction error PE(h); this bandwidth is then used for all 1000 repetitions.
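
The summary statistics reported in the tables can be computed from the replicated estimates as in the Python sketch below. The function names are hypothetical, and the coverage is assumed to come from Wald intervals \(\hat\beta \pm 1.96\,\widehat{\mathrm{SE}}\); the percentage reduction relative to WI is included because the comparisons of methods are stated in those terms.

```python
import numpy as np

def mc_summary(est, se, beta_true, z=1.96):
    """Bias, SEE, ESE and CP from R Monte Carlo repetitions.

    est, se: (R, p) arrays of estimates and estimated standard errors."""
    est, se = np.asarray(est, float), np.asarray(se, float)
    bias = est.mean(axis=0) - beta_true          # Bias
    see = est.std(axis=0, ddof=1)                # sample SE of the estimates (SEE)
    ese = se.mean(axis=0)                        # mean of estimated SEs (ESE)
    covered = np.abs(est - beta_true) <= z * se  # Wald interval covers the truth
    cp = covered.mean(axis=0)                    # empirical coverage (CP)
    return bias, see, ese, cp

def se_reduction(see_method, see_wi):
    # Percentage reduction in sample SE relative to working independence.
    return 100.0 * (1.0 - np.asarray(see_method) / np.asarray(see_wi))
```

With hypothetical values, a method whose SEE is 0.07 when the WI estimator's SEE is 0.10 gives `se_reduction(0.07, 0.10)` of about 30, i.e., a 30% reduction.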

The WI results are obtained under working independence. The performance of the QL, MGV, WLS, and QIF estimators is examined under both the correctly specified correlation model and misspecified correlation models. The results under “Assuming Exchangeable Correlation” are obtained by assuming exchangeable correlation in the estimation, the results under “Assuming ARMA(1,1) Correlation” by assuming ARMA(1,1) correlation, and the results under “Assuming Mixed Correlation” by assuming a mixture of the exchangeable and AR(1) correlations. When the ARMA(1,1) or mixed correlation structure is assumed, the basis matrices for the QIF estimator are taken as a combination of the basis matrices for the Exchangeable and AR(1) structures.
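
For a purely parametric linear mean, the QIF idea of Qu, Lindsay, and Li (2000) can be sketched as follows: the inverse working correlation is approximated by a linear combination of basis matrices, here the identity, the exchangeable basis (ones off the diagonal), and the AR(1) basis (ones on the first off-diagonals), mirroring the combined Exchangeable and AR(1) bases described above. This Python sketch is not the profile QIF estimator; it assumes an identity link, unit variance, and no time-varying coefficients, and the function names are hypothetical.

```python
import numpy as np

def qif_bases(m):
    """Basis matrices for an m-visit subject: identity, exchangeable part
    (ones off the diagonal) and AR(1) part (ones on the first off-diagonals)."""
    eye = np.eye(m)
    return [eye, np.ones((m, m)) - eye, np.eye(m, k=1) + np.eye(m, k=-1)]

def qif_linear(xs, ys, n_iter=20):
    """QIF estimate of beta in y_i = X_i beta + e_i (identity link, unit variance).

    xs: list of (m_i, p) design matrices; ys: list of (m_i,) responses.
    The extended score stacks X_i^T M_k (y_i - X_i beta) over the bases M_k,
    so it is linear in beta: g_i(beta) = b_i - A_i beta."""
    a_list, b_list = [], []
    for x, y in zip(xs, ys):
        ms = qif_bases(len(y))
        a_list.append(np.vstack([x.T @ mk @ x for mk in ms]))
        b_list.append(np.concatenate([x.T @ mk @ y for mk in ms]))
    a_all, b_all = np.array(a_list), np.array(b_list)  # (n, 3p, p), (n, 3p)
    a_bar, b_bar = a_all.mean(axis=0), b_all.mean(axis=0)
    # Start from the working-independence (ordinary least squares) estimate.
    beta = np.linalg.lstsq(np.vstack(xs), np.concatenate(ys), rcond=None)[0]
    for _ in range(n_iter):
        g = b_all - a_all @ beta                      # (n, 3p) extended scores
        # Weight matrix C at the current beta (pseudo-inverse in case C is singular).
        c_inv = np.linalg.pinv(g.T @ g / len(xs))
        # With C held fixed, Q(beta) = g_bar^T C^{-1} g_bar is quadratic in beta.
        beta = np.linalg.solve(a_bar.T @ c_inv @ a_bar, a_bar.T @ c_inv @ b_bar)
    return beta
```

Holding C fixed at each step turns the minimization into a closed-form generalized least squares problem; iterating updates C with the current β.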

The simulation study shows that all estimators are consistent, with small estimation bias. The WLS, QL, MGV, and QIF estimators all perform well and improve estimation efficiency compared with the working independence (WI) method: the methods utilizing the within-subject correlations have smaller standard errors, in both SEE and ESE. More efficiency is gained by assuming the true or mixed correlation structures than by assuming misspecified ones. The efficiency gain is also larger with strong within-subject correlations than with moderate ones. For example, under the true ARMA(1,1) correlation, the sample standard errors of the QL, MGV, WLS, and QIF estimators for \(\beta_1\) are reduced by 30% to 46% relative to the WI estimator in Table 1 (strong correlation) and by 8% to 25% in Table 2 (moderate correlation). Similarly, under the true Exchangeable correlation, the sample standard errors of these estimators for \(\beta_1\) are reduced by 18% to 44% relative to the WI estimator in Table 3 (strong correlation) and by 6% to 18% in Table 4 (moderate correlation). The efficiency improvement is more evident in estimating the effect of the time-varying covariate than that of the time-invariant covariate. This phenomenon also appeared in the simulation studies of Lin and Carroll (2001b) and Wang et al. (2005).

The performances of the estimators under the ARMA(1,1) working correlation and under the mixed working correlation are close. The QL estimator appears to achieve the largest efficiency gain among these estimators in most scenarios. These observations hold for both covariate-independent and covariate-dependent measurement times.

3.2 Discrete Longitudinal Responses

In this section, we examine the performance of the proposed methods under model (1) for discrete longitudinal responses. We consider binary longitudinal responses in simulation setting (D1) and Poisson count responses in simulation setting (D2). Both settings have an Exchangeable correlation structure.

Simulation Setting (D1): The Bernoulli Model

For binary longitudinal responses, we let \(g(\mu) = \log\{\mu/(1-\mu)\}\) be the logit link function. The observation times are generated similarly to simulation setting (C1): all subjects have the same scheduled observation time points, \(\{0, 1, 2, \ldots, 8\}\), but each scheduled time point except time 0 has a 20% probability of being skipped, and a random perturbation generated from the uniform distribution on [0, 1] is added to each non-skipped scheduled time point. The number of observations, \(J_i\), ranges from 4 to 9. At each observation time \(T_{ij}\), \(j = 1, \ldots, J_i\), \(X_{ij} = 1\), and \(Z_{1ij}\) and \(Z_{2ij}\) are independent standard normal random variables that do not vary with time. Let \(\alpha(t) = \sin(\pi t/30) - 0.5\), \(\beta = (0.01, 0.01)^T\), and \(\mu_{ij} = P(Y_{ij} = 1 \mid X_{ij}, Z_{ij})\). The binary longitudinal responses \(Y_{ij} = Y_i(T_{ij})\), \(j = 1, \ldots, J_i\), are generated with marginal means following the logit model \(\mathrm{logit}(\mu_{ij}) = \alpha(T_{ij})X_{ij} + \beta^T Z_{ij}\) and with constant correlation coefficient \(\mathrm{Corr}(Y_i(s), Y_i(t)) = 0.5\) for \(s \neq t\). We refer to Macke et al. (2009) for techniques for simulating correlated binary responses; our simulation used the Matlab code provided in that paper to generate correlated binary variables with the specified mean and covariance.

Simulation Setting (D2): The Poisson Model

Suppose that \(T_{ij}\), \(X_{ij}\), and \(Z_{ij}\) are the same as in simulation setting (D1). We also use \(\alpha(t) = \sin(\pi t/30) - 0.5\) and \(\beta = (0.01, 0.01)^T\). Let \(\mu_{ij} = E(Y_{ij} \mid X_{ij}, Z_{ij})\). Using the method of Macke et al. (2009), we generate the Poisson longitudinal process \(Y_{ij} = Y_i(T_{ij})\), \(j = 1, \ldots, J_i\), with the conditional marginal mean model \(\log(\mu_{ij}) = \alpha(T_{ij})X_{ij} + \beta^T Z_{ij}\) and constant correlation coefficient \(\mathrm{Corr}(Y_i(s), Y_i(t)) = 0.5\) for \(s \neq t\).

The estimation results under simulation settings (D1) and (D2) are summarized in Tables 5 and 6, respectively. The estimation bias is small for all estimators. The QL, MGV, WLS, and QIF estimators, which utilize the within-subject correlations, are more efficient than the working independence (WI) estimator, with sample standard errors reduced by 17% to 24% in Table 5 and by 10% to 30% in Table 6. The QL estimator achieves the largest efficiency gain among these estimators. Efficiency gains are slightly higher when the true or mixed correlation structures are assumed than when the ARMA(1,1) correlation structure is assumed.

4 Concluding Remarks

The generalized semiparametric varying-coefficient additive model (1) specifies a model for the conditional mean of longitudinal responses. The model allows time-varying effects for some covariates and constant effects for others, and it encompasses many different models through the choice of the link function. The intensively studied semiparametric additive model, obtained with the identity link function, is popular for modeling continuous longitudinal responses, whereas semiparametric modeling of discrete longitudinal responses has been understudied. With an appropriate link function, model (1) can be used for both continuous and discrete responses. Sun et al. (2013) investigated the local linear profile marginal estimation method for model (1) under working independence. Estimation methods under working independence, which ignore the within-subject correlation, are valid and yield asymptotically unbiased estimators.

In this paper, we studied several profile estimation methods for model (1) that utilize the within-subject correlations to improve estimation efficiency, including the profile GEE approaches and the profile QIF approach. The profile estimations are assisted by the local linear smoothing technique, which approximates the time-varying effects with linear functions in a neighborhood of each time point. The profile GEE approaches include the quasi-likelihood, the minimum generalized variance, and the weighted least squares approaches, which differ in the procedures used to estimate the within-subject correlations. The proposed profile estimation methods for the generalized semiparametric varying-coefficient additive model (1) provide a unified approach that works well for discrete as well as continuous longitudinal responses.
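
As a point of comparison for the profile GEE approaches summarized above, the following Python sketch fits a parametric linear GEE (Liang and Zeger 1986) with an exchangeable working correlation, alternating between a generalized least squares update of β and a simple moment estimate of θ from residuals. It assumes an identity link and a common variance, and it does not reproduce the QL, MGV, or WLS correlation estimators or the local linear profiling; the function names are hypothetical.

```python
import numpy as np

def exch_corr(m, theta):
    """m x m exchangeable correlation matrix with common correlation theta."""
    r = np.full((m, m), theta)
    np.fill_diagonal(r, 1.0)
    return r

def gee_exchangeable(xs, ys, n_iter=10):
    """Linear GEE with exchangeable working correlation.

    xs: list of (m_i, p) design matrices; ys: list of (m_i,) responses.
    Returns (beta_hat, theta_hat)."""
    p = xs[0].shape[1]
    # Working-independence (ordinary least squares) starting value.
    beta = np.linalg.lstsq(np.vstack(xs), np.concatenate(ys), rcond=None)[0]
    theta = 0.0
    for _ in range(n_iter):
        res = [y - x @ beta for x, y in zip(xs, ys)]
        phi = np.mean(np.concatenate(res) ** 2)  # common variance estimate
        # Moment estimate: average of e_ij * e_ik / phi over ordered pairs j != k.
        num = sum(e.sum() ** 2 - e @ e for e in res)
        cnt = sum(e.size * (e.size - 1) for e in res)
        theta = num / (phi * cnt)
        # GLS update: solve sum X_i^T R_i^{-1} X_i beta = sum X_i^T R_i^{-1} y_i.
        lhs, rhs = np.zeros((p, p)), np.zeros(p)
        for x, y in zip(xs, ys):
            r_inv = np.linalg.inv(exch_corr(len(y), theta))
            lhs += x.T @ r_inv @ x
            rhs += x.T @ r_inv @ y
        beta = np.linalg.solve(lhs, rhs)
    return beta, theta
```

Setting θ = 0 throughout reduces the update to the working independence (ordinary least squares) estimator.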

Finite sample performances of these methods were examined through Monte Carlo simulations under various correlation structures for both discrete and continuous longitudinal responses. Our study showed substantial efficiency improvement of all the estimators, namely the QL, MGV, WLS, and QIF estimators, over the working independence approach. The QL estimator appeared to achieve the largest efficiency gain among all estimators in most scenarios. The efficiency improvement is more evident in estimating the effects of time-varying covariates than those of time-invariant covariates. Efficiency gains are higher when the true or mixed correlation structures are assumed than when misspecified correlation structures are assumed. These observations hold for both covariate-independent and covariate-dependent measurement times.

References

Chen, K., & Jin, Z. (2006). Partial linear regression models for clustered data. Journal of the American Statistical Association, 101(473), 195–204.

Dempster, A. P. (1969). Elements of continuous multivariate analysis. Reading, MA: Addison-Wesley.

Fan, J., & Li, R. (2004). New estimation and model selection procedures for semiparametric modeling in longitudinal data analysis. Journal of the American Statistical Association, 99(467), 710–723.

Fan, J., Huang, T., & Li, R. (2007). Analysis of longitudinal data with semiparametric estimation of covariance function. Journal of the American Statistical Association, 102(478), 632–641.

Hansen, L. P. (1982). Large sample properties of generalized method of moments estimators. Econometrica: Journal of the Econometric Society, 50(4), 1029–1054.

Hoover, D. R., Rice, J. A., Wu, C. O., & Yang, L.-P. (1998). Nonparametric smoothing estimates of time-varying coefficient models with longitudinal data. Biometrika, 85(4), 809–822.

Hu, Z., Wang, N., & Carroll, R. J. (2004). Profile-kernel versus backfitting in the partially linear models for longitudinal/clustered data. Biometrika, 91(2), 251–262.

Liang, K.-Y., & Zeger, S. L. (1986). Longitudinal data analysis using generalized linear models. Biometrika, 73(1), 13–22.

Lin, X., & Carroll, R. J. (2000). Nonparametric function estimation for clustered data when the predictor is measured without/with error. Journal of the American Statistical Association, 95(450), 520–534.

Lin, X., & Carroll, R. J. (2001a). Semiparametric regression for clustered data. Biometrika, 88(4), 1179–1185.

Lin, X., & Carroll, R. J. (2001b). Semiparametric regression for clustered data using generalized estimating equations. Journal of the American Statistical Association, 96(455), 1045–1056.

Lin, D. Y., & Ying, Z. (2001). Semiparametric and nonparametric regression analysis of longitudinal data. Journal of the American Statistical Association, 96(453), 103–126.

Macke, J. H., Berens, P., Ecker, A. S., Tolias, A. S., & Bethge, M. (2009). Generating spike trains with specified correlation coefficients. Neural Computation, 21(2), 397–423.

Madsen, L., Fang, Y., Song, P. X.-K., Li, M., & Yuan, Y. (2011). Joint regression analysis for discrete longitudinal data. Biometrics, 67(3), 1171–1176.

Martinussen, T., & Scheike, T. H. (1999). A semiparametric additive regression model for longitudinal data. Biometrika, 86, 691–702.

Qu, A., Lindsay, B. G., & Li, B. (2000). Improving generalised estimating equations using quadratic inference functions. Biometrika, 87(4), 823–836.

Rice, J. A., & Silverman, B. W. (1991). Estimating the mean and covariance structure nonparametrically when the data are curves. Journal of the Royal Statistical Society. Series B (Methodological), 53(1), 233–243.

Severini, T. A., & Staniswalis, J. G. (1994). Quasi-likelihood estimation in semiparametric models. Journal of the American Statistical Association, 89(426), 501–511.

Silverman, B. W. (1986). Density estimation for statistics and data analysis. London: Chapman and Hall.

Song, P. X.-K., Li, M., & Yuan, Y. (2009). Joint regression analysis of correlated data using Gaussian copulas. Biometrics, 65(1), 60–68.

Sun, Y., & Wu, H. (2005). Semiparametric time-varying coefficients regression model for longitudinal data. Scandinavian Journal of Statistics, 32(1), 21–47.

Sun, Y., Sun, L., & Zhou, J. (2013). Profile local linear estimation of generalized semiparametric regression model for longitudinal data. Lifetime Data Analysis, 19(3), 317–349. PMCID: PMC3710313.

Tang, C. Y., Zhang, W., & Leng, C. (2019). Discrete longitudinal data modeling with a mean-correlation regression approach. Statistica Sinica, 29(2), 853–876.

Wang, N., Carroll, R. J., & Lin, X. (2005). Efficient semiparametric marginal estimation for longitudinal/clustered data. Journal of the American Statistical Association, 100(469), 147–157.

Wu, H., & Liang, H. (2004). Backfitting random varying-coefficient models with time-dependent smoothing covariates. Scandinavian Journal of Statistics, 31(1), 3–19.

Zeger, S. L., & Diggle, P. J. (1994). Semiparametric models for longitudinal data with application to CD4 cell numbers in HIV seroconverters. Biometrics, 50(3), 689–699.

Acknowledgements

We thank the editors for handling our manuscript. We also thank the reviewer for valuable comments and suggestions that have improved the paper. This research was partially supported by the NIAID NIH award number R37AI054165 and the National Science Foundation grant DMS1915829. The authors would like to thank Professor Runze Li for providing the program code for Fan, Huang and Li (2007).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Sun, Y., Fang, F. (2022). Profile Estimation of Generalized Semiparametric Varying-Coefficient Additive Models for Longitudinal Data with Within-Subject Correlations. In: He, W., Wang, L., Chen, J., Lin, C.D. (eds) Advances and Innovations in Statistics and Data Science. ICSA Book Series in Statistics. Springer, Cham. https://doi.org/10.1007/978-3-031-08329-7_8

DOI: https://doi.org/10.1007/978-3-031-08329-7_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-08328-0

Online ISBN: 978-3-031-08329-7

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)