Abstract

The aim of this paper is to present the concept of a neural model of a manufacturing process. The goal of the model is to forecast the number of defective products based on the historical values of the manufacturing process parameters and the historical values of the number of defective products. The paper describes the creation of the model on the example of a manufacturing process in a glass factory. The use of the model allowed for a more accurate prediction of the number of defective products, which is treated as the improvement of the manufacturing predictability mentioned in the title of the paper. The model includes several NARX artificial neural networks. Each NARX network considers data from a different part of the production line. The forecast results on four test data sets are also presented. These results were compared with the classic approach, which uses a single neural network. The created model allowed for a significant reduction in the prediction error in four test data sets considered.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The production process is defined as the process of transforming the input vector of the production system into the output vector. The input vector consists of technical means of production, work items, energy factors, personnel, capital, and information. In turn, the output vector includes products, services, information, waste, as well as defective products. Predicting the results of a manufacturing process that are part of the output vector can be a difficult task, especially when dealing with complex production processes. Therefore, one of the most important tasks of modern production engineering is the development of methods and techniques allowing to predict what will be the result of the production process. Exemplary predictive tasks in this context may be related to the prediction of the number of products produced at the given values of the input vector, as well as the prediction of defective products produced at the given values of the manufacturing process parameters.

The elements of the output vector, the prediction of which can be particularly important, include the number of non-compliant or defective products. The task of predicting the number of defects is a part of quality assurance. Product quality assurance is a key element of the strategy of modern manufacturing companies. Therefore, the role of quality assurance research is becoming more and more significant. Such research can help identify the causes of defects in products or determine the method of eliminating product defects.

Information extracted from data that comes from a manufacturing process can be used to support the quality assurance process. Currently, many manufacturing companies face the problem of huge amounts of data. These data are generated, inter alia, by various types of devices that carry out operations in the technological process or also by production process monitoring systems. Properly conducted analysis of production data can reveal important information useful for predicting the quality of products. In the era of rapid development of technologies for collecting and processing large amounts of data, it is worth paying special attention to the possibility of supporting the quality assurance process based on data obtained from the manufacturing process.

This paper proposes an approach using the exploration of production data and machine learning techniques in the problem of predicting the number of defective products. A set of artificial neural networks (ANNs) was used for prediction. Each ANN included in the set is a NARX network (nonlinear autoregressive with external input). The proposed approach allows to reduce the prediction error compared to a single NARX network. It was demonstrated on an example of data obtained from the production process in a glass factory. The set of ANNs constitutes a model of a part of the manufacturing process from which the obtained data are derived.

The rest of the paper is organized as follows. Section 2 describes the Cross-Industry Standard Process for Data Mining (CRISP-DM) that was used in the methodology introduced in this paper. Moreover, the applications of data mining in production companies are discussed, in particular the use of ANNs for the analysis of manufacturing data. Section 3 describes a case study of manufacturing process in a glass factory, defines the proposed approach to solving the problem under consideration, as well as shows the results of the approach. Section 4 provides information on the advantages and limitations of the approach.

2 Data Mining in Manufacturing

The constantly growing data resources are a challenge for manufacturing companies in every industry branch. This is due, inter alia, to a significant increase in the number of devices that generate data. Currently, the concept of Industry 4.0 and related technologies, such as Big Data or the Internet of Things (IoT), are very popular. These technologies facilitate the collection, processing, and use of large amounts of data. Data obtained from IoT can be exploited by many data mining techniques, including all data science methods known in the literature [1].

2.1 Knowledge Discovery from Data

Knowledge discovery from databases is a process whose task is a comprehensive data analysis, starting from a proper understanding of the problem under investigation, through the preparation of data, the building of appropriate models, and its evaluation [2]. Some variations of the knowledge discovery process are encountered in the literature. The most commonly used methodology among researchers is CRISP-DM. CRISP-DM defines the knowledge discovery process by dividing it into six phases [3]: (1) understanding the business domain from which the data comes; (2) detailed understanding of the data; (3) data preparation; (4) creating models; (5) evaluation of the obtained results; (6) implementation of the discovered knowledge in the business domain.

The first phase allows us to establish data mining goals in the context of the business domain. The second phase is the selection of appropriate data and the preliminary data analysis, which helps to understand the data [4]. Data sets obtained from industrial enterprises often contain incomplete, inconsistent, or erroneous data, which may be caused, for example, by failures in measuring or recording equipment. The third phase of CRISP-DM is to counteract these negative phenomena. In this phase, appropriate data preprocessing procedures are performed, such as removing observations containing erroneous data visible as outliers. Some methods of data analysis are sensitive to outliers; therefore, it may be important to remove or replace them [5]. Another problem encountered in the data is the missing observations resulting from improper work of the recording apparatus. If possible, missing observations should be replaced by using other observations from the data set. Otherwise, cases containing missing data must be omitted [6]. The stage of data pre-processing of consists also data transformation that results in converting the data to the appropriate types.

The fourth phase of CRISP-DM is modeling, the task of which is to search for certain dependencies, regularities, and patterns hidden in the data. The main tasks of data mining that appear most often in the literature are: detecting anomalies in data, modeling dependencies using association rules, object classification and clustering, regression, creating summaries in the form of reports and visualizations [2]. Generally, the methods used here can be divided into two groups: (1) classical statistical methods, e.g., linear regression, analysis of variance; (2) methods based on machine learning, such as classification and regression trees, ANNs, or support vector machines.

The fifth phase is the patterns interpretation of the obtained patterns and validation of the developed models. At this stage, it is checked whether the phenomena identified through exploration occur only in the analyzed data, or whether they can also be observed in a wider range of data. If the created models pass the validation phase, they can be deployed to the business domain and applied to new data (sixth phase).

2.2 ANNs and Production Data

For many years, the literature has witnessed a noticeable increase in the number of described applications of machine learning in production engineering. Such applications include, for example, the use of decision trees to determine the impact of production process parameters on the state of a blade [7], the application of random forests to investigate the influence of production process parameters on the number of defective products [8] as well as the application of decision trees in conjunction with various types of wavelets to predict the remaining useful life of cutting tools based on force and torque monitoring data [9]. Many papers have been published showing the wide range of possibilities offered by ANNs, among others. The work [10] shows the possibility of using data-driven models to predict failures of machines used in continuous technological processes. The aim of the research is to predict the total downtime of the machines involved in production.

A large part of the literature dealing with the use of data analysis in manufacturing companies is devoted to publications on product quality problems. The authors of the paper [11] point out that the prediction of the influence of the technological process parameters on the number of defects is very often based mainly on the experience and knowledge of the people working in the manufacturing company. In the problem of pressure casting, they propose models of ANNs that predict the influence of process parameters on the properties of products (e.g., clotting time, number of defects).

Article [12] deals with the production of galvanized steel. The aim of the research was to create a neural model that predicts the quality of steel expressed by its mechanical properties. The paper [13] describes the use of an ANN to model the relationship between turning parameters and the quality of the product expressed by the surface roughness. In turn, the authors of the paper [14] propose a model based on ANNs to predict the quality of products during turning on numerically controlled machine tools. In the article [15], the ANNs were used, inter alia, to identify key parameters that influence the quality of products manufactured in the injection molding process. The results of the research described in [16] show that approaches using ANNs give comparable results to other methods of quality control, including control charts and statistical techniques. The paper [17] refers to the glass coating process. It describes the use of ANN and the decision tree to model the relationship between these process parameters and the quality of the glass coating. The work [18] describes the use of a two-layer hierarchical ANN in the petrochemical industry. It was used to predict the quality of the product derived from the industrial pyrolysis of ethylene. The paper [19] describes the use of the bootstrap aggregation technique and multilayer perceptron ANNs to assess how the parameters of the manufacturing process affect the quality of the products.

In conclusion, the literature points out that in order to handle with the dynamic growth of data, there is a need to create tools that not only automatically collect data. These tools should be able to select the most appropriate data and apply proper analyzes to extract knowledge from the data [20]. More and more often, machine learning methods are replacing or supplementing classical methods. The main advantages of machine learning techniques are the ability to discover non-linear relationships in data and visualize interactions between variables. Moreover, machine learning techniques do not require meeting as many statistical assumptions in relation to the data as in the case of classical methods.

3 Research Problem

The research problem under consideration concerns the prediction of the number of defective products. The prediction was made based on data obtained from a glassworks that manufactures glass packaging. The data come from the production process monitoring system, which records the values of the selected parameters of this process. These are parameters related to:

-

the operation of the blast furnace (e.g. glass level, glass temperature),

-

cooling glass molds,

-

atmospheric conditions in the production hall (atmospheric pressure, humidity, air temperature),

-

the operation of the forehearth, which is divided into 6 sections (the data cover all sections and concerns, for example, the position of cooling valves or the temperature of the glass flowing through the forehearth).

In addition, data were obtained on the number of products with air bubbles in the glass, which is treated as a product defect. Data on the number of defective products and data on the values of the manufacturing process parameters come from the same period, which is 27 days. The values are recorded at intervals of 10 min.

3.1 Proposed Approach Based on Manufacturing Process in a Glassworks

The data obtained from the glassworks were divided as follows:

-

The values of the production process parameters from production monitoring systems can potentially affect the number of defective products, therefore, they were treated as input (explanatory) variables,

-

The number of defective products is a prediction target and therefore serves as an output variable (response variable).

The proposed approach to predicting the number of defective products is shown in Fig. 1. This approach is based on the CRISP-DM process; therefore, it begins with the business understanding phase. All the places from which the data serving as input variables were obtained are symbolically presented here. Each place is associated with an appropriate number of input variables (number of recorder parameters). The largest number of parameters obtained applies to the sixth section of the forehearth (15), and the smallest number of variables describes the atmospheric conditions and the cooling of the glass molds (3). In the first phase, contact with the company’s employees is extremely valuable, because of them it is easier to understand the problem under study and to better select the process parameters that should be taken into account during the study.

The second phase was to understand the data and the relationship between the manufacturing process parameters. Understanding the data enables a better selection of data analysis techniques; therefore, it is also an important step in the proposed approach. In this phase, exploratory data analysis techniques, different types of visualizations (time series plots, histograms, box plots, quantile plots), and descriptive statistics were used. Research has shown that the acquired data are characterized by heterogeneous variance and the presence of several constant levels around which the values fluctuate. For some variables, outliers or missing observations were revealed. Furthermore, statistical tests and quantile plots did not show the consistency of the distribution of variables with the normal distribution. For this reason, classic methods of statistical data analysis should be used with caution, because some of them require the fulfillment of appropriate assumptions. Therefore, it was assumed that the posed problem of predicting the number of defective products would be solved by machine learning methods, which do not require meeting the assumptions about the normality of the distribution or homogeneity of the variance.

The third phase consists of preparing the data for analysis (pre-processing). At this point, appropriate operations should be applied, for example, filling in missing observations or replacing outliers. The selection of these operations must depend on the data. These operations can be applied to both the input variables and the output variable.

Due to the fact that it was decided to use machine learning techniques, in the third phase, the data set should also be divided into three subsets: training, validation (verify), and test. Typically, machine learning methods assume a random division of the original data set into the three subsets, where each case is randomly assigned to one of the subsets. In the proposed approach, a different method of data division was used. In the problem under consideration, we deal with time series, so subsequent cases in the data set follow each other. Therefore, the prediction task will require predicting the next values based on the previous values. Random allocation of cases to the training, validation, and test sets could adversely affect the training process, because cases from the validation and test subset are not taken into account during training and would be treated as gaps in the training data. This would disrupt data continuity and the correct sequence of cases.

For the data under consideration, the following fragments of each variable were distinguished (see Fig. 2):

-

Training subset: cases number 1–800; 1001–1400; 1601–2700; 2901–3600,

-

Validation subset: 801–900; 1401–1500; 2701–2800; 3601–3700,

-

Test subset: 901–1000; 1501–1600; 2801–2900; 3701–3800.

This division of the data was dictated by the course of the explained variable presented in Fig. 2. The first of the four training ranges covered the average level of the number of defective products. The validation and test ranges immediately following it are designed to test the predictive ability of the model to represent the average level of the dependent variable. The second training range covers slightly lower values of the explained variable, not counting single outliers.

The third training range includes a clear increase in the average level of the explained variable, and the validation and test ranges immediately following it are designed to check the predictive ability of the model to reflect the increased defectiveness of products. The highest average level of the dependent variable covers the fourth range of the training subset. The training subsets in total include about 80% of the cases, while the validation and test subsets contain approximately 10% of the cases each.

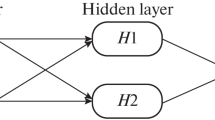

The fourth phase is the modeling stage. It was divided into two parts. The first part was about creating and training the nine ANNs. In the proposed approach, a separate ANN was created for each data source. Therefore, for each of the ANNs, only some of the input variables were used. The output variable for all ANNs was the number of defective products.

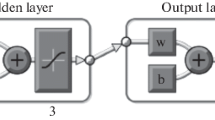

Due to the nature of the problem under consideration (prediction of time series), NARX ANNs were used. The visualization of the NARX network in an open form is shown in Fig. 3. During the training process, the NARX network takes into account the values of the explained variable y(t) with the appropriate delay d, as well as the values of the explanatory variables x(t) also with the delay d. For this reason, the predicted value of the explained variable y at time t is determined on the basis of d previous values of the variable y and d previous values of the explanatory variables x, which is expressed by formula (1):

In the problem discussed, it was established, after consulting with the company’s employees, that the number of defective products at a given moment can be most influenced by the values of the manufacturing process parameters from the period of one hour back. Given that the interval between consecutive observations is 10 min, it has been agreed that the delay d will be 6.

The input values go to the neurons in the hidden layer by connections with appropriate weights. The hidden neuron first performs the operation of summing inputs multiplied by weights with bias. Then, the value of the activation function (in this case, the hyperbolic tangent) is calculated. For simplicity, it was assumed that each NARX network used has 10 hidden neurons. The calculated value of the activation function of hidden neurons goes to the output layer, where there is a neuron with the linear activation function. The task of the output neuron is to calculate the prediction result of the explained variable.

The training subset was used to train each NARX network. During training, the prediction error was calculated on the training, validation, and test subsets. The plot of the prediction error determined during the training process of one of the created NARX networks is shown in Fig. 4. The prediction error on the validation subset is used to prevent overfitting of the NARX networks. When this error increases its value in six successive epochs of the training algorithm, the training process of the NARX network is interrupted. The result of the learning process is the state of the NARX network at the moment when the validation error started to increase (the epoch denoted as Best in Fig. 4). In turn, the error calculated on the test subset was used in the second part of the modeling phase.

The role of the prediction error was played by mean squared error (MSE), which is one of the basic and most popular indicators for assessing models that generate numerical outputs. The MSE value is determined according to formula (2):

where n–number of cases in the data set, \({y}_{i}\)–actual value of the explained variable for the i-th case, \(y_{i}^{*}\)–predicted value of the explained variable for the i-th case.

The second part of the modeling phase was to create and train a single NARX network. This time the NARX network took into account all 69 parameters of the manufacturing process, treating them as explanatory variables. However, the values of these variables were converted in a suitable manner before starting the training process. The transformation of the variables consisted of dividing each value of a given explanatory variable by the value of the prediction error calculated on the test subset for this NARX network from the first part of the modeling stage, which used the given variable as its input. In that way, the prediction errors computed in the first part were included in the second part of the modeling step. These errors act as weights that reduce the value of the explanatory variables. The higher the value of the prediction error of the NARX network from the first part of the modeling step, the more the values of the explanatory variables of this network were reduced. According to this approach, the explanatory variables, the use of which resulted in greater prediction errors in the first part, were reduced in the second part of the modeling step, so as to have less impact on the final prediction results of the number of defective products.

The fifth phase assesses the set of ANNs created in the fourth phase, which consists of ten NARX networks. For the evaluation of the set of ANNs, four data sets were used, which contain the values of all 69 explanatory variables and the explained variable. None of these data sets were used in the modeling stage.

The assumption of the fifth stage is to simulate the operation of the ANNs set in a situation in which a forecast of several future values of the number of defective products should be made. For this purpose, the structure of all NARX networks was changed to a closed form (see Fig. 5). The open NARX network allows for performing only one-step-ahead prediction. In turn, the closed form of the NARX network causes the network to contain a feedback loop. This gives the possibility to perform multi-step-ahead prediction. In this case, the prediction results of y(t) will be used in place of the actual future values of y(t).

The nine NARX networks established in the first part of the modeling phase were first evaluated. Each of these ANNs made predictions for the given values of the input variables, taking into account only the input variables that are assigned to the given ANN. The prediction of the number of defective products is made by each ANN six steps forward, taking into account the six previous values of the explanatory variables and the explained variable. The prediction error of each of the NARX networks is then calculated by comparing them with the actual values of the number of defective products. Prediction errors are treated as weights that reduce the values of the explanatory variables, similarly to the modeling phase: the values of the explanatory variables are divided by the prediction error of the corresponding NARX network.

The data set prepared in this way goes to the final NARX network, which takes into account all explanatory variables. This network predicts the number of defective products six steps forward, taking into account six past values of the explanatory variables.

For the new data used in the evaluation phase, the actual number of defective products is known (although the NARX network does not take this into account when predicting). Therefore, it is possible to evaluate the predictions of individual NARX networks as well as the entire set of ANNs. If the prediction error is at an acceptable level, then the ANNs set can be deployed to operate in the business domain. However, if the prediction error is unacceptably high, then the created model should be modified and returned to the modeling phase. The modification may consist of selecting a different number of hidden neurons. If possible, it can also be extending the training dataset and re-training the ANNs.

3.2 Results and Conclusions

The quality of ANN depends not only on the training process and the training subset but also on the initial values of the weights of connections between neurons. The initial weight values are randomized and then modified in the ANN training process. The course of the training process can largely depend on the initial values drawn, and this, in turn, has a significant impact on the prediction ability of the ANN. Therefore, an approach to generate ten ANNs was used for each NARX network in the ANNs set. Each of the ten ANNs was trained using the same training subset. After completing the training, the quality of the ANNs was assessed using the test subset. The ANNs set included the ANN that had the smallest prediction error calculated on the test subset.

Figure 6 shows a comparison of the actual number of defective products with the number of defective products predicted by one of the NARX networks. The period included in Fig. 6 covers the entire training, validation, and test subset. It should be noted that the predictions in this case were generated by the NARX network in an open form. Therefore, the plot depicts the one-step-ahead predictions. Looking at the course of the time series of the dependent variable (upper part of Fig. 6) it can be seen that the considered NARX network makes the smallest errors in the case of the initial data fragment (up to approximately 1900 cases).

Later in the time series, the differences between the actual values and the predicted values are greater, which is clearly shown in the error graph at the bottom of Fig. 6. Moreover, it can be noticed that the NARX network in open form has a worse prediction of the highest values of the number of defective products. In such situations, the NARX network understates the prediction result. This observation is apparent for all three subsets: training, validation, and test. However, it is not the task of the neural model developed to predict outliers. Instead, the neural model created should estimate whether, with the given parameters of the manufacturing process, the number of defective products will remain at a low level or whether an increase in the number of defects will be observed. This task is accomplished by the NARX network because it is able to reproduce the increase in the number of defective products, which starts from approximately timestep number 2000. Moreover, it should be considered that the NARX network discussed comes from the first part of the modeling phase, which means that this ANN was trained only on a small subset of the manufacturing process parameters. Considering all the process parameters in the second part of the prediction modeling stage, the result of the prediction can be better.

One-step-ahead prediction, the results of which are shown in Fig. 6, usually does not reflect the real predictive ability of the created model, because in real applications the created model of the manufacturing process will be used to predict the number of defective products several timesteps ahead (e.g., for the next hour, which in this case means six-timesteps-ahead prediction). Therefore, a more reliable measure of the predictive ability of the model is the prediction error computed for the multi-step-ahead prediction. To determine the multi-step-ahead prediction, an additional test data set was used, which was divided into four periods. The prediction for these periods was performed by the NARX networks in closed form.

In addition, one additional NARX network (designation “single ANN”) was created to serve as a benchmark. The single ANN was trained in an open form using the training subset, which included all the manufacturing process parameters. Thus, for the single ANN, all possible input variables were given without additional information about the significance of the input variables or their influence on the output variable. The single ANN in closed form was then used to predict four test periods. The single ANN prediction results were taken as the benchmark generated to compare the proposed approach with the single ANN approach. If the benchmark gives a prediction error close to the prediction error of the ANNs set or if the benchmark prediction error is smaller, then creating the ANNs set according to the proposed approach would be unjustified.

As with the rest of the NARX networks, also with the single ANN the approach was used, in which 10 ANNs were generated with the same parameters, and the ANN that made the smallest error on the test subset was selected. To make it easier to interpret the size of the prediction error, the RMSE (root mean squared error) measure was used, the result of which is expressed in the same units as the values of the explained variable. RMSE prediction errors calculated on the training, validation and test subsets of the best of the ten ANNs were 22.03, 18.61, 19.13, respectively. On the other hand, the corresponding measures for the NARX network from the second part of a modeling phase had the value of 18.09, 18.84, 18.87. Comparing the obtained RMSE errors, it can be concluded that the results for both approaches are similar. Therefore, there is no clear advantage of the proposed neural model over the NARX network treated as a benchmark. However, different conclusions can be drawn after analyzing the prediction results for the additional test data set, divided into four periods.

Figure 7 shows the actual and predicted values of the explained variable in one of the four test periods. The forecast was made 6 steps forward. The results show a clearly better accuracy of the forecast made with the use of the ANNs set. Prediction by the single ANN underestimates the value of the explained variable at each of the six points. Also, in the remaining three test periods, the predictions made using the ANNs set are burdened with a smaller error compared to the benchmark single ANN. The RMSE prediction errors for all four test periods are shown in Table 1. In addition, Table 1 considers the prediction results of each of the nine NARX networks created in the first part of the modeling phase. For most of the rows, it can be seen that the RMSE values increase in subsequent periods. This may be due to the fact that each subsequent period is farther away from the training data.

Another interesting pattern can be seen in the case of the first period. The RMSE values generated for NARX networks based on forehearth sections number 1, 2, 3, 4, and 5 are lower than the RMSE of the ANNs set. This could suggest that the data from these five forehearths sections are sufficient to make the forecast. However, in the next three periods, the ANNs set has a clear advantage over NARX networks, with only the five sections listed. This example shows that when assessing the effectiveness of ANNs models, it is not worth relying on just one test period. The more possibilities to test the forecasts, the more reliable the evaluation of the created ANNs model.

The aforementioned research problem, which concerned the prediction of the number of defective products, is worth attention because by predicting this number, it is possible to determine how much glass cullet waste will appear after production. This allows the glassworks workers to better plan the demand for raw materials (glass cullet is one of the raw materials used). In addition, knowing the number of defective products, it is possible to more precisely determine the time needed to produce a given number of products.

Plans for further research may include more tests of the proposed approach, e.g. using data from other production lines in a glass factory or from other manufacturing companies. Furthermore, in the proposed approach, it is possible to add the step of selecting the appropriate number of hidden neurons in NARX networks, which could improve the prediction result. It also could be valuable to compare the predictive ability of the proposed ANNs model with other machine learning techniques (e.g., regression trees, random forests, support vector regression). In the proposed approach, for example, a random forest can be used instead of ANNs. Such an experiment could show whether the proposed approach is appropriate also for other types of models based on machine learning techniques.

4 Summary

The paper describes the process of creating a neural model of the production process on the example of a glassworks. The application of the created model to predict the number of defective products was presented on the basis of historical data on the values of selected manufacturing process parameters and the corresponding number of defective products. A characteristic feature of the proposed model is the creation of separate NARX ANNs for each stage of the manufacturing process. Each of the ANNs takes into consideration only that part of the manufacturing process parameters, which is assigned to a given stage of the process. The additional NARX ANN then considers all parameters along with the prediction results of previous ANNs.

The proposed neural model allows reducing the prediction error in comparison with the classic approach in which only one ANN is used, including all process parameters. Moreover, the proposed approach is independent of the business field. The presented example concerns the glass industry, but the approach can also be used in other industries or branches of industry. It seems that for each research problem in which the relationship between the input variables divided into certain subgroups and the quantitative output variable is sought, the proposed approach can be applied.

The time devoted to building the neural model may be a limitation of the proposed approach. The neural model contains many separate ANNs, each of which must first be trained and then tested. This takes more time than the classic approach based on just one ANN. The second limitation is the number of historical values that must be available to make a forecast. In the example of a glassworks considered, it was assumed that the six previous values of the manufacturing process parameters and the number of defective products should be considered, because the previous six values have the greatest impact on the future number of defective products. Due to the fact that the proposed model works in two stages, it is necessary to have twelve previous values of the parameters and the number of defective products. In the case of the classic approach with one ANN, it is sufficient to have only six previous values of the parameters mentioned.

References

Taşıran, A.C.: Internet of things and statistical analysis. In: Al-Turjman, F. (eds.) Performability in Internet of Things. EAI/Springer Innovations in Communication and Computing, pp. 127–136. Springer, Cham (2019). https://doi.org/10.1007/978-3-319-93557-7_8

Larose, D.T., Larose, C.D.: Discovering Knowledge in Data: An Introduction to Data Mining. Wiley, Hoboken, New Jersey (2014)

Chapman, P., et al.: CRISP-DM 1.0: step-by-step data mining guide. Computer Science (2000)

Witten, I.H., Frank, E., Hall, M.A., Pal, C.J.: Data mining: Practical Machine Learning Tools and Techniques, 4th edn. Morgan Kaufmann Publishers Inc., San Francisco (2016)

Čížek, P., Sadıkoglu, S.: Robust nonparametric regression: a review. WIREs Comput. Stat. 12(3), 1–16 (2020)

Amiri, M., Jensen, R.: Missing data imputation using fuzzy-rough methods. Neurocomputing 205, 152–164 (2016)

Antosz, K., Mazurkiewicz, D., Kozłowski, E., Sęp, J., Żabiński, T. Machining process time series data analysis with a decision support tool. In: Machado, J., Soares, F., Trojanowska, J., Ottaviano, E. (eds.) Innovations in Mechanical Engineering. ICIENG 2021. LNME. Springer, Cham (2022). https://doi.org/10.1007/978-3-030-79165-0_2

Setlak, G., Pasko, L.: Random forests in a glassworks: knowledge discovery from industrial data. In: Świątek, J., Borzemski, L., Wilimowska, Z. (eds.) ISAT 2019. AISC, vol. 1051, pp. 179–188. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-30604-5_16

Kozłowski, E., Antosz, K., Mazurkiewicz, D., Sęp, J., Żabiński, T.: Integrating advanced measurement and signal processing for reliability decision-making. Eksploatacja i Niezawodnosc Maint. Reliab. 23(4), 777–787 (2021)

Merh, N.: Applying predictive analytics in a continuous process industry. In: Laha, A. (eds.) Advances in Analytics and Applications. Springer Proceedings in Business and Economics. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-1208-3_10

Krimpenis, A., Benardos, P.G., Vosniakos, G.-C., Koukouvitaki, A.: Simulation-based selection of optimum pressure die-casting process parameters using neural nets and genetic algorithms. Int. J. Adv. Manuf. Technol. 27(5), 509–517 (2006)

Meré, J.B.O., Marcos, A.G., González, J.A., Rubio, V.L.: Estimation of mechanical properties of steel strip in hot dip galvanising lines. Ironmak. Steelmak. 31(1), 43–50 (2004)

Lin, W.S., Wang, K.S.: Modelling and optimization of turning processes for slender parts. Int. J. Prod. Res. 38(3), 587–606 (2000)

Suneel, T.S., Pande, S.S., Date, P.P.: A technical note on integrated product quality model using artificial neural networks. J. Mater. Process. Technol. 121(1), 77–86 (2002)

Ali, I.G., Chen, Y.T.: Design quality and robustness with neural networks. IEEE Trans. Neural Netw. 10(6), 1518–1527 (1999)

Ozcelik, B., Erzurumlu, T.: Comparison of the warpage optimization in the plastic injection molding using ANOVA, neural network model and genetic algorithm. J. Mater. Process. Technol. 171(3), 437–445 (2006)

Li, M., Feng, S., Sethi, I.K., Luciow, J., Wagner, K.: Mining production data with neural network & CART. In: Third IEEE International Conference on Data Mining, pp. 731–734 (2003)

Zhou, Q., Xiong, Z., Zhang, J., Xu, Y.: Hierarchical neural network based product quality prediction of industrial ethylene pyrolysis process. In: Wang, J., Yi, Z., Zurada, J.M., Lu, B.L., Yin, H. (eds.) Advances in Neural Networks - ISNN 2006. ISNN 2006. LNCS, vol. 3973, pp. 1132–1137. Springer, Berlin, Heidelberg (2006). https://doi.org/10.1007/11760191_165

Paśko, Ł, Kuś, A.: Bootstrap aggregation technique for evaluating the significance of manufacturing process parameters in the glass industry. Tech. Sci. 24, 135–155 (2021)

Peters, H., Link, N.: Cause & effect analysis of quality deficiencies at steel production using automatic data mining technologies. IFAC Proc. Vol. 43(9), 56–61 (2010)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Paśko, Ł., Antosz, K. (2022). Neural Model of Manufacturing Process as a Way to Improve Predictability of Manufacturing. In: Gapiński, B., Ciszak, O., Ivanov, V. (eds) Advances in Manufacturing III. MANUFACTURING 2022. Lecture Notes in Mechanical Engineering. Springer, Cham. https://doi.org/10.1007/978-3-031-00805-4_3

Download citation

DOI: https://doi.org/10.1007/978-3-031-00805-4_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-00804-7

Online ISBN: 978-3-031-00805-4

eBook Packages: EngineeringEngineering (R0)