Abstract

The particles encountered in plastics and paints display a wide range of physical and chemical characteristics. Many of these characteristics affect both the way that the particles process during plastic or paint manufacture and the impact they have on the end-use properties of the plastic or paint. In this chapter, we make a broad, high-level survey of the analytical techniques used to characterize particles, including their appearance, size distribution, elemental composition, bulk properties, and surface properties, to make the reader aware of what analytical options are available and what these options can reveal.

Introduction

There is a wide range of physical and chemical analyses by which we can characterize small particles. Each technique reveals a different aspect of the particle composition, behavior, or both. By understanding the analytical options available, the paint or plastics formulator can design experiments to elucidate particle properties that affect the end-use properties of importance in a specific product or application. In some cases, the results of an analysis can be directly related to a specific end-use property, while in other cases the results from different techniques must be combined to gain a more complete understanding of particle performance.

Most of the techniques that we will consider in this chapter are discussed in books devoted entirely to each individual method, an indicator of the level of detail and complexity that each has reached. Due to space limitations, as well as to keep within the intended scope of this book, we will only briefly summarize the details of each technique, with the hope that this information is enough to give formulators an understanding of the scope and limitations of the different characterization methods available to them.

Particle Size and Size Distribution

The defining attribute of the particles discussed in this book is their size. As detailed in Chap. 1, our interest is in particles with sizes ranging from a few tens of nanometers to roughly 20 microns. Most particle properties are sensitive to size within this range, and for this reason, it is important to characterize particle sizes as completely as possible. There are several techniques for measuring size and they do not always agree. In some cases, this is because of limitations in the assumptions that are made about the measurement technique or its interpretation, while in other cases, it is due to the very definition of what, exactly, a particle is.

Defining a Particle

We have been using the term “small particle” to indicate a single object of a certain size. While this definition may appear explicit, there is in fact some ambiguity in it. This ambiguity originates from the tendency of particles to stick to one another with varying strengths of attachment. Individual particles are referred to as primary particles. Two or more primary particles that are attached to one another but can be easily separated by typical dispersion processes are referred to as agglomerates. This is typical of particles that are held together by physical attractions. By contrast, aggregates are groups of particles that are held so strongly together that they cannot be separated by particle dispersion processes. Aggregates are typically treated as if they are single, larger particles. Primary particles in aggregates are held together by chemical bonds. Aggregates are often created when particles are heated to high temperatures during their production.

Defining a Particle Size

The meaning of particle size is quite simple when all particles have a regular shape (e.g., a sphere or cube). In such instances a number of distances can be used to characterize size, for example, diameter for a sphere, side or diagonal length for a cube, etc. However, except for resin particles, it is nearly always the case that particle shapes are not homogeneous across the entire population of particles. Such distributions of particles are known as “polydisperse”. A distribution in which all particles are of the same size is known as “monodisperse”.

In such cases, how do we characterize the size of the particle with a single number? There are several options available. We could, for example, measure the longest dimension (of importance if we wish to filter the material), or, if the particles are of uniform shape, a specific dimension of the particle (e.g., the length of rod-like particles). One of the more common methods is to characterize size based on the size of a sphere that would have the same properties: for example, a sphere of the same volume as the particle, or of the same area as the particle, or that would settle at the same rate as the particle, or that has the same diameter as the maximum or minimum length of the particle. It is critical that if we are measuring particle size for a specific purpose (such as determining the amount of time it would remain suspended in air, or how well it scatters light), we use the measurement parameter that is of the most relevance.
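To illustrate how much the choice of equivalent sphere matters, the following minimal sketch computes three candidate "sizes" for one particle. The particle (a cube with 1.0 micron edges) and the function names are hypothetical and chosen only for illustration.

```python
import math


def volume_equivalent_diameter(volume):
    """Diameter of the sphere whose volume equals `volume`."""
    return (6.0 * volume / math.pi) ** (1.0 / 3.0)


def surface_equivalent_diameter(area):
    """Diameter of the sphere whose surface area equals `area`."""
    return math.sqrt(area / math.pi)


# Hypothetical particle: a cube with 1.0 micron edges.
edge = 1.0
d_volume = volume_equivalent_diameter(edge ** 3)
d_surface = surface_equivalent_diameter(6.0 * edge ** 2)
d_longest = edge * math.sqrt(3.0)  # body diagonal, the longest dimension

print(f"volume-equivalent diameter:  {d_volume:.3f} microns")
print(f"surface-equivalent diameter: {d_surface:.3f} microns")
print(f"longest dimension:           {d_longest:.3f} microns")
```

For this cube the three definitions differ by roughly 40% from smallest to largest, which is one reason that different measurement techniques can report different sizes for the same material.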

When interpreting the results of a given type of particle size measurement, it is important to keep in mind the basis by which size is defined. This is particularly true when reconciling differences in the results of different types of measurement.

Particle Size Distributions

It is rare that all the particles of a given material have the same size. Instead, a distribution of sizes is common. Such a distribution is typically log-normal. This distribution is skewed to the right when plotted on a linear X-axis but takes the form of a symmetric bell curve when plotted with a logarithmic X-axis (Fig. 2.1) [1]. This curve type is predicted theoretically for small particles that are attrited from larger particles (i.e., made from the top down) and also for particles that are grown by adding atoms or molecules to smaller (seed) crystals (i.e., made from the bottom up) [2,3,4,5].

Particle size distribution curves can be presented in two ways: as a probability density function and as a cumulative distribution function. Figure 2.2a is an example of the probability density function for a normal (Gaussian) distribution. Here, we find the probability of a particle with a size between x1 and x2 as the area under the curve between these two sizes. When presented as a cumulative distribution function (Fig. 2.2b), the y-value corresponding to an x-value is the fraction of particles with size x or lower. In this way of presenting the distribution, the probability of a particle with a size between x1 and x2 is the difference in y-values between these two x-values. Both ways of expressing the particle size distribution are equally valid, and the information contained within these graphs is the same. They are simply presented on a different basis.
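The equivalence of the two presentations can be checked numerically. The sketch below assumes a log-normal distribution described by a median size and a geometric standard deviation (both values are hypothetical), and computes the fraction of particles between two sizes once as the area under the probability density function and once as a difference of cumulative values.

```python
import math


def lognormal_pdf(x, median, gsd):
    """Probability density of a log-normal distribution at size x."""
    mu, sigma = math.log(median), math.log(gsd)
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))


def lognormal_cdf(x, median, gsd):
    """Fraction of particles with size x or lower."""
    mu, sigma = math.log(median), math.log(gsd)
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))


def area_under_pdf(x1, x2, median, gsd, steps=2000):
    """Trapezoid-rule area under the density curve between x1 and x2."""
    h = (x2 - x1) / steps
    total = 0.5 * (lognormal_pdf(x1, median, gsd) + lognormal_pdf(x2, median, gsd))
    for i in range(1, steps):
        total += lognormal_pdf(x1 + i * h, median, gsd)
    return total * h


# Hypothetical distribution: median 0.25 micron, geometric standard deviation 1.5.
median, gsd = 0.25, 1.5
x1, x2 = 0.20, 0.30

fraction_from_cdf = lognormal_cdf(x2, median, gsd) - lognormal_cdf(x1, median, gsd)
fraction_from_pdf = area_under_pdf(x1, x2, median, gsd)
print(f"from CDF difference: {fraction_from_cdf:.4f}")
print(f"from area under PDF: {fraction_from_pdf:.4f}")
```

The two numbers agree to within the accuracy of the numerical integration, as expected for two views of the same information.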

Because larger particles can be broken up into millions of smaller particles, it is important when we describe the size characteristics of a sample of powder that we specify whether we are making this characterization on a number basis or a weight basis. For example, if we begin with two 15 micron particles, and crush one of the particles into 3.375 million particles that are 0.1 micron in diameter, then we can describe the average particle size based on weight as being 7.55 microns (there is an equal weight of 15 micron particles and 0.1 micron particles), while the average size per particle would be a very small amount greater than 0.1 micron based on the number (there is less than 1 ppm of large particles). In most cases in the coatings and plastics industries, we choose to characterize particle size on a weight basis rather than a number basis. This is because we develop a given formulation based on the weight of the constituent particles, not on their number.
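The arithmetic in this example can be reproduced in a few lines. The sketch below assumes that all particles are spheres of equal density, so that the weight of a particle is proportional to the cube of its diameter; the variable names are illustrative only.

```python
# Two 15 micron particles; one is crushed into 0.1 micron particles.
d_large = 15.0  # microns
d_small = 0.1   # microns

n_large = 1
# Volume is conserved, so the count scales with the cube of the size ratio.
n_small = round((d_large / d_small) ** 3)

diameters = [d_large, d_small]
counts = [n_large, n_small]
# Relative weight of each size class (equal density assumed).
weights = [n * d ** 3 for n, d in zip(counts, diameters)]

number_mean = sum(n * d for n, d in zip(counts, diameters)) / sum(counts)
weight_mean = sum(w * d for w, d in zip(weights, diameters)) / sum(weights)
large_number_fraction = n_large / sum(counts)

print(f"small particles created: {n_small:,}")
print(f"weight-basis mean size:  {weight_mean:.2f} microns")
print(f"number-basis mean size:  {number_mean:.6f} microns")
print(f"large particles by number: {large_number_fraction * 1e6:.2f} ppm")
```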

The attractive forces between particles of the dimensions typically seen in paints and plastics (0.1 micron–20 microns) are strong enough that these particles are rarely present as individuals, but rather are found bound together in agglomerates. The sizes of agglomerates are controlled by a number of factors, but as typically encountered in the coatings and plastics industries, these agglomerates are composed of tens to millions of primary particles. When measuring particle size, it is important to bear in mind that agglomerates would be counted as a single large particle if left intact. For this reason, the sample is typically sheared prior to measurement, with the intention that this will disrupt, at least temporarily, the agglomerates. Ideally, the shear intensity will match that of the process used to manufacture the paint or plastic so that the particle size distribution as measured will match the distribution in use.

Most particle size measurements are done in a slurry, and dispersion is accomplished through one of the methods outlined in Chap. 11. In the case of in-process slurries or final paints, no additional dispersion beyond that used to make the slurry or paint is used for particle size testing.

Analytic Techniques

Several distinct technologies have been developed to measure particle size [6,7,8]. Some are technologies that already existed and could be used for this purpose, while others are technologies specifically designed with this application in mind. A common aspect of all methods and techniques is the necessity of testing a truly representative sample of the larger population. Certain preparation techniques can inadvertently segregate particles in some way (by size, shape, electrical charge, etc.), and it is incumbent on the analyst to ensure that such segregations are avoided (or at least minimized).

Below we review some of the more common techniques used to quantify particle size and size distribution.

Microscopy

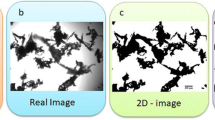

Perhaps the most obvious means of characterizing particle size is to image the particles of interest and physically determine the dimensions of a large number of particles from these images. Computer algorithms are available to measure these dimensions both rapidly and accurately. Optical microscopes can be used on particles at the large end of the range of our interest, but in most cases, electron microscopes are needed for this type of analysis (these instruments are covered in greater detail in a later section of this chapter).

While there is much to be said of the old adages that a picture is worth a thousand words and that seeing is believing, there are several potential sources of error in these measurements. As discussed above, sampling inhomogeneity, which is always a concern, is especially relevant to microscopy, for three reasons. First, the deposition of the particles onto the microscope grid can result in the preferential placement of particles based on their size. Second, we are looking at an infinitesimally small number of particles compared to the number of particles present in even 1 gram of material, and even sampling thousands of particles may not give a true representation of particle variability. Finally, there is the concern that irregularly shaped particles show a preferential orientation when placed on the microscope grid, potentially accentuating certain particle directions while de-emphasizing others.

X-Ray Line Broadening

Solids can be classified as crystalline or amorphous depending on both the short-range and long-range order of their constituent atoms. In a crystalline solid, the atoms are arranged in a regular, repeating pattern. Light of the appropriate wavelength (roughly the atomic scale, meaning light in the X-ray range), when directed at a crystalline material, will be scattered by the atoms within it. Since these atoms are in a repeating arrangement, under certain conditions, which depend on the wavelength of light, the direction of the light and the distances between repeating atoms in the crystal, the light scattered by different crystalline elements will constructively interfere, resulting in a strong intensity of the light in specific directions. This scattering is referred to as X-ray diffraction.

When crystal sizes are small the number of repeating units is limited, and the implicit assumption in this process—that the atoms repeat infinitely in all directions—breaks down. This leads to a broadening of the X-ray beams that scatter from the crystal. By measuring the breadth of the beams, one can calculate the size of the crystal [9]. This technique can be applied to crystals of sizes up to about 0.20 microns.
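The text cites [9] for this calculation without naming a formula. A relation commonly used for this purpose is the Scherrer equation, L = Kλ/(β cos θ), and the sketch below assumes it. The shape factor K = 0.9, the Cu Kα wavelength, and the peak position and width are illustrative assumptions, and instrumental broadening is ignored.

```python
import math


def scherrer_size_nm(fwhm_deg, two_theta_deg, wavelength_nm=0.15406, shape_factor=0.9):
    """Crystallite size from peak breadth using the Scherrer equation.

    fwhm_deg      -- full width at half maximum of the peak, in degrees of 2-theta
    two_theta_deg -- peak position, in degrees of 2-theta
    wavelength_nm -- X-ray wavelength (default: Cu K-alpha, an assumption)
    shape_factor  -- dimensionless constant K (0.9 is a commonly assumed value)
    """
    beta = math.radians(fwhm_deg)              # breadth must be in radians
    theta = math.radians(two_theta_deg / 2.0)  # Bragg angle is half of 2-theta
    return shape_factor * wavelength_nm / (beta * math.cos(theta))


# Hypothetical peak at 25.3 degrees 2-theta with two different breadths.
size_narrow = scherrer_size_nm(fwhm_deg=0.2, two_theta_deg=25.3)
size_broad = scherrer_size_nm(fwhm_deg=0.4, two_theta_deg=25.3)
print(f"FWHM 0.2 deg -> about {size_narrow:.0f} nm")
print(f"FWHM 0.4 deg -> about {size_broad:.0f} nm")
```

Doubling the peak breadth halves the calculated size, which is why the method loses sensitivity as crystals grow and their peaks become as narrow as the instrument allows.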

There are several limitations to this technique. The first is that it can be applied only to crystalline materials. It also assumes that the crystals are perfect—any imperfections or deviations, for instance, due to the partial replacement of one component (such as sodium) by another (such as potassium), or due to any stress on the crystal itself, will also broaden the beam. Finally, what we may consider a single or primary particle may in fact be made of several individual crystalline domains that are not aligned with one another. For example, TiO2 particles typically consist of twins or higher-order intergrowths (Fig. 2.3).

Light Scattering

Perhaps the most common method of determining the size of particles of interest to us is the use of light scattering techniques. This is because particles in this size domain typically scatter light strongly, which is often the very reason that these size domains are used. There are two broad classes of light scattering technologies used for this purpose: dynamic light scattering and static light scattering.

Dynamic light scattering (also known as photon correlation spectroscopy) relies on the principles of Brownian motion [10]. In this measurement, laser light is directed at a suspension of the particles of interest. Light scattered by different particles will combine constructively or destructively with light scattered by other particles, modifying the total scattering intensity. By monitoring intensity over short periods of time (microseconds), the velocities of the particles can be determined. Particle sizes can then be calculated based on the measured velocities.

This technique assumes that the light is scattered equally in all directions (Rayleigh scattering), which is only true for particles that are significantly smaller than the wavelength of light. This creates an upper particle size limit for this technique of roughly one-half micron, restricting its application primarily to nanoparticles. The size reported is the hydrodynamic diameter of the particle, which is the size of a sphere that will diffuse through the solvent in the same way as the particle.
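The step from measured particle motion to a hydrodynamic diameter is commonly made with the Stokes-Einstein relation, d = kT/(3πηD), where D is the translational diffusion coefficient. The text does not name this relation, so the sketch below should be read as an assumption; the diffusion coefficient and the water properties are illustrative values.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K


def hydrodynamic_diameter(diffusion_coeff, temperature_k=298.15, viscosity=8.9e-4):
    """Hydrodynamic diameter (m) from the Stokes-Einstein relation.

    diffusion_coeff -- translational diffusion coefficient, m^2/s
    temperature_k   -- absolute temperature, K (default: 25 C)
    viscosity       -- liquid viscosity, Pa*s (default: approximate value for water at 25 C)
    """
    return BOLTZMANN * temperature_k / (3.0 * math.pi * viscosity * diffusion_coeff)


# Hypothetical diffusion coefficient obtained from an intensity-fluctuation analysis.
d_h = hydrodynamic_diameter(4.9e-12)
print(f"hydrodynamic diameter: {d_h * 1e9:.0f} nm")
```

Because the result depends on the viscosity and temperature of the liquid, both need to be known accurately for the reported size to be meaningful.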

In the static light scattering measurement, particle sizes from a few tenths of a micron to as large as a few millimeters are characterized by measuring the degree to which a focused laser beam is deflected when passed through a dilute suspension of particles. In this particle size regime, light scattering occurs by a different mechanism (Mie scattering—see Chap. 3) than in the regime probed by dynamic light scattering. In Mie scattering, light is deflected by a certain angle, rather than being completely randomized as in Rayleigh scattering. The intensity of light as a function of deflection angle is used to calculate the particle size distribution. The resolution of static light scattering measurements is dependent in part on the distance between the sample and detector, since diverging light beams spread over distance, and so these instruments tend to be larger than those based on other principles.

An important aspect of both of these types of light scattering-based techniques is that the suspensions probed must be very dilute (e.g., 0.1% by weight or less). This is because these techniques rely on the light being scattered only once. At higher particle concentrations, the degree of multiple light scattering, which interferes with the interpretation of the scattering results, is unacceptable.

Acoustic Spectroscopy

A major drawback of the light scattering technologies discussed above is that they can be done only on dilute slurries—much more dilute than a typical paint. This restriction does not apply to acoustic spectroscopy, which is fully capable of measuring particle sizes in concentrated systems (50% or more by volume) [11,12,13]. An additional benefit of this technique is that dynamic processes such as flocculation or dispersion can be followed over time.

The basis of this method is that sound waves are attenuated when they pass through a dispersion of particles in a liquid. The exact form of this attenuation, and its frequency dependence, is determined by a number of factors that include the particle size distribution. This technique can measure particle sizes from roughly tens of nanometers to millimeters.

Sedimentation

Sedimentation techniques rely on the separation of particles based on the rates at which they settle in a liquid. Several factors control this rate, such as particle and solvent densities, viscosity, etc., as shown in Stokes’ law (Eq. 2.1), but when these are controlled, sedimentation rates are determined by particle size.

\[ v = \frac{r^{2}\left( \rho_{\text{p}} - \rho_{\text{l}} \right) g}{18\,\eta} \qquad (2.1) \]

where:

v is the sedimentation velocity,

η is the viscosity of the liquid,

ρp is the density of the particle,

ρl is the density of the liquid,

g is the acceleration due to the external force (either gravitational or centrifugal), and

r is the particle diameter.

Sedimentation occurs when particles are set in motion by an external force. In theory, gravity can provide this force, but, for particles in the size ranges of interest to us, the time needed for separation by gravity can be too long to be practical. Instead, slurries or suspensions are typically rotated at high speeds and so separated by centrifugal forces [14]. In some instruments, the particles are collected based on size, and then the amount of each size is determined [15]. In others, the separated particles are passed between an X-ray source and a detector [16]. The degree of X-ray intensity attenuation is determined by the concentration of the particles.
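A rough calculation shows why gravity alone is impractical. The sketch below uses Stokes' law written in terms of particle diameter, v = d²(ρp − ρl)g/(18η), and assumes a dense pigment-like particle settling in water. The densities, viscosity, settling distance, and the 1000 g centrifugal field are all illustrative assumptions.

```python
def stokes_velocity(diameter, rho_particle, rho_liquid, viscosity, acceleration=9.81):
    """Settling velocity (m/s) of a sphere from Stokes' law, diameter form.

    diameter      -- particle diameter, m
    rho_particle  -- particle density, kg/m^3
    rho_liquid    -- liquid density, kg/m^3
    viscosity     -- liquid viscosity, Pa*s
    acceleration  -- gravitational or centrifugal acceleration, m/s^2
    """
    return diameter ** 2 * (rho_particle - rho_liquid) * acceleration / (18.0 * viscosity)


# Hypothetical case: 0.25 micron particle, density 4000 kg/m^3, in water.
diameter = 0.25e-6
distance = 0.01  # settle through 1 cm

v_gravity = stokes_velocity(diameter, 4000.0, 1000.0, 1.0e-3)
v_centrifuge = stokes_velocity(diameter, 4000.0, 1000.0, 1.0e-3, acceleration=1000 * 9.81)

hours_gravity = distance / v_gravity / 3600.0
seconds_centrifuge = distance / v_centrifuge
print(f"gravity:    {hours_gravity:.0f} hours to settle 1 cm")
print(f"centrifuge: {seconds_centrifuge:.0f} seconds to settle 1 cm")
```

Under these assumptions the particle needs more than a day to settle one centimeter under gravity but only a minute or two in the centrifuge.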

Large Aggregates

Our interest is often in the size of particle aggregates rather than the size of the individual particles themselves. This is particularly true when evaluating the degree to which particles are separated after a dispersion process (see Chaps. 11 and 12), where aggregate size can be as high as several hundred microns. The method used to quantify these large aggregates is dependent on the end-use application (coatings or plastics).

To determine the aggregate content of a liquid paint (or of a particle slurry used to make a liquid paint), the material of interest is placed on a metal block into which a tapered channel has been machined. A metal bar (“doctor blade”) is then used to draw the paint down through the channel, from the deep end of the groove to the shallow end (which is flush with the block surface). The channel is characterized by its width, its length, and its maximum depth, and can be customized to a specific paint. The most common block is the “Hegman gauge”, which has a maximum channel depth of 100 microns (4 mils; see Footnote 1).

The drawdown is examined by eye under a bright light or automatically with a camera. The surface should be smooth and glossy at the deep end of the channel, but will begin to appear rough at a certain depth. This depth is known as the fineness line for the dispersion and represents the size of the majority of the aggregates remaining in the slurry or paint grind. When large (“oversize”) particles or aggregates are present, they cause a line in the drawdown due to being dragged by the doctor blade. The size of the aggregate can be estimated based on the initial position of this line in the channel. The side of the channel typically has markings indicating depth, in units of microns, mils, or “Hegman Units”, as indicated in Table 2.1.
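The three depth scales can be interconverted with simple arithmetic. The sketch below assumes the commonly used convention that the Hegman scale runs linearly from 0 at the 4 mil deep end to 8 at the zero-depth end. Table 2.1 is not reproduced here, so this convention is an assumption and should be checked against the gauge in use.

```python
MICRONS_PER_MIL = 25.4


def hegman_to_mils(hegman):
    """Channel depth in mils for a Hegman reading (assumed linear 0-8 scale)."""
    if not 0.0 <= hegman <= 8.0:
        raise ValueError("Hegman readings are assumed to lie between 0 and 8")
    return 4.0 - 0.5 * hegman


def hegman_to_microns(hegman):
    """Channel depth in microns for a Hegman reading."""
    return hegman_to_mils(hegman) * MICRONS_PER_MIL


for reading in (0, 2, 4, 6, 7, 8):
    print(f"Hegman {reading}: {hegman_to_mils(reading):.1f} mils, "
          f"{hegman_to_microns(reading):.1f} microns")
```

Note that on this convention a higher Hegman number means a finer dispersion, which is the opposite sense of the micron and mil scales.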

There are two situations in which a drawdown is used. The first is to test the quality of a finished paint or slurry during production. It is often the case that the degree of dispersion of a paint batch is monitored over time, to determine when the dispersion process is complete and the paint is ready to proceed to the next stage of production. The second is to intentionally use less energy than required for complete grind (“low shear dispersion”). Here, the intent is to determine the ease or speed of dispersion. This analysis is typically done to characterize the raw materials of the paint, rather than the paint itself, and is often used as a product quality release test, for example, for titanium dioxide pigments.

Undispersed aggregates present in plastics can be quantified using a variety of techniques. A common technique for plastic film applications is to illuminate a semi-translucent film from behind [17]. Undispersed matter appears as dark spots on the film, which are detected either by eye or, more commonly, with optical cameras. The dark spots are counted for a defined weight or area of film. This widely used technique has the drawback that it cannot identify the composition of a dark spot, which could be a polymer gel or undispersed solids.

A second method is to extrude a molten plastic that contains particles through a series of screens, referred to as a “screen pack”. The layer of screens is often made with a gradation of mesh opening sizes. After a predetermined amount of polymer melt has been passed through the screen pack, the screens are removed, the plastic residue is removed and the amount of trapped solid material is determined with a variety of analytical techniques. Typically, X-ray fluorescence techniques are used to identify the elemental components of the retained solid. The intensity of the fluorescence is calibrated with standards to provide a quantitative value of retained matter from the sample.

An alternative is to extrude the melt through the screen pack while monitoring pressure [18]. This extrusion technique provides a “filter pressure value”, which is the pressure differential when extruding resin through the screen pack before and after the sample has been processed. If desired, the trapped material on the screen pack can be examined under a microscope to determine the chemical composition of the aggregated particles.

Undertone of TiO2 Pigment

While it is not possible to see individual TiO2 particles by eye, there are perceptible differences in the appearance of TiO2 pigment based on particle size. This is because the efficiency of visible light scattering by a TiO2 particle is partly determined by the size of the particle. Slightly smaller particles preferentially scatter shorter wavelength light (blue), while slightly larger particles scatter all wavelengths relatively evenly (neutral).

To test for TiO2 undertone, the TiO2 pigment of interest and a black pigment (typically carbon black or black iron oxide) are mulled together in mineral oil, and the color is determined. Alternatively, for plastics applications, films containing the two types of pigments can be cast. Although subtle, the differences in appearance can be readily measured and can be seen by eye in side-by-side comparisons. An example of the colors for plastics films of TiO2 grades at the two ends of the size range used in pigments (mean sizes of 0.23 and 0.26 microns) is shown in Fig. 2.4 (see Footnote 2).

Gas Adsorption Measurements

Much can be learned about particle surfaces by measuring their interactions with other molecules. Of particular interest are their interactions with inert gas molecules [19] (see Footnote 3), which are discussed in this section, and their interactions in water suspensions with dissolved ions, which are discussed later in the section on surface charge.

There is generally an energetically favorable interaction between surfaces and the gas molecules to which they are exposed, as this leads to a reduction in surface energy. As a result, gases generally adsorb onto surfaces. The amount of gas adsorbed and the strength of this adsorption are functions of the concentration of the gas (gas pressure is usually used as a proxy for this), the temperature of this exposure, the area occupied by a single gas molecule, the surface area, and the surface energy (gas molecules attach more strongly to higher energy surfaces). We can therefore calculate surface area and surface energy if we measure the pressure of a set amount of gas that is exposed to the particles, the temperature, and the weight of the sample, and use standard values for the area occupied by a gas molecule (i.e., the adsorption footprint). In addition, when pores of 50 nm or smaller are present, their size and volume can be calculated.

In the typical gas adsorption experiment, a known quantity of the powder of interest is heated under a vacuum to remove any initially adsorbed species. The sample is then cooled (typically to liquid nitrogen temperatures) and known quantities of a gas (typically nitrogen) are introduced step-wise into the sample vessel. Between each dose, the gas is allowed to equilibrate with the surface and the gas pressure is measured. The number of non-adsorbed gas molecules can be calculated from the measured gas pressure using the ideal gas law and, by difference with the amount of gas added to the vessel, the number of adsorbed molecules can be determined.
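The "by difference" calculation for a single dose can be sketched as follows. The sketch assumes that all of the free gas occupies one known volume at one temperature; commercial instruments must account for warm and cold zones of the sample vessel, which is ignored here. The dose, pressure, and volume are hypothetical.

```python
GAS_CONSTANT = 8.314462618  # J/(mol*K)


def moles_in_gas_phase(pressure_pa, free_volume_m3, temperature_k):
    """Moles of non-adsorbed gas from the ideal gas law, n = pV/(RT)."""
    return pressure_pa * free_volume_m3 / (GAS_CONSTANT * temperature_k)


def moles_adsorbed(moles_dosed, pressure_pa, free_volume_m3, temperature_k):
    """Adsorbed amount: gas added to the vessel minus gas left in the gas phase."""
    return moles_dosed - moles_in_gas_phase(pressure_pa, free_volume_m3, temperature_k)


# Hypothetical dose: 1.0e-4 mol of nitrogen into 20 cm^3 of free space at 77 K,
# with an equilibrium pressure of 2000 Pa.
n_ads = moles_adsorbed(
    moles_dosed=1.0e-4,
    pressure_pa=2000.0,
    free_volume_m3=20.0e-6,
    temperature_k=77.0,
)
print(f"adsorbed amount: {n_ads:.3e} mol")
```

Repeating this calculation after each dose, and accumulating the doses, gives the adsorbed amount as a function of equilibrium pressure, which is the adsorption isotherm.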

Ideally, a single layer of molecules will adsorb onto the surface (Langmuir adsorption). However, there are two related factors that complicate this process. The first is that the gas molecules can adsorb onto already adsorbed molecules (i.e., the adsorbed molecules are themselves adsorption sites). This is very similar to the formation of the liquid phase of the gas, in the sense that the interactions between the already adsorbed molecules and the gaseous molecules are comparable to the interactions between condensed molecules and their counterparts in the gaseous state.

The second complication is that surfaces are not completely flat. Surface features such as edges and corners tend to have higher surface energies than the flat areas of the particle and so the gas molecules will preferentially adsorb onto them. However, of greater importance is the presence of pores within some particles, and the pores between particles. The adsorbing gas molecules can condense in these pores, increasing the uptake of the particles. While this complicates our interpretation of surface area, it is in some ways beneficial because it provides us with a means to calculate the volumes of pores as a function of their size over a certain size range.

As a result of these complications, the isotherms for adsorbed molecules can deviate greatly from an ideal curve. Six different adsorption types have been classified by the IUPAC. These are shown in Fig. 2.5. Note that, in some instances, the curves show a hysteresis—that is, surface coverage as a function of gas pressure is different when we are adding molecules to the gas phase (i.e., increasing pressure) than when we are removing molecules from the gas phase.

Surface Area

For most particles, the results of the gas adsorption measurement can be modeled using the “BET” equation, named for the initials of its three developers (Brunauer, Emmett and Teller) [20]. This equation applies when multiple layers of gas molecules adsorb onto the particle surface (Fig. 2.6). In this situation, there are two energies of importance: the energy released when a gas molecule attaches to the particle surface, and the energy released when a gas molecule attaches to an already present molecule. The energy associated with the latter interactions is approximately the heat of condensation for that molecule. The energy of interaction between the gas and the surface is a function of the chemical identities of the gas and particle as well as a function of surface energy: the higher the surface energy, the greater the energy of this interaction.

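The fractional surface coverage θ can be found based on the pressure measurements and a constant denoted as “c” by using Eq. (2.2):

\[ \theta = \frac{c\,p}{\left( p_0 - p \right)\left[ 1 + \left( c - 1 \right)\left( p/p_0 \right) \right]} \qquad (2.2) \]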

where:

θ is the fraction of surface covered by one or more molecules (θ = 1 for monolayer coverage),

p is the measured (equilibrium) pressure,

p0 is the saturated pressure, which is the vapor pressure of the condensed (liquid) molecules at the adsorption temperature, and

c is a unitless constant.

In this equation and elsewhere, p/p0 is referred to as the relative pressure of the gas.

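This relationship is often expressed using Eq. (2.3):

\[ \frac{1}{\nu_{\text{a}}\left[ \left( p_0/p \right) - 1 \right]} = \frac{c - 1}{\nu_{\text{m}}\,c}\left( \frac{p}{p_0} \right) + \frac{1}{\nu_{\text{m}}\,c} \qquad (2.3) \]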

where:

νa is the amount of gas adsorbed,

νm is the amount of gas in one monolayer, and

p, p0, and c are as in Eq. (2.2).

Note that νa/νm from Eq. (2.3) equals the θ term in Eq. (2.2).

The advantage of Eq. (2.3) is that plotting \(\frac{1}{\nu_{\text{a}}\left[ \left( p_0/p \right) - 1 \right]}\) against \(p/p_0\) gives a straight line from which the monolayer gas amount (νm) and the constant c can be calculated from the slope of the line (Sl) and its intercept (Int) using Eqs. (2.4) and (2.5):

\[ \nu_{\text{m}} = \frac{1}{Sl + Int} \qquad (2.4) \]

\[ c = 1 + \frac{Sl}{Int} \qquad (2.5) \]
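The linear BET analysis described above can be sketched in a few lines. The example generates synthetic isotherm points from assumed values of νm and c, fits a straight line to the transformed data by least squares, and recovers both constants from the slope and intercept. The nitrogen footprint of 0.162 nm² used to convert νm to a surface area is a commonly used value and is an assumption here, as are the function names and the choice of relative pressures.

```python
AVOGADRO = 6.02214076e23       # molecules per mole
N2_FOOTPRINT_M2 = 0.162e-18    # assumed area of one adsorbed N2 molecule, m^2


def bet_amount(x, nu_m, c):
    """Adsorbed amount at relative pressure x = p/p0 from the BET equation."""
    return nu_m * c * x / ((1.0 - x) * (1.0 + (c - 1.0) * x))


def fit_bet(rel_pressures, amounts):
    """Return (nu_m, c) from a least-squares line through the BET plot."""
    xs = list(rel_pressures)
    # BET transform: y = 1 / (nu_a * (p0/p - 1))
    ys = [1.0 / (nu_a * (1.0 / x - 1.0)) for x, nu_a in zip(xs, amounts)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return 1.0 / (slope + intercept), 1.0 + slope / intercept


# Synthetic data: nu_m = 1.0e-4 mol per gram of sample, c = 100 (hypothetical).
true_nu_m, true_c = 1.0e-4, 100.0
rel_pressures = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
amounts = [bet_amount(x, true_nu_m, true_c) for x in rel_pressures]

nu_m, c = fit_bet(rel_pressures, amounts)
surface_area = nu_m * AVOGADRO * N2_FOOTPRINT_M2  # m^2 per gram
print(f"nu_m = {nu_m:.3e} mol/g, c = {c:.1f}")
print(f"surface area = {surface_area:.2f} m^2/g")
```

With real data the points deviate from a straight line outside a limited range of relative pressures, so the fitting range has to be chosen with care.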

Caution must be exercised when interpreting the measured surface area values for different grades of pigmentary TiO2. All TiO2 pigment particles used in paints, and many used in plastics, are surface treated with a few percent alumina and/or silica, in order to improve end-use performance properties (this is discussed in detail in Chap. 7). Importantly, the alumina is present as small (nano) particles affixed to the pigment surface. Although the sizes of the TiO2 particles themselves are essentially the same in all pigment grades, the surface areas of these materials can vary from roughly 10 m2/g to 50 m2/g, depending on the surface treatment. For comparison, the surface area of pure TiO2 particles before surface treatment is roughly 6–7 m2/g.

The BET technique overestimates the surface areas of particles that contain micropores (smaller than 2 nm). This is because these pores fill with condensed gas at the same pressures as the gas molecules that coat the flat surfaces of the particles, and so the information about surface adsorption is convoluted with the information about pore filling. It is difficult to separate the volume of gas that is condensed in these pores from the volume adsorbed on the particle surfaces, although some methods to do this have been proposed [21].

Pore Characterization

There are two fundamentally different types of pores that can exist within a powder. The first, intra-particle pores, are found within the individual particles. An excellent example of this is the pores found in particles of diatomaceous earth (Fig. 2.7). Diatomaceous earth is composed of fossilized skeletons of diatoms (microscopic sea organisms). These particles have a nearly endless variety of shapes and sizes (up to approximately 1 mm), and nearly all have an extensive pore content with sizes varying from a few nanometers to a few microns or more.

The second type of pores is those that exist between particles. These pores are significantly larger than the intra-particle pores and are strongly affected both by the same factors that affect bulk density and by the measurement technique itself. That is, the inter-particle pore structure is affected by the arrangement of the particles in the sample.

Intra-particle pore sizes have been categorized by the IUPAC into three ranges, as shown in Table 2.2.

This classification is based in part on the practical consideration of pore size detection limits for different pore size techniques. Pore size distributions are presented in the same manner as particle size distributions (we can, for this purpose, consider pores to be air “particles” within a solid matrix). These presentation methods are discussed above in the section on particle size and size distribution.

A number of different techniques have been developed to measure the pore size distribution of materials [23, 24]. These methods fall into two categories. The first is high-pressure infiltration of a non-wetting liquid (typically mercury) into the pores. A non-wetting liquid is used because it will only penetrate a pore when forced to do so using pressure. For idealized cylindrical pores, the pressure required to fill a pore is determined by Washburn’s equation (Eq. 2.6) and is inversely proportional to the pore diameter [25]. By monitoring the amount of liquid filling the pores as a function of pressure, one can construct a pore size distribution of the material.

$$D_p = -\frac{4\gamma\cos\theta}{p} \tag{2.6}$$

where:

γ = surface tension,

θ = contact angle (greater than 90° for a non-wetting liquid; values of about 130° to 140° are commonly assumed for mercury),

p = pressure, and

Dp = pore diameter.

The minimum pore size detectable by this method is dependent on the maximum pressure that can be applied, but in most commercial instruments is in the range from 3 to 6 nm (i.e., near the lower limit of the definition of a mesopore).
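As a rough illustration of how the maximum applied pressure sets the smallest detectable pore, the following minimal sketch evaluates the Washburn relation for cylindrical pores in its usual form, D_p = -4γ cos θ / p. The surface tension (0.485 N/m) and contact angle (140°) for mercury are commonly quoted literature values assumed here for illustration; they are not taken from this chapter, and the function name is hypothetical.

```python
import math

# Assumed literature values for mercury (not given in the text)
MERCURY_SURFACE_TENSION = 0.485    # N/m
MERCURY_CONTACT_ANGLE_DEG = 140.0  # degrees

def washburn_pore_diameter(pressure_pa,
                           surface_tension=MERCURY_SURFACE_TENSION,
                           contact_angle_deg=MERCURY_CONTACT_ANGLE_DEG):
    """Diameter (m) of the idealized cylindrical pore that fills at pressure_pa."""
    if pressure_pa <= 0:
        raise ValueError("pressure must be positive")
    theta = math.radians(contact_angle_deg)
    # cos(theta) is negative for a non-wetting liquid, so the result is positive
    return -4.0 * surface_tension * math.cos(theta) / pressure_pa

if __name__ == "__main__":
    for p_mpa in (0.1, 1.0, 10.0, 100.0, 414.0):
        d_nm = washburn_pore_diameter(p_mpa * 1e6) * 1e9
        print(f"{p_mpa:7.1f} MPa -> {d_nm:10.1f} nm")
```

Under these assumptions, a maximum pressure of roughly 400 MPa corresponds to a pore diameter of a few nanometers, which is in line with the lower limit quoted above.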

Micropores and mesopores can be measured using the second category of techniques—inert gas adsorption and desorption. These techniques are an extension of those used to determine surface area, and commercial instruments are typically capable of both types of measurement. In this measurement, the sample is evacuated and heated, then placed under a high vacuum and the adsorbing gas is added, similar to the BET measurement. However, for pore size analysis, the pressure is first increased until all the pores are filled. Once the sample has equilibrated, the pressure is decreased stepwise. It is the desorption data that is then used in the pore distribution calculation (as shown in Fig. 2.5, the adsorption and desorption curves deviate when pores are present).

The distinguishing factor between micropores and mesopores is their size in relation to the adsorbing molecules (Fig. 2.8). In this figure, all molecules are chemically identical, but those within the pore that are not in direct contact with a surface, yet are close enough to be perturbed by it, are shown in white. In mesopores, the pore diameter is large compared to the adsorbing molecules, and so the majority of molecules found within the pores are in an environment similar to that of the molecules in the liquid state (i.e., the proportion of molecules attached to a surface or strongly perturbed by it is low). In Fig. 2.8a, these molecules are indicated by the darkest circles. We will refer to the collection of these molecules as the condensed phase. By contrast, although not all the molecules in a micropore are attached to a surface, those that are not are still close enough that their environment is significantly perturbed by the surface in comparison to the environment in the liquid state, so there are no condensed phase molecules in these pores (Fig. 2.8b).

The most common mathematical model for mesopore size determination is the BJH method, named for the initials of its three developers (Barrett, Joyner, and Halenda) [26]. This model assumes (a) that gas molecules in the condensed phase within a filled mesopore volatilize sharply at a certain pressure that is dependent on the pore diameter and (b) that the molecules adsorbed onto the walls of the pore or near enough to the wall to be perturbed by it are desorbed over a range of pressures rather than all at one pressure.
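Assumption (a) can be made concrete with the Kelvin equation, which is the relation generally used in BJH-type calculations to link the emptying pressure of the condensed phase to pore size. The sketch below is only a partial illustration: it computes the Kelvin radius of the condensed core for nitrogen at its boiling point and deliberately omits the adsorbed wall layer of assumption (b), which a full BJH calculation adds back. The nitrogen properties are assumed literature values, and the function name is hypothetical.

```python
import math

R_GAS = 8.314                  # J/(mol K)
# Assumed properties of liquid nitrogen at its normal boiling point
N2_SURFACE_TENSION = 8.85e-3   # N/m
N2_MOLAR_VOLUME = 34.68e-6     # m^3/mol
N2_TEMPERATURE = 77.35         # K

def kelvin_radius_nm(relative_pressure):
    """Radius (nm) of the condensed core that empties at p/p0 = relative_pressure."""
    if not 0.0 < relative_pressure < 1.0:
        raise ValueError("relative pressure must lie strictly between 0 and 1")
    numerator = 2.0 * N2_SURFACE_TENSION * N2_MOLAR_VOLUME
    denominator = R_GAS * N2_TEMPERATURE * math.log(1.0 / relative_pressure)
    return numerator / denominator * 1e9

if __name__ == "__main__":
    for rp in (0.3, 0.5, 0.7, 0.9, 0.95):
        print(f"p/p0 = {rp:4.2f} -> core radius about {kelvin_radius_nm(rp):5.2f} nm")
```

The output shows the expected trend: larger pores empty at relative pressures closer to 1, so stepping the pressure down during desorption empties progressively smaller pores.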

A different model is needed for micropores, since most of the molecules occupying these pores are perturbed by the pore surface, and only a small fraction, if any, can be considered to be in the condensed phase. In this case, density functional theory (DFT) is used to calculate the pore size distribution [27, 28, 29]. This theory, which is based on statistical mechanics, bridges the gap between the macroscopic and molecular scales. Here, the equilibrium density of the adsorbed gas molecules is determined based on the direct interactions between the surfaces and the gas molecules.

Surface Energy, Contact Angle, and Wettability

Particles interact with their environments through their surfaces, and so surface properties are very important with regard to both the ease with which the particles will be wetted by a liquid (solvent or water for paints, molten polymer for plastic) and with which they can be subsequently dispersed in a milling step. This aspect of particles is determined by their surface energy.

As described in Chap. 1, surface energy is the energy required to disrupt the interactions between atoms or molecules within the bulk of a solid when the solid is broken and a new surface is created. We can group surface energies into two classes based on both their magnitude and the type of solid. It is relatively easy to disrupt solids that are held together through weak forces, such as van der Waals forces, and as such, they are considered low surface energy materials. These include most paints and plastics, which are composed of organic polymer molecules that are held together by relatively weak intermolecular forces.

On the other hand, solids that are held together by networks of bonds, which include most of the particles found in paints and plastics (Footnote 4), require high energies to disrupt, and as such these materials are high surface energy materials. An organic surface treatment is often applied to some types of particles (e.g., pigmentary TiO2 or calcium carbonate) in part to decrease the surface energy and favorably change certain particle properties.

Broadly speaking, we can say that atoms and molecules can lower their energy by associating with other atoms or molecules. In the bulk of a solid or liquid, this interaction is with other atoms or molecules of the same type. However, the surface atoms and molecules can interact with any molecules (Footnote 5) with which they come into contact, particularly gas molecules. Therefore, we see that the energy required to break a particle into two (i.e., the surface energy of a solid or liquid; Footnote 6) is a special case of a more general type of interaction: that between molecules of any sort.

We can characterize the energetics of a surface based on how that surface interacts with different molecules or atoms—not just other molecules or atoms of the same type. We would expect to see different degrees of interaction, which can be quantified based on the energy released on adsorption when different atoms or molecules are the interacting species.

For example, one method to quantify the surface energy of a particle is by determining the interactions between its surface and a gas molecule. Returning to the previous section, we noted that the c-value in Eq. (2.2) is a function of surface energy. More precisely, it is proportional to the exponential of the difference between the heat of adsorption for the first layer on the surface and the heat of condensation of the adsorbing molecule (i.e., the heat released when the gas condenses to a liquid), as shown in Eq. (2.7).

$$c \propto \exp\left(\frac{q_a - q_L}{RT}\right) \tag{2.7}$$

where:

c is the constant in Eq. (2.2),

qa is the heat of adsorption for the first layer of gas molecules (gray circles in Fig. 2.6),

qL is the heat of condensation of the gas molecules,

R is the gas law constant, and

T is the absolute temperature.

The quantity qa is determined in part by the surface energy of the particle, and so c is related directly to particle surface energy. In this case, c tells us about the energetics of the interaction between the surface and the adsorbing atom or molecule.
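To give a feel for the magnitudes involved, the sketch below inverts the exponential relation between c and the heats of adsorption and condensation to estimate the net heat of adsorption, qa − qL = RT ln c. It assumes a proportionality constant of 1, which is a common simplification, and a measurement temperature of 77.35 K (nitrogen at its boiling point). Both are assumptions made for illustration, and the function name is hypothetical.

```python
import math

R_GAS = 8.314  # J/(mol K)

def net_heat_of_adsorption(c_value, temperature_k=77.35):
    """Estimate q_a - q_L in J/mol from the BET c constant, assuming c = exp((q_a - q_L)/RT)."""
    if c_value <= 0:
        raise ValueError("c must be positive")
    return R_GAS * temperature_k * math.log(c_value)

if __name__ == "__main__":
    for c in (10.0, 100.0, 1000.0):
        print(f"c = {c:6.0f} -> q_a - q_L about {net_heat_of_adsorption(c) / 1000:.2f} kJ/mol")
```

Because the dependence is logarithmic, a tenfold change in c corresponds to a change of only about 1.5 kJ/mol in the net heat of adsorption at this temperature.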

Of greater interest to the paint or plastics producer is the degree to which particle surfaces interact with the liquid molecules used in that specific paint or plastics manufacture. In this case, the situation is significantly more complex than when considering only the interactions between a surface and a gas. Here, we must consider the strength of the interactions between the surface and the liquid molecules as well as the strength of the interactions of the liquid molecules with one another (this is a function of the surface tension of the liquid, which is surface energy per unit area).

This latter consideration is an important one. When we immerse a particle into a liquid we are, in effect, creating a liquid surface in a manner similar to when we create a solid surface by breaking a solid particle apart. In this case, however, the new surface is not in contact with the atmosphere, but rather is in contact with the particle surface. The criterion that determines the ease with which a particle will immerse in a liquid is not the absolute strength of the interaction between the liquid and the particle surface, but rather the difference between the strength of this interaction and the strength of the interactions of the liquid molecules with one another.

A convenient way to measure the interactions between a surface and a liquid is through contact angle [30]. The most common technique for measuring this is to place a drop of the liquid on the surface and optically measure the angle at the air/surface/liquid interface (Fig. 2.9). This is normally done using large surfaces (on the order of several mm²; for example, a paint film), rather than particles, although this measurement is sometimes done on pressed pellets. In this case, care must be taken to ensure that the pellet surface is completely smooth because surface roughness can alter the apparent angle at the interface. Powders can also be measured by penetration of the test liquid into a sample of uncompressed powder, as discussed below.

Contact angle θ (Footnote 7) is determined by the relative interaction energies between the liquid and solid (γls) compared to the interactions of the liquid molecules with one another (i.e., the surface tension or surface energy of the liquid, γl), and the surface energy of the solid (γs) (Footnote 8). This relationship is given by Young’s equation:

$$\gamma_s = \gamma_{ls} + \gamma_l\cos\theta \tag{2.8}$$

When the liquid is water, we refer to θ as the water contact angle. If θ > 90°, the surface is hydrophobic; if θ < 90°, the surface is hydrophilic, and if θ = 0° the surface is completely wetted.
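The following minimal sketch applies Young's equation in its usual form, γs = γls + γl cos θ, to compute a contact angle and classify the surface using the thresholds given above. The numerical energies are hypothetical values chosen for illustration (in N/m), and the function names are not from any library.

```python
import math

def contact_angle_deg(gamma_s, gamma_ls, gamma_l):
    """Contact angle (degrees) from Young's equation: cos(theta) = (gamma_s - gamma_ls) / gamma_l."""
    cos_theta = (gamma_s - gamma_ls) / gamma_l
    # Clamp to the physical range; values beyond 1 mean complete wetting
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

def classify(theta_deg):
    """Label a water contact angle using the 0 and 90 degree thresholds."""
    if theta_deg == 0.0:
        return "completely wetted"
    if theta_deg < 90.0:
        return "hydrophilic"
    if theta_deg > 90.0:
        return "hydrophobic"
    return "borderline (exactly 90 degrees)"

if __name__ == "__main__":
    # Hypothetical solid and interfacial energies with water (0.0728 N/m)
    theta = contact_angle_deg(gamma_s=0.0564, gamma_ls=0.020, gamma_l=0.0728)
    print(f"theta = {theta:.1f} degrees, {classify(theta)}")
```

Holding γs and γls fixed (a simplification, since a real wetting agent may also change γls), lowering γl in this sketch raises cos θ and so lowers the contact angle.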

There is a complication to this analysis that arises when measuring contact angle on a powder pellet, even a smooth one, which is that there can be a difference in advancing and receding contact angles (this complication is termed contact angle hysteresis). This difference can be quantified in a dynamic contact angle measurement. In this test, a droplet is applied to the surface of interest. Additional liquid is then injected into this droplet, causing it to grow laterally—that is, the intersection between air, droplet, and solid surface is pushed outward. The angle measured at this moving interface is the advancing contact angle. Similarly, liquid can be pulled out of the droplet and the receding contact angle measured.

The degree of contact angle hysteresis is dependent on a number of factors, including the liquid used, the chemical homogeneity of the particle surfaces, and, importantly, any roughness at the pellet surface, if present. This situation is very complex and the subject of much active research.

An alternative means of measuring the contact angle of a particle is to measure the penetration of a liquid into a particle bed using the Washburn capillary rise technique [31]. In this test, a powder is loaded into a tube with a porous bottom and the tube is then immersed in the wetting liquid. The height of the rising liquid front is then measured as a function of time (Fig. 2.10). In practice, the amount of infiltrating liquid is most often determined by weight gain of the column, which is attached to a tensiometer for mass change measurements. The contact angle can then be calculated by Eqs. (2.9) or (2.10). Equation (2.9) is often rearranged into Eq. (2.11), with contact angle being determined from the slope of the line relating the square of height to immersion time.

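$$m^2 = \frac{C\,\rho^2\,\gamma_l\cos\theta}{\eta}\,t \tag{2.9}$$

where:

C is a material constant that is calculated as shown in Eq. (2.10),

ρ is the liquid density,

γl is the surface energy (tension) of the liquid,

η is the viscosity of the liquid,

m is the mass of the liquid that has penetrated the powder, and

t is time.

$$C = \frac{r_{eff}\,A^2\,\varepsilon^2}{2} \tag{2.10}$$

where:

reff is the effective radius of the pores,

A is the cross-sectional area of the tube containing the powder, and

ε is the porosity of the powder within the tube.

Alternatively:

$$h^2 = \frac{r_{eff}\,\gamma_l\cos\theta}{2\eta}\,t \tag{2.11}$$

where: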

h is the height of the penetrating liquid,

reff is the effective radius of the pores,

γl is the surface energy (tension) of the liquid,

η is the viscosity of the liquid, and

t is time.

The constant “C” in Eq. (2.9) (Footnote 9) can be measured, rather than calculated, by performing this test using a low surface energy liquid such as hexane, which can be assumed to have a contact angle of zero. Equation (2.9) is then rearranged to calculate C, since all other variables will be known, and this value of C is then used for other liquids.
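The two-step procedure described above (calibrate C with a fully wetting liquid, then measure the liquid of interest) can be sketched as follows. The sketch assumes the standard mass form of the Washburn relation, m² = (C ρ² γl cos θ / η) t, and fits the slope of m² against t with a line through the origin. All function names are hypothetical, and SI units are assumed throughout.

```python
import math

def slope_through_origin(times_s, values):
    """Least-squares slope of a line through the origin."""
    numerator = sum(t * v for t, v in zip(times_s, values))
    denominator = sum(t * t for t in times_s)
    return numerator / denominator

def material_constant(times_s, masses_kg, density, viscosity, surface_tension):
    """C from a reference liquid assumed to wet fully (cos theta = 1)."""
    slope = slope_through_origin(times_s, [m * m for m in masses_kg])
    return slope * viscosity / (density ** 2 * surface_tension)

def contact_angle_deg(times_s, masses_kg, density, viscosity, surface_tension, c_const):
    """Contact angle of a test liquid on the same powder packing, using a known C."""
    slope = slope_through_origin(times_s, [m * m for m in masses_kg])
    cos_theta = slope * viscosity / (c_const * density ** 2 * surface_tension)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against noise pushing |cos| above 1
    return math.degrees(math.acos(cos_theta))
```

In use, the mass uptake curve recorded with hexane would be passed to `material_constant`, and the resulting C reused in `contact_angle_deg` for each test liquid. This only makes sense if the powder is packed in the same way for every run, since C depends on the porosity and effective pore radius of the bed.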

The importance of contact angle for powders is that it is related to the wetting dynamics of the powder. As discussed in Chap. 11, an important aspect of the dispersion of particles into the liquid carrier of a paint is the ease with which the surface wets. During wetting the solid/air interfaces of the particles are replaced by solid/liquid interfaces. Wetting can be enhanced by adding a wetting agent, or surfactant, to the liquid. This reduces the surface energy, or tension, of the liquid. Referring back to Eq. (2.8), we see that a decrease in surface tension (γl) is offset by an increase in the cosine of the contact angle, which means a decrease in the contact angle.

Bulk Density, Bulk Flow, and Powder Compressibility

The bulk behavior of particles is determined by the degree of attraction that the particles have towards one another [32]. In the extreme, these attractions can cause particles to irreversibly stick to one another, but even at lower levels, the degree of attraction has a significant impact on bulk properties because this attraction causes resistance to particle motion. When particles that are near one another, but not touching, move away from one another, their separation distance increases, which requires energy. For particles that are touching one another, or touching the walls of a bin or container, movement is impeded by friction, which, in turn, is controlled by the strength of attraction between the particles themselves or between the particles and the bin walls.

The degree to which particles can flow past one another affects three properties of the powder: the density at which it settles, the degree to which the entire powder can flow, and the extent to which a powder compresses when placed under pressure. We will consider the measurement of each of these bulk properties, but will first discuss aspects of particle packing.

Particle Packing

Unlike true density, the loose bulk density of powder samples is highly dependent on the measurement technique, the sample preparation technique, and the stress state of the sample. Some sample preparation procedures are designed to minimize bulk density (e.g., gentle sifting of the powder into a measurement container) and therefore give a lower limit for bulk density. Other procedures densify the pigment in a known and (hopefully) reproducible manner. There are two common methods to do this. The first is tapping a filled container on the countertop until the occupied volume no longer changes. This is known as the “tapped” or “tamped” bulk density of the material. The second is to press the powder into a pellet, which gives the ultimate packed or compressed bulk density of the material.

The prevalence of voids in collections of small particles, such as those introduced into the chamber of a pellet press, leads to the concept of powder compressibility. If we place the piston in the press and apply pressure with a hydraulic ram, the powder bed compresses. In doing so, we add work energy to the system (in the form of the force applied by the ram multiplied by the distance the piston moves). This energy is used to overcome inter-particle forces (i.e., friction and cohesion) and wall friction, as well as to rearrange the particles (which mainly takes place at the beginning of the compaction). Because the particles become more concentrated, the number of contacts that each particle makes with its neighbors (known as the coordination number) increases. At any given time in this process, the piston compresses the powder bed to the point at which the force applied by the ram equals the combined forces of (1) inter-particle friction, (2) inter-particle cohesion, and (3) particle–wall friction or adhesion. If the force applied to the piston is increased, the powder will densify further until, again, the combined frictional forces equal the force applied by the piston.

The compressibility response of powder beds can be compared to the placement of a weight onto a compression spring. The spring compresses until its restoring force equals the gravitational force of the applied weight. There is a crucial difference between the spring and the bed of particles, however: when the weight is removed from the spring, it returns to its original state. When the force from the hydraulic ram is removed from the pellet press, the particles do not return to their original packing state, since doing so would require restoring motion, which in turn would require the particles to slide past and over one another. Additional energy would also be required to overcome the higher gravitational potential of the original particle arrangement.

For this reason, the bulk density of small particles is very sensitive to the history of the sample. If a sample is compressed, for example by stacking bags of particles on top of each other, or by being driven over a bumpy road, then it will retain its higher bulk density even after the compression force is removed. On the other hand, if a sample is handled carefully, without jarring or compression, then the bulk density will remain low. Because of this, it is not possible to state the bulk density of a sample with a single value. Instead, bulk density values should be reported in a way that indicates the sample history (e.g., tapped or loose).

Measurement

Bulk density is simply measured by weighing a known volume of the powder. As indicated above, it is essential that powders be handled in a controlled and repeatable way.

There is no universally agreed-on definition of powder flowability, nor is there a means of measuring it in a way that is applicable to every situation. However, for bulk storage purposes, critical “rathole” diameters are often used as a means to characterize the expected flow from the silo outlet [33, 34]. The term “ratholing” refers to the tendency of powders to exhibit “funnel flow” out of a silo (Fig. 2.11). Here, a core channel immediately above the outlet forms, with the powder within the channel flowing while the rest of the powder remains in place. The width of the channel is referred to as the critical rathole index and is measured in units of distance (typically meters or feet for silos used industrially).

The critical rathole diameter for a powder depends on the compressibility of the powder as well as the size, shape, and materials of construction of the silo. To determine the critical rathole diameter of a powder, the powder is loaded into a cylindrical hole in a sintered metal base that has the ability to vent air from the sample. A piston is then placed on top of the powder and pressure is applied to compress the sample under a controlled and reproducible load. The base is then removed from around the powder, leaving a free-standing column of it. The piston is again applied on top of the column and the stress required to cause the column to fail is determined. This stress is a measure of cohesive strength. The critical rathole index is then calculated from the ratio of the cohesive strength of the powder to its bulk density, using a constant that is specific to the metal base and piston.

The compressibility of a powder is also measured in a piston press. In this case, the volume of powder at a certain load is determined by using the distance over which the piston is moved. A curve of density versus consolidation stress is then plotted, and from this, a relative compressibility is calculated.
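A minimal sketch of the data reduction described above is given here. It converts piston displacement into bed volume and bulk density at each load step, then reports the percentage rise in density over the tested range. That last quantity is only one possible, hypothetical definition of relative compressibility, since the text does not specify the formula used. Function names and units (g, cm) are likewise assumptions.

```python
def density_curve(mass_g, piston_area_cm2, initial_height_cm, displacements_cm):
    """Bulk density (g/cm^3) at each load step, from the piston displacement."""
    densities = []
    for displacement in displacements_cm:
        height = initial_height_cm - displacement
        if height <= 0:
            raise ValueError("displacement exceeds the initial bed height")
        densities.append(mass_g / (piston_area_cm2 * height))
    return densities

def relative_compressibility(densities):
    """Percent increase in bulk density between the first and last load step."""
    return 100.0 * (densities[-1] - densities[0]) / densities[0]

if __name__ == "__main__":
    # Hypothetical run: 10 g of powder in a 5 cm^2 die, initial bed height 4 cm
    curve = density_curve(10.0, 5.0, 4.0, [0.0, 0.5, 1.0, 1.5, 2.0])
    print([round(d, 3) for d in curve])
    print(f"relative compressibility: {relative_compressibility(curve):.0f}%")
```

Pairing each density with the consolidation stress applied at that step gives the density versus stress curve mentioned in the text.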

Oil Absorption

In general, we are deferring characterization techniques that are important to only a limited set of end-use applications to the chapters that describe formulation (Chap. 16 for paint, Chap. 17 for plastics, and Chap. 18 for paper laminates). However, we will include here a discussion on oil absorption, which is a technique that is only important to coatings, because oil absorption values are determined by five of the physical properties that are described above: surface area, surface energy, intra-particle void volume, inter-particle void volume, and powder compressibility. For this reason, a description of oil absorption follows naturally from our discussions above.

The oil absorption test is used to determine the packing efficiency of particles in linseed oil [35, 36]. In this test, linseed oil is added to a single particle type or to a mix of particles. The particle mixes are typically the particles formulated into a paint (pigments and extenders), at the same relative proportions as in the paint. As the oil is added, it is incorporated into the particle mass by vigorously working the mass with a spatula or a rubber policeman. This process is often referred to as a “rub-out”. The mix of oil and particles begins as a moist powder, but at a certain point, it converts into a paste—that is, a thick liquid. This is the defined endpoint of the test. At this point, all of the particles have been wetted with the oil (i.e., have a monolayer coating of oil on their surfaces) and there is just enough remaining oil to fill the voids between the particles.

Oil absorption is generally reported as the number of grams of oil needed to bring 100 g of particles to the test endpoint. In some contexts, this value is referred to as the “oil demand” of the particles, and the endpoint in the test is referred to as “satisfying the demand”. The oil absorption value is important in paint formulation because it can be used to determine the minimum amount of organic resin required to form a solid film from a set volume of particles, a quantity known as the resin demand of the pigment. The conversion between oil absorption and resin demand is given in Eq. (2.12). Resin demand is important as it determines the critical pigment volume concentration (CPVC) of the paint. This is discussed in greater detail in Chaps. 4 and 16.
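As an illustration of how an oil absorption value translates into a volume relationship, the sketch below uses the commonly cited conversion in which the CPVC is the particle volume divided by the combined volume of particles and oil at the endpoint. This may differ in form from Eq. (2.12), and it assumes that resin occupies the same volume as the linseed oil it replaces. The linseed oil density of 0.935 g/cm³ is an assumed typical value, and the function name is hypothetical.

```python
LINSEED_OIL_DENSITY = 0.935  # g/cm^3, assumed typical value

def cpvc_from_oil_absorption(oil_absorption, particle_density,
                             oil_density=LINSEED_OIL_DENSITY):
    """Estimate CPVC (volume fraction) from oil absorption in g oil per 100 g particles."""
    particle_volume = 100.0 / particle_density   # cm^3 occupied by 100 g of particles
    oil_volume = oil_absorption / oil_density    # cm^3 of oil at the test endpoint
    return particle_volume / (particle_volume + oil_volume)

if __name__ == "__main__":
    # Hypothetical pigment: oil absorption 20 g/100 g, density 4.0 g/cm^3
    print(f"CPVC about {cpvc_from_oil_absorption(20.0, 4.0):.3f}")
```

A higher oil absorption or a denser particle both lower the estimated CPVC, because more binder volume is needed per unit volume of particles.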

As mentioned above, oil absorption values are affected by five particle parameters. Three of these are determined by the individual particles themselves—their surface area, surface energy, and intra-particle porosity. The effects of these are quite straightforward. The higher the surface area, the greater the amount of oil required to wet the particles, and so the higher the oil absorption value. Similarly, the greater the intra-particle void volume, the greater the amount of oil needed to fill the voids. Surface energy affects the wetting of the particles by the oil, and so also has a direct effect on oil absorption values.

Inter-particle voids are as important as intra-particle voids since all pores must be filled with oil. The filling of intra-particle voids, such as those found in diatomaceous earth (Fig. 2.7), is quite straightforward, and it is clear how the void volumes affect the amount of oil required to satisfy the particles. However, the filling of inter-particle voids is more complicated. When the oil/particle mix is worked, much of the energy applied to the system is used to compress the particle packing arrangement, decreasing the void volume of the particle mix. This is similar to the effect on bulk density of adding energy into a particle bed, both in terms of compressing the particles and in terms of being very dependent on the exact history of the particle mix. The compressibility of the powder is, therefore, the fifth particle property that affects oil absorption. The more energy introduced during the oil absorption test, the greater this compression and the lower the void volume of the resulting particle mix. This, in turn, decreases the measured oil absorption value of the mix. This dependence is a significant source of error for this test since different operators are likely to apply a different degree of force when incorporating the oil into the powder.

In addition to the uncontrolled application of energy, this test also suffers imprecision due to the need to interpret the true endpoint of the test. There is generally not a sharp change to a paste consistency as oil is incorporated into the particle bed, and different operators may have different definitions as to when the mass becomes a paste.

Finally, there is a sixth parameter that affects oil absorption but is not determined by the particle. This parameter is the exact identity of the oil used in the test. There are many types of linseed oil that vary in chemical composition, in particular, in the density of carboxylic acid groups in the polymer chain. The chemical composition is expressed as the acid number of the oil, and this value partially determines the ability of the oil to efficiently wet the particle. A comparison of oil absorption values for a variety of TiO2 pigments using two different linseed oils, which differ in acid number, is shown in Fig. 2.12.

Thermal Techniques

Different materials react to temperature changes in different, and often unique, ways. We are familiar with the change in spatial dimensions on heating (normally an expansion). In addition, heating can lead to a weight loss due to the volatilization of some (or all) components of a sample, and it can lead to changes in the heat capacity of the material (i.e., the change in temperature that occurs when a unit amount of heat is applied). Both weight loss and heat capacity change as a function of temperature are used to characterize particles.

TGA

Thermal gravimetric analysis, or TGA, is a test in which weight loss is determined as a function of temperature. In this method, a small amount of powder is placed on a balance that is itself in an oven. A gas is flowed over the sample as it is controllably heated, and weight as a function of temperature is recorded.

Weight loss occurs for two reasons. The first is the burning of organic components when the analysis is done in air. Different organic materials or classes of organic materials have different ignition temperatures, and the onset of combustion can be used to identify these materials. The second cause of weight loss is the decomposition of materials that contain volatile constituents. The volatile product of such decompositions is typically water molecules from hydrated materials, but it can also be small molecules such as ammonia if it is present. A gas chromatograph or mass spectrometer is often used to analyze the composition of the evolved gases.

Many of the particles used in paints and plastics are hydrated minerals. These not only contain internal waters of hydration but also surface water adsorbed from the atmosphere. The latter normally evolves at a temperature near or slightly below 100 °C. Waters of hydration, on the other hand, can be released at significantly higher temperatures (Footnote 10). The temperature at which this occurs is dependent on the chemical composition of the sample. When the material is crystalline and well defined, all of the water is in the same environment and so volatilizes at the same temperature, giving a sharp weight loss in the temperature scan. On the other hand, in amorphous or poorly defined materials, each water molecule is in a slightly different local environment and so the weight loss is spread out over a wide temperature range.

A TGA scan of a mixture of amorphous hydrous alumina (Al2O3·nH2O) and crystalline hydrous alumina (Al2O3·3H2O) is shown in Fig. 2.13. Here, the weight loss due to the amorphous alumina is seen as a broad decrease over the entirety of the scan, while the weight loss due to the crystalline alumina is indicated by the relatively sharp feature between 250 and 300 °C. The amount of weight lost (13.4%) can be used to calculate the weight proportion of the crystalline material in this mixture (38.8%, since Al2O3·3H2O is 34.6% water).

Fig. 2.13 TGA weight loss scan of a mixture of amorphous hydrous alumina and crystalline hydrous alumina. Losses below approximately 100 °C are due to waters of adsorption. Broad losses between 100 and 700 °C are due to water loss from the amorphous alumina. Sharp loss between 250 and 300 °C is due to the decomposition of the crystalline alumina.
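The arithmetic behind the crystalline fraction can be checked with a short calculation. The sketch below computes the water content of Al2O3·3H2O from standard atomic masses and divides the sharp weight loss by that fraction. The function names are hypothetical. The result is approximately 39%, in line with the value quoted in the text, with small differences attributable to rounding.

```python
ATOMIC_MASS = {"Al": 26.982, "O": 15.999, "H": 1.008}  # g/mol

def water_fraction_of_trihydrate():
    """Weight fraction of water in Al2O3.3H2O."""
    alumina = 2 * ATOMIC_MASS["Al"] + 3 * ATOMIC_MASS["O"]
    water = 3 * (2 * ATOMIC_MASS["H"] + ATOMIC_MASS["O"])
    return water / (alumina + water)

def crystalline_weight_percent(sharp_loss_percent):
    """Weight percent of crystalline trihydrate implied by its sharp water loss."""
    return sharp_loss_percent / water_fraction_of_trihydrate()

if __name__ == "__main__":
    print(f"water in Al2O3.3H2O: {100 * water_fraction_of_trihydrate():.1f}%")
    print(f"crystalline content: {crystalline_weight_percent(13.4):.1f}%")
```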

DSC

Differential scanning calorimetry is used to characterize solids that undergo a temperature-dependent transition at the molecular level. In this test, the heat capacity of a material is measured as a function of temperature. The foundation of this test is that heat added to a sample can affect it in two ways. The first, and most obvious, is that it can increase the temperature of the material. The relationship between heat input and temperature rise for a unit mass of material (normally one gram) is the specific heat of the material. For most particles found in paints or plastics, at the temperatures of interest to us, specific heat generally varies only slightly with temperature (the exception is organic resin particles, for which specific heat changes sharply at certain temperatures, as discussed below). This results in a linear relationship between temperature and heat added to the sample, as shown in Fig. 2.14a.

The second effect of heat added to a sample is that it can cause a physical or chemical change within the material. In this case, heat enters the sample but does not increase its temperature, and so this is often referred to as latent heat. The most obvious of these transitions are melting and boiling (Fig. 2.14b). Here, there are abrupt changes in the motion of molecules and these changes require energy.

Another example of such a transition is a change in the crystalline structure, or crystalline phase, of a material. For example, one solid phase of alumina may change into another when heat is added to the sample at a certain temperature, or an amorphous material may crystallize at a certain temperature (the crystallization temperature, or Tc). These transitions are not accompanied by any change in mass or temperature. Although crystalline changes are important in the plastics industry when a polymer is heated or cooled, the particles found within the plastic, or within a paint, do not typically undergo a phase change within the temperature range of most applications.

The final thermally induced change to a particle is its glass transition. This transition occurs in the polymer particles found in a latex paint, as well as in many plastics. At a specific temperature, termed the glass transition temperature and indicated with the abbreviation “Tg”, there is a step-change increase in the mobility of sections of the polymer chains. This is accompanied by a change in the heat capacity of the material and is seen as a discontinuity in the curve relating heat added to a sample and its temperature (Fig. 2.14c). The glass transition and its importance to the resin found in waterborne coatings are discussed in more detail in Chap. 10.

The DSC experiment is simple in principle [37]. A known amount of heat is added to a sample, and the corresponding temperature change is determined as a function of this added heat. A control sample of known thermal characteristics is used as a reference for this measurement. There are two fundamentally different types of DSC instruments. Heat flow, or flux, instruments use a single furnace for the unknown and reference samples. The temperature difference between the samples is monitored and heat flow is calculated based on this difference. By contrast, a power-compensated DSC uses two furnaces, one for the unknown and the other for the reference. The two samples are kept at the same temperature during heating. The power required to heat the sample furnace to the same temperature as the reference furnace is monitored and the energy input per incremental increase in temperature is calculated from this.
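The two quantities a DSC scan yields, specific heat and latent heat, follow from simple relations that can be sketched as below. Specific heat is taken as heat flow divided by the product of sample mass and heating rate, and latent heat as the time integral of the baseline-subtracted heat flow across a transition peak, per unit mass. Function names, units, and the example numbers are assumptions for illustration and do not represent any instrument's software.

```python
def specific_heat(heat_flow_mw, mass_mg, heating_rate_k_per_min):
    """Specific heat in J/(g K) from heat flow, sample mass, and heating rate."""
    heat_flow_w = heat_flow_mw * 1e-3
    mass_g = mass_mg * 1e-3
    rate_k_per_s = heating_rate_k_per_min / 60.0
    return heat_flow_w / (mass_g * rate_k_per_s)

def latent_heat(times_s, excess_heat_flow_mw, mass_mg):
    """Latent heat in J/g by trapezoidal integration of a baseline-subtracted peak."""
    energy_mj = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        energy_mj += 0.5 * (excess_heat_flow_mw[i] + excess_heat_flow_mw[i - 1]) * dt
    return (energy_mj * 1e-3) / (mass_mg * 1e-3)

if __name__ == "__main__":
    # Hypothetical scan: 2.0 mW into a 10 mg sample heated at 10 K/min
    print(f"c_p = {specific_heat(2.0, 10.0, 10.0):.2f} J/(g K)")
    # Hypothetical triangular transition peak lasting 20 s
    print(f"latent heat = {latent_heat([0, 10, 20], [0, 10, 0], 10.0):.1f} J/g")
```

A glass transition would appear in such data as a step in the specific heat, whereas melting or crystallization would appear as a peak whose area gives the latent heat.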

Elemental Analysis

A quantitative determination of the amounts of the elements found in a material is a powerful tool for determining the identity of any material, including powders or powder mixtures. This can be used for gross characterization of the sample as well as the detection of trace impurities. Such impurities often reveal the process used to make the particles (e.g., the sulfate and chloride routes for producing TiO2 each have difficulties in removing certain impurities) or the geographical origin of a mineral sample (impurities are generally specific to a particular region).

There are a number of ways to measure the elemental composition of a powder, but we will focus on three—X-ray fluorescence spectroscopy (XRF), atomic absorption spectroscopy (AAS), and inductively coupled plasma atomic emission spectroscopy (ICP-AES).

In the XRF measurement, the material of interest is subjected to X-ray radiation. The atoms within the sample absorb X-rays, and each absorption ejects an electron from the inner core of the atom. This leaves the atom in a high-energy excited state. An electron from the outer core of the atom will typically transfer to the inner core, replacing the ejected electron, and in the process will lose energy as a photon with a characteristic wavelength (also in the X-ray region of the light spectrum). The overall process of light absorption and re-emission at a lower energy (longer wavelength) is referred to as fluorescence.

The elemental composition of the material can be calculated by comparing the intensities of the fluorescent X-rays to those of known standards. This technique can be used to detect atoms between sodium and uranium on the periodic table, as the fluorescent X-rays for lighter elements are typically reabsorbed before they can leave the material.
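The comparison against known standards can be sketched as a straight-line calibration: fit intensity against concentration for the standards, then invert the line for the unknown. The code below is a minimal sketch under that assumption. The count values are hypothetical, and the linear model ignores the matrix (absorption and enhancement) corrections that a real XRF quantification generally requires.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def concentration_from_intensity(intensity, slope, intercept):
    """Invert the calibration line to estimate concentration."""
    return (intensity - intercept) / slope


# Hypothetical standards: element content (wt%) and fluorescent counts.
standard_wt_pct = [0.0, 1.0, 2.0, 4.0]
standard_counts = [50.0, 1050.0, 2050.0, 4050.0]

slope, intercept = fit_line(standard_wt_pct, standard_counts)
unknown_wt_pct = concentration_from_intensity(2550.0, slope, intercept)
print(f"Estimated content: {unknown_wt_pct:.2f} wt%")
```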

AAS is based on the light absorption characteristics of gaseous atoms. In this analysis, a solution of the material of interest is heated (often by an argon plasma generated in an inductively coupled heating process) to a temperature that causes it to decompose. This generates individual atoms in the gas phase. Light is then passed through the gas, and its intensity spectrum after passing through the sample is measured. Individual atoms of each element exhibit a characteristic absorption spectrum, and so the amount of a particular element can be determined from the absorption spikes in the spectrum. The wavelength of light can be modified to match the absorption features of a specific atom.
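One common way to turn the measured intensities into an amount is the Beer-Lambert relation, in which absorbance A = log10(I0/I) is proportional to concentration over a limited range. That relation is not stated in the text above, so the sketch below should be read as an assumption about how the spectrum might be quantified. It uses a single hypothetical standard and made-up intensity values.

```python
import math


def absorbance(incident_intensity, transmitted_intensity):
    """Absorbance A = log10(I0 / I) at one wavelength."""
    return math.log10(incident_intensity / transmitted_intensity)


def concentration_from_standard(a_unknown, a_standard, c_standard):
    """Scale a standard's concentration by the absorbance ratio.

    Assumes absorbance is proportional to concentration (Beer-Lambert
    behavior) and that both readings share the same path length.
    """
    return c_standard * a_unknown / a_standard


# Hypothetical readings at the absorption line of one element.
i0 = 100.0                       # intensity with no sample
i_standard = 50.0                # 2.0 mg/L standard
i_unknown = 100.0 / math.sqrt(2)

a_std = absorbance(i0, i_standard)
a_unk = absorbance(i0, i_unknown)
c_unknown = concentration_from_standard(a_unk, a_std, 2.0)
print(f"Estimated concentration: {c_unknown:.2f} mg/L")
```

In practice several standards would normally be measured, since the proportionality breaks down at high absorbance.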

The final technique that we will consider is ICP-AES. In this technique, the sample of interest is again dissolved and subjected to temperatures high enough to vaporize the material. In this case, an inductively coupled plasma is used to heat the sample and ionize the resulting vapor. These high-energy ions typically radiate, or emit, certain wavelengths of light. A detector determines the intensity of the emitted light and compares this to intensities for standards of the elements present. This technique is often used for trace metals analysis in addition to routine elemental analysis.

Crystalline Phase Composition

When discussing the use of X-ray line broadening to determine particle size, we described the interaction of X-rays with crystalline materials. These interactions cause X-rays to scatter with certain intensities in certain directions (angles). The intensities are governed by the type of elements in the crystal and the directions are determined by the arrangement of these atoms within the crystal lattice (i.e., the crystal phase).

The combination of scattering angles and intensities is a fingerprint for a specific crystal type. These parameters are measured by scanning a powder sample with X-rays, simultaneously changing the angle of the incoming beam and the angle of the detector (Fig. 2.15). The results are typically reported as detected intensity as a function of angle (2θ). Different components for even very complex mixtures of crystals can normally be resolved and an approximate composition determined (Fig. 2.16).
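The link between a peak position and the crystal lattice is usually expressed through Bragg's law, nλ = 2d sin θ, a standard relation that is not written out in this section. The sketch below converts a reported 2θ angle into a lattice spacing d. The X-ray wavelength (Cu Kα, 1.5406 Å) and the peak positions are assumptions chosen for illustration, not values from a measured pattern.

```python
import math

CU_K_ALPHA_ANGSTROM = 1.5406  # assumed X-ray wavelength


def d_spacing(two_theta_deg, wavelength=CU_K_ALPHA_ANGSTROM, order=1):
    """Lattice spacing d (same units as wavelength) from Bragg's law."""
    theta = math.radians(two_theta_deg / 2.0)
    return order * wavelength / (2.0 * math.sin(theta))


def two_theta(d, wavelength=CU_K_ALPHA_ANGSTROM, order=1):
    """Peak position 2-theta (degrees) expected for a given spacing d."""
    return 2.0 * math.degrees(math.asin(order * wavelength / (2.0 * d)))


# Hypothetical peak list (degrees 2-theta).
for peak in (25.3, 27.4, 36.1):
    print(f"2-theta = {peak:5.1f} deg  ->  d = {d_spacing(peak):.3f} Angstrom")
```

A set of d values obtained this way, together with the relative peak intensities, is what would be compared against reference patterns to identify each phase.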

Microscopy

We mentioned microscopy earlier as a means for characterizing the size distribution of certain particles or particle mixtures. While it is quite capable of doing this, the overall usefulness of microscopy to particle characterization is significantly broader.

Two types of microscopes are available to the researcher: optical microscopes and electron microscopes. Optical microscopes are useful for imaging particles at the larger end of the size range of interest to us, as well as agglomerates of particles, and this level of detection is adequate for many needs of the paint or plastics formulator. Resolution in microscopy is theoretically limited to about one-half of the wavelength of the illuminating light (Footnote 11). In practice, however, it is impossible to resolve features smaller than a few microns using light microscopy because of limitations on the depth of focus. As a result, particles at the smaller end of the range of interest to us cannot be imaged using this technique.

This issue can be avoided by using probes of much smaller wavelengths. However, there are practical issues with this when the probe is light (X-rays). Instead, a high-energy electron beam is used for this purpose. Because of wave/particle duality, these electrons have associated with them a characteristic wavelength. The resolution limit of these electrons is about 0.1 nm—an improvement of several orders of magnitude compared to optical microscopes.
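The wavelength associated with a beam electron can be estimated from the de Broglie relation, λ = h/p, with the momentum p set by the accelerating voltage. The sketch below uses the relativistically corrected form of that relation; the voltages are illustrative choices, not settings prescribed by the text.

```python
import math

PLANCK = 6.62607015e-34             # J s
ELECTRON_MASS = 9.1093837015e-31    # kg
ELEMENTARY_CHARGE = 1.602176634e-19  # C
LIGHT_SPEED = 299792458.0           # m/s


def electron_wavelength_m(accelerating_voltage_v):
    """De Broglie wavelength (m) of an electron accelerated through
    the given potential difference, with relativistic correction."""
    energy = ELEMENTARY_CHARGE * accelerating_voltage_v
    rest_energy = ELECTRON_MASS * LIGHT_SPEED ** 2
    momentum = math.sqrt(
        2.0 * ELECTRON_MASS * energy * (1.0 + energy / (2.0 * rest_energy))
    )
    return PLANCK / momentum


for volts in (3e3, 10e3, 100e3):
    picometers = electron_wavelength_m(volts) * 1e12
    print(f"{volts / 1e3:5.0f} kV  ->  {picometers:.2f} pm")
```

The wavelengths that result are a few picometers to a few tens of picometers, well below the resolution of about 0.1 nm quoted above, so in these instruments the wavelength itself is not the limiting factor.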

There are two fundamentally different ways to use electrons to image a sample, leading to two types of electron microscopes—scanning electron microscopes (SEMs) and transmission electron microscopes (TEMs). In both cases, an electron beam is focused on the sample. In the case of an SEM, the degree of interaction between the beam and sample is quantified in some way (i.e., a signal is created and processed), while in the case of a TEM the electrons that interact with the sample are projected onto a screen, directly creating an image of the particles without the need to create or process a signal.

There are three main detection approaches for the SEM: detecting backscattered electrons, detecting secondary electrons (these are electrons that are ejected from atoms in the sample by the incoming electron beam), and detecting X-rays generated from the sample when the atoms that have ejected secondary electrons relax to a lower energy state (similar to X-ray fluorescence, discussed above, except using electrons rather than X-rays to eject a core electron from the atom). These three processes are shown schematically in Fig. 2.17.

For all three detection methods, the electron beam in an SEM is rastered across the sample of interest, and the image created from this scan is essentially a map of interaction intensity as a function of beam location. In effect, the intensity is measured for a pixel of area, and the pixels are combined to give an image. Note that this form of imaging is fundamentally different from optical imaging (or TEM imaging, discussed below), where a wave source (visible light or electrons) is applied to the entire sample at once, and the resulting waves are focused and imaged.

The three detection methods provide somewhat different views of the sample. Because secondary electrons are easily absorbed, only those at the topmost region of the surface (1–10 nm) can escape the sample and be detected. In addition, these electrons are less likely to escape from valley or pore regions than they are from regions of prominence. These two factors enhance the topographical (surface) features of the sample, providing a three-dimensional appearance to these images (Fig. 2.18).

The sensitivity of secondary electrons to surface features can also be used for depth studies. In this case, the energy of the beam electrons is changed—higher energies allow for deeper penetration. An example of this is shown in Fig. 2.19. These show the same region of a paint film containing only TiO2 and resin. The electron beam energy for one image was 3 keV and for the other was 10 keV. Film surface features and the topmost TiO2 particles are seen at 3 keV while the top micron or so of the film is imaged at the higher beam energy.

The intensity of electron backscattering is controlled by the atomic number of the atom. Heavier atoms have more electrons, and so there is a greater chance of an electron in the beam being scattered backward by the electrons in heavy atoms than is the case for lighter atoms. The contrast in these images, therefore, is determined by the elemental identities of the atoms in the sample, with the heavier atoms appearing brighter.

A comparison of secondary electron images and backscattered electron images for a paint sample is shown in Fig. 2.20. The surface-specific nature of the secondary electron technique, and the elemental contrast of the backscatter technique, are clearly seen in these images.

Finally, analysis of the X-ray energies can give information as to the elemental identity of the atoms present. This can either provide an element map of the entire image area or a spectrum for an individual region within the image. An example of X-ray fluorescence is given in Fig. 2.21. Here, four images are given for a sample mixture of hollow silica sphere microparticles (diameter 0.3 microns) and gold nanoparticles (40 nm). The secondary electron and backscatter electron images are shown in Fig. 2.21a and b. In the secondary electron image, there is no contrast between the two particle types, while in the backscatter electron image the gold nanoparticles are clearly highlighted in comparison to the silica microparticles. Figure 2.21c and d shows elemental maps for the two metal atoms found in the different particle types.

SEM imaging of materials that are electrically insulating, which describes nearly all particles of interest to us, is complicated by the accumulation of electrical charge on the material surface. This electrical charge arises from electrons in the beam becoming trapped within the sample. Because the electrical fields created by this excess charge will deflect the electrons in the beam, the sample image becomes distorted. This problem can be solved by coating (sputtering) the sample with an electrically conductive material (e.g., gold, osmium, or carbon). However, this unavoidably alters the sample surface at the atomic level, and so surface features of only a few nanometers cannot be properly imaged when these coatings are used.

Unlike the SEM, the TEM, as the name implies, analyzes electrons that are transmitted through the sample. For this reason, the sample must be thin enough (typically less than 100 nm) to allow some of the electrons in the beam to pass through. After passing through the sample, the beam is expanded using a magnetic lens (Footnote 12) and directed at a phosphor screen.

Electrons in the electron beam interact with the sample in two ways—through elastic scattering, which preserves the energy of the beam, and through inelastic scattering, during which the beam loses energy. Both types of interactions can be used to image the sample.

The TEM is capable of higher magnification than the SEM and can, in fact, resolve individual atoms or columns of atoms. This can be seen in Fig. 2.22, which is a TEM image of a pigmentary TiO2 crystal coated with 3 wt% amorphous silica. Here arrayed columns of titanium atoms are clearly visible near the TiO2/silica interface, whereas the amorphous coating shows no indication of regularity. The contrast seen in TEM images is controlled by the thickness of the sample, and the atomic identity of the sample—as with SEM, the electron beam in the TEM process interacts more intensely with heavier atoms than lighter atoms, making the amorphous silica in Fig. 2.22 lighter than the TiO2 portion of the particle. In addition, resolution can be increased by using phase contrast.

Elemental analysis and mapping are also possible with the TEM. In this case, a technique known as electron energy loss spectroscopy (EELS) is used. Here, the electron beam causes an electron in a sample atom to eject, decreasing the energies of the beam electrons by a specific amount. The energies of the beam electrons are measured after passing through the sample, and from that, the elemental identity of the sample can be determined. This measurement is so precise that the oxidation state of the atom and even the crystalline phase of the material (which sets the local environment around the sample atoms) can be differentiated. An example of an EELS image is shown in Fig. 2.23 for a TiO2 particle with a patchy alumina coverage.

Surface Charge

The surfaces of particles dispersed in water typically carry an electrical charge. The sign of this charge, and its magnitude, are governed by dissolved ions in the water (especially H+ and OH−, the concentrations of which are determined by pH), particularly when the particle is a metal oxide.

Any un-neutralized or excess charge in a particle will be found at or near the particle surface. A charged particle surface in water will create an electrical double layer that surrounds that particle [38]. The inner electrical layer consists of ions (either from water dissociation or any dissolved salts) that are tightly adsorbed onto the particle surface. These ions are not swept away if the solution around the particle moves relative to the particle. The outer layer is composed of counterions that remain in solution and screen the charge on the inner layer.

The exact distribution of ions around the charged surface, and the electrical potential as a function of distance from the particle surface, has been the subject of much experimental and theoretical attention since Helmholtz first reported his analysis of electrodes immersed in ion-containing solutions in 1853. Because the outer layer can extend into a relatively large region around the particles, it is often referred to as the diffuse layer. The extent of this layer and the electrical potential as a function of distance are determined by the magnitude of the surface charge layer and the concentration of ionic species in the liquid phase (the diffuse layer is more compact if the ionic concentration is high).
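The dependence of the diffuse layer thickness on ionic concentration is often summarized by the Debye screening length, a quantity from classical double-layer theory that is not named in the text above. The sketch below evaluates it for a simple 1:1 salt in water. The relative permittivity (78.4), the temperature (25 °C), and the concentrations are assumptions chosen for illustration.

```python
import math

EPSILON_0 = 8.8541878128e-12         # F/m
BOLTZMANN = 1.380649e-23             # J/K
ELEMENTARY_CHARGE = 1.602176634e-19  # C
AVOGADRO = 6.02214076e23             # 1/mol


def debye_length_m(ionic_strength_molar, temperature_k=298.15,
                   relative_permittivity=78.4):
    """Debye screening length (m) for a given ionic strength (mol/L).

    For a 1:1 electrolyte the ionic strength equals the salt
    concentration.
    """
    ionic_strength_si = ionic_strength_molar * 1000.0  # mol/L -> mol/m^3
    numerator = relative_permittivity * EPSILON_0 * BOLTZMANN * temperature_k
    denominator = 2.0 * AVOGADRO * ELEMENTARY_CHARGE ** 2 * ionic_strength_si
    return math.sqrt(numerator / denominator)


for molar in (0.0001, 0.001, 0.01, 0.1):
    nanometers = debye_length_m(molar) * 1e9
    print(f"{molar:7.4f} mol/L  ->  {nanometers:5.2f} nm")
```

Under these assumptions the length scales as the inverse square root of the ionic strength, so a hundredfold increase in salt concentration compresses the diffuse layer by a factor of ten.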