Abstract

Standard tools to update approximations to a matrix A (for example, Quasi-Newton Hessian approximations in optimization) incorporate computationally expensive one-sided samples \(A\,V\). This article develops randomized algorithms to efficiently approximate A by iteratively incorporating cheaper two-sided samples \(U^\top A\,V\). Theoretical convergence rates are proved and realized in numerical experiments. A heuristic accelerated variant is developed and shown to be competitive with existing methods based on one-sided samples.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction and Motivation from Optimization

Effective nonlinear optimization algorithms require 1st derivative information, \(\nabla f(x)\), while superlinear convergence requires some 2nd derivative approximation [12]. For example, standard Quasi-Newton (QN) methods such as BFGS (complete gradient and an approximate Hessian generated from gradient differences) have superlinear terminal convergence. Limited-Memory (LM) QN methods [11], such as LBFGS, which approximate the Hessian efficiently by storing only the most recent gradient differences, are widely used in large-scale optimization. This article formulates randomized QN like algorithms which can be used to approximate Hessians (as well as general matrices) with reduced cost.

Alternatively, consider Stochastic Gradient Descent (SGD) [14], which is a common dimension reduction technique in statistics and Machine learning. SGD minimizes the average of cost functions \(f_i:\mathbb {R}^m \rightarrow \mathbb {R}\),

by approximating \(\nabla f \approx s^{-1} \sum _{i=1}^s \nabla f_i\). Here, \(f_i\) is associated with the i-th entry of a large (n entry) data or training set. The SGD approximation is simply \(\nabla f \approx F\,p\), where F is the matrix with ith column \(\nabla f_i\) and the sparse vector p has s non-zero entries of 1/s at sampled indices. Our algorithms generalize SGD by incorporating flexible sampling in \(\mathbb {R}^n\) along with sampling in the parameter space \(\mathbb {R}^m\) to provide flexibility to tune our algorithms to computational hardware.

Section 2 introduces the fundamental problem, two-sided samples and terminology. Section 3 reviews randomized one-sided Quasi-Newton algorithms while Sect. 4 develops our randomized, two-sided Quasi-Newton algorithms. Section 5 provides probabilistic convergence rates and error estimates. Section 6 numerically demonstrates the convergence of the algorithms. Section 7 incorporates an inner block power iteration to accelerate our two-sided algorithms and compares the result to one-sided algorithms based on similar heuristics.

2 Fundamental Problem, Samples, and Terminology

The fundamental problem our algorithms addresses is how to efficiently construct a sequence of approximations to a matrix, \(A\in \mathbb {R}^{m\times n}\), from a stream of incomplete and possibly noisy data. Specifically, we develop and analyze algorithms to iteratively embed aggregate information from

These weighted linear combinations of the rows and columns of the data A are called two-sided samples. This is in contrast to weighted linear combinations of the rows, \(U^\top \,A\), or weighted linear combinations of the columns, \(A\,V\), which we refer to as one-sided samples. Two-sided samples have been used before in non-iterative algorithms: [9] compares Schatten-p norm estimates (pth root of the sum of the pth power of the singular values) using two-sided samples, \(U^{\top }A\,V\), (termed a bi-linear sketch) to estimates using one-sided samples, \(A\,V\). Large eigenvalues estimates using two-sided random projectors are examined in [1]; and two-sided samples are used in [2] to tighten bounds on low-rank approximations. We follow their lead by simply counting sample entries to estimate data cost: \(m\, s_2\) for the one-sided samples, \(A\,V\), and \(s_1\, s_2\) for the two-sided sample, \(U^{\top }A\,V\). Algorithms using two-sided samples are a subset of randomized numerical linear algebra. An overview of existing algorithms and applications is provided by the extensive list of articles citing the comprehensive review [7]. The algorithms in [1, 7] expend significant up front effort computing projections \(\varOmega \) and \(\varPsi \) so that the projected matrix, \(\varOmega ^\top \,A\,\varPsi \), approximates A on dominant eigenvalues with the goal that uniform random sampling of \(\varOmega ^\top \,A\,\varPsi \) yields good approximations to A. In contrast, our algorithms produce an improving sequence of approximations by iteratively embedding small randomized two-sided samples, \(U^\top \,A\,V\), with sample dimensions \(s_1\times s_2\) that can be chosen to suit available hardware. Throughout we compare algorithms using the cost estimates (respectively \(s_1\, s_2\) and \(m\, s_2\) for the samples \(U^\top \,A\,V\) and \(A\,V\)) from [9].

We use notation motivated by QN algorithms in non-linear optimization. SPD means symmetric positive definite and W is an SPD weight matrix. \(X^+\) denotes the Moore-Penrose pseudo-inverse of X; \(\langle X, Y \rangle _F = \mathrm Tr\left[ X^\top Y\right] \) and \(\Vert X\Vert _F^2 = \langle X, X \rangle _F\) are the Frobenius inner product and norm. For conforming SPD weights \(W_1\) and \(W_2\) the weighted Frobenius norm (with special case \(X = X^\top \) and \(W=W_1=W_2\) written \(F(W^{-1})\)) is

The W-weighted projector \(\mathcal {P}\), which projects onto the column space of \(W\,U\),

satisfies \(\mathcal {P} \, W = W \, \mathcal {P}^\top = \mathcal {P} \, W \, \mathcal {P}^\top \quad \text{ and } \quad W^{-1}\mathcal {P} = \mathcal {P}^\top W^{-1} = \mathcal {P}^\top \, W^{-1} \, \mathcal {P}\).

3 Randomized One-Sided Quasi-Newton Algorithms

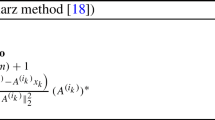

Our iterative approximations to A using two-sided samples are motivated by the one-sided sampled algorithms in [6] and QN optimization algorithms. Classical QN schemes for SPD matrices A are formulated as constrained minimum change updates for \(B\approx A\) or \(H\approx A^{-1}\) in weighted Frobenius norms [12]: the constraint enforces the new information while the minimum change condition stabilizes the update. The one-sided sampled update algorithms in [5, 6] are given by the KKT [8, 12] equations (with particular choices of weight W) for the quadratic programs

The analytical updates defined by Eqs. (2) and (3), are

where the weighted projectors \(\mathcal {P}_B\) and \(\mathcal {P}_H\) defined by Eq. (1) are

Note, these are two different updates using the same one-sided sample \(A \, U_k\) which are not simply connected by the Sherman-Morrison-Woodbury (SMW) formula. In Eq. (4), \(B_{k+1}\) is an improved approximation to A while in Eq. (5), \(H_{k+1}\) is an improved approximation to \(A^{-1}\). Familiar algorithms are obtained by selecting different weights, W. Block DFP [15] is Eq. (4) with \(W = A\)

where \( \mathcal {P}_{\text {DFP}} = P_{A^{-1},U_k} = A \,U_k(U_k^\top \, A \,U_k)^{-1}U_k^\top \). Block BFGS [5, 6] is the result of inverting Eq. (4) with \(W=A^{-1}\) using the SMW formula

QN algorithms are commonly initialized with multiples of the identity.

4 Randomized Two-Sided Quasi-Newton Algorithms

A general algorithm (defined by SPD weight matrices \(W_1\) and \(W_2\)) to approximate non-square matrices and two distinct algorithms specialized to symmetric matrices are developed. As with one-sided sampled algorithms, different weights give different algorithms. Algorithms and theorems are developed for a generic initialization \(B_0\).

4.1 General Two-Sided Sampled Update

Analogous to Eq. (2), our first algorithm is defined by the minimization

Solving the KKT equations for Eq. (6) gives the self-correcting update

Since this update explicitly corrects the projected residual sample \( R_k = U_k^\top (A-B_k)\, V_k\), it decreases the weighted Frobenius norm \(\Vert A-B_k\Vert _{F(W_{1}^{-1},W_{2}^{-1})}^2\) unless the approximation is correct on the sampled spaces, i.e., \(U_k^\top (A-B_k)\, V_k = 0\).

Given \(A\in \mathbb {R}^{m\times n}\), initial approximation \(B_0\in \mathbb {R}^{m\times n}\), two-sided sample sizes \(\{s_1, s_2\}\), and SPD weights \(\{W_{1},W_2\}\), Eq. (7) generates a sequence \(\{B_k\}\) that converges monotonically to A in the appropriate weighted Frobenius norm. Pseudocode is provided in Algorithm 1: boxed values give the two-sided sample size per iteration; double boxed values the total for all iterations. For symmetric A, the independent left and right hand sampling fails to preserve symmetry.

4.2 Symmetric Update

Unsurprisingly, the fully symmetrized general algorithm (\(A=A^\top ,B_0=B_0^\top \), \(V_k=U_k\) and \(W=W_1=W_2\)) give symmetric approximations. Pseudocode is provided in Algorithm 2 with sample counts boxed as before.

Remark 1

Algorithm 2 can give non-SPD updates from SPD input e.g.

4.3 Multi-step Symmetric Updates

Enforcing symmetry for \(A=A^\top \) and \(B_0 = B_0^\top \) with an internal step

gives convergence comparable to Algorithm 2. However, the two-step algorithm

has superior convergence properties and requires no additional data since

Pseudocode is provided in Algorithm 3 with sample counts boxed as before.

5 Convergence Analysis

Our convergence results rely on properties of randomly generated projectors. In our experiments, we orthogonalize square matrices with entries drawn from N(0, 1) to generate rotations from a rotationally invariant distribution [16]. Our algorithms use symmetric rank s projectors defined by an SPD weight W

where U is simply the first s columns of a random rotation. The smallest and largest eigenvalues \(\lambda _1\) and \(\lambda _n\) of the expectation \(\mathbf{E} [\hat{z}]\) of these random projections determines convergence of our algorithms with optimal rates when \(\lambda _1=\lambda _n\).

Definition 1

A random matrix, \(\hat{X} \in \mathbb {R}^{m \times n}\), is rotationally invariant if the distribution of \(Q_m\,\hat{X}\,Q_n\) is the same for all rotations \(Q_i \in \mathcal {O}(i)\).

Proposition 1

Let \(\mathcal {Z}\) be any distribution of real, rank s projectors in \(\mathbb {R}^n\). Then,

Further, if \(\hat{z}\) is rotationally invariant, then \(\mathbf{E} [\hat{z}] = \frac{s}{n}I_n\).

Proposition 2

For \(R \in \mathbb {R}^{m \times n}\) and conforming symmetric projections \(\hat{y},\hat{z}\),

Proposition 3

For any \(R \in \mathbb {R}^{m \times n}\) and conforming symmetric positive semi-definite matrices \(S_1\), \(S_2\), and (in the special case \(m=n\) ) S we have the bounds:

Remark 2

Convergence results for Algorithms 1 to 3. are for \(\mathbf{E} [\Vert B-A\Vert _F^2]\). Such results dominate similar results for \(\Vert \mathbf{E} [B-A]\Vert _F^2\) since

Theorem 1

(Convergence of Algorithm 1 - NS). For \(A \in \mathbb {R}^{m \times n}\) and \(B_0 \in \mathbb {R}^{m \times n}\) with \(W_1 \in \mathbb {R}^{m \times m}\) and \(W_2 \in \mathbb {R}^{n \times n}\) fixed SPD weights. If \(U_{k} \in \mathbb {R}^{m \times s_1}\) and \(V_{k} \in \mathbb {R}^{n \times s_2}\) are random, independently selected orthogonal matrices with full column rank (with probability one), then \(B_{k}\) from Algorithm 1 satisfies

where \(\rho _{\text {NS}} = 1-\lambda _{\min }(\mathbf{E} [\hat{y}])\, \lambda _{\min }(\mathbf{E} [\hat{z}])\), with

Proof

Define the kth residual as \(R_k:=W_{1}^{-1/2}(B_k-A)W_{2}^{-1/2}\). With some algebraic manipulation, Eq. (7) can be re-written as \(R_{k+1} = R_k - \hat{y}_{k}R_{k}\hat{z}_{k}\). Computing the squared Frobenius norm of both sides,

where we have made use of Proposition 2. Taking the expected value with respect to independent samples \(U_{k}\) (leaving \(V_{k}\) and \(R_k\) fixed) gives

where we applied Proposition 3 to the symmetric positive semi-definite matrix \(\mathbf{E} [\hat{y}_{k}]\), and used Eq. (9). Taking the expected value with respect to independent samples \(V_{k}\) and leaving \(R_k\) fixed gives

Taking the full expectation and noting \(\mathbf{E} [\Vert R_{k+1}\Vert _F^2] = \mathbf{E} [\Vert B_k - A\Vert _{F(W_1^{-1},W_2^{-1})}^2\)

gives the result by unrolling the recurrence. Note, independence of \(U_{k}\) and \(V_{k}\) justifies \(\mathbf{E} [\langle \hat{y}_{k}R_{k}\hat{z}_{k}, R_{k}\hat{z}_{k} \rangle _F] = \langle \mathbf{E} [\hat{y}_{k}]R_{k}\hat{z}_{k}, R_{k}\hat{z}_{k} \rangle _F\).

Theorem 2

(Convergence of Algorithm 2 - SS1). Let \(A, W \in \mathbb {R}^{n \times n}\) be fixed SPD matrices and \(U_k \in \mathbb {R}^{n\times s}\) be a randomly selected matrix having full column rank with probability 1. If \(B_0 \in \mathbb {R}^{n \times n}\) is an initial guess for A with \(B_0 = B_0^\top \), then \(B_{k}\) from Algorithm 2 satisfies

where \(\rho _{\text {SS1}} = 1-\lambda _{\min }(\mathbf{E} [\hat{z}])^2\) and \(\hat{z}_{k} = W^{1/2}U_{k}(U_{k}^\top \,W \, U_{k})^{-1}U_{k}^\top W^{1/2}\).

Proof

Following similar steps outlined in the proof in Theorem 1, we arrive at

Taking the expected value with respect to \(U_k\) leaving \(R_k\) fixed we have

where the inequality arises from application of Jensen’s Inequality. Simplifying and applying Proposition 3,

Taking the full expectation and un-rolling the recurrence yields the desired result.

Theorem 3

(Convergence of Algorithm 3 - SS2). Let \(A,U_k,V_k\) and \(B_0\) be defined as in Theorem 1, and let W be a fixed SPD matrix then \(B_{k}\) from Algorithm 3 satisfies

where

Proof

Let \(R_k\) be the kth residual \(R_k\), and \(\hat{y}_{k}, \hat{z}_{k}\) be projectors as in Theorem 1 with \(W = W_1 = W_2\). Eq. (8) can be re-written in terms of \(R_k\) as follows.

Theorem 1 gives

and a repeated application of Theorem 1 gives

Lastly, we observe via the triangle inequality that

Un-rolling the loop for k iterations gives the desired result.

With the relevant rate \(\rho \) below error bounds for Algorithms 1 to 3 are

where \(y_1 = \lambda _{\min }(\mathbf{E} [\hat{y}])\), \(z_1 = \lambda _{\min }(\mathbf{E} [\hat{z}])\), and

Since any symmetric rank s random projection \(\hat{z}\) on \(\mathbb {R}^n\) satisfies \(0\le z_1 \le \frac{s}{n} \le z_n \le 1 \) and rotationally invariant distributions, e.g. \(U U^+\) with \(U\sim N(0,1)^{n \times s}\), further satisfy \(\mathbf{E} [\hat{z}] = \frac{s}{n}\), minimizing the various convergence rates \(\rho \) over the appropriate domains gives the following optimal rates.

Corollary 1

The optimal convergence rates for Algorithms 1 to 3 are obtained attained for \(U_k\) and \(V_k\) sampled from rotationally invariant distributions,

Remark 3

Theorems 1 to 3 all assume the weight matrix W and distributions are fixed. All our non-accelerated numerical experiments use fixed weights and sample from fixed rotationally invariant distributions.

Remark 4

Corollary 1 is an extremely strong result. Consider for simplicity \(s_1=s_2=s\). Although the convergence rates are \(\sim 1-\left( \frac{s}{n}\right) ^2\), only \(s\times s\) aggregated pieces of information are used each iteration. If a one-sided sampled algorithm uses \(s \times n\) pieces of information, e.g. [6], our algorithm can take \(\frac{n}{s}\) iterations with the same amount of information. Consequently the error decrease after \(\frac{n}{s}\) iterations, is comparable to convergence rates of one-sided sampled QN methods.

Lower bounds on the convergence rates (analogous to the upper bounds in Theorems 1 to 3 but using the upper bounds in Proposition 3) are easily derived. For example, the two-sided error bound for Algorithm 1 is

where as before \(y_1 \le y_2 \le \cdots \le y_m\) is the spectrum of \(\mathbf{E} [\hat{y}]\), \(z_1 \le z_2 \le \cdots \le z_n\) is the spectrum of \(\mathbf{E} [\hat{z}]\) and the explicit form for \(\rho _{\text {NS}}\) is in Eq. (11). We collect the similar results for Algorithms 1 to 3 in Corollary 2.

Corollary 2

(Two-Sided Convergence Rates). Given the assumptions of Theorems 1 to 3 the explicit formulas Eq. (11) for \(\rho \) give two-sided bounds,

where \(y_1,y_m, z_1, z_n\) are the extreme eigenvalues of \(\mathbf{E} [\hat{y}]\) and \(\mathbf{E} [\hat{z}]\).

Remark 5

If \(\hat{y}\) and \(\hat{z}\) are rotationally invariant, the upper and lower probabilistic bounds in Corollary 2 coincide since \(z_1=z_n = \frac{s_1}{n}\) and \(y_1=y_m = \frac{s_2}{m}\). Algorithms 1 to 3 all use rotationally invariant distributions and converge predictably at the expected rate. The algorithms still converge with other distributions provided the smallest eigenvalue of the expectation is positive.

6 Numerical Results

Algorithm 1 to 3 were implemented in the MATLAB framework from [6] and tested on representative SPD matrices from the same article: \(A=XX^\top \) with \(X \sim \mathcal {N}(0,1)^{n \times n}\); the Gisette-Scale ridge regression matrix from [3]; and the NASA matrix from [4]. The author’s website [13] contains MATLAB scripts and similar results for all matrices from [6]. Computations were performed on Superior, the HPC facility at Michigan Technological University.

Many metrics can be used to objectively compare algorithmic costs. Common metrics include number of FLOPS, total memory used, communication overhead, and for matrix-free black-box procedures the number of individual matrix-vector products \(A\, v\). As noted by [9] the analogous metric for a black box procedure to compute the matrix product required for our \(s_1 \times s_2\) two-sided sample \(U^\top A \, V\) is the number of sampled entries, \(s_1 \, s_2\). As an explicit example, for \(f:\mathbb {R}^m\rightarrow \mathbb {R}\), Forward-Forward mode [10] Algorithmic Differentiation (AD) simultaneously computes f(x) and a directional 2nd derivative \(u^\top \nabla ^2f(x)\,v\). With sufficient shared-memory processors, AD can efficiently compute f(x) and the two-sided sample \(U^\top \nabla ^2f(x)\, V\) of the Hessian with cost \(s_1 \, s_2\).

Algorithms in [6] are for symmetric matrices. We compare the convergence of (unweighted i.e. \(W=I\)) Algorithms 1 to 3 (sample size \(s=\lceil \sqrt{n}\rceil \) matching [6]) to the one sided algorithms in [6]: Fig. 1a for \(A = X\,X^\top \) with \(X \sim \mathcal {N}(0,1)^{5000\times 5000}\); Fig. 1b for Gisette-Scale; and Fig. 1c) for NASA. Our algorithms achieve the theoretical convergence rates from Eq. (12) (dotted lines). Weighted algorithms DFP and BFGS use \(B_0=I\), un-weighted Algorithms 1 to 3 use \(B_0=0\). Runs were terminated after \(5n^2\) iterations or when the relative residual norm fell below 0.01. The one-sided algorithms DFP and BFGS have target dependent weight matrices: DFP is Eq. (4) with weight \(W=A\) while BFGS is the Sherman-Morrison-Woodbury inversion of Eq. (5) with \(W=A^{-1}\). Figure 1a shows our algorithms outperforming both BFGS and DFP for small tolerances. Figure 1b shows enhanced initial convergence for DFP and BFGS, but Fig. 1c demonstrates that BFGS may not converge. In contrast, our two-sided algorithms converge consistently, achieving the theoretical convergence rates.

7 Heuristic Accelerated Schemes

Algorithm 2 corrects the symmetric projected residual \(U_k^\top (A-B_k)U_k\) at each stage; significant corrections occur if \(U_k\) aligns with large eigenvalues of \(R_k\). Block power iteration is a standard heuristic [7] to enhance alignment.

Incorporating p steps of a block power iteration to enrich \(U_k\) produces the hybrid algorithm in Algorithm 2: the loop from line 4 to line 9 enriches a random U by multiplying by the residual and re-orthogonalizing p times. As before, work estimates are boxed on the right (p block power iterations require \(p\,n\,s\) and the square symmetric sample requires \(s^2\)) with the total double boxed. Although the inner iteration requires significantly more matrix samples per iteration, conventional wisdom [7] suggests one or two inner iterations are likely to be beneficial. Our experiments show Algorithm 2 is competitive for \(p=2\).

Acceleration Convergence Results

Algorithm 4 (rotationally invariant samples with \(p=2\)) is compared to BFGS-A (the result of applying the SMW formula to the accelerated method AdaRBFGS from [6]) and the three one-sided, non-accelerated algorithms: S1 and DFP defined by Eq. (4) with weights \(W=I\) and \(W = A\) (respectively), and BFGS defined by applying the SMW formula to Eq. (5) with weight \(W=A^{-1}\). We use the same test matrices, initialization, and termination conditions described in Sect. 6: Fig. 2a shows results for \(A=X X^\top \); Fig. 2b shows results for the Hessian matrix Gisette Scale [3]; and Fig. 2c shows results for NASA4704 [4]. SS1A matches or outperforms all other algorithms for the three matrices. As before [13] contains MATLAB scripts and results for all matrices from [6]. Both BFGS-A and SS1A adaptively sample their update spaces. BFGS-A samples from the columns of the Cholesky decomposition of \(B_k\) while SS1-A effectively samples from a small power of the residual \(A-B_k\). Comparing BFGS to BFGS-A and S1 to SS1-A shows the benefits of adaptivity. The hybrid SS1A performs consistently well.

8 Conclusions and Future Work

Algorithms 1 to 3 iteratively generate matrix approximations to a fixed target from two-sided samples. Rotationally invariant sampling gives optimal theoretical convergence in general and predicted convergence rates are experimentally verified for several real world matrices, with comparable performance to existing one-sided algorithms. A hybrid method combining simultaneous iteration (to enrich a subspace) with the two-sided sampled update is developed and shown to be competitive with existing one-sided accelerated schemes.

The algorithms systematically make minimal changes and drive weighted residual norms for a fixed A monotonically to zero. Such self-correcting algorithms can potentially approximate slowly changing matrices, A(x). For example, QN optimization algorithms have a slowly changing Hessian target \(\nabla _x^2 f(x_k)\) while solvers for stiff ODEs \(y'(x) = f(y(x))\) have a slowly changing Jacobian target \(\nabla _y f(y(x_k))\). The two-sided sampled matrix approximation algorithms and theory presented in the article provide a general foundation for these and other applications. Efficient factorized updates, compact low rank approximations, inverse approximation, and sparse matrix sampling are all planned.

References

Andoni, A., Nguyen, H.L.: Eigenvalues of a matrix in the streaming model, pp. 1729–1737. SIAM (2013). https://doi.org/10.1137/1.9781611973105.124

Avron, H., Clarkson, K.L., Woodruff, D.P.: Sharper bounds for regularized data fitting. In: Jansen, K., Rolim, J.D.P., Williamson, D., Vempala, S.S. (eds.) Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques, Leibniz International Proceedings in Informatics (LIPIcs), vol. 81, pp. 27:1–27:22. Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, Dagstuhl (2017). https://doi.org/10.4230/LIPIcs.APPROX-RANDOM.2017.27

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27:1–27:27 (2011)

Davis, T.A., Hu, Y.: The university of florida sparse matrix collection. ACM Trans. Math. Softw. 38(1), 1:1–1:25 (2011). https://doi.org/10.1145/2049662.2049663

Gao, W., Goldfarb, D.: Block BFGS methods. SIAM J. Optim. 28(2), 1205–1231 (2018). https://doi.org/10.1137/16M1092106

Gower, R.M., Richtárik, P.: Randomized Quasi-Newton updates are linearly convergent matrix inversion algorithms. SIAM J. Matrix Anal. Appl. 38(4), 1380–1409 (2017). https://doi.org/10.1137/16M1062053

Halko, N., Martinsson, P., Tropp, J.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011). https://doi.org/10.1137/090771806

Karush, W.: Minima of Functions of Several Variables with Inequalities as Side Conditions. ProQuest LLC, Ann Arbor, MI (1939). Thesis (SM)-The University of Chicago

Li, Y., Nguyen, H.L., Woodruff, D.P.: On Sketching Matrix Norms and the Top Singular Vector, pp. 1562–1581. SIAM (2014). https://doi.org/10.1137/1.9781611973402.114

Naumann, U.: The Art of Differentiating Computer Programs. Society for Industrial and Applied Mathematics (2011). https://doi.org/10.1137/1.9781611972078

Nocedal, J.: Updating Quasi-Newton matrices with limited storage. Math. Comput. 35(151), 773–782 (1980). https://doi.org/10.2307/2006193

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer Series in Operations Research and Financial Engineering, 2nd edn. Springer, New York (2006). https://doi.org/10.1007/978-0-387-40065-5

Ong, B., Azzam, J., Struthers, A.: Randomized iterative methods for matrix approximation - supplementary material and software repository (2021). https://www.mathgeek.us/publications.html

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951). https://doi.org/10.1214/aoms/1177729586

Schnabel, R.: Quasi-newton methods using multiple secant equations. Computer Science Technical Reports 244, 41 (1983). https://scholar.colorado.edu/csci_techreports/244/

Stewart, G.: The efficient generation of random orthogonal matrices with an application to condition estimators. SIAM J. Numer. Anal. 17(3), 403–409 (1980). https://doi.org/10.1137/0717034

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Azzam, J., Ong, B.W., Struthers, A.A. (2022). Randomized Iterative Methods for Matrix Approximation. In: Nicosia, G., et al. Machine Learning, Optimization, and Data Science. LOD 2021. Lecture Notes in Computer Science(), vol 13164. Springer, Cham. https://doi.org/10.1007/978-3-030-95470-3_17

Download citation

DOI: https://doi.org/10.1007/978-3-030-95470-3_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95469-7

Online ISBN: 978-3-030-95470-3

eBook Packages: Computer ScienceComputer Science (R0)