Abstract

Mathematical models have been widely used to understand the dynamics of the ongoing coronavirus disease 2019 (COVID-19) pandemic as well as to predict future trends and assess intervention strategies. The asynchronicity of infection patterns during this pandemic illustrates the need for models that can capture dynamics beyond a single-peak trajectory to forecast the worldwide spread and for the spread within nations and within other sub-regions at various geographic scales. Here, we demonstrate a five-parameter sub-epidemic wave modeling framework that provides a simple characterization of unfolding trajectories of COVID-19 epidemics that are progressing across the world at different spatial scales. We calibrate the model to daily reported COVID-19 incidence data to generate six sequential weekly forecasts for five European countries and five hotspot states within the United States. The sub-epidemic approach captures the rise to an initial peak followed by a wide range of post-peak behavior, ranging from a typical decline to a steady incidence level to repeated small waves for sub-epidemic outbreaks. We show that the sub-epidemic model outperforms a three-parameter Richards model, in terms of calibration and forecasting performance, and yields excellent short- and intermediate-term forecasts that are not attainable with other single-peak transmission models of similar complexity. Overall, this approach predicts that a relaxation of social distancing measures would result in continuing sub-epidemics and ongoing endemic transmission. We illustrate how this view of the epidemic could help data scientists and policymakers better understand and predict the underlying transmission dynamics of COVID-19, as early detection of potential sub-epidemics can inform model-based decisions for tighter distancing controls.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

1 Introduction

Throughout history, many of the large-scale infectious disease outbreaks, such as pandemic influenza (1918; 2009–2010), measles, and Ebola (2014–2016), have resulted in a single peak followed by a steady decline; therefore, many of the mathematical models commonly used to model outbreaks tend to follow this pattern. Other outbreaks, like HIV/AIDS and Ebola (2018–2020), however, result in more complicated trajectories that require models that can extend beyond the standard single-peak trends. The ongoing COVID-19 pandemic is accompanied by complicating factors in many countries, like delayed action for social distancing measures and premature relaxation of these measures. The sub-epidemic model presented here is flexible to these complex patterns, while remaining relatively simple. The model results and forecasts provide information for data scientists and policy makers to inform future decisions regarding relaxation of intervention measures or tighter controls for social distancing. Further, the model can infer the start of another outbreak wave from the data, which allows hospitals and health care settings to prepare for another potential increase of cases.

The asynchronicity of the infection patterns of the current coronavirus disease 2019 (COVID-19) pandemic illustrates the need for models that can capture complex dynamics beyond a single-peak trajectory to forecast the worldwide spread. This is also true for the spread within nations and within other sub-regions at various geographic scales. The infections in these asynchronous transmission networks underlie the reported infection data and need to be accounted for in forecasting models.

We analyze the COVID-19 pandemic assuming that the total number of new infections is the sum of all the infections created in multiple asynchronous outbreaks at differing spatial scales. We assume there are weak ties across sub-populations, and we represent the overall epidemic as an aggregation of sub-epidemics, rather than a single, universally connected outbreak. The sub-epidemics can start at different time points and affect different segments of the population in different geographic areas. Thus, we model sub-epidemics associated with transmission chains that are asynchronously triggered and that progress somewhat independently from the other sub-epidemics.

Jewell et al. [1] review the difficulties associated with long-term forecasting of the ongoing COVID-19 pandemic using statistical models that are not based on transmission dynamics. They also describe the limitations of models that use established mortality curves to calculate the pace of growth, the most likely inflection point, and subsequent diminution of the epidemic. The review analyzes the need for broad uncertainty bands, particularly for sub-national estimates. It also addresses the unavoidable volatility of both reporting and estimates based on reports. The analysis, delivered in the spirit of caution rather than remonstration, implies the need for other approaches that depend on overall transmission dynamics or large-scale agent-based simulations. Our sub-epidemic approach addresses this need in both the emerging and endemic stages of an epidemic.

This approach is analogous to the model used by Blower et al. [2] to demonstrate how the rise and endemic leveling of tuberculosis outbreaks could be explained by dynamical changes in the transmission parameters. A related multi-stage approach was used by Garnett [3] to explain the pattern of spread for sexually transmitted diseases and changes in the reproductive number during the course of an epidemic. Rothenberg et al. [4] demonstrated that the national curve of Penicillinase-Producing Neisseria gonorrhoeae occurrence resulted from multiple asynchronous outbreaks.

As with HIV/AIDS, which has now entered a phase of intractable endemic transmission in some areas [5], COVID-19 is likely to become endemic. New vaccines and pharmacotherapy might mitigate the transmission, but the disease will not be eradicated in the foreseeable future. Some earlier predictions based on mathematical models predicted that COVID-19 would soon disappear or approach a very low-level endemic equilibrium determined by herd immunity. To avoid unrealistic medium-range projections, some investigators artificially truncate the model projections before the model reaches these unrealistic forecasts.

Here, we demonstrate a five-parameter sub-epidemic wave modeling framework that provides a simple characterization of unfolding trajectories of COVID-19 epidemics that are progressing across the world at different spatial scales [6]. We systematically assess calibration and forecasting performance for the ongoing COVID-19 pandemic in hotspots located in the USA and Europe using the sub-epidemic wave model, and we compare results with those obtained using the Richards model, a well-known three-parameter single-peak growth model [7]. The sub-epidemic approach captures the rise to an initial peak followed by a wide range of post-peak behavior, ranging from a typical decline to a steady incidence level to repeated small waves for sub-epidemic outbreaks. This framework yields excellent short- and intermediate-term forecasts that are not attainable with other single-peak transmission models of similar complexity, whether mechanistic or phenomenological. We illustrate how this view of the epidemic could help data scientists and policymakers better understand and predict the underlying transmission dynamics of COVID-19.

2 Methods

2.1 Country-Level Data

We retrieved daily reported cumulative case data of the COVID-19 pandemic for France, the United Kingdom (UK), and the United States of America (USA) from the World Health Organization (WHO) website [8] and for Spain and Italy from the corresponding governmental websites [9, 10] from early February to May 24, 2020. We calculated the daily incidence from the cumulative trajectory and analyzed the incidence trajectory for the 5 countries.

2.2 State-Level US Data

We also retrieved daily cumulative case count data from The COVID Tracking Project [11] from February 27, 2020 to May 24, 2020 for five representative COVID-19 hotspot states in the USA, namely New York, Louisiana, Georgia, Arizona and Washington.

3 Methodology Overview: Parameter Estimation and Short-term Forecasts with Quantified Uncertainty

3.1 Parameter Estimation

Given a model, parameter estimation is the process of finding the parameter values and their uncertainty that best explain empirical data. In this section we briefly describe the parameter quantification method described in refs. [12]. First, we define the general form of a dynamic model composed by a system of h ordinary differential equations as follows:

where \( \dot{x_i} \)denotes the rate of change of the system state x i where i = 1, 2, …, h and Θ = (θ 1, θ 2, …, θ m) is the set of model parameters.

To calibrate dynamic models describing the trajectory of epidemics, researchers require temporal data for one or more states of the system (e.g., daily number of new outpatients, inpatients and deaths). In this paper, we consider the case with only one state of the system

The temporal resolution of the data typically varies according to the time scale at which relevant processes operate (e.g., daily, weekly, yearly) and the frequency at which the state of the system is measured. We denote the time series of n longitudinal observations of the single state by

where i = 1, 2, …, nwhere t i are the time points of the time series data and n is the number of observation time points. Let f(t, Θ) denote the mean of observed incidence series y t over time, which corresponds to \( \dot{x}(t) \)if x(t)denotes the cumulative count at time t. Usually the incidence series \( {y}_{t_i} \)is assumed to have a Poisson distribution with mean \( \dot{x}(t) \)or a negative binomial distribution if over-dispersion is present. Modeling an error structure using a negative binomial distribution would require the estimation of the over-dispersion coefficient. In the same way we estimate other model parameters, one would need to estimate the extra parameter from data.

Model parameters are estimated by fitting the model solution to the observed data via nonlinear least squares [13]. This is achieved by searching for the set of parameters \( \hat{\varTheta}=\left({\hat{\theta}}_1,{\hat{\theta}}_2,\dots, {\hat{\theta}}_m\right) \)that minimizes the sum of squared differences between the observed data \( {y}_{t_i=}{y}_{t_1,}{y}_{t_2}\dots ..{y}_{t_n} \)and the model mean which corresponds to f(t i, Θ). That is, Θ = (θ 1, θ 2, …, θ m) is estimated by \( \hat{\varTheta}=\arg \min\ \sum_{i=1}^n{\left(f\left({t}_i,\varTheta \right)-{y}_{t_i}\right)}^2 \).

Then, \( \hat{\varTheta} \) is the parameter set that yields the smallest differences between the data and model. This parameter estimation method gives the same weight to all of the data points. This method does not require a specific distributional assumption for y t, except for the first moment E[y t] = f(t i; Θ); meaning, the mean at time t is equivalent to the count (e.g., number of cases) at time t [14]. Moreover, this method yields asymptotically unbiased point estimates regardless of any misspecification of the variance-covariance error structure. Hence, the model mean \( f\left({t}_i,\hat{\varTheta}\right) \) yields the best fit to observed data \( {y}_{t_i} \)in terms of squared L2 norm. More generally, this objective function can be extended to simultaneously fit more than one state variable to their corresponding observed time series.

If we assume a Poisson error structure in the data, the parameters can be estimated via maximum likelihood estimation (MLE). Consider the probability density function (PDF) that specifies the probability of observing data y t given the parameter set Θ, or f(y t| Θ); given a set of parameter values, the PDF can show which data are more probable, or more likely [14]. MLE aims to determine the values of the parameter set that maximizes the likelihood function, where the likelihood function is defined as L(Θ| y t) = f(y t| Θ) [14, 15]. The resulting parameter set is called the MLE estimate, the most likely to have generated the observed data. Specifically, the MLE estimate is obtained by maximizing the corresponding log-likelihood function. For count data with variability characterized by the Poisson distribution, we utilize Poisson-MLE [16, 17], where the log-likelihood function is given by:

and the Poisson-MLE estimate is expressed as

.

In Matlab, we can use the fmincon function to set the optimization problem.

To quantify parameter uncertainty, we follow a parametric bootstrapping approach which is particularly powerful, as it allows the computation of standard errors and related statistics in the absence of closed-form formulas [18, 19]. As previously described in ref. [12], we generate S replicates from the best-fit model \( f\left({t}_i,\hat{\varTheta}\right) \) by assuming an error structure in the data (e.g., Poisson) in order to quantify the uncertainty of the parameter estimates and construct confidence intervals. Specifically, using the best-fit model \( f\left({t}_i,\hat{\varTheta}\right) \), we generate S-times replicated simulated datasets, where the observation at time t iis sampled from the Poisson distribution with mean \( f\left({t}_i,\hat{\varTheta}\right) \). Next, we refit the model to each of the S simulated datasets to re-estimate parameters for each of the S-simulated realizations. The new parameter estimates for each realization are denoted by \( {\hat{\varTheta}}_i \)where i = 1, 2, …, S. Using the sets of re-estimated parameters \( \left({\hat{\varTheta}}_i\right), \)it is possible to characterize the empirical distribution of each estimate and construct confidence intervals for each parameter. Moreover, the resulting uncertainty around the model fit is given by \( f\left(t,{\hat{\varTheta}}_1\right), \) \( f\left(t,{\hat{\varTheta}}_2\right),\dots, f\left(t,{\hat{\varTheta}}_S\right) \). It is worth noting that a Poisson error structure is the most common for modeling count data where the mean of the distribution equals the variance. In situations where the time series data show over-dispersion, a negative binomial distribution can be employed instead [12].

3.2 Model-Based Forecasts with Quantified Uncertainty

Forecasting from a given model \( f\left(t,\hat{\varTheta}\right),h \) units of time ahead is straight forward:

\( f\left(t+h,\hat{\varTheta}\right) \). We can use the RMSE to quantify the forecasting performance of the models. The uncertainty of the forecasted value can be obtained using the previously described parametric bootstrap method. Let

denote the forecasted value of the current state of the system propagated by a horizon of h time units, where \( {\hat{\varTheta}}_i \) denotes the estimation of parameter set Θ from the ith bootstrap sample. We can calculate the bootstrap variance of the estimates to measure the uncertainty of the forecasts, and the 2.5% and 97.5% percentiles to construct 95% confidence intervals.

4 Generalized Growth Model (GGM)

The generalized growth model (GGM) is a simple model that characterizes the early ascending phase of the epidemic. Previous studies have highlighted the occurrence of early sub-exponential growth patterns in various infectious disease outbreaks. This model allows for the relaxation of exponential growth by modulating a “scaling of growth parameter”, p, which allows the model to capture a range of epidemic growth profiles [20]. The GGM is given by the following differential equation:

In this equation C ′(t) describes the incidence curve over time t, C(t) describes the cumulative number of cases at time t, p∈[0,1] is a “deceleration or scaling of growth” parameter and r is the growth rate. This model represents constant incidence over time if p = 0 and exponential growth for cumulative cases if p = 1. If p is in the range 0 < p < 1, then the model indicates sub-exponential or polynomial growth dynamics [20,21,22,23,24,25].

5 Generalized Logistic Growth Model

The generalized logistic growth model (GLM) is an extension of the simple logistic growth model that allows for capturing a range of epidemic growth profiles, including sub-exponential and exponential growth dynamics. The GLM characterizes epidemic growth through the intrinsic growth rate r, a dimensionless “deceleration of growth” parameter p, and the final epidemic size, k 0. The deceleration parameter modulates the epidemic growth patterns including sub-exponential growth (0 < p < 1), constant incidence (p = 0) and exponential growth dynamics (p = 1). The GLM is given by the following differential equation:

where \( \frac{dC(t)}{dt} \) describes the incidence over time t, and the cumulative number of cases at time t is given by (t) [25,26,27,28].

6 Richards Growth Model

The Richards growth model is also an extension of the simple logistic growth model and relies on three parameters. It extends the simple logistic growth model by incorporating a scaling parameter, a, that measures the deviation from the symmetric simple logistic growth curve [7, 21, 29]. The Richards model is given by the differential equation:

where c(t) represents the cumulative case count at time t, r is the growth rate, a is a scaling parameter and k 0 is the final epidemic size.

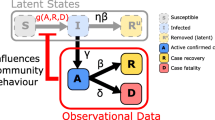

6.1 Sub-epidemic Wave Modeling Motivation

The concept of weak ties was originally proposed by Granovetter in 1973 [30] to form a connection between microevents and macro events. We use this idea to link the person-to-person viral transmission of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) to the trajectory of the COVID-19 epidemic. The transient connection between two people with different personal networks that results in the transference of the virus between the networks can be viewed as a weak tie. This event can cause asynchronous epidemic curves within the overall network. The events can spread the infection between sub-populations defined by neighborhoods, zip codes, counties, states, or countries. The resulting epidemic curve can be modeled as the sum of asynchronous sub-epidemics that reflect the movement of the virus into new populations.

In the absence of native immunity, specific viricidal treatment, or a working vaccine, our non-pharmacological preventive tools—testing, contact tracing, social separation, isolation, lockdown—are the key influences on sub-epidemic spread [31,32,33,34]. The continued importation of new cases will result in low-level endemic transmission. A model based on sub-epidemic events can forecast the level of endemic spread at a steady state. This can then be used to guide intervention efforts accounting for the continued seeding of new infections.

6.2 Sub-epidemic Modeling Approach

We use a five-parameter epidemic wave model that aggregates linked overlapping sub-epidemics [6]. The strength (e.g., weak vs. strong) of the overlap determines when the next sub-epidemic is triggered and is controlled by the onset threshold parameter, C thrs. The incidence defines a generalized-logistic growth model (GLM) differential equation for the cumulative number of cases, C t, at time t:

Here, r is the fixed growth rate, and p is the scaling of growth parameter, and K 0 is the final size of the initial sub-epidemic. The growth rate depends on the parameter . If p = 0, then the early incidence is constant over time, while if p = 1 then the early incidence grows exponentially. Intermediate values of (0 < p< 1) describe early sub-exponential (e.g. polynomial) growth patterns.

The sub-epidemics are modeled by a system of coupled differential equations:

Here C i(t) is the cumulative number of infections for sub-epidemic i, and K i is the size of the i th sub-epidemic where i = 1, …, n. Starting from an initial sub-epidemic size K 0, the size of consecutive sub-epidemics K i decline at the rate q following an exponential or power-law function.

The onset timing of the (i + 1)th sub-epidemic is determined by the indicator variable A

i

(t). This results in a coupled system of sub-epidemics where the (i + 1)th sub-epidemic is triggered when the cumulative number of cases for the i

th sub-epidemic exceeds a total of

cases. The sub-epidemics are overlapping because the C

thr sub-epidemic takes off before the

cases. The sub-epidemics are overlapping because the C

thr sub-epidemic takes off before the

sub-epidemic completes its course. That is,

sub-epidemic completes its course. That is,

The threshold parameters are defined so 1 C th K 0 and A 0(t) = 1 for the first sub-epdemic.

This framework allows the size of the i th sub-epidemic (K i) to remain steady or decline based on the factors underlying the transmission dynamics. These factors could include a gradually increasing effect of public health interventions or population behavior changes that mitigate transmission. We consider both exponential and inverse decline functions to model the size of consecutive sub-epidemics.

6.3 Exponential Decline of Sub-epidemic Sizes

If consecutive sub-epidemics decline exponentially, then K i is given by:

where K 0 is the size of the initial sub-epidemic (K 1 = K 0). If q = 0, then the model predicts an epidemic wave comprising sub-epidemics of the same size. When q > 0, then the total number of sub-epidemics n tot is finite and depends on C thr, q, and K 0 The sub-epidemic is only triggered if C thr ≤ K i, resulting in a finite number of sub-epidemics,

The brackets ⌊∗⌋ denote the largest integer that is smaller than or equal to *. The total size of the epidemic wave composed of n tot overlapping sub-epidemics has a closed-form solution:

6.4 Inverse Decline of Sub-epidemic Sizes

The consecutive sub-epidemics decline according to the inverse function given by:

When q > 0, then the total number of sub-epidemics n tot is finite and is given by:

The total size of an epidemic wave is the sum of n overlapping sub-epidemics,

In the absence of control interventions or behavior change (q = 0), the total epidemic size depends on a given number n of sub-epidemics,

The initial number of cases is given by C 1(0) = I 0 where I 0 is the initial number of cases in the observed case data. The cumulative cases, C(t), is the sum of all cumulative infections over the n overlapping sub-epidemics waves:

6.5 Parameter Estimation

Fitting the model to the time series of case incidence requires estimating up to five model parameters Θ = (C thr,q,r, p,K). If a single sub-epidemic is sufficient to fit the data, then the model is simplified to the three-parameter generalized-logistic growth model. The model parameters were estimated by a nonlinear least square fit of the model solution to the observed incidence data [13]. This is achieved by searching for the set of parameter \( \hat{\varTheta}=\left({\hat{\theta}}_1,\kern0.10em {\hat{\theta}}_2, \ldots,{\hat{\theta}}_m\right) \) that minimizes the sum of squared differences between the observed incidence data \( {y}_{t_i}={y}_{t_1},{y}_{t_2},\dots, {y}_{t_N} \) and the corresponding mean incidence curve denoted by f(t i, ). That is, the parameters are estimated by

where t i are the time points at which the time-series data are observed, and N is the number of data points available for inference. Hence, the model solution \( f\left({t}_i,\hat{\varTheta}\right) \) yields the best fit to the time series data \( {y}_{t_i} \) where \( \hat{\varTheta} \) is the vector of parameter estimates.

We solve the nonlinear least squares problem using the trust-region reflective algorithm. We used parametric bootstrap, assuming an error structure described in the next section, to quantify the uncertainty in the parameters obtained by a non-linear least squares fit of the data, as described in refs. [12, 35]. Our best-fit model solution is given by \( f\left(t,\hat{\varTheta}\right) \) where \( \hat{\varTheta} \) is the vector of parameter estimates. Our MATLAB (The MathWorks, Inc) code for model fitting along with outbreak datasets is publicly available [36].

The confidence interval for each estimated parameter and 95% prediction intervals of the model fits were obtained using parametric bootstrap [12]. Let S denote the number of bootstrap realizations and \( {\hat{\varTheta}}_i \) denote the re-estimation of parameter set Θ from the ith bootstrap sample. The variance and confidence interval for \( \hat{\varTheta} \) are estimated from \( {\hat{\varTheta}}_1,\dots, {\hat{\varTheta}}_S. \) Similarly, the uncertainty of the model forecasts, \( f\left(t,\hat{\varTheta}\right) \), is estimated using the variance of the parametric bootstrap samples

where \( {\hat{\varTheta}}_i \) denotes the estimation of parameter set Θ from the ith bootstrap sample. The 95% prediction intervals of the forecasts in the examples are calculated from the 2.5% and 97.5% percentiles of the bootstrap forecasts.

6.6 Error Structure

We model a negative binomial distribution for the error structure and assume a constant variance/mean ratio over time (i.e., the overdispersion parameter). To estimate this constant ratio, we group every four daily observations into a bin across time, calculate the mean and variance for each bin, and then estimate a constant variance/mean ratio by calculating the average of the variance/mean ratios over these bins. Exploratory analyses indicate that this ratio is frequently stable across bins, except for 1–2 extremely large values, which could result from a sudden increase or decrease in the number of reported cases. These sudden changes could result from changes in case definition or a weekend effect whereby the number of reported cases decreases systematically during weekends. Hence, these extreme large values of variance/mean ratio are excluded when estimating the constant variance/mean ratio.

6.7 Model Calibration and Forecasting Approach

For each of the ten regions, we analyzed six weekly sequential forecasts, conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020, and assessed the calibration and forecasting performances at increasing time horizons of 2, 4, 6, …, and 20 days ahead. The models were sequentially re-calibrated each week using the most up-to-date daily curve of COVID-19 reported cases. That is, each sequential forecast included one additional week of data than the previous forecast. For comparison, we also generated forecasts using the Richards model, a well-known single-peak growth model with three parameters [7, 37].

6.8 Model Performance

To assess both the quality of the model fit and the short-term forecasts, we used four performance metrics: the mean absolute error (MAE), the mean squared error (MSE), the coverage of the 95% prediction intervals, and the mean interval score (MIS) [38]. The mean absolute error (MAE) is given by:

Here \( {y}_{t_i} \) is the time series of incident cases describing the epidemic wave where t i are the time points of the time series data [39]. Similarly, the mean squared error (MSE) is given by:

In addition, we assessed the coverage of the 95% prediction interval, e.g., the proportion of the observations that fell within the 95% prediction interval as well as a metric that addresses the width of the 95% prediction interval as well as coverage via the mean interval score (MIS) [38, 40] which is given by:

where L t and U t are the lower and upper bounds of the 95% prediction interval and I{} is an indicator function. Thus, this metric rewards for narrow 95% prediction intervals and penalizes at the points where the observations are outside the bounds specified by the 95% prediction interval where the width of the prediction interval adds up to the penalty (if any) [38].

The mean interval score (MIS) and the coverage of the 95% prediction intervals take into account the uncertainty of the predictions whereas the mean absolute error (MAE) and mean squared error (MSE) only assess the closeness of the mean trajectory of the epidemic to the observations [41]. These performance metrics have also been adopted in international forecasting competitions [40].

For comparison purposes, we compare the performance of the sub-epidemic wave model with that obtained from the 3-parameter Richards model [7], a well-known single-peak growth model given by:

where θ determines the deviation from symmetry, and again r is the growth rate, and K is the final epidemic size.

7 Results

7.1 Model Parameters and Calibration Performance

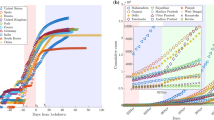

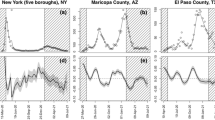

A five-parameter dynamic model, postulating sub-exponential growth in linked sub-epidemics, captures the aggregated growth curve in diverse settings (Figs. 1, 2, and 3 and Figs. S3, S4, S5, S6, S7, S8, and S9). Using national-level data from five countries, we estimate the initial sub-exponential growth parameter (p) with a mean ranging from 0.7 to 0.9. Our analysis of five representative hotspot states in the USA indicates that early growth was sub-exponential in New York, Arizona, Georgia, and Washington (mean p ~ 0.5–0.9) and exponential in Louisiana (Table 1). Moreover, the rate of sub-epidemic decline that captures the effects of interventions and population behavior changes is shown in Fig. S10. The decay rate was fastest for Italy, followed by France, with the lowest decline rate in the USA (Table 1). Within the USA, the decline rate was the fastest for New York and Louisiana and more gradual for Georgia and Washington (Fig. S10).

The best fit of the sub-epidemic model to the COVID-19 epidemic in Spain. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of estimated parameters. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in the USA. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of estimated parameters. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in New York State. The sub-epidemic wave model successfully captures the overlapping sub-epidemic growth pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of estimated parameters. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The calibration performance across all regions presented in Figs. S1 and S2 is substantially better for the overlapping sub-epidemic model compared to the Richards model based on each of the performance metrics (for MAE, MSE, and MIS, smaller is better; for 95% PI coverage, larger is better). An informative example of the model fit to the trajectory of the COVID-19 epidemic in Spain (Fig. 1) shows the early growth of the epidemic in a single large sub-epidemic followed by a smaller sub-epidemic (blue in row 2, column 2 of Fig. 1), which is then followed by a much smaller sub-epidemic (green). In row 1 (Fig. 1), the parameter distributions demonstrate relatively small confidence intervals. Thus, the model captures a common phenomenon in epidemic situations: an initial steep rise, followed by a leveling or decline, then a second rise, and a subsequent repeat of the same pattern. A somewhat different pattern is observed in the USA, which experienced sustained transmission with high mortality for a long period (Fig. 2). A single epidemic wave failed to capture the early growth phase and the later leveling off; whereas, the aggregation of multiple sub-epidemics produces a better fit to the observed dynamics. In comparison, New York, the early epicenter of the pandemic in the USA, displays a similar sub-epidemic profile, while the sub-epidemic sizes decline at a much faster rate (Fig. 3).

Similar composite figures for the remaining regions (Figs. S3, S4, S5, S6, S7, S8, and S9) demonstrate diverse patterns of underlying sub-epidemic waves. For example, Italy experienced a single peak, largely the result of an initial sub-epidemic (in red), that was quickly followed by several rapidly declining sub-epidemics that slowed the downward progression (Fig. S3). The UK’s sub-epidemic profile resembles that of the USA, but the sub-epidemics decline at a faster rate (Fig. S5; Table 1).

7.2 Forecasting Performance

The sub-epidemic wave model outperformed the simpler Richards model in most of the 2-20 day ahead forecasts (see Fig. 4 and Figs. S11, S12, S13, S14, S15, S16, S17, S18, and S19). We observe that the sub-epidemic model forecasting accuracy increases as evidence for the second sub-epidemic appears in the data. For instance, the initial forecasts for the USA using the sub-epidemic model (Figs. 5 and S20) underestimate reported incidence for the 20 days after April 7th, which is likely attributable to the unexpected leveling off of the epidemic wave. However, this model provided more accurate forecasts in subsequent 20-day forecasts.

Sub-epidemic profiles of the sequential 20-day ahead forecasts for the COVID-19 epidemic in the USA. Different colors represent different sub-epidemics of the epidemic wave profile. The aggregated trajectories are shown in gray, and black circles correspond to the data points. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Similarly, sub-national models of the USA state trajectories confirm the general findings of fit and 20-day forecasting (see supplementary materials). Among the most striking of these is the sub-epidemic structure modeled for New York state (Fig. S25). When the sub-epidemic model is calibrated by April 7, 2020, a single sub-epidemic is observed; however, subsequent weeks of data helped infer an underlying overlapping sub-epidemic structure and correctly forecasted the subsequent downward trend. With variation, other states shown in the supplementary materials provided similar confirmation of the method.

8 Discussion

Our sub-epidemic modeling framework is based on the premise that the aggregation of regular sub-epidemic dynamics can determine the shape of the trajectory of epidemic waves observed at larger spatial scales. This framework has been particularly suitable for forecasting the spatial wave dynamics of the COVID-19 pandemic, where the trajectory of the epidemic at different spatial scales does not display a single peak followed by a “burnout” period, but instead follows more complex transmission patterns including leveling off, plateaus, and long-tail decline periods. The model overwhelmingly outperformed a standard growth model that only allows for single-peak transmission dynamics. Model parameters also inform the effect of interventions and population behavior changes in terms of the sub-epidemic decay rate.

Overall, this approach predicts that a relaxation of the tools currently at our disposal—primarily aimed at preventing person-to-person and person-to-surface contact—would result in continuing sub-epidemics and ongoing endemic transmission. If we add widespread availability of testing, contact tracing, and cluster investigation (e.g. nursing homes, meatpacking plants, and other sites of unavoidable congregation), early suppression of sub-epidemics may be possible. The United States leads in the total number of tests performed, but it is currently ranked 25th among all nations in testing per capita [42]. The sub-epidemic description of COVID-19 transmission provides a rationale for substantial increases in testing.

Parsimony in model construction is not an absolute requirement, but it has several advantages. With fewer parameters to estimate, the joint simulations are more efficient and more understandable. Degenerate results are more easily avoided, and, when properly constructed, confidence intervals for the key parameters are more constrained. In our projections, we fit five parameters to the data:

-

1.

The onset threshold parameter, C thr, that triggers the onset of a new sub-epidemic and determines if the overlap is weak or strong,

-

2.

The new epidemic starting size, K 0,

-

3.

The size of consecutive sub-epidemics decline rates q,

-

4.

The positive parameter r denoting the growth rate of a sub-epidemic, and

-

5.

The “scaling of growth” parameter p ∈ [0,1](exponential or sub-exponential).

As shown in Fig. 1, the confidence limits for these parameters are narrow, and the scaling of growth parameter is constantly in the 0.8–0.9 range (Table 1).

Short-term forecasting is an important attribute of the model. Though long-term forecasts are of value, their dependability varies inversely with the time horizon. The 20-day forecasts are most valuable for the monitoring, management, and relaxation of the social distancing requirements. The early detection of potential sub-epidemics can signal the need for strict distancing controls, and the reports of cases can identify the geographic location of incubating sub-epidemics. No single model or method can provide an unerring approach to epidemic control. The multiplicity of models now available can be viewed as a source of confusion, but it is better thought of as a strength that provides multiple perspectives [43,44,45]. The sub-epidemic approach adds to the current armamentarium for guiding us through the COVID-19 pandemic.

References

Jewell NP, Lewnard JA, Jewell BL. Caution Warranted: Using the Institute for Health Metrics and Evaluation Model for Predicting the Course of the COVID-19 Pandemic. Annals of Internal Medicine 2020;173:xxx-xxx. https://doi.org/107326/M20-1565.

Blower SM, McLean AR, Porco TC, Small PM, Hopewell PC, Sanchez MA, et al. The intrinsic transmission dynamics of tuberculosis epidemics [see comments]. Nature Medicine. 1995;95(8):815-21.

Garnett GP. The geographical and temporal evolution of sexually transmitted disease epidemics. Sexually Transmitted Infections. 2002;78(Suppl 1):14-9.

Rothenberg R, Voigt R. Epidemiologic Aspects of Control of Penicillinase-Producing Neisseria gonorrhoeae. Sexually Transmitted Diseases. 1988;15(4):211-6.

Rothenberg R, Dai D, Adams MA, Heath JW. The HIV endemic: maintaining disease transmission in at-risk urban areas. Sexually Transmitted Diseases. 2017;44(2):71-8.

Chowell G, Tariq A, Hyman JM. A novel sub-epidemic modeling framework for short-term forecasting epidemic waves. BMC Medicine. 2019;17(1):164.

Wang XS, Wu J, Yang Y. Richards model revisited: validation by and application to infection dynamics. Journal of theoretical biology. 2012;313:12-9.

Nossiter A. Male reports first death from Ebola. New York Times [2014 Oct 24]. Available from: http://www.nytimes.com/2014/10/25/world/africa/mali-reports-first-death-from-ebola.html (accessed on 2015 Jan 13). 2014.

Onishi N, Santora M. Ebola patient in Dallas lied on screening form, Liberian airport official says. New York Times [2014 Oct 2]. Available from: http://www.nytimes.com/2014/10/03/world/africa/dallas-ebola-patient-thomas-duncan-airport-screening.html (accessed on 2015 Feb 28). 2014.

Onishi N. Last known Ebola patient in Liberia is discharged. New York Times [2015 Mar 5]. Available from: http://www.nytimes.com/2015/03/06/world/africa/last-ebola-patient-in-liberia-beatrice-yardolo-discharged-from-treatment.html?ref=topics&_r=0 (accessed on 2015 Mar 6). 2015.

The COVID Tracking Project [Available from: https://covidtracking.com/data.

Chowell G. Fitting dynamic models to epidemic outbreaks with quantified uncertainty: A Primer for parameter uncertainty, identifiability, and forecasts. Infect Dis Model. 2017;2(3):379-98.

Banks HT, Hu S, Thompson WC. Modeling and inverse problems in the presence of uncertainty: CRC Press; 2014.

Myung IJ. Tutorial on maximum likelihood estimation. Journal of Mathematical Pyschology; 2003. p. 90-100.

Kashin K. Statistical Inference: Maximum Likelihood Estimation. 2014.

Roosa K, Luo R, Chowell G. Comparative assessment of parameter estimation methods in the presence of overdispersion: a simulation study. Mathematical biosciences and engineering : MBE. 2019;16(5):4299-313.

Yan P, Chowell G. Quantitative methods for investigating infectious disease outbreaks. Switzerland: Springer Nature; 2019.

Friedman J, Hastie T, Tibshirani R. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. New York, NY.: Springer-Verlag New York; 2009.

Smirnova A, Chowell G. A primer on stable parameter estimation and forecasting in epidemiology by a problem-oriented regularized least squares algorithm. Infect Dis Model. 2017;2(2):268-75.

Viboud C, Simonsen L, Chowell G. A generalized-growth model to characterize the early ascending phase of infectious disease outbreaks. Epidemics. 2016;15:27-37.

Chowell G. Fitting dynamic models to epidemic outbreaks with quantified uncertainty: A primer for parameter uncertainty, identifiability, and forecasts. Infectious Disease Modelling. 2017;2(3):379-98.

Chowell G, Sattenspiel L, Bansal S, Viboud C. Mathematical models to characterize early epidemic growth: A review. Physics of Life Reviews. 2016;18:66-97.

Roosa K, Lee Y, Luo R, Kirpich A, Rothenberg R, Hyman JM, et al. Real-time forecasts of the COVID-19 epidemic in China from February 5th to February 24th, 2020. Infect Dis Model. 2020;5:256-63.

Roosa K, Lee Y, Luo R, Kirpich A, Rothenberg R, Hyman JM, et al. Short-term Forecasts of the COVID-19 Epidemic in Guangdong and Zhejiang, China: February 13-23, 2020. J Clin Med. 2020;9(2).

Pell B, Kuang Y, Viboud C, Chowell G. Using phenomenological models for forecasting the 2015 Ebola challenge. Epidemics. 2018;22:62-70.

Shanafelt DW, Jones G, Lima M, Perrings C, Chowell G. Forecasting the 2001 Foot-and-Mouth Disease Epidemic in the UK. Ecohealth. 2018;15(2):338-47.

Chowell G, Hincapie-Palacio D, Ospina J, Pell B, Tariq A, Dahal S, et al. Using Phenomenological Models to Characterize Transmissibility and Forecast Patterns and Final Burden of Zika Epidemics. PLoS currents. 2016;8:ecurrents.outbreaks.f14b2217c902f453d9320a43a35b583.

Shanafelt DW, Jones G, Lima M, Perrings C, Chowell G. Forecasting the 2001 Foot-and-Mouth Disease Epidemic in the UK. EcoHealth. 2017.

Richards FJ. A Flexible Growth Function for Empirical Use. Journal of Experimental Botany. 1959;10(2):290-301.

Granovetter MS. The strength of weak ties. American Journal of Sociology. 1973;78(6):1360-80.

Cheng VCC, Wong S-C, To KKW, Ho PL, Yuen K-Y. Preparedness and proactive infection control measures against the emerging Wuhan coronavirus pneumonia in China. Journal of Hospital Infection. 2020.

Pan J, Yao Y, Liu Z, Li M, Wang Y, Dong W, et al. Effectiveness of control strategies for Coronavirus Disease 2019: a SEIR dynamic modeling study. https://doi.org/10.1101/2020.02.19.200253872020.

Prem K, Liu Y, Russell T, Kucharski AJ, Eggo RM, Davies N, et al. The effect of control strategies that reduce social mixing on outcomes of the COVID-19 epidemic in Wuhan, China. 2020. https://doi.org/10.1101/2020.03.09.20033050.

Lai S, Ruktanonchai NW, Zhou L, Prosper O, Luo W, Floyd JR, et al. Effect of non-pharmaceutical interventions for containing the COVID-19 outbreak: an observational and modelling study. 2020. https://doi.org/10.1101/2020.03.03.20029843.

Chowell G, Ammon CE, Hengartner NW, Hyman JM. Transmission dynamics of the great influenza pandemic of 1918 in Geneva, Switzerland: Assessing the effects of hypothetical interventions. Journal of theoretical biology. 2006;241(2):193-204.

Chowell G, Tariq A, Hyman JM. A novel sub-epidemic modeling framework for short-term forecasting epidemic waves: Datasets and fitting code. figshare. Available from: https://doi.org/10.6084/m9.figshare.8867882. 2019.

Hsieh YH, Cheng YS. Real-time forecast of multiphase outbreak. Emerging infectious diseases. 2006;12(1):122-7.

Gneiting T, Raftery AE. Strictly proper scoring rules, prediction, and estimation. J Am Stat Assoc. 2007;102(477):359-78.

Kuhn M, Johnson K. Applied predictive modeling: New York: Springer; 2013.

M4Competition. Competitor’s Guide: Prizes and Rules. Available from: https://www.m4.unic.ac.cy/wp-content/uploads/2018/03/M4-Competitors-Guide.pdf (accessed 04/01/2019) [

Funk S, Camacho A, Kucharski AJ, Lowe R, Eggo RM, Edmunds WJ. Assessing the performance of real-time epidemic forecasts: A case study of Ebola in the Western Area region of Sierra Leone, 2014-15. PLoS computational biology. 2019;15(2):e1006785.

COVID-19 coronavirus / cases [Internet]. 2020. Available from: https://www.worldometers.info/coronavirus/coronavirus-cases/.

Roosa K, Tariq A, Yan P, Hyman JM, Chowell G. Multi-model forecasts of the ongoing Ebola epidemic in the Democratic Republic of Congo, March-October 2019. Journal of the Royal Society, Interface/the Royal Society. 2020;17(169):20200447.

Chowell G, Viboud C, Simonsen L, Merler S, Vespignani A. Perspectives on model forecasts of the 2014-2015 Ebola epidemic in West Africa: lessons and the way forward. BMC medicine. 2017;15(1):42.

Viboud C, Sun K, Gaffey R, Ajelli M, Fumanelli L, Merler S, et al. The RAPIDD ebola forecasting challenge: Synthesis and lessons learnt. Epidemics. 2018;22:13-21. Worldometer. (Accessed May 11, 2020, at https://www.worldometers.info/coronavirus/.)

Acknowledgments

Funding: GC was supported by grants NSF 1414374 as part of the joint NSF-National Institutes of Health NIH-United States Department of Agriculture USDA Ecology and Evolution of Infectious Diseases program; UK Biotechnology and Biological Sciences Research Council [grant BB/M008894/1] and RAPID NSF 2026797.

Author contributions: GC conceived the study. KR and AT contributed to data analysis. All authors contributed to the interpretation of the results. GC and RR wrote the first draft of the manuscript. All authors contributed to writing subsequent drafts of the manuscript. All authors read and approved the final manuscript.

Competing interests: Authors declare no competing interests.

Data and materials availability: All data are publicly available.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

The best fit of the sub-epidemic model to the COVID-19 epidemic in Italy. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in France. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in the United Kingdom. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in Louisiana, USA. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in Georgia, USA. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in Arizona, USA. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different colors

The best fit of the sub-epidemic model to the COVID-19 epidemic in Washington. The sub-epidemic wave model successfully captures the multimodal pattern of the COVID-19 epidemic. Further, parameter estimates are well identified, as indicated by their relatively narrow confidence intervals. The top panels display the empirical distribution of r, p, K, and q. Bottom panels show the model fit (left), the sub-epidemic profile (center), and the residuals (right). Black circles correspond to the data points. The best model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. Cyan curves are the associated uncertainty from individual bootstrapped curves. Three hundred realizations of the sub-epidemic waves are plotted using different color

The sub-epidemic decline function across countries and USA states based on results presented in Table 1

Mean performance of the sub-epidemic wave and the Richards models in 2–20 day ahead forecasts conducted during the epidemic in Spain. The sub-epidemic model outperformed the Richards model across all metrics and forecasting horizons, but the MSE and MAE reached similar values at longer forecasting horizons

Mean performance of the sub-epidemic wave and the Richards models in 2–20 day ahead forecasts conducted during the epidemic in the UK. The sub-epidemic model outperformed the Richards model across all metrics and forecasting horizons except for 2-day ahead forecasts for which the Richards model reached somewhat better performance

Mean performance of the sub-epidemic wave and the Richards models in 2–20 day ahead forecasts conducted during the epidemic in New York. The sub-epidemic model outperformed the Richards model across all forecasting horizons based on the PI Coverage and the MIS except for 2-day ahead forecasts. However, the Richards model more frequently outperformed the sub-epidemic wave model based on the MAE and MSE

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in the USA. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Italy. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in France. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Spain. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in the UK. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in New York State. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sub-epidemic profiles of the sequential 20-day ahead forecasts for the COVID-19 epidemic in New York. Different colors represent different sub-epidemics of the epidemic wave profile. The aggregated trajectories are shown in gray and black circles correspond to the data points. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Louisiana. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Georgia. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Arizona. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Sequential 20-day ahead forecasts of the sub-epidemic wave model for the COVID-19 epidemic in Washington. Black circles correspond to the data points. The model fit (solid red line) and 95% prediction interval (dashed red lines) are also shown. The vertical line separates the calibration period (left) from the forecasting period (right). The sequential forecasts were conducted on March 30, April 6, April 13, April 20, April 27, and May 4, 2020

Rights and permissions

Copyright information

© 2022 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Chowell, G., Rothenberg, R., Roosa, K., Tariq, A., Hyman, J.M., Luo, R. (2022). Sub-epidemic Model Forecasts During the First Wave of the COVID-19 Pandemic in the USA and European Hotspots. In: Murty, V.K., Wu, J. (eds) Mathematics of Public Health. Fields Institute Communications, vol 85. Springer, Cham. https://doi.org/10.1007/978-3-030-85053-1_5

Download citation

DOI: https://doi.org/10.1007/978-3-030-85053-1_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-85052-4

Online ISBN: 978-3-030-85053-1

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)