Abstract

We explore Granger causality and cointegration between the main stock indices, a macroeconomic indicator (PMI) and central banks' monetary expansion for US data in the presence of extreme market movements: bubbles and crashes. Two stock indices are Granger–caused either by economic fundamentals or by the money supply provided by the Federal Reserve's monetary policy. The causation is found to be dynamic: it vanishes during moderate expansions and recurs around long–term market peaks followed by market crashes. Cointegration between the time series, here considered within the Vector Autoregressive framework, is also shown empirically to be a time–variant, recurrent phenomenon.


1 Introduction

Economic and mechanical systems evolve in time according to their specific multivariate and often nonlinear dynamics, measured by a set of underlying variables. As such systems are mostly stochastic, these variables are modelled by a collection of stochastic processes exhibiting intertemporal dependencies. Some of the processes can either exert a leading/lagging influence on one another (causation) or feature a subtle joint evolution pattern (cointegration). The framework is general enough to study multivariate empirical data stemming from a number of scientific disciplines.

In this paper we study the dynamics of the US economy and financial market by means of selected driving processes, aiming to capture possible causality or cointegration, which can themselves evolve over time. The overall US stock market capitalization exceeds 30 trillion \(= 3\times 10^{13}\) USD. According to classic rules, long–term price trends (bull vs. bear market) coincide with the current macroeconomic background, measured e.g. by GDP dynamics, inflation, the unemployment rate and industrial output. Recently, however, monetary expansion by central banks has become one of the main drivers of stock price dynamics, represented here by two benchmark indices: S&P500 and NASDAQ Composite.

A large body of empirical research from the last decade shows that ample monetary stimuli lead to a massive decoupling of stock prices from underlying fundamentals, resulting in asset bubbles followed by crashes. It therefore seems important to inspect the cause–and–effect dynamics, namely which sets of inputs influence which other variables. Classic techniques are used both to detect sources of causation (transmitted along the time axis) and to capture contemporaneous dependencies between seemingly unrelated variables. Tracking these interactions between financial markets, the real economy and central banks' balance sheets is even more challenging in the presence of bubbles and crashes (known as boom–bust cycles).

The paper is organized as follows. In Sect. 2 we introduce the empirical data sets processed throughout the paper and perform preliminary transformations ensuring stationarity. Section 3 deals with testing Granger causality with respect to the two stock indices, considered in moving 2–year time windows to track the presence or absence of causality. In Sect. 4 we focus on verifying cointegration between the indices, economic fundamentals and central banks' balance sheets. Section 5 concludes the paper and outlines promising topics for further research.

2 Data Sets Description and Preliminary Processing

2.1 Empirical Data Sets

In our empirical study we focus on specific econometric data from the United States over the years 2003–2019, sampled monthly. Each original series consists of \(n=204\) month–end observations, obtained from the Saint Louis FED database [7].

The data are as follows:

I. Manufacturing PMI index – a measure of activity at US factories,
II. Federal Reserve Bank (FED) balance sheet – overall value of assets held by the US central bank, which can be treated as a market liquidity proxy,
III. S&P 500 index quotes,
IV. NASDAQ Composite index quotes,
V. Aggregate balance sheet of the three main central banks (FED, European Central Bank, Bank of Japan), expressed in USD using the respective month–end exchange rates, namely EURUSD and USDJPY.

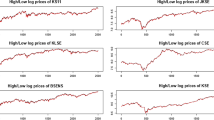

Accordingly, the entire empirical data set constitutes a \(204\times 5\) matrix or, equivalently, a 5–dimensional uniformly sampled econometric time series arranged columnwise. The data sets are plotted in Figs. 1 and 2.
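Later sections refer to sample positions by a running monthly index t, e.g. \(73\le t\le 144\) for the 2009–14 subperiod. The minimal Python sketch below shows that bookkeeping. It assumes t = 1 is the January 2003 month end, and the helper names are hypothetical.

```python
import calendar
from datetime import date

START_YEAR = 2003  # assumption: t = 1 is the end of January 2003

def month_end(t: int) -> date:
    """Month-end date of the 1-based sample index t."""
    years, month0 = divmod(t - 1, 12)
    year, month = START_YEAR + years, month0 + 1
    return date(year, month, calendar.monthrange(year, month)[1])

def sample_index(year: int, month: int) -> int:
    """Inverse map: calendar month -> 1-based sample index t."""
    return (year - START_YEAR) * 12 + month

print(sample_index(2019, 12))                          # 204
print(sample_index(2009, 1), sample_index(2014, 12))   # 73 144
```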

The US Manufacturing PMI is rather range–bound, with faint low–frequency cyclical behavior. Stock indices and central banks' balance sheets are evidently nonstationary because of distinct trends, sometimes growing even faster than linearly. Since 2009 the two US indices have experienced a secular bull market, accompanied by explosive growth of the assets held by the FED and the other main central banks. The fundamental environment is described by the Manufacturing PMI index, which is recorded monthly. GDP and corporate profits are measured only quarterly, and because of this coarse resolution we exclude these series here.

Indeed, the KPSS test proposed in [5] strongly rejects the stationarity hypothesis (only narrowly in the case of PMI), whereas the augmented Dickey–Fuller (ADF) test does not reject the unit–root hypothesis for the stock indices and the balance sheet data.
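For readers who want to reproduce the stationarity check, here is a minimal NumPy sketch of the KPSS level–stationarity statistic of [5]. The Bartlett–kernel bandwidth rule \(\lfloor 4(n/100)^{1/4}\rfloor\) is an assumption (one common choice). The critical values are the asymptotic level–case values tabulated in [5]. A production analysis would rather rely on an established implementation.

```python
import numpy as np

# Asymptotic critical values for the level-stationarity case, as tabulated in [5]
KPSS_LEVEL_CRIT = {0.10: 0.347, 0.05: 0.463, 0.025: 0.574, 0.01: 0.739}

def kpss_level_stat(x, lags=None):
    """KPSS statistic for H0: x is stationary around a constant level."""
    x = np.asarray(x, dtype=float)
    n = x.size
    if lags is None:
        lags = int(4 * (n / 100) ** 0.25)      # bandwidth rule of thumb (assumption)
    e = x - x.mean()                           # residuals from a regression on a constant
    s = np.cumsum(e)                           # partial sums S_t
    lrv = (e @ e) / n                          # gamma_0
    for j in range(1, lags + 1):
        w = 1.0 - j / (lags + 1.0)             # Bartlett kernel weight
        lrv += 2.0 * w * (e[j:] @ e[:-j]) / n  # weighted autocovariance gamma_j
    return (s @ s) / (n ** 2 * lrv)            # sum S_t^2 / (n^2 * long-run variance)

rng = np.random.default_rng(0)
noise = rng.standard_normal(204)   # stationary benchmark, same length as the sample
walk = np.cumsum(noise)            # unit-root benchmark
print(kpss_level_stat(noise), kpss_level_stat(walk))
```

Stationarity is rejected at level a when the statistic exceeds `KPSS_LEVEL_CRIT[a]`.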

Granger causality analysis requires covariance–stationary data. We therefore transform the data using well–known techniques aimed at eliminating trends and tapering heteroscedasticity. For each univariate time series \(\{X_{t}\}_{1\le t\le n}\) we define a transformed series \(\{\varDelta X_{t}\}\) as either the logreturn or the common difference:

\[ \varDelta X_{t}=\ln X_{t}-\ln X_{t-1}\qquad \text{or}\qquad \varDelta X_{t}=X_{t}-X_{t-1},\qquad 2\le t\le n. \]

The latter transform is applied only to the low–volatility PMI series, which has no linear trend and exhibits only a slowly varying cyclical component. The remaining four empirical time series are transformed into logreturns. Both schemes are additive over longer time horizons, should temporal aggregation be necessary.
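The two transforms and their additivity can be sketched in NumPy as follows. The function names are hypothetical, and the price path is a toy example rather than the paper's data.

```python
import numpy as np

def logreturns(x):
    """Delta X_t = ln X_t - ln X_{t-1}: used for the indices and balance sheets."""
    return np.diff(np.log(np.asarray(x, dtype=float)))

def differences(x):
    """Delta X_t = X_t - X_{t-1}: used for the PMI series."""
    return np.diff(np.asarray(x, dtype=float))

prices = np.array([100.0, 110.0, 99.0, 120.0])   # toy month-end levels
r = logreturns(prices)
# Additivity: monthly logreturns sum to the logreturn over the whole horizon
print(np.isclose(r.sum(), np.log(prices[-1] / prices[0])))   # True
```

Each transform shortens a series by one observation, so the 204 monthly levels yield 203 transformed values.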

The stationarity tests are now comfortably passed, and no unit roots are detected. The sudden spike in the FED balance sheet in late 2008 is a distinct outlier.

For convenience, we denote the transformed series as \(\{\varDelta (Nasdaq)_{t}\}\), \(\{\varDelta (PMI)_{t}\}\) and so on. We now proceed to explore Granger causality between fundamental and monetary data on the one hand, and financial time series on the other.

3 Granger Causality Study: What Drives Stock Indices Returns?

3.1 Growing Impact of Monetary Stimuli on Financial Markets

In the classic study of economic system dynamics, especially in the context of stock market performance relative to macro input variables, the role of leading and lagging variables in driving the economy and long–term stock returns within a given cycle phase has been well understood. For instance, the unemployment rate is a typical lagging indicator, whereas the yield curve has performed quite well as a leading indicator (especially inversions preceding recessions by a 1–2 year margin). These interdependencies used to be modelled by a wide variety of time series models, e.g. regression, vector ARIMAs, conditional copulae and GARCH–type models (Fig. 3).

Currently, especially since the financial crisis of 2008–09, monetary interventions have had a growing impact on stock price dynamics. The US Federal Reserve has engaged in four large–scale asset purchase programs known as quantitative easing (QE). Simultaneously, the European Central Bank, the Bank of Japan and the Bank of England have been carrying out their own QE programs. This contributes to the releveraging of the overall financial system and to artificial risk suppression. The issue of markets decoupling from economic fundamentals has been raised since around 2012–13. Typical economic cycles have become over–extended, and stock valuations have run well above commonly accepted long–term averages.

3.2 Granger Causality Test

A crucial question facing asset managers and macroeconomic policy makers is which econometric and/or financial time series tend to lead (here: cause) which other time series. Granger (1969) developed a testing procedure for detecting causality between time series, see [2].

Definition 3.1

A wide–sense stationary time series \(\{X_{t}\}_{t\in \mathbb {Z}}\) is said to Granger–cause another w.–s. s. time series \(\{Y_{t}\}_{t\in \mathbb {Z}}\) if for any fixed \(t\in \mathbb {Z}\)

\[ \sigma ^{2}(\hat{Y}_{t}|\mathbb {F}_{t-1})<\sigma ^{2}(\hat{Y}_{t}|\mathbb {F}^{Y}_{t-1}), \tag{2} \]

where \(\sigma ^{2}(\hat{Y}_{t}|\mathbb {F}_{t-1})=\mathbb {E}\big \{\big (Y_{t}-\hat{Y}_{t}\big )^{2}\big \}\) is the mean squared error of the optimal linear forecast \(\hat{Y}_{t}\) of \(Y_{t}\) based on the filtration \(\mathbb {F}_{t-1}\), i.e. the \(\sigma \)–algebra generated by the histories of both processes \(\{X_{t}\}\) and \(\{Y_{t}\}\). Here \(\mathbb {F}^{Y}_{t-1}\) denotes the \(\sigma \)–algebra generated by the history of \(\{Y_{t}\}\) alone.

The above definition states that including \(\{X_{s}\}_{s<t}\) in the forecast equation defining \(\hat{Y}_{t}\) improves the forecast of \(Y_{t}\) compared to including only \(\{Y_{s}\}_{s<t}\). The variance is commonly estimated by the MSE.

The Granger test serves to verify whether inequality (2) holds. Technically, the restricted model (past of \(\{Y_{t}\}\) only) is tested against the unrestricted one (past of both series) by a Wald–type procedure. The null hypothesis \(H_{0}\) states no Granger causality, equivalently: there is no need to include the past \(\{X_{s}\}\) in forecasting the present value \(Y_{t}\). The alternative is Granger causality: \(\{X_{t}\}\) significantly improves the forecast performance. Detailed theoretical derivations can be found e.g. in [3] and [6].
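The restricted versus unrestricted comparison can be sketched as the familiar single–equation F form of the Wald test. This is a minimal NumPy illustration, not the procedure of any particular package. The function names are hypothetical, an intercept is assumed in both regressions, and the data are simulated.

```python
import numpy as np

def lagmat(z, lags):
    """Columns z_{t-1}, ..., z_{t-lags}, rows aligned with z_t for t = lags, ..., n-1."""
    n = len(z)
    return np.column_stack([z[lags - i:n - i] for i in range(1, lags + 1)])

def granger_f(y, x, lags):
    """F statistic of H0: the past of x does not improve the linear forecast of y."""
    y, x = np.asarray(y, float), np.asarray(x, float)
    target = y[lags:]
    restricted = np.column_stack([np.ones(len(target)), lagmat(y, lags)])  # past of y
    unrestricted = np.column_stack([restricted, lagmat(x, lags)])          # past of y and x

    def rss(design):
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        return resid @ resid

    df_num = lags                                   # number of restrictions
    df_den = len(target) - unrestricted.shape[1]    # residual degrees of freedom
    f_stat = ((rss(restricted) - rss(unrestricted)) / df_num) / (rss(unrestricted) / df_den)
    return f_stat, df_num, df_den

rng = np.random.default_rng(7)
x = rng.standard_normal(300)
y = np.r_[0.0, 0.6 * x[:-1] + rng.standard_normal(299)]   # y_t depends on x_{t-1}
print(granger_f(y, x, lags=2))   # x -> y(2): large F expected
print(granger_f(x, y, lags=2))   # y -> x(2): F typically close to 1
```

If SciPy is available, `scipy.stats.f.sf(f_stat, df_num, df_den)` turns the statistic into a p–value.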

Figures 4 and 5 show detailed results of the Granger causality tests in relation to the US stock market, measured by the S&P500 and NASDAQ Composite benchmarks. The main question we ask is: do economic fundamentals and/or monetary stimuli exert a statistically significant causal influence on large–cap stock prices?

The answer is critically important both for long–term asset allocation strategies and for taking preemptive steps to reduce risk before a possible major downturn. The notation \(X\longrightarrow Y\)(lag) stands for “\(\{X_{t}\}\), up to \(h=\) lag time units backwards, Granger–causes \(\{Y_{t}\}\)” as in Definition 3.1. Lags of up to 3 have been considered, as the test can in many cases be sensitive to a lag imposed ad hoc.

Statistically significant entries are highlighted in bold and colour: orange for significance at the 0.05 level, yellow at the 0.1 level and light blue at the 0.2 level. In rigorous statistical inference, test sizes of 0.01 or 0.05 are mainly considered. Here, however, we emphasize pairs of series and accompanying time frames for which at least weak causality can be conjectured (0.1 and 0.2 levels).
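The moving–window design and colour coding can be sketched as follows. The text does not state the step between consecutive 2–year windows, so `step` is a hypothetical parameter. `highlight` simply maps a Granger–test p–value to the colour tiers listed above.

```python
def moving_windows(n_obs, width=24, step=12):
    """0-based half-open (start, end) row ranges of windows of `width` months.

    `step` (months between window starts) is an assumption, not taken from the paper.
    """
    return [(s, s + width) for s in range(0, n_obs - width + 1, step)]

# Colour tiers: p < 0.05 orange, p < 0.1 yellow, p < 0.2 light blue
TIERS = ((0.05, "orange"), (0.1, "yellow"), (0.2, "light blue"))

def highlight(p_value):
    """Colour tier of a p-value, or None if not significant at the 0.2 level."""
    for level, colour in TIERS:
        if p_value < level:
            return colour
    return None

LAGS_CONSIDERED = (1, 2, 3)        # each window is tested for each of these lags
print(len(moving_windows(204)))    # 16 windows with a 12-month step
```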

It is clearly seen that during heightened financial stress the fundamentals (here: the PMI index) Granger–cause the stock price dynamics. Secondly, during the period of massive balance sheet expansion after the 2008 crisis, asset valuations are driven more by liquidity than by underlying economic fundamentals. The FED's activity exerted a visible causal impact on index performance between 2014 and 2017. Interestingly, in 2015 the FED partly reversed its easing course. Facing a market breakdown in 2018, it returned to lowering interest rates and from summer 2019 onwards restarted QE. Thus, the FED's reversals of course caused market declines, or at least more rapid corrections, in 2015, 2016 and twice in 2018. Other main central banks kept expanding their asset pools, which may explain further market overvaluation, although this is not seen in the statistical causality tests.

3.3 Modelling and Forecasting Example: VAR(3) Model

As shown above, there are periods, especially around market peaks and severe drops or crashes, when stock indices are Granger–caused both by PMI and by liquidity. One such time span covers the subprime–driven market crash and the financial crisis of 2008–09. We now show how the vector autoregressive framework VAR(3) works for this selected 2–year period (2008–2009), in which Granger causality has been confirmed.

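As a reminder, a VAR(p) model for a k–dimensional time series \(\{\mathbb {X}_{t}\}\) in its matrix form is defined as

\[ \mathbb {X}_{t}=\mathbb {A}_{1}\mathbb {X}_{t-1}+\mathbb {A}_{2}\mathbb {X}_{t-2}+\cdots +\mathbb {A}_{p}\mathbb {X}_{t-p}+u_{t} \]

for some \(k\times k\) matrices \(\mathbb {A}_{i}=\big [a_{r,s}^{(i)}\big ]\), \(1\le i\le p\), \(1\le r,s\le k\), and a vector white noise \(\{u_{t}\}\). In our setup we consider \(k=3\) and

\[ \mathbb {X}_{t}=\big (\varDelta (S\&P500)_{t},\ \varDelta (PMI)_{t},\ \varDelta (FED)_{t}\big )^{T}, \]

where each coordinate denotes the respective differenced or log–differenced univariate series, according to the transformations described in Sect. 2.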
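The paper fits the model with the vars R–package [9]. As an illustration only, here is a NumPy sketch of equation–by–equation OLS for the VAR(p) model described above. The intercept `c` is an assumption added for illustration. The returned layout `A[i-1][r, s]` corresponds to \(a_{r+1,s+1}^{(i)}\) in 0–based indexing. The function name is hypothetical.

```python
import numpy as np

def fit_var(X, p, const=True):
    """OLS estimates of X_t = c + A_1 X_{t-1} + ... + A_p X_{t-p} + u_t.

    X: (T, k) array, rows are time points. Returns (c, [A_1, ..., A_p], residuals).
    The intercept c is an illustrative assumption.
    """
    X = np.asarray(X, float)
    T, k = X.shape
    Y = X[p:]                                                  # left-hand side, (T-p, k)
    Z = np.hstack([X[p - i:T - i] for i in range(1, p + 1)])   # lag-1 block, lag-2 block, ...
    if const:
        Z = np.hstack([np.ones((T - p, 1)), Z])
    B, *_ = np.linalg.lstsq(Z, Y, rcond=None)                  # column r = equation r
    resid = Y - Z @ B
    c = B[0] if const else np.zeros(k)
    coefs = B[1:] if const else B
    A = [coefs[(i - 1) * k:i * k].T for i in range(1, p + 1)]  # A_i[r, s] multiplies X_{t-i, s}
    return c, A, resid
```

Applied to a 24 × 3 matrix of transformed observations with `p=3`, this would leave 21 usable rows and estimate 10 coefficients per equation (intercept plus nine lag coefficients).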

We choose the 24 months of 2008–09 as a training set and fit a VAR model with the aid of the vars R–package [9] by Pfaff (2018). We then use it to construct point and interval forecasts 12 months ahead, i.e. spanning the year 2010. As we are interested in modelling the stock indices, we restrict our attention to the first row of the VAR model, setting \(p=3\) in accordance with the maximal lag considered in the Granger causality testing reported in Figs. 4 and 5.

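Specifically, we focus on the following extracted model for monthly logreturns of the S&P500 index:

\[ \varDelta (S\&P500)_{t}=\sum _{i=1}^{3}\Big (a_{1,1}^{(i)}\,\varDelta (S\&P500)_{t-i}+a_{1,2}^{(i)}\,\varDelta (PMI)_{t-i}+a_{1,3}^{(i)}\,\varDelta (FED)_{t-i}\Big )+u_{t,1} \]

for \(4\le t\le 24\) (within the 2008–09 time frame).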

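With the OLS estimators \(\hat{\mathbb {A}}_{i}\), the resulting forecast for S&P500 logreturns in 2010 is given by

\[ \widehat{\varDelta (S\&P500)}_{t}=\sum _{i=1}^{3}\Big (\widehat{a_{1,1}^{(i)}}\,\varDelta (S\&P500)_{t-i}+\widehat{a_{1,2}^{(i)}}\,\varDelta (PMI)_{t-i}+\widehat{a_{1,3}^{(i)}}\,\varDelta (FED)_{t-i}\Big ),\qquad 25\le t\le 36. \tag{5} \]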

Recall from the previous subsection that within the 2008–09 horizon the FED series did not Granger–cause the S&P500 index, whereas PMI did. In addition, an AR(2) term for the index itself proved statistically significant. Hence in (5) only two out of nine coefficients \(\widehat{a_{1,s}^{(i)}}\) are significant at the 0.05 level, i.e. the null hypothesis \(H_{0}\): \(a_{1,s}^{(i)}=0\) has been rejected in only these two cases, namely \(a_{1,1}^{(2)}\ne 0\) and \(a_{1,2}^{(1)}\ne 0\). Thus the model (5) reduces to

\[ \widehat{\varDelta (S\&P500)}_{t}=\widehat{a_{1,1}^{(2)}}\,\varDelta (S\&P500)_{t-2}+\widehat{a_{1,2}^{(1)}}\,\varDelta (PMI)_{t-1}. \tag{6} \]
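The reduction of the S&P500 equation rests on coefficient–wise t–tests. Here is a minimal sketch assuming homoskedastic OLS standard errors, with hypothetical function names. It uses 21 effective observations (\(4\le t\le 24\)) and, hypothetically, an intercept plus nine lag coefficients. The two–sided 5% critical value is then about 2.201 (t distribution with 11 degrees of freedom).

```python
import numpy as np

def ols_tstats(y, Z):
    """OLS coefficients, t statistics and residual degrees of freedom for y = Z b + e."""
    y, Z = np.asarray(y, float), np.asarray(Z, float)
    b, *_ = np.linalg.lstsq(Z, y, rcond=None)
    e = y - Z @ b
    dof = Z.shape[0] - Z.shape[1]
    s2 = (e @ e) / dof                                   # residual variance estimate
    se = np.sqrt(s2 * np.diag(np.linalg.inv(Z.T @ Z)))   # homoskedastic standard errors
    return b, b / se, dof

def keep_significant(tstats, crit=2.201):
    """Column indices whose |t| exceeds crit (default assumes 11 residual d.o.f.)."""
    return [j for j, t in enumerate(tstats) if abs(t) > crit]
```

For other sample sizes, `scipy.stats.t.ppf(0.975, dof)` gives the exact critical value. Refitting with only the retained columns is one way to obtain the reduced model, though the text does not say how the authors did this.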

We conclude this section with Fig. 6, which shows point and interval (\(95\%\)) forecasts (blue points and red cylinders, respectively) derived from the above VAR(3) model (6), compared with the actual monthly index logreturns.

Although the point forecasts seem somewhat too pessimistic (no surprise after the traumatic 2008–2009 period), the actual logreturns fall comfortably within all 12 confidence intervals. This example illustrates the practical value of tracking Granger causality and of the resulting VAR modelling.
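Point forecasts of a VAR are obtained by iterating the fitted recursion, feeding earlier forecasts back in. Under the assumption of Gaussian errors, a 95% interval is the point forecast ± 1.96 forecast standard errors. The minimal NumPy sketch below assumes the standard forecast–error covariance \(\sum _{j=0}^{h-1}\Psi _{j}\Sigma _{u}\Psi _{j}^{T}\), built from the moving–average matrices \(\Psi _{j}\). It ignores parameter–estimation uncertainty. Whether the vars package builds the intervals in exactly this way is not stated in the text, and the function name is hypothetical.

```python
import numpy as np

def var_forecast(history, c, A, sigma_u, h, z=1.96):
    """Iterated h-step forecasts of a VAR(p) with Gaussian-approximation intervals.

    history: (T, k) past observations (at least p rows); c: (k,) intercept;
    A: list [A_1, ..., A_p] of (k, k) arrays; sigma_u: (k, k) residual covariance.
    Parameter-estimation uncertainty is ignored.
    """
    c = np.asarray(c, float)
    p, k = len(A), len(c)
    hist = [np.asarray(row, float) for row in np.asarray(history, float)[-p:]]
    points = []
    for _ in range(h):
        nxt = c + sum(A[i] @ hist[-1 - i] for i in range(p))   # earlier forecasts plugged in
        points.append(nxt)
        hist.append(nxt)
    psi = [np.eye(k)]   # MA matrices: Psi_0 = I, Psi_j = sum_{i=1}^{min(j,p)} A_i Psi_{j-i}
    for j in range(1, h):
        psi.append(sum(A[i - 1] @ psi[j - i] for i in range(1, min(j, p) + 1)))
    mse = np.zeros((k, k))
    half = []
    for j in range(h):
        mse = mse + psi[j] @ sigma_u @ psi[j].T   # forecast-error covariance, horizon j+1
        half.append(z * np.sqrt(np.diag(mse)))
    points, half = np.array(points), np.array(half)
    return points, points - half, points + half
```

For a 12–month horizon one would call it with `h=12` and read column 0 (the S&P500 coordinate). `sigma_u` could be estimated from the fitted residuals (an assumption about the estimator).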

4 Vanishing and Recurring Cointegrations

As mentioned at the beginning, the original empirical time series are highly nonstationary. According to the ADF test, I(1) dynamics seems plausible (and is removed by the differencing schemes for the purposes of Sect. 3), even though one can argue for explosive index behavior under the bubble regime.

In this section we analyze co–movements of the tech–heavy NASDAQ Composite index, which groups IT and innovative companies, and the value of the Federal Reserve's assets. Moreover, only a handful of megacorporations have steadily increasing weights in the benchmark, making it critically fragile in case of a sudden downturn. In February 2020 just four trillion–dollar corporations (Apple, Microsoft, Alphabet/Google and Amazon) accounted for as much as 40% of the NASDAQ 100 index of non–financial companies, which makes up a major part of the broader NASDAQ Composite.

The cointegration concept was first conceived by Engle and Granger (1987) [1] in order to model the contemporaneous dynamics of nonstationary (integrated) time series. In certain circumstances, linear combinations of such nonstationary processes may yield a stationary output.

Definition 4.1

A k–dimensional time series \(\{\mathbb {X}_{t}\}=\{(X_{t,1},\ldots ,X_{t,k})^{T}\}\) with coordinates having unit roots of order \(d\in \mathbb {N}\) is said to be cointegrated (of order d) if there exists a nonzero vector \(\mathbf {v}\in \mathbb {R}^{k}\) such that the series \(U_{t}=\mathbf {v}^{T}\mathbb {X}_{t}\) is wide–sense stationary.

Here \(\mathbf {v}=[v_{1},\ldots ,v_{k}]\) is called a cointegrating vector, while the notation \(\{\mathbb {X}_{t}\}\sim I(d)\) is commonly used for a series integrated of order d. Without loss of generality, and provided \(v_{1}\ne 0\), \(\mathbf {v}\) can be normalized so that \(v_{1}=1\), which is typically assumed in statistical software.
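The following small numerical illustration of the definition above uses synthetic data rather than the paper's series. Two I(1) coordinates share one random–walk trend. The hypothetical vector \(\mathbf {v}=[1,-2]\), normalized so that \(v_{1}=1\), cancels the trend and leaves a stationary combination.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 500
trend = np.cumsum(rng.standard_normal(n))      # common stochastic trend, I(1) (synthetic)
e1, e2 = rng.standard_normal(n), rng.standard_normal(n)
x1 = trend + e1                                # I(1) coordinate
x2 = 0.5 * trend + e2                          # I(1) coordinate
X = np.column_stack([x1, x2])                  # k = 2 dimensional series
v = np.array([1.0, -2.0])                      # cointegrating vector with v_1 = 1
u = X @ v                                      # U_t = v^T X_t = e1 - 2 e2, stationary
print(np.var(x1), np.var(u))
```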

For \(k>2\), cointegration is often considered within the vector error–correction model framework (VECM), obtained by rewriting a VAR model in differences. In this section we conduct a brief case study of detecting possible cointegration within our data. Specifically, we employ the testing procedure proposed by Johansen (1991) [4], which is based on the eigenvalues of a matrix appearing in the VECM formulation.
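To make the eigenvalue idea concrete, here is a compact NumPy sketch of Johansen's reduced–rank computation. It uses the VECM \(\varDelta \mathbb {X}_{t}=\Pi \mathbb {X}_{t-1}+\sum _{i}\Gamma _{i}\varDelta \mathbb {X}_{t-i}+\mu +\varepsilon _{t}\) with an unrestricted constant \(\mu \). That deterministic specification is an assumption, and other choices change the critical values. The sketch returns the ordered eigenvalues, the maximum–eigenvalue statistics \(-T\ln (1-\lambda _{r+1})\) and eigenvectors scaled so that \(v_{1}=1\). Critical values are deliberately omitted and must come from published tables or an established implementation. The function name is hypothetical.

```python
import numpy as np

def johansen_max_eig(X, k_ar_diff=1):
    """Johansen eigenvalues, max-eigenvalue statistics and normalized eigenvectors.

    X: (N, k) array of levels. VECM with an unrestricted constant (assumption) and
    k_ar_diff lagged differences. Critical values are NOT included.
    """
    X = np.asarray(X, float)
    dX = np.diff(X, axis=0)
    q = k_ar_diff
    T = dX.shape[0] - q                       # effective sample size
    y0 = dX[q:]                               # Delta X_t
    y1 = X[q:-1]                              # X_{t-1}
    Z = np.column_stack([np.ones(T)] + [dX[q - i:dX.shape[0] - i] for i in range(1, q + 1)])

    def resid(a):                             # partial out constant and lagged differences
        b, *_ = np.linalg.lstsq(Z, a, rcond=None)
        return a - Z @ b

    r0, r1 = resid(y0), resid(y1)
    s00, s11, s01 = r0.T @ r0 / T, r1.T @ r1 / T, r0.T @ r1 / T
    m = np.linalg.solve(s11, s01.T) @ np.linalg.solve(s00, s01)   # S11^-1 S10 S00^-1 S01
    lam, vec = np.linalg.eig(m)
    order = np.argsort(lam.real)[::-1]        # largest eigenvalue first
    lam, vec = lam.real[order], vec.real[:, order]
    max_eig = -T * np.log(1.0 - lam)          # statistic for H0: rank r vs r + 1
    return lam, max_eig, vec / vec[0]         # each column scaled so that v_1 = 1
```

The first column of the returned eigenvector matrix plays the role of the normalized cointegrating vectors reported below.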

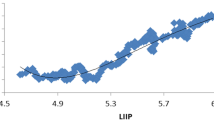

Let us consider the empirical time series of PMI, the FED balance sheet and NASDAQ Composite, recorded monthly throughout 2003–2019 and introduced in Sect. 2. We have already detected occasional Granger causality, which allows us to better explain the dynamics of stock indices by incorporating PMI and/or FED data. We now intend to track contemporaneous interconnections between the series at a given time t. FED and NASDAQ Composite are shown in Fig. 7. It is clearly seen that after the 2008 crash, central bank balance sheets and NASDAQ quotes are driven by strong trends. As argued in a large body of research, central bank liquidity provided by successive rounds of QE has incited risk–on behavior, resulting in aggressive speculation.

In our empirical study of the Johansen test, we first test the entire time span and then divide it into 3 distinct subperiods: pre–crisis 2003–08, post–crisis 2009–14 and the most recent one, 2015–19. Tables 1, 2 and 3 report the test results for the null \(H_{0}\) of no cointegration for selected series collections. Table 3 in fact performs the multivariate version of the test, because for \(k\ge 3\) time series there may exist multiple (up to \(k-1\)) cointegrating relationships.

The maximum eigenvalue Johansen test detected cointegration in each of the three series combinations, mainly within the 2009–14 period (bold entries in the respective tables). Hence we obtain the corresponding cointegrating vectors:

NASDAQ \(\longleftrightarrow \) PMI: \(\mathbf {v}_{1}=[1,\ 703.65]\) in 2009–14

NASDAQ \(\longleftrightarrow \) FED: \(\mathbf {v}_{2}=[1,\ -0.001]\) in 2009–14

NASDAQ \(\longleftrightarrow \) PMI \(\longleftrightarrow \) FED:

\(\mathbf {v}_{3,1}=[1,\ 129.26,\ 0.005]\) in 2003–08

\(\mathbf {v}_{3,2}=[1,\ 31.486,\ -0.001]\) in 2009–14

Oddly enough, the aggregated balance sheet of the three central banks does not significantly Granger–cause the index returns.

For illustrative purposes we consider one instance of cointegration, namely between the FED balance sheet and the NASDAQ Composite index over the 2009–14 horizon. Using the eigenvector \(\mathbf {v}_{2}\) corresponding to the maximum eigenvalue from Johansen's test, we compose the stationary combination

\[ U_{t}=\mathbf {v}_{2}^{T}\begin{pmatrix}(Nasdaq)_{t}\\ (FED)_{t}\end{pmatrix}=(Nasdaq)_{t}-0.001\,(FED)_{t} \tag{7} \]

for \(73\le t\le 144\) (which refers to the years 2009–14).
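The combination amounts to a dot product over the 2009–14 rows of the level data. Here is a minimal sketch assuming a hypothetical `levels` array whose columns hold the NASDAQ Composite and FED balance sheet levels, in the same units that were fed to the Johansen test. The scale of \(\mathbf {v}_{2}\) depends on those units.

```python
import numpy as np

V2 = np.array([1.0, -0.001])   # cointegrating vector reported for NASDAQ <-> FED, 2009-14

def combination(levels, v, t_start, t_end):
    """U_t = v^T X_t for 1-based sample indices t_start..t_end (inclusive).

    levels: hypothetical (n, k) array of level series, e.g. columns [NASDAQ, FED].
    """
    rows = np.asarray(levels, float)[t_start - 1:t_end]   # 1-based t -> 0-based rows
    return rows @ np.asarray(v, float)
```

With the real data, `combination(levels, V2, 73, 144)` would return the 72 monthly values of \(U_{t}\) that are then plotted and tested for stationarity.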

As shown in Fig. 8, the process \(\{U_{t}\}\) given by (7) behaves like a stationary process following low–order AR dynamics. Indeed, the KPSS test does not reject its stationarity. Similar reasoning can be carried out for the remaining cointegrating combinations.

Cointegration analysis is very helpful in identifying seemingly unrelated empirical processes that share similar dynamics due to a common trend. It must not be confused with the spurious regression encountered with nonstationary variables. From the portfolio and risk analysis viewpoint, constructing investment strategies based on cointegrated inputs can help robustify allocation performance against nonstationary irregularities exhibited by single components. Once cointegration has been detected, one can take advantage of simultaneous exposure to various segments of financial markets, e.g. currencies, stocks and commodities. Climate and natural sciences are another promising field of application for cointegration and VECM modelling.

5 Conclusions and Further Research

By strict statistical inference we showed that within the 17–year time span between 2003 and 2019, the two main US stock indices are Granger–caused in 2–year subperiods either by economic fundamentals, here measured by the manufacturing activity index PMI, or by the money supply provided by the Federal Reserve's monetary interventions known as QE. Once causality is detected, the respective variables appear as statistically significant in vector autoregressive models fitted to the current index dynamics. The research has shown that causation itself is temporally unstable: it vanishes during moderate expansions and reemerges around long–term market peaks that conclude bubble–style price dynamics, followed by severe stock price declines. This finding necessitates adaptive detection and modelling techniques, lest a once–accepted model become irrelevant.

Separately, cointegration has been shown to be a recurrent phenomenon; we detected it during the monetary expansion of 2009–2014. Such strong money supply dynamics tends to robustify stock movements against the economic deceleration reflected in hard fundamental data (market decoupling). As of February 2020, the US market is extremely overvalued by many widely adopted valuation metrics. For instance, for the S&P500 index the Shiller 10–year Price/Earnings indicator exceeds 30, compared to a long–term average of around 18. Corporate profits after tax do not confirm the huge market appreciation either. The last time such a bubbly decoupling happened was at the 2000 dotcom bubble peak, and since 2016 the divergence has become far larger, see Fig. 9 provided by Bloomberg [8].

A dynamic approach to causality and cointegration, coupled with adaptive varying–coefficient or smooth–transition modelling, is a promising path for further research. More elaborate VARMAX–type models can also be considered, as well as robustified versions of cointegration tests. Incorporating additional time series, such as aggregate portfolio positioning (net long/short open interest held by large speculators) or sentiment indicators, may help identify potential critical turning points on stock markets in time, within a fragile, speculatively inflated price dynamics regime.

Finally, the above methods can serve equally well for monitoring mechanical systems (production lines, control of large machinery), as sudden changes in cointegration or Granger causality between the driving processes may indicate a major failure or malfunction.

References

[1] Engle, R., Granger, C.: Co-integration and error correction: representation, estimation, and testing. Econometrica 55(2), 251–276 (1987)

[2] Granger, C.: Investigating causal relationships by econometric models and cross-spectral methods. Econometrica 37, 425–435 (1969)

[3] Granger, C.: Testing for causality: a personal viewpoint. J. Econ. Dyn. Control 2, 329–352 (1980)

[4] Johansen, S.: Estimation and hypothesis testing of cointegration vectors in Gaussian vector autoregressive models. Econometrica 59(6), 1551–1580 (1991)

[5] Kwiatkowski, D., Phillips, P., Schmidt, P., Shin, Y.: Testing the null hypothesis of stationarity against the alternative of a unit root. J. Econ. 54, 159–178 (1992)

[6] Pearl, J.: Causality: Models, Reasoning, and Inference. Cambridge University Press, Cambridge (2000)

[7] Saint Louis FED webpage (2020). www.stlouisfed.org

[8] Bloomberg webpage (2020). www.bloomberg.com

[9] Pfaff, B.: vars R-package (2018). www.pfaffikus.de

Acknowledgement

The author would like to thank two referees for their comments and remarks which considerably improved the quality of the final version of the paper.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Stawiarski, B. (2022). Granger Causality and Cointegration During Stock Bubbles and Market Crashes. In: Chaari, F., Leskow, J., Wylomanska, A., Zimroz, R., Napolitano, A. (eds) Nonstationary Systems: Theory and Applications. WNSTA 2021. Applied Condition Monitoring, vol 18. Springer, Cham. https://doi.org/10.1007/978-3-030-82110-4_5


DOI: https://doi.org/10.1007/978-3-030-82110-4_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-82191-3

Online ISBN: 978-3-030-82110-4

eBook Packages: Engineering, Engineering (R0)