Abstract

Time integration of advection dominated advection–diffusion problems on refined meshes can be a challenging task, since local refinement can lead to a severe time step restriction, whereas standard implicit time stepping is usually hardly suitable for treating advection terms. We show that exponential time integrators can be an efficient, yet conceptually simple, option in this case. Our comparison includes three exponential integrators and one conventional scheme, the two-stage Rosenbrock method ROS2 which has been a popular alternative to splitting methods for solving advection–diffusion problems.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

1 Introduction

Time integration of unsteady advection–diffusion problems discretized in space on locally refined meshes can be a challenging problem. This is especially the case for advection dominated problems. On the one hand, requirements of accuracy, monotonicity and total variation diminishing (TVD) usually rule out the use of implicit time integration for advection terms [? , Chapter III.1.3] (for a notable exception see [31]). On the other hand, locally refined meshes can impose a severe CFL stability restriction on the time step, thus making explicit schemes very inefficient.

Within the method of lines framework, i.e., when discretization in space is followed by time integration, different approaches exist to cope with this problem. A straightforward and widely used approach is operator splitting [? , Chapter IV],[43, Chapter 3], when advection is usually treated explicitly in time and diffusion implicitly. Though being conceptually simple and easy to apply in practice, splitting methods unavoidably lead to splitting errors, see, e.g., [10, 27]. Moreover, a proper use of boundary conditions within the splitting is sometimes not trivial and may lead to error order reduction [15, 38]. To reduce splitting errors many various approaches have been proposed, with none being fully successful. Here we mention source splitting techniques [42] and Rosenbrock schemes [? , Chapter IV.5].

Other possible approaches to integrate advection–diffusion problems efficiently include implicit-explicit (IMEX) methods [? , Chapter IV.4] and multirate schemes [9, 34, 35].

In this paper we show that in some cases exponential time integration schemes can serve as an efficient yet simple way to integrate advection–diffusion problems in time on locally refined meshes. Similar to implicit schemes, exponential schemes have attractive stability properties. However, exponential schemes have also excellent accuracy properties and in some cases, especially for linear ODE (ordinary differential equation) systems, are able to produce exact solution to initial-value problem (IVP) being solved. This is the property we exploit in this work. An example of an IVP that can be solved exactly by an exponential solver is

where \(v,g\in \mathbb {R}^N\) are given and \(A\in \mathbb {R}^{N\times N}\) represents the advection–diffusion operator discretized in space. Exponential solvers involve matrix-vector products with the matrix exponential and related, the so-called φ functions. More specifically, the exact solution y(t) of (1) can be written as

where a matrix-vector product of the matrix function φ(−tA) and the vector g − Av has to be computed. Here the function φ is defined as [21]

In case g ≡ 0 expression (2) reduces to a familiar relation \(y(t)= \exp (-tA)v\), \(t\geqslant 0\). Note that such an “all-at-once” exact solution of systems (1) is also possible if g is a given vector function g(t) of time t, see [1]. Furthermore, to solve general nonlinear IVPs within this approach, across-time iterations of the waveform relaxation type can successfully be employed [25]. Then, at each waveform relaxation iteration, a linear IVP of the type (1) is solved by an exponential scheme. Such an approach is attractive because no time stepping is involved and solution can often be obtained for the whole time interval t ∈ [0, T].

Exponential time integration is a rapidly developing research area [21]. Two classes of exponential schemes can be distinguished: (1) time-stepping schemes having a certain accuracy order where the matrix exponential or related matrix functions are evaluated within each time step and (2) schemes which, formally speaking, deliver an exact solution to the IVP, where the actions of the matrix exponential or related matrix functions are employed across a certain time interval. The former class includes extrapolated second order exponential Euler method (EE2, discussed and tested below), exponential Rosenbrock schemes [22], and predictor-corrector exponential schemes [30]. Examples of the latter class are the scheme (2) applied to IVP (1), exponential waveform relaxation schemes [5] where solution to (3) is sought by replacing A with a preconditioner M ≈ A and iterating, and the exponential block Krylov (EBK) method discussed and tested below. Note that exponential schemes of the both classes can handle nonlinear problems, in particular, compressible [28, 29] and incompressible [26] Navier-Stokes equations.

In this work we present comparison results for three different exponential solvers. All these methods are based on the Krylov subspace techniques discussed in [1, 6, 36]. Krylov subspace methods have been successfully used for evaluating matrix exponential and related matrix functions since the eighties, see in chronological order [11, 12, 20, 24, 32? , 33]. An attractive property of the Krylov subspace methods, which distinguishes them from the other methods used for large matrix function evaluations f(A)v, is their adaptivity with respect to the spectral properties of A and the particular vector v, see [? ]. To work efficiently, Krylov subspace methods often need a restarting [14, 18], a mechanism allowing to keep Krylov subspace dimension restricted while preserving convergence of the unrestarted method.

The structure of this paper is as follows. In Sect. 2 the problem and methods used for its solution are presented. Section 3 is devoted to numerical experiments, here the methods are compared and comparison results are discussed. Finally, some conclusions are drawn in Sect. 4.

2 Problem Formulation and Methods

In this paper we assume that a linear PDE of the advection–diffusion type is solved by the method of lines and, after a suitable space discretization, the following IVP has to be solved

Here \(A\in \mathbb {R}^{N\times N}\) represents the discretized advection–diffusion operator and the given vector function g(t) accounts for time dependent sources or boundary conditions.

2.1 Exponential Time Integrators

Perhaps the simplest exponential integrator is exponential Euler method which, applied to problem (3), reads

where Δt is the time step size, y n is numerical solution at time t = Δtn and g n = g( Δtn). The method is inspired by relation (2) and for constant source term g is exact. It is not difficult to check that it is first order accurate.

Using extrapolation [3, 44], i.e., by combining solutions obtained with different time steps, higher order methods can be obtained. Globally extrapolated second order exponential Euler method (EE2) is considered in [1],

where its combination with EXPOKIT [36], used to evaluate the φ matrix vector products, is argued to be a competitive integrator. The phiv function of EXPOKIT is able to efficiently compute actions of the φ matrix functions by a restarted Arnoldi process, where the restarting is done by time stepping based on an error estimation. In experiments presented below we show that EE2/EXPOKIT can be significantly improved by introducing residual-based error control [4, 8, 13] and replacing the restarting procedure by the residual-time (RT) restarting presented in [6, 7].

EE2 is an exponential integrator which evaluates matrix functions within a time stepping procedure: at each time step φ is computed by a Krylov subspace method. It is often more efficient [2, 5], if possible, to organize work in such a way that numerical linear algebra work for matrix function evaluations is done across time stepping, for a certain time interval. For instance, as shown in [1], if for a certain time range g(t) allows an approximation

then (3) can be solved for this time range by a single projection on a block Krylov subspace. The matrix U and function p in (6) can be easily constructed by truncated singular value decomposition (SVD) of the vectors g(t i), at a small additional cost [1]. This procedure simultaneously provides an error estimation in (6), so that a proper value for m can be chosen.

To be more specific, consider problem (1), where for simplicity and without loss of generality assume v = 0. A usual Krylov subspace solution of (1) constructs a matrix \(V_k\in \mathbb {R}^{N\times k}\), whose orthonormal columns span the Krylov subspace [? ]

and, searching for an approximate solution y k(t) = V k u(t) ≈ y(t), reduces (1) to its Galerkin projection

where \(H_k=V_k^T A V_k\), \(e_1=(1,0,\dots ,0)^T\in \mathbb {R}^k\) is the first canonical basis vector and β = ∥g∥. We have \(V_k^T g=V_k^T V_k(\beta e_1)=\beta e_1\) because, by construction, the first column of V k is g∕∥g∥. The small projected IVP (7) can be solved by relation (2), evaluating φ(−tH k) with well developed matrix function techniques for small matrices (see, e.g., [19]).

Now consider, still assuming v = 0, problem (3) where g(t) allows (6). Projecting (3) on a block Krylov subspace [? ]

we can reduce (3) to its projected form

where we now have \(H_{k}\in \mathbb {R}^{km\times km}\) and \(E_1\in \mathbb {R}^{km\times m}\) is a matrix whose columns are the first m columns of the km × km identity matrix. These observations lead to the exponential block Krylov (EBK) method described in [1].

The EBK solver exploits a stopping criterion and restarting which are based on the exponential residual concept [4, 8, 13]. In particular, EBK iterations stop as soon as for the computed approximate solution y k(t) holds

The EBK method can be summarized as follows (see [1] for details):

Comparing the EE2 and EBK schemes, we note that an attractive feature of EE2 is its relative simplicity. On the other hand, a strong point for EBK is its potential efficiency, as a single block Krylov subspace has to be constructed for the whole time interval t ∈ [0, T].

2.2 ROS2 Method: Beyond Splitting

Rosenbrock schemes [? , Chapter IV.5] have been a popular alternative to splitting methods, as they allow to reduce splitting errors and avoid other negative effects related to splitting, such as order reduction. Let f(t, y) = −Ay(t) + g(t) be the ODE right hand side in (3). The two-stage Rosenbrock method ROS2 reads

The method is second order consistent for any \(\widehat {A}\in \mathbb {R}^{N\times N}\) and, to have good stability properties, one usually takes \(\widehat {A}\approx A\). Typically, \(\widehat {A}\) corresponds to the terms in A which have to be integrated implicitly in time. For instance in [41], for advection-diffusion-reaction problems, \(\widehat {A}\) is taken such that

where A diff contains diffusion terms and A react is the reaction Jacobian. In this work we take \(\widehat {A}\) to be either A or the diffusion part of A. Following suggestion in [? , Chapter IV.5, Remark 5.2] we set γ = 1.

3 Numerical Experiments

Numerical experiments described here are carried in Matlab on a Linux PC with 6 Intel Core i5-8400 2.80 GHz CPUs with 16 GB RAM.

3.1 Test 1: Time Dependent Source and Boundary Conditions

In this test we solve (3) where A is a finite-element discretization of the two-dimensional advection–diffusion operator:

where ν is the viscosity parameter and the velocity field is v = [v 1(x, y), v 2(x, y)],

For this test, the function g(t) in (3) takes the form

where y ex(t) is exact solution function chosen as

Here \(g_{\mathrm {bc}}\in \mathbb {R}^N\) is a vector containing Dirichlet boundary values prescribed below and the vector \(g_{\mathrm {peak}}\in \mathbb {R}^N\) consists of the values of function \(\mathrm{e} ^{-10x^2 -50y^2}\) on the mesh. The boundary conditions imposing by g bc are

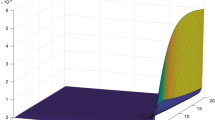

Note that A −1 g bc is the steady state solution of (3) for g(t) ≡ g bc and Tφ(−TA)g peak is the solution of (3) with g(t) ≡ g peak at time t = T. The final time is T = 1000 in this test. In Fig. 1 the exact solution y ex(T) is plotted.

Solution function (12) on the mesh 256 × 256 at final time T = 1000 as surface (left) and contour (right) plots

In this test the IFISS finite element discretization [16, 37] by bilinear quadrilateral (Q 1) finite elements with the streamline upwind Petrov–Galerkin (SUPG) stabilization is employed. We set viscosity to ν = 1∕6400 and use nonuniform Cartesian stretched 256 × 256 and 512 × 512 grids with default refinement parameters, which get finer near the domain boundaries, see Table 1.

When constructing an advection-diffusion matrix, the IFISS package provides the value the maximum finite element grid Peclet number, evaluated per element as

where h x,y and v 1,2 are respectively the element sizes and the velocity components. The maximum element Peclet numbers reported for these meshes are given in Table 1. Due to the SUPG stabilization, the resulting matrices for both meshes are weakly nonsymmetric: the ratio ∥A − A T∥1∕∥A + A T∥1 amounts approximately to 0.022 (mesh 256 × 256) and 0.012 (mesh 512 × 512).

In addition to the requested accuracy tolerance, two input parameters have to provided to EBK: the number of the truncated SVD terms m and the number of time snapshots n

s to construct approximation (6). From the problem description, we see that y

ex(t) is a linear combination of two linearly independent vectors for any t. Hence, g(t) is a linear combination of no more than four vectors and we should take \(m\leqslant 4\). The actual situation is displayed by the singular values available from the thin SVD of the time samples: the largest truncated singular value σ

m+1 is an upper bound for the truncation error  , see, e.g., [17]. In this case it turns out that taking m = 2 is sufficient. A proper snapshot number n

s can be estimated from given α(t) or by checking, for constructed U and p(t), the actual error ∥g(t) − Up(t)∥ a posteriori, see (Table 2). Based on this, we set n

s = 120 in all EBK runs in this test. This selection procedure for n

s is computationally very cheap and can be done once, before all the test runs.

, see, e.g., [17]. In this case it turns out that taking m = 2 is sufficient. A proper snapshot number n

s can be estimated from given α(t) or by checking, for constructed U and p(t), the actual error ∥g(t) − Up(t)∥ a posteriori, see (Table 2). Based on this, we set n

s = 120 in all EBK runs in this test. This selection procedure for n

s is computationally very cheap and can be done once, before all the test runs.

As the problem is two-dimensional, linear systems with A can be solved efficiently by sparse direct methods. Therefore to solve the linear systems in ROS2, we use the Matlab standard sparse LU factorization (provided by UMFPACK) computing it once and using at each time step.

The error reported below for the test runs is measured as

The results of the test runs are presented in Tables 3 and 4. As we see, EBK turns out to be more efficient than the other solvers. Within the EE2 integrator, the change of the Krylov subspace solver from EXPOKIT’s phiv to the RT-restarted algorithm leads to a significant increase in efficiency. Note that this gain is not due to the restarting but due a more reliable residual-based error control. Restarting is usually not done because, due to a sufficiently small Δt, just a couple Krylov steps are carried out in EE2 each time step. In both EE2/EXPOKIT and EE2/RT we should be careful with setting a proper tolerance value, which is used at each time step for stopping the Krylov subspace method evaluating the φ matrix function. Taking a large tolerance value may lead to an accuracy loss. For increasingly small tolerance values the same accuracy will be observed (as it is determined by the time step size) at a higher cost: more matrix-vector multiplications per time step will be needed for the φ matrix function evaluations.

From Tables 3 and 4 we also see that the ROS2 solver becomes less efficient than EE2/RT on the finer mesh as the costs for solving linear systems become more pronounced.

3.2 Test 2: Time Dependent Boundary Conditions

In the previous test we see that the EBK solver apparently profits from the specific source function, exhibiting a very quick convergence. Although this is not an unusual situation, we now consider another test problem which appears more difficult for EBK. We take the same matrix A as in the first test and the following initial value vector v and source function g(t):

where α(t) and g bc are the same as in (12). This test problem does not have a known analytical solution and we compute a reference solution y ref(t) by running EE2/RT with a tiny time step size. The errors of computed numerical solutions y(t) reported below are

Note that y ref(t) is influenced by the same space error as y(t), hence, the error shows solely the time error.

From the problem definition we see that the number of SVD terms m can be at most 2. Therefore, in this test EBK is run with the block size m = 2 and n s = 80 time snapshots (the value is determined in the same way as in Test 1). For this test we include in comparisons the two solvers which come out as best in the first test, EBK and EE2/RT. The results presented in Table 5 show that EBK does require more steps for this test but is still significantly more efficient than EE2/RT.

4 Conclusions

We show that exponential time integrators can be an attractive option for integrating advection–diffusion problems in time, as they possess good accuracy as well as stability properties. In presented tests, they outperform state-of-the-art implicit-explicit ROS2 solvers. Exponential solvers which are able to exploit their matrix function evaluation machinery for a whole time interval (such as EBK in this paper) appear to be preferable to exponential integrators where matrix functions have to be evaluated at each time step.

References

Botchev, M.A.: A block Krylov subspace time-exact solution method for linear ordinary differential equation systems. Numer. Linear Algebra Appl. 20(4), 557–574 (2013) https://doi.org/10.1002/nla.1865

Botchev, M.A.: Krylov subspace exponential time domain solution of Maxwell’s equations in photonic crystal modeling. J. Comput. Appl. Math. 293, 24–30 (2016) https://doi.org/10.1016/j.cam.2015.04.022

Botchev, M.A., Verwer, J.G.: Numerical integration of damped Maxwell equations. SIAM J. Sci. Comput. 31(2), 1322–1346 (2009) https://doi.org/10.1137/08072108X

Botchev, M.A., Grimm, V., Hochbruck, M.: Residual, restarting and Richardson iteration for the matrix exponential. SIAM J. Sci. Comput. 35(3), A1376–A1397 (2013) https://doi.org/10.1137/110820191

Botchev, M.A., Oseledets, I.V., Tyrtyshnikov, E.E.: Iterative across-time solution of linear differential equations: Krylov subspace versus waveform relaxation. Comput. Math. Appl. 67(12), 2088–2098 (2014) https://doi.org/10.1016/j.camwa.2014.03.002

Botchev, M.A., Knizhnerman, L.A.: ART: Adaptive residual-time restarting for Krylov subspace matrix exponential evaluations. J. Comput. Appl. Math. 364, 112311 (2020) https://doi.org/10.1016/j.cam.2019.06.027

Botchev, M.A., Knizhnerman, L.A., Tyrtyshnikov, E.E.: A residual concept for Krylov subspace evaluation of the φ matrix function (2020). Preprint arXiv:2010.08494. https://arxiv.org/abs/2010.08494

Celledoni, E., Moret, I.: A Krylov projection method for systems of ODEs. Appl. Numer. Math. 24(2–3), 365–378 (1997) https://doi.org/10.1016/S0168-9274(97)00033-0

Constantinescu, E.M., Sandu, A.: Multirate timestepping methods for hyperbolic conservation laws. J. Sci. Comput. 33(3), 239–278 (2007)

Csomós, P., Faragó, I., Havasi, Á.: Weighted sequential splittings and their analysis. Comput. Math. with Appl. 50(7), 1017–1031 (2005)

Druskin, V.L., Knizhnerman, L.A.: Two polynomial methods of calculating functions of symmetric matrices. U.S.S.R. Comput. Maths. Math. Phys. 29(6), 112–121 (1989)

Druskin, V.L., Knizhnerman, L.A.: Krylov subspace approximations of eigenpairs and matrix functions in exact and computer arithmetic. Numer. Lin. Alg. Appl. 2, 205–217 (1995)

Druskin, V.L., Greenbaum, A., Knizhnerman, L.A.: Using nonorthogonal Lanczos vectors in the computation of matrix functions. SIAM J. Sci. Comput. 19(1), 38–54 (1998) https://doi.org/10.1137/S1064827596303661

Eiermann, M., Ernst, O.G.: A restarted Krylov subspace method for the evaluation of matrix functions. SIAM J. Numer. Anal. 44, 2481–2504 (2006)

Einkemmer, L., Ostermann, A.: Overcoming order reduction in diffusion-reaction splitting. Part 1: Dirichlet boundary conditions. SIAM J. Sci. Comput. 37(3), A1577–A1592 (2015) https://doi.org/10.1137/140994204

Elman, H.C., Ramage, A., Silvester, D.J.: IFISS: A computational laboratory for investigating incompressible flow problems. SIAM Rev. 56(2), 261–273 (2014)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. The Johns Hopkins University Press, Baltimore and London (1996)

Güttel, S., Frommer, A., Schweitzer., M.: Efficient and stable Arnoldi restarts for matrix functions based on quadrature. SIAM J. Matrix Anal. Appl 35(2), 661–683 (2014)

Higham, N.J.: Functions of Matrices: Theory and Computation. Society for Industrial and Applied Mathematics, Philadelphia (2008)

Hochbruck, M., Lubich, C.: On Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 34(5), 1911–1925 (1997)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010) https://doi.org/10.1017/S0962492910000048

Hochbruck, M., Ostermann, A., Schweitzer, J.: Exponential Rosenbrock-type methods. SIAM J. Numer. Anal. 47(1), 786–803 (2008/2009). https://doi.org/10.1137/080717717

Hundsdorfer, W., Verwer, J.G.: Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations. Springer, Berlin (2003)

Knizhnerman, L.A.: Calculation of functions of unsymmetric matrices using Arnoldi’s method. U.S.S.R. Comput. Maths. Math. Phys. 31(1), 1–9 (1991)

Kooij, G.L., Botchev, M.A., Geurts, B.J.: A block Krylov subspace implementation of the time-parallel Paraexp method and its extension for nonlinear partial differential equations. J. Comput. Appl. Math. 316(Supplement C), 229–246 (2017) https://doi.org/10.1016/j.cam.2016.09.036

Kooij, G., Botchev, M.A., Geurts, B.J.: An exponential time integrator for the incompressible Navier–Stokes equation. SIAM J. Sci. Comput. 40(3), B684–B705 (2018) https://doi.org/10.1137/17M1121950

Lanser, D., Verwer, J.G.: Analysis of operator splitting for advection–diffusion–reaction problems from air pollution modelling. J. Comput. Appl. Math. 111(1–2), 201–216 (1999)

Li, S.J.: Efficient p-multigrid method based on an exponential time discretization for compressible steady flows (2018). arXiv preprint 1807.01151. https://arxiv.org/abs/1807.01151

Li, S.J.: Time advancement of the Navier-Stokes equations: p-adaptive exponential methods. J. Flow Control Measurement Vis. 8(2), 63–76 (2020) https://doi.org/10.4236/jfcmv.2020.82004

Li, S.J., Luo, L.S., Wang, Z.J., Ju, L.: An exponential time-integrator scheme for steady and unsteady inviscid flows. J. Comput. Phys. 365, 206–225 (2018) https://doi.org/10.1016/j.jcp.2018.03.020

Lie, K.A., Mykkeltvedt, T.S., Møyner, O.: A fully implicit WENO scheme on stratigraphic and unstructured polyhedral grids. Comput. Geosci. 24(2), 405–423 (2020)

Park, T.J., Light, J.C.: Unitary quantum time evolution by iterative Lanczos reduction. J. Chem. Phys. 85, 5870–5876 (1986)

Saad, Y.: Analysis of some Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 29(1), 209–228 (1992)

Savcenco, V., Hundsdorfer, W., Verwer, J.G.: A multirate time stepping strategy for stiff ordinary differential equations. BIT Numer. Math. 47(1), 137–155 (2007)

Schlegel, M., Knoth, O., Arnold, M., Wolke, R.: Multirate Runge–Kutta schemes for advection equations. J. Comput. Appl. Math. 226(2), 345–357 (2009). https://doi.org/10.1016/j.cam.2008.08.009

Sidje, R.B.: Expokit. A software package for computing matrix exponentials. ACM Trans. Math. Softw. 24(1), 130–156 (1998) www.maths.uq.edu.au/expokit/

Silvester, D.J., Elman, H.C., Ramage, A.: Incompressible flow & iterative solver software (2019). http://www.manchester.ac.uk/ifiss/

Sommeijer, B.P., van der Houwen, P.J., Verwer, J.G.: On the treatment of time-dependent boundary conditions in splitting methods for parabolic differential equations. J. Numer. Methods Engrg. 17(3), 335–346 (1981). https://doi.org/10.1002/nme.1620170304

van der Vorst, H.A.: An iterative solution method for solving f(A)x = b, using Krylov subspace information obtained for the symmetric positive definite matrix A. J. Comput. Appl. Math. 18, 249–263 (1987)

van der Vorst, H.A.: Iterative Krylov Methods for Large Linear Systems. Cambridge University Press, Cambridge (2003)

Verwer, J.G., Spee, E.J., Blom, J.G., Hundsdorfer, W.: A second order Rosenbrock method applied to photochemical dispersion problems. SIAM J. Sci. Comput. 20, 456–480 (1999)

Verwer, J.G., Hundsdorfer, W., Blom, J.G.: Numerical time integration for air pollution models. Surv. Math. Ind. 10, 107–174 (2002) https://ir.cwi.nl/pub/4620

Zlatev, Z.: Computer Treatment of Large Air Pollution Models. Kluwer Academic Publishers, New York (1995)

Zlatev, Z., Dimov, I., Faragó, I., Havasi, Á.: Richardson Extrapolation: Practical Aspects and Applications. De Gruyter, Berlin (2018)

Acknowledgement

This work is supported by the Russian Science Foundation under grant No. 19-11-00338.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Botchev, M.A. (2021). Exponential Time Integrators for Unsteady Advection–Diffusion Problems on Refined Meshes. In: Garanzha, V.A., Kamenski, L., Si, H. (eds) Numerical Geometry, Grid Generation and Scientific Computing. Lecture Notes in Computational Science and Engineering, vol 143. Springer, Cham. https://doi.org/10.1007/978-3-030-76798-3_25

Download citation

DOI: https://doi.org/10.1007/978-3-030-76798-3_25

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-76797-6

Online ISBN: 978-3-030-76798-3

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)