Abstract

In many queuing systems, the inter-arrival time and service distributions are dependent. In this paper we analyze such a system where the dependence is through a semi-Markov process. For this we assume that the arrival and service processes evolve in a finite number of phases/stages according to a Markov chain. So, the product space of the two finite sets of states (phases) is considered. The nature of transitions in the states of the combined process are such that transition rates at which the states of the combined process changes depend on the phase in which each ‘marginal’ process is currently in and (the phases of) the state to be visited next. We derive the stability condition and the effect of the interdependence on the stability of the system is brought out. A numerical investigation of the steady state characteristics of the system is also carried out.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In queueing theory literature, we notice that the problems investigated at the beginning (from the time Erlang analyzed problems in telephone systems by modeling that as a queueing problem) were considering arrival and service process to be mutually independent of each other and further inter-arrival and service times were also independent. With the introduction of the Markovian arrival/service process (MAP/MSP), the evolution within arrival/service could be modeled as dependent-two consecutive inter-arrival time/service duration are dependent. These were then extended to batch Markovian arrival and batch Markovian service processes. For a comprehensive review of such work done up to the beginning of this century, see Chakravarthy [1]. Achyutha Krishnamoorthy and Anu Nuthan Joshua extended this to consider a BMAP/BMSP/1 queue with Markov chain dependence of two successive arrival batch size and similarly that between successive service batches [2].

The above-indicated dependence is within each component process of a queuing problem. Naturally one will be curious to know the behavior of service systems when the two-component processes are correlated; this could be between the time between the n\( ^{th} \) and the \((n+1)^{th} \) customers and the service time of the \((n+1)^{th} \) customer. There are several ways of introducing interdependence of the two processes. First, we give below a brief survey.

Conolly [3], Conolly and Hadidi [4] and Cidon et al. [5] assume that s\( _n \), the service time of the n\( ^{th} \) arrival depends on the inter-arrival time between n-1 and n\( ^{th} \) customer. Cidon et al. [6] assume that an inter-arrival time depends on the previous service time. Conolly and Choo [7], Hadidi [8], Hadidi [9] study queueing models where the service time \(s_n\) is correlated to the inter-arrival time \(a_n\) through some density such as the bivariate exponential. Iyer and Manjunath [10] study a generalization of these models. Fendick et al. [11] study the effect of various dependencies between arrival and service processes in packet communication network queues. Combe and Boxma [12] describe how Batch Markovian Arrival Process (BMAP) can be used to model correlated inter-arrival and service times. Boxma and Perry [13] study fluid production and inventory models with dependence between service and subsequent inter-arrival time. Adan and Kulkarni [14] consider a generalization of the MAP/G/1 queue by assuming a correlation between inter-arrival and service times. They assume that the inter-arrival and service times are regulated by an irreducible discrete-time Markov chain. They derive the Laplace-Stiltjes transforms of the steady-state waiting time and the queue length distribution. Valsiou et al. [15] consider a multi-station alternating queue where preparation and service times are correlated. They consider two cases: in the first case, the correlation is determined by a discrete-time Markov chain as in Adan and Kulkarni [14] and in the second case the service time depend on the previous preparation time through their joint Laplace transform. A generalization of the G/G/1 queue with dependence between inter-arrival and service times is studied in Badila et al. [16]. In none of the above models the matrix analytic methods developed by Neuts [17,18,19] are used. Nor the semi-Markov approach was employed for investigation of the problem.

Sengupta [20] analyses a semi-Markovian queue with correlated inter-arrival and service times using the techniques developed in Sengupta [21]. Sengupta shows that the distributions of waiting time, time in system and virtual waiting time are matrix exponential, having phase-type representations. However, a matrix-geometric solution for the number of customers is obtained only when the inter-arrival and service times are independent. Lambert et al. [22] employ matrix-analytic methods to a queueing model where the service time of a customer depends on the inter-arrival time between himself and the previous customer. They perform the analysis, without a state-space explosion by keeping track of the age of customer in service. However, this assumption brought infinitely many parameters in to their model. Lambert et al. do not discuss the estimation part of the parameters. van Houdt [23] generalizes the above paper by presenting a queueing model where the inter-arrival times and service time are correlated, which can be analyzed as an MMAP[K]/PH[K]/1 queue for which matrix-geometric solution algorithms are available. Buchholz and Kriege [24] study a special case of van Houdt, where the dependence between inter-arrival and service times is brought in by relating phases of the arrival process with the service time distribution. More importantly, they present methods for estimating the parameters of the model.

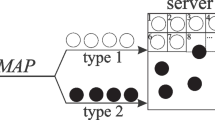

Next, we give a slightly detailed report on Bhaskar Sengupta (Stochastic Models, 1990) [20] and B. van Houdt (Performance Evaluation, 2012) [23]. Sengupta and van Houdt have something in common in that the latter starts from the former to fill some gaps in it. Both of them delve on specific queues-the inter-arrival and service time distributions are specified. So a brief presentation of the former is sufficient. Sengupta considers the inter-arrival time sequence \(\{a_n\}\) and the sequence \(\{s_n\}\) duration of successive service times. Together with these, the sequence of phases where service started for successive customers and the sequence of phases from which service of customers got completed, are also taken into account. For the \(k^{th}\) customer, while taken for service, the information, \(a_1, \cdots , a_{k-1}; s_1,\cdots ,s_{k-1} \) together with the service commencement phases of the first \(k-1\) customers and the phases from which service completion took place for the first \(k-1\) customers, are taken into consideration. Thus, too much history is required to study evolution. On closely examining the papers in the above review, we notice that the queueing models described therein could be expressed in forms like “G/G/1 queue with interdependence of arrival and service processes”. In this paper, we approach the interdependence through a semi-Markov approach. For this, we need to model arrival and service processes to evolve in stages/phases. An event occurrence means either an arrival or a service completion (temporarily absorbing state). This means that we have to have finite state space first-order Markov chains to describe the evolution of the arrival and service processes. Each one has an initial probability vector and a one-step transition probability matrix. Now we take the product space of these two Markov chains (as are essential for a Markov chain). So, elements in this product space are two-dimensional objects with the first one representing the stage of current arrival and the second represents the stage in which the customer is served at present, provided there is a customer undergoing service. We use the symbol {(X\( _n \) \(\times \) Y\( _n \)), n \( \geqq \) 1} to describe the Markov chain on the product space. The two Markov chains are independent if \(P\{(X_{n+1},Y_{n+1})|(X_n,Y_n)\} = P(X_{n+1}|X_n).P(Y_{n+1}|Y_n);\) if this equality does not hold, then the component Markov chains are interdependent. We assume that the two Markov chains to be interdependent. Transitions in this Markov chain take place according to a semi-Markov process: the sojourn time in each state (a pair) depends on the state in which it is in and the state to be visited next. On occurrence of an event, for example an arrival, we sample out the initial state from the initial probability vector of the Markov chain describing the arrival, to start the next arrival; similar explanation for the service completion and start of the next service, provided there is a customer waiting; else the server waits till the arrival of the next customer and sample out the stage to start service, from the initial probability vector of the Markov chain describing the service process.

Though we discuss a problem arising in the service system, the procedure we employ here can be applied to any branch of study where at least two processes are involved. Further, the interdependence can be group wise; those within a group are interdependent, but not between two distinct groups. Thus, the method could be applied to a wide range of study, from Economics to Medicine, Management, Commerce and even literature.

2 Interdependent Processes

We consider a queue in which arrival and service processes are related through a semi-Markov process. To this end, we assume that arrival and service of customers are in phases, as in a phase-type or MAP/MSP. There assume that we have two distinct finite state space Markov chains describing the transitions in the arrival and service processes. Each has one absorbing state-the one for the arrival process represents the occurrence of an arrival and that for the service process indicates a service completion.Assume that for the arrival process the state space of the Markov chain is \(\{1,2,\cdots ,m,m+1\}\) and that for the service the state space is \(\{1,2,\cdots ,n,n+1\}\) such that \(m+1\) and \(n+1\) are the respective absorbing states. Now consider the product space of these two sets: \(\{(i, j) \mid 1 \le i\le m + 1; 1\le j\le n + 1\}\) and the Markov chain on this product space as follows: Suppose that it is in state (i, j). After staying in this state for an exponentially distributed amount of time, it moves to state (i\( ^\prime \), j) or (i, j\( ^\prime \)) or stays in that state itself. The sojourn time in (i, j) depends on both (i, j) and the state to be visited next. A transition with change in first coordinate represents arrival phase change with an arrival (jumping to \(m+1\)) or without an arrival (resulting in one of \(1,2,\cdots ,m\), other than the one in which already in); similarly a transition with change in the service coordinate represents a service completion (jumping to \(n+1\)) or without a service completion (resulting in one of the states \(1,2,\cdots ,n\), other than the one in which already in). Note that there cannot be a transition in a short interval of time \((t,t+h)\)) with positive probability, when both the coordinates change. Further note that the two Markov chains are independent if and only if

In our case, this relation does not hold.

The idea of interdependence among random processes, evolving continuously/discrete in time, in the manner described above, was introduced by Krishnamoorthy through a series of webinars: SMARTY (Karelian Republic, August 2020); DCCN (Trapeznikov Institute of Control Sciences, Moscow, September 2020); Professor C.R. Rao Birth Centenary Talk (SreeVenkateswara University, Tirupati, September 2020), and a few others.

3 Dependence by a Row Vector

In the present study, we take two Markov Chains \(\{X_i\}\) and \(\{Y_i\}\) each with finite state spaces \(\{1,2,\cdots ,m,m+1\}\) and \(\{1,2,\cdots ,n,n+1\}\) and initial probability vectors \(\alpha \) and \(\beta \) where states \(m+1\) and \(n+1\) are absorbing states. We assume that the state transition probabilities of the chain \(\{Y_i\}\) depend on the state in which the chain \(\{X_i\}\) is. We also assume that the dependence of \(\{Y_i\}\) on \(\{X_i\}\) is such that the rates at which the chain \(\{Y_i\}\) changes its states given that the chain \(\{X_i\}\) is in state k is \(p_k S\), where S is an \((n+1)\times (n+1)\) matrix, \(k=1,2,\cdots ,m\).

Now consider the product \(X=\{X_i\times Y_i\}\) of these chains with state space \(\{(i,j)|1 \le i \le m+1, 1 \le j \le n+1, i \ne m+1,\, j = n+1,\, j \ne n+1,\, i = m + 1\}\) with the Markovian property \(P \{(X_{k+1}, Y_{k+1}) = (i^\prime , j^\prime )|(X_{k},Y_{k}) = (i, j)\), \((X_{k-1}, Y_{k-1}) = (i_1, j_1 ),\cdots , (X_0, Y_0) = (i_k, j_k)\} = P\{(X_{k+1}, Y_{k+1}) = (i^\prime , j^\prime )|\) \((X_k, Y_k) = (i, j)\} = r_{(i,j),(i^\prime ,j^\prime )}\).

Thus, \(X\times Y\) is a Markov chain with temporary/instantaneous absorbing states \(\{(i, n + 1)|i = 1,\cdots ,m\}\) and \(\{(m + 1, j)|j = 1,\cdots ,n\}\). This chain induces a birth death process. This birth death process can be regarded as a Ph/Ph/1 queue in which the state transition times of the arrival process and that of the service process are dependent. With these assumptions, we have the following transition probabilities for the product chain:

-

1.

\(P\left\{ (X\times Y)(t+\triangle t)=(i,j)/(X\times Y)(t)=(i,k) \right\} =p_i S_{kj}\triangle t, 1 \le i \le m, 1 \le j, k\le n,j \ne k\), without Type 1 or Type 2 event occurrences;

-

2.

\(P\left\{ (X\times Y)(t+\triangle t)=(l,j)/(X\times Y)(t)=(i,j) \right\} = T_{il}\triangle t, 1\le l, i \le m, 1\le j \le n, l\ne i\), without Type 1 or Type 2 event occurrences;

-

3.

\(P\left\{ (X\times Y)(t+\triangle t)=(i,j)/(X\times Y)(t)=(i,k) \right\} =p_i S_{k,n+1} \triangle t \beta _j, 1 \le i \le m, 1\le j, k \le n\), with a Type 2 event occurrences;

-

4.

\(P\left\{ (X\times Y)(t+\triangle t)=(l,j)/(X\times Y)(t)=(i,j) \right\} =T_{k,m+1} \triangle t \alpha _l, 1 \le i, l\le m, 1\le j\le n\), with a Type 1 event occurrences;

where \(T = [T_{ij}]\) is the state transition rates of the chain \(\{X_i\}\). The interdependent process will have representation

where \(J_m = diag(p_1,p_2,\cdot ,p_m)\).

A study of the above described system is motivated by the health care models, telecommunication models etc., where the essential service has to be given to more customers within the stipulated time if the demand is high. For example, if the arrival of patients to a health care system is very high so that for giving service to all without much delay, the treatment protocol may be changed so that all the patients may get treatments required. The present scenario arising out of the COVID-19 outbreak proposes such a model. In the initial stages of the pandemic the number of patients was so small that the health care system could handle the situation easily. They are discharged from the hospitals only after three consecutive negative test results! But in the later stages when the disease is widely spread, the hospitals became crowded and the treatment protocol had to be changed so that many lives could be saved. To manage the congestion, the patients were discharged from the hospitals at a higher rate.

4 Mathematical Model

Consider a service facility where the arrival and service processes are interdependent. The assumption of dependence is motivated by the following present day scenario. In the early stages of the covid-19 outbreak, the health care system in our country took at most care in the treatment of the patients, they were kept in isolation and were sent home only after several tests. But as the number of cases increased the system had to accommodate more and more patients and so the way of treatment was changed. Here the treatment given (service) depends on the number of patients (arrival). To model such a system we use above discussed QBD as follows:

At time t, let N(t) be the number of customers in the system including the one being served, \(S_1(t)\) be phase of arrival and \(S_2(t)\) be the phase of service. Then

will be a Markov chain with state space

\(E = \{(0, \textit{k})|1 \le \textit{k} \le \textit{m}\} \cup \{(\textit{i, k, l})|\textit{i} \ge 1, 1 \le \textit{k} \le \textit{m}, 1 \le l \le \textit{n}\}\).

The state space of the Markov chain can be partitioned into levels \(\tilde{i}\) defined as

In the following sequel, e denotes a column matrix of 1’s of appropriate order. The infinitesimal matrix of the chain is

where

and

5 Stability Analysis

Let \(A = A_0+ A_1+ A_2\)

\(= T^0\otimes \beta \otimes I_n + T\otimes I_n + J_m \otimes S + J_m \otimes S^0 \otimes \alpha \)

\(= (T^0 \otimes \beta + T) \otimes I_n + J_m \otimes (S + S^0 \otimes \alpha )\).

If \(\pi \) and \(\theta \) are the stationary probability vectors of T\( ^0 \) \( \otimes \) \(\beta \) + T and S + S\( ^0 \) \( \otimes \) \(\alpha \), respectively, then

\((\pi \otimes \theta )\textit{A} = (\pi \otimes \theta )(\textit{T}^0 \otimes \beta + \textit{T} ) \otimes \textit{I}_n + (\pi \otimes \theta )\textit{J}_m \otimes (\textit{S} + \textit{S}^{0} \otimes \alpha )\)

\(= \pi (\textit{T}^0 \otimes \beta + \textit{T} ) \otimes \theta + \pi J_m \otimes \theta (\textit{S} + \textit{S}^0\alpha ) = 0\).

Hence, \((\pi \otimes \theta )\) is the stationary probability vector of A.

Now \((\pi \otimes \theta )\textit{A}_0 e = \pi \textit{T}^0\) and \((\pi \otimes \theta )A_2 e = (\pi \textit{P}^\prime )(\theta S^0)\), where \(\textit{P} = [p_1,p_2,\cdots ,p_n]\).

Thus, the stability condition reduces to \(\pi \)T\( ^0 \) < (\(\pi \)P\( ^\prime \)) (\(\theta \)S\( ^0 \)). Hence, we have the following theorem.

Theorem 1

The Markov chain under consideration is stable if and only if \( \frac{\lambda ^\prime }{\mu ^\prime } \) \( < \) \( \pi P^\prime \) where \(\lambda ^\prime = \pi \textit{T}^0 \) and \(\mu ^\prime = \theta \textit{S}^0 \).

6 Steady State Analysis

The stationary probability vector z of Q is of the form \((z_0, z_1, z_1 R, z_1 R^2,\dots )\), where R is the minimal solution of the matrix quadratic equation

The stationary probability vector is obtained by solving the equations:

Hence, \(z_1\) can be determined up to a multiplicative constant using the equation

7 Performance Measures

The performance of the system at stationary can be analysed using the stationary probability vector (z\( _0 \), z\( _1 \), z\( _1 \)R, z\( _1 \)R\( ^2 \), ...). \(z_n e\) gives the probability that there are n customers in the system. Other measures like a system idle probability, expected queue length etc. follows from this. Since, our focus is to adjust the service process to control the system size even when the arrival rate is high, any information about the queue length is important.

8 Numerical Illustration

Numerical experiments are carried out to examine how the vector P influences the queue length. We chose the following values for the parameters.

In this example, the arrival of customers has two phases and the rate at which the phase of the service changes depends on which phase the arrival is in. The correlation between these two is determined by the vector P. The components of P represent the degree of dependence of the transition rates of the service with the phases of arrival. Notice that in the pattern of arrivals considered, occurrence of the event corresponding to the arrival of a customer happens in a higher rate from its second state than from the first. We found the effective service rate, ESR \(=\mu ^\prime \). \(\pi \textit{P}^\prime \), expected size E(C) of the system, the server idle probability P(I) and the probability \(P(N>K)\) that the size of the system is enormously large (here we considered the case N is greater than 10) for various choices of P.

Table 1 shows the variation in these parameters as p\( _1 \) increases while p\( _2 \) is kept at 1. As it can be seen from the values of ESR, the service rate increases with p\( _1 \). Consequently E(C) and \(P(N > K)\) decrease and P(I) increases. This is a result of increase in the transitions rates of the service while the arrival is in the first phase. Figure 1 depicts this increment. The fact that this dependence was linear resulted in a straight line graph. As the effective service rate increases, the system idle probability P(I) also increases for obvious reason. From Fig. 2, it can be seen that P(I) increases faster for small values of p\( _1 \) and slows down as P(I) becomes high. The expected number of customers E(C) decreases as p\( _1 \) takes higher values. Figure 3 illustrates this exponential decay. The probability of a highly populated system which is of much interest when we study healthcare models shows a similar behavior as E(C). This can be seen from Fig. 4.

Table 2 helps to compare these system characteristics when \(p_1=1\) and \(p_2\) changes. In our example, the dependence of the transition rates of service with the second phase of arrival is determined by p\( _2 \). As the arrival of customers to the system is high in this phase, p\( _2 \) has more effect on the system characteristics than \(p_1\). Hence, the figures corresponding to p\( _2 \) (Figs. 5, 6, 7 and 8) have the same nature of the respective figures with respect to changes in p\( _1 \) but with a greater slope.

This study illustrates how the interdependence of arrival and service processes affects the system characteristics. The system behaves according to the nature of this dependence. Thus, by making P as a control variable we can modify the system characteristics to an optimum level.

9 Conclusion

In this study, we analysed a system in which the service process is dependent on the arrival process. A control over this dependency may contribute towards the system stability. In the model illustrated in the previous section, the vector P determines the degree of dependency between the two process. Thus, the numerical analysis reveals that choosing optimal values for P is possible so that the system runs efficiently. In our future work, we intent to find the service time distribution as well as the waiting time distribution of the discussed model.

References

Chakravarthy, S. R.: The batch Markovian arrival process: a review and future work. In: Advances in Probability and Stochastic Processes, pp. 21–49 (2001)

Krishnamoorthy A., Joshua A.N.: A BMAP/BMSP/1 queue with Markov dependent arrival and Markov dependent service batches. J. Ind. Manage. Optim. 3(5) (2020). https://doi.org/10.3934/jimo.2020101

Conolly, B.: The waiting time for a certain correlated queue. Oper. Res. 15, 1006–1015 (1968)

Conolly, B., Hadidi, N.: A correlated queue. Appl. Prob. 6, 122–136 (1969)

Cidon, I., Guerin, R., Khamisy, A., Sidi, M.: On queues with inter-arrival times proportional to service times. Technical repurt. EE PUB, 811, Technion (1991)

Cidon, I., Guerin, R., Khamisy, A., Sidi, M.: Analysis of a correlated queue in communication systems. Technical report. EE PUB, 812, Technion (1991)

Conolly, B., Choo, Q.H.: The waiting process for a generalized correlated queue with exponential demand and service. SIAM J. Appl. Math. 37, 263–275 (1979)

Hadidi, N.: Queues with partial correlation. SIAM J. Appl. Math. 40, 467–475 (1981)

Hadidi, N.: Further results on queues with partial correlation. Oper. Res. 33, 203–209 (1985)

Srikanth, K.I., Manjunath, D.: Queues with dependency between interarrival and service times using mixtures of bivariates. Stoch. Models 22(1), 3–20 (2006)

Fendick, K.W., Saksena, V.R., Whitt, W.: Dependence in packet queues. IEEE Trans. Commun. 37(11), 1173–1183 (1989)

Combe, M.B., Boxma, O.J.: BMAP modeling of a correlated queue. In: Walrand, J., Bagchi, K., Zobrist, G.W. (eds.) Network Performance Modeling and Simulation, pp. 177–196. Gordon and Breach Science Publishers, Philadelphia (1999)

Boxma, O.J., Perry, D.: A queueing model with dependence between service and interarrival time. Eur. J. Oper. Res. 128(13), 611–624 (2001)

Adan, I.J.B.F., Kulkarni, V.G.: Single-server queue with Markov-dependent inter-arrival and service times. Queueing Syst. 45(2), 113–134 (2003)

Valsiou, M., Adan, I.J.B.F., Boxma, O.J.: A two-station queue with dependent preparation and service times. Eur. J. Oper. Res. 195(1), 104–116 (2009)

Badila, E.S., Boxma, O.J., Resing, J.A.C.: Queues and risk processes with dependencies. Stoch. Models. 30(3), 390–419 (2014)

Neuts, M.F.: Markov chains with applications to queueing theory, which have a matrix-geometric invariant probability vector. Adv. Appl. Prob. 10, 125–212 (1978)

Neuts, M.F.: Matrix Geometric Solutions for Stochastic Models. John Hopkins University Press, Baltimore (1981)

Neuts, M.F., Pagano, M.E.: Generating random variates from a distribution of phase type. In: Oren, T.I., Delfosse, C.M., Shub, C.M. (eds.) Winter Simulation Conference, pp. 381–387. IEEE (1981)

Sengupta, B.: The semi-Markovian queue: theory and applications. Stoch. Models 6(3), 383–413 (1990)

Sengupta, B.: Markov processes whose steady state distribution is matrix exponential with an application to the GI/PH/1 queue. Adv. Appl. Prob. 21, 159–180 (1989)

Lambert, J., van Houdt, B., Blondia, C.: Queue with correlated service and inter-arrival times and its application to optical buffers. Stoch. Models 22(2), 233–251 (2006)

van Houdt, B.: A matrix geometric representation for the queue length distribution of multitype semi-Markovian queues. Perform. Eval. 69(7–8), 299–314 (2012)

Buchholz, P., Kriege, J.: Fitting correlated arrival and service times and related queueing performance. Queueing Syst. 85, 337–359 (2017)

Asmussen, S., Nerman, O., Olsson, M.: Fitting phase-type distributions via the EM algorithm. Scand. J. Stat. 23(4), 419–441 (1996)

Breuer, L.: An EM algorithm for batch Markovian arrival processes and its comparison to a simpler estimation procedure. Ann. Oper. Res. 112, 123–138 (2002). https://doi.org/10.1023/A:1020981005544

Horváth, G., Okamura, H.: A fast EM algorithm for fitting marked Markovian arrival processes with a new special structure. In: Balsamo, M.S., Knottenbelt, W.J., Marin, A. (eds.) EPEW 2013. LNCS, vol. 8168, pp. 119–133. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40725-3_10

Ozawa, T.: Analysis of queues with Markovian service process. Stoch. Models. 20(4), 391–413 (2004)

Whitt, W., You, W.: Using Robust queueing to expose the impact of dependence in single-server queues. Oper. Res. 66(1), 184–199 (2017)

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Ranjith, K.R., Krishnamoorthy, A., Gopakumar, B., Nair, S.S. (2021). Analysis of a Single Server Queue with Interdependence of Arrival and Service Processes – A Semi-Markov Approach. In: Dudin, A., Nazarov, A., Moiseev, A. (eds) Information Technologies and Mathematical Modelling. Queueing Theory and Applications. ITMM 2020. Communications in Computer and Information Science, vol 1391. Springer, Cham. https://doi.org/10.1007/978-3-030-72247-0_31

Download citation

DOI: https://doi.org/10.1007/978-3-030-72247-0_31

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-72246-3

Online ISBN: 978-3-030-72247-0

eBook Packages: Computer ScienceComputer Science (R0)