Abstract

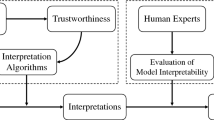

Research on the interpretability of deep learning is closely related to engineering, machine learning, mathematics, cognitive psychology, and other disciplines. It has important theoretical significance and practical value in many fields, such as information recommendation, medical research, autonomous driving, and information security. Past research has made some progress on the black-box problem of deep learning, but a variety of challenges remain. This paper therefore first summarizes the history of, and related work on, deep learning interpretability research. It then introduces the current state of research from three aspects: visual analysis, robust perturbation analysis, and sensitivity analysis. Next, it reviews research on constructing interpretable deep learning models from four aspects: surrogate models, logical reasoning, network node association analysis, and traditional machine learning models. It also analyzes and discusses the shortcomings of existing methods. Finally, it lists typical applications of interpretable deep learning, outlines possible future research directions in this field, and offers corresponding suggestions.

Acknowledgement

This research is supported by the National Natural Science Foundation of China (61972183, 61672268) and the National Engineering Laboratory Director Foundation of Big Data Application for Social Security Risk Perception and Prevention.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Cheng, K., Wang, N., Li, M. (2021). Interpretability of Deep Learning: A Survey. In: Meng, H., Lei, T., Li, M., Li, K., Xiong, N., Wang, L. (eds) Advances in Natural Computation, Fuzzy Systems and Knowledge Discovery. ICNC-FSKD 2020. Lecture Notes on Data Engineering and Communications Technologies, vol 88. Springer, Cham. https://doi.org/10.1007/978-3-030-70665-4_54

DOI: https://doi.org/10.1007/978-3-030-70665-4_54

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-70664-7

Online ISBN: 978-3-030-70665-4

eBook Packages: Intelligent Technologies and Robotics (R0)