Abstract

A blind subcarrier recognition algorithm for satellite TT&C (telemetry, tracking and command) signals is proposed based on JTFA (joint time-frequency analysis) and the Fast-ICA algorithm. In this method, time-frequency analysis is used to extract features of the satellite signals, and Fast-ICA is used to effectively enhance the SNR (signal-to-noise ratio). Time-frequency analysis is one of the best tools for analyzing non-stationary signals. It represents information in the joint time-frequency domain and shows clearly how the frequency changes over time. Before the time-frequency analysis of the satellite signal, noise must first be removed. This paper presents a method for satellite TT&C signal recognition. The analysis results show that the algorithm performs well and converges well in satellite TT&C signal extraction. A key characteristic of the algorithm is that it does not require any prior information about the signals to recognize satellite TT&C signals.

This work was funded by the Guangdong Province Pearl River Scholar Funded Scheme for Higher Vocational Colleges and Schools (2016).


1 Introduction

In modern military information warfare, satellite monitoring plays an important role because of the unique capabilities and advantages of satellites. At present, the unified S-band (USB) monitoring system is widely used in communication reconnaissance, so extracting multiple subcarriers from a monitored frequency band is a prominent problem. Subcarrier signals in satellite monitoring have unknown bandwidths and are mutually uncorrelated, and they are currently identified mainly by manual detection. However, manual detection is complex to operate, costly, and has a high false-detection rate. It therefore cannot meet the needs of satellite monitoring reconnaissance or adapt to the environment of information warfare.

Time-frequency analysis is a powerful signal processing tool for analyzing non-stationary signals. It represents a signal as a joint function of time and frequency, called the time-frequency distribution. Analyzing a signal through its time-frequency distribution gives the instantaneous frequency and amplitude at each moment. The distribution can also be used for time-frequency filtering and for the study of time-varying signals.

Joint time-frequency analysis maps a time-domain signal s(t) onto the time-frequency plane (phase plane), so that the local spectral characteristics of the signal at a given time can be analyzed. This overcomes the inability of the traditional Fourier transform to describe the local characteristics of signals. The method has therefore been widely and successfully used in signal and image analysis, seismic signal processing, speech analysis and synthesis, nondestructive testing, and other non-stationary signal processing tasks.

The advantage of independent component analysis (ICA) is that it does not need to know the parameters of the instantaneous mixing process. It needs only weak statistical assumptions about the sources (mutual independence and non-Gaussian distributions) to recover the source signals from the observed signals. No further prior information about the signals is required for extraction.

In signal detection, ICA can effectively separate signals whose spectra overlap. In this paper, a Fast-ICA algorithm based on negentropy maximization is combined with time-frequency analysis and applied to satellite signal recognition. The analysis shows that the approach is promising for military communications.

1.1 Independent Component Analysis

ICA assumes that the source signals are mutually independent. Under this premise, even when both the source signals and the mixing matrix are unknown, the algorithm finds a linear transformation (demixing) matrix that makes the output variables mutually independent, so that they approximate the source signals.

In this paper, a Fast-ICA algorithm based on negentropy maximization, combined with time-frequency analysis, is adopted. The algorithm is introduced step by step below.

1.2 Algorithm Theory

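The central limit theorem states the following. Let \(X_{i}\ (i = 1,2, \cdots)\) be independent and identically distributed with mean \(\mu\) and variance \(\sigma^{2}\). Then the standardized sum \(Y_{n} = \left( \sum\limits_{i = 1}^{n} X_{i} - n\mu \right)/(\sqrt{n}\,\sigma)\ (n = 1,2, \cdots)\) converges in distribution to \(N(0,1)\) as \(n \to \infty\). Consequently, a mixture of independent signals tends to be closer to Gaussian than the individual sources. The sources can therefore be recovered by finding projections of the observations that are as non-Gaussian as possible.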
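The central limit theorem consequence that ICA relies on can be checked numerically. The following Python sketch assumes NumPy. It uses uniform sources and mixing coefficients that are arbitrary illustrative choices, not data from this paper. It compares the excess kurtosis (a simple non-Gaussianity measure, equal to 0 for a Gaussian) of one source with that of a linear mixture.

```python
import numpy as np

def excess_kurtosis(x):
    """Sample excess kurtosis E[z^4] - 3 of the standardized data z (0 for a Gaussian)."""
    z = (x - x.mean()) / x.std()
    return float(np.mean(z**4) - 3.0)

rng = np.random.default_rng(0)
n = 200_000
# Two independent uniform sources (illustrative); a uniform variable has excess kurtosis -1.2.
s1 = rng.uniform(-1.0, 1.0, n)
s2 = rng.uniform(-1.0, 1.0, n)
# An arbitrary linear mixture, as in the ICA observation model.
mix = 0.6 * s1 + 0.8 * s2

k_src = excess_kurtosis(s1)
k_mix = excess_kurtosis(mix)
print(f"source kurtosis {k_src:.3f}, mixture kurtosis {k_mix:.3f}")
```

For these weights, the mixture's excess kurtosis is about -0.65, roughly half the source value. This is the "more Gaussian" behavior that negentropy maximization exploits.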

Before applying this separation and extraction algorithm to satellite monitoring signals, we make the following assumptions:

(a) The influence of noise is not considered;

(b) Satellite signals are typical stationary, mutually independent random signals.

(1) Preprocessing

Firstly, the signal is preprocessed by centering and whitening. Centering subtracts the mean of x from it, so that x becomes a zero-mean vector. Whitening applies a linear transformation Q to the observation vector. After this transformation the components are uncorrelated and have a unit covariance matrix, i.e., \(E\{\mathbf{v}\mathbf{v}^{T}\} = \mathbf{I}\). Whitening can be realized with a PCA network: \(\mathbf{v} = \mathbf{Q}\mathbf{x} = \mathbf{D}^{-1/2}\mathbf{U}^{T}\mathbf{x}\). Here \(\mathbf{D} = \mathrm{diag}(d_{1}, \cdots, d_{n})\) is the diagonal matrix of the \(n\) largest eigenvalues of the correlation matrix \(\mathbf{R}_{\mathbf{x}} = E\{\mathbf{x}\mathbf{x}^{T}\}\), and \(\mathbf{U} \in C^{m \times n}\) is the matrix of the corresponding eigenvectors.
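The centering and PCA whitening step can be sketched in Python as follows. This is a batch eigendecomposition rather than the adaptive PCA network mentioned above. NumPy is assumed, and the sample mean and sample correlation stand in for the expectations. The function name `whiten`, the mixing matrix, and the uniform test sources are hypothetical.

```python
import numpy as np

def whiten(x, n=None):
    """PCA whitening v = D^{-1/2} U^T x_c of an (m, T) observation array.

    x_c is x with each row's mean removed (centering). D holds the n largest
    eigenvalues of the sample correlation matrix R_x = E{x x^T}, and U holds
    the corresponding eigenvectors. Returns (v, Q) with v = Q x_c.
    """
    x_c = x - x.mean(axis=1, keepdims=True)          # centering
    r_x = x_c @ x_c.T / x_c.shape[1]                 # sample estimate of E{x x^T}
    d, u = np.linalg.eigh(r_x)                       # eigenvalues in ascending order
    order = np.argsort(d)[::-1][: (n or len(d))]     # indices of the n largest
    q = np.diag(d[order] ** -0.5) @ u[:, order].T    # Q = D^{-1/2} U^T
    return q @ x_c, q

# Usage with hypothetical data: two uniform sources mixed by an arbitrary matrix A.
rng = np.random.default_rng(0)
s = rng.uniform(-1.0, 1.0, size=(2, 10_000))
A = np.array([[1.0, 0.5], [0.3, 2.0]])
v, Q = whiten(A @ s)
cov_v = v @ v.T / v.shape[1]    # should be the identity matrix
```

Passing `n` smaller than the number of sensors keeps only the dominant eigen-directions, which also reduces dimension.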

(2) ICA Algorithm Based on the Maximization of Negentropy

In information theory, the entropy of a Gaussian variable is the largest among all random variables with the same variance. This property can be used to measure the non-Gaussianity of a variable through negentropy, a modified form of entropy. Let \(\mathbf{y}_{G}\) be a Gaussian random vector with the same mean and covariance matrix as \(\mathbf{y}\), and write \(H_{G}(\mathbf{y}) = H(\mathbf{y}_{G})\). The negentropy is then \(J(\mathbf{y}) = H_{G}(\mathbf{y}) - H(\mathbf{y})\), which is non-negative and equals zero only when \(\mathbf{y}\) is Gaussian.

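For uncorrelated outputs \(y_{i}\), the mutual information of the outputs, which measures their dependence, can be expressed in terms of negentropy: \(I(\mathbf{y}) = J(\mathbf{y}) - \sum\limits_{i = 1}^{n} J(y_{i})\). Since \(\mathbf{y} = \mathbf{W}\mathbf{x}\) implies \(H(\mathbf{y}) = H(\mathbf{x}) + \log\left|\det\mathbf{W}\right|\), the cost function of the negentropy-maximization criterion, which is to be minimized, is \(\Phi_{NM}(\mathbf{W}) = -\log\left|\det\mathbf{W}\right| - \sum\limits_{i = 1}^{n} J(y_{i}) + H_{G}(\mathbf{y}) - H(\mathbf{x})\).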
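The cost function simplifies when the observations have already been whitened (\(E\{\mathbf{x}\mathbf{x}^{T}\} = \mathbf{I}\)) and \(\mathbf{W}\) is restricted to orthogonal matrices. Under those two assumptions, a short worked derivation shows why maximizing the sum of the individual negentropies is enough:

```latex
\begin{aligned}
\mathbf{W}\mathbf{W}^{T} = \mathbf{I}
  &\;\Rightarrow\; \log\lvert\det\mathbf{W}\rvert = 0,
  \qquad E\{\mathbf{y}\mathbf{y}^{T}\} = \mathbf{W}E\{\mathbf{x}\mathbf{x}^{T}\}\mathbf{W}^{T} = \mathbf{I},\\
  &\;\Rightarrow\; H_{G}(\mathbf{y}) = \tfrac{n}{2}\log(2\pi e) \ \text{is constant, and } H(\mathbf{x}) \text{ does not depend on } \mathbf{W},\\
\Phi_{NM}(\mathbf{W}) &= -\sum_{i=1}^{n} J(y_{i}) + \text{const}
  \;\Rightarrow\; \min_{\mathbf{W}} \Phi_{NM}(\mathbf{W}) \iff \max_{\mathbf{W}} \sum_{i=1}^{n} J(y_{i}).
\end{aligned}
```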

Negentropy can thus be used to measure the independence of the separated signals. However, computing negentropy requires estimating the probability density function of the random variables, which is complex. The quality of the estimate depends on the chosen parameters, and the computational cost is high.

(3) Algorithm Steps

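To avoid density estimation, negentropy is approximated as \(J(y) \approx \sum\limits_{i = 1}^{p} k_{i} \{ E[G_{i}(y)] - E[G_{i}(\nu)] \}^{2}\). Here \(y\) is assumed to have zero mean and unit variance, \(\nu\) is a standard Gaussian variable, the \(k_{i}\) are positive constants, and the \(G_{i}\) are non-quadratic functions. Common choices are \(G(u) = \frac{1}{a}\log\cosh(au)\) with \(1 \le a \le 2\), and \(G(u) = -\exp(-u^{2}/2)\).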
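The one-term version of this approximation (\(p = 1\), \(k_{1} = 1\)) with \(G(u) = \log\cosh(u)\) can be sketched in Python. The sketch assumes NumPy and estimates \(E[G(\nu)]\) from a reference Gaussian sample rather than from a closed form. It compares a Gaussian sample with a Laplacian (super-Gaussian) and a uniform (sub-Gaussian) sample. The function name `negentropy_logcosh` is hypothetical.

```python
import numpy as np

def negentropy_logcosh(y, gauss_ref, a=1.0):
    """One-term negentropy approximation J(y) ~ {E[G(y)] - E[G(nu)]}^2,
    with G(u) = log(cosh(a*u)) / a and k_1 = 1 (an illustrative choice).

    y is standardized first; gauss_ref is a standard Gaussian sample used
    to estimate E[G(nu)].
    """
    G = lambda u: np.log(np.cosh(a * u)) / a
    z = (y - y.mean()) / y.std()                 # zero mean, unit variance
    return float((G(z).mean() - G(gauss_ref).mean()) ** 2)

rng = np.random.default_rng(1)
n = 200_000
nu = rng.standard_normal(n)                      # reference Gaussian sample
j_gauss = negentropy_logcosh(rng.standard_normal(n), nu)
j_laplace = negentropy_logcosh(rng.laplace(size=n), nu)
j_uniform = negentropy_logcosh(rng.uniform(-1.0, 1.0, n), nu)
print(j_gauss, j_laplace, j_uniform)
```

The Gaussian sample gives a value near zero, limited only by sampling noise. Both non-Gaussian samples give clearly positive values, whichever side of Gaussian their tails fall on.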

Consider a single unit with weight vector \(\mathbf{w}\) and whitened data \(\mathbf{x}\). The approximation is maximized by optimizing \(E[G(\mathbf{w}^{T}\mathbf{x})]\) under the constraint \(E[(\mathbf{w}^{T}\mathbf{x})^{2}] = \|\mathbf{w}\|^{2} = 1\). At the optimum, \(E[\mathbf{x}\,g(\mathbf{w}^{T}\mathbf{x})] - \beta\mathbf{w} = 0\), where \(g(x)\) is the derivative of \(G(x)\) and \(\beta\) is a constant.

This equation is solved with Newton's method. Approximating the Jacobian by \(E[g'(\mathbf{w}^{T}\mathbf{x})] - \beta\) (valid for whitened data) gives \(\mathbf{w}' = \mathbf{w} - \{E[\mathbf{x}\,g(\mathbf{w}^{T}\mathbf{x})] - \beta\mathbf{w}\}/\{E[g'(\mathbf{w}^{T}\mathbf{x})] - \beta\}\).

Multiply both sides by \(\beta - E[g'(\mathbf{w}^{T}\mathbf{x})]\) and let \(\mathbf{w}^{+} = \mathbf{w}'\{\beta - E[g'(\mathbf{w}^{T}\mathbf{x})]\}\). This gives \(\mathbf{w}^{+} = E[\mathbf{x}\,g(\mathbf{w}^{T}\mathbf{x})] - E[g'(\mathbf{w}^{T}\mathbf{x})]\mathbf{w}\).

Let \(\mathbf{w}^{*} = \mathbf{w}^{+}/\|\mathbf{w}^{+}\|\). If the result has not converged, the above steps are repeated until convergence. The algorithm can be summarized in five steps.

(1) Set \(n = 0\) and initialize the weight vector \(\mathbf{w}(0)\) with unit norm;

(2) Compute \(y(t) = \mathbf{w}^{T}(n)\mathbf{x}(t)\);

(3) Compute \(\mathbf{w}(n + 1) = E[\mathbf{x}\,g(\mathbf{w}^{T}(n)\mathbf{x})] - E[g'(\mathbf{w}^{T}(n)\mathbf{x})]\mathbf{w}(n)\), and decorrelate \(\mathbf{w}(n + 1)\) from the previously extracted vectors \(\mathbf{w}_{1}, \mathbf{w}_{2}, \cdots, \mathbf{w}_{p}\);

(4) Normalize \(\mathbf{w}(n + 1) = \mathbf{w}(n + 1)/\|\mathbf{w}(n + 1)\|\);

(5) If \(\|\mathbf{w}(n + 1) - \mathbf{w}(n)\| < \varepsilon\), the algorithm has converged. Since \(\mathbf{w}\) is determined only up to sign, \(\|\mathbf{w}(n + 1) + \mathbf{w}(n)\| < \varepsilon\) also indicates convergence. On convergence the algorithm yields one independent component \(y_{1} = \hat{s}_{1} = \mathbf{w}^{T}\mathbf{x}\); otherwise set \(n = n + 1\) and return to step (2).

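When the \((p+1)\)-th vector \(\mathbf{w}_{p+1}\) is computed, Gram-Schmidt decorrelation is applied so that the outputs \(\mathbf{w}_{1}^{T}\mathbf{x}, \mathbf{w}_{2}^{T}\mathbf{x}, \cdots, \mathbf{w}_{p+1}^{T}\mathbf{x}\) are decorrelated. After each iteration, \(\mathbf{w}_{p+1}(n+1)\) is updated by \(\mathbf{w}_{p+1} \leftarrow \mathbf{w}_{p+1} - \sum\limits_{j = 1}^{p} (\mathbf{w}_{p+1}^{T}\mathbf{w}_{j})\mathbf{w}_{j}\) and then renormalized, \(\mathbf{w}_{p+1} \leftarrow \mathbf{w}_{p+1}/\|\mathbf{w}_{p+1}\|\).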
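Steps (1) to (5), together with Gram-Schmidt deflation, can be sketched in Python as below. This is a minimal illustration, not the authors' implementation, and it makes several assumptions:

- NumPy is used, and the input has already been whitened.
- The nonlinearity is \(G(u) = \log\cosh(u)\), so \(g = \tanh\) and \(g' = 1 - \tanh^{2}\).
- The sine and square-wave sources and the mixing matrix are hypothetical stand-ins for satellite signals.
- `fastica_deflation` is a hypothetical name.

```python
import numpy as np

def fastica_deflation(v, n_components, eps=1e-8, max_iter=200, seed=0):
    """One-unit FastICA with Gram-Schmidt deflation on whitened data v of shape (m, T).

    Assumes G(u) = log cosh(u), so g(u) = tanh(u) and g'(u) = 1 - tanh(u)^2.
    Returns W whose rows are the unit-norm vectors w_1, ..., w_p.
    """
    rng = np.random.default_rng(seed)
    m = v.shape[0]
    W = np.zeros((n_components, m))
    for p in range(n_components):
        w = rng.standard_normal(m)
        w /= np.linalg.norm(w)                              # step (1): unit-norm w(0)
        for _ in range(max_iter):
            y = w @ v                                       # step (2): y(t) = w^T x(t)
            g, dg = np.tanh(y), 1.0 - np.tanh(y) ** 2
            w_new = (v * g).mean(axis=1) - dg.mean() * w    # step (3): fixed-point update
            w_new -= W[:p].T @ (W[:p] @ w_new)              # Gram-Schmidt against w_1..w_p
            w_new /= np.linalg.norm(w_new)                  # step (4): normalize
            # step (5): converged if w stopped changing, up to sign
            converged = min(np.linalg.norm(w_new - w), np.linalg.norm(w_new + w)) < eps
            w = w_new
            if converged:
                break
        W[p] = w
    return W

# Usage with hypothetical sources: a 7 Hz sine and a 3 Hz square wave, 5000 samples.
t = np.linspace(0.0, 1.0, 5000, endpoint=False)
s = np.vstack([np.sin(2 * np.pi * 7 * t), np.sign(np.sin(2 * np.pi * 3 * t))])
A = np.array([[0.8, 0.6], [0.4, 0.9]])                      # hypothetical mixing matrix
x = A @ s
x_c = x - x.mean(axis=1, keepdims=True)                     # centering
d, U = np.linalg.eigh(x_c @ x_c.T / x_c.shape[1])
v = np.diag(d ** -0.5) @ U.T @ x_c                          # PCA whitening
W = fastica_deflation(v, 2)
y = W @ v                                                   # estimated components
corr = np.abs(np.corrcoef(np.vstack([y, s]))[:2, 2:])       # |corr(y_i, s_j)|
print(np.round(corr, 3))
```

Each estimated component should correlate almost perfectly with exactly one source. The order and sign of the components are arbitrary, and amplitudes are recovered only up to scale. This is why the correlation is compared in absolute value.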

2 Short-Time Fourier Transform

The STFT (short-time Fourier transform) is one JTFA method. Its basic idea is to intercept the signal with a window function. Assuming that the signal within the window is stationary, local frequency-domain information is then obtained by the Fourier transform. For a given moment t, the STFT analyzes only the signal inside the window, so it roughly reflects the local spectrum of the signal around t. Sliding the window along the signal then yields the time-frequency distribution of the signal. It is defined as

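\(STFT_{x}(t,\omega) = \int_{-\infty}^{\infty} x(\tau)\,\gamma(\tau - t)\,e^{-j\omega\tau}\,d\tau\)

where x(t) is the signal, \(\gamma (\tau - {\rm{t}}){\rm{e}}^{{ - {\rm{j}}\omega \tau }}\) is the basis function (\(\gamma\) is the window function), t is time, and \(\omega\) is frequency.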
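A discrete version of this definition can be sketched in Python. The sketch makes the following assumptions:

- NumPy is used, with a Hann window for \(\gamma\).
- The window length and hop size are illustrative values.
- The test signal, a tone that jumps from 50 Hz to 150 Hz, is hypothetical.

The transform is computed frame by frame with an FFT. The result therefore matches the definition up to a linear phase factor, which does not affect the magnitude.

```python
import numpy as np

def stft(x, fs, win_len=256, hop=128):
    """Discrete STFT with a Hann window gamma; returns (frame_times, freqs, S).

    |S[k, f]| approximates |sum_tau x(tau) gamma(tau - t_k) exp(-j omega tau)|
    for frame start t_k; the frame-local FFT only changes the phase.
    """
    gamma = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[k * hop : k * hop + win_len] * gamma for k in range(n_frames)])
    S = np.fft.rfft(frames, axis=1)                      # one spectrum per window position
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)         # bin frequencies in Hz
    times = (np.arange(n_frames) * hop + win_len / 2) / fs   # window centers in seconds
    return times, freqs, S

# Usage: 2 s at 1 kHz; the tone jumps from 50 Hz to 150 Hz at t = 1 s.
fs = 1000.0
t = np.arange(2000) / fs
x = np.where(t < 1.0, np.sin(2 * np.pi * 50 * t), np.sin(2 * np.pi * 150 * t))
times, freqs, S = stft(x, fs)
peak = freqs[np.argmax(np.abs(S), axis=1)]              # dominant frequency per frame
print(peak[0], peak[-1])
```

Plotting `np.abs(S)` against `times` and `freqs` gives the spectrogram. The window length trades time resolution against frequency resolution: here each bin spans 1000/256, about 3.9 Hz.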

2.1 Simulation Results and Analysis

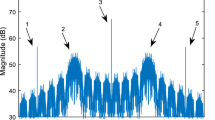

A large number of simulation experiments were carried out on the extraction and identification of satellite monitoring signals. Two signals monitored from two satellites were selected at random. Taking the satellite downlink channel as an example, the two monitoring signals were generated by a Cortex monitoring terminal. The two signals were then extracted and separated, and the results were verified. The time-frequency analysis method was combined with the Fast-ICA algorithm to separate the mixed signals, and the first independent component was extracted after three iterations. Figs. 1 and 2 show that the amplitude of the separated signal differs somewhat from that of the source signal, as expected since ICA recovers sources only up to scale. Judged by the waveform, however, the separation achieves the desired goal.

Simulation results show that:

(a) The method exploits the statistical independence of the signals. It avoids the limitation that signal complexity imposed on previous signal extraction algorithms. It can also identify signals with erroneous parameters, which greatly improves recognition accuracy.

(b) The number of sampling points affects extraction and separation. Because different signals have different parameters, the extraction performance depends on the total number of sampling points. Generally, the more sampling points, the higher the extraction accuracy; likewise, the greater the parameter difference between signals, the higher the accuracy. The parameters of the signals may be close, so that a given number of sampling points cannot extract them properly. In that case, the problem can be solved by increasing the number of sampling points.

3 Conclusions

This paper presents an algorithm that combines time-frequency analysis with independent component analysis. Negentropy serves as the objective function: maximizing it maximizes the non-Gaussianity of the outputs and makes them mutually independent. The algorithm is applied to satellite signal extraction. It solves the problem of signal recognition without prior knowledge, with an unknown number of signals and unknown subcarrier bandwidths. The detection and acquisition of subcarrier signals are studied, and each step of the algorithm is discussed in detail. Tests and simulations show that the proposed algorithm can effectively identify satellite signal subcarriers, with high extraction accuracy and fast convergence.



© 2021 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

About this paper

Cite this paper

Le, W., Guang, M., Zhang, J. (2021). Blind Recognition of TT&C Signals of Satellite Based on JTFA and Fast-ICA Algorithm. In: Guan, M., Na, Z. (eds) Machine Learning and Intelligent Communications. MLICOM 2020. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 342. Springer, Cham. https://doi.org/10.1007/978-3-030-66785-6_24


DOI: https://doi.org/10.1007/978-3-030-66785-6_24


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66784-9

Online ISBN: 978-3-030-66785-6

eBook Packages: Computer Science, Computer Science (R0)