Abstract

To improve the detection rate of ship information, the fast independent component analysis (FastICA) algorithm is adopted to separate mixed satellite-based automatic identification system (AIS) signals. FastICA converges quickly and has a simple form. However, it is sensitive to the initialization of the separation matrix, and its robustness is poor. To address this problem, an improved FastICA algorithm is proposed. The new algorithm exploits the constant modulus property of the AIS signal and uses the Modified-M estimation function as the nonlinear function to improve robustness. It also modifies the Newton iteration. Simulation results indicate that the proposed method reduces the number of iterations and improves separation accuracy.

1 Introduction

As a new kind of ship navigation system, AIS can be used for data transmission between ships and shore units [4]. Satellite-based AIS receives these signals by satellite and relays the information to a shore-based test center [3], which makes global monitoring of ships possible. The communication range of satellite-based AIS is usually more than 1500 nautical miles (radius), so a single satellite field of view contains multiple AIS subnets. This causes signal collisions, which reduce the ship detection rate; how to separate the signals accurately is therefore an important issue.

Since the source signals (i.e., the signals transmitted by the AIS subnets) are statistically independent, ICA [5] is a common method for separating mixed AIS signals. ICA has been widely used in fields such as speech signal processing, medical signal processing, image enhancement, and pattern recognition. For example, Budiharto and Gunawan proposed a blind speech separation system that uses FastICA for audio filtering and separation and can be used in education or entertainment [2]. En et al. [7] used FastICA to denoise voice signals corrupted by strong noise, which achieved conditional continuous transmission of the speech signal and improved the quality of voice transmission to some extent. ICA algorithms include the information maximization (Infomax) algorithm [1], the extended Infomax algorithm [10], the natural gradient algorithm [9], and the FastICA algorithm [14]. Among these, the Infomax algorithm applies only to sources with super-Gaussian distributions. The extended Infomax algorithm can separate mixtures of super-Gaussian and sub-Gaussian signals, but it converges slowly. The natural gradient algorithm applies only when the number of observed signals equals the number of source signals. FastICA, also known as the fixed-point algorithm, estimates the independent components using only a nonlinear function. However, its robustness is poor, and it is sensitive to the initial value of the weight vector. To address this, Douglas and Chao proposed an improved FastICA algorithm [6] that uses the Huber M-estimation function as the nonlinear function, and Yang et al. proposed a FastICA algorithm that combines the Newton iteration with gradient descent [13]. In this paper, an improved algorithm is proposed based on the constant modulus property of the AIS signal.

2 The Original FastICA Algorithm

2.1 Signal Separation Model

ICA separates mixed signals blindly, without any information about the mixing system. Depending on the mixing process, blind separation can be divided into linear instantaneous mixing, linear convolutive mixing, and nonlinear mixing. For satellite-based AIS, the received signal is a linear instantaneous mixture, and the separation model is illustrated in Fig. 1.

In Fig. 1, \(s(t)=[s_1 (t),s_2 (t),\ldots ,s_N (t)]^{T}\) denotes the N-dimensional vector of statistically independent, unknown source signals, \(x(t)=[x_1 (t),x_2 (t),\ldots ,x_M (t)]^{T}\) denotes the M-dimensional vector of observed signals, and \(y(t)=[y_1 (t),y_2 (t),\ldots ,y_N (t)]^{T}\) denotes the separated signals. The mathematical model of ICA can be expressed in matrix form as:

\[ x(t)=\mathbf{A}s(t) \qquad (1) \]

In this formula, \(\mathbf{A}\) is an \(M\times N\) mixing matrix that represents the unknown transmission channel. The number of independent sources is assumed here to equal the number of observed signals, that is, \(M=N\). The task of blind source separation is to recover the source signals by estimating the separation matrix \(\mathbf{W}\). The separated signal is therefore expressed as:

\[ y(t)=\mathbf{W}x(t) \qquad (2) \]
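The linear instantaneous model of this section can be illustrated numerically. The following is a minimal NumPy sketch, not the paper's simulation: the uniform sources, the random mixing matrix, and all variable names are assumptions chosen for illustration, and the separation matrix is taken to be the exact inverse of the mixing matrix, which is unavailable in a real blind setting.

```python
import numpy as np

rng = np.random.default_rng(0)

N = 3      # number of sources (equal to the number of observations, M = N)
T = 1000   # number of time samples

# Hypothetical stand-in sources: independent, zero-mean, non-Gaussian rows.
s = rng.uniform(-1.0, 1.0, size=(N, T))

# Mixing matrix A (M x N); drawn at random here purely for illustration.
A = rng.normal(size=(N, N))

# Observed mixtures x(t) = A s(t), applied to all samples at once.
x = A @ s

# With the ideal separation matrix W = A^{-1}, y(t) = W x(t) recovers s(t).
W = np.linalg.inv(A)
y = W @ x

max_error = np.max(np.abs(y - s))
```

In practice \(\mathbf{A}\) is unknown, so \(\mathbf{W}\) has to be estimated from \(x(t)\) alone, and the sources are recovered only up to ordering and scaling.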

2.2 Signal Preprocessing

Before separating signals with FastICA, the observed signals must be preprocessed. Preprocessing consists of centering and pre-whitening. In centering, the time average replaces the statistical average, and the arithmetic mean replaces the mathematical expectation. For convenience, \(\mathbf{X}\) is taken to be a zero-mean random variable. \(\mathbf{X}\) is then whitened by a linear transformation \(\mathbf{B}\) such that \(\mathbf{Z}=\mathbf{BX}\) satisfies:

\[ E\{\mathbf{Z}\mathbf{Z}^{T}\}=\mathbf{I} \qquad (3) \]

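In this formula, \(\mathbf{I}\) is the identity matrix, \(\mathbf{B}\) is the whitening matrix, and \(\mathbf{Z}\) is the whitened signal. Hence, with \(\mathbf{R}_\mathbf{X}=E\{\mathbf{X}\mathbf{X}^{T}\}\):

\[ \mathbf{B}\mathbf{R}_\mathbf{X}\mathbf{B}^{T}=\mathbf{I} \qquad (4) \]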

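Because \(\mathbf{R}_\mathbf{X} \) is symmetric and nonnegative definite, \(\mathbf{B}\) can be computed by principal component analysis as follows:

\[ \mathbf{R}_\mathbf{X}=\mathbf{U}\mathbf{D}\mathbf{U}^{T},\qquad \mathbf{B}=\mathbf{D}^{-1/2}\mathbf{U}^{T} \qquad (5) \]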

where \(\mathbf{U}\) is the eigenvector matrix of \(\mathbf{R}_\mathbf{X} \) and \(\mathbf{D}\) is the diagonal matrix of the corresponding eigenvalues. In short, preprocessing transforms the observed signals into uncorrelated components, which reduces the workload of the algorithm.
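Centering and whitening can be sketched in a few lines. This is a minimal illustration assuming the standard eigendecomposition-based whitening matrix \(\mathbf{B}=\mathbf{D}^{-1/2}\mathbf{U}^{T}\); the function name `whiten` and the test data are hypothetical, and the covariance matrix is assumed to have full rank.

```python
import numpy as np

def whiten(x):
    """Center and whiten observations x of shape (M, T).

    Returns the whitened signals z and the whitening matrix b.
    Assumes the sample covariance of x has full rank.
    """
    # Centering: the time average stands in for the expectation.
    x_centered = x - x.mean(axis=1, keepdims=True)
    # Sample covariance R_X (symmetric, nonnegative definite).
    r_x = (x_centered @ x_centered.T) / x_centered.shape[1]
    # Eigendecomposition R_X = U D U^T.
    eigvals, u = np.linalg.eigh(r_x)
    # Whitening matrix B = D^{-1/2} U^T.
    b = np.diag(1.0 / np.sqrt(eigvals)) @ u.T
    return b @ x_centered, b

rng = np.random.default_rng(1)
s = rng.uniform(-1.0, 1.0, size=(3, 5000))   # illustrative sources
a = rng.normal(size=(3, 3))                  # illustrative mixing matrix
z, b = whiten(a @ s)

# The covariance of the whitened signals should be the identity matrix.
r_z = (z @ z.T) / z.shape[1]
```

After whitening, the remaining unknown is only an orthogonal rotation, which is why the later iteration can work with unit-norm weight vectors.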

2.3 Component Extraction Processing

The FastICA algorithm consists of data preprocessing and component extraction. The independent components are extracted by constructing and optimizing an objective function. In this paper, the objective function is based on negentropy and has the following form [8]:

Here \(G(\cdot )\) is a nonlinear function, chosen as \(G(y)=\ln \cosh (y)\) in view of the non-Gaussian characteristics of the AIS signal; \(g(\cdot )\) is the derivative of \(G(\cdot )\); and \(w_0 \) is the initial value of w. The objective function is optimized with the Newton iteration, which has a locally quadratic convergence rate. The Newton iterative formula is:

\[ w_{k+1}=w_k-\frac{F(w_k)}{F^{\prime }(w_k)} \qquad (7) \]

where k is the iteration index and \(F(w)=J_G ^{\prime }(w)\), with:

Substituting Eqs. (8) and (9) into Eq. (7) gives the iterative formula:

When the convergence condition \(|w_{k+1} -w_k |\le \delta \) is fulfilled, where \(\delta \) is the convergence threshold, set \(w=w_{k+1} \) and extract the signal \(y_i =w_i^T \mathbf{Z}\).
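The one-unit extraction loop can be sketched as follows. This assumes the standard fixed-point update from Hyvärinen and Oja [8], \(w \leftarrow E\{\mathbf{Z}g(w^T\mathbf{Z})\}-E\{g'(w^T\mathbf{Z})\}w\) followed by normalization, with \(g=\tanh\) for \(G(y)=\ln\cosh(y)\); it may differ in detail from the paper's Eq. (10). The function name, the uniform test sources, and the mixing matrix are hypothetical.

```python
import numpy as np

def fastica_one_unit(z, w0, delta=1e-8, max_iter=200):
    """Estimate one separating vector from whitened data z of shape (N, T)."""
    w = w0 / np.linalg.norm(w0)
    for k in range(1, max_iter + 1):
        y = w @ z                    # current component estimate, shape (T,)
        g = np.tanh(y)               # g = G' for G(y) = ln cosh(y)
        g_prime = 1.0 - g ** 2       # derivative of tanh
        # Standard fixed-point update, then projection onto the unit sphere.
        w_new = (z * g).mean(axis=1) - g_prime.mean() * w
        w_new /= np.linalg.norm(w_new)
        # w and -w describe the same component, so test both signs.
        change = min(np.linalg.norm(w_new - w), np.linalg.norm(w_new + w))
        w = w_new
        if change <= delta:
            return w, k
    return w, max_iter

# Illustrative data: two uniform sources, mixed and then whitened.
rng = np.random.default_rng(3)
s = rng.uniform(-1.0, 1.0, size=(2, 5000))
x = np.array([[1.0, 0.6], [0.4, 1.0]]) @ s
x = x - x.mean(axis=1, keepdims=True)
eigvals, u = np.linalg.eigh(x @ x.T / x.shape[1])
z = np.diag(eigvals ** -0.5) @ u.T @ x

w, n_iter = fastica_one_unit(z, w0=np.array([1.0, 0.3]))
y = w @ z
# Absolute correlation of the extracted signal with each true source.
corr = [abs(np.corrcoef(y, s_i)[0, 1]) for s_i in s]
```

The extracted signal should correlate strongly with exactly one source; which source it is, and with which sign, depends on the initial vector `w0`.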

3 The Improved FastICA Algorithm

The performance of the FastICA algorithm is determined by the initial condition \(w_0 \) and the nonlinear function \(G(\cdot )\), and its convergence speed is affected by the Newton iteration. The FastICA algorithm is therefore improved in these three respects.

3.1 The Initial Condition \(w_0\)

The AIS signal is a GMSK-modulated signal with a constant modulus; this property can be exploited in blind source separation to improve the FastICA algorithm. Specifically, the stochastic gradient constant modulus algorithm is used to calculate the initial weight vector. The cost function is defined as follows [12]:

where \(\alpha \) is the amplitude of the desired signal, and p and q are positive integers that determine the convergence properties and complexity of the steepest-descent constant modulus algorithm. Because the source signal amplitude is 1 in this simulation, let \(\alpha =1\). The error functions e(n) for different choices of p and q are given in Table 1.

This paper chooses \(p=1\) and \(q=2\), from which the iterative formula can be derived:

where \(e\left( k \right) =2\left[ {\hat{{w}}^{H}\left( k \right) x\left( k \right) -\frac{\hat{{w}}^{H}\left( k \right) x\left( k \right) }{\left| {\hat{{w}}^{H}\left( k \right) x\left( k \right) } \right| }} \right] \), \(\mu =\frac{1}{2\lambda _1^2 }\) is the step factor, and \(\lambda _1 \) is the largest nonzero singular value of the received data matrix.
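A stochastic-gradient constant modulus sweep with \(p=1\), \(q=2\) can be sketched as below. The update \(\hat{w}(k+1)=\hat{w}(k)-\mu x(k)e^{*}(k)\) is the usual gradient step for this cost with \(\alpha=1\) and is an assumption here, as are the function name, the single unit-modulus test source, the channel vector `a`, and the number of passes; only \(e(k)\) and \(\mu\) are taken from the text.

```python
import numpy as np

def cma_initial_weight(x, w_init, n_passes=1):
    """Stochastic-gradient CMA(1,2) over complex samples x of shape (N, T)."""
    # Step factor mu = 1 / (2 * lambda_1^2), lambda_1 = largest singular value.
    lam1 = np.linalg.svd(x, compute_uv=False)[0]
    mu = 1.0 / (2.0 * lam1 ** 2)
    w = w_init.astype(complex)
    for _ in range(n_passes):
        for k in range(x.shape[1]):
            xk = x[:, k]
            y = np.vdot(w, xk)               # w^H x(k); vdot conjugates w
            if abs(y) == 0.0:
                continue                     # error term undefined at y = 0
            e = 2.0 * (y - y / abs(y))       # error function e(k) for p=1, q=2
            w = w - mu * xk * np.conj(e)     # assumed gradient step
    return w

# Illustrative data: one unit-modulus source seen through a channel vector a.
rng = np.random.default_rng(2)
T = 500
s = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, T))
a = np.array([1.0 + 0.5j, 0.3 - 0.8j])
x = np.outer(a, s)

# Start with an output modulus of 0.5 instead of the desired 1.
w_init = 0.5 * a / np.vdot(a, a).real
w0 = cma_initial_weight(x, w_init, n_passes=5)

modulus_before = np.abs(np.conj(w_init) @ x).mean()
modulus_after = np.abs(np.conj(w0) @ x).mean()
```

Each sample pushes the output modulus toward 1, so `modulus_after` ends up closer to 1 than `modulus_before`. The resulting vector would then serve as the initial weight vector \(w_0\) for the FastICA iteration.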

3.2 The Nonlinear Function \(G(\cdot )\)

In the second step of the improved algorithm, the Modified-M estimation function is selected as the nonlinear function of the objective function. It is expressed as [11]:

where c is the impact factor, and a and b are the boundaries.

In Fig. 2, u represents the residual. The weight function has three regions. First, in the central region the residuals are very small; these data agree best with the fit and deserve full weight, so residuals near 0 are assigned a weight of 1. Second, in the weight-reduction regions on either side, the data still reflect the distribution of the data to some degree, but the residuals are larger, so the weight should fall off more quickly. Finally, where the residuals are large, every observation is still retained: the weight is assigned a nonzero value rather than 0 and should fall off faster still.

3.3 The Third-Order Newton Iterative Algorithm

Finally, the second-order Newton iteration is replaced with a third-order Newton iteration to improve the convergence speed. Its specific form is as follows [15]:

After calculation, the iterative formula of the improved algorithm simplifies to:
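The effect of raising the convergence order can be seen on a scalar root-finding problem. The sketch below compares the classical Newton iteration with one well-known third-order variant (a Newton predictor followed by a corrector that reuses the same derivative); this variant is chosen for illustration and is not necessarily the modified method of [15]. The test function \(f(x)=x^2-2\) and both function names are assumptions.

```python
def newton(f, df, x0, tol=1e-10, max_iter=50):
    """Classical second-order Newton iteration for f(x) = 0."""
    x = x0
    for k in range(1, max_iter + 1):
        x_new = x - f(x) / df(x)
        if abs(x_new - x) <= tol:
            return x_new, k
        x = x_new
    return x, max_iter

def third_order_newton(f, df, x0, tol=1e-10, max_iter=50):
    """Two-step variant with third-order convergence (illustrative choice)."""
    x = x0
    for k in range(1, max_iter + 1):
        d = df(x)
        y = x - f(x) / d        # ordinary Newton predictor
        x_new = y - f(y) / d    # corrector reusing the derivative at x
        if abs(x_new - x) <= tol:
            return x_new, k
        x = x_new
    return x, max_iter

f = lambda x: x * x - 2.0
df = lambda x: 2.0 * x

root2, it2 = newton(f, df, 1.0)
root3, it3 = third_order_newton(f, df, 1.0)
```

Both calls converge to \(\sqrt{2}\), and the third-order variant needs no more iterations than the classical one. Each of its iterations costs one extra function evaluation, so the benefit depends on how expensive that evaluation is relative to the derivative.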

3.4 The Entire Improved FastICA Algorithm Process

In summary, the entire improved FastICA algorithm is described as follows:

(1) Preprocessing for the observed signals;

(2) Calculating the initial weight vector by stochastic gradient constant modulus algorithm;

(3) Constructing the objective function combined with the initial weight vector and Modified-M estimate function;

(4) Optimizing the objective function by the third-order Newton iteration algorithm so that the signal \(y_i \) can be separated;

(5) If i is less than N, remove the correlation of separating vector. Then repeat step (2) until \(i=N\) (Fig. 3).
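Step (5) can be sketched as a Gram-Schmidt deflation, which is the usual way to decorrelate a new separating vector from those already extracted in one-unit FastICA. The text does not spell out its decorrelation procedure, so this choice, the function name `decorrelate`, and the example vectors are assumptions.

```python
import numpy as np

def decorrelate(w, extracted):
    """Remove from w its projections onto already-extracted unit vectors."""
    for w_j in extracted:
        w = w - (w @ w_j) * w_j      # subtract the component along w_j
    return w / np.linalg.norm(w)     # renormalize to unit length

# Illustrative example: one vector already extracted, one new candidate.
w1 = np.array([1.0, 0.0, 0.0])
candidate = np.array([0.6, 0.8, 0.0])
w2 = decorrelate(candidate, [w1])
```

Because the data are whitened, orthogonal weight vectors yield uncorrelated outputs, so this step keeps the i-th extraction from converging to a component that has already been found.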

4 Experimental Results

4.1 Parameter Selection [11]

Wang's thesis [11] reports that the improved Huber function achieves its best estimation performance when a is 1.345, b is 3, and c is 0.3. With these parameter values, the improved algorithm in this paper likewise achieves its best separation performance and robustness.

4.2 Simulation Experiment

To evaluate separation performance more accurately, many papers adopt the mean square error (SMSE) as the evaluation criterion. Let N be the number of signal sources; SMSE is then obtained as:

A smaller SMSE indicates better separation. When SMSE equals zero, the estimated signals are identical to the source signals.
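A mean-square-error criterion of this kind can be sketched as follows. The exact normalization used for SMSE is an assumption here: the sketch takes the mean over the N sources of the time-averaged squared error, and it assumes the estimated signals have already been matched to the sources in order, sign, and scale. The function name `smse` and the toy signals are hypothetical.

```python
import numpy as np

def smse(s, y):
    """Mean over sources of the mean squared error between s[i] and y[i].

    s and y both have shape (N, T); rows are assumed to be already
    aligned in order, sign, and scale.
    """
    per_source = np.mean(np.abs(s - y) ** 2, axis=1)   # one value per source
    return float(np.mean(per_source))

s = np.array([[1.0, -1.0, 1.0],
              [0.5, 0.5, -0.5]])
smse_perfect = smse(s, s)          # identical signals
smse_offset = smse(s, s + 0.1)     # constant offset of 0.1 on every sample
```

Since ICA recovers sources only up to permutation and scaling, a practical evaluation would first pair each estimate with its best-matching source before computing the error.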

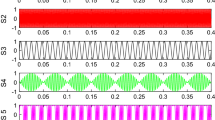

Signal simulation experiments are performed in MATLAB. The AIS source signals are GMSK modulated, with a bit rate of 9600 bit/s and a carrier frequency of 40 kHz. Four source signals are mixed through a mixing matrix \(\mathbf{A}\), and the improved algorithm is then used to estimate the source signals. The simulation results are as follows.

In Fig. 4, the improved algorithm of this paper is compared with two improved algorithms from the references. The algorithm in [13] is based on classical FastICA and combines the Newton iteration with the steepest descent method to improve convergence. The algorithm in [6] uses the Huber function as the nonlinear function in the objective function to improve robustness. The experimental results show that the improved algorithm of this paper outperforms both the classical FastICA algorithm and the improved algorithms in the references: it converges faster, and its robustness is clearly better.

As shown in Fig. 5, the FastICA algorithm essentially separates the mixed signals, apart from the order of the signals, whereas the signals separated by PCA do not reflect any characteristics of the source signals. Similarly, the MCA algorithm, which is essentially the same as PCA, cannot separate the mixed signals. FastICA is therefore superior to PCA and MCA.

Table 2 shows the number of iterations for the four algorithms. When the number of source signals is 2, the four algorithms differ little in the number of iterations. As the number of source signals increases, the improved algorithm requires far fewer iterations than the other three. Hence, the method in this paper speeds up convergence and reduces the number of iterations.

5 Conclusion

The FastICA algorithm is among the most commonly used methods of blind source separation, and many improvements to it have been proposed. This paper proposes a new and reliable algorithm. First, because the initial value of the separation matrix affects convergence, the stochastic gradient constant modulus algorithm is used to calculate the initial value. Second, the Modified-M estimation function is adopted as the nonlinear function of the objective function to improve robustness. Third, the third-order Newton iteration is used to speed up convergence. Finally, a comparison of the proposed algorithm with previously published improved algorithms shows that it can effectively separate the useful signals while improving convergence and robustness.

References

1. A.J. Bell, T.J. Sejnowski, An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7(6), 1129–1159 (1995)

2. W. Budiharto, A.A.S. Gunawan, Blind speech separation system for humanoid robot with FastICA for audio filtering and separation. Int. Workshop Pattern Recognit. 1001113 (2016)

3. M.A. Cervera, A. Ginesi, K. Eckstein, Satellite-based vessel automatic identification system: a feasibility and performance analysis. Int. J. Satell. Commun. Netw. 29(2), 117–142 (2009)

4. R. Challamel, A European hybrid high performance Satellite-AIS system, in IEEE Press Advanced Satellite Multimedia Systems Conference, Baiona, pp. 246–252 (2012)

5. S. Choi, A. Cichocki, H.M. Park, S.Y. Lee, Blind source separation and independent component analysis: a review. Neural Inf. Process. 6(1), 1–57 (2005)

6. S.C. Douglas, J.C. Chao, Simple, robust, and memory-efficient FastICA algorithms using the Huber M-estimator cost function. J. VLSI Signal Process. 48(1–2), 143–159 (2007)

7. D. En, Y.K. Chen, Z.L. Mao, Discontinuous transmission of voice in L-PLC under low SNR based on FastICA. Comput. Eng. Appl. 52(9), 108–111 (2016)

8. A. Hyvärinen, E. Oja, Independent component analysis: algorithms and applications. Neural Netw. 13, 411–430 (2000)

9. L. Li, F. Yan, A new independent component analysis based on extended-natural gradient, in IEEE of the International Conference on Machine Learning and Cybernetics. pp. 2416–2420 (2007)

10. D. Liu, X. Liu, Informax algorithm based on linear ICA neural network for BSS problems, in Processing of the 4th World Congress on Intelligent Control and Automation. pp. 1949–1952 (2002)

11. B. Wang, Research and application of statistical data quality assessment methods. Master’s Thesis of North China Electric Power University. (2013)

12. L.S. Xu, G.Q. Xu, Z.X. Ma et al., Simulation analysis and FPGA implementation of constant modulus algorithm. Technol. R&D 1671–7597 (2014)

13. J.A. Yang, Z.Q. Zhuang, B. Wu, An improved FastICA algorithm based on negentropy maximization. J. Circuits Syst. 7(4), 37–40 (2002)

14. V. Zarzoso, P. Comon, Comparative speed analysis of FastICA, in Independent Component Analysis and Signal Separation. Lecture Notes in Computer Science, vol. 4666, ed. by M.E. Davies, C.J. James, S.A. Abdallah, M.D. Plumbley (Springer, Berlin, Heidelberg, 2007), pp. 293–300

15. R. Zhang, G.M. Xue, Modified third-order Newton iteration method. Coll. Math. 21(1), 1454–1672 (2005)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61601326) and the Tianjin City High School Science and Technology Fund Planning Project (No. 20140707). An earlier version of this paper was presented at the 2016 6th International Workshop on Computer Science and Engineering.

Cite this article

Meng, X., Yang, L., Xie, P. et al. An Improved FastICA Algorithm Based on Modified-M Estimate Function. Circuits Syst Signal Process 37, 1134–1144 (2018). https://doi.org/10.1007/s00034-017-0595-5

DOI: https://doi.org/10.1007/s00034-017-0595-5