Abstract

Non-negative matrix factorization and its variants have been utilized for computer vision and machine learning, however, they fail to achieve robust factorization when the dataset is corrupted by outliers and noise. In this paper, we propose a roust graph regularized non-negative matrix factorization method (RGRNMF) for image clustering. To improve the clustering effect on the image dataset contaminated by outliers and noise, we propose a weighted constraint on the noise matrix and impose manifold learning into the low-dimensional representation. Experimental results demonstrate that RGRNMF can achieve better clustering performances on the face dataset corrupted by Salt and Pepper noise and Contiguous Occlusion.

This work is supported by Foundation of Chongqing Municipal Key Laboratory of Institutions of Higher Education ([2017]3), Foundation of Chongqing Development and Reform Commission (2017[1007]), Scientific and Technological Research Program of Chongqing Municipal Education Commission (Grant Nos. KJQN201901218 and KJQN201901203), Natural Science Foundation of Chongqing (Grant No. cstc2019jcyj-bshX0101), Foundation of Chongqing Three Gorges University and National Science Foundation (NSF) grant #2011927 and DoD grant #W911NF1810475.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Clustering for computer vision and subspace learning is a challenging work. Many clustering methods were proposed for image retrieval [1], image indexing [2] and image classification [3]. To achieve image clustering effectively, a widely used approach is to discover an effective low-dimensional representation for the original data. Therefore, a lot of researches were presented to dig out the geometrical structure information of the original data, which can lead to a more discriminative representation.

In the past decades, many dimensionality reduction techniques were proposed including principal components analysis (PCA) [4] and non-negative matrix factorization (NMF) [5]. Among these methods, the non-negative property of the learned representation leads to be more meaningful in image representation. NMF decomposes the original data matrix into two low-dimensional matrices (i.e. a basis matrix and an encoding matrix), whose product can be best approximate to the original data matrix. Due to the excellent property of NMF, some variants [6,7,8,9,10,11,12,13,14,15,16,17,18,19] were proposed to improve the clustering accuracy from different views.

Although traditional NMF performs very well in learning a parts-based representation for clustering, it fails to achieve clustering while the original data is heavily corrupted. In the view of recent researches, the loss function of traditional NMF are very sensitive to outliers. In other words, the Frobenius norm enlarges the approximation error between the original data matrix and the product of the decomposed matrices. To address this issue, some studies [6,7,8,9,10,11,12] proposed some robust loss functions to minimize the reconstruction error. These proposed methods can reduce outliers of the representation, but they cannot remove outliers. Moreover, the learned representation cannot respect the geometrical structure of the original data contaminated by outliers and noise.

To address above-mentioned problems, we present a robust graph regularized non-negative matrix factorization (RGRNMF) for image clustering. Firstly, we propose a robust framework to measure the approximation error. Secondly, we construct a weighted graph to encode the geometrical information of the original data. Our achievements are as follows:

-

We propose a robust non-negative matrix factorization framework to remove outliers, and we incorporate the geometrical information of the original data into the learned representation.

-

Extensive experiments demonstrate that our proposed framework can achieve image clustering from the original data corrupted by Salt and Pepper noise or Contiguous Occlusion.

2 Related Works

Supposed that there are n sample images \(\{x_i\}_{i=1}^n\) and any image \(x_i\) has m features. Thus, we denote the original data matrix by \(V\in R^{m \times n}\). Due to the high-dimensional property of V, it is a challenging task to achieve image clustering. Generally, NMF is utilized to find two low-dimensional matrices \(W \in R^{m \times r}\) and \(H \in R^{r \times n}\) such that the product of W and H can be approximately equal to V. There, we have

where r is a factorization rank and \(r<<\min \{m,n\}\). Generally, problem (1) can be transformed into a non-convex optimization problem as follows:

where the loss function Error can be the Frobenius norm, \(L_1\) norm, \(L_{2,1}\) norm or Huber. Recently, Guan et al. [12] proposed a Truncated Cauchy loss (CauchyNMF) to reduce outliers, which can be summarized as follows:

where \(g(x)= {\left\{ \begin{array}{ll} ln(1+x), &{}0 \le x \le \sigma \\ ln(1+\sigma ) , &{} x>\sigma \end{array}\right. }\); \(\sigma \) and \(\gamma \) denote the scale parameter and the truncation parameter. \(\sigma \) can be obtained by three-sigma-rule, and \(\gamma \) is given by the Nagy algorithm [12]. Traditional NMF utilizes the different loss functions to reduce outliers, but they cannot remove outliers. Therefore, a robust NMF framework was proposed to eliminate outliers as follows:

where M is the original data matrix corrupted by noises, E is an error matrix, \(\lambda \) is a hyper-parameter, and the function \(\varOmega \) is the constraint term. Zhang et al. [11] proposed the Frobenius norm as the loss function and the \(L_1\) norm as the constraint on E, which can be described as follows:

where \(\parallel E \parallel _M=\sum _{ij}|e_{ij}|\).

3 Robust Graph Regularized Non-negative Matrix Factorization

3.1 Model Formulation

Previous NMF models have some defects: 1) They cannot remove outliers from the dataset corrupted by Salt and Pepper noise or Contiguous Occlusion. 2) While the dataset is corrupted by noises, the learned representation H cannot preserve the geometrical structure information.

In (5), Zhang et al. [11] supposed that the error matrix E is sparse, but the outliers in E are neglected. If the error matrix contains some outliers, then the constraint \(\parallel E \parallel _M\) is not inappropriate for outliers. Supposed that all outliers of the corrupted image matrix \(M \in R^{m \times n}\) produced by Salt and Pepper noise or Contiguous Occlusion are detected. A weight graph S can be utilized to label these outliers as follows:

Thus, we propose the constraint on E by the following form:

To learn the geometrical structure information of the original data, manifold regularization is proposed to construct the relation between the original data and the low-dimensional representation. A widely used manifold regularization term [20] can be described as follows:

where \(U_{jl}=e^{-\frac{\parallel x_j-x_l\parallel ^2}{\sigma }}\) and \(D_{ii}=\sum _j W_{ij}\). In summary, combining (7), (8) and (5) results in our robust graph regularized non-negative matrix factorization (RGRNMF), which can be summarized into the following optimization problem

where \(\lambda \) and \(\gamma \) are hyper-parameters.

4 Optimization Scheme

It is obvious that problem (9) is non-convex. Therefore, the global optimal solution cannot be searched. Supposed that the \(k-\)th solution of problem (9) is obtained. We can have the \(k+1-\)th solution by optimizing the following problems

and

and

It is easy to obtain the solution of problems (10), (11) and (12) as follows:

5 Experimental Results

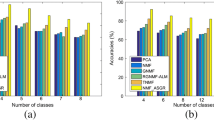

We compare our proposed method (RGRNMF) with NMF [5], RNMF [9], MahNMF [8] and CauchyNMF [12] on the clustering performances of the ORL dataset. To verify the clustering ability on the corrupted data, we propose two corruptions including Salt and Pepper noise and Contiguous Occlusion. For Salt and Pepper noise, there are several percentages of corrupted pixels from 1% to 25%. Similarly, we vary the corrupted block size for Contiguous Occlusion from 1 to 16.

To evaluate the clustering effect of all methods, we propose Accuracy (AC) and Normalized Mutual Information (NMI) [21]. Let \(\lambda =100\) and \(\gamma =100\). Figure 1 and 2 show the clustering performances on the ORL dataset contaminated by Salt and Pepper noise and Contiguous Occlusion. From these figures, we observe that:

-

CauthyNMF achieves satisfactory clustering ACs and NMIs from the ORL dataset corrupted by Salt and Pepper noise and Contiguous Occlusion in the beginning, however, it obtains the poor clustering effect finally. This phenomenon indicates that CauthyNMF cannot handle heavy outliers.

-

NMF, PCA Kmeans and GNMF fail to achieve clustering. This means that They cannot handle outliers.

-

RGRNMF has relatively stable clustering performances on the Salt and Pepper noise and Contiguous Occlusion, that is to say, RGRNMF is more robust to outliers.

6 Conclusion

This paper proposed robust graph regularized non-negative matrix factorization (RGRNMF) to handle Salt and Pepper noise and Contiguous Occlusion. Clustering results demonstrate that our proposed NMF framework has the following properties. Firstly, RGRNMF can learn a more effective and discriminative parts-based representation from the ORL dataset corrupted by handle Salt and Pepper noise or Contiguous Occlusion. Secondly, RGRNMF is more robust to outliers than existing NMF methods.

References

Chen, L., Xu, D., Tsang, I.W., Li, X.: Spectral embedded hashing for scalable image retrieval. IEEE Trans. Cybern. 44(7), 1180–1190 (2014)

Datta, R., Joshi, D., Jia, L.I., Wang, J.Z.: Image retrieval: ideas, influences, and trends of the new age. ACM Comput. Surv. 40(2), 35–94 (2008)

Banerjee, B., Bovolo, F., Bhattacharya, A., Bruzzone, L., Chaudhuri, S., Mohan, B.K.: A new self-training-based unsupervised satellite image classification technique using cluster ensemble strategy. IEEE Geoence Remote. Sens. Lett. 12(4), 741–745 (2015)

Turk, M., Pentland, A.: Eigenfaces for recognition. J. Cogn. Neurosci. 3(1), 71–86 (1991)

Lee, D.D., Seung, H.S.: Learning the parts of objects by non-negative matrix factorization. Nature 401(6755), 788–791 (1999)

Hamza, A.B., Brady, D.J.: Reconstruction of reflectance spectra using robust nonnegative matrix factorization. IEEE Trans. Signal Process. 54(9), 3637–3642 (2006)

Kong, D., Ding, C., Huang, H.: Robust nonnegative matrix factorization using L21-norm. In: Proceedings of the 20th ACM International Conference on Information And Knowledge Management, pp. 673–682 (2011)

Guan, N., Tao, D., Luo, Z., Shawetaylor, J.: MahNMF: Manhattan non-negative matrix factorization. J. Mach. Learn. Res. arXiv:1207.3438v1 (2012)

Gao, H., Nie, F., Cai, W., Huang, H.: Robust capped norm nonnegative matrix factorization. In: ACM International on Conference on Information and Knowledge Management, pp. 871–880 (2015)

Du, L., Li, X., Shen, Y.: Robust nonnegative matrix factorization via half-quadratic minimization. In: IEEE International Conference on Data Mining, pp. 201–210 (2012)

Zhang, L., Chen, Z., Zheng, M., He, X.: Robust non-negative matrix factorization. Front. Electr. Electron. Eng. China 6(2), 192–200 (2015)

Guan, N., Liu, T., Zhang, Y., Tao, D., Davis, L.: Truncated cauchy non-negative matrix factorization. IEEE Trans. Pattern Anal. Mach. Intell. 41(1), 246–259 (2018)

Casalino, G., Del Buon, N., Mencar, C.: Subtractive clustering for seeding non-negative matrix factorizations. Inf. Sci. 257, 369–387 (2014)

Wu, W., Jia, Y., Kwong, S., Hou, J.: Pairwise constraint propagation-induced symmetric nonnegative matrix factorization. IEEE Trans. Neural Netw. Learn. Syst. 29(12), 6348–6361 (2018)

Li, H., Li, K., An, J., Zhang, W., Li, K.: An efficient manifold regularized sparse non-negative matrix factorization model for large-scale recommender systems on GPUs. Inf. Sci. (2018). https://doi.org/10.1016/j.ins.2018.07.060

Liu, X., Wang, W., He, D., Jiao, P., Jin, D., Cannistraci, C.V.: Semi-supervised community detection based on non-negative matrix factorization with node popularity. Inf. Sci. 381, 304–321 (2017)

Peng, X., Chen, D., Xu, D.: Hyperplane-based nonnegative matrix factorization with label information. Inf. Sci. 493, 1–9 (2019)

Kang, Z., Pan, H., Hoi, S., Xu, Z.: Robust graph learning from noisy data. IEEE Trans. Cybern. (2019)

Li, Z., Tang, J., He, X.: Robust structured nonnegative matrix factorization for image representation. IEEE Trans. Neural Netw. Learn. Syst. 29(5), 1947–1960 (2018)

Cai, D., He, X., Han, J., Huang, T.S.: Graph regularized nonnegative matrix factorization for data representation. IEEE Trans. Pattern Anal. Mach. Intell. 33(8), 1548–1560 (2011)

Cai, D., He, X., Han, J.: Document clustering using locality preserving indexing. IEEE Trans. Knowl. Data Eng. 17(12), 1624–1637 (2005)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Dai, X., Zhang, K., Li, J., Xiong, J., Zhang, N. (2020). Robust Graph Regularized Non-negative Matrix Factorization for Image Clustering. In: Han, M., Qin, S., Zhang, N. (eds) Advances in Neural Networks – ISNN 2020. ISNN 2020. Lecture Notes in Computer Science(), vol 12557. Springer, Cham. https://doi.org/10.1007/978-3-030-64221-1_21

Download citation

DOI: https://doi.org/10.1007/978-3-030-64221-1_21

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-64220-4

Online ISBN: 978-3-030-64221-1

eBook Packages: Computer ScienceComputer Science (R0)