Abstract

Assistive robots operate in complex environments and in the presence of human beings; as such, they are influenced by several factors that may lead to undesired outcomes: wrong sensor readings, unexpected environmental conditions and algorithmic errors are just a few examples. When the safety of the user must be guaranteed, a possible solution is to rely on a human-in-the-loop approach, e.g. to monitor whether the robot performs a wrong action or environmental conditions affect safety during the interaction, and to provide feedback accordingly. The proposed work presents a human-supervised smart wheelchair, i.e. an electric powered wheelchair with semiautonomous navigation and path planning capabilities, whose user is equipped with a Brain Computer Interface (BCI) to provide safety feedback. During the wheelchair navigation towards a desired destination in an indoor scenario, possible problems (e.g. obstacles) along the trajectory cause the generation of error-related potential signals (ErrPs) when noticed by the user. These signals are captured by the interface and used to provide feedback to the navigation task, in order to preserve safety and avoid possible navigation issues by modifying the trajectory planning.

1 Introduction

Human-robot cooperation and interaction have grown significantly in recent years as a means to support people with reduced motor skills, from both the academic and the industrial point of view. In particular, real-time feedback from the user to the robot is an emerging requirement, with the main goal of ensuring human safety better than the common sensor set equipping the robot can. In cooperative tasks, prompt feedback from the human to the robot makes it possible to handle environmental factors that can change and affect the cooperative performance, and possibly to mitigate the effects of unexpected factors, as investigated in the literature [1,2,3,4,5]. Wrong sensor readings, unexpected environmental conditions and algorithmic errors are just some of the factors that can expose users to serious safety risks. For these reasons, it is fundamental that the human operator is included within the robot control loop, so that she/he can modify the robot’s decisions during human-robot interaction if needed [6]. Different works, such as [7, 8], have investigated this kind of application by considering real-time feedback about the surrounding environment, as well as about the robot control architecture and behavior, via electroencephalographic (EEG) signals. In several robotic applications, the human electroencephalogram encodes internal states that can be detected online in a single trial and used to improve robotic behavior, e.g., smoother interaction in rehabilitation tasks and user workload adjustments [9]. In previous research [10, 11], a cursor movement was used to evoke the correct or erroneous potentials. That research reported 80% accuracy in detecting the Error-related Potentials (ErrPs), leading to a reduction of the decoding error from 30% to less than 9%. As a consequence, the classification accuracy increased from 70% to almost 80% by using the online ErrP-based correction.
Another study [12] supports the feasibility of using ErrPs by combining a BMI (Brain-Machine Interface) signal, used to decode the action commands, with ErrP decoding, used to correct the erroneous actions. The subsequent offline analysis showed an improvement in the decoding of movement-related potentials thanks to the introduction of ErrP classification. The average ErrP recognition rate was about 80%, yielding a significant reduction of the global error rate in discriminating movements.

The aim of the present paper is to develop a human-in-the-loop approach for addressing accurate autonomous navigation of an assistive mobile platform, while simultaneously accounting for unexpected and undetected errors via human correction. In detail, a specific assistive mobile robot is investigated, adding the possibility of modifying its pre-planned navigation when it receives a message from the human operator. The robot is a smart wheelchair, capable of performing semiautonomous navigation, while human-robot communication is performed via a Brain Computer Interface (BCI): this device is especially useful for people who have very limited mobility and whose physical interaction with the wheelchair must be minimal. When the user notices the presence of an obstacle not detected by the sensors installed on the wheelchair, the ErrP signals generated in his/her brain are recorded by the BCI system, as investigated in [13]. ErrPs are evoked potentials recorded when the subject recognizes an error during a pre-planned task, and are described by a variation in the brain signal within 500 ms after the erroneous response. Consequently, an alert message is sent to the mobile robot in order to redefine the navigation task at the path planning level. Allowing the user to participate in the human-robot cooperation task makes it possible to handle environmental changes that the system may not be able to manage, as well as to correct possible erroneous robot decisions due to software and/or hardware problems. The size and shape of any undetected object, its distance from the wheelchair and the relative speed, as well as the EEG signal classification and communication speed, all play an important role and should be given great consideration.

The paper is organized as follows. The proposed approach is introduced in Sect. 2, focusing mainly on the robot trajectory planning and the EEG methods for human-robot interaction. The hardware of the system is described in Sect. 3, while Sect. 4 discusses preliminary results of the proposed approach. Conclusions and future improvements close the paper in Sect. 5.

2 Proposed Approach

Assistive robots, employed to support the mobility of impaired users, are usually equipped with several sensors, especially to detect possible obstacles on the way. However, in some cases, these sensors cannot correctly detect objects (e.g., holes in the ground, stairs and small objects are often missed by laser rangefinders). The proposed idea is to include human observation within the robot control loop, by recording ErrP signals to detect possible changes and sending feedback to the robot. The proposed human-in-the-loop approach is sketched in Fig. 1. The ROS ecosystem was used as a base to build the proposed solution, due to its flexibility and wide range of tools and libraries for sensing, robot control and user interface. Descriptions of the core modules of the proposed approach are given in the following subsections.

2.1 Robot Navigation

The main goal of the navigation algorithm is to determine the global and local trajectories that the robot follows to move from the starting position to a desired point, defined as the navigation goal, considering possible obstacles not included in the maps [14]. The navigation task performed by the smart wheelchair is mainly composed of three different steps: localization, map building [15] and path planning [16]. Each step is introduced in the following.

- Localization: the estimation of the current robot position in the environment; the localization method is based on AMCL (Adaptive Monte Carlo Localization), which exploits recursive Bayesian estimation and a particle filter to determine the actual robot pose. The Monte Carlo algorithm is applied to the odometry estimates acquired after the mapping phase [17]. When the smart wheelchair moves, it records changes in the environment. The algorithm uses the “Importance Sampling” method [18], a technique that makes it possible to estimate the properties of a particular distribution starting from samples generated by a distribution different from the one of interest. The Monte Carlo localization approach can be summarized in two phases. During the first one, the algorithm predicts n new positions, each of which is randomly generated from the samples determined in the previous step. In the second one, the sensor readings are included and used to weight the pool of samples, as happens with Bayes' rule in Markov localization.

- Mapping: the representation of the environment where the mobile robot operates, which should contain enough information to let the robot accomplish the task of interest; it mainly relies on the laser rangefinder, positioned on a fixed support at the base of the smart wheelchair, which measures distances in space and delineates the profile of possible obstacles, thus drawing the environment map.

- Path Planning: the choice of the path to reach a desired goal location, given the robot position; it takes into account possible obstacles detected by the laser rangefinder. The applied navigation algorithm is the Dynamic Window Approach (DWA) [19]. The velocity space of the robot is limited by the configuration of the obstacles in the space and by the physical characteristics of the robot. The obstacles near the robot impose restrictions on the translational and rotational velocities. The allowable velocities are evaluated by a function that, for each candidate trajectory, considers the distance to the nearest obstacle and assigns a score; the best-scoring trajectory is then selected.
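The velocity scoring described in the path planning step can be illustrated with a minimal sketch. It is not the ROS planner used on the wheelchair: the unicycle model, the sampling grid, the robot radius and the score weights are all assumptions chosen for illustration, and the acceleration limits that define the actual dynamic window are left out for brevity.

```python
import math


def simulate(pose, v, w, dt=0.1, steps=10):
    """Forward-simulate a unicycle model and return the visited (x, y) points."""
    x, y, theta = pose
    points = []
    for _ in range(steps):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
        points.append((x, y))
    return points


def clearance(points, obstacles):
    """Smallest distance between any trajectory point and any obstacle point."""
    return min((math.hypot(px - ox, py - oy)
                for px, py in points for ox, oy in obstacles),
               default=math.inf)


def dwa_select(pose, goal, obstacles, v_range=(0.0, 1.0), w_range=(-1.0, 1.0),
               robot_radius=0.4, n_v=5, n_w=11, weights=(1.0, 0.5, 0.2)):
    """Return the best-scoring admissible (v, w) pair, or None if all collide."""
    best, best_score = None, -math.inf
    for i in range(n_v):
        v = v_range[0] + (v_range[1] - v_range[0]) * i / (n_v - 1)
        for j in range(n_w):
            w = w_range[0] + (w_range[1] - w_range[0]) * j / (n_w - 1)
            points = simulate(pose, v, w)
            dist = clearance(points, obstacles)
            if dist <= robot_radius:
                continue  # trajectory passes too close to an obstacle
            end_x, end_y = points[-1]
            # Hypothetical score: progress to goal, capped clearance, speed.
            progress = -math.hypot(goal[0] - end_x, goal[1] - end_y)
            score = (weights[0] * progress
                     + weights[1] * min(dist, 2.0)
                     + weights[2] * v)
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

With no obstacles the sketch drives straight at full speed; placing an obstacle point on the straight-line path forces a slower or curved command, which is the behavior the virtual obstacles of Sect. 2.2 rely on.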

All the steps described above are performed via ROS modules: the wheelchair software is composed of ROS packages and nodes, which acquire data from the sensor sets, process the information and command the wheels accordingly. A description of these functionalities can be found in [20].
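The two phases of Monte Carlo localization mentioned in the localization step (prediction, then measurement weighting) can be sketched in one dimension as follows. This is only an illustration under stated assumptions: a robot moving along a corridor towards a wall at a hypothetical position `wall_x`, a range sensor measuring the distance to that wall, Gaussian noise models, and a fixed number of particles (AMCL additionally adapts the particle count, which is not reproduced here).

```python
import math
import random


def predict(particles, control, motion_sigma, rng):
    """Phase 1: move every particle by the control input plus motion noise."""
    return [p + control + rng.gauss(0.0, motion_sigma) for p in particles]


def update(particles, measurement, wall_x, meas_sigma):
    """Phase 2: weight each particle by the likelihood of the range reading."""
    weights = [math.exp(-0.5 * ((measurement - (wall_x - p)) / meas_sigma) ** 2)
               for p in particles]
    total = sum(weights)
    if total == 0.0:
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def resample(particles, weights, rng):
    """Draw a new particle set in proportion to the importance weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


def localize(measurements, controls, n=500, wall_x=10.0, seed=0):
    """Return the mean particle position after processing all steps."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, wall_x) for _ in range(n)]
    for control, measurement in zip(controls, measurements):
        particles = predict(particles, control, 0.1, rng)
        weights = update(particles, measurement, wall_x, 0.3)
        particles = resample(particles, weights, rng)
    return sum(particles) / len(particles)
```

For example, a robot starting at 2 m and advancing 1 m per step produces range readings of 7, 6, 5, 4 and 3 m; `localize([7, 6, 5, 4, 3], [1.0] * 5)` then returns an estimate close to the final true position of 7 m.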

2.2 EEG-Based Feedback

In the proposed human-in-the-loop approach, the wheelchair operator can interact with the robot when he/she observes a problem during the navigation task (e.g., the wheelchair is about to run into something unexpected, such as a hole or an obstacle not detected by the laser rangefinder). In detail, the system allows the operator to send a signal to the robot in order to change the predefined path. This interaction requires the addition of software packages to the navigation modules, whose aim is to build the link between the BCI and the navigation stack of the smart wheelchair. The new packages implement the following steps:

1. subscribe or listen to the BCI for the trigger;

2. create the obstacle geometry;

3. pose the obstacle within the built map;

4. request a new iteration of the path planning algorithm.

When a trigger from the BCI is received, a new unexpected obstacle is virtually created and positioned within the built map. In this way, the path planning module can compute a new route to the goal, taking into account the newly introduced obstacle.
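The four steps above can be sketched on a toy occupancy grid. The code below is a simplified stand-in, not the ROS packages developed for the wheelchair: the grid map, the breadth-first planner, the `lookahead` distance at which the virtual obstacle is dropped and the function names are all assumptions made for illustration.

```python
from collections import deque


def plan(grid, start, goal):
    """Breadth-first search on a 4-connected grid; returns a cell list or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            free = 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0
            if free and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


def make_obstacle(center, half_size=0):
    """Step 2: build the obstacle geometry as a square block of cells."""
    r0, c0 = center
    span = range(-half_size, half_size + 1)
    return [(r0 + dr, c0 + dc) for dr in span for dc in span]


def on_trigger(grid, path, robot_index, lookahead=2, half_size=0):
    """Steps 2-4, run once step 1 (the BCI trigger) has fired."""
    robot, goal = path[robot_index], path[-1]
    center = path[min(robot_index + lookahead, len(path) - 1)]
    for r, c in make_obstacle(center, half_size):
        inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
        if inside and (r, c) not in (robot, goal):
            grid[r][c] = 1  # step 3: pose the obstacle within the map
    return plan(grid, robot, goal)  # step 4: request a new plan
```

On an empty 5 x 5 grid the initial plan from `(2, 0)` to `(2, 4)` is the straight row; calling `on_trigger` with the robot at the start marks cell `(2, 2)` as occupied and returns a detour around it.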

The BCI software is used to collect the ErrP signals, and specific algorithms are implemented to recognize them. The ErrP waveform is detectable about 500 ms after the user recognizes the error, and it is characterized by a large positive peak, preceded and followed by negative peaks, as in Fig. 2. Consistently with the literature, the error potentials are most prominent at the central electrodes, i.e. Cz, Fz and Pz, as reported in most similar studies.

Fig. 2 Example of typical ErrP wave shape [1]

As already stated in Sect. 1, the authors of [12] supported the feasibility of using ErrPs by combining a Brain-Machine Interface signal, used to decode the action commands, with ErrP decoding, used to correct the erroneous actions. This approach has been reproduced in the present study by simulating the presence of obstacles on the path originally chosen by the smart wheelchair, so that the generated ErrP signals, recorded by the BCI system, can be used to give feedback and try to avoid the obstacles.

2.3 Navigation: EEG Feedback Integration via ROS Nodes

The integration between the smart wheelchair navigation and the EEG feedback was realized through the creation of dedicated ROS nodes. The ROS navigation stack takes information from odometry and sensor streams and outputs velocity commands to send to the smart wheelchair. The best way to apply a corrective action to the wheelchair trajectory is to create imaginary (virtual) obstacles on the map layer. The navigation stack updates the cost map dynamically, using sensor data or point clouds sent to it. In particular, a new software package has been created that provides a link between the BCI and the navigation task.

The implemented package is able to:

- subscribe and listen continuously to the robot position;

- transform the robot pose from the robot frame to the map frame;

- subscribe/listen to the BCI for the trigger;

- create the obstacle geometry and position it on the map;

- convert it to ROS point clouds.

Then, the point clouds are published to the ROS navigation stack, where the local and global cost map parameters are modified. The implementation of the node architecture is represented and detailed in Fig. 3, while the flowchart of the algorithm is shown in Fig. 4.
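The geometric part of this package (placing the obstacle relative to the robot and expressing it in the map frame) can be sketched without any ROS dependency. The sketch below assumes a planar pose `(x, y, yaw)` of the robot in the map frame and a rectangular obstacle sampled at a fixed resolution; the obstacle size, its distance from the robot and the function names are hypothetical. Publishing the resulting points as a ROS point cloud message is deliberately left out.

```python
import math


def robot_to_map(point, robot_pose):
    """Transform a 2D point from the robot frame to the map frame."""
    px, py = point
    x, y, yaw = robot_pose
    return (x + px * math.cos(yaw) - py * math.sin(yaw),
            y + px * math.sin(yaw) + py * math.cos(yaw))


def obstacle_points(distance, width, depth, resolution):
    """Sample a rectangle in the robot frame, starting `distance` ahead."""
    nx = int(round(depth / resolution)) + 1
    ny = int(round(width / resolution)) + 1
    return [(distance + i * resolution, -width / 2 + j * resolution)
            for i in range(nx) for j in range(ny)]


def virtual_obstacle_cloud(robot_pose, distance=1.0, width=0.4, depth=0.2,
                           resolution=0.05, z=0.0):
    """Return the obstacle as a list of (x, y, z) points in the map frame."""
    return [(*robot_to_map(p, robot_pose), z)
            for p in obstacle_points(distance, width, depth, resolution)]
```

With the robot at `(2.0, 3.0)` and facing along the map y axis (`yaw = pi / 2`), an obstacle placed 1 m ahead ends up around `(2.0, 4.0)` in the map frame, which is where the cost map would be marked as occupied.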

3 Experimental Setup

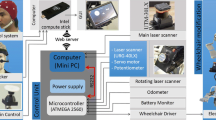

In this section both the smart wheelchair and the BCI used for building a prototype of the proposed system are presented. A scheme of the system setup is reported in Fig. 5 in order to show how the different sensors and components of the system are connected to each other. Please note that the proposed approach can be generalized to other hardware as well (e.g., different BCI systems, mobile robots or robotic arms).

3.1 Smart Wheelchair

The mobile robot used for this study is based on the Quickie Salsa \(R^2\), an electric powered wheelchair produced by Sunrise Medical. Its compact size and its low seat-to-floor height (starting from 42 cm) give it flexibility and allow easy access under tables, providing good accessibility in an indoor scenario. The mechanical system is composed of two rear driving wheels and two front caster wheels; the latter are not actuated, but they are able to rotate around a vertical axis. The wheelchair is equipped with an internal control module, the OMNI interface device, manufactured by PG Drives Technology. This controller can receive input from different types of SIDs (Standard Input Devices) and convert it into specific output commands compatible with the R-net control system. In addition, an Arduino MEGA 2560 microcontroller, a Microstrain 3DM-GX3-25 inertial measurement unit, two Sicod F3-1200-824-BZ-K-CV-01 encoders, a Hokuyo URG-04LX laser scanner and a Logitech C270 webcam complete the smart wheelchair equipment. The encoders, the inertial measurement unit and the OMNI are connected to the microcontroller, while the microcontroller itself and the other sensors are connected via USB to a computer running ROS. Signals from the Sicod and Microstrain devices are converted by the Arduino and sent to the ROS localization module. The information provided by the Hokuyo laser scanner is used by the mapping module and by the path planning module for obstacle avoidance. Once a waypoint is chosen by the user, the path planning module creates the predefined path, which can then be modified by a trigger coming from the BCI signal, as described before.

3.2 BCI

The EEG data used to classify the ErrP signal were acquired using a BCI system consisting of a cap with 8 electrodes and an amplifier, which is needed because brain signals are weak. The adopted equipment is composed of:

- g.GAMMAcap, equipped with different kinds of active/passive electrodes, specifically for EEG recordings. The arrangement of the electrodes follows the International 10–20 System;

- g.MOBIlab + ADC, which collects data from a g.MOBIlab+ device, a tool for recording multimodal biosignal data on a laptop or PC, namely an amplifier which transmits the data wirelessly via Bluetooth 2.0;

- the software BCI2000.

The device supports 8 analog input channels digitized at 16 bit resolution and sampled at a fixed 256 Hz sampling rate, which guarantees data quality and a high signal-to-noise ratio. The eight selected electrodes are AF8, Fz, Cz, P3, Pz, P4, PO7 and PO8, and they are positioned according to the representation in Fig. 6. The amplifier has a sensitivity of ±500 \(\mu\)V.
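The acquisition figures above translate into a few quantities that are useful when handling the recordings. The sketch below works them out under two assumptions that are not stated in the text: that the ±500 µV sensitivity corresponds to the full input range of the 16 bit converter, and that an ErrP epoch is taken as the 500 ms following the event. Function names are hypothetical.

```python
FS_HZ = 256        # sampling rate stated for the device
ADC_BITS = 16      # resolution stated for the device
RANGE_UV = 500.0   # assumed full-scale input range: +/- 500 microvolts


def samples_in_window(window_s, fs_hz=FS_HZ):
    """Number of samples covering a time window at the given sampling rate."""
    return int(round(window_s * fs_hz))


def lsb_microvolts(range_uv=RANGE_UV, bits=ADC_BITS):
    """Amplitude step of one quantization level, in microvolts."""
    return 2 * range_uv / (2 ** bits)


def extract_epoch(signal, event_index, window_s=0.5, fs_hz=FS_HZ):
    """Slice the samples of one channel that follow an event."""
    return signal[event_index:event_index + samples_in_window(window_s, fs_hz)]
```

Under these assumptions a 500 ms epoch contains 128 samples per channel and one quantization step is about 0.015 µV.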

4 Preliminary Results

The system has been preliminarily tested by simulating the smart wheelchair, together with its sensor set, in the open-source 3D robotics simulator Gazebo, and using a real BCI to trigger the feedback signal from the user. We focus only on step (1) of the “EEG-based feedback” described in Sect. 2.2. As such, the trials were performed in two phases, namely the training of participants for the ErrP detection, and the test in a simulated environment, where the wheelchair moves and the user reacts to the appearance of a simulated obstacle.

4.1 BCI Training

The experimental trial was composed of two different phases: the first related to the training of the participants for ErrP detection, and the second related to the simulation of the robot task in a simulator environment. The protocol defined for the participant training consisted of a short video in which a pointer moved horizontally across the screen, from left to right, with the goal of reaching the final destination, marked by a red X. The video also randomly showed pointers that did not arrive at the expected goal: this “error” evoked the ErrP, since the pointer position did not match the human intent. After that, the recorded ErrP signals were classified in order to recognize the ErrP generated when the subject observed the appearance of obstacles on the trajectory of the smart wheelchair in the 3D simulated scenario, i.e. Gazebo. Three participants were enrolled and received the training described above before the acquisition. The preliminary results of this training phase made it possible to recognize the ErrP signals by applying a logistic classifier [21] that distinguishes between target and non-target stimuli. The average classification accuracy obtained was \(85\%\) for non-target stimuli and \(75\%\) for target ones.
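A logistic classifier of the kind mentioned above, together with the per-class accuracy used to report the results, can be sketched as follows. The sketch is not the classifier trained in the study: the feature vectors (for instance, one amplitude value per epoch), the learning rate and the number of iterations are assumptions, and the training data in the usage note is synthetic.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(features, labels, lr=0.5, epochs=2000):
    """Fit p(target | x) = sigmoid(w . x + b) by batch gradient descent."""
    n, dim = len(features), len(features[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_b += err
            for k in range(dim):
                grad_w[k] += err * x[k]
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(model, x):
    """Return 1 (target, i.e. ErrP) or 0 (non-target) for one feature vector."""
    w, b = model
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


def per_class_accuracy(labels, predictions):
    """Return (non-target accuracy, target accuracy)."""
    acc = []
    for cls in (0, 1):
        idx = [i for i, y in enumerate(labels) if y == cls]
        acc.append(sum(predictions[i] == cls for i in idx) / len(idx))
    return tuple(acc)
```

Reporting the two classes separately, as done in the text, matters because target (error) epochs are usually much rarer than non-target ones, so a single overall accuracy would hide how often real ErrPs are missed.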

4.2 Simulation Testing

After the training, the subjects became “virtual users” of the smart wheelchair: equipped with the BCI system, they were positioned in front of a PC where a virtual wheelchair was moving in the simulated environment (Gazebo robot simulation). Once the end point had been chosen and the predefined path generated, new obstacles were positioned on the screen. The recorded ErrP signals were classified in order to recognize the ErrP generated when the subjects observed the appearance of the obstacle.

At present, the ErrP signals generated by each user are recorded and recognized by the system, and a message is written to a ROS node that interacts with the navigation system of the smart wheelchair in order to communicate the need to recalculate the path. During the ErrP acquisition phase, the velocity of the wheelchair was decreased and, once a message was obtained, the wheelchair was stopped in order to allow time to compute the new path (see Fig. 7).
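The supervision behavior described above (reduced speed while ErrPs are being acquired, a stop when a trigger arrives, motion resumed once the new path is available) can be summarized as a small state machine. The sketch below is an interpretation of that description, not the implemented ROS node: the event names, the state names and the speed value are hypothetical.

```python
from enum import Enum


class State(Enum):
    MONITORING = "monitoring"   # moving at reduced speed, ErrPs being acquired
    REPLANNING = "replanning"   # stopped, waiting for the new path


def step(state, event, monitor_speed=0.2):
    """Return the next state and the commanded linear speed (m/s)."""
    if state is State.MONITORING and event == "errp_detected":
        return State.REPLANNING, 0.0
    if state is State.REPLANNING and event == "plan_ready":
        return State.MONITORING, monitor_speed
    speed = monitor_speed if state is State.MONITORING else 0.0
    return state, speed


def run(events, monitor_speed=0.2):
    """Feed a sequence of events and collect the commanded speeds."""
    state, speeds = State.MONITORING, []
    for event in events:
        state, speed = step(state, event, monitor_speed)
        speeds.append(speed)
    return speeds
```

For instance, the event sequence `["tick", "errp_detected", "tick", "plan_ready", "tick"]` yields the speeds `[0.2, 0.0, 0.0, 0.2, 0.2]`: the wheelchair stays stopped until the new plan is ready.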

5 Conclusion

The study investigates the use of ErrP signals in a closed-loop system, proposing a human-in-the-loop approach for path planning correction of assistive mobile robots. In particular, this study supports the possibility of real-time feedback between the smart wheelchair and the BCI acquisition system, allowing the user to actively participate in the control of the planned trajectory and to avoid factors in the environment which may negatively affect user safety. This kind of interaction promotes user intervention in collaborative robot tasks: the user not only chooses where to go or which object to take, but can also monitor whether the task is carried out correctly and provide feedback accordingly. This approach could be a desirable solution for a user's everyday life, especially for those users who have limited physical capabilities to control the wheelchair. The presence of the user in the closed-loop system promotes his/her involvement in the human-robot interaction, allowing direct participation in and control over the task execution.

So far, only the BCI trigger has been developed and tested in a ROS simulated scenario, but the whole system architecture has been developed, with the creation of ROS nodes to interface the BCI system and the smart wheelchair as described in Sect. 2. Even if the results are at a preliminary stage, the system is able to recognize the ErrPs generated by the presence of obstacles on the path of the smart wheelchair and to send feedback to avoid the obstacle.

Future work includes the definition of a policy to recalculate the path and avoid wheelchair stops once the trigger has been activated, and tests in an experimental environment. Moreover, it is necessary to involve more people in the study, both for the classification of the ErrP signals and to reduce the time necessary to recognize the object on the trajectory of the smart wheelchair. Fast communication of the feedback from the BCI system to the smart wheelchair is one of the most important aspects for the feasibility and usability of the proposed system.

References

1. Salazar-Gomez AF, DelPreto J, Gil S, Guenther FH, Rus D (2017) Correcting robot mistakes in real time using EEG signals. In: 2017 IEEE international conference on robotics and automation (ICRA), pp 6570–6577

2. Iturrate I, Montesano L, Minguez J (2010) Single trial recognition of error-related potentials during observation of robot operation. In: Annual international conference of the IEEE engineering in medicine and biology 2010, pp 4181–4184

3. Iturrate I, Chavarriaga R, Montesano L, Minguez J, Millan JDR (2012) Latency correction of error potentials between different experiments reduces calibration time for single-trial classification. In: 2012 annual international conference of the IEEE engineering in medicine and biology society, pp 3288–3291

4. Zhang H, Chavarriaga R, Khaliliardali Z, Gheorghe L, Iturrate I, Millan JDR (2015) EEG-based decoding of error-related brain activity in a real-world driving task. J Neural Eng 12:1–10

5. Foresi G, Freddi A, Iarlori S, Monteriù A, Ortenzi D, Proietti Pagnotta D (2019) Human-robot cooperation via brain computer interface in assistive scenario. Lecture notes in electrical engineering, vol 540. Ambient Assisted Living, Italian Forum 2017, pp 115–131

6. Iturrate I, Antelis JM, Kubler A, Minguez J (2009) A noninvasive brain-actuated wheelchair based on a P300 neurophysiological protocol and automated navigation. IEEE Trans Robot 25:614–627

7. Behncke J, Schirrmeister RT, Burgard W, Ball T (2018) The signature of robot action success in EEG signals of a human observer: decoding and visualization using deep convolutional neural networks. In: 2018 6th international conference on brain-computer interface (BCI), pp 1–6

8. Mao X, Li M, Li W, Niu L, Xian B, Zeng M, Chen G (2017) Progress in EEG-based brain robot interaction systems. Comput Intell Neurosci 2017:1–25

9. Kim SK, Kirchner E, Stefes A, Kirchner F (2017) Intrinsic interactive reinforcement learning using error-related potentials for real world human-robot interaction. Sci Rep 7

10. Millan JDR (2005) You are wrong! Automatic detection of interaction errors from brain waves, pp 1413–1418

11. Millan JDR (2008) Error-related EEG potentials generated during simulated brain computer interaction. IEEE Trans Bio-Med Eng 55:923–929

12. Artusi X, Niazi I, Lucas MF, Farina D (2012) Performance of a simulated adaptive BCI based on experimental classification of movement-related and error potentials. IEEE J Emerg Select Top Circuits Syst 1:480–488

13. Ferracuti F, Casadei V, Marcantoni I, Iarlori S, Burattini L, Monteriù A, Porcaro C (2019) A functional source separation algorithm to enhance error-related potentials monitoring in noninvasive brain-computer interface. Comput Methods Programs Biomed 191:105419

14. Cavanini L, Cimini G, Ferracuti F, Freddi A, Ippoliti G, Monteriù A, Verdini F (2017) A QR-code localization system for mobile robots: application to smart wheelchairs. In: 2017 European conference on mobile robots (ECMR), pp 1–6

15. Cavanini L, Benetazzo F, Freddi A, Longhi S, Monteriù A (2014) SLAM-based autonomous wheelchair navigation system for AAL scenarios. In: 2014 IEEE/ASME 10th international conference on mechatronic and embedded systems and applications (MESA), pp 1–5

16. Bonci A, Longhi S, Monteriù A, Vaccarini M (2005) Navigation system for a smart wheelchair. J Zhejiang Univ Sci A 6:110–117

17. Radmard S, Croft E (2011) Approximate recursive Bayesian filtering methods for robot visual search

18. Nordlund P, Gustafsson F (2001) Sequential Monte Carlo filtering techniques applied to integrated navigation systems. In: Proceedings of the 2001 American control conference (Cat. No.01CH37148), vol 6, pp 4375–4380

19. Ogren P, Leonard NE (2005) A convergent dynamic window approach to obstacle avoidance. IEEE Trans Robot 21:188–195

20. Ciabattoni L, Ferracuti F, Freddi A, Longhi S, Monteriù A (2017) Personal monitoring and health data acquisition in smart homes. In: IET human monitoring, smart health and assisted living: techniques and technologies, pp 1–22

21. Peng CYJ, Lee KL, Ingersoll GM (2002) An introduction to logistic regression analysis and reporting. J Educ Res 96:3–14

Acknowledgements

The authors wish to thank the M.Sc. students Karameldeen Omer and Valentina Casadei for their contributions to this work.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ciabattoni, L., Ferracuti, F., Freddi, A., Iarlori, S., Longhi, S., Monteriù, A. (2021). Human-in-the-Loop Approach to Safe Navigation of a Smart Wheelchair via Brain Computer Interface. In: Monteriù, A., Freddi, A., Longhi, S. (eds) Ambient Assisted Living. ForItAAL 2019. Lecture Notes in Electrical Engineering, vol 725. Springer, Cham. https://doi.org/10.1007/978-3-030-63107-9_16

DOI: https://doi.org/10.1007/978-3-030-63107-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-63106-2

Online ISBN: 978-3-030-63107-9

eBook Packages: Engineering, Engineering (R0)