Abstract

Barry Arnold has made many fundamental and innovative contributions in different areas of statistics and econometrics, including estimation and inference, distribution theory, Bayesian inference, order statistics, income inequality measures, and characterization problems. His extensive work in the area of distribution theory includes studies on income distributions and Lorenz curves, the exact sampling distribution theory of test statistics, and the characterization of distributions. In our paper, we consider the problem of developing exact sampling distributions of various econometric and statistical estimators and test statistics. The motivation stems from the fact that inference procedures based on the asymptotic distributions may provide misleading results if the sample size is small or moderately large. In view of this, we develop a unified procedure by first observing that a large number of econometric and statistical estimators can be written as ratios of quadratic forms. Their distributions can then be straightforwardly analyzed by using Imhof’s (Biometrika 48:419–426, 1961) method. We show the applications of this procedure to develop the distribution of some commonly used statistics in applied work. The exact results developed will be helpful for practitioners to conduct appropriate inference for any given size of the sample data.

1 Introduction

In the early twentieth century, Sir R. A. Fisher and others set in motion what is known today as the classical parametric approach to statistical estimation of a finite number of population parameters using sample data. Thus began the practice of statistical inference within the framework of estimation and hypothesis testing of univariate and multivariate probability distributions. The extensive study of conditional probability distributions followed, and hence, the estimation and testing in the conditional mean (regression) and conditional variance (volatility) models became a norm in econometrics and statistics. The estimation of parameters of regression and other models gave rise to the development of statistical properties of econometric estimators of such models like their bias, mean squared error (MSE), and distributions. Within this and in related contexts, Barry Arnold has made many fundamental and innovative contributions in different areas of statistics and econometrics, including estimation and testing, distribution theory and characterization of distributions, income distribution theory and Lorenz curves, among others. See, for example, Arnold (1983, 1987, 2012, 2015), Arnold et al. (1987), Arnold and Sarabia (2018), Coelho and Arnold (2014), Marques et al. (2011), and Villaseñor and Arnold (1984, 1989). All of these have made significant impact on the profession and have been instrumental in advancing statistics and econometrics.

The large-sample limiting distribution theory was well developed, but deriving the needed analytical finite-sample distributional results remained a challenge. In general, large-sample properties do not necessarily imply small-sample properties, and if they are used in small or moderately large samples, they may give misleading policy implications. This problem arose because most econometric estimators are nonlinear functions of multivariate random variables, so it is not easy to develop their exact distributional properties. Nagar (1959) developed the finite-sample approximate bias and MSE of the two-stage least squares (2SLS) estimator of the parameters in a structural model. This was followed by extensive work by many other econometricians and statisticians on the exact bias and MSE, and some on the exact distribution, of the 2SLS estimator. This literature is summarized in Ullah (2004); see also Anderson and Sawa (1973), Phillips (1980, 1986), and Bao et al. (2017). However, the exact distributions of many other econometric and statistical estimators have not yet been developed.

In view of this, we develop in this paper a unified procedure to analyze the exact distribution by observing that many econometric and statistical estimators can be written as ratios of quadratic forms. Their distributions can then be straightforwardly developed by using Imhof’s (1961) result on the distribution of an indefinite quadratic form. We show the applications of this procedure to develop the distribution of some statistics used in applied work. These include the squared coefficient of variation for measuring income inequality, the squared Sharpe ratio commonly used in financial management, the Durbin–Watson test statistic for serial correlation routinely used in practice, Moran’s test statistic for spatial correlation, and the goodness of fit measure in regression models. The exact results developed here will be helpful for practitioners to conduct appropriate inference for any given size of the sample data.

This paper is organized as follows. In Sect. 2, we present the exact distributional results. Then in Sect. 3, we provide a numerical analysis of the exact distribution of a goodness of fit measure. Finally, the conclusion is given in Sect. 4. Throughout, \(\boldsymbol{I}=\boldsymbol{I}_{n}\) is the \(n\times n\) identity matrix, \(\boldsymbol{\iota}=\boldsymbol{\iota}_{n}\) is an \(n\times 1\) vector of ones, and \(\boldsymbol{M}_{0}=\boldsymbol{I}-n^{-1}\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}\).

2 The Exact Distribution

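Let us consider the ratio of quadratic forms

\[q=\frac{\boldsymbol{y}^{\prime}\boldsymbol{N}_{1}\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{N}_{2}\boldsymbol{y}}, \tag{1}\]

where \(\boldsymbol{y}\) is an \(n\times 1\) normal random vector with \(\mathrm{E}(\boldsymbol{y})=\boldsymbol{\mu}\) and \(\mathrm{Var}(\boldsymbol{y})=\boldsymbol{\Sigma}\) positive definite, \(\boldsymbol{N}_{1}\) and \(\boldsymbol{N}_{2}\) are \(n\times n\) nonstochastic symmetric matrices, and \(\boldsymbol{N}_{2}\) is positive semi-definite (see Note 1). The cumulative distribution function (CDF) of this ratio is

\[F(q_{0})=\Pr(q\leq q_{0})=\Pr(\boldsymbol{y}^{\prime}\boldsymbol{N}\boldsymbol{y}\leq 0),\]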

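where \(\boldsymbol{N}=\boldsymbol{N}_{1}-q_{0}\boldsymbol{N}_{2}\). Note that \(\boldsymbol{y}^{\prime}\boldsymbol{N}\boldsymbol{y}=\boldsymbol{y}^{\prime}\boldsymbol{\Sigma}^{-1/2}\boldsymbol{Q}\boldsymbol{Q}^{\prime}\boldsymbol{\Sigma}^{1/2}\boldsymbol{N}\boldsymbol{\Sigma}^{1/2}\boldsymbol{Q}\boldsymbol{Q}^{\prime}\boldsymbol{\Sigma}^{-1/2}\boldsymbol{y}\equiv\boldsymbol{z}^{\prime}\boldsymbol{\Lambda}\boldsymbol{z}\), where \(\boldsymbol{z}=\boldsymbol{Q}^{\prime}\boldsymbol{\Sigma}^{-1/2}\boldsymbol{y}\sim\mathrm{N}(\boldsymbol{\mu}_{z},\boldsymbol{I})\), \(\boldsymbol{\mu}_{z}=\boldsymbol{Q}^{\prime}\boldsymbol{\Sigma}^{-1/2}\boldsymbol{\mu}\), \(\boldsymbol{\Lambda}\) is a diagonal matrix of eigenvalues of \(\boldsymbol{\Sigma}^{1/2}\boldsymbol{N}\boldsymbol{\Sigma}^{1/2}\), and \(\boldsymbol{Q}\) is an orthogonal matrix of eigenvectors of \(\boldsymbol{\Sigma}^{1/2}\boldsymbol{N}\boldsymbol{\Sigma}^{1/2}\) such that \(\boldsymbol{Q}^{\prime}\boldsymbol{\Sigma}^{1/2}\boldsymbol{N}\boldsymbol{\Sigma}^{1/2}\boldsymbol{Q}=\boldsymbol{\Lambda}\). So the distribution of the ratio of quadratic forms translates to that of a linear combination of independent non-central chi-squared random variables. Without loss of generality, let \(\lambda_{j}\), \(j=1,\ldots,r\leq n\), denote the non-zero distinct elements of \(\boldsymbol{\Lambda}\), \(n_{j}\) be the corresponding multiplicities, and \(\delta _{j}=\sum _{i\rightarrow j}\mu _{z_{i}}^{2}\), where \(\sum_{i\rightarrow j}\) denotes summing over \(i\) such that the \(i\)th element of \(\boldsymbol{\Lambda}\) equals \(\lambda_{j}\). Then, \(\boldsymbol {z}^{\prime }\boldsymbol {\Lambda }\boldsymbol {z}=\sum _{j=1}^{r}\lambda _{j}\zeta _{j}^{2}\), where \(\zeta _{j}^{2}\sim \chi _{n_{j}}^{2}(\delta _{j})\), and they are independent of each other. For a linear combination (with weights \(\lambda_{j}\)) of independent non-central chi-squared variables \(\zeta _{j}^{2}\) (with non-centrality parameter \(\delta_{j}\) and degrees of freedom \(n_{j}\)), Imhof (1961) showed that

\[\Pr\left(\sum_{j=1}^{r}\lambda_{j}\zeta_{j}^{2}\leq q_{0}^{*}\right)=\frac{1}{2}-\frac{1}{\pi}\int_{0}^{\infty}\frac{\sin\theta(u)}{u\rho(u)}\,\mathrm{d}u, \tag{2}\]

where

\[\theta(u)=\frac{1}{2}\sum_{j=1}^{r}\left[n_{j}\arctan(\lambda_{j}u)+\frac{\delta_{j}\lambda_{j}u}{1+\lambda_{j}^{2}u^{2}}\right]-\frac{q_{0}^{*}u}{2},\qquad \rho(u)=\prod_{j=1}^{r}(1+\lambda_{j}^{2}u^{2})^{n_{j}/4}\exp\left\{\frac{1}{2}\sum_{j=1}^{r}\frac{\delta_{j}\lambda_{j}^{2}u^{2}}{1+\lambda_{j}^{2}u^{2}}\right\}.\]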

Setting \(q_{0}^{*}=0\), we have \(F(q_{0})=\Pr (\boldsymbol {y}^{\prime }\boldsymbol {N}\boldsymbol {y}\leq 0)=\Pr (\boldsymbol {y}^{\prime }\boldsymbol {N}_{1}\boldsymbol {y}/\boldsymbol {y}^{\prime }\boldsymbol {N}_{2}\boldsymbol {y}\leq q_{0}).\)
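To make the numerical side of Imhof's (1961) formula concrete, the following is a minimal sketch in plain Python, not the implementation used for the results in this paper. It assumes the standard form of the formula, \(\Pr(\sum_{j}\lambda_{j}\zeta_{j}^{2}\leq q)=1/2-\pi^{-1}\int_{0}^{\infty}\sin\theta(u)/(u\rho(u))\,\mathrm{d}u\). The function name `imhof_cdf`, the tolerance `tol`, and the use of a truncated composite Simpson rule are illustrative assumptions; the truncation point comes from the elementary bound \(|\sin\theta(u)|/(u\rho(u))\leq u^{-1}\prod_{j}(|\lambda_{j}|u)^{-n_{j}/2}\).

```python
import math


def imhof_cdf(q, lambdas, dofs, deltas, tol=1e-6, steps=40000):
    """Pr(sum_j lambdas[j] * chi2(dofs[j], deltas[j]) <= q) by Imhof's formula.

    lambdas must be non-zero; steps must be even (composite Simpson rule).
    """
    half_dof = 0.5 * sum(dofs)

    def integrand(u):
        if u == 0.0:
            # Limit of sin(theta(u)) / (u * rho(u)) as u -> 0.
            slope = sum(l * (n + d) for l, n, d in zip(lambdas, dofs, deltas))
            return 0.5 * slope - 0.5 * q
        theta = -0.5 * q * u
        log_rho = 0.0
        for lam, n, d in zip(lambdas, dofs, deltas):
            b = (lam * u) ** 2
            theta += 0.5 * (n * math.atan(lam * u) + d * lam * u / (1.0 + b))
            log_rho += 0.25 * n * math.log1p(b) + 0.5 * d * b / (1.0 + b)
        return math.sin(theta) / (u * math.exp(log_rho))

    # Choose the upper limit U so that the neglected tail is at most tol:
    # tail <= U^(-N/2) / (pi * (N/2) * prod |lambda_j|^(n_j/2)), N = sum of dofs.
    log_scale = sum(0.5 * n * math.log(abs(lam)) for lam, n in zip(lambdas, dofs))
    upper = math.exp(-(math.log(math.pi * half_dof * tol) + log_scale) / half_dof)

    # Composite Simpson rule on [0, upper].
    h = upper / steps
    total = integrand(0.0) + integrand(upper)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * integrand(i * h)
    return 0.5 - (total * h / 3.0) / math.pi
```

For the ratio of quadratic forms, one would call `imhof_cdf(0.0, ...)` with the non-zero eigenvalues of \(\boldsymbol{\Sigma}^{1/2}(\boldsymbol{N}_{1}-q_{0}\boldsymbol{N}_{2})\boldsymbol{\Sigma}^{1/2}\), their multiplicities, and the corresponding non-centrality parameters. A fixed-step rule is used here only for transparency; an adaptive quadrature routine would be the more robust choice when some \(|\lambda_{j}|\) are very small.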

2.1 Goodness of Fit Statistic \(R^{2}\)

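For the linear regression model \(\boldsymbol{y}=\boldsymbol{X}\boldsymbol{\beta}+\boldsymbol{u}\), where \(\boldsymbol{y}=(y_{1},\ldots,y_{n})^{\prime}\) is an \(n\times 1\) vector of observations on the dependent variable, \(\boldsymbol{X}=(\boldsymbol{x}_{1},\ldots,\boldsymbol{x}_{n})^{\prime}\) is an \(n\times k\) nonstochastic matrix of covariates (including a constant term) with coefficient vector \(\boldsymbol{\beta}\), and \(\boldsymbol{u}=(u_{1},\ldots,u_{n})^{\prime}\) collects normally distributed error terms, a goodness of fit statistic is

\[R^{2}=\frac{\sum_{i=1}^{n}(\hat{y}_{i}-\bar{y})^{2}}{\sum_{i=1}^{n}(y_{i}-\bar{y})^{2}}=\frac{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{y}}, \tag{3}\]

where \(\bar {y}=n^{-1}\sum _{i=1}^{n}y_{i}\), \(\hat{y}_{i}=\boldsymbol {x}_{i}^{\prime }\hat {\boldsymbol {\beta }}\), and \(\boldsymbol{P}=\boldsymbol{X}(\boldsymbol{X}^{\prime}\boldsymbol{X})^{-1}\boldsymbol{X}^{\prime}\). We can thus evaluate the distribution of \(R^{2}\) with \(\boldsymbol{N}_{1}=\boldsymbol{M}_{0}\boldsymbol{P}\) and \(\boldsymbol{N}_{2}=\boldsymbol{M}_{0}\) by applying (2).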

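Denote \(\boldsymbol{M}=\boldsymbol{I}-\boldsymbol{P}\) and \(\boldsymbol{P}_{0}=n^{-1}\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}\). Because \(\boldsymbol{X}\) contains a constant term, \(\boldsymbol{P}\boldsymbol{\iota}=\boldsymbol{\iota}\) and hence \(\boldsymbol{P}_{0}\boldsymbol{P}=\boldsymbol{P}\boldsymbol{P}_{0}=\boldsymbol{P}_{0}\). Then, we can put \(\boldsymbol{N}=\boldsymbol{M}_{0}\boldsymbol{P}-a\boldsymbol{M}_{0}=\boldsymbol{M}_{0}((1-a)\boldsymbol{P}-a\boldsymbol{M})=\boldsymbol{P}+(a-1)\boldsymbol{P}_{0}-a\boldsymbol{I}\), where \(a\) is the point at which the CDF of \(R^{2}\) is evaluated. Note that \(\boldsymbol{P}\) is idempotent with eigenvalues 1 (of multiplicity \(k\)) and 0 (of multiplicity \(n-k\)), and \(\boldsymbol{P}_{0}\) is also idempotent with eigenvalues 1 (of multiplicity 1) and 0 (of multiplicity \(n-1\)). Since \(\boldsymbol{P}_{0}\boldsymbol{v}=(\boldsymbol{P}_{0}\boldsymbol{P})\boldsymbol{v}=\boldsymbol{P}_{0}(\boldsymbol{P}\boldsymbol{v})\) for any conformable vector \(\boldsymbol{v}\), we see that if \(\boldsymbol{v}\) is an eigenvector of \(\boldsymbol{P}\) associated with eigenvalue 0, then it must also be an eigenvector of \(\boldsymbol{P}_{0}\) corresponding to its eigenvalue 0. There are \(n-k\) linearly independent such vectors. Denote them by \(\boldsymbol{v}_{i}\), \(i=1,\ldots,n-k\). Further, \(\boldsymbol{N}\boldsymbol{v}_{i}=[\boldsymbol{P}+(a-1)\boldsymbol{P}_{0}-a\boldsymbol{I}]\boldsymbol{v}_{i}=[0+(a-1)\cdot 0-a\cdot 1]\boldsymbol{v}_{i}=-a\cdot\boldsymbol{v}_{i}\), implying that \(\boldsymbol{N}\) has eigenvalue \(-a\) with the corresponding eigenvectors \(\boldsymbol{v}_{i}\). Similarly, if \(\boldsymbol{w}\) is an eigenvector of \(\boldsymbol{P}_{0}\) associated with eigenvalue 1, then it is also an eigenvector of \(\boldsymbol{P}\) corresponding to its eigenvalue 1, and \(\boldsymbol{N}\boldsymbol{w}=[\boldsymbol{P}+(a-1)\boldsymbol{P}_{0}-a\boldsymbol{I}]\boldsymbol{w}=[1+(a-1)\cdot 1-a\cdot 1]\boldsymbol{w}=0\cdot\boldsymbol{w}\), implying that \(\boldsymbol{N}\) has eigenvalue 0 with a corresponding eigenvector \(\boldsymbol{w}\). Further, \(\boldsymbol{v}_{i}\) and \(\boldsymbol{w}\) are linearly independent. Since \(\boldsymbol{N}\), \(\boldsymbol{P}\), and \(\boldsymbol{P}_{0}\) are all symmetric matrices, their eigenvectors span \(\mathbb {R}^{n}\) (see page 179, Exercise 7.48 of Abadir and Magnus 2005). Thus, there must exist \(k-1\) linearly independent vectors \(\boldsymbol {z}_{j}\in \mathbb {R}^{n}\), \(j=1,\ldots,k-1\) (also linearly independent of \(\boldsymbol{v}_{i}\) and \(\boldsymbol{w}\)), that are eigenvectors of \(\boldsymbol{N}\), \(\boldsymbol{P}\), and \(\boldsymbol{P}_{0}\). The eigenvectors \(\boldsymbol{z}_{j}\) correspond to eigenvalue 1 of \(\boldsymbol{P}\) since \(\boldsymbol{z}_{j}\) and \(\boldsymbol{v}_{i}\) are linearly independent. They also correspond to eigenvalue 0 of \(\boldsymbol{P}_{0}\) since \(\boldsymbol{z}_{j}\) and \(\boldsymbol{w}\) are linearly independent. As such, \(\boldsymbol{N}\boldsymbol{z}_{j}=[\boldsymbol{P}+(a-1)\boldsymbol{P}_{0}-a\boldsymbol{I}]\boldsymbol{z}_{j}=[1+(a-1)\cdot 0-a\cdot 1]\boldsymbol{z}_{j}=(1-a)\cdot\boldsymbol{z}_{j}\), implying that \(\boldsymbol{N}\) has eigenvalue \(1-a\) with the corresponding eigenvectors \(\boldsymbol{z}_{j}\).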
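The eigen-structure derived above can be checked numerically on a toy design. The sketch below is illustrative only: the regressors `x1`, `x2`, the vector `y`, and the value `a = 0.4` are made-up numbers, and the helper names are hypothetical. It applies \(\boldsymbol{N}=\boldsymbol{P}+(a-1)\boldsymbol{P}_{0}-a\boldsymbol{I}\) to a residual vector, to \(\boldsymbol{\iota}\), and to a demeaned regressor, using Gram–Schmidt projections instead of forming the \(n\times n\) matrices.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def orthogonal_basis(columns):
    """Gram-Schmidt: orthogonal vectors spanning the column space."""
    basis = []
    for col in columns:
        v = list(col)
        for b in basis:
            c = dot(v, b) / dot(b, b)
            v = [vi - c * bi for vi, bi in zip(v, b)]
        basis.append(v)
    return basis


def project(v, basis):
    """P v: orthogonal projection of v onto span(basis), basis orthogonal."""
    out = [0.0] * len(v)
    for b in basis:
        c = dot(v, b) / dot(b, b)
        out = [o + c * bi for o, bi in zip(out, b)]
    return out


def apply_N(v, basis, a):
    """N v with N = P + (a - 1) P0 - a I, where P0 v = mean(v) * iota."""
    mean = sum(v) / len(v)
    return [p + (a - 1.0) * mean - a * vi for p, vi in zip(project(v, basis), v)]


# Toy design with n = 6 and k = 3 (constant plus two made-up regressors).
n, a = 6, 0.4
iota = [1.0] * n
x1 = [0.5, -1.0, 2.0, 0.3, -0.7, 1.1]
x2 = [1.0, 0.2, -0.4, 2.5, 0.0, -1.3]
basis = orthogonal_basis([iota, x1, x2])

y = [1.0, 3.0, -2.0, 0.5, 4.0, 2.0]
v = [yi - pi for yi, pi in zip(y, project(y, basis))]  # residual, so P v = 0
w = iota                                               # P w = w and P0 w = w
z = basis[1]                                           # demeaned x1: P z = z, P0 z = 0

Nv, Nw, Nz = apply_N(v, basis, a), apply_N(w, basis, a), apply_N(z, basis, a)
```

Here `Nv` equals `-a` times `v`, `Nw` is the zero vector, and `Nz` equals `(1 - a)` times `z`, in line with the eigenvalues \(-a\), 0, and \(1-a\).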

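Given that \(\boldsymbol{N}=\boldsymbol{M}_{0}((1-a)\boldsymbol{P}-a\boldsymbol{M})\) has two non-zero eigenvalues, \(1-a\) and \(-a\), with the corresponding multiplicities \(k-1\) and \(n-k\), respectively, it is convenient for us to rewrite

\[R^{2}=\frac{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}+\boldsymbol{y}^{\prime}\boldsymbol{M}\boldsymbol{y}}. \tag{4}\]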

If the error terms are independent and identically distributed (i.i.d.) normal with variance \(\sigma _{u}^{2}\), then \(\boldsymbol {y}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {y}/\sigma _{u}^{2}\sim \chi _{k-1}^{2}(\boldsymbol {\beta }^{\prime }\boldsymbol {X}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta }/\sigma _{u}^{2})\), \(\boldsymbol {y}^{\prime }\boldsymbol {M}\boldsymbol {y}/\sigma _{u}^{2}\sim \chi _{n-k}^{2}(0)\), and they are independent of each other. As such, \(R^{2}\) follows a singly non-central beta distribution (see Koerts and Abrahamse 1969), and its distribution takes on the following form:

\[\Pr(R^{2}\leq a)=\sum_{j=0}^{\infty}\frac{e^{-\delta_{1}/2}(\delta_{1}/2)^{j}}{j!}\cdot\frac{I\left(a\,\middle|\,\frac{k-1}{2}+j,\frac{n-k}{2}\right)}{B\left(\frac{k-1}{2}+j,\frac{n-k}{2}\right)}, \tag{5}\]

where \(I(x|a,b)=\int _{0}^{x}z^{a-1}(1-z)^{b-1}\mathrm {d}z\) is the incomplete beta function with parameters \(a\) and \(b\), \(B(a,b)=I(1|a,b)\) is the beta function, and \(\delta _{1}=\boldsymbol {\beta }^{\prime }\boldsymbol {X}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta }/\sigma _{u}^{2}\). Alternatively, the distribution function can be calculated by (2) with \(\lambda_{1}=1-a\), \(\lambda_{2}=-a\), \(n_{1}=k-1\), \(n_{2}=n-k\), \(\delta_{1}\) as above, and \(\delta_{2}=0\) (see Note 2).
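As a cross-check on the non-central beta route, the CDF of \(R^{2}\) under normal errors can be written in the standard way as a Poisson-weighted sum of central beta CDFs with shape parameters \((k-1)/2+j\) and \((n-k)/2\). The sketch below is an illustration under that standard representation, not the authors' code. The names `incomplete_beta` and `r2_cdf` are hypothetical, and the plain Simpson rule assumes a bounded integrand, that is, \(k\geq 3\) and \(n-k\geq 2\).

```python
import math


def incomplete_beta(x, a, b, steps=2000):
    """I(x | a, b) = int_0^x z^(a-1) (1-z)^(b-1) dz by Simpson's rule.

    Assumes a >= 1 and b >= 1 so that the integrand is bounded; steps even.
    """
    if x <= 0.0:
        return 0.0
    x = min(x, 1.0)

    def f(z):
        return z ** (a - 1.0) * (1.0 - z) ** (b - 1.0)

    h = x / steps
    total = f(0.0) + f(x)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * f(i * h)
    return total * h / 3.0


def r2_cdf(x, n, k, delta, max_terms=500):
    """Pr(R^2 <= x) with non-centrality delta = beta'X'M0 X beta / sigma_u^2."""
    a0, b0 = 0.5 * (k - 1), 0.5 * (n - k)
    half = 0.5 * delta
    cdf = 0.0
    for j in range(max_terms):
        # Poisson(delta / 2) weight of the j-th mixture component.
        if half > 0.0:
            weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1.0))
        else:
            weight = 1.0 if j == 0 else 0.0
        if j > half and weight < 1e-16:
            break
        log_beta = math.lgamma(a0 + j) + math.lgamma(b0) - math.lgamma(a0 + j + b0)
        cdf += weight * incomplete_beta(x, a0 + j, b0) / math.exp(log_beta)
    return cdf
```

With \(n=5\) and \(k=3\) both shape parameters start at 1, so the central case is uniform and the non-central case has the closed form \(x\exp\{-(\delta/2)(1-x)\}\), which gives a convenient sanity check.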

2.2 Squared Sharpe Ratio

In financial portfolio management, a routine task is to assess a portfolio’s performance. The most widely used metric may be the Sharpe ratio, introduced by Sharpe (1966). Recently, Barillas and Shanken (2017) discussed how to compare asset pricing models under the classic Sharpe metric and showed that the quadratic form in the investment alphas is equivalent to the improvement in the squared Sharpe ratio when investment in other assets is permitted in addition to the given model’s factors.

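The squared Sharpe ratio of an asset is defined as \(s=\mu^{2}/\sigma^{2}\), where \(\mu\) is the mean of the asset’s excess return and \(\sigma^{2}\) is its variance. Given a sample \(\boldsymbol{y}=(y_{1},\ldots,y_{n})^{\prime}\) of excess returns, the sample squared Sharpe ratio is

\[\hat{s}=\frac{\hat{\mu}^{2}}{\hat{\sigma}^{2}}=\frac{\boldsymbol{y}^{\prime}(\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}/n^{2})\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{y}/(n-1)}, \tag{6}\]

where \(\hat{\mu}=n^{-1}\boldsymbol{\iota}^{\prime}\boldsymbol{y}\) and \(\hat{\sigma}^{2}=(n-1)^{-1}\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{y}\), and (2) can be used to evaluate its exact finite sample distribution with \(\boldsymbol{N}_{1}=\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}/n^{2}\) and \(\boldsymbol{N}_{2}=\boldsymbol{M}_{0}/(n-1)\).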
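The quadratic-form representation can be verified directly. The short sketch below builds \(\boldsymbol{N}_{1}=\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}/n^{2}\) and \(\boldsymbol{N}_{2}=\boldsymbol{M}_{0}/(n-1)\) explicitly and compares the ratio of quadratic forms with the squared sample mean divided by the sample variance. The return series and the helper names are made up for illustration.

```python
import statistics


def quad_form(y, N):
    """y' N y for a vector y and a square matrix N (list of rows)."""
    n = len(y)
    return sum(y[i] * N[i][j] * y[j] for i in range(n) for j in range(n))


def squared_sharpe_matrices(n):
    """N1 = iota iota' / n^2 and N2 = M0 / (n - 1)."""
    N1 = [[1.0 / n ** 2] * n for _ in range(n)]
    N2 = [[((1.0 if i == j else 0.0) - 1.0 / n) / (n - 1) for j in range(n)]
          for i in range(n)]
    return N1, N2


# Hypothetical excess returns, for illustration only.
y = [0.02, -0.01, 0.03, 0.015, -0.005, 0.01]
N1, N2 = squared_sharpe_matrices(len(y))

s_hat = quad_form(y, N1) / quad_form(y, N2)               # ratio of quadratic forms
direct = statistics.mean(y) ** 2 / statistics.variance(y)  # mean^2 / sample variance
```

The two numbers agree up to floating-point error, which is all that is needed to hand \(\boldsymbol{N}_{1}\) and \(\boldsymbol{N}_{2}\) to a routine that evaluates (2).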

When the excess return series is i.i.d. normal, the sample Sharpe ratio \(\hat {\xi }=\hat {\mu }/\hat {\sigma }\), when scaled by \(\sqrt {n}\), is equivalent to a non-central t random variable with degrees of freedom \(n-1\) and non-centrality parameter \(\sqrt {n}\xi \), where \(\xi=\mu/\sigma\) (see Note 3). As such, the sample squared Sharpe ratio (scaled by \(n\)) follows a singly non-central F-distribution, \(F_{1,n-1}(ns,0)\) (see Note 4). So we have

\[\Pr(\hat{s}\leq a)=\Pr\left(F_{1,n-1}(ns,0)\leq na\right). \tag{7}\]

2.3 Squared Coefficient of Variation

The coefficient of variation (CV) has long been used in the literature as one of the income inequality indexes across regions or over time. It is defined as the ratio of the standard deviation of the variable of interest (e.g., household income) to its mean value, namely, \(\sigma/\mu\). A closely related measure is the squared CV, usually called the coefficient of variation squared (CV2), denoted by \(\alpha=\sigma^{2}/\mu^{2}\). When the mean value of the variable of interest is positive, CV and CV2 are monotonic transformations of each other. As neither the population mean nor the standard deviation is known, in practice, we usually use their sample analogues to calculate CV and CV2.

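Specifically, the sample CV2 is defined as

\[\hat{\alpha}=\frac{\hat{\sigma}^{2}}{\hat{\mu}^{2}}=\frac{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{y}/(n-1)}{\boldsymbol{y}^{\prime}(\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}/n^{2})\boldsymbol{y}}. \tag{8}\]

Obviously, we can set \(\boldsymbol{N}_{1}=\boldsymbol{M}_{0}/(n-1)\) and \(\boldsymbol{N}_{2}=\boldsymbol{\iota}\boldsymbol{\iota}^{\prime}/n^{2}\) in (2) to evaluate the exact distribution \(\Pr (\hat {\alpha }\leq a)\).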

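If we further assume that the data are i.i.d. normal, then from the discussion in the previous subsection, it is obvious that the distribution of \(\hat {\alpha }\) (scaled by \(n^{-1}\)) is \(F_{n-1,1}(0,ns)\), where \(s=1/\alpha\). This is a special case of the double non-central F-distribution, and since it is the reciprocal of \(F_{1,n-1}(ns,0)\), we have

\[\Pr(\hat{\alpha}\leq a)=\Pr\left(F_{n-1,1}(0,ns)\leq a/n\right)=1-\Pr\left(F_{1,n-1}(ns,0)\leq n/a\right),\qquad a>0. \tag{9}\]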

2.4 The Durbin–Watson Statistic and Moran’s I

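For testing the first-order autocorrelation in the error term in the classical linear regression model, the Durbin–Watson statistic for testing \(H_{0}:\rho=0\) against \(H_{1}:\rho\neq 0\) in \(u_{i}=\rho u_{i-1}+e_{i}\), where \(e_{i}\) is an i.i.d. innovation term, is calculated as

\[d=\frac{\sum_{i=2}^{n}(\hat{u}_{i}-\hat{u}_{i-1})^{2}}{\sum_{i=1}^{n}\hat{u}_{i}^{2}}=\frac{\hat{\boldsymbol{u}}^{\prime}\boldsymbol{A}\hat{\boldsymbol{u}}}{\hat{\boldsymbol{u}}^{\prime}\hat{\boldsymbol{u}}}=\frac{\boldsymbol{y}^{\prime}\boldsymbol{M}\boldsymbol{A}\boldsymbol{M}\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{M}\boldsymbol{y}}, \tag{10}\]

where \(\hat {\boldsymbol {u}}=(\hat {u}_{1},\ldots ,\hat {u}_{n})^{\prime }\), \(\hat{u}_{i}=y_{i}-\hat {y}_{i}\), is the residual vector, and \(\boldsymbol{A}\) is a tri-diagonal matrix with \(-1\) on the super- and sub-diagonal positions, \(a_{11}=a_{nn}=1\), and \(a_{ii}=2\), \(i=2,\ldots,n-1\). So setting \(\boldsymbol{N}_{1}=\boldsymbol{M}\boldsymbol{A}\boldsymbol{M}\) and \(\boldsymbol{N}_{2}=\boldsymbol{M}\) in (2), we can evaluate the exact distribution of the Durbin–Watson statistic. Srivastava (1987) derived the asymptotic distribution of the Durbin–Watson statistic under the null hypothesis \(\boldsymbol{u}\sim \text{N}(\boldsymbol{0},\sigma _{u}^{2}\boldsymbol {I})\) as \([(n-k)d-{\mathrm {tr}}(\boldsymbol {A}\boldsymbol {M})]/\sqrt {2\mathrm {tr}(\boldsymbol {A}\boldsymbol {M})^{2}}\rightarrow \mathrm {N}(0,1)\).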
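The role of the tri-diagonal matrix \(\boldsymbol{A}\) is easy to see in a few lines of code. The sketch below (with hypothetical helper names) builds \(\boldsymbol{A}\) with corner entries 1, interior diagonal entries 2, and \(-1\) on the two off-diagonals, and checks that \(\hat{\boldsymbol{u}}^{\prime}\boldsymbol{A}\hat{\boldsymbol{u}}/\hat{\boldsymbol{u}}^{\prime}\hat{\boldsymbol{u}}\) reproduces the usual sum-of-squared-differences form of the Durbin–Watson statistic for a given residual vector.

```python
def durbin_watson_matrix(n):
    """Tri-diagonal A: 1 at both corners, 2 on the interior diagonal, -1 off it."""
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        A[i][i] = 1.0 if i in (0, n - 1) else 2.0
        if i + 1 < n:
            A[i][i + 1] = A[i + 1][i] = -1.0
    return A


def dw_quadratic(resid):
    """Durbin-Watson statistic as the ratio u'Au / u'u."""
    n = len(resid)
    A = durbin_watson_matrix(n)
    num = sum(resid[i] * A[i][j] * resid[j] for i in range(n) for j in range(n))
    return num / sum(e * e for e in resid)


def dw_differences(resid):
    """Durbin-Watson statistic from squared first differences of the residuals."""
    num = sum((resid[i] - resid[i - 1]) ** 2 for i in range(1, len(resid)))
    return num / sum(e * e for e in resid)


d_alt = dw_quadratic([1.0, -1.0, 1.0, -1.0])  # alternating residuals
```

For the alternating residual vector used above, both forms give \(d=3\), reflecting strong negative first-order correlation.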

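For spatial data, Moran’s \(I\) statistic is used to test for possible correlation across space. It is calculated as

\[I=\frac{n}{\boldsymbol{\iota}^{\prime}\boldsymbol{W}\boldsymbol{\iota}}\cdot\frac{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{W}\boldsymbol{M}_{0}\boldsymbol{y}}{\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{y}}, \tag{11}\]

where \(\boldsymbol{W}\) is the so-called spatial weights matrix with zeros on the diagonal (see Note 5). Again, its exact distribution can be straightforwardly evaluated by (2).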
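For a concrete feel of Moran's \(I\), the sketch below computes the statistic for four locations on a line with binary contiguity weights. It assumes the common normalization \(n/\boldsymbol{\iota}^{\prime}\boldsymbol{W}\boldsymbol{\iota}\) in front of the ratio of quadratic forms in the demeaned data; the function name, the weights matrix, and the data are illustrative choices, not taken from the paper.

```python
def morans_i(y, W):
    """Moran's I = (n / sum of weights) * z'Wz / z'z with z the demeaned data."""
    n = len(y)
    ybar = sum(y) / n
    z = [yi - ybar for yi in y]          # z = M0 y
    s0 = sum(sum(row) for row in W)      # iota' W iota
    cross = sum(W[i][j] * z[i] * z[j] for i in range(n) for j in range(n))
    return (n / s0) * cross / sum(zi * zi for zi in z)


# Four locations on a line; neighbours share a border (zero diagonal).
W = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
i_stat = morans_i([1.0, 2.0, 3.0, 4.0], W)
```

A smoothly increasing series gives a positive value (here 1/3), as neighbouring deviations from the mean tend to share the same sign.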

3 Illustration

In this section, we illustrate the performance of the exact result via (2) in comparison with the asymptotic distributional results. We focus on the statistic \(R^{2}\). As discussed in Xu (2014), in the statistical and public health communities, reliable inference on \(R^{2}\) has attracted a lot of attention. The literature on statistical inference of \(R^{2}\) has been scarce; however, Xu (2014) developed the asymptotic distribution of \(R^{2}\) in linear regression models with possibly nonnormal errors and discussed the F-distribution approximation with degrees of freedom adjustment. In Xu’s (2014) setup, the data are demeaned such that \(\bar {y}=0\). Here, we relax this restriction. We begin with the general case when the error distribution may be nonnormal. In what follows, let \(\gamma_{1}\) and \(\gamma_{2}\) denote the skewness and excess kurtosis coefficients of the error distribution. Obviously, when the error is normal, \(\gamma_{1}=\gamma_{2}=0\).

Recall that we have written \(R^{2}=\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}/(\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}+\boldsymbol{y}^{\prime}\boldsymbol{M}\boldsymbol{y})\). Below we present the asymptotic distributions of \(R^{2}\) and two monotonic transformations of it.

Theorem 1

For the linear regression model \(\boldsymbol{y}=\boldsymbol{X}\boldsymbol{\beta}+\boldsymbol{u}\), where \(\boldsymbol{X}\) is nonstochastic and \(\boldsymbol{u}\) consists of i.i.d. errors, \(R^{2}\), \(R^{2}/(1-R^{2})\), and \(\log (R^{2}/(1-R^{2}))\) have the following asymptotic distributions:

Proof

By substitution, \(\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}=(\boldsymbol{X}\boldsymbol{\beta}+\boldsymbol{u})^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}(\boldsymbol{X}\boldsymbol{\beta}+\boldsymbol{u})=\boldsymbol{\beta}^{\prime}\boldsymbol{X}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\boldsymbol{\beta}+\boldsymbol{u}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{u}+2\boldsymbol{u}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\boldsymbol{\beta}\). Using results on the moments of quadratic forms in nonnormal random vectors (see, for example, Bao and Ullah 2010), we have \(\mathrm {E}(\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {u})=\sigma _{u}^{2}\mathrm {tr}(\boldsymbol {M}_{0}\boldsymbol {P})=\sigma _{u}^{2}(k-n^{-1}\boldsymbol {\iota}^{\prime }\boldsymbol {P}\boldsymbol {\iota})\) and \(\mathrm {Var}(\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {u})={\sigma _{u}^{4}}[\gamma _{2}\mathrm {tr}(\boldsymbol {M}_{0}\boldsymbol {P}\odot \boldsymbol {M}_{0}\boldsymbol {P})+2\mathrm {tr}(\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {M}_{0}\boldsymbol {P})]\), where \(\odot\) denotes the Hadamard product operator. Since the idempotent matrix \(\boldsymbol{P}\) has elements of order \(O(n^{-1})\) and \(\boldsymbol{M}_{0}\) is uniformly bounded in row and column sums, we can write \(\mathrm {Var}(\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {u})=2\sigma _{u}^{4}\mathrm {tr}(\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {M}_{0}\boldsymbol {P})+o(1)=2\sigma _{u}^{4}[\mathrm {tr}(\boldsymbol {P})-2n^{-1}\boldsymbol {\iota}^{\prime }\boldsymbol {P}\boldsymbol {P}\boldsymbol {\iota}+n^{-2}(\boldsymbol {\iota}^{\prime }\boldsymbol {P}\boldsymbol {\iota})^{2}]+o(1)=O(1)\). Thus we can claim \(n^{-1/2}\boldsymbol{u}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{u}=o_{P}(1)\). Using the central limit theorem on linear and quadratic forms in random vectors (see Kelejian and Prucha 2001), we have \(n^{-1/2}\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta }\overset {d}{\rightarrow }\mathrm {N}(0,\sigma _{u}^{2}\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta })\), where \(\boldsymbol{\Sigma}=\lim_{n\rightarrow\infty}n^{-1}\boldsymbol{X}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\).
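So \(n^{-1/2}(\boldsymbol {y}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {y}-\boldsymbol {\beta }^{\prime }\boldsymbol {X}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta })=2n^{-1/2}\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta }+o_{P}(1)\overset {d}{\rightarrow }\mathrm {N}(0,4\sigma _{u}^{2}\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta })\). Similarly, \(n^{-1/2}(\boldsymbol {u}^{\prime }\boldsymbol {M}\boldsymbol {u}-n\sigma _{u}^{2})=n^{-1/2}(\boldsymbol {u}^{\prime }\boldsymbol {u}-n\sigma _{u}^{2})+o_{P}(1)\overset {d}{\rightarrow }\mathrm {N}(0,\sigma _{u}^{4}(2+\gamma _{2}))\). Further, \(\mathrm{Cov}(\boldsymbol{u}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\boldsymbol{\beta},\boldsymbol{u}^{\prime}\boldsymbol{u})=\mathrm{E}(\boldsymbol{\beta}^{\prime}\boldsymbol{X}^{\prime}\boldsymbol{M}_{0}\boldsymbol{u}\boldsymbol{u}^{\prime}\boldsymbol{u})=\gamma_{1}\sigma_{u}^{3}\boldsymbol{\beta}^{\prime}\boldsymbol{X}^{\prime}\boldsymbol{M}_{0}\boldsymbol{\iota}=0\). Following Kelejian and Prucha (2001) again, we can show that any linear combination of \(n^{-1/2}\boldsymbol{u}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\boldsymbol{\beta}\) and \(n^{-1/2}(\boldsymbol {u}^{\prime }\boldsymbol {u}-n\sigma _{u}^{2})\) (say, \(l_{1}n^{-1/2}\boldsymbol {u}^{\prime }\boldsymbol {M}_{0}\boldsymbol {X}\boldsymbol {\beta }+l_{2}n^{-1/2}(\boldsymbol {u}^{\prime }\boldsymbol {u}-n\sigma _{u}^{2})\), where \(l_{1}\) and \(l_{2}\) are non-zero constants) is asymptotically normal (\(\mathrm {N}(0,l_{1}^{2}\sigma _{u}^{2}\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }+l_{2}^{2}\sigma _{u}^{4}(2+\gamma _{2}))\)). Therefore,

\[n^{-1/2}\begin{pmatrix}\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}-\boldsymbol{\beta}^{\prime}\boldsymbol{X}^{\prime}\boldsymbol{M}_{0}\boldsymbol{X}\boldsymbol{\beta}\\ \boldsymbol{u}^{\prime}\boldsymbol{M}\boldsymbol{u}-n\sigma_{u}^{2}\end{pmatrix}\overset{d}{\rightarrow}\mathrm{N}\left(\boldsymbol{0},\begin{pmatrix}4\sigma_{u}^{2}\boldsymbol{\beta}^{\prime}\boldsymbol{\Sigma}\boldsymbol{\beta} & 0\\ 0 & \sigma_{u}^{4}(2+\gamma_{2})\end{pmatrix}\right).\]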

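The asymptotic distributions of \(R^{2}=\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}/(\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}+\boldsymbol{u}^{\prime}\boldsymbol{M}\boldsymbol{u})\), \(R^{2}/(1-R^{2})=\boldsymbol{y}^{\prime}\boldsymbol{M}_{0}\boldsymbol{P}\boldsymbol{y}/\boldsymbol{u}^{\prime}\boldsymbol{M}\boldsymbol{u}\), and \(\log (R^{2}/(1-R^{2}))=\log (\boldsymbol {y}^{\prime }\boldsymbol {M}_{0}\boldsymbol {P}\boldsymbol {y}/\boldsymbol {u}^{\prime }\boldsymbol {M}\boldsymbol {u})\) then follow immediately from the delta method (note that \(\boldsymbol{y}^{\prime}\boldsymbol{M}\boldsymbol{y}=\boldsymbol{u}^{\prime}\boldsymbol{M}\boldsymbol{u}\) because \(\boldsymbol{M}\boldsymbol{X}=\boldsymbol{0}\)). □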
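To make the delta-method step concrete, the following is a sketch of the variance calculation for the two transformations \(R^{2}/(1-R^{2})\) and \(\log(R^{2}/(1-R^{2}))\). It takes as given the independent normal limits stated in the proof, with asymptotic variances \(4\sigma_{u}^{2}\boldsymbol{\beta}^{\prime}\boldsymbol{\Sigma}\boldsymbol{\beta}\) for the numerator and \(\sigma_{u}^{4}(2+\gamma_{2})\) for the denominator. The symbols \(\tau=\boldsymbol{\beta}^{\prime}\boldsymbol{\Sigma}\boldsymbol{\beta}\) and \(\kappa=\tau/\sigma_{u}^{2}\) are notation introduced here for brevity, and the displayed variances are our own working under these assumptions rather than a restatement of the theorem.

\[g(A,B)=\frac{A}{B},\qquad \left.\frac{\partial g}{\partial A}\right|_{(\tau,\sigma_{u}^{2})}=\frac{1}{\sigma_{u}^{2}},\qquad \left.\frac{\partial g}{\partial B}\right|_{(\tau,\sigma_{u}^{2})}=-\frac{\tau}{\sigma_{u}^{4}},\]

\[\mathrm{AVar}\left(\frac{R^{2}}{1-R^{2}}\right)=\frac{4\sigma_{u}^{2}\tau}{\sigma_{u}^{4}}+\frac{\tau^{2}}{\sigma_{u}^{8}}\,\sigma_{u}^{4}(2+\gamma_{2})=4\kappa+(2+\gamma_{2})\kappa^{2},\]

\[\mathrm{AVar}\left(\log\frac{R^{2}}{1-R^{2}}\right)=\frac{4\kappa+(2+\gamma_{2})\kappa^{2}}{\kappa^{2}}=\frac{4}{\kappa}+2+\gamma_{2}.\]

Under these assumptions the first variance increases in \(\kappa\) while the second decreases in \(\kappa\) and diverges as \(\kappa\rightarrow 0\), which matches the qualitative behavior of the two transformations as the signal to noise ratio varies.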

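Note that \(R^{2}\), \(R^{2}/(1-R^{2})\), and \(\log (R^{2}/(1-R^{2}))\) are monotonic transformations of each other. Thus, for \(0<a<1\),

\[\Pr(R^{2}\leq a)=\Pr\left(\frac{R^{2}}{1-R^{2}}\leq\frac{a}{1-a}\right)=\Pr\left(\log\frac{R^{2}}{1-R^{2}}\leq\log\frac{a}{1-a}\right).\]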

When the error is normally distributed, by using (2) and setting \(\boldsymbol{N}_{1}=\boldsymbol{M}_{0}\boldsymbol{P}\) and \(\boldsymbol{N}_{2}=\boldsymbol{M}_{0}\), we can calculate the exact distribution of \(R^{2}\) and, equivalently, that of \(R^{2}/(1-R^{2})\) or \(\log (R^{2}/(1-R^{2}))\), in light of the above relationship. The asymptotic distribution, however, crucially depends on which statistic we are using. From (13)–(14), we see that when the signal to noise ratio, measured by \(\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }/\sigma _{u}^{2}\), increases, the asymptotic distribution of \(R^{2}/(1-R^{2})\) becomes more dispersed, whereas the asymptotic distribution of \(\log (R^{2}/(1-R^{2}))\) becomes more concentrated. Its effect on the asymptotic distribution of \(R^{2}\) is ambiguous, and it depends on the strength of the signal to noise ratio (see Note 6). An interesting extreme case is \(\boldsymbol{\beta}=\boldsymbol{0}\). In this case, while \(R^{2}\) and \(R^{2}/(1-R^{2})\) have well-defined asymptotic distributions, \(\log (R^{2}/(1-R^{2}))\) does not have a properly defined asymptotic distribution (see Note 7). The exact distribution is free of this kind of pitfall and can be calculated regardless of the strength of the signal to noise ratio.

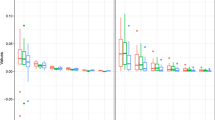

Figures 1, 2, 3, and 4 plot the cumulative distribution functions of the three statistics, comparing the true, exact, and asymptotic distributions for sample sizes 10, 20, 50, and 100, with the true distribution based on averages across 100,000 simulations (see Note 8). The data generating process is \(y=\beta_{0}+\beta_{1}x_{1}+\beta_{2}x_{2}+u\), where \(x_{1}\) and \(x_{2}\) are generated as two independent i.i.d. N(0, 4) random variables, and the error term is simulated as i.i.d. \(\mathrm {N}(0,\sigma _{u}^{2})\). We fix \(\boldsymbol{\beta}=(\beta_{0},\beta_{1},\beta_{2})^{\prime}=(1,0.3,0.5)^{\prime}\) and set \(\sigma_{u}=0.1,1,5,10\), corresponding to scenarios from high to low signal to noise ratios. We observe that regardless of the sample size and the signal to noise ratio, the exact distribution matches precisely the true distribution. The asymptotic distributions fare reasonably well even in small samples with \(n=10\) when \(\sigma_{u}=0.1\), corresponding to the situation of a high signal to noise ratio. When \(\sigma_{u}=10\), the asymptotic distributions can be quite far from the exact distribution in small samples. Xu (2014) recommended using \(\log (R^{2}/(1-R^{2}))\) by arguing that its asymptotic distribution is more stable. We see here clearly that this is not necessarily the case, as it depends on the signal to noise ratio.
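The simulation design can be sketched in a few lines of plain Python. This is an illustrative reconstruction under stated assumptions, not the code behind the figures: N(0, 4) is read as variance 4 (standard deviation 2), the regressors are drawn once and then held fixed across replications, and the function names, seed, and number of replications are arbitrary choices. \(R^{2}\) is computed as one minus the residual sum of squares over the total sum of squares, with the residuals obtained by Gram–Schmidt projection on the constant and the regressors.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def r_squared(y, regressors):
    """R^2 of a regression of y on a constant and the given regressor columns."""
    n = len(y)
    basis = []
    for col in [[1.0] * n] + regressors:
        v = list(col)
        for b in basis:                       # orthogonalize against earlier columns
            c = dot(v, b) / dot(b, b)
            v = [vi - c * bi for vi, bi in zip(v, b)]
        basis.append(v)
    resid = list(y)
    for b in basis:                           # resid = M y
        c = dot(resid, b) / dot(b, b)
        resid = [ri - c * bi for ri, bi in zip(resid, b)]
    ybar = sum(y) / n
    tss = sum((yi - ybar) ** 2 for yi in y)   # y' M0 y
    return 1.0 - dot(resid, resid) / tss


def simulate_r2(n, sigma_u, reps, seed=0):
    """Draws of R^2 from y = 1 + 0.3 x1 + 0.5 x2 + u with a fixed design."""
    rng = random.Random(seed)
    x1 = [rng.gauss(0.0, 2.0) for _ in range(n)]   # assumed: variance 4
    x2 = [rng.gauss(0.0, 2.0) for _ in range(n)]
    draws = []
    for _ in range(reps):
        y = [1.0 + 0.3 * a + 0.5 * b + rng.gauss(0.0, sigma_u)
             for a, b in zip(x1, x2)]
        draws.append(r_squared(y, [x1, x2]))
    return draws


def empirical_cdf(draws, a):
    """Share of simulated R^2 values that do not exceed a."""
    return sum(d <= a for d in draws) / len(draws)


draws = simulate_r2(n=10, sigma_u=0.1, reps=200, seed=1)
```

The empirical CDF of `draws` plays the role of the "true" distribution against which the exact and asymptotic CDFs are compared; a much larger number of replications would be needed to reproduce curves of the quality described in the text.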

4 Concluding Remarks

In this paper, we have presented a unified development of the exact distributions of many econometric statistics. These results can be straightforwardly implemented by numerical integration. In the context of the exact distribution of a goodness of fit measure, we numerically demonstrate that the asymptotic distribution can be a poor guide in small samples. The exact distributional results developed in the paper can be readily extended to other econometric and statistical estimators used in practice that can be written as ratios of quadratic forms.

Notes

1. If they are not symmetric, we can simply replace \(\boldsymbol{N}_{1}\) and \(\boldsymbol{N}_{2}\) by \((\boldsymbol {N}_{1}+\boldsymbol {N}_{1}^{\prime })/2\) and \((\boldsymbol {N}_{2}+\boldsymbol {N}_{2}^{\prime })/2\), respectively.

2. The linkage between Imhof’s formula and the non-central F (see the next subsections) and beta distribution functions was discussed in Ennis and Johnson (1993).

3. The connection of the Sharpe ratio to the t-distribution seems to originate in Miller and Gehr’s (1978) note on the bias of the Sharpe ratio.

4. This notation is from the double non-central F-distribution with non-centrality parameters \(\delta_{1}\) and \(\delta_{2}\) and the corresponding degrees of freedom \(d_{1}\) and \(d_{2}\), denoted by \(F_{d_{1},d_{2}}(\delta _{1},\delta _{2})\).

5. If we are interested instead in testing whether the spatial correlation arises from the unobservable error term in a linear regression model, Moran’s \(I\) can be calculated with \(\boldsymbol{M}\) replacing \(\boldsymbol{M}_{0}\) in (11).

6. More specifically, when \(\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }/\sigma _{u}^{2}<1\), as \(\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }/\sigma _{u}^{2}\) goes up, the asymptotic distribution of \(R^{2}\) gets more dispersed. But when \(\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }/\sigma _{u}^{2}>1\), as \(\boldsymbol {\beta }^{\prime }\boldsymbol {\Sigma }\boldsymbol {\beta }/\sigma _{u}^{2}\) goes up, the asymptotic distribution of \(R^{2}\) gets more concentrated.

7. Recall that the null distribution of the F statistic for testing the overall significance of a linear regression, which is proportional to \(R^{2}/(1-R^{2})\), has a well-defined distribution.

8. We never know the true distribution. But we believe that averaging 100,000 simulations should give results very close to the truth. In calculating the exact distributions via (2), we used Matlab’s integral function.

References

Abadir, K. M., & Magnus, J. R. (2005). Matrix algebra. New York: Cambridge University Press.

Anderson, T. W., & Sawa, T. (1973). Distributions of estimates of coefficients of a single equation in a simultaneous system and their asymptotic expansions. Econometrica, 41, 683–714.

Arnold, B. C. (1983). Pareto distributions. Fairland: International Cooperative Publishing House.

Arnold, B. C. (1987). Majorization and the Lorenz curve. New York: Springer.

Arnold, B. C. (2012). On the Amato inequality index. Statistics and Probability Letters, 82, 1504–1506.

Arnold, B. C. (2015). Pareto distributions (2nd ed.). Boca Raton: CRC Press.

Arnold, B. C., & Sarabia, J. M. (2018). Analytic expressions for multivariate Lorenz surfaces. Sankhya A, 80, 84–111.

Arnold, B. C., Robertson, C. A., Brockett, P. L., & Shu, B.-Y. (1987). Generating ordered families of Lorenz curves by strongly unimodal distributions. Journal of Business & Economic Statistics, 5, 305–308.

Bao, Y., & Ullah, A. (2010). Expectation of quadratic forms in normal and nonnormal variables with applications. Journal of Statistical Planning and Inference, 140, 1193–1205.

Bao, Y., Ullah, A., & Wang, Y. (2017). Distribution of the mean reversion estimator in the Ornstein–Uhlenbeck process. Econometric Reviews, 36, 1039–1056.

Barillas, F., & Shanken, J. (2017). Which alpha? Review of Financial Studies, 30, 1316–1338.

Coelho, C. A., & Arnold, B. C. (2014). On the exact and near-exact distributions of the product of generalized Gamma random variables and the generalized variance. Communications in Statistics Theory and Methods, 43, 2007–2033.

Ennis, D. M., & Johnson, N. L. (1993). Noncentral and central chi-square, F and beta distribution functions as special cases of the distribution function of an indefinite quadratic form. Communications in Statistics Theory and Methods, 22, 897–905.

Imhof, J. P. (1961). Computing the distribution of quadratic forms in normal variables. Biometrika, 48, 419–426.

Kelejian, H. H., & Prucha, I. R. (2001). On the asymptotic distribution of the Moran I test statistic with applications. Journal of Econometrics, 104, 219–257.

Koerts, J., & Abrahamse, A. P. J. (1969). On the theory and application of the general linear model. Rotterdam: Universitaire Pers Rotterdam.

Marques, F. J., Coelho, C. A., & Arnold, B. C. (2011). A general near-exact distribution theory for the most common likelihood ratio test statistics used in multivariate analysis. TEST, 20, 180–203.

Miller, R. E., & Gehr, A. K. (1978). Sample size bias and Sharpe’s performance measure: A note. The Journal of Financial and Quantitative Analysis, 13, 943–946.

Nagar, A. L. (1959). The bias and moment matrix of the general k-class estimators of the parameters in simultaneous equations. Econometrica, 27, 575–595.

Phillips, P. C. B. (1980). Finite sample theory and the distributions of alternative estimators of the marginal propensity to consume. Review of Economic Studies, 47, 183–224.

Phillips, P. C. B. (1986). The exact distribution of the Wald statistic. Econometrica, 54, 881–895.

Sharpe, W. F. (1966). Mutual fund performance. The Journal of Business, 39, 119–138.

Srivastava, M. S. (1987). Asymptotic distribution of Durbin-Watson statistic. Economics Letters, 24, 157–160.

Ullah, A. (2004). Finite sample econometrics. New York: Oxford University Press.

Villaseñor, J. A., & Arnold, B. C. (1984). Some examples of fitted general quadratic Lorenz curves. Technical report no. 130, Riverside, CA: Statistics Department, University of California.

Villaseñor, J. A., & Arnold, B. C. (1989). Elliptical Lorenz curves. Journal of Econometrics, 40, 327–338.

Xu, L. (2014). R-squared Inference under Non-normal Error. Dissertation, Department of Statistics, University of Washington.

Acknowledgements

We are grateful to an anonymous referee for very helpful comments.

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bao, Y., Liu, X., Ullah, A. (2021). On the Exact Statistical Distribution of Econometric Estimators and Test Statistics. In: Ghosh, I., Balakrishnan, N., Ng, H.K.T. (eds) Advances in Statistics - Theory and Applications. Emerging Topics in Statistics and Biostatistics . Springer, Cham. https://doi.org/10.1007/978-3-030-62900-7_6

DOI: https://doi.org/10.1007/978-3-030-62900-7_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-62899-4

Online ISBN: 978-3-030-62900-7

eBook Packages: Mathematics and Statistics (R0)