Abstract

In this chapter some ill-posed inverse electromagnetic and power engineering problems are introduced, both at a theoretical introductory and mathematical modelling level and detailed regarding their numerical solving case studies based, by the application of several regularization techniques starting from classical Tikhonov approach up to singular values decomposition procedures. Fredholm integral equation mathematical modelling is presented in the physical definition of the inverse electromagnetic and/or power engineering problems, accompanied by explanations regarding the physical significance.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

The chapter structure is as following: a first section introduces the Fredholm integral equations applied for ill-posed inverse problems including in the modelling of the electromagnetic field problems. Next, the main key performance indicators related to the inverse problems and regularization techniques to solve them are presented. In the electrical engineering domain, a power-flow case study is then introduced as a practical case study application to be numerically solved using regularization of the ill-posed problem. In this respect, three main categories of numerical methods are presented: a section dealing the so-called intermediate methods, a next section of so called special regularization methods and a last section of the chapter presenting an original and simple approach called harmonic regularization numerical method.

The established objectives are: to present how the inverse electromagnetic or power engineering problems are modelled and when their numerical solving, if ill-posed should be addressed with regularization techniques.

The first section introduces the Fredholm integral equation as a mathematical modelling tool. Then, the main key performance indicators in ill-posed inverse problems are presented using the introduction of a power flow case study. The proposed practical application is continued in the next sections, by showing different numerical regularization techniques applied on the problem. The chapter ends with useful conclusions and further proposed actions and contributions.

2 Fredholm Integral Equation

As it was indicated, a series of applications of electric or magnetic field synthesis are modelled using Fredholm Integral Equation, as incorrectly formulated inverse problems.

An integral equation is an equation in which the unknown function appears under the integral. There is no general method for solving integral equation. Their solutions and their existence depend on the particular forms under they appear.

An integral equation is called linear if the unknown function is separated by the operator under the integral, which is applied to it. The general form of a linear integral equation is:

The unknown function is φ(x), the operator function K(x, y) is called core (it can be integral square) and g(y) is called effect function (imposed or evaluated). These functions can also have complex values. The integral equation is considered homogeneous if the effect function g(y) = 0 on the indicated domain, and if g(y) is different from 0, the equation is inhomogeneous. λ is in general a parameter, called own value of the integral equation.

If the integral limits are independent of y, so as to a(y) = a and b(y) = b, express (1) is called Fredholm Integral Equation (2a, 2b). If a(y) = a and b(y) = y, the integral equation is called Volterra type (3a, 3b).

If the unknown function φ(x) appears only under the integral and f(y) = 0, we have an integral equation of the first case (2a, 3a). If f(y) = 1, the integral equation is of the second case (2b, 3b):

In the specific bibliography [1], it is specified that the linear Fredholm Integral Equations appear in the modelling of synthesis problems with causal functions, unknown, of electro/magnetic nature, and the nonlinear ones, in the modelling of synthesis problems with causal functions dependent on the material or nature geometry, the shape of the boundaries of the PIE domain [2].

No linear homogeneous Fredholm integral equation has been identified. Their appearance could mean a formulation in the absence of a desirable effect (effect zero identical effect function), which logically has no practical interest.

For the first kind Fredholm integral equation, if a disturbance is applied to the solution function φ(x) of the integral equation, dependent on a constant amplitude ε and an increasing string of values p = 1, 2… such that:

Then the corresponding disturbance in the effect function is evaluated:

According to the Riemann-Lebesgue lemma [3], the perturbation of the effect tends to zero Δg → 0, as p → ∞, so that a high value of the ||Δφ||/||Δg|| is reached with the increase of the problem domain size.

On the other hand, the idea of the disturbance in the causal function reflects the uniqueness of the solution and its instability even when the disturbance of the effect is almost unnoticeable. This fact shows the character of the mathematical model of ill-posed (incorrectly formulated) inverse problem of the first kind Fredholm integral equation.

In order to solve the Fredholm equation, in relation with the core expression, the following methods are possible [4]:

-

(1)

Separation of the core variables, \(K(x,y) = \sum {\alpha_{k} (x) \cdot \beta_{k} (y)}\) and expressing the solution function as a development after functions of the same variable \(\varphi (x) = \sum {A_{k} \cdot \alpha_{k} (x)}\), where the coefficients Ak imply the solution of a linear algebraic system of equations.

-

(2)

The serial expansion of the cause function, after a set of independent linear functions, which forms an orthogonal base—a method called collocation; the unknowns are the weighting coefficient of these basic functions in the series development and are deduced by solving an algebraic system of linear equations.

-

(3)

The discretization of the independent variables (xi, yi) so that an algebraic system of linear equations is formed, whose solution directly gives a string of values for the cause function. In order to obtain as accurately as possible, the unknown values, here is also important the method of approximation of the integral (by simple summation, with the trapezoid rule, Simpson, of Gaussian quadrature), as well as the method of interpolation of the solution.

-

(4)

Successive approximation of the solution, after the iterative relation:

$$\varphi_{n} \left( y \right) = g\left( y \right) + \lambda \cdot \int\limits_{a}^{b} {K\left( {x,y} \right) \cdot \varphi_{n - 1} \left( x \right)dx} ;\,\,\,\,\,n = 1,2, \ldots ;\,\,\,\,\varphi_{0} (x) = g(x)$$(6)

For the latter method to be convergent, the core of the integral equation and the causal function must be linear.

As it was indicated, for the first three methods listed, obtaining the solution involves solving an algebraic system of linear equations. For a square system of equations deduced from the second kind of equation, from the point of view of conditioning the solution, in the specialized bibliography no disadvantage is reported. Regarding the uniqueness of the solution, this goal can be fulfilled only if the own values of the integral equation of the second kind do not remove the determinant of the matrix operator.

For consistent details on the Fredholm integral equations, other studied works are recommended [4,5,6,7,8].

3 Key Performance Indicators in Inverse Problems Regularization

This paragraph introduces a set of indicators to characterize the level of instability of an incorrectly formulated inverse problem. The definition takes place in a wider frame than the strict one of the inverse field synthesis problems, with small examples of the author’s own contributions in the energy field.

It starts from the linear algebraic system as a matrix equation (\(A \cdot z = u\)), corresponding to the firs kind Fredholm integral equation. To the matrix of coefficients A, decomposition is applied according to singular values [9], or the decomposition according to the own values [10], corresponding to the relations:

The orthogonal matrices U, V are called single vectors, and the matrix P is called by passage and contains its own vectors. The matrices Σ and Ψ are diagonal and contain the singular values, respectively those corresponding to the coefficient matrix.

In the previous paragraph it was shown that the incorrect formulation of the inverse problems integrally modelled with Fredholm equations of first kind, appears phenomenologically due to the Green core function.

In the numerical model, this represents even the coefficient matrix. Based on such a hypothesis, it is considered that before the beginning of the solution it may be useful to characterize the level of instability of the solution.

3.1 Own Observations on the Spectrum of Singular Values

The following observations are made:

-

(1)

The multiplication of the cores with specific constants, properly amplifies the ranges of variation of the singular and own values.

-

(2)

The singular values of a matrix, as a Green core from a synthesis problem, are positive and spread over an extended range.

-

(3)

The increase of the discretization level implies a marked widening of the level on which the singular values spread.

-

(4)

The own values of a matrix, as a Green core from a synthesis problem, may coincide with the singular values of the same matrix, may be multiple, or differ substantially from them, taking even complex values.

Without drawing any further conclusions on these observations, it is recalled that solving the system (6) by decomposition according to the singular values, acts as an amplification of summed vectors, as the singular values extend on a wider level, namely:

where Ui, Vi, represents the singular vectors extracted from the orthogonal matrices, u the right member of the system of equations, as an effect vector, and σi the individual singular values obtained by decomposition.

The direct solution by the relation (7) leads to physically inadmissible solutions and a high level of instability to the disturbances that may occur [3, 9, 11].

At this point, it can be specified that a wide spread of the single values represents a sufficient indicator to characterize the level of physical instability and inconsistency of the solution of an inverse problem modelled by (6). However, this does not appear to be a novelty aspect, but it must be remembered that it works regardless of the results obtained for decomposition according to its own values.

3.2 Characterization of Stability as Disturbances with the Spectrum of Singular Values

Further, an application from the field of electricity is analysed, characterizing the natural stability of a power grid, at small perturbations that may occur due to the coupling/decoupling of some consumers [12]. The mathematical model corresponding to this case consists of a system of ordinary differential equations.

The matrix of coefficients specific to the system is subject to decomposition after it own values, and then after the singular values.

As has been observed so far, no particular emphasis is placed on numerical methods for evaluating the own values (based on factorizations, Leverrier-Fadeev, Krylov, Rayleigh, Jacobi, Givens), starting from the characteristic equation, nor on the determination of the singular values.

The existing programs used in this paper, contain algorithms with enough performance to not go into specific details. Figure 1 shows the configuration of the initially studied electrical grid, in which all the parameters of the system elements and the characteristics of the concrete operating regime are specified. To solve the system, it is possible to apply the decomposition method according to the singular values.

After the appropriate evaluations, the coefficient matrix looks like this:

The own values and the singular ones evaluated for this matrix are expressed in Table 1, together with their physical significance for the electrical system. For the studied application, the fact that there is an own value with the real side as positive, indicates an instability of the system. In addition, the imaginary part of the own values gives information about the natural frequencies of the oscillation system. What intervenes further, is as shown in Table 1, the correlation with the single values, whose range appears to be sufficiently wide.

A series of other coefficients matrices deduced from the configurations of electrical systems were afterwards tested on the same chain. In this way, without claiming a generality, the following were concluded: each time own values appear with the real side as positive, the singular values have a wide range of variation; when all the own values have the real side as negative (stability of the electrical system), the singular values fit in a restricted range. The correlation started from the Green core coefficient matrices.

The validity of characterizing the range of instability, by spreading the singular values, in the energy field can be assumed without demonstration for this class of problems, as an alternative to the indications offered by the own values.

Based on these mentions, the proposal to evaluate the spread of singular values as an indicator of stability on incorrectly formulated problems, or poorly conditioned is shown to be justified.

3.3 Picard’s Condition as Performance Indicator

Decomposition of the coefficient matrix after singular values is an efficient method of studying the character of IPIEP [13, 14]. Specialty bibliography proposes as an indicator for the solution’s degree of instability Picard’s condition. For the discrete model from (7) this condition consists in graphical representation the variance of singular values as well as the variance of the scalar product U Ti ·u repeated for i = 0…N.

If on the graph the curve of the product of the singular vectors with the effect vector, does not decrease in mean by at least as fast as the singular values, the problem is considered incorrectly formulated, with an unstable solution. The explanation stems from (7), in which between the two variances exists a discrepancy namely, the denominator becomes of ever lower values, then the single vectors Vi are amplified in the sum, thus irremediably distorting the solution.

For the same core expressions in Table 1, the graphs of the Picard indicator type are presented, in the situation of defining unit value effect functions (Fig. 2).

The Picard indicator clearly shows in all four test cases that the solution is unstable. As an addition to the current bibliography, it is proposed to evaluate the Picard condition indicator and the regularization model, and after solving it for the inverse problem studied. Thus, the performance of the degree of regularization applied can be evaluated.

3.4 Conditioning Number as a Performance Indicator

A third performance indicator in solving IEP with integral model is, as mentioned in the previous chapter, the conditioning number. Extensive treatments of this can be found in [9, 10, 14, 15].

The definition of the conditioning number based on the ratio between the coefficient matrix norms, respectively its inverse. These norms represent in fact the maximum singular value, respectively the minimum value of the matrix decomposition:

In expression (9) the spectral norm was defined as this is the most commonly used. As can be seen, in relation to the results obtained with the previous indicators, for a matrix that causes instability, the value of the conditioning number is high. However, to clearly establish a link between the instability of the solution and the conditioning number indicator, Eqs. (9) and (10) of the link between the maximum percentage variation of the solution are used, in relation to the disturbances that may occur in the right side, or in the core matrix structure. The deduction of these relationships is elegantly described in [9].

The disturbance acts on the effect vector:

where u′—the disturbed effect vector; z′—solution resulting from the effect of the disturbance.

It is obvious that a high value of the conditioning number allows a small error in the effect vector to lead to exaggerated variations of the solution. The issue is due exclusively to the core matrix of the coefficients. There is an expression on the instability of the solution, highlighted by the conditioning number: “A flapping of a butterfly in New York, causes a typhoon in Tokyo.”

The disturbances that affect the effect vector can be physical—measurement errors, or numerical—errors of rounding, truncation. Given that the relative error of the effect vector is within a percentage range of (1–5)%, if the conditioning number as an amplification factor has a value over 102 means that the initial solution may be affected by a percentage instability up to 500%.

The disturbance acts on the coefficient matrix (on the problem structure):

with P—disturbance matrix, which modifies the initial structure of the coefficient matrix.

The lower the disturbance norm than the coefficient matrix norm, and their ratio is closer to the value of the conditioning number, the weaker the immunity to disturbances of the solution. It is considered that in this case, the disturbance on the matrix structure influences the immunity of the solution to a greater degree.

The disturbances that appear in the matrix structure can be physical in nature—modification of the environmental characteristics, of the boundaries of the problem domain, or of numerical nature—rounding, truncation.

In this context, in which the conditioning number enters in direct correlation with the disturbances that may occur in the equation system (7), the author considers this instrument as an indicator. Moreover, what has not been found in the bibliography, based on the imposition of a maximum limit of the disturbance that may occur, together with an imposition of the admissible limit of the solution modification, can be estimated a value of the conditioning number, from which the problem is susceptible to unstable solutions. Inequalities (10) and (11) respectively are used, with interpretations:

when the disturbance affects the effect vector, the maximum disturbance limits are imposed εu and maximum allowable solution variation εz, the value of the conditioning number from which the system is considered to be unstable is given by the report (12).

If for the disturbances affecting the sources, the effect vector, the interpretation is immediate, in the case of those that intervene in the matrix structure, the interpretation on a minimum conditioning number, from which the instability of the solution can be considered does not appear to be relevant.

In the case of the relationship (11) it is considered appropriate to establish an admissible classification of the norm disturbance ||P||2. It is necessary to consider two cases, which verify the inequality relation (11):

Case I: (1-KA·||P||2/||A||2) > 0 implies a limitation of the perturbation norm in relation to the minimum singular value, by making the following simplifications:

that is, an upper limitation of the disturbance below the minimum singular value of the coefficient matrix. The closer the disturbance norm is to the minimum singular value, the more likely the system is to be affected by the disturbance.

Case II: (1-KA·||P||2/||A||2) ≤ 0, which would involve analogously ||P||2≥σmin, it is not possible in the inequality (11), because the relative error (percentage) cannot take negative values, being calculated on the basis of spectral norms. This observation has logical veracity, because a marked increase of the disturbance above the minimum singular value would mean a reduction of the effect of the disturbance on the system.

Basically, in this paper is admitted σmin as the maximum threshold up to which the perturbation norm for the matrix can affect the stability of the solution, in relation to the value of the conditioning number. Above this threshold, we consider that the impact on the matrix is no longer considered to have a disturbance effect, but only with a structural modification of the coefficient matrix (see the negative part of the graph in Fig. 1).

On the other hand, also from the relation (11) we indicate the minimum threshold value below which, if the perturbation norm decreases, the inequality is no longer valid (becomes irrelevant):

The tests performed on both well-conditioned matrices and on the Green core matrix confirm this classification of the perturbation norm. For the situation where the disturbances affect the effect vector, according to the inequality (12), the conditioning number as a performance indicator on the inverse problem, appears as a factor of maximum amplification of the error of the effect size.

For the situation where the disturbance affects the matrix of the coefficients, according to the inequality (13) and the classification of the disturbance in the interval (14), it is possible to follow a maximum amplification of the solution, in close correlation with the value of its norm of P.

Regarding the concept of conditioning number, the preconditioning methods have been outlined, that is to improve the stability of the solutions, through the physical [16,17,18] or numerical reformulation of the problem.

4 Conditioning and Regularization in Power Flow Case Study

Starting from the correlation between the properties of a numerical method, with the value of the conditioning number associated with a matrix in the system, in the field of electricity, the author of this paper has identified a method of improving the performance of solving nonlinear systems, when these by linearization become weakly conditioned.

Therefore, a configuration of the electric power system is introduced, in Fig. 3, having the active and reactive powers, generated and consumed, defined in nodes.

The voltage and current in node (4), are considered as balancing. Table 2 contains the sizes determined in relative units.

The unknowns to be identified are: the active and reactive power injected into the balancing node; the voltages (amplitude and phase) in the other nodes, the power flow in the network and the power losses in the system.

The corresponding mathematical model consists of a system with complex coefficients, reduced to 6 nonlinear equations, deduced from the expression of power balances in nodes. The maximum error of ε = 10−4 u.r, and the initialization of the solution is done with “flat” values [12].

As a method of calculation in the cited reference, it is also proposed to use the classical Newton method, then decoupled, respectively fast decoupled. Basically, a linear system of corrections in the iterative method is repeatedly solved, with Jacobian expressed as a block of 4 submatrices. If in classical Newton, the convergence of the numerical process is high—this is accompanied by a significant computation effort; which is why the decoupled variants give up the matrices on the secondary diagonal, these being of an order of magnitude smaller than those on the main diagonal. We show the expression of the system of corrections equations (15), respectively of the iterative relation (16), after which the solutions of the nonlinear model are evaluated:

The indices p, q, u, δ constitute abbreviations coming from the active, reactive power, the amplitude of the voltage and the voltage phase of each node respectively, and their combinations express the dependencies of power-voltage type, specific to the variations that appear in the power systems. What has been particularly noticeable in relation to the reported interpretations, is that the two matrices on the secondary diagonal have high-value conditioning numbers:

The solution variant addressed in [19], instead of giving up on the poorly conditioned submatrices, applies a local regularization to each iteration. Thus, as a contribution to the characterization of stability with the conditioning number indicator, as well as to obtaining precise and faster convergent solutions than the decoupled Newton methods, we propose the partitioned regularization at each iteration of the nonlinear system.

The regularization method was chosen to be the decomposition by the single truncated values, with the secondary diagonal matrices called abbreviated, SVD:

The results obtained using the partitioned regularization, confirm the relevance of the conditioning number as an indicator of instability, as well as the validity of the regularization method.

Table 3 shows the iterations and solutions when applying the simplified, partitioned regularized Newton method, with the Jacobian calculation only at the first iteration.

As shown in the result table, for the last case, partition regularization, the number of iterations is lower than when applying the decoupled Newton method, so a faster convergence of the solution is obtained. Compared to the classical Newton method, even if more iterations are performed through the partitioned regularization, the computation effort per iteration is significantly lower, as a whole, the method proposed in this paper presents higher performance than the classical one.

By the truncated decomposition after the singular values of the matrices on the secondary diagonal, the values of the conditioning numbers expressed by (19) are significantly reduced.

The method may be of interest in the analysis of larger power systems, where the computational effort and the stability of the solution can be an expensive issue.

In this framework, we propose firstly the use of the conditioning number, as an indicator of the degree of instability of the approximation solution from the forecast, and then the involvement of effective adjustment techniques. The research is open in this area.

5 Intermediate Methods

5.1 Normal Pseudo Solution

In the monograph [9] it is shown that for a linear algebraic system of equations, the minimum error solution, also called normal pseudo solution is obtained by orthogonalizing the matrix equation, a process analogous to the minimization of a function of quadratic mean deviation:

Regardless of the subsequent numerical methods of solving, applied to this system, especially in the case of large arrays, there are rounding errors due to the multiplication of the two matrices.

Another disadvantage is related to the fact that the new matrix AT·A, has a conditioning number K(AT·A) = K2(A), aspect deduced from the decomposition by the singular values. The normal pseudo solution is thus to a greater degree likely to be unstable in the disturbances.

It is highlighted by the relationships:

It is known that the conditioning number of an orthogonal matrix has the unit value [10].

If the core matrix is positively defined symmetric, then the squares of the singular values are the eigenvalues of the normal matrix. This observation suggests that the eigenvalues of the normal matrix must always be positive and real.

5.2 Method of Collocation

The collocation method, as a particular variant of the weighted residue method [6], implies the replacement of the unknown causal vector by a sum of vectors. f<k>, which form an orthogonal basis. In the analytical variant of the problem, the unknown function is approximated by a sum of basic, linearly independent functions [15]. Equations (21) and (22) show the principle of the method:

where ANxN is the coefficient matrix; F ϵ

Nxn matrix formed from the considered base vectors; [c] ϵ

Nxn matrix formed from the considered base vectors; [c] ϵ

Nxn the matrix of the weighting coefficients, become the unknown of the new system (20a).

Nxn the matrix of the weighting coefficients, become the unknown of the new system (20a).

Thus, the problem is reduced to determining a set of coefficients. It is of interest in the numerical evaluation of the method, the conditioning of the new matrix of the system A·F, that is, whether the collocation method can be considered as a preconditioning role.

5.3 Tikhonov Regularization Methods

The matrix expression of Tikhonov regularization was already introduced and the mathematical deduction can be achieved starting from the full analytic model, included in Tikhonov functional [9, 10, 20] or from matrix system with requirements of minimal error applied, respectively to solution stabilization.

Depending on the considered order of regularization, the two formulations are equivalent although in the paper [10], first version is named Tikhonov regularization and the second version is named linear degenerated system regularization.

We will concentrate on the second version of the formulation with focus on the followed steps in order to obtain the solution. So, in general case, where the system is undetermined, the orthogonalization method is being applied, in order to arrive at the normal pseudo-solution, which is the first step in regularization.

Further, stabilization matrix L ϵ

NxN is added (identity matrix, bidiagonal and tridiagonal matrix, the last two corresponding to a discretization of first and second order derivatives), weighted by α parameter, so the solution should be stable to perturbations and also reliable.

NxN is added (identity matrix, bidiagonal and tridiagonal matrix, the last two corresponding to a discretization of first and second order derivatives), weighted by α parameter, so the solution should be stable to perturbations and also reliable.

By an inverse matrix procedure M = (AT·A + α·L)−1 the expression becomes:

which, afterwards, may be transformed in a recurrent formula (24) or developed in series, by partial overlapping of formula.

The successive approximation formula is repeated until the difference between two consecutive approximations is smaller than an imposed threshold, and the approximation error is also below a threshold.

The order until the development goes well must take into account the computational effort required to raise the order of matrices, but also the fact that from a certain order, if the value of the regularization parameter is close to zero, the development terms can be neglected.

In all the expressions in which the matrix appears explicitly M, for large matrices it is not recommended to calculate the inverse. We call the relation (24) of iterative Tikhonov regularization, and the approximation relation of the solution \(\overline{z}\), (25) of the Tikhonov regularization through series development. Keeping only the first term in the development is equivalent to the classic Tikhonov regularization method.

Another version of Tikhonov successive approximation is expressed by the relations, with the final conditions mentioned in algorithm, but for the Eq. (24):

There are approaches according to which regularization should be treated iteratively as predictor–corrector [21].

On the conditioning through the Tikhonov regularization, the effect of the regularization parameter is emphasized, for the zero-order regularization:

Therefore, if the regularization parameter is greater than the minimum singular value, then the conditioning improves. Moreover, it can be considered as the minimum threshold for the regularization parameter, even the minimum singular value of the coefficient matrix A.

Regarding the numerical methods of effectively solving the regularized expressions, they can be any of the ones presented in the first section.

Finally, an original parallel approach is presented between the expression of Tikhonov regularization (26) and the discretized expression of the Fredholm integral equation of the second kind (27b), in the hypothesis of using the same number of discretization points for both variables Nx = Ny:

From the physical and mathematical point of view, the Fredholm equation of the second kind is considered to be a correctly formulated and well-conditioned problem, if the eigen value λ do not override the determinant of the operating matrix of the system (28) [5, 20, 22].

For the particular case where in the relation (24) is allowed as regulation parameter α = 1 and a stabilization matrix L = I and in (25) an own unit value λ = 1, then the discrete model of the Fredholm equation of the second kind can be considered as a complete limit of regularization, in the sense of implicitly obtaining a stable and achievable solution.

Basically, adding a unit value on the main diagonal of the core matrix A, improves the single values spectrum and thus significantly reduces the value of the conditioning number.

However, we avoid indicating a unit value of the adjustment parameter. Even if the parallel made converges towards a common denominator, the two formulations—Fredholm of the second kind, respectively the Tikhonov regularization—are different. The Fredholm II model appears only when the field problem specifies the sources, and the potentials imposed (or measured) are part of the same portion of the domain, as the causal potentials, unknown.

6 Special Methods of Regularization

6.1 Mixed Tikhonov—TSVD

We continue to present a mixed version of regularization, of type Tikhonov—TSVD. This implies that in the penalty function, the core matrix appears truncated. In this case, the filtering factor included in the diagonal matrix of the singular values, is defined complementarily, by the group of relations (30a–30c):

Thus, the truncated core matrix A(k) will be evaluated by TSVD decomposition:

Finally, Tikhonov’s expression is shown in Eq. (32), equivalent to (28):

Based on the arguments presented for the two types of methods, of penalty, respectively of projection, it is considered that this method guarantees a limitation of the excessive amplification of the solution, as well as a framing in the constraint imposed by the stabilization matrix operator L.

6.2 SVD Preconditioning

If from the TSVDM method the truncated matrix of the single vectors to the right is retained, V(k) ϵ

Nxk, we find that its A conjugate application, (VT·A·V), leads to a reconditioning of the matrix Eq. (1).

Nxk, we find that its A conjugate application, (VT·A·V), leads to a reconditioning of the matrix Eq. (1).

The procedure is illustrated in the following sequence of relations, related to Eq. (9):

In this form, the equivalent system in (33) is presented as undetermined, which returns us to the initial problem.

If, instead, the matrix of the coefficient’s A ϵ

NxN, is replaced by its decomposition by the singular values, and the decomposition matrices are orthonormal between them, the following equivalent formulation of the system is obtained, taking into account the orthogonality property of the single matrix to the left and to the right:

NxN, is replaced by its decomposition by the singular values, and the decomposition matrices are orthonormal between them, the following equivalent formulation of the system is obtained, taking into account the orthogonality property of the single matrix to the left and to the right:

which under the writing of the solution z, becomes:

Therefore, a truncated expression of the solution has been reached which depends on: the effect vector, the matrix of the singular values on the right and the first k singular values; and a controlled amplification of the effect.

We also observe the preconditioning/truncation property of the matrix M, which is actually the truncated diagonal matrix of the singular values. The lower the order k of retention of single vectors cond(M) = σ1/σk is, the lower the conditional number.

6.3 Conjugate Gradient Regularization Method

Returning to the conjugate gradient method, according to the assessments and demonstration in [23], it is indicated that it is possible to apply the Tikhonov regularization, or DVS. Moreover, we admit that conjugate gradient appears as an alternative to numerically solving a regularized system of equations.

6.4 Algebraic Reconstruction Technique (ART)

We propose a last numerical version, regarding its application to the incorrectly formulated equation systems. In the specialized literature it is called the algebraic reconstruction technique (ART) [24], or Kaczmarz’s method and this consists in the sequential iterative solution—for each equation—of the system (1).

In the mentioned work, it is shown that the process of obtaining the solution gains exponential convergence, if the equation of the system is randomly performed, after a sequence of generation proportional to the norms of the lines ai in the core matrix of the coefficients A.

The algorithm of the method is exposed, in its own version:

7 Regularization as a Harmonic Reconstruction of Signals

In the case of each synthetic application detailed in this chapter, graphical representations for the single vectors on the left have been drawn. According to the relation (35), the solution of the inverse problem is given by the sum of these vectors, weighted by a set of coefficients that depend on the singular values.

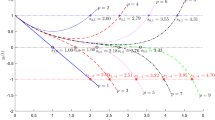

In most cases, the analysis of these graphical representations reveals an oscillatory variation (around the Ox axis) of the single vectors. For some cores, these oscillations are close to sinusoidal functions of different pulses. Figure 4 demonstrates a superposition of the single vectors on the left, starting from the first in matrix V, up to the order of 10.

The results of limited experimental character avoid the assertion of a general sinusoidal oscillation property, for the single vectors of any matrix from an inverse problem even ill conditioned. The spectrum of oscillatory vectors is very atypical for each case of core matrix.

Given the conditions, the following common observations are issued to the problems studied:

-

each singular vector has an oscillation around the Ox axis; the number of intersections the numerical function of the vector with the axis is equal to the order number of the vector in the matrix of the singular values to the left; this finding is generally valid for about 20% of the vectors constituting the matrix;

-

the average value in the module, of the sum of the elements of each singular vector, decreases with the increase of the order of the vectors in the matrix; this aspect is illustrated in Fig. 5.

By an analogy with the harmonic analysis of a no sinusoidal signal, we estimate that each numerical function—a singular vector, can be assimilated to a harmonic that enters into the composition of the solution. To argue this statement, we repeat the expression (35):

From this point of view, the solution of an inverse problem can be characterized by an overlapping of effects, harmonics, each amplified by the corresponding singular value. If we admit a hierarchy of the importance of the single vectors, in the appropriate reconstruction of the solution, according to the average value indicator in the way of the sum of the elements of the single vectors, then according to Fig. 5, as the order of the “harmonics” (single vectors) increases, they must decrease their position when reconstructing the solution.

The assertion is supported by the several numerical simulations obtained both in this chapter and in the specific literature, by the TSVD methods.

We consider this approach as being an original one, by analogy with the superposition of effects to non-sinusoidal signals, only a starting point in the development of a new method of regularization.

8 Conclusions

The authors present a special category of engineering problems called inverse in their sense that the effect is known and what is to be found is the cause. Moreover, these problems are niched to the situation when the inverse issue is accompanied by a so called ill-posed definition. This is directly reflected in the stability and numerical precision of the solutions, as a small input perturbation in the effect or in the inverse problem definition it will cause a large variation of the initial result. Thus, starting from these problem definitions in the following sections of the chapter a physical and analytical description of the ill-posed inverse problems is provided, using Fredholm integral equations. Then, several procedures of numerical regularization solving methods are presented which can both apply on the Fredholm discrete model and on direct numerical systems of equations.

Beyond the availability of these numerical regularization procedures for ill-posed problems, the authors offer a step by step example implementation on a power flow case study, which can serve as a reference case.

The comparison between these numerical methods reflect both their advantages and shortcomings that can serve as a guide on what kind of numerical instrument to choose when dealing with different electrical engineering applications.

Abbreviations

- ART:

-

Algebraic reconstruction technique

- IEP:

-

Inverse electromagnetic problem

- IPIEP:

-

Ill posed inverse electromagnetic problem

- HVPL:

-

High voltage power line

- SVD:

-

Singular value decomposition

- TSVD:

-

Truncated singular value decomposition

References

Sykulski Jan K (ed) (1995) Pawluk Krystyn, Computational magnetics (field synthesis). Chapman & Hall, London

Tikhonov AN, Glasko VB (1965) Use of the regularization method in non-linear problems. USSR Comput Math Math Phys 3:93–107

Hansen PC (2008) Regularization tools, a matlab package for analysis and solution of discrete ill posed problems, Denmark. www.imm.dtu.dk/~pch

Bronshtein IN et al (2004) Handbook of mathematics. Springer, New-York

Lavrentiev M et al (1986) Ill-posed problems of mathematical physics and analysis. American Mathematical Society

Sadiku Matthew NO (2000) Numerical techniques in electromagnetics, 2nd edn. CRC Press, New York

Palka R (1985) Synthesis of electrical fields by optimization of the shape of region boundaries. IEE Proc 132, Pt. A(1):28–32

Morozov VA (1966) Regularization of incorrectly posed problems and the choice of regularization parameter. USSR Comput Math Math Phys 1:242–251

Micu A, Micu Dan O (2002) Electromagnetic field synthesis. Ed. Dacia, Cluj-Napoca

Micu Dan O (1993) Contributions to the synthesis of the stationary electric and magnetic field. PhD thesis, Cluj-Napoca

Michael J (2004) Modular regularization algorithms. PhD thesis, Denmark

Ştefan K (2004) Metode numerice, Algoritme, Programe de calcul, Aplicaţii în Energetică, Ediţia a 3-a, Ed. Orizonturi Universitare, Timişoara

Hansen PC (1992) Analysis of discrete Ill-posed problems by means of the L-curve. SIAM Rev 34(4):561–580

Hansen PC (1992) Numerical tools for analysis and solution of Fredholm integral equations of the first kind. Inverse Probl 8:849–871

James Epperson (2002) An Introduction on Numerical Methods and Analysis. Wiley, New York

Chadebec O et al (2002) Recent improvements for solving inverse magnetostatic problem applied to thin shells. IEEE Trans Magn 38(3):1005–1009

Chadebec O et al (2003) How to well pose a magnetization identification problem. IEEE Trans Magn 39(3):1634–1637

Chadebec O et al (2004) Magnetization identification problem: illustration of an effective approach. COMPEL 23(2):518–530

Bărbulescu C, Ceclan A, Kilyeni Ş, Micu DD (2007) Power flow calculation for ill-conditioned systems. Simplified Newton method with SVD partial regularization, EUROCON, Varsovia

Tikhonov Andrev N (1981) Solution of incorrectly formulated problems. Mir, Wiley, New-York

Lamm Patricia K, Scofield Thomas L (2000) Sequential predictor-corrector methods for the variable regularization of Volterra inverse problems. Inverse Probl 16:373–399

Lavrentiev M (1967) Some improperly posed problems of mathematical physics. Springer, New-York

Gottvald A (1997) A survey of inverse methodologies, meta-evolutionary optimization and Bayesian statistics: applications to in vivo MRS. Int J Appl Electromagnet Mech 1:17–44

Strohmer T, Vershynin R (2006) A randomized solver for linear systems with exponential convergence. Random-Approx 4110:499–507

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ceclan, A. et al. (2021). Ill-Posed Inverse Problems in Electrical Engineering Applications. In: Mahdavi Tabatabaei, N., Bizon, N. (eds) Numerical Methods for Energy Applications. Power Systems. Springer, Cham. https://doi.org/10.1007/978-3-030-62191-9_9

Download citation

DOI: https://doi.org/10.1007/978-3-030-62191-9_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-62190-2

Online ISBN: 978-3-030-62191-9

eBook Packages: EnergyEnergy (R0)