Abstract

We prove a blow-up result for Sobolev solutions to the Cauchy problem for semi-linear structurally damped σ-evolution equations, where σ ≥ 1 and δ ∈ [0, σ) are allowed to be arbitrary fractional numbers. To deal with the fractional Laplacians \((-\Delta)^{\sigma}\) and \((-\Delta)^{\delta}\), which are well-known non-local operators, we apply a modified test function method to prove blow-up in the subcritical case as well as in the critical case.


1 Introduction

The main goal of this paper is to discuss the critical exponent for the following Cauchy problem for semi-linear structurally damped σ-evolution models:

$$\displaystyle \begin{aligned} u_{tt}+ (-\Delta)^\sigma u+ (-\Delta)^\delta u_t= |u|^p, \quad u(0,x)= u_0(x), \quad u_t(0,x)= u_1(x), \end{aligned} \tag{1}$$

with some σ ≥ 1, δ ∈ [0, σ) and a given real number p > 1. Here, the critical exponent \(p_{crit}= p_{crit}(n)\) means that for some range of admissible \(p > p_{crit}\) there exists a global (in time) Sobolev solution for small initial data from a suitable function space. Moreover, one can find suitable small data such that there exists no global (in time) Sobolev solution if \(1 < p \le p_{crit}\). In other words, we have, in general, only local (in time) Sobolev solutions under this assumption for the exponent p.

For the local existence of Sobolev solutions to (1), we refer the interested reader to Proposition 9.1 in the paper [2]. The proof of the blow-up results in the present paper is based on a contradiction argument using the test function method. The test function method is not influenced by higher regularity of the data. For this reason, we restrict ourselves to the critical exponent for (1) in the case where the data are supposed to belong to the energy space. In this paper, we use the following notations.

- For given nonnegative f and g we write \(f\lesssim g\) if there exists a constant C > 0 such that f ≤ Cg. We write f ≈ g if \(g\lesssim f\lesssim g\).

- We denote by \(\widehat {v}=\widehat {v}(\xi ):= \mathfrak {F}_{x\rightarrow \xi }\big (v(x)\big )\) the Fourier transform with respect to the spatial variables of a function v = v(x).

- As usual, \(H^a\) with a ≥ 0 stands for the Bessel potential spaces based on \(L^2\).

- We denote by [b] the integer part of \(b \in \mathbb {R}\). We put \(\big < x\big >:= \sqrt {1+|x|{ }^2}\).

- Moreover, we introduce the following two parameters:

$$\displaystyle \begin{aligned}\mathtt{k}^-:= \min\{\sigma;2\delta\}\quad \text{ and }\quad \mathtt{k}^+:= \max\{\sigma;2\delta\} \quad \text{ if }\delta \in [0,\sigma). \end{aligned}$$
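As a quick illustration of these two parameters, the following sketch evaluates them for three sample pairs (σ, δ). The sample values are chosen here only for illustration and do not come from the paper; they lie below, at, and above the threshold δ = σ/2.

```latex
\begin{aligned}
\sigma = 1,\ \delta = \tfrac14 &: \quad \mathtt{k}^- = \min\{1;\tfrac12\} = \tfrac12, & \mathtt{k}^+ &= \max\{1;\tfrac12\} = 1, \\
\sigma = 1,\ \delta = \tfrac12 &: \quad \mathtt{k}^- = \min\{1;1\} = 1, & \mathtt{k}^+ &= \max\{1;1\} = 1, \\
\sigma = 2,\ \delta = \tfrac32 &: \quad \mathtt{k}^- = \min\{2;3\} = 2, & \mathtt{k}^+ &= \max\{2;3\} = 3.
\end{aligned}
```

In particular, \(\mathtt{k}^- = 2\delta\) exactly when \(\delta \le \frac{\sigma}{2}\), and \(\mathtt{k}^- = \sigma\) otherwise.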

In order to state our main result, we recall the global (in time) existence result of small data energy solutions to (1) in the following theorem.

Theorem 1 (Global Existence)

Let m ∈ [1, 2) and \(n > m_0 \mathtt{k}^-\) with \(\frac {1}{m_0}=\frac {1}{m}- \frac {1}{2}\). We assume the conditions

Moreover, we suppose the following condition:

Then, there exists a constant ε 0 > 0 such that for any small data

satisfying the assumption \(\|u_0\|{ }_{L^m \cap H^{\mathtt {k}^+}}+ \|u_1\|{ }_{L^m \cap L^2} \le \varepsilon _0,\) we have a uniquely determined global (in time) small data energy solution

to (1). Moreover, the following estimates hold:

We are going to prove the following main result.

Theorem 2 (Blow-Up)

Let σ ≥ 1, δ ∈ [0, σ) and \(n > \mathtt{k}^-\). We assume that we choose the initial data \(u_0 = 0\) and \(u_1 \in L^1\) satisfying the following relation:

where \(\epsilon_0\) is a suitable nonnegative constant. Moreover, we suppose the condition

Then, there is no global (in time) Sobolev solution \(u \in \mathcal {C}\big ([0,\infty ),L^2\big )\) to (1).

Remark 1

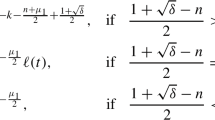

We want to underline that the lifespan \(T_\varepsilon\) of Sobolev solutions to given data \((0, \varepsilon u_1)\) for any small positive constant ε in the subcritical case of Theorem 2 can be estimated as follows:

Nevertheless, finding a sharp lower bound for the lifespan \(T_\varepsilon\), and hence verifying whether the upper bound obtained in (5) is optimal, remains open so far.

Remark 2

If we choose m = 1 in Theorem 1, then from Theorem 2 it is clear that the critical exponent \(p_{crit}= p_{crit}(n)\) is given by

However, in the case \(\delta \in (\frac {\sigma }{2},\sigma )\) there appears a gap between the exponents given by \(1+\frac {2\delta +\sigma }{n-\sigma }\) from Theorem 1 and \(1+\frac {2\sigma }{n-\sigma }\) from Theorem 2 for 2σ < n ≤ 8δ. Related to this gap, the authors in [3] recently succeeded in proving the global (in time) existence of small data energy solutions to (1), with σ > 1, in low space dimensions for any \(p>1+\frac {2\sigma }{n-\sigma }\) by using suitable \(L^{r_1}- L^{r_2}\) decay estimates, with \(1 \le r_1 \le r_2 \le \infty\), for solutions to the corresponding linear equation, after application of the stationary phase method. For this reason, at least in low space dimensions, we can claim that the critical exponent \(p_{crit}= p_{crit}(n)\) in the case \(\delta \in (\frac {\sigma }{2},\sigma )\) with σ > 1 is

2 Preliminaries

In this section, we collect some preliminary knowledge needed in our proofs.

Definition 1 ([8, 10])

Let s ∈ (0, 1). Let X be a suitable set of functions defined on \(\mathbb {R}^n\). Then, the fractional Laplacian \((-\Delta)^s\) in \(\mathbb {R}^n\) is a non-local operator given by

as long as the right-hand side exists, where p.v. stands for Cauchy’s principal value, \(C_{n,s}:= \frac {4^s \Gamma (\frac {n}{2}+s)}{\pi ^{\frac {n}{2}}\Gamma (-s)}\) is a normalization constant and Γ denotes the Gamma function.

Lemma 1

Let q > 0. Then, the following estimate holds for any multi-index α satisfying |α|≥ 1:

Proof

First, we recall the following formula of derivatives of composed functions for |α|≥ 1:

where h = h(z) and \(h^{(k)}(z)=\frac {d^k h(z)}{dz^k}\). Applying this formula with \(h(z)= z^{-\frac {q}{2}}\) and \(f(x) = 1 + |x|^2\) we obtain

where C 1 and C 2 are some suitable constants. This completes the proof. □
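To make the mechanism of this proof concrete, here is a sketch of the simplest case |α| = 1, using the same choices \(h(z)= z^{-\frac{q}{2}}\) and \(f(x)= 1+|x|^2\) as above. It assumes that Lemma 1 is the usual decay estimate for derivatives of \(\big< x\big>^{-q}\), which is how the lemma is used later in the paper; the index j = 1, ..., n is introduced here only for illustration.

```latex
\begin{aligned}
\partial_{x_j} \big< x\big>^{-q}
  &= h'\big(f(x)\big)\,\partial_{x_j} f(x)
   = -\frac{q}{2}\,\big(1+|x|^2\big)^{-\frac{q}{2}-1}\cdot 2x_j
   = -q\,x_j\,\big< x\big>^{-q-2}, \\
\big|\partial_{x_j} \big< x\big>^{-q}\big|
  &\le q\,|x|\,\big< x\big>^{-q-2}
   \le q\,\big< x\big>^{-q-1}
   \qquad \text{since } |x| \le \big< x\big>.
\end{aligned}
```

Each additional derivative gains one more power of decay in the same way, which is the pattern that the formula for derivatives of composed functions makes systematic.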

Lemma 2

Let \(m \in \mathbb {Z}\), s ∈ (0, 1) and γ := m + s. If \(v \in H^{2\gamma }(\mathbb {R}^n)\), then the following relation holds:

One can find the proof of Lemma 2 in Remark 3.2 in [1].

Lemma 3

Let \(m \in \mathbb {Z}\), s ∈ (0, 1) and γ := m + s. Let q > 0. Then, the following estimates hold for all \(x \in \mathbb {R}^n\):

Proof

We follow ideas from the proof of Lemma 1 in [7], which is devoted to the case m = 0 and \(s=\frac {1}{2}\); that is, the case \(\gamma = \frac {1}{2}\) is generalized to any fractional number γ > 0. To do this, for any s ∈ (0, 1) we divide the proof into two cases: m = 0 and m ≥ 1.

Let us consider the first case m = 0. Denoting \(\psi = \psi(x) := \big< x\big>^{-q}\), we write \((-\Delta)^s \big< x\big>^{-q} = (-\Delta)^s(\psi)(x)\). According to Definition 1 of the fractional Laplacian as a singular integral operator, we have

A standard change of variables leads to

To deal with the first integral, after using a second order Taylor expansion for ψ we arrive at

Thanks to the above estimate and s ∈ (0, 1), we may remove the principal value of the integral at the origin to conclude

To prove the desired estimates, we shall divide our considerations into two cases. In the first subcase {x : |x|≤ 1}, we can proceed as follows:

Due to the boundedness of the above two integrals, it follows immediately

In order to deal with the second subcase {x : |x|≥ 1}, we can re-write

For the first integral, we notice that the relations |x + y|≥|y|−|x|≥|x| and |x − y|≥|y|−|x|≥|x| hold for |y|≥ 2|x|. Since ψ is a decreasing function, we obtain the following estimate:

It is clear that |y|≈|x| in the second integral domain. Moreover, it follows

For this reason, we arrive at

where we used the relation

By the change of variables r = |x + y|, we apply the inequality \(1+r^2 \ge \frac {(1+r)^2}{2}\) to get

By |x|≈<x> for |x|≥ 1, combining (12) and (13) leads to

For the third integral in (8), using again the second order Taylor expansion for ψ we obtain

Here we used the relation \(|x\pm \theta y| \ge |x|- \theta |y|\ge |x|- \frac {1}{2}|x|= \frac {1}{2}|x|\). From (8), (9), (14), and (15) we arrive at the following estimates for |x|≥ 1:

Finally, combining (7) and (16) we may conclude all desired estimates for m = 0.
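For the reader's convenience, the two elementary inequalities used in the subcase |x| ≥ 1 can each be verified in one line. The following is only a sketch of these routine checks, with r ≥ 0 and |y| ≥ 2|x| as in the text.

```latex
\begin{aligned}
2(1+r^2) - (1+r)^2 &= 1 - 2r + r^2 = (1-r)^2 \ge 0
  \quad\Longrightarrow\quad 1+r^2 \ge \frac{(1+r)^2}{2}, \\
|x \pm y| &\ge |y| - |x| \ge 2|x| - |x| = |x| \qquad \text{for } |y| \ge 2|x|.
\end{aligned}
```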

Next let us turn to the second case m ≥ 1. First, a straight-forward calculation gives the following relation:

By an induction argument, carrying out m steps of (17) we obtain the following formula for any m ≥ 1:

Then, thanks to Lemma 2, we derive

For this reason, in order to conclude the desired estimates, it suffices to show the following estimates for k = 0, ⋯ , m:

Indeed, replacing q by q + 2(m + k) with k = 0, ⋯ , m and taking γ = s in (6) leads to

From these estimates, (20) follows immediately, which yields (6) for any m ≥ 1. Summarizing, the proof of Lemma 3 is completed. □

Lemma 4

Let s ∈ (0, 1). Let ψ be a smooth function satisfying \(\partial _x^2 \psi \in L^\infty \). For any R > 0, let \(\psi_R\) be a function defined by

for all \(x \in \mathbb {R}^n\). Then, \((-\Delta)^s(\psi_R)\) satisfies the following scaling properties for all \(x \in \mathbb {R}^n\):

Proof

Thanks to the assumption \(\partial _x^2 \psi \in L^\infty \), following the proof of Lemma 3 we may remove the principal value of the integral at the origin to conclude

This completes the proof. □
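The scaling behaviour behind Lemma 4 can also be seen on the Fourier side. The following sketch is not the argument of the paper, which works with the singular integral. It assumes, for simplicity, that ψ is a Schwartz function, that \(\psi_R(x) := \psi(R^{-1}x)\) (the same scaling as used for the test functions in Sect. 3), and that \((-\Delta)^s\) acts as the Fourier multiplier \(|\xi|^{2s}\).

```latex
\begin{aligned}
\widehat{\psi_R}(\xi) &= R^{n}\,\widehat{\psi}(R\xi), \\
\mathfrak{F}\big((-\Delta)^s \psi_R\big)(\xi)
  &= |\xi|^{2s} R^{n}\,\widehat{\psi}(R\xi)
   = R^{-2s}\, R^{n}\, |R\xi|^{2s}\,\widehat{\psi}(R\xi)
   = R^{-2s}\, R^{n}\, \mathfrak{F}\big((-\Delta)^s \psi\big)(R\xi), \\
(-\Delta)^s(\psi_R)(x) &= R^{-2s}\,\big((-\Delta)^s \psi\big)(R^{-1}x).
\end{aligned}
```

This is the fractional analogue of the relation \((-\Delta)^{\sigma}\varphi_R(x)= R^{-2\sigma}(-\Delta)^{\sigma}\varphi(\tilde{x})\) used for integer σ in Sect. 3.2.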

Lemma 5 (One Mapping Property in the Scale of Fractional Spaces \(\{H^s\}_{s \in \mathbb {R}}\))

Let \(\gamma ,\,s \in \mathbb {R}\). Then, the fractional Laplacian

maps the space \(H^s\) isomorphically onto \(H^{s-2\gamma}\).

This result can be found in Section 2.3.8 in [12].

Lemma 6

Let \(f = f(x) \in H^s\) and \(g = g(x) \in H^{-s}\) with \(s \in \mathbb {R}\). Then, the following estimate holds:

The proof of Lemma 6 can be found in Theorem 16 in [6].

Lemma 7

Let \(s \in \mathbb {R}\). Let \(v_1 = v_1(x) \in H^s\) and \(v_2 = v_2(x) \in H^{-s}\). Then, the following relation holds:

Proof

We present the proof from Theorem 16 in [6] to make the paper self-contained. Since the space \(\mathcal {S}\) is dense in \(H^s\) and \(H^{-s}\), there exist sequences \(\{v_{1,k}\}_k\) and \(\{v_{2,k}\}_k\) with \(v_{1,k}=v_{1,k}(x) \in \mathcal {S}\) and \(v_{2,k}=v_{2,k}(x) \in \mathcal {S}\) such that

On the one hand, for k →∞ we have the relations

On the other hand, by Parseval–Plancherel formula we arrive at

where \((\cdot ,\cdot )_{L^2}\) stands for the scalar product in L 2. Moreover, applying Lemma 6 we may estimate

This is equivalent to

In the same way we also derive

Summarizing from (21) to (23) we may conclude

Therefore, the proof of Lemma 7 is completed. □

3 Proof of Theorem 2

We divide the proof of Theorem 2 into several cases.

3.1 The Case that Both Parameters σ and δ Are Integers

Proof

The proof of this case can be found in the paper [2]. □

3.2 The Case that the Parameter σ Is Integer and the Parameter δ Is Fractional from (0, 1)

Proof

We first consider the subcritical case \(p<1+ \frac {2\sigma }{n- \mathtt {k}^-}\). We introduce the function \(\varphi = \varphi(|x|) := \big< x\big>^{-n-2\delta}\) and the function η = η(t) having the following properties:

where p′ is the conjugate of p > 1. Let R be a large parameter in [0, ∞). We define the following test function:

where \(\eta_R(t) := \eta(R^{-\alpha} t)\) and \(\varphi_R(x) := \varphi(R^{-1} x)\) with a fixed parameter \(\alpha := 2\sigma - \mathtt{k}^-\). We define the functionals

and

Let us assume that u = u(t, x) is a global (in time) Sobolev solution from \(\mathcal {C}\big ([0,\infty ),L^2\big )\) to (1). After multiplying the Eq. (1) by φ R = φ R(t, x), we carry out partial integration to derive

Applying Hölder’s inequality with \(\frac {1}{p}+\frac {1}{p'}=1\) we may estimate as follows:

By the change of variables \(\tilde {t}:= R^{-\alpha }t\) and \(\tilde {x}:= R^{-1}x\), a straight-forward calculation gives

Here we used \(\partial _t^2 \eta _R(t)= R^{-2\alpha }\eta ''(\tilde {t})\) and the assumption (24). Now let us turn to estimating \(J_2\) and \(J_3\). First, by using \(\varphi_R \in H^{2\sigma}\) and \(u \in \mathcal {C}\big ([0,\infty ),L^2\big )\) we apply Lemma 7 to conclude the following relations:

Hence, we obtain

Applying Hölder’s inequality again, as in the estimate for \(J_1\), leads to

In order to control the above two integrals, the key tools are Lemmas 1, 3 and 4. Namely, at first carrying out the change of variables \(\tilde {t}:= R^{-\alpha }t\) and \(\tilde {x}:= R^{-1}x\) we arrive at

where we note that, since σ is an integer, \((-\Delta )^{\sigma }\varphi _R(x)= R^{-2\sigma }(-\Delta )^{\sigma }\varphi (\tilde {x}).\) Using Lemma 1 we obtain the following estimate:

Next, carrying out again the change of variables \(\tilde {t}:= R^{-\alpha }t\) and \(\tilde {x}:= R^{-1}x\) and employing Lemma 4, we can estimate \(J_3\) as follows:

Here we used \(\partial _t \eta _R(t)= R^{-\alpha }\eta '(\tilde {t})\) and the assumption (24). To deal with the last integral, we apply Lemma 3 with q = n + 2δ and γ = δ, that is, m = 0 and s = δ to get

Because of the assumption (3), there exists a sufficiently large constant R 0 > 0 such that it holds

for all R > R 0. Combining the estimates from (25) to (29) we may arrive at

for all R > R 0. Moreover, applying the inequality

leads to

for all R > R 0. It is clear that the assumption (4) is equivalent to − 2σp′ + n + α ≤ 0. For this reason, in the subcritical case, that is, − 2σp′ + n + α < 0 letting R →∞ in (31) we obtain

This is a contradiction to the assumption (3).
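The equivalence between the restriction on p and the sign condition on the exponent of R can be checked by elementary algebra. The following sketch assumes that condition (4) reads \(p \le 1+\frac{2\sigma}{n-\mathtt{k}^-}\), as suggested by the subcritical and critical cases treated in this proof, and uses \(\alpha = 2\sigma - \mathtt{k}^-\), \(p' = \frac{p}{p-1}\) and \(n > \mathtt{k}^-\).

```latex
\begin{aligned}
-2\sigma p' + n + \alpha \le 0
 &\iff \frac{1}{p'} \le \frac{2\sigma}{n+\alpha} = \frac{2\sigma}{n + 2\sigma - \mathtt{k}^-} \\
 &\iff \frac{1}{p} = 1 - \frac{1}{p'} \ge \frac{n - \mathtt{k}^-}{n + 2\sigma - \mathtt{k}^-} \\
 &\iff p \le \frac{n + 2\sigma - \mathtt{k}^-}{n - \mathtt{k}^-} = 1 + \frac{2\sigma}{n - \mathtt{k}^-}.
\end{aligned}
```

Equality throughout corresponds to the critical case, where \(-2\sigma + \frac{n+\alpha}{p'} = 0\).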

Let us turn to the critical case \(p=1+ \frac {2\sigma }{n- \mathtt {k}^-}\). It follows immediately \(-2\sigma + \frac {n+\alpha }{p'}=0\). Then, repeating some arguments as we did in the subcritical case we may conclude the following estimate:

where \(C_1:= \Big (\displaystyle \int _{\mathbb {R}^n} \big < \tilde {x}\big >^{-n-2\delta }\, d\tilde {x}\Big )^{\frac {1}{p'}},\) that is,

From (32) it is obvious that \(I_R \le C_1 I_R^{\frac {1}{p}}\) and \(C_0 \le C_1 I_R^{\frac {1}{p}}\). Hence, we obtain

and

respectively. By substituting (34) into the left-hand side of (32) and calculating straightforwardly, we get

For any integer j ≥ 1, an iteration argument leads to

Now we choose the constant

in the assumption (3). Then, there exists a sufficiently large constant R 1 > 0 so that

for all R > R 1. This is equivalent to

Therefore, letting j →∞ in (35) we derive I R →∞, which is a contradiction to (33). Summarizing, the proof is completed. □

Let us now consider the case of a subcritical exponent to explain the estimate for the lifespan \(T_\varepsilon\) of solutions in Remark 1. We assume that u = u(t, x) is a local (in time) Sobolev solution to (1) in \([0,T)\times \mathbb {R}^n\). In order to prove the lifespan estimate, we replace the initial data \((0, u_1)\) by \((0, \varepsilon u_1)\) with a small constant ε > 0, where \(u_1 \in L^1\) satisfies the assumption (3). Hence, there exists a sufficiently large constant \(R_2 > 0\) so that we have

for any R > R 2. Repeating the steps in the above proofs we arrive at the following estimate:

with \(R= T^{\frac {1}{\alpha }}\). Finally, letting \(T\to T^-_\varepsilon \) we may conclude (5).

Remark 3

We want to underline that in the special case σ = 1 and \(\delta = \frac {1}{2}\) the authors in [4] have investigated the critical exponent \(p_{crit}=p_{crit}(n)= 1+ \frac {2}{n- 1}\). If we plug σ = 1 and \(\delta = \frac {1}{2}\) into the statements of Theorem 2, then the obtained results for the critical exponent p crit coincide.
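The substitution mentioned in Remark 3 is a one-line computation. The sketch below uses the critical exponent \(1+\frac{2\sigma}{n-\mathtt{k}^-}\) from Sect. 3.2 and the dimension restriction \(n > \mathtt{k}^-\) from Theorem 2.

```latex
\begin{aligned}
\sigma = 1,\ \delta = \tfrac12 \quad&\Longrightarrow\quad
  \mathtt{k}^- = \min\{\sigma; 2\delta\} = \min\{1; 1\} = 1, \\
1 + \frac{2\sigma}{n - \mathtt{k}^-} &= 1 + \frac{2}{n-1}
  \qquad \text{for } n > \mathtt{k}^- = 1, \text{ i.e. } n \ge 2.
\end{aligned}
```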

3.3 The Case that the Parameter σ Is Integer and the Parameter δ Is Fractional from (1, σ)

Proof

We follow ideas from the proof of Sect. 3.2. At first, we denote \(s_\delta := \delta - [\delta]\). Let us introduce test functions η = η(t) as in Sect. 3.2 and \(\varphi =\varphi (x):=\big < x\big >^{-n-2s_\delta }\). We can repeat exactly the estimates for \(J_1\) and \(J_2\) as we did in the proof of Sect. 3.2 to conclude

Let us turn to estimating \(J_3\), where δ is any fractional number in (1, σ). In the first step, applying Lemma 7 and Hölder’s inequality leads to

Now we can re-write \(\delta = m_\delta + s_\delta\), where \(m_\delta := [\delta] \ge 1\) is an integer and \(s_\delta\) is a fractional number in (0, 1). Employing Lemma 2 we derive

By the change of variables \(\tilde {x}:= R^{-1}x\) we also notice that

since \(m_\delta\) is an integer. Using the formula (18) we re-write

where \(q := n + 2s_\delta\). For simplicity, we introduce the following functions:

with \(k = 0, \cdots, m_\delta\). As a result, by Lemma 4 we arrive at

For this reason, performing the change of variables \(\tilde {t}:= R^{-\alpha }t\) we obtain

Here we used \(\partial _t \eta _R(t)= R^{-\alpha }\eta '(\tilde {t})\) and the assumption (24). After applying Lemma 3 with \(q = n + 2s_\delta\) and γ = δ, i.e. \(m = m_\delta\) and \(s = s_\delta\), we may conclude

Finally, combining (36)–(38) and repeating arguments as in Sect. 3.2 we may complete the proof of Theorem 2. □

3.4 The Case that the Parameter σ Is Fractional from (1, ∞) and the Parameter δ Is Integer

Proof

We follow ideas from the proofs of Sects. 3.2 and 3.3. At first, we denote \(s_\sigma := \sigma - [\sigma]\). Let us introduce test functions η = η(t) as in Sect. 3.2 and \(\varphi =\varphi (x):=\big < x\big >^{-n-2s_\sigma }\). Then, repeating the proof of Sects. 3.2 and 3.3 we may conclude what we wanted to prove. □

3.5 The Case that the Parameter σ Is Fractional from (1, ∞) and the Parameter δ Is Fractional from (0, 1)

Proof

We follow ideas from the proofs of Sects. 3.2 and 3.4. At first, we denote \(s_\sigma := \sigma - [\sigma]\). Next, we put \(s^*:= \min \{s_\sigma ,\,\delta \}\). Clearly, \(s^*\) is a fractional number in (0, 1). Let us introduce test functions η = η(t) as in Sect. 3.2 and \(\varphi =\varphi (x):=\big < x\big >^{-n-2s^*}\). Then, repeating the proof of Sects. 3.2 and 3.4 we may conclude what we wanted to prove. □

3.6 The Case that the Parameter σ Is Fractional from (1, ∞) and the Parameter δ Is Fractional from (1, σ)

Proof

We follow ideas from the proofs of Sects. 3.2 and 3.5. At first, we denote \(s_\sigma := \sigma - [\sigma]\) and \(s_\delta := \delta - [\delta]\). Next, we put \(s^*:= \min \{s_\sigma ,\,s_\delta \}\). Clearly, \(s^*\) is a fractional number in (0, 1). Let us introduce test functions η = η(t) as in Sect. 3.2 and \(\varphi =\varphi (x):=\big < x\big >^{-n-2s^*}\). Then, repeating the proof of Sects. 3.2 and 3.5 we may conclude what we wanted to prove. □

4 Critical Exponent Versus Critical Nonlinearity

In Remark 2 we explained that for some models (1) we determined the critical exponent \(p_{crit}= p_{crit}(n)\) in the scale of power nonlinearities \(\{|u|^p\}_{p>1}\). But is this observation sharp? In the paper [5] the authors discussed this issue for the classical damped wave model with power nonlinearity. Here we want to extend this idea to some models of type (1). For this reason, we discuss the following model:

where σ ≥ 1, \(\delta \in [0,\frac {\sigma }{2}]\) and \(p_{crit}(n)= 1+\frac {2\sigma }{n- 2\delta }\) with n ≥ 1. Here the function μ = μ(|u|) is a suitable modulus of continuity.

4.1 Main Results

First we state a global (in time) existence result of small data Sobolev solutions to (39).

Theorem 3 (Global Existence)

Let σ ≥ 1, \(\delta \in [0,\frac {\sigma }{2}]\) and m ∈ [1, 2). Let 0 < θ ≤ σ. We assume the conditions

Moreover, we suppose the following assumptions of modulus of continuity:

and

with a sufficiently small constant C 0 > 0. Then, there exists a constant ε 0 > 0 such that for any small data

satisfying the assumption \(\|u_0\|{ }_{L^m \cap H^\theta }+ \|u_1\|{ }_{L^m \cap L^2} \le \varepsilon _0,\) we have a uniquely determined global (in time) small data Sobolev solution

to (39). The following estimates hold:

Now we state a blow-up result to (39).

Theorem 4 (Blow-Up)

Let σ ≥ 1 and \(\delta \in [0,\frac {\sigma }{2}]\) be integer numbers. We assume that we choose the initial data \(u_0 = 0\) and \(u_1 \in L^1\) satisfying the following relation:

Moreover, we suppose the following assumption of modulus of continuity:

and

where C 0 > 0 is a sufficiently small constant. Then, there is no global (in time) Sobolev solution to (39).

In the following we restrict ourselves to prove the blow-up result.

4.2 Proof of Theorem 4

The ideas of the following proof are based on the recent paper [5] of the second author and his collaborators, in which the authors considered (39) with σ = 1 and δ = 0. For simplicity, we use the abbreviation \(p_c:= p_{crit}(n)= 1+\frac {2\sigma }{n- 2\delta }\) for (39) in the following proof.

Proof of Theorem 4

First, we introduce a test function φ = φ(τ) having the following properties:

Moreover, we also introduce the function φ ∗ = φ ∗(τ) satisfying

Let R be a large parameter in [0, ∞). We define the following two functions:

and

Then it is clear that

Now we define the functional

Let us assume that u = u(t, x) is a global (in time) Sobolev solution to (39). After multiplying the Eq. (39) by \(\phi_R = \phi_R(t, x)\), we carry out partial integration to derive

Because of the assumption (43), there exists a sufficiently large constant R 0 > 0 such that for all R > R 0 it holds

Consequently, we obtain

In order to estimate J R, firstly we have

Further calculations lead to

To control \((-\Delta)^{\sigma} \phi_R(t,x)\), we shall apply Lemma 8 as a main tool. Indeed, we divide our consideration into three sub-steps as follows:

- Step 1: Applying Lemma 8 with \(h(z)= \frac {z^{\sigma -\delta }+t}{R}\) and \(z = f(x) = |x|^2\) we derive the following estimate for |α| ≥ 1:

$$\displaystyle \begin{aligned} &\Big|\partial_x^\alpha \Big(\frac{|x|{}^{2(\sigma-\delta)}+t}{R}\Big)\Big| \\ &\qquad \le \sum_{k=1}^{|\alpha|} \frac{|x|{}^{2(\sigma-\delta)-2k}}{R}\left(\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= |\alpha| \\ |\gamma_i|\ge 1}}\big|\partial_x^{\gamma_1} \big(|x|{}^2\big)\big| \cdots \big|\partial_x^{\gamma_k} \big(|x|{}^2\big)\big|\right) \\ &\qquad \le \sum_{k=1}^{|\alpha|} \frac{|x|{}^{2(\sigma-\delta)-2k}}{R}\left(\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= |\alpha| \\ 1\le |\gamma_i|\le 2}}\big|\partial_x^{\gamma_1} \big(|x|{}^2\big)\big| \cdots \big|\partial_x^{\gamma_k} \big(|x|{}^2\big)\big|\right) \\ &\qquad \lesssim \sum_{k=1}^{|\alpha|} \frac{|x|{}^{2(\sigma-\delta)-2k}}{R} \left(\displaystyle\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= |\alpha| \\ 1\le |\gamma_i|\le 2}}|x|{}^{2-|\gamma_1|} \cdots |x|{}^{2-|\gamma_k|}\right) \\ &\qquad \lesssim \sum_{k=1}^{|\alpha|} \frac{|x|{}^{2(\sigma-\delta)-2k}}{R} |x|{}^{2k-|\alpha|} \lesssim \frac{|x|{}^{2(\sigma-\delta)-|\alpha|}}{R}. \end{aligned} $$

This estimate holds for |α| ≤ 2(σ − δ). However, it remains valid for all |α| ≥ 1 and small |x| as well. More precisely, the singularity appearing in the case |α| > 2(σ − δ) does not cause any difficulty in the further treatment.

- Step 2: Applying Lemma 8 with \(h(z) = \varphi(z)\) and \(z=f(x)= \frac {|x|{ }^{2(\sigma -\delta )}+ t}{R}\) we get for all |α| ≥ 1 the following estimate:

$$\displaystyle \begin{aligned} &\Big|\partial_x^\alpha \varphi\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big| \\ &\quad \le \sum_{k=1}^{|\alpha|}\Big|\varphi^{(k)} \Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big) \Big| \\ &\qquad \times \left(\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= |\alpha| \\ 1 \leq |\gamma_i|\leq 2(\sigma-\delta)}} \Big|\partial_x^{\gamma_1}\Big(\frac{|x|{}^{2(\sigma-\delta)}+t}{R}\Big)\Big| \cdots \Big|\partial_x^{\gamma_k}\Big(\frac{|x|{}^{2(\sigma-\delta)}+t}{R}\Big)\Big| \right) \end{aligned} $$

$$\displaystyle \begin{aligned} &\quad \le \sum_{k=1}^{|\alpha|}\Big|\varphi^{(k)} \Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big| \\ &\qquad \times \left(\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= |\alpha| \\ 1 \leq |\gamma_i|\leq 2(\sigma-\delta)}} \frac{|x|{}^{2(\sigma-\delta)- |\gamma_1|}}{R} \cdots \frac{|x|{}^{2(\sigma-\delta)- |\gamma_k|}}{R} \right) \\ &\quad \lesssim \sum_{k=1}^{|\alpha|} \Big(\frac{|x|{}^{2(\sigma-\delta)}}{R}\Big)^k |x|{}^{-|\alpha|} \lesssim \frac{|x|{}^{2(\sigma-\delta)- |\alpha|}}{R}\quad \big(\text{since }\,\, |x|{}^{2(\sigma-\delta)} \le R \text{ in } Q^*_R\big). \end{aligned} $$

- Step 3: Applying Lemma 8 with \(h(z) = z^{n+2(\sigma-\delta)}\) and \(z=f(x)= \varphi \big (\frac {|x|{ }^{2(\sigma -\delta )}+ t}{R}\big )\) we obtain

$$\displaystyle \begin{aligned} &\big|(-\Delta)^{\sigma} \phi_R(t,x)\big| \lesssim \sum_{|\alpha|=2\sigma}\big|\partial_x^\alpha \phi_R(t,x)\big| \\ &\quad \lesssim \sum_{k=1}^{2\sigma} \Big(\varphi\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n+2(\sigma-\delta)- k} \\ &\qquad \times \left(\sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= 2\sigma \\ |\gamma_i|\ge 1}} \Big|\partial_x^{\gamma_1} \varphi\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big|\, \cdots \, \Big|\partial_x^{\gamma_k} \varphi\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R} \Big)\Big| \right) \\ &\quad \lesssim \sum_{k=1}^{2\sigma} \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n+2(\sigma-\delta)- k} \\ &\qquad \times \sum_{\substack{|\gamma_1|+\cdots+|\gamma_k|= 2\sigma \\ |\gamma_i|\ge 1}} \frac{|x|{}^{2(\sigma-\delta)- |\gamma_1|}}{R} \cdots \frac{|x|{}^{2(\sigma-\delta)- |\gamma_k|}}{R} \end{aligned} \tag{49}$$

$$\displaystyle \begin{aligned} &\quad \lesssim \sum_{k=1}^{2\sigma} \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n+2(\sigma-\delta)- k}\,\, \frac{|x|{}^{2k(\sigma-\delta)- 2\sigma}}{R^k} \\ &\quad \lesssim \frac{1}{R^{\frac{\sigma}{\sigma-\delta}}} \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n- 2\delta}\quad \big(\text{since }\,\, |x|{}^{2(\sigma-\delta)} \approx R \text{ in } Q^*_R\big). \end{aligned} \tag{50}$$

It is clear that if δ = 0, then \(\big|(-\Delta)^{\delta}\partial_t \phi_R(t,x)\big|\) was estimated in (47). For the case \(\delta \in (0,\frac {\sigma }{2}]\), we can proceed in an analogous way as we controlled \(\big|(-\Delta)^{\sigma} \phi_R(t,x)\big|\) to derive

$$\displaystyle \begin{aligned} \big|(-\Delta)^{\delta}\partial_t \phi_R(t,x)\big| \lesssim \frac{1}{R^{\frac{\sigma}{\sigma-\delta}}} \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n+2(\sigma-2\delta)-1}. \end{aligned} \tag{51}$$

From (47) to (51), we arrive at the following estimate:

$$\displaystyle \begin{aligned} &\big|\partial_t^2 \phi_R(t,x)+ (-\Delta)^{\sigma} \phi_R(t,x)- (-\Delta)^{\delta}\partial_t \phi_R(t,x)\big| \\ &\qquad \lesssim \frac{1}{R^{\frac{\sigma}{\sigma-\delta}}} \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{R}\Big)\Big)^{n-2\delta}= \frac{1}{R^{\frac{\sigma}{\sigma-\delta}}}\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}. \end{aligned} $$

Hence, we may conclude

$$\displaystyle \begin{aligned} J_R= |J_R| \lesssim \frac{1}{R^{\frac{\sigma}{\sigma-\delta}}} \int_{Q_R}|u(t,x)|\, \big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t). \end{aligned} \tag{52}$$

Now we turn to estimating the above integral. To do this, we introduce the function \(\Psi (s)= s^{p_c}\mu (s)\). Then, we derive

$$\displaystyle \begin{aligned} &\Psi\Big( |u(t,x)|\, \big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\Big) \\ &\qquad = |u(t,x)|{}^{p_c}\, \big(\phi^*_R(t,x)\big)^{\frac{p_c(n-2\delta)}{n+2(\sigma-\delta)}} \mu\Big( |u(t,x)|\, \big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\Big) \\ &\qquad \le |u(t,x)|{}^{p_c}\, \phi^*_R(t,x) \mu\big( |u(t,x)|\big)= \Psi\big( |u(t,x)|\big)\, \phi^*_R(t,x). \end{aligned} \tag{53}$$

Here we used the increasing property of the function μ = μ(s) and the relation

$$\displaystyle \begin{aligned}0\le \big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}} \le 1. \end{aligned}$$

Due to the assumption (44), we may verify that Ψ is a convex function on a small interval \((0, c_0]\) by the following relation:

$$\displaystyle \begin{aligned} \Psi''(s)= s^{p_c-2}\Big(p_c (p_c-1)\mu(s)+2p_c\, s\mu'(s)+ s^2 \mu''(s)\Big) \ge 0. \end{aligned}$$

Moreover, we can choose a convex continuation of Ψ outside this interval to guarantee that Ψ is convex on [0, ∞). Applying Proposition 1 with h(s) = Ψ(s), \(f(t,x)= |u(t,x)|\big (\phi ^*_R(t,x)\big )^{\frac {n-2\delta }{n+2(\sigma -\delta )}}\) and γ ≡ 1 gives the following estimate:

$$\displaystyle \begin{aligned} &\Psi\Big(\frac{\int_{Q^*_R} |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t)}{\int_{Q^*_R} 1\,d(x,t)}\Big) \\ &\qquad \le \frac{\int_{Q^*_R} \Psi\Big( |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\Big)\,d(x,t)}{\int_{Q^*_R} 1\,d(x,t)}. \end{aligned} $$

We may compute

$$\displaystyle \begin{aligned} \int_{Q^*_R} 1\,d(x,t)\approx R^{1+ \frac{n}{2(\sigma-\delta)}}. \end{aligned}$$

Hence, we get

$$\displaystyle \begin{aligned} &\Psi\Big(\frac{\int_{Q^*_R} |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}\Big) \\ &\qquad \le \frac{\int_{Q^*_R} \Psi\Big( |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\Big)\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}} \\ &\qquad \le \frac{\int_{Q_R} \Psi\Big( |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\Big)\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}. \end{aligned} \tag{54}$$

Combining the estimates (53) and (54) we may arrive at

$$\displaystyle \begin{aligned} &\Psi\Big(\frac{\int_{Q^*_R} |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}\Big) \\ &\qquad \le \frac{\int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi^*_R(t,x)\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}. \end{aligned} \tag{55}$$

Since μ = μ(s) is a strictly increasing function, it immediately follows that Ψ = Ψ(s) is also a strictly increasing function on [0, ∞). For this reason, from (55) we deduce

$$\displaystyle \begin{aligned} &\int_{Q_R} |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t) \\ &\qquad = \int_{Q^*_R} |u(t,x)|\big(\phi^*_R(t,x)\big)^{\frac{n-2\delta}{n+2(\sigma-\delta)}}\,d(x,t) \\ &\qquad \le R^{1+ \frac{n}{2(\sigma-\delta)}}\,\Psi^{-1}\Big(\frac{\int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi^*_R(t,x)\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}\Big). \end{aligned} \tag{56}$$

From (46), (52) and (56) we may conclude

$$\displaystyle \begin{aligned} I_R \lesssim R^{\frac{n-2\delta}{2(\sigma-\delta)}}\,\Psi^{-1}\Big(\frac{\int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi^*_R(t,x)\,d(x,t)}{R^{1+ \frac{n}{2(\sigma-\delta)}}}\Big) \end{aligned} \tag{57}$$

for all \(R > R_0\). Next we introduce the following two functions:

$$\displaystyle \begin{aligned} g(r)= \int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi^*_r(t,x)\,d(x,t) \quad \text{ with } r\in (0,\infty) \end{aligned}$$

and

$$\displaystyle \begin{aligned} G(R)= \int_0^R g(r)r^{-1}\,dr. \end{aligned}$$

Then, we re-write

$$\displaystyle \begin{aligned} G(R) &= \int_0^R \Big(\int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi^*_r(t,x)\,d(x,t)\Big) r^{-1}\,dr \\ &= \int_{Q_R} \Psi\big( |u(t,x)|\big)\Big(\int_0^R \Big(\varphi^*\Big(\frac{|x|{}^{2(\sigma-\delta)}+ t}{r}\Big)\Big)^{n+2(\sigma-\delta)} r^{-1}\,dr\Big)\,d(x,t). \end{aligned} $$

Carrying out the change of variables \(\tilde {r}= \frac {|x|{ }^{2(\sigma -\delta )}+ t}{r}\) we derive

(58)

Moreover, the following relation holds:

$$\displaystyle \begin{aligned} G'(R)R= g(R)= \int_{Q_R} \Psi\big( |u(t,x)|\big)\, \phi_R^*(t,x)\,d(x,t). \end{aligned} \tag{59}$$

From (57) to (59) we conclude

$$\displaystyle \begin{aligned} \frac{G(R)}{\log(1+e)} \le I_R \le C_1 R^{\frac{n-2\delta}{2(\sigma-\delta)}}\,\Psi^{-1}\Big(\frac{G'(R)}{R^{\frac{n}{2(\sigma-\delta)}}}\Big) \end{aligned}$$

for all \(R > R_0\) and with a suitable positive constant \(C_1\). This implies

$$\displaystyle \begin{aligned} \Psi\Big(\frac{G(R)}{C_2 R^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big) \le \frac{G'(R)}{R^{\frac{n}{2(\sigma-\delta)}}} \end{aligned}$$

for all \(R > R_0\) and \(C_2:= C_1\log (1+e)> 0\). By the definition of the function Ψ, the above inequality is equivalent to

$$\displaystyle \begin{aligned} \Big(\frac{G(R)}{C_2 R^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big)^{p_c}\mu\Big(\frac{G(R)}{C_2 R^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big) \le \frac{G'(R)}{R^{\frac{n}{2(\sigma-\delta)}}} \end{aligned}$$

for all \(R > R_0\). Therefore, we have

$$\displaystyle \begin{aligned} \frac{1}{C_3 R}\mu\Big(\frac{G(R)}{C_2 R^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big) \le \frac{G'(R)}{\big(G(R)\big)^{p_c}} \end{aligned}$$

for all \(R > R_0\) and \(C_3:= C_2^{p_c}> 0\). Because G = G(R) is an increasing function, the following inequality holds for all \(R > R_0\):

$$\displaystyle \begin{aligned} \frac{1}{C_3 R}\, \mu\Big(\frac{G(R_0)}{C_2 R^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big) \le \frac{G'(R)}{\big(G(R)\big)^{p_c}}. \end{aligned}$$

After denoting \(\tilde {s}:= R\) and integrating both sides over \([R_0, R]\) we arrive at

$$\displaystyle \begin{aligned} \frac{1}{C_3} \int_{R_0}^R \frac{1}{\tilde{s}}\, \mu\Big(\frac{1}{C_4 \tilde{s}^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big)\,d\tilde{s} &\le \int_{R_0}^R\frac{G'(\tilde{s})}{\big(G(\tilde{s})\big)^{p_c}}\,d\tilde{s} \\ &= \frac{n-2\delta}{2\sigma} \Big(\frac{1}{\big(G(R_0)\big)^{\frac{2\sigma}{n-2\delta}}}- \frac{1}{\big(G(R)\big)^{\frac{2\sigma}{n-2\delta}}}\Big) \\ &\le \frac{n-2\delta}{2\sigma \big(G(R_0)\big)^{\frac{2\sigma}{n-2\delta}}}, \end{aligned}$$
where \(C_4:= \frac{C_2}{G(R_0)}> 0\). Letting \(R \to \infty\) leads to

$$\displaystyle \begin{aligned} \frac{1}{C_3} \int_{R_0}^\infty \frac{1}{\tilde{s}}\, \mu\Big(\frac{1}{C_4 \tilde{s}^{\frac{n-2\delta}{2(\sigma-\delta)}}}\Big)\,d\tilde{s} \le \frac{n-2\delta}{2\sigma \big(G(R_0)\big)^{\frac{2\sigma}{n-2\delta}}}. \end{aligned}$$
Finally, using the change of variables \(s= C_4 \tilde{s}^{\frac{n-2\delta}{2(\sigma-\delta)}}\) we may conclude

$$\displaystyle \begin{aligned} C\int_{C_0}^\infty \frac{\mu\big(\frac{1}{s}\big)}{s}\,ds \le \frac{n-2\delta}{2\sigma \big(G(R_0)\big)^{\frac{2\sigma}{n-2\delta}}}, \end{aligned}$$
where \(C:= \frac{2(\sigma-\delta)}{C_3(n-2\delta)}> 0\) and \(C_0:= C_4 R_0^{\frac{n-2\delta}{2(\sigma-\delta)}}> 0\) is a sufficiently large constant. This contradicts the assumption (45). Summarizing, the proof of Theorem 4 is completed.

□
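
For the reader's convenience, the elementary computations behind the last steps of the proof can be sketched as follows. The abbreviation \(a\) is introduced here only for illustration and is not part of the original argument, and we assume \(p_c= 1+ \frac{2\sigma}{n-2\delta}\), in agreement with the exponent appearing in the integration step above.

$$\displaystyle \begin{aligned} a:= \frac{n-2\delta}{2(\sigma-\delta)}:\qquad a\,p_c &= \frac{n-2\delta}{2(\sigma-\delta)}\Big(1+ \frac{2\sigma}{n-2\delta}\Big)= \frac{n-2\delta+2\sigma}{2(\sigma-\delta)}= \frac{n}{2(\sigma-\delta)}+ 1, \\ \frac{d}{d\tilde{s}}\Big(-\frac{n-2\delta}{2\sigma}\big(G(\tilde{s})\big)^{-\frac{2\sigma}{n-2\delta}}\Big) &= \frac{G'(\tilde{s})}{\big(G(\tilde{s})\big)^{p_c}}, \\ s= C_4 \tilde{s}^{a} \quad &\Longrightarrow \quad \frac{ds}{s}= a\,\frac{d\tilde{s}}{\tilde{s}}. \end{aligned}$$

The first identity explains why the powers of R combine to the single factor \(\frac{1}{R}\), the second gives the primitive used when integrating over \([R_0, R]\), and the third shows that the final change of variables only produces a positive constant factor in front of the integral.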

Remark 4

From the condition (42) in Theorem 3 and the condition (45) in Theorem 4, we recognize that the critical exponent \(p_{crit}= 1+ \frac{2\sigma}{n- 2\delta}\) for (39) is really sharp in the scale of power nonlinearities \(\{|u|^p\}_{p>1}\) in the case \(\delta \in [0,\frac{\sigma}{2}]\), i.e. for “parabolic like models”. However, up to now this observation remains an open problem for “σ-evolution like models” in the remaining case \(\delta \in (\frac{\sigma}{2},\sigma]\), the so-called “hyperbolic like models” or “wave like models” in the case σ = 1.
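
As a quick illustration of the formula for \(p_{crit}\) (the parameter choices below are ours and serve only as an example), one may insert particular values of σ and δ from the range \(\delta \in [0,\frac{\sigma}{2}]\):

$$\displaystyle \begin{aligned} \sigma= 1,\ \delta= 0: \quad p_{crit}&= 1+ \frac{2}{n}, \\ \sigma= 1,\ \delta= \tfrac{1}{2}: \quad p_{crit}&= 1+ \frac{2}{n-1} \quad (n\ge 2). \end{aligned}$$

The first value coincides with the well-known Fujita exponent.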

References

N. Abatangelo, S. Jarohs, A. Saldaña, On the loss of maximum principles for higher-order fractional Laplacians. Proc. Amer. Math. Soc. 146, 4823–4835 (2018)

M. D’Abbicco, M.R. Ebert, A new phenomenon in the critical exponent for structurally damped semi-linear evolution equations. Nonlinear Anal. 149, 1–40 (2017)

M. D’Abbicco, M.R. Ebert, The critical exponent for nonlinear damped σ-evolution equations (preprint, 2020). arXiv:2005.10946

M. D’Abbicco, M. Reissig, Semilinear structural damped waves. Math. Methods Appl. Sci. 37, 1570–1592 (2014)

M.R. Ebert, G. Girardi, M. Reissig, Critical regularity of nonlinearities in semilinear classical damped wave equations. Math. Ann. 378, 1311–1326 (2019). https://doi.org/10.1007/s00208-019-01921-5

Y.V. Egorov, B.W. Schulze, Pseudo-Differential Operators, Singularities, Applications (Birkhäuser, Basel, 1997)

K. Fujiwara, A note for the global nonexistence of semirelativistic equations with nongauge invariant power type nonlinearity. Math. Methods Appl. Sci. 41, 4955–4966 (2018)

M. Kwaśnicki, Ten equivalent definitions of the fractional Laplace operator. Fract. Calc. Appl. Anal. 20, 7–51 (2017)

Z. Pavić, Generalized inequalities for convex functions. J. Math. Extension 10, 77–87 (2016)

L. Silvestre, Regularity of the obstacle problem for a fractional power of the Laplace operator. Commun. Pure Appl. Math. 60(1), 67–112 (2007)

C.G. Simander, On Dirichlet Boundary Value Problem, an \(L^p\)-Theory Based on a Generalization of Garding’s Inequality. Lecture Notes in Mathematics, vol. 268 (Springer, Berlin, 1972)

H. Triebel, Theory of Function Spaces (Birkhäuser, Basel, 1983)

Acknowledgements

The first author would like to thank the Organizing Committee for giving him the opportunity to participate in the INdAM Workshop “Anomalies in Evolution Equations”. The research of the first author (Tuan Anh Dao) is funded (or partially funded) by the Simons Foundation Grant Targeted for Institute of Mathematics, Vietnam Academy of Science and Technology. All the best to Prof. Massimo Cicognani and Prof. Michael Reissig on the occasion of their 60th birthdays.

Appendix

Proposition 1 (A Generalized Jensen’s Inequality)

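Let γ = γ(x) be a function that is defined and nonnegative almost everywhere on Ω and positive on a set of positive measure. Then, for each convex function h on \(\mathbb{R}\) the following inequality holds:

$$\displaystyle \begin{aligned} h\bigg(\frac{\int_\Omega f(x)\gamma(x)\,dx}{\int_\Omega \gamma(x)\,dx}\bigg) \le \frac{\int_\Omega h\big(f(x)\big)\gamma(x)\,dx}{\int_\Omega \gamma(x)\,dx}, \end{aligned}$$

where f is any nonnegative function for which all the above integrals are meaningful.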

The proof of this result can be found in [5, 9].

Lemma 8 (Useful Lemma)

The following formula for the derivative of a composed function holds for any multi-index α:

where h = h(z) and \(h^{(k)}(z)=\frac{d^k h(z)}{d z^k}\).

The result can be found in [11] on page 202.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Dao, T.A., Reissig, M. (2021). Blow-Up Results for Semi-Linear Structurally Damped σ-Evolution Equations. In: Cicognani, M., Del Santo, D., Parmeggiani, A., Reissig, M. (eds) Anomalies in Partial Differential Equations. Springer INdAM Series, vol 43. Springer, Cham. https://doi.org/10.1007/978-3-030-61346-4_10

DOI: https://doi.org/10.1007/978-3-030-61346-4_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-61345-7

Online ISBN: 978-3-030-61346-4

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)