Abstract

In the near future, the 5th generation (5G) of wireless systems will be well established. They will consist of an integration of different techniques, including distributed antenna systems and massive multiple-input multiple-output (MIMO) systems, and the overall performance will highly depend on the channel coding techniques employed. Due to the nature of future wireless networks, space–time codes are no longer merely an object of choice, but will often appear naturally in the communications setting. However, as the involved communication devices often exhibit a modest computational power, the complexity of the codes to be utilised should be reasonably low for possible practical implementation. Fast-decodable codes enjoy reduced complexity of maximum-likelihood (ML) decoding due to a smart inner structure allowing for parallelisation in the ML search. The complexity reductions considered in this chapter are entirely owing to the algebraic structure of the considered codes, and could be further improved by employing non-ML decoding methods, however yielding suboptimal performance. The aim of this chapter is twofold. First, we provide a tutorial introduction to space–time coding and study powerful algebraic tools for their design and construction. Secondly, we revisit algebraic techniques used for reducing the worst-case decoding complexity of both single-user and multiuser space-time codes, alongside with general code families and illustrative examples.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

3.1 Introduction

Let us start this chapter by introducing, very briefly, the reader to the field of algebraic space–time coding. While there are various design criteria to be considered as well as a plethora of code constructions for a variety of different channel models and communications settings, we will here only review the developments most relevant to the rest of this chapter.

The first space–time code, the Alamouti code [1], was introduced in 1998 and gave rise to a massive amount of research in the attempt to construct well-performing codes for various multi-antenna wireless communications settings. It was discovered that the code matrices constituting this particular code actually depict an algebraic structure known as the Hamiltonian quaternions, and by restriction to Lipschitz (i.e., integral) quaternions, the (unconstrained) code becomes a lattice. As Hamiltonian quaternions are the most popular example of a division algebra, this finding prompted the study of general division algebra space–time lattice codes [4, 34].

Division algebras are related to achieving full diversity by maximising the rank of the code matrices [38]. Soon it was noticed that by choosing the related field extensions carefully, one can achieve non-vanishing determinants (NVD) [4] for the codewords, implying a non-vanishing coding gain [38]. As the coding gain is inversely proportional to the decoding error probability, this in turn prevents the error probability from blowing up. A related notion, the diversity–multiplexing gain [43] captures the tradeoff between the decay speed of the decoding error probability and available degrees of freedom. It is known that for symmetric systems, that is, with an equal number of transmit and receive antennas, full-rate space-time codes with the NVD property achieve the optimal tradeoff of the channel.

Several explicit constructions of space–time codes based on cyclic division algebras exist in the literature. For instance, Perfect space-time codes and their generalisations [5, 12, 30] provide orthogonal lattices for any number of antennas, whereas maximal order codes [14, 15, 39] optimise the coding gain, while giving up on the orthogonality of the underlying lattice.

In the multiuser settings considered in this chapter, multiple users are communicating to a joint destination, with or without cooperating with each other. When cooperation is allowed, it is possible to take advantage of intermediate distributed relays which aid the active transmitter in the communication process. Various protocols exist for enabling this type of diversity—the one considered here is the non-orthogonal half-duplex amplify-and-forward protocol, see [42]. The non-cooperative case is referred to as the multiple access channel (MAC), where users transmit signals independently of each other. Some algebraic MAC codes are presented in [22, 23], among others.

One of the biggest obstacles in utilising space-time lattice codes and realising the theoretical promise of performance gains is their decoding complexity. Namely, maximum-likelihood (ML) decoding boils down to closest lattice point search, the complexity of which grows exponentially in the lattice dimension. More efficient methods exist, most prominently sphere decoding [41], which limits the search to a hypersphere of a given radius. However, the complexity remains prohibitive for higher dimensional lattices. To this end, several attempts have been made to reduce the ML decoding complexity. In principle, there are two ways to do this: either one can resort to reduced-complexity decoders yielding suboptimal performance, or try to build the code lattice in such a way that its structure naturally allows for parallelisation of the decoding process, hence yielding reduction in the dimensionality of the search. In this chapter, we are interested in the latter: we will show how to design codes that inherently yield reduced complexity thanks to a carefully chosen underlying algebraic structure.

On our way to this goal, we will introduce the reader to the basics of lattices and algebraic number theory, to the extent that is relevant to this chapter. We will also lay out the typical channel models for the considered communications settings. Whenever we cannot explain everything in full detail in the interest of space, suitable references will be given for completeness. We assume the reader is familiar with basic abstract algebra and possesses some mathematical maturity, while assuming no extensive knowledge on wireless communications.

The rest of the chapter is organised as follows. We begin in Sect. 3.2 by familiarising the reader with the important notion of lattices and recall related results. Following a section introducing concepts and results from algebraic number theory, we study a particular class of central simple algebras, specifically cyclic division algebras, and their orders. We then move on to providing a background in wireless communications in Sect. 3.3, introducing the well-known multiple-input multiple-output fading channel model and related performance parameters. As a coding technique employed in this multiple-antenna communications setup, we then introduce the main object of this chapter, space–time codes. We recall code design criteria, and furthermore show how codes can be constructed from cyclic division algebras. In Sect. 3.4, maximum-likelihood decoding is introduced, and we discuss a possible decoding complexity reduction by algebraic means, defining the concept of fast-decodable space–time codes. The definition of fast decodability is then further refined, which allows us to consider more specific families of space–time codes with reduced decoding complexity. We further recall a useful iterative method for code construction. Finally, in Sect. 3.5 we discuss two specific communication scenarios as well as explicit methods to construct fast-decodable space–time codes.

3.2 Algebraic Tools for Space–Time Coding

Although space-time codes are primarily a tool for data transmission, they are of a highly mathematical nature. Indeed, design criteria derived for minimising the probability of incorrect decoding, which we will revisit in Sect. 3.3.2.1, can be met by ensuring certain algebraic properties of the underlying structure used for code construction. For this reason, we first devote a chapter to the mathematical notions needed for space–time code analysis and design.

We start with basic concepts and results about lattices, objects which are of particular interest as almost all space–time codes with good performance arise from lattice structures. This is both to ensure a linear structure—a lattice is simply a free \(\mathbb {Z}\)-module, thus an abelian group—as well as to avoid accumulation points at the receiver, to which end the discreteness property of a lattice is useful. Our main references for all lattice related results are [10, 11].

In a successive section, we then introduce relevant tools and objects from algebraic number theory, such as number fields, their rings of integers, and prime ideal factorisation. These tools will play a crucial role in the construction of space–time codes. As references, we have [28, 29].

Most importantly, we finally introduce central simple algebras and their orders, the main objects that will determine the performance of the constructed codes. Over number fields, every central simple algebra is cyclic, and we study these in detail. We refer to [6, 27, 31] for good general references.

3.2.1 Lattices

We begin with the simplest definition of a lattice in the ambient space \(\mathbb {R}^n\).

Definition 3.1

A lattice\(\Lambda \subset \mathbb {R}^n\) is the \(\mathbb {Z}\)-span of a set of vectors of \(\mathbb {R}^n\) that are linearly independent over \(\mathbb {R}\).

Note that we do not require that the number of vectors spanning Λ equals the dimension n. Indeed, any lattice is isomorphic to \(\mathbb {Z}^t\) as groups for t ≤ n. A lattice is thus a free abelian group of rank \( \operatorname {\mathrm {rk}}\left (\Lambda \right ) = t\), and is called full-rank or shortly full, if the rank and dimension coincide, i.e., t = n. We give an alternative and useful group theoretic definition.

Definition 3.2

A lattice\(\Lambda \subset \mathbb {R}^n\) is a discreteFootnote 1 subgroup of \(\mathbb {R}^n\).

A lattice \(\Lambda \subseteq \mathbb {R}^n\) can hence be expressed as a set

with \({\mathbf {b}}_i \in \mathbb {R}^n\) (and the z i uniquely determined by x). We say that \(\left \{{\mathbf {b}}_1,\ldots ,{\mathbf {b}}_t\right \}\) forms a \(\mathbb {Z}\)-basis of Λ.

We can conveniently define a generator matrix and the corresponding Gram matrix for Λ

so that every element of Λ can be expressed as x = M Λ z for some \(\mathbf {z} \in \mathbb {Z}^n\).

Example 3.1

The simplest lattice is the integer lattice \(\mathbb {Z}^n\) in arbitrary dimension n ≥ 1. A generator and Gram matrix for \(\mathbb {Z}^n\) is simply the n × n identity matrix.

A more interesting example in dimension n = 2 is the hexagonal latticeA 2. A \(\mathbb {Z}\)-basis for this lattice can be taken to be b 1 = (1, 0)t and \({\mathbf {b}}_2 = (-1/2,\sqrt {3}/2)^t\). A graphical representation of the lattice, as well as a generator and Gram matrix with respect to this basis are presented in Fig. 3.1.

To each lattice Λ, we can associate its fundamental parallelotope, defined as \(\mathcal {P}_{\Lambda } := \left \{\left . M_{\Lambda }\mathbf {y}\right | \mathbf {y} \in [0,1)^n \right \}\). Note that we can recover \(\mathbb {R}^n\) as a disjoint union of the sets \(\mathbf {x} + \mathcal {P}_{\Lambda }\) for all x ∈ Λ. Since M Λ contains a \(\mathbb {Z}\)-basis of Λ, any change of basis is obtained via an integer matrix with determinant ± 1. Hence, the Lebesgue measure of \(\mathcal {P}_{\Lambda }\) is invariant under change of basis. Thus, we define the volume of a lattice \(\Lambda \subset \mathbb {R}^n\) as the Lebesgue measure of its fundamental parallelotope,

We have defined a lattice to be a discrete subgroup of \(\mathbb {R}^n\) and they are, by definition, free \(\mathbb {Z}\)-modules. It is however possible and often desirable to extend the definition to other rings and ambient spaces, such as the ring of integers of a number field, or an order in a cyclic division algebra. In this more general context, we define a lattice Λ to be a discrete and finitely generated abelian subgroup of a real or complex ambient space V . In the previous derivations, we have set \(V = \mathbb {R}^n\). Of interest for purposes of space–time coding is to consider lattices in \(V = \operatorname {\mathrm {Mat}}(n,\mathbb {C})\). In this case, we can also identify a full lattice in V with a full lattice in \(\mathbb {R}^{2n^2}\) via the \(\mathbb {R}\)-linear isometry

We have ∥U∥F = ∥ι(U)∥, where ∥⋅∥ (resp. ∥⋅∥F) denotes the Euclidean (resp. Frobenius) norm, and ι maps full lattices in V to full lattices in the target Euclidean space. This map will be crucial for decoding considerations in later sections.

Let \(\Lambda \subset \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) be a full lattice with \(\mathbb {Z}\)-basis \(\left \{B_1,\ldots ,B_n\right \},\ B_i \in \operatorname {\mathrm {Mat}}(n,\mathbb {C})\). A generator matrix and the corresponding Gram matrix for Λ can be given as

The volume of Λ is the volume of the corresponding lattice ι( Λ) in \(\mathbb {R}^{2n^2}\), i.e., \( \operatorname {\mathrm {vol}}\left (\Lambda \right ) = \sqrt {{\mathrm {det}}(G_{\Lambda })}\).

Example 3.2

We exemplify the notion of a lattice in \( \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) and corresponding vectorisation on the famous Alamouti code [1]. As we shall see later, the Alamouti code is constructed from a lattice in \( \operatorname {\mathrm {Mat}}(2,\mathbb {C})\) corresponding to Hamiltonian (or more precisely Lipschitz) quaternions. More concretely, it is a finite subset

A basis of the underlying lattice ΛA consists of the matrices

Using the defined isometry ι, we can identify ΛA with a lattice in \(\mathbb {R}^8\), which we again denote by ΛA, with generator and Gram matrix

The volume of this lattice is \( \operatorname {\mathrm {vol}}\left (\Lambda _A\right ) = \sqrt {{\mathrm {det}}(G_{\Lambda _A})} = 4\).

3.2.2 Algebraic Number Theory

In this section, we recall fundamental notions from algebraic number theory which are indispensable for space–time code constructions. We assume that the reader is familiar with basic Galois theory.

Let L∕K be an arbitrary field extension. If we view L as a vector space over K, we can define the degree of the field extension as the vector space dimension, that is, \(\left [L:K\right ] := \dim _{K}(L)\). If the degree is finite, we call the extension finite. An element α ∈ L is called algebraic over K if there exists a non-zero polynomial \(f(x) \in K\left [x\right ]\) such that f(α) = 0, and the field extension L∕K is called algebraic if all elements of L are algebraic over K. Consider the homomorphism ϕ : K[x] → L, f(x)↦f(α). Since α is algebraic, \(\ker (\phi ) \neq 0\), and can be generated by a single polynomial m K,α(x), chosen to be monic of smallest degree admitting α as a root. We call this unique polynomial the minimal polynomial of α over K. When \(K=\mathbb {Q}\) or when the field is clear from context, we may shortly denote m α(x).

Definition 3.3

An algebraic number field is a finite extension of \(\mathbb {Q}\).

Example 3.3

The simplest example of a field extension over \(\mathbb {Q}\) is the Gaussian field \(\mathbb {Q}(i)=\{a+bi\,|\, a,b\in \mathbb {Q}\}\), where \(i=\sqrt {-1}\) is the imaginary unit. The minimal polynomial of \(i \in \mathbb {C}\) over \(\mathbb {Q}\) is given by m i(x) = x 2 + 1.

We will henceforth consider L∕K to be an extension of algebraic number fields. In the above example, we constructed the field \(\mathbb {Q}(i)\) by adjoining an algebraic element \(i \in \mathbb {C}\) to \(\mathbb {Q}\). By the notation \(\mathbb {Q}(i)\) we hence mean the smallest field that contains both \(\mathbb {Q}\) and i. This is a more general phenomenon.

Theorem 3.1 (Primitive Element Theorem)

Let L∕K be an extension of number fields. Then, there exists an element α ∈ L such that L = K(α).

We see that we can construct the field L by adjoining the algebraic element α ∈ L to K and, since m K,α(x) is irreducible, we have the isomorphism

It now becomes apparent that the degree of the field extension equals the degree of the minimal polynomial of the adjoined element, \(\left [L:K\right ] = \deg (m_{K,\alpha }(x))\).

Example 3.4

Consider the number field \(K = \mathbb {Q}(\sqrt {2},\sqrt {3})\). We claim that \(K = \mathbb {Q}(\sqrt {2}+\sqrt {3})\) and is hence generated by a single element. The inclusion \(\mathbb {Q}(\sqrt {2}+\sqrt {3}) \subseteq K\) is trivial, as \(\sqrt {2}+\sqrt {3} \in \mathbb {Q}(\sqrt {2},\sqrt {3})\). For the reverse inclusion, it suffices to express \(\sqrt {2}\) and \(\sqrt {3}\) in terms of elements of \(\mathbb {Q}(\sqrt {2}+\sqrt {3})\). Note that as \((\sqrt {2}+\sqrt {3})^2 = 5+2\sqrt {6}\) it follows that \(\sqrt {6} \in \mathbb {Q}(\sqrt {2}+\sqrt {3})\), and we have

This shows that \(\mathbb {Q}(\sqrt {2},\sqrt {3}) = \mathbb {Q}(\sqrt {2}+\sqrt {3})\). The minimal polynomial of \(\alpha := \sqrt {2}+\sqrt {3}\) is m α(x) = x 4 − 10x 2 + 1, and we see that \(\mathbb {Q}(\alpha )\) is an extension of degree 4.

We now define a very important ring associated with a number field K.

Definition 3.4

Let K be a number field. The integral closure of \(\mathbb {Z}\) in K consists of all the elements α ∈ K for which \(m_{\alpha }(x)\in \mathbb {Z}[x]\). The integral closure is a ring, called the ring of integers\(\mathcal {O}_K\) of K. We call any element \(\alpha \in \mathcal {O}_K\) an algebraic integer.

Example 3.5

Consider the field extension \(\mathbb {Q}(i)/\mathbb {Q}\). The ring of integers of \(\mathbb {Q}(i)\) is precisely \(\mathbb {Z}[i]\). It is however not always true that \(\mathcal {O}_{K(\alpha )} = \mathbb {Z}[\alpha ]\). Consider for example \(\mathbb {Q}(\sqrt {5})/\mathbb {Q}\). We have that \(\mathbb {Z}[\sqrt {5}]\) is composed of algebraic integers, but \(\mathbb {Z}[\sqrt {5}] \neq \mathcal {O}_K\). For example, the element \(\frac {1+\sqrt {5}}{2}\) is a root of the polynomial x 2 − x − 1, but \(\frac {1+\sqrt {5}}{2} \notin \mathbb {Z}[\sqrt {5}]\). In fact, it turns out that \(\mathcal {O}_K = \mathbb {Z}\left [\frac {1+\sqrt {5}}{2}\right ]\).

As we have seen, α ∈ K is an algebraic integer if and only if \(m_{\alpha }(x) \in \mathbb {Z}[x]\). Further, the field of fractions of \(\mathcal {O}_K\) is precisely K. In the above examples, the ring of integers \(\mathcal {O}_K = \mathbb {Z}[\theta ]\) admits a \(\mathbb {Z}\)-basis \(\left \{1,\theta \right \}\). In fact, we have the following result.

Theorem 3.2

Let K be a number field of degree n. The ring of integers \(\mathcal {O}_K\) of K is a free \(\mathbb {Z}\) -module of rank n.

As a consequence, the ring of integers \(\mathcal {O}_K\) admits an integral basis over \(\mathbb {Z}\), that is, a basis as a \(\mathbb {Z}\)-module. Given an extension L∕K of number fields, it is however not true in general that the ring of integers \(\mathcal {O}_L\) is a free \(\mathcal {O}_K\)-module. This holds for instance if \(\mathcal {O}_K\) is a principal ideal domain (PID). We will be considering extensions of \(\mathbb {Q}\) and \(\mathbb {Q}(i)\), hence circumventing this problem.Footnote 2

Consider a number field K of degree n over \(\mathbb {Q}\). We fix compatible embeddings of K into \(\mathbb {C}\), and identify the field with its image under these embeddings. More precisely, there exist exactly n pairwise distinct embeddings (i.e., injective ring homomorphisms) \(\sigma _i: K \to \mathbb {C}\), forming the set \( \operatorname {\mathrm {Hom}}_{\mathbb {Q}}(K,\mathbb {C}) = \left \{\sigma _1,\ldots ,\sigma _n\right \}\).

We split the embeddings into those whose image is real or complex, respectively. More concretely, let \(\sigma _1,\ldots ,\sigma _r: K \to \mathbb {R}\), and \(\sigma _{r+1},\ldots ,\sigma _n : K \to \mathbb {C}\). Note that the embeddings with complex image come in conjugate pairs, of which there are exactly \(s := \frac {n-r}{2}\). We call the tuple (r, s) the signature of the number field K.

We can use the embeddings to define two important functions, namely the norm and trace of elements in K. For each α ∈ K, consider the induced \(\mathbb {Q}\)-linear homomorphism φ α : K → K, where for all β ∈ K, we have φ α(β) = αβ. By fixing a basis of K over \(\mathbb {Q},\ \varphi _{\alpha }\) can be represented by a matrix \(A_{\alpha }\in \operatorname {\mathrm {Mat}}(n,\mathbb {Q})\). This is referred to as the left regular representation.

Definition 3.5

Let K be a number field of degree n, and let α ∈ K. The norm and trace of α, respectively, are defined as

These definitions are independent of the choice of a basis for A α.

We note that the norm and trace are generally rational elements. When \(\alpha \in \mathcal {O}_K\), however, we have \( \operatorname {\mathrm {Nm}}_{K}\left (\alpha \right ), \operatorname {\mathrm {Tr}}_{K}\left (\alpha \right ) \in \mathbb {Z}\).

Definition 3.6

Let K be a number field of degree n, with ring of integers \(\mathcal {O}_K\), and let \(\left \{b_1,\ldots ,b_n\right \}\) be an integral basis of \(\mathcal {O}_K\). The discriminant of K is the well-defined integer

The determinants above can indeed be shown to be equal. The discriminant d K is independent of the choice of basis, and hence an invariant of the number field.

Example 3.6

Consider the number field \(K = \mathbb {Q}(\sqrt {-5})\), with ring of integers \(\mathcal {O}_K = \mathbb {Z}[\sqrt {-5}]\). As K is a degree-2 extension of \(\mathbb {Q}\), and generated by a complex element, we have that its signature is (r, s) = (0, 1). A representative of the pair of complex embeddings is given by \(\sigma _1: \sqrt {-5} \mapsto -\sqrt {-5}\), and the complex conjugate σ 2 is simply the identity.

Given an element \(\alpha = x_0+\sqrt {-5}x_1 \in K\), the norm and trace of α can be computed to be

Moreover, we can compute the discriminant of K by choosing a basis \(\left \{1,\sqrt {-5}\right \}\) of \(\mathcal {O}_K\) and computing the determinant

The motivation for studying number fields has its origins in the factorisation of integers into primes. In the ring \(\mathbb {Z}\), prime and irreducible elements coincide, and every natural number factors uniquely into prime numbers. By generalising the ring \(\mathbb {Z}\) to the ring of integers \(\mathcal {O}_K\) of a number field, unique factorisation into prime elements is no longer guaranteed. However, the underlying structure of the ring \(\mathcal {O}_K\) allows for a generalisation of unique factorisation by making use of ideals, instead of elements.

Let K be a number field of degree n, and \(\mathfrak {a} \subset \mathcal {O}_K\) a non-zero ideal. Then \(\mathfrak {a}\) factors into a product of prime ideals, unique up to permutation,

where e i > 0. We define the norm of the ideal \(\mathfrak {a}\) as the cardinality of the finite ring \( \operatorname {\mathrm {N}}(\mathfrak {a}) := \left |\mathcal {O}_K/\mathfrak {a}\right |\). The ideal norm extends multiplicatively, and moreover \( \operatorname {\mathrm {N}}(\mathfrak {a}) \in \mathfrak {a}\). Consequently, if \( \operatorname {\mathrm {N}}(\mathfrak {a})\) is prime, then \(\mathfrak {a}\) is a prime ideal. More importantly, if \( \operatorname {\mathrm {N}}(\mathfrak {a}) = p_1^{e_1}\cdots p_k^{e_k}\) is the prime factorisation, then (as we can show that \(\mathfrak {a}\) divides \( \operatorname {\mathrm {N}}(\mathfrak {a})\mathcal {O}_K\)) it is clear that every prime divisor of \(\mathfrak {a}\) is a prime divisor of \(p_i \mathcal {O}_K\) for some i.

Remark 3.1

If all prime divisors of \(p\mathcal {O}_K\) are known for all primes \(p \in \mathbb {Z}\), then all ideals of \(\mathcal {O}_K\) are known.

Let \(\mathfrak {p} \subset \mathcal {O}_K\) be a prime ideal. Then \(\mathfrak {p}\cap \mathbb {Z} = p\mathbb {Z}\) is a prime ideal of \(\mathbb {Z},\ p\) prime. We can hence write

for \(\mathfrak {p}_i\) distinct prime ideals of \(\mathcal {O}_K\). The number \(e = e(\mathfrak {p}/p\mathbb {Z})\) is referred to as the ramification index of \(p\mathbb {Z}\) at \(\mathfrak {p}\). We further define the residue class degree of \(\mathfrak {p}/p\mathbb {Z}\) as the integer f ≥ 1 which satisfies \( \operatorname {\mathrm {N}}(\mathfrak {p}) = p^f\).

Example 3.7

Consider \(K = \mathbb {Q}(i)\), and let p > 2 be a rational prime. We want to study the factorisation of p in \(\mathcal {O}_K = \mathbb {Z}[i]\). We have the following isomorphisms:

By norm considerations, as \( \operatorname {\mathrm {N}}(p\mathbb {Z}[i]) = |\mathbb {Z}[i]/\langle p \rangle |=|\mathbb {F}_p[x]/\langle x^2+1\rangle | = p^2\), we have that p can either remain prime in \(\mathbb {Z}[i]\), or be the product of two prime ideals. On the other hand, we know that \(p\mathbb {Z}[i]\) is prime if and only if \(\mathbb {Z}[i]/\langle p \rangle \) is a field. In fact,

so that the residue class degree is f = 2. This quotient is a field precisely when x 2 + 1 is irreducible. This is the case for p≢1 mod 4.

For the case p ≡ 1 mod 4, we can factor x 2 + 1 = (x − a)(x − b), and we get a factorisation \(p\mathbb {Z}[i] = (i-a)(i-b)\).

3.2.3 Central Simple Algebras

Let K be a field, and \(\mathcal {A}\supseteq K\) a finite-dimensional associative K-algebra, i.e., a finite-dimensional K-vector space and a ring together with a K-bilinear product. The algebra is simple, if it contains no non-trivial two-sided ideals, and moreover central if its centre is precisely K. The algebra is a division algebra if all of its non-zero elements are invertible. We have the following important theorem, which is a simplified version of a more general result.

Theorem 3.3 (Wedderburn)

Every central simple K-algebra is isomorphic to \( \operatorname {\mathrm {Mat}}(n,D)\) for some uniquely determined n and some division K-algebra D, unique up to isomorphism.

If \(\mathcal {A}\) is a central simple K-algebra and D is the division algebra from the above theorem, we denote by \( \operatorname {\mathrm {ind}}(\mathcal {A}) = \sqrt {\left [D:K\right ]}\) the index, and by \(\deg (\mathcal {A}) = \sqrt {\left [\mathcal {A}:K\right ]}\) the degree of the algebra. \(\mathcal {A}\) is a division algebra if and only if \( \operatorname {\mathrm {ind}}(\mathcal {A}) = \deg (\mathcal {A})\).

If \(\mathcal {A}\) is a finite-dimensional central simple algebra over a field K, then \(\mathcal {A}\) is said to be cyclic if it contains a strictly maximal subfield L such that L∕K is a cyclic field extension, i.e., the Galois group is a cyclic group. If K is a number field, every K-central simple algebra is cyclic, and vice versa. This family of central simple algebras has been widely used for space–time coding since the work [34]. We start with the special case of cyclic algebras of degree 2, also known as quaternion algebras.

Definition 3.7

Let K be a field, and a, γ ∈ K × not necessarily distinct. A quaternion algebra (a, γ)K is a K-central algebra defined as

where the basis elements satisfy the rules

Example 3.8

The most famous example is the set of Hamiltonian quaternions, which can be defined as \(\mathbb {H} = (-1,-1)_{\mathbb {R}}\). An element \(x \in \mathbb {H}\) is of the form x = x 0 + ix 1 + jx 2 + kx 3 with \((x_0,x_1,x_2,x_3) \in \mathbb {R}^4,\ i^2 = j^2 = -1\) and ij = −ji = k.

For quaternion algebras, we have the following deep and important classification result.

Theorem 3.4

Let (a, γ)Kbe a quaternion algebra. We have two possibilities.

-

(a)

(a, γ)Kis a division algebra.

-

(b)

\((a,\gamma )_K \cong \operatorname {\mathrm {Mat}}(2,K)\).

We can determine which of the cases apply by means of a simple quaternary quadratic form. To be more precise, consider an element x = x 0 + ix 1 + jx 2 + kx 3 ∈ (a, γ)K, and define the norm of x as

where x ∗ = x 0 − ix 1 − jx 2 − kx 3 is the conjugate of x. Then, the quaternion algebra (a, γ)K is division if and only if \( \operatorname {\mathrm {Nm}}(x) = 0\) implies x = 0.

Example 3.9

Recall the set of Hamiltonian quaternions \(\mathbb {H}\). The norm of an element \(x = x_0+ix_1+jx_2+kx_3 \in \mathbb {H}\) is \( \operatorname {\mathrm {Nm}}(x) = x_0^2+x_1^2+x_2^2+x_3^2 \ge 0\). As \(x_i \in \mathbb {R}\), we have equality if and only if x = 0. Hence, \(\mathbb {H}\) is a division algebra.

A quaternion algebra is a degree-4 vector space over the centre K. They are a special case of the more general cyclic algebras, a family of central simple algebras which we study in the sequel.

Definition 3.8

Let L∕K be a degree-n cyclic Galois extension of number fields, and denote by \(\langle \sigma \rangle = \operatorname {\mathrm {Gal}}\left (L/K\right )\) its Galois group. A cyclic algebra is a tuple

where e n = γ ∈ K × and multiplication satisfies le = eσ(l) for all l ∈ L.

The algebra \(\mathcal {C}\) is K-central simple, and is called a cyclic division algebra if it is division.

The usefulness of cyclic division algebras for purposes of space–time coding starts with the existence of a matrix representation of elements of the algebra. To be more precise, each element \(x = \sum _{i=0}^{n-1}{e^i x_i} \in \mathcal {C}\) induces for all \(y \in \mathcal {C}\) a right L-linear map ρ : y↦xy, which is referred to as the left-regular representation of the algebra, and describes left multiplication with x. We can define a matrix associated with ρ, given by

Example 3.10

Let us consider again the Hamiltonian quaternions. Using the above notation, we write e = j and

with cj = jc ∗ for all \(c\in \mathbb {C}\) and j 2 = γ = −1. Note that we have intentionally chosen to represent \(\mathbb {H}\) as a right vector space in order to be compatible with the left regular representation.

Let now x = x 0 + jx 1 with \(x_0,x_1\in \mathbb {C}\). If we multiply the basis elements \(\{1,j\}_{\mathbb {C}}\) from the left by x, we get

In a matrix form, we have

Note that this matrix corresponds to the Alamouti code.

Example 3.11

Let L∕K be a number field extension of degree n = 3. Then, we can pick a basis \(\left \{1,e,e^2\right \}\) of a cyclic algebra \(\mathcal {C}\) over its maximal subfield L, where e 3 = γ ∈ K ×. Let x = x 0 + ex 1 + e 2 x 2, and consider left multiplication. Similarly as above,

We see that in this basis, left multiplication by x can be represented by the matrix

We close this section by recalling how to ensure that a cyclic algebra (L∕K, σ, γ) is division by means of the element γ ∈ K ×. The result is a simple corollary to a result due to A. Albert.

Theorem 3.5

Let \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic algebra. If γ n∕p is not a norm of some element of L for all prime divisors p of n, then \(\mathcal {C}\) is division.

3.2.3.1 Orders

Given a number field K, the collection of integral elements form the ring of integers \(\mathcal {O}_K\) of K. This ring is the unique maximal order of K, a concept which we will now recall in a more general context.

Definition 3.9

Let \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic division algebra. An \(\mathcal {O}_K\)-order Γ in \(\mathcal {C}\) is a subring of \(\mathcal {C}\) sharing the same identity as \(\mathcal {C}\) and such that Γ is a finitely generated \(\mathcal {O}_K\)-module which generates \(\mathcal {C}\) as a linear space over K.

An order is maximal if it is not properly contained in another order of \(\mathcal {C}\).

Every order of a cyclic division algebra is contained in a maximal order. Within a number field K, the ring of integers \(\mathcal {O}_K\) is integrally closed and the unique maximal order of K. In general, a maximal order Γ of \(\mathcal {C}\) is not integrally closed, and a division algebra \(\mathcal {C}\) may contain multiple maximal orders. In contrast, the following special order is often of interest due to its simple structure. It is in fact the initial source for space–time codes with non-vanishing determinants.

Definition 3.10

Let \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic division algebra. The natural order of \(\mathcal {C}\) is the \(\mathcal {O}_K\)-module

Note that Γnat is not closed under multiplication unless \(\gamma \in \mathcal {O}_K\).

Remark 3.2

Given a cyclic division algebra \(\mathcal {C} = (L/K,\sigma ,\gamma )\) and an element c ∈ Γ, where \(\Gamma \subset \mathcal {C}\) is an order, we can define concepts like the reduced norm\( \operatorname {\mathrm {nm}}(c) = {\mathrm {det}}(\rho (c))\) and reduced trace\( \operatorname {\mathrm {tr}}(c) = \operatorname {\mathrm {Tr}}(\rho (c))\). These are elements of the ring of integers of the centre, i.e., \( \operatorname {\mathrm {nm}}(c), \operatorname {\mathrm {tr}}(c) \in \mathcal {O}_K\). Consequently, for \(K = \mathbb {Q}\) or K imaginary quadratic, we have \(| \operatorname {\mathrm {nm}}(c)| \ge 1\) for any non-zero c ∈ Γ, an observation which is crucial for achieving the non-vanishing determinant property (cf. Sect. 3.3.2.1).

3.3 Physical Layer Communications

In this section, we study the characteristics and properties of a wireless channel, discussing various methods for combating the effects of fading and noise.

3.3.1 Rayleigh Fading MIMO Channel

In a wireless environment, in contrast to wired channels, the signal traverses several different paths between a transmitter and receiver. Consequently, different versions of the signal distorted by (independent) environmental effects will come together at the receiver, causing a superimposed channel output. Together with dissipation effects caused by urban scatterers as well as interference, the signal experiences fading, and various statistical models exist to describe these phenomena. Here, we consider the widely used Rayleigh fading channel model. In addition, thermal noise at the receiver further distorts the channel output.

To be more precise, assume a single source, equipped with n t ≥ 1 transmit antennas, and a single destination, with n r ≥ 1 receive antennas. If n t, n r ≥ 2 we refer to the setup as the multiple-input multiple-output (MIMO) model, while the case (n t, n r) = (1, 1) is termed the single-input single-output (SISO) channel model. The mixed cases (n t = 1, n r > 1) and (n t > 1, n r = 1) are the SIMO and MISO channel setups, respectively.

Consider a channel between n t transmit antennas and n r receive antennas. The wireless channel is modelled by a random matrix

We assume that the channel remains static for T ≥ n t time slots and then changes independently of its previous state, and refer to T as the channel delay or channel coherence time. Each of the entries h ij of H denotes the path gain from transmit antenna j to receive antenna i. They are modelled as complex variables with i.i.d. normal distributed real and imaginary parts,

yielding a Rayleigh distributed envelope

with scale parameter σ h, which gives this fading model its name.

The additive noise is modelled by a matrix \(N \in \operatorname {\mathrm {Mat}}(n_r\times T,\mathbb {C})\) with i.i.d. complex Gaussian entries with finite variance \(\sigma _n^2\). To combat the destructive effects of fading, the transmitter encodes its data into a codeword matrix \(X \in \operatorname {\mathrm {Mat}}(n_t\times T, \mathbb {C})\). Each column x i of X corresponds to the signal vector transmitted in the ith time slot, across the available transmit antennas. If we denote each column of the noise matrix N by n i, the received signal at each time slot 1 ≤ i ≤ T is given by the channel equation

We assume that the destination waits for the T subsequent transmissions before starting any decoding process. As usual, we assume perfect channel state information at the receiver, while the transmitter only has statistical channel information. The channel is supposed to remain fixed during the entire transmission process, and hence we can summarise the overall channel equation in a compact form to read

Thus, by allowing the use of multiple antennas at the transmitter and/or receiver, we have created spatial diversity. By ensuring a separation of the antennas by at least half the used wavelength, the multiple signals will fade independently of each other. On the other hand, the transmission over multiple time slots enables temporal diversity, providing copies of the signal at the receiver.

The physical conditions in an actual wireless channel are rapidly changing. Consequently, the comparison in performance of two different codes needs to be considered with respect to a standardised quantity. We define the signal-to-noise ratio (\( \operatorname {\mathrm {SNR}}\)) at the receiver as the ratio of the received signal power to noise power, that is,

3.3.1.1 Performance Parameters of a Wireless Channel

Consider a MIMO channel with n t transmit antennas and n r receive antennas. The first quantity that we need to mention is the capacity of the channel.

Definition 3.11

Assume that the receiver knows the realisation of the channel matrix H. For a fixed power constraint on the channel input, the capacity of a MIMO channel is the upper bound on the mutual information between the channel input and output, given the channel realisation.

As the capacity depends on the channel matrix, it needs to be viewed as a random variable. The ergodic capacity of a MIMO channel is given by

Recently, the authors in [24] gave criteria for algebraic space–time codes from division algebras to achieve the channel capacity up to a constant gap.

Equivalently we can interpret the capacity of the channel as the upper bound on the amount of information that can be transmitted, so that the probability of error can be maintained arbitrarily low. At high \( \operatorname {\mathrm {SNR}}\), the capacity of the channel scales with the number of antennas. More specifically, an \( \operatorname {\mathrm {SNR}}\) increase of 3 dB results in an increase in capacity by \(\min \left \{n_t,n_r\right \}\).

We now define two quantities which allow us to compare different coding strategies for the MIMO channel.

Definition 3.12

Consider a MIMO channel.

-

(i)

The diversity gain of a coding strategy is the asymptotic slope of the corresponding error probability curve with respect to the \( \operatorname {\mathrm {SNR}}\) in a \(\log -\log \) scale.

-

(ii)

The coding gain measures the difference in \( \operatorname {\mathrm {SNR}}\) required for two different full-diversity coding strategies to achieve the same error probability.

3.3.2 Space–Time Codes

This section introduces the main object of the survey: space–time codes. These codes are tailor-made for MIMO communications. We start with basic definitions and relate the enabled spatial and temporal diversity to the matrix structure of space–time codewords.

In the first subsection, the basic code design criteria for minimising the probability of incorrect decoding are derived. While the design criteria are independent of the actual code construction method and hold for any matrix codebook, various results are then introduced exposing how the criteria can be met by purely algebraic means. Hence, it becomes clear which properties the underlying structures should exhibit in order to construct well-performing codes.

After this, we utilise the algebraic tools introduced in Sect. 3.2 in order to construct space–time codes meeting the derived criteria.

3.3.2.1 Design Criteria for Space–Time Codes

Recall the Rayleigh fading n t × n r MIMO channel model with channel coherence time T. We have seen that the codewords X need to be taken from some collection of matrices \(\mathcal {X} \subset \operatorname {\mathrm {Mat}}(n_t\times T,\mathbb {C})\). Very naively, and this is our first definition, we simply define a code to be a finite collection of such matrices.

Definition 3.13

Let \(\mathfrak {C} \subset \mathbb {R}^\times \) be a finite subset and \(k\in \mathbb {Z}_+\). A space–time code is the image of an injective map \(\phi : \mathfrak {C}^k \to \operatorname {\mathrm {Mat}}(n_t\times T,\mathbb {C})\).

Having no structure, however, may lead to accumulation of the received signals. To circumvent this problem, forcing a discrete and linear structure on the code is helpful, e.g., a lattice structure. We give the more specialised definition of linear space–time codes, which we will consider henceforth.

Definition 3.14

Let \(\left \{ B_i \right \}_{i=1}^{k}\) be an \(\mathbb {R}\)-linearly independent set of matrices in \( \operatorname {\mathrm {Mat}}(n_t\times T,\mathbb {C})\). A linear space–time block code of rank k is a set of the form

where \(S \subset \mathbb {Z}\) is a finite signalling alphabet. In relation to the previous definition, we have \(\mathcal {X}=\phi (\mathfrak {C}^k) \), where \(\mathfrak {C}=S\).

As the matrices \(\left \{ B_i \right \}_{i=1}^{k}\) form a basis of a lattice\(\Lambda \subset \operatorname {\mathrm {Mat}}(n_t\times T, \mathbb {C})\), \(\mathcal {X}\) is called a space–time lattice code of rank \(k = \operatorname {\mathrm {rk}}\left (\Lambda \right )\leq 2n_tT\), the upper bound being imposed by the \(\mathbb {R}\)-dimension of \( \operatorname {\mathrm {Mat}}(n_t\times T,\mathbb {C})\).

We henceforth refer to such a code \(\mathcal {X}\) simply as a space–time code. As the transmit power consumption is directly related to the Frobenius norm of the transmitted codeword, the finite codebook is usually carved out to consist of a desired number of lattice elements with smallest possible Frobenius norms.Footnote 3

The code rate of \(\mathcal {X}\) is defined as R = k∕T real symbols per channel use.Footnote 4 In the literature, a code is often said to be full rate if all available degrees of freedom from the transmitter’s point of view are utilised, i.e., k = 2n t T and R = 2n t T∕n t = 2T. This is a consequence of mainly having considered symmetric square systems, that is, the case n t = n r = T. Here, we do not restrict to symmetric systems and define full rate as the maximum rate that still maintains the discrete structure at the receiver and allows for linear detection methods such as sphere-decoding [41]. More precisely, for n r receive antennas we define full rate as 2n r. Hence, in order to achieve full rate as defined in this chapter (avoiding accumulation points at the receiver’s space), for n r receive antennas we should choose a lattice of rank 2n r T (instead of 2n t T).

Consider a space–time code \(\mathcal {X}\), and let \(X \in \mathcal {X}\) be the transmitted codeword. A receiver observes its channel output Y and, as it is assumed to know the channel H and the noise is zero-mean, decodes a maximum-likelihood estimate of the transmitted codeword by computing

The probability \(\mathscr {P}(X \to X')\) that a codeword X′≠ X is decoded when X was transmitted is asymptotically upper bounded with increasing \( \operatorname {\mathrm {SNR}}\) as

From this upper bound, two design criteria can be derived [38]. The diversity gain of a code as defined above relates to the minimum rank of (X − X′) over all pairs of distinct code matrices \((X,X') \in \mathcal {X}^2\). Thus, the minimum rank of \(\mathcal {X}\) should satisfy

A code satisfying this criterion is called a full-diversity code.

On the other hand, the coding gain can be shown to be proportional to the determinant \({\mathrm {det}}\left ((X-X')(X-X')^\dagger \right )\). As a consequence, the minimum taken over all pairs of distinct codewords,

should be as large as possible. For the infinite code

we define the minimum determinant as the infimum

If \(\Delta _{\min }(\mathcal {X}_\infty ) > 0\), i.e., the determinants do not vanish as the code size increases, the code is said to have the non-vanishing determinant property.

We assume henceforth that the number of transmit antennas and channel delay coincide, n t = T =: n. Given a lattice \(\Lambda \subset \operatorname {\mathrm {Mat}}(n,\mathbb {C})\), we have by linearity

This implies that any lattice Λ with non-vanishing determinants can be scaled so that \(\Delta _{\min }(\Lambda )\) achieves any wanted nonzero value. Consequently, the comparison of two different lattices requires some sort of normalisation. Let Λ be a full lattice with volume \( \operatorname {\mathrm {vol}}\left (\Lambda \right )\). The normalised minimum determinant and normalised density of Λ are the normalised quantities

and satisfy the relation \(\delta (\Lambda )^2 = \eta (\Lambda )^{\frac {1}{n}}\). Thus, for a fixed minimum determinant, the coding gain can be increased by maximising the density of the code lattice. Or, the other way around, for a fixed volume, the coding gain can be increased by maximising the minimum determinant of the lattice.

3.3.2.2 Constructions from Cyclic Division Algebras

We move on to illustrate how space–time codes satisfying the two introduced criteria can be designed. We begin by ensuring full diversity, to which end the following result is helpful.

Theorem 3.6 ([34, Prop. 1])

Let K be a field and\(\mathcal {D}\)an index-n division K-algebra with a maximal subfield L. Any finite subset\(\mathcal {X}\)of the image of a ring homomorphism\(\phi : \mathcal {D} \mapsto \operatorname {\mathrm {Mat}}(n, L)\)satisfies\( \operatorname {\mathrm {rk}}\left (X-X'\right ) = n\)for any distinct\(X, X' \in \mathcal {X}\).

This leads to a straightforward approach for constructing full-diversity codes, namely by choosing the underlying structure to be a division algebra. In the same article, cyclic division algebras were proposed for code construction as a particular example of division algebras. The ring homomorphism ϕ is the link between the division algebra and a full-diversity space–time code, as we illustrate in the following.

Let \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic division algebra of degree n. The left-regular representation \(\rho : \mathcal {C} \to \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) is an injective ring homomorphism (cf. Definition 3.8 and the discussion beneath). We identify elements in \(\mathcal {C}\) with elements in \( \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) via ρ. This leads to the following definition.

Definition 3.15

Let \(\mathcal {C}\) be an index-n cyclic division algebra with left-regular representation \(\rho : \mathcal {C} \to \operatorname {\mathrm {Mat}}(n,\mathbb {C})\). A space–time code constructed from \(\mathcal {C}\) is a finite subset

To be consistent with Definition 3.14, let \(\left \{ B_i \right \}_{i=1}^{k}\subset \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) with k ≤ 2n 2 be a set of \(\mathbb {Q}\)-linearly independent matrices in \(\rho (\mathcal {C})\). For a fixed signalling alphabet \(S \subset \mathbb {Z}\), symmetric around the origin, the space–time code \(\mathcal {X}\) is of the form

If \(\mathcal {C}\) admits a basis over \(\mathbb {Z}[i]\), we may also consider the lattice with respect to its \(\mathbb {Z}[i]\)-basis, and the signalling alphabet will then be a subset in \(\mathbb {Z}[i]\).

Note that, given an element X = ρ(x), where \(x \in \mathcal {C}\), we have that det(X) = det(ρ(x)) ∈ K. We can however restrict to certain subrings of the cyclic division algebra, for instance an order Γ. For any x ∈ Γ, we have \({\mathrm {det}}(\rho (x)) \in \mathcal {O}_K\). This yields |det(ρ(x))|≥ 1 for \(K = \mathbb {Q}\) or K an imaginary quadratic number field. Then, we can consider finite subsets of ρ( Γ) as space–time lattice codes guaranteeing non-vanishing determinants (cf. Remark 3.2).

Example 3.12

Consider a MIMO system with n = n t = T = 2, and consider the index-2 number field extension \(L/K = \mathbb {Q}(i,\sqrt {5})/\mathbb {Q}(i)\). The ring of integers of L is \(\mathcal {O}_L = \mathbb {Z}[i,\theta ]\) with \(\theta = \frac {1+\sqrt {5}}{2}\), and we pick the relative integral basis \(\left \{1,\theta \right \}\) of \(\mathcal {O}_L\) over \(\mathcal {O}_K=\mathbb {Z}[i]\). The Golden code [5] is constructed from the cyclic division algebra

with \(\sigma : \sqrt {5} \mapsto -\sqrt {5}\) and \(\gamma \in \mathbb {Q}(i)\) non-zero and such that \(\gamma \neq \operatorname {\mathrm {Nm}}_{L/K}\left (l\right )\) for any l ∈ L. We pick γ = i, leading to a (left regular) matrix representation of \(\mathcal {G}\) of the form

where x i ∈ K.

The algebra \(\mathcal {G}\) is a division algebra by Theorem 3.5, so that the Golden code is indeed a full-diversity space–time code. Moreover, by restricting the codewords to the natural order Γnat by choosing \(x_i \in \mathbb {Z}[i]\) guarantees the non-vanishing determinant property (cf. Remark 3.2).

The actual Golden code lattice is a twisted version of ρ( Γnat) in order to get an orthogonal lattice. The twisting does not affect the normalised minimum determinant.

3.4 Codes with Reduced ML Decoding Complexity

Using multiple antennas for increased diversity—and additionally enabling temporal diversity—comes at the cost of a higher complexity in decoding. The worst-case complexity of maximum-likelihood (ML) decoding is upper bounded by that of exhaustive search, and is often computationally too expensive for practical use for higher-dimensional code lattices. A fast-decodable space–time code is, in colloquial terms, simply a space–time code whose worst-case ML decoding complexity is lower than that of exhaustive search.

Yet, independently of the actual decoder used, the ML decoding complexity of a space–time code can sometimes be reduced by algebraic means, allowing for parallelisation in the ML search. If the underlying code lattice is of rank k, this requires in principle joint decoding of k information symbols. One way to achieve fast-decodability (this is also how we define fast decodability more formally below) is then to reduce the dimensionality of the (e.g., sphere) decoder, that is, to enable parallelisation where each parallel set contains less than k symbols to be jointly decoded.

In this section we introduce the technique of ML decoding and revise criteria for a space–time code to be fast-decodable. We further specify different families of fast-decodable codes and study their potential decoding complexity reduction.

3.4.1 Maximum-Likelihood Decoding

In the previous sections, we have seen what properties a space–time code should exhibit to potentially ensure a reasonable performance, at least in terms of reliability. There are however more aspects of the communication process which need to be taken into consideration. Orthogonal lattices allow for efficient encoding of the information symbols and bit-labelling of the codewords, while not necessarily yielding the best possible error performance. On the other hand, a too complicated lattice structure makes it more complex to encode a signal in the first place, and may require brute force bit labelling of the codewords.

On the receiver’s side, the structure of the code lattice determines the complexity of the decoding process. Indeed, as already mentioned, the major bottleneck in effective implementation of algebraic space–time codes has been their decoding complexity. The concept of fast-decodability was introduced in [9] in order to address the possibility for reducing the dimensionality of the ML decoding problem (cf. (3.2)) without having to resort to suboptimal decoding methods.

Given a finite signalling alphabet \(S \subset \mathbb {Z}\), the ML decoding complexity of a rank-k space–time code \(\mathcal {X}\) is defined as the minimum number of values that have to be computed for finding the solution to (3.2). The upper bound is the worst-case decoding complexity that we denote by \(\mathfrak {D}(S)\), which for its part is upper bounded by the exhaustive search complexity, \(\mathfrak {D}(S)\le |S|{ }^k\). The following definition is hence straightforward.

Definition 3.16

A space–time code \(\mathcal {X}\) is said to be fast-decodable if its ML decoding complexity is upper bounded by

where k′ < k is the number of symbols to be jointly decoded and c ≤ k is a constant describing the number of parallel symbol groups to be decoded. If c = k, this means that we can decode symbol-wise (k′ = 1) with linear complexity. We refer to k′ as the complexity order.

We will mostly drop the constant c in the rest of the chapter and concentrate only on the order k′, and also by abuse of notation write \(\mathfrak {D}(S) = |S|{ }^{k'}\) without the constant.

Now let us proceed to investigate how to determine the complexity order of a space–time code \(\mathcal {X}\). Let \(\left \{B_i\right \}_{i=1}^k\) be a basis of \(\mathcal {X}\) over \(\mathbb {Z}\), and \(X \in \mathcal {X}\) the transmitted signal. Recall the isometry (3.1), which allows us to identify the space–time code lattice with a lattice in Euclidean space. In addition, for \(c \in \mathbb {C}\) let

From the channel output Y = HX + N, define the matrices

The equivalent received codeword under the isometry can be expressed as ι(HX) = B H s for a coefficient vector s t = (s 1, …, s k) ∈ S k, and we get an equivalent vectorized channel equation

where \(\tilde {H} = (\tilde {h}_{ij})_{i,j}\) and ⊗ denotes the Kronecker product.

We go on to perform QR-decomposition on B H, or equivalently on \((I_T\otimes \tilde {H})B\). We write B H = QR with \(Q \in \operatorname {\mathrm {Mat}}(2n_r T \times k,\mathbb {R})\) unitary and \(R \in \operatorname {\mathrm {Mat}}(k,\mathbb {R})\) upper triangular. More precisely, if we write

the matrix R is precisely given by

where

Since the receiver has channel state information, and as the noise is zero-mean, the decoding process, as we have already seen, requires to solve the minimisation problem

Using the QR decomposition, we can solve the equivalent problem

a problem which can be solved using a real sphere-decoder [41]. It is now clear that the structure of the matrix R determines the complexity of decoding. With zero entries at specific places, the involved variables can be decoded independently of each other, allowing for parallelisation in the decoding process, and potentially reducing the decoding complexity.

Moreover, different orderings of the weight matrices B i, or equivalently of the symbols s i, result in different zero patterns in the matrix R. An algorithm for the optimal ordering of the weight matrices resulting in the minimum possible decoding complexity is given in [20], and was implemented in [18]. We use the implementation found in the latter article for the explicit computation of the decoding complexity reduction of the example codes exposed in the remaining of this section.

Before we move on to define more specialized families of fast-decodable codes, we present the usual approach to give sufficient conditions for a code to be fast-decodable. This so-called Hurwitz-Radon quadratic form approach is discussed in [19, 20, 36], among others. The idea behind the Hurwitz-Radon approach on which the quadratic form is based is to give a criterion for when two variables of the considered code can be decoded independently. More specifically, the variables s i, s j can be decoded independently if their corresponding weight matrices B i, B j are mutually orthogonal, i.e.,

To be more precise, we give the following result

Proposition 3.1 ([36, Thm. 2][8, Thm. 1])

Let \(\mathcal {X}\) be a space–time code of rank k with weight matrices \(\left \{B_i\right \}_{i=1}^{k}\) . The matrices B i and B j are mutually orthogonal, if and only if the columns b i and b j of B H are orthogonal.

In particular, the entry (i, j) of the associated matrix R is zero. Relating to this condition, the Hurwitz-Radon quadratic form is a tool which allows to deduce the actual ML decoding complexity of a space–time code based on the mutually orthogonality of the weight matrices. In particular, the criterion based on the quadratic form shows that fast decodability can be achieved solely by designing the weight matrices cleverly, and is independent of the channel and number of antennas. We give the following definition.

Definition 3.17

Let \(\mathcal {X}\) be a space–time code of rank k, and let \(X \in \mathcal {X}\). The Hurwitz-Radon quadratic form is the map

where \(m_{ij} := \|B_i B_j^\dagger + B_j B_i^\dagger \|{ }_F^2\).

Note that B i, B j are mutually orthogonal if and only if m ij = 0.

3.4.1.1 Multi-Group Decodable Codes

We begin with the family of multi-group decodable codes.

Definition 3.18

Consider a space–time code \(\mathcal {X}\) defined by the weight matrices \(\left \{B_i\right \}_{i=1}^k\).

-

(i)

The code is g-group decodable if there exists a partition of \(\left \{1,\ldots ,k\right \}\) into g non-empty subsets Γ1, …, Γk such that for i ∈ Γu, j ∈ Γv with u ≠ v, the matrices B i and B j are mutually orthogonal.

-

(ii)

The code is conditionallyg-group decodable if there exists a partition of \(\left \{1,\ldots ,k\right \}\) into g + 1 non-empty subsets Γ1, …, Γg, Γ such that for i ∈ Γu, j ∈ Γv with 1 ≤ u < v ≤ g, the matrices B i and B j are mutually orthogonal.

The family of codes which we refer to as conditionally g-group decodable codes are in the literature also known as fast ML decodable codes. We use the terminology of conditionally g-group decodable so as to not confuse the general notion of fast decodability with this specific family of fast-decodable codes.

In the following, we consider a space–time code \(\mathcal {X}\) with weight matrices \(\left \{B_i\right \}_{i=1}^k\) and corresponding real information symbols s 1, …, s k ∈ S. For \(\mathcal {X}\ g\)-group decodable or conditionally g-group decodable, we may without loss of generality order the variables according to the g groups Γ1, …, Γg, i.e.,

We have the following result, which will be helpful in determining the decoding complexity of a code (cf. Theorem 3.7).

Proposition 3.2 ([19, Lemma 1])

Let\(\mathcal {X}\)be a g-group decodable space–time code, and let M = (m ij)i,jbe the Hurwitz-Radon quadratic form matrix (cf. Definition3.17) and R = (r ij)i,jthe R-matrix from the QR decomposition of B H . Then, m ij = r ij = 0 for i < j whenever s i ∈ Γ uand s j ∈ Γ vwith u ≠ v. In particular, the R-matrix takes the form

where\(D_i \in \operatorname {\mathrm {Mat}}(|\Gamma _i|,\mathbb {R})\)is upper triangular, 1 ≤ i ≤ g, and the empty spaces are filled with zeros.

Example 3.13

The first example we give is the complexity order of the Alamouti code \(\mathcal {X}_A\) (cf. Sect. 3.2). We recall that this code consists of codewords

where \((x_1,x_2,x_3,x_4) \in \mathbb {Z}^4\) are usually taken to be integers to guarantee non-vanishing determinants.

The R-matrix associated with this code is in fact a diagonal 4 × 4 matrix with equal diagonal entries. Hence, \(\mathcal {X}_A\) is 4-group decodable, and exhibits a complexity order k′ = 1. In other words, it is single-symbol decodable.

Example 3.14

We recall the code constructed for multiple-access channels in [3, Ex. 6]. Consider the cyclic division algebra

where \(F = \mathbb {Q}(\sqrt {5})\) and \(\sigma :\sqrt {-3} \mapsto -\sqrt {-3}\) but fixes F(i). Let τ be a generator of the cyclic Galois group \( \operatorname {\mathrm {Gal}}(F(i)/F)\), i.e., τ(i) = −i. Let us extend the action of τ from F(i) to \(F(i,\sqrt {-3},\sqrt {-\gamma })\) by letting it act as identity on both \(\sqrt {-3}\) and \(\sqrt {-\gamma }\), as justified by the isomorphism extension theorem. Consider codewords of the form

where τ acts element-wise, and for \(\theta = \frac {1+\sqrt {-3}}{2}\) and k = 1, 2 we have

with \(x_{k,j}\in \mathcal {O}_{F(i)}\). Hence, each X k corresponds to the left-regular representation of an element in the natural order \(\Gamma _{nat}\subseteq \mathcal {C}\), after balancing the effect of γ by spreading it on the diagonal.Footnote 5

The complexity of exhaustive search for a signalling alphabet S is |S|32. The above code, however, is 2-group decodable. In fact, the associated R-matrix is of the form

with \(D_i \in \operatorname {\mathrm {Mat}}(16,\mathbb {R})\) upper triangular. The code hence exhibits a complexity order k′ = 16, resulting in a reduction of 50%.

In the case of conditionally g-group decodable codes, i.e., where we have a further non-empty group Γ, the R matrix is not entirely block-diagonal. Instead, we have the following result.

Proposition 3.3 ([7, Lem. 2])

Let\(\mathcal {X}\)be a conditionally g-group decodable code, and let M = (m ij)i,jbe the Hurwitz-Radon quadratic form matrix and R = (r ij)i,jthe R-matrix from the QR decomposition. Then, m ij = r ij = 0 for i < j whenever s i ∈ Γ uand s j ∈ Γ vwith 1 ≤ u < v ≤ g. In particular, the R-matrix takes the form

with\(D_i \in \operatorname {\mathrm {Mat}}(|\Gamma _i|,\mathbb {R})\)and\(N \in \operatorname {\mathrm {Mat}}(|\Gamma |,\mathbb {R})\)are upper triangular, and\(N_i \in \operatorname {\mathrm {Mat}}(|\Gamma _i|\times |\Gamma |,\mathbb {R})\).

Example 3.15

As an example of a conditionally g-group space–time code we recall the famous Silver code [16, 32]. The code is contained as a subset in the cyclic division algebra

Note that σ is not just complex conjugation, as σ(i) = −i and \(\sigma (\sqrt {7}) = -\sqrt {7}\). With γ = −1, the algebra is division, and the resulting code is fully diverse and has non-vanishing determinants. The Silver code is however not directly constructed as a subset of ρ( Γ) for Γ an order of \(\mathcal {C}\). Instead, it is defined as

where \(x_1,\ldots ,x_4 \in \mathbb {Z}[i]\) and

In particular, a codeword is of the form

Using the optimal ordering of the weight matrices, we find that the complexity order of the Silver code is k′ = 5, resulting in a complexity reduction of 37.5%.

Example 3.16

As a second example, we recall the Srinath-Rajan code, originally proposed in [36] for a 4 × 2-MIMO channel. To the best of the authors’ knowledge, this is the best performing code known for a 4 × 2 system among codes with the same complexity order. We recall the construction illustrated in [37], where the underlying algebraic structure was discovered.

Let L∕F be a cyclic Galois extension with cyclic Galois group \( \operatorname {\mathrm {Gal}}(L/F) = \langle \tau \rangle \), and consider a cyclic division algebra \(\mathcal {C}' = (L/F,\tau ,\gamma ')\). Moreover, let \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic division algebra of degree n, where K ≠ F and τσ = στ. We require γ ∈ K ∩ F and γ′∈ F∖K.

For the 4 × 2 Srinath-Rajan code, we make the choices

-

(i)

\(L = \mathbb {Q}(i,\sqrt {5}),\ K = \mathbb {Q}(\sqrt {5}),\ F = \mathbb {Q}(i)\).

-

(ii)

\(\mathcal {C}' = (L/F,\tau ,\gamma ')\) with γ′ = i∉K and \(\tau :\sqrt {5} \mapsto -\sqrt {5}\). This cyclic division algebra gives rise to the Golden code.

-

(iii)

\(\mathcal {C} = (L/K,\sigma ,\gamma )\) with γ = −1 and σ : i↦ − i.

Fix the F-basis \(\left \{\theta _1,\theta _2\right \}\) of L, with \(\theta _1 = 1+i(1-\theta ), \theta _2 = \theta _1\theta \in \mathcal {O}_L\), where \(\theta = \frac {1+\sqrt {5}}{2}\). Codewords are of the form

where x i = x i1 θ 1 + x i2 θ 2 with \(x_{ij} \in \mathbb {Z}[i]\).

This code is conditionally 4-group decodable, where 8 real variables need to be conditioned, and the remaining 8 variables can be grouped in 4 groups of 2. This can be seen from the structure of the R-matrix, which for this code takes the form

where D i are 2 × 2 upper triangular matrices, N i are 2 × 8 matrices, and N is an 8 × 8 upper triangular matrix. This yields a decoding complexity order k′ = 10. This is a reduction in complexity of 37.5%.

To summarize, we observe that the R matrix allows to directly read the decoding complexity of a g-group decodable and conditionally g-group decodable code. After conditioning the last | Γ| variables, the variables in each group Γi can be decoded independently of the other groups. This is summarized in the following result.

Theorem 3.7

The decoding complexity order of a (conditionally) g-group decodable code \(\mathcal {X}\) with possibly empty subset Γ is given by

Unfortunately, there is a trade-off between the maximum rate and maximum decoding complexity reduction of space–time codes. The recent work [8] treats both these questions for multi-group decodable codes by analysing the mutually orthogonality of matrices in central simple subalgebras of \( \operatorname {\mathrm {Mat}}(n,\mathbb {C})\) over number fields. The authors show on one hand that there is a lower bound for the decoding complexity of full-rate n × n space–time codes. They furthermore derive an upper bound on the number of groups of a multi-group decodable code. We summarise the results relevant to our chapter in the following theorem. For a more general setting, see Theorems 7–8 and Corollary 16 in [8].

Theorem 3.8 ([8])

Let\(\mathcal {X}\)be an n × n space–time code defined by the weight matrices\(\left \{B_i\right \}_{i=1}^{2k^2}\) , and let S denote the employed real signalling alphabet.

-

(i)

If\(\mathcal {X}\)is full-rate, then the decoding complexity order is not better than n 2 + 1.

-

(ii)

If\(\mathcal {X}\)is multi-group decodable and the weight matrices are chosen from a K-central division algebra with K a number field, we have g ≤ 4.

3.4.1.2 Fast-Group Decodable Codes

Fast-group decodable codes combine the structure of the block-diagonal R-matrix with further parallelisation within each of the independent groups. We start with the formal definition.

Definition 3.19

Consider a space–time code \(\mathcal {X}\) defined by the weight matrices \(\left \{B_i\right \}_{i=1}^k\). The code is fast-group decodable if

-

(a)

There is a partition of \(\left \{1,\ldots ,k\right \}\) into g non-empty subsets Γ1, …, Γg such that whenever i ∈ Γu, j ∈ Γv with u ≠ v, the matrices B i and B j are mutually orthogonal.

-

(b)

In addition, for at least one group Γi, we have \(\langle {\mathbf {q}}_{l_1},{\mathbf {b}}_{l_2}\rangle = 0\), where l 1 = 1, …L i − 1 and l 2 = l 1 + 1, …, L i with L i ≤| Γi|.

Consider a fast-group decodable space–time code \(\mathcal {X}\), and denote by Γ1, …, Γg the groups in which the corresponding symbols can be jointly decoded. Assume that the variables s 1, …, s k are without loss of generality ordered according to their groups, as described above (3.3).

Proposition 3.4 ([19, Lem. 3])

Let\(\mathcal {X}\)be a g fast-group decodable space–time code, and let M = (m ij)i,jbe the Hurwitz-Radon quadratic form matrix and R = (r ij)i,jthe R-matrix from the QR decomposition. Then, m ij = r ij = 0 for i < j whenever s i ∈ Γ u, s j ∈ Γ vwith u ≠ v. Furthermore, each group Γ iadmits to remove L ilevels from the sphere-decoder tree if\(m_{i_{l_1} i_{l_2}} = 0\) , where l 1 = 1, …, L i − 1 and l 2 = l 1 + 1, …, L i , with L i ≤| Γ i|. In particular, the R-matrix takes the form

where the empty spaces are filled with zeros. Each of the matrices \(R_i \in \operatorname {\mathrm {Mat}}(|\Gamma _i|,\mathbb {R})\) is of the form

with \(D_i \in \operatorname {\mathrm {Mat}}(L_i,\mathbb {R})\) is diagonal, \(B_{i_2}\) is a square upper triangular matrix and \(B_{i_1}\) is a rectangular matrix.

Theorem 3.9

The decoding complexity of a g fast-group decodable space–time code \(\mathcal {X}\) with real signalling alphabet S is given by

Example 3.17

The authors in [33] construct a 4 × 4 fast-group decodable code based on an orthogonal space–time code. Codewords are of the form

where x i are real symbols. We refer to the original paper for more details on the explicit construction. The algebraic structure of this code allows to remove 5 levels from the sphere decoding tree. In particular, the decoding complexity order is k′ = 12, resulting in a reduction in decoding complexity of ∼30%.

3.4.1.3 Block Orthogonal Codes

The last family of fast-decodable codes that we treat are block orthogonal codes. We define this family by means of the structure of the associated R-matrix.

Definition 3.20

Let \(\mathcal {X}\) be a space–time code. The code is said to be g-block orthogonal if the associated R-matrix has the structure

where the empty spaces are filled with zeros and the matrices B ij are non-zero rectangular matrices. Further, the matrices R i are block diagonal matrices of the form

with each of the blocks U i,j is a square upper triangular matrix.

Assuming that each of the matrices R i has the same number of blocks k, we can determine a block orthogonal code by the three parameters (g, k, p), where g is the number of matrices R i, k denotes the number of block matrices which compose each matrix R i and p is the number of diagonal entries in the block matrices U i,j.

Example 3.18

The aforementioned Golden code is a (2, 2, 2) block orthogonal code. However, as its decoding complexity order is k′ = 6 < 8 = k, it is not fast-decodable by the requirement of a strict inequality as per Definition 3.16.

As an example of a fast-decodable block orthogonal code, we consider the (2, 4, 2) block orthogonal code from [21]. For a signalling vector (s 1, …, s 16), a codeword is of the form

where

Remark 3.3

Recall that the property of fast decodability relates to the reduction in decoding complexity without resorting to suboptimal decoding methods. By modifying the decoding algorithm used, the decoding complexity of certain codes can be lowered. For example, the main algorithm of [35] reduces the complexity order of the Golden code from k = 6, corresponding to the complexity of ML-decoding, to k′ = 4, while maintaining nearly-ML performance. The algorithm is specific to the Golden code, but has been generalized to the 3 × 3 and 4 × 4 perfect codes in, respectively, [2, 17].

In contrast to the previously introduced families, the approach via the Hurwitz-Radon quadratic form does not capture the complexity reduction for block orthogonal codes. This was recently addressed in [26], where relaxed conditions are derived for classifying codes into the here treated families of fast-decodable codes. More precisely, for block orthogonal codes we do not have an analogue of Proposition 3.3 or 3.4 relating the matrix M of the quadratic form to the R-matrix in the QR decomposition of B H.

3.4.2 Inheriting Fast Decodability

Crucial for space–time codes to exhibit desirable properties is the underlying algebraic framework. Constructing codes for larger number of antennas means dealing with higher degree field extensions and algebras, which are harder to handle. We briefly recall an iterative space–time code construction proposed in [25] which, starting with an n × n space–time code, results in a new 2n × 2n space–time code with the same code rate and double (lattice) rank. The advantage of this construction is that when applied carefully, the resulting codes inherit good properties from the original space–time codes.

As the general setup, consider the tower of extensions depicted in Fig. 3.2.

The cyclic Galois group of L∕K is generated by σ, i.e., \( \operatorname {\mathrm {Gal}}(L/K) = \langle \sigma \rangle \), and we denote the left-regular representation by \(\rho : \mathcal {C} \to \operatorname {\mathrm {Mat}}(n,L)\). Let \(\tau \in \operatorname {\mathrm {Aut}}(L)\) be an automorphism of L, and make the following assumptions:

By the above assumptions we have τρ = ρτ. Moreover, τ can be extended to an automorphism of \(\mathcal {C}\) and \(\rho (\mathcal {C})\), respectively, by

We can now fix an element \(\theta \in \mathcal {C}\), as well as a \(\mathbb {Q}\)-automorphism of \(L,\ \tau \in \operatorname {\mathrm {Aut}}_{\mathbb {Q}}(L)\), and have the following important definition.

Definition 3.21

Let K be a finite Galois extension of \(\mathbb {Q}\) and \(\mathcal {C} = (L/K,\sigma ,\gamma )\) be a cyclic division algebra of degree n. Fix \(\theta \in \mathcal {C}\) and \(\tau \in \operatorname {\mathrm {Aut}}_{\mathbb {Q}}(L)\) as above.

-

(a)

Define the function

$$\displaystyle \begin{aligned} \alpha_{\tau,\theta}: \operatorname{\mathrm{Mat}}(n,L)\times\operatorname{\mathrm{Mat}}(n,L) &\to \operatorname{\mathrm{Mat}}(2n,L) \\ (X,Y) &\mapsto \begin{bmatrix} X & \theta\tau(Y) \\ Y & \tau(X) \end{bmatrix}. \end{aligned} $$ -

(b)

If θ = ζθ′ is totally real or totally imaginary, θ′ > 0 and \(\zeta \in \left \{\pm 1, \pm i\right \}\), define the alike function

$$\displaystyle \begin{aligned} \tilde{\alpha}_{\tau,\theta}: \operatorname{\mathrm{Mat}}(n,L)\times\operatorname{\mathrm{Mat}}(n,L) &\to \operatorname{\mathrm{Mat}}(2n,L) \\ (X,Y) &\mapsto \begin{bmatrix} X & \zeta\sqrt{\theta'}\tau(Y) \\ \sqrt{\theta'}Y & \tau(X) \end{bmatrix}. \end{aligned} $$

The defined maps restrict to \(\mathcal {C}\times \mathcal {C} \to \operatorname {\mathrm {Mat}}(2,\mathcal {C})\) by identifying \(x,y \in \mathcal {C}\) with their representation X = ρ(x), Y = ρ(y).

Suppose that the algebra \(\mathcal {C}\) gives rise to a rank-k space–time code \(\mathcal {X}\) defined via matrices \(\left \{ B_i \right \}_{i=1}^{k}\). Then, the matrices \(\left \{ \alpha _{\tau ,\theta }(B_i,0), \alpha _{\tau ,\theta }(0,B_i)\right \}_{i=1}^{k}\) (or applying \(\tilde {\alpha }_{\tau ,\theta }(\cdot ,\cdot )\), respectively) define a rank-2k code

We summarise the main results of [25] in the following proposition.

Proposition 3.5 ([25, Thm. 1, Thm. 2])

Let\(\mathcal {C} = (L/K, \sigma , \gamma )\)be a cyclic division algebra giving rise to a rank-k space–time code\(\mathcal {X}\)defined by the matrices\(\left \{ B_i \right \}_{i=1}^{k}\) . Assume that\(\tau \in \operatorname {\mathrm {Aut}}_{\mathbb {Q}}(L)\)commutes with σ and complex conjugation, and further\(\tau (\gamma ) = \gamma ,\ \tau ^2 = \operatorname {\mathrm {id}}\) . Fix θ ∈ K 〈τ〉 , where K 〈τ〉is the subfield of K fixed by τ. Identifying an element of\(\mathcal {C}\)with its left-regular representation ρ, we have:

-

(i)

The image\(\mathcal {I} = \alpha _{\tau ,\theta }(\mathcal {C},\mathcal {C})\)is an algebra and is division if and only if θ ≠ zτ(z) for all\(z \in \mathcal {C}\) . Moreover, for any\(\alpha _{\tau ,\theta }(x,y) \in \mathcal {I}\) , we have det(α τ,θ(x, y)) ∈ K 〈τ〉.

-

(ii)

If in addition θ = ζθ′ is totally real or totally imaginary, the image\(\tilde {\mathcal {I}} = \tilde {\alpha }_{\theta }(\mathcal {C},\mathcal {C})\)retains both the full-diversity and non-vanishing determinant property. If for some\(i,j,\ B_i B_j^{\dagger } + B_j B_i^{\dagger } = 0\) , we have

$$\displaystyle \begin{aligned} \tilde{\alpha}_{\tau,\theta}(B_i,0)\tilde{\alpha}_{\tau,\theta}(B_j,0)^{\dagger} + \tilde{\alpha}_{\tau,\theta}(B_j,0)\tilde{\alpha}_{\tau,\theta}(B_i,0)^{\dagger} &= 0\,, \\ \tilde{\alpha}_{\tau,\theta}(0,B_i)\tilde{\alpha}_{\tau,\theta}(0,B_j)^{\dagger} + \tilde{\alpha}_{\tau,\theta}(0,B_j)\tilde{\alpha}_{\tau,\theta}(0,B_i)^{\dagger} &= 0. \end{aligned} $$

The second part of Proposition 3.5, in particular, states that under appropriate conditions, fast decodability is inherited from the rank-k space–time code \(\mathcal {X}\) to the iterated code \(\mathcal {X}_{\mathrm {it}}\).

3.5 Explicit Constructions

All the notions and concepts introduced in the previous sections lead to this last part. To conclude the chapter, we focus on explicit construction methods for fast-decodable space–time codes.

Throughout this chapter, we have provided multiple examples of space–time codes with reduced ML decoding complexity. Such examples can sometimes be found by chance, but most often a clever design gives rise to infinite families of codes with reduced decoding complexity. In the following, we turn our attention to communication setups for which such general results are known. To the best of the authors’ knowledge, the constructions presented here are the only general fast-decodable algebraic constructions found in literature.

3.5.1 Asymmetric Space–Time Codes

Above we have exemplified the 4 × 2 Srinath-Rajan code, the best performing code for this channel among codes with the same complexity order. Here, we discuss a methodology for constructing well-performing fast-decodable space–time codes for the 4 × 2 MIMO channel, offering a reduction in decoding complexity of up to 37.5%.

The motivation behind the following construction is the structure of the Alamouti code (cf. Example 3.13). As we have seen, the decoding complexity of the Alamouti code equals the size of the employed real signaling alphabet, \(\mathfrak {D}(S) = |S|\) (or more precisely \(\mathfrak {D}(S) = 4|S|\) as we are decoding each of the 4 real symbols in parallel). Motivated by this observation, it is of interest to study space–time codes which are subsets of the rings \( \operatorname {\mathrm {Mat}}(k,\mathbb {H})\). This motivates the next result.

Theorem 3.10 ([40])

Let\(\mathcal {C}\)be a cyclic division algebra of degree n, with center K of signature (r, s), r + 2s = m. There exists an injection

where each n × n block is mapped to the corresponding diagonal block of a matrix in\( \operatorname {\mathrm {Mat}}(mn,\mathbb {C})\) . Here, w is the number of places which ramify in\(\mathcal {C}\).

In particular, \(\mathcal {C}\) can be embedded into \( \operatorname {\mathrm {Mat}}(n/2,\mathbb {H})\) if

-

(i)

The center K is totally real, i.e., r = m.

-

(ii)

The infinite places of K are ramified in\(\mathcal {C}\).

The ramification assumptions of places in the considered algebra are rather technical, and the interested reader is referred to [40] for further details.

While the above result guarantees the existence of an injection into \( \operatorname {\mathrm {Mat}}(n/2,\mathbb {H})\) when the conditions are satisfied, it does not make the embedding explicit. This is achieved in the following result.

Theorem 3.11 ([40, Prop. 11.1])

Let \(\mathcal {C} = (L/\mathbb {Q},\sigma ,\gamma )\) be a cyclic division algebra satisfying the requirements from Theorem 3.10 . Given for \(x \in \mathcal {C}\) an element \(X = \rho (x) \in \mathcal {X}\) , where \(\mathcal {X}\) is a space–time code arising from the algebra \(\mathcal {C}\) , we have an explicit map

where P = (p ij)i,jis a permutation matrix with entries

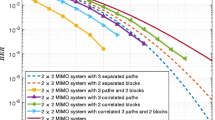

and\(B = \operatorname {\mathrm {diag}}(\sqrt {|\gamma |},|\gamma |,\ldots ,\sqrt {|\gamma |},|\gamma |)\).