Abstract

We study a random walk (Markov chain) in an unbounded planar domain bounded by two curves of the form \(x_2 = a^+ x_1^{\beta ^+}\) and \(x_2 = -a^- x_1^{\beta ^-}\), with \(x_1 \geq 0\). In the interior of the domain, the random walk has zero drift and a given increment covariance matrix. From the vicinity of the upper and lower sections of the boundary, the walk drifts back into the interior at a given angle \(\alpha^+\) or \(\alpha^-\) to the relevant inwards-pointing normal vector. Here we focus on the case where \(\alpha^+\) and \(\alpha^-\) are equal but opposite, which includes the case of normal reflection. For \(0 \leq \beta^+, \beta^- < 1\), we identify the phase transition between recurrence and transience, depending on the model parameters, and quantify recurrence via moments of passage times.

Dedicated to our colleague Vladas Sidoravicius (1963–2019)

Keywords

- Reflected random walk

- Generalized parabolic domain

- Recurrence

- Transience

- Passage-time moments

- Normal reflection

- Oblique reflection

AMS Subject Classification

1 Introduction and Main Results

1.1 Description of the Model

We describe our model and then state our main results: see Sect. 1.4 for a discussion of related literature. Write \(x \in {\mathbb R}^2\) in Cartesian coordinates as \(x = (x_1, x_2)\). For parameters \(a^+, a^- > 0\) and \(\beta^+, \beta^- \geq 0\), define, for \(z \geq 0\), functions \(d^+ ( z ) := a^+ z^{\beta ^+}\) and \(d^- ( z ) := a^- z^{\beta ^-}\). Set

$$\displaystyle \begin{aligned} {\mathcal D} := \{ x \in {\mathbb R}^2 : x_1 \geq 0, \ -d^- (x_1) \leq x_2 \leq d^+ (x_1) \} . \end{aligned} $$

Write \(\| \cdot \|\) for the Euclidean norm on \({\mathbb R}^2\). For \(x \in {\mathbb R}^2\) and \(A \subseteq {\mathbb R}^2\), write \(d(x, A) := \inf_{y \in A} \| x - y \|\) for the distance from \(x\) to \(A\). Suppose that there exist \(B \in (0, \infty)\) and a subset \({\mathcal D}_B\) of \({\mathcal D}\) for which every \(x \in {\mathcal D}_B\) has \(d(x, {\mathbb R}^2 \setminus {\mathcal D} ) \leq B\). Let \({\mathcal D}_I := {\mathcal D} \setminus {\mathcal D}_B\); we call \({\mathcal D}_B\) the boundary and \({\mathcal D}_I\) the interior. Set \({\mathcal D}^\pm _B := \{ x \in {\mathcal D}_B : \pm x_2 > 0\}\) for the parts of \({\mathcal D}_B\) in the upper and lower half-plane, respectively.
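For numerical experiments it can help to encode the domain as a membership test. The following is a minimal sketch, assuming that \({\mathcal D}\) is the closed region between the two curves \(x_2 = d^+(x_1)\) and \(x_2 = -d^-(x_1)\) with \(x_1 \geq 0\); the function and argument names are illustrative choices and are not notation from the text.

```python
def d_plus(z, a_plus, beta_plus):
    """Upper boundary curve d^+(z) = a^+ z^{beta^+}, for z >= 0."""
    return a_plus * z ** beta_plus


def d_minus(z, a_minus, beta_minus):
    """Lower boundary curve d^-(z) = a^- z^{beta^-}, for z >= 0."""
    return a_minus * z ** beta_minus


def in_domain(x1, x2, a_plus, beta_plus, a_minus, beta_minus):
    """Return True if (x1, x2) lies in the (assumed closed) domain D."""
    if x1 < 0:
        return False
    lower = -d_minus(x1, a_minus, beta_minus)
    upper = d_plus(x1, a_plus, beta_plus)
    return lower <= x2 <= upper
```

With \(a^\pm = 1\) and \(\beta^\pm = 1/2\), for example, the point \((4, 1.5)\) lies in the domain while \((4, 2.5)\) does not.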

Let \(\xi := (\xi_0, \xi_1, \ldots)\) be a discrete-time, time-homogeneous Markov chain on state-space \(S \subseteq {\mathcal D}\). Set \(S_I := S \cap {\mathcal D}_I\), \(S_B := S \cap {\mathcal D}_B\), and \(S^\pm _B := S \cap {\mathcal D}^\pm _B\). Write \({\mathbb P}_{x}\) and \(\mathbb {E}_{x}\) for conditional probabilities and expectations given \(\xi_0 = x \in S\), and suppose that \({\mathbb P}_x ( \xi _n \in S \text{ for all } n \geq 0 ) = 1\) for all \(x \in S\). Set \(\varDelta := \xi_1 - \xi_0\). Then \({\mathbb P} ( \xi _{n+1} \in A \mid \xi _n = x ) = {\mathbb P}_{x} ( x + \varDelta \in A )\) for all \(x \in S\), all measurable \(A \subseteq {\mathcal D}\), and all \(n \in {\mathbb Z}_+\). In what follows, we will always treat vectors in \({\mathbb R}^2\) as column vectors.

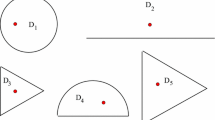

We will assume that the increments of \(\xi\) have uniformly bounded \(p\)th moments for some \(p > 2\), that in \(S_I\) the walk has zero drift and a fixed increment covariance matrix, and that it reflects in \(S_B\), meaning it has drift away from \(\partial {\mathcal D}\) at a certain angle relative to the inwards-pointing normal vector. In fact we permit perturbations of this situation that are appropriately small as the distance from the origin increases. See Fig. 1 for an illustration.

To describe the assumptions formally, for \(x_1 > 0\) let \(n^+(x_1)\) denote the inwards-pointing unit normal vector to \(\partial {\mathcal D}\) at \((x_1, d^+(x_1))\), and let \(n^-(x_1)\) be the corresponding normal at \((x_1, -d^-(x_1))\); then \(n^+(x_1)\) is a scalar multiple of \((a^+ \beta ^+ x_1^{\beta ^+-1}, -1)\), and \(n^-(x_1)\) is a scalar multiple of \((a^- \beta ^- x_1^{\beta ^--1}, 1)\). Let \(n^+(x_1, \alpha)\) denote the unit vector obtained by rotating \(n^+(x_1)\) by angle \(\alpha\) anticlockwise. Similarly, let \(n^-(x_1, \alpha)\) denote the unit vector obtained by rotating \(n^-(x_1)\) by angle \(\alpha\) clockwise. (The orientation is such that, in each case, reflection at angle \(\alpha < 0\) points to the side of the normal towards 0.)
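The orientation convention is easy to get wrong, so a small numerical sketch may be useful. It builds the unit normals from the stated scalar multiples and applies the anticlockwise (upper boundary) or clockwise (lower boundary) rotation; all function names are hypothetical helpers introduced here for illustration, and \(x_1 > 0\) is assumed.

```python
import math


def unit(v):
    """Normalise a 2-vector to unit Euclidean length."""
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n)


def rotate(v, angle):
    """Rotate the 2-vector v anticlockwise by the given angle."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def normal_plus(x1, a_plus, beta_plus):
    """Inwards unit normal at (x1, d^+(x1)); requires x1 > 0."""
    return unit((a_plus * beta_plus * x1 ** (beta_plus - 1), -1.0))


def normal_minus(x1, a_minus, beta_minus):
    """Inwards unit normal at (x1, -d^-(x1)); requires x1 > 0."""
    return unit((a_minus * beta_minus * x1 ** (beta_minus - 1), 1.0))


def reflection_plus(x1, a_plus, beta_plus, alpha):
    """n^+(x1, alpha): rotate n^+(x1) anticlockwise by alpha."""
    return rotate(normal_plus(x1, a_plus, beta_plus), alpha)


def reflection_minus(x1, a_minus, beta_minus, alpha):
    """n^-(x1, alpha): rotate n^-(x1) clockwise by alpha."""
    return rotate(normal_minus(x1, a_minus, beta_minus), -alpha)
```

Far from the origin with \(\beta^\pm < 1\), a negative angle gives a reflection vector with negative horizontal component on both boundaries, which matches the parenthetical remark on orientation.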

We write \(\| \cdot \|_{\mathrm{op}}\) for the matrix (operator) norm defined by \(\| M \|_{\mathrm{op}} := \sup_u \| M u \|\), where the supremum is over all unit vectors \(u \in {\mathbb R}^2\). We take \(\xi_0 = x_0 \in S\) fixed, and impose the following assumptions for our main results.

- (N) Suppose that \({\mathbb P}_x ( \limsup _{n \to \infty } \| \xi _n \| = \infty ) =1\) for all \(x \in S\).

- (Mp) There exists \(p > 2\) such that

$$\displaystyle \begin{aligned} \sup_{x \in S} \mathbb{E}_x ( \| \varDelta \|{}^p ) < \infty . \end{aligned} \tag{1}$$

- (D) We have that \(\sup _{x \in S_I : \| x \| \geq r } \| \mathbb {E}_x \varDelta \| = o (r^{-1})\) as \(r \to \infty\).

- (R) There exist angles \(\alpha^\pm \in (-\pi/2, \pi/2)\) and functions \(\mu ^\pm : S^\pm _B \to {\mathbb R}\) with \(\liminf_{\| x \| \to \infty} \mu^\pm (x) > 0\), such that, as \(r \to \infty\),

$$\displaystyle \begin{aligned} \sup_{x \in S_B^+ : \| x \| \geq r } \| \mathbb{E}_x \varDelta - \mu^+ (x) n^+(x_1,\alpha^+) \| & = O ( r^{-1}) ; \end{aligned} \tag{2}$$

$$\displaystyle \begin{aligned} \sup_{x \in S_B^- : \| x \| \geq r } \| \mathbb{E}_x \varDelta - \mu^- (x) n^-(x_1,\alpha^-) \| & = O( r^{-1} ) .\end{aligned} \tag{3}$$

- (C) There exists a positive-definite, symmetric \(2 \times 2\) matrix \(\varSigma\) for which

$$\displaystyle \begin{aligned} \sup_{x \in S_I : \| x \| \geq r } \| \mathbb{E}_x ( \varDelta \varDelta^{\top} ) - \varSigma \|_{\mathrm{op}} = o(1) , \text{ as } r \to \infty . \end{aligned} $$

We write the entries of \(\varSigma\) in (C) as

$$\displaystyle \begin{aligned} \varSigma = \begin{pmatrix} \sigma_1^2 & \rho \\ \rho & \sigma_2^2 \end{pmatrix} . \end{aligned} $$

Here \(\rho\) is the asymptotic increment covariance, and, since \(\varSigma\) is positive definite, \(\sigma_1 > 0\), \(\sigma_2 > 0\), and \(\rho ^2 < \sigma _1^2 \sigma _2^2\).

To identify the critically recurrent cases, we need slightly sharper control of the error terms in the drift assumption (D) and covariance assumption (C). In particular, we will in some cases impose the following stronger versions of these assumptions:

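- (D+) There exists \(\varepsilon > 0\) such that

$$\displaystyle \begin{aligned} \sup_{x \in S_I : \| x \| \geq r } \| \mathbb{E}_x \varDelta \| = O ( r^{-1-\varepsilon} ) , \end{aligned} $$

as \(r \to \infty\).

- (C+) There exist \(\varepsilon > 0\) and a positive-definite, symmetric \(2 \times 2\) matrix \(\varSigma\) for which

$$\displaystyle \begin{aligned} \sup_{x \in S_I : \| x \| \geq r } \| \mathbb{E}_x ( \varDelta \varDelta^{\top} ) - \varSigma \|_{\mathrm{op}} = O ( r^{-\varepsilon} ) , \text{ as } r \to \infty . \end{aligned} $$

Without loss of generality, we may use the same constant \(\varepsilon > 0\) for both (D+) and (C+).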

The non-confinement condition (N) ensures our questions of recurrence and transience (see below) are non-trivial, and is implied by standard irreducibility or ellipticity conditions: see [26] and the following example.

Example 1

Let \(S = {\mathbb Z}^2 \cap {\mathcal D}\), and take \({\mathcal D}_B\) to be the set of \(x \in {\mathcal D}\) for which \(x\) is within unit \(\ell_\infty\)-distance of some \(y \in {\mathbb Z}^2 \setminus {\mathcal D}\). Then \(S_B\) contains those points of \(S\) that have a neighbour outside of \({\mathcal D}\), and \(S_I\) consists of those points of \(S\) whose neighbours are all in \({\mathcal D}\). If \(\xi\) is irreducible on \(S\), then (N) holds (see e.g. Corollary 2.1.10 of [26]). If \(\beta^+ > 0\), then, for all \(\| x \|\) sufficiently large, every point \(x \in S_B^+\) has its neighbours to the right and below in \(S\), so if \(\alpha^+ = 0\), for instance, we can achieve the asymptotic drift required by (2) using only nearest-neighbour jumps if we wish; similarly in \(S_B^-\).
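A lattice walk of the kind described in Example 1 can be simulated in a few lines. The sketch below uses one simple nearest-neighbour rule, chosen here for illustration and not taken from the text: from each site the walk moves to a uniformly chosen nearest neighbour (in the four axis directions) that lies in \({\mathcal D}\). In the interior this gives zero drift; at sites with an excluded neighbour it gives a drift pointing into the domain. The helper names and the closed-domain convention are assumptions.

```python
import random


def in_domain(x1, x2, a_plus, beta_plus, a_minus, beta_minus):
    """Membership test for the (assumed closed) domain D."""
    if x1 < 0:
        return False
    return -a_minus * x1 ** beta_minus <= x2 <= a_plus * x1 ** beta_plus


def step(x, params, rng):
    """Move to a uniformly chosen axis neighbour of x that lies in D."""
    x1, x2 = x
    candidates = [(x1 + 1, x2), (x1 - 1, x2), (x1, x2 + 1), (x1, x2 - 1)]
    allowed = [y for y in candidates if in_domain(y[0], y[1], *params)]
    # The right-hand neighbour is always in D because d^+ and d^- are
    # non-decreasing, so `allowed` is never empty.
    return rng.choice(allowed)


def simulate(x0, n_steps, params, seed=0):
    """Return the path (xi_0, ..., xi_n) started from x0 in Z^2 cap D."""
    rng = random.Random(seed)
    path = [x0]
    for _ in range(n_steps):
        path.append(step(path[-1], params, rng))
    return path
```

Here `params` is the tuple `(a_plus, beta_plus, a_minus, beta_minus)`; for instance `simulate((5, 0), 1000, (1.0, 0.5, 1.0, 0.5))` produces a path of 1001 lattice points in the domain.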

Under the non-confinement condition (N), the first question of interest is whether \(\liminf_{n \to \infty} \| \xi_n \|\) is finite or infinite. We say that \(\xi\) is recurrent if there exists \(r_0 \in {\mathbb R}_+\) for which \(\liminf_{n \to \infty} \| \xi_n \| \leq r_0\), a.s., and that \(\xi\) is transient if \(\lim_{n \to \infty} \| \xi_n \| = \infty\), a.s. The first main aim of this paper is to classify the process into one or other of these cases (which are not a priori exhaustive) depending on the parameters. Further, in the recurrent cases it is of interest to quantify the recurrence by studying the tails (or moments) of return times to compact sets. This is the second main aim of this paper.

In the present paper we focus on the case where \(\alpha^+ + \alpha^- = 0\), which we call ‘opposed reflection’. This case is the most subtle from the point of view of recurrence/transience, and, as we will see, exhibits a rich phase diagram depending on the model parameters. We emphasize that the model in the case \(\alpha^+ + \alpha^- = 0\) is near-critical in that both recurrence and transience are possible, depending on the parameters, and moreover (i) in the recurrent cases, return times to bounded sets have heavy tails, being, in particular, non-integrable, so stationary distributions will not exist, and (ii) in the transient cases, escape to infinity will be only diffusive. There is a sense in which the model studied here can be viewed as a perturbation of zero-drift random walks, in the manner of the seminal work of Lamperti [19]: see e.g. [26] for a discussion of near-critical phenomena. We leave for future work the case \(\alpha^+ + \alpha^- \neq 0\), in which very different behaviour will occur: if \(\beta^\pm < 1\), then the case \(\alpha^+ + \alpha^- > 0\) gives super-diffusive (but sub-ballistic) transience, while the case \(\alpha^+ + \alpha^- < 0\) leads to positive recurrence.

Opposed reflection includes the special case where \(\alpha^+ = \alpha^- = 0\), which is ‘normal reflection’. Since the results in the latter case are more easily digested, and since it is an important case in its own right, we present the case of normal reflection first, in Sect. 1.2. The general case of opposed reflection we present in Sect. 1.3. In Sect. 1.4 we review some of the extensive related literature on reflecting processes. Then Sect. 1.5 gives an outline of the remainder of the paper, which consists of the proofs of the results in Sects. 1.2–1.3.

1.2 Normal Reflection

First we consider the case of normal (i.e., orthogonal) reflection.

Theorem 1

Suppose that (N), (Mp), (D), (R), and (C) hold with \(\alpha^+ = \alpha^- = 0\).

- (a) Suppose that \(\beta^+, \beta^- \in [0, 1)\). Let \(\beta := \max ( \beta ^+, \beta ^-)\). Then the following hold.

- (b) Suppose that (D+) and (C+) hold, and \(\beta^+, \beta^- > 1\). Then \(\xi\) is recurrent.

Remark 1

- (i) Omitted from Theorem 1 is the case when at least one of \(\beta^\pm\) is equal to 1, or when their values fall on either side of 1. Here we anticipate behaviour similar to [5].

- (ii) If \(\sigma _1^2 / \sigma _2^2 < 1\), then Theorem 1 shows a striking non-monotonicity property: there exist regions \({\mathcal D}_1 \subset {\mathcal D}_2 \subset {\mathcal D}_3\) such that the reflecting random walk is recurrent on \({\mathcal D}_1\) and \({\mathcal D}_3\), but transient on \({\mathcal D}_2\). This phenomenon does not occur in the classical case when \(\varSigma\) is the identity: see [28] for a derivation of monotonicity in the case of normally reflecting Brownian motion in unbounded domains in \({\mathbb R}^d\), \(d \geq 2\).

- (iii) Note that the correlation \(\rho\) and the values of \(a^+\), \(a^-\) play no part in Theorem 1; \(\rho\) will, however, play a role in the more general Theorem 3 below.
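The non-monotonicity in Remark 1(ii) can be made concrete with a small lookup. The sketch below encodes the classification for normal reflection as it can be read off from Theorem 1(b), Theorem 2 and Remarks 1(ii) and 2(ii); it is an illustrative summary under that reading, not a restatement of the theorem, and the function name and return strings are hypothetical.

```python
def classify_normal_reflection(beta_plus, beta_minus, sigma1_sq, sigma2_sq):
    """Recurrence class for normal reflection (alpha^+ = alpha^- = 0).

    Assumes the standing hypotheses (N), (Mp), (D), (R), (C); the
    borderline and beta > 1 cases additionally need (D+) and (C+).
    """
    ratio = sigma1_sq / sigma2_sq
    if beta_plus > 1 and beta_minus > 1:
        return "recurrent"
    if 0 <= beta_plus < 1 and 0 <= beta_minus < 1:
        beta = max(beta_plus, beta_minus)
        if beta < ratio:
            return "recurrent"
        if beta == ratio:
            return "recurrent (borderline)"
        return "transient"
    # Some exponent equals 1, or the exponents lie on either side of 1.
    return "not covered"
```

With \(\sigma_1^2/\sigma_2^2 = 1/4\), increasing \(\beta\) through the values 0.1, 0.5 and 2 enlarges the domain and gives recurrent, transient and recurrent behaviour in turn.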

Let \(\tau _r := \min \{ n \in {\mathbb Z}_+ : \| \xi _n \| \leq r \}\). Define

Our next result concerns the moments of \(\tau_r\). Since most of our assumptions are asymptotic, we only make statements about \(r\) sufficiently large; with appropriate irreducibility assumptions, this restriction could be removed.
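On a simulated trajectory, the passage time \(\tau_r\) is simply the first index at which the path is within distance \(r\) of the origin. A minimal sketch, assuming the path is given as a finite list of points (so the function can only report \(\tau_r\) when it occurs within the observed window; the name `passage_time` is illustrative):

```python
import math


def passage_time(path, r):
    """Return min{n >= 0 : ||path[n]|| <= r}, or None if no such n is observed."""
    for n, (x1, x2) in enumerate(path):
        if math.hypot(x1, x2) <= r:
            return n
    return None
```

Because the theorems below concern heavy-tailed return times, a `None` result on a finite path is to be expected fairly often and should not be read as evidence of transience.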

Theorem 2

Suppose that (N), (Mp), (D), (R), and (C) hold with \(\alpha^+ = \alpha^- = 0\).

- (a) Suppose that \(\beta^+, \beta^- \in [0, 1)\). Let \(\beta := \max ( \beta ^+, \beta ^-)\). Then the following hold.

  - (i) If \(\beta < \sigma _1^2 / \sigma _2^2\), then \(\mathbb {E}_x ( \tau _r^s ) < \infty \) for all \(s < s_0\) and all \(r\) sufficiently large, but \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > s_0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

  - (ii) If \(\beta \geq \sigma _1^2 / \sigma _2^2\), then \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > 0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

- (b) Suppose that \(\beta^+, \beta^- > 1\). Then \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > 0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

Remark 2

- (i) Note that if \(\beta < \sigma _1^2 /\sigma _2^2\), then \(s_0 > 0\), while \(s_0 < 1/2\) for all \(\beta > 0\), in which case the return time to a bounded set has a heavier tail than that for one-dimensional simple symmetric random walk.

- (ii) The transience result in Theorem 1(a)(ii) is essentially stronger than the claim in Theorem 2(a)(ii) for \(\beta > \sigma _1^2 / \sigma _2^2\), so the borderline (recurrent) case \(\beta = \sigma _1^2 / \sigma _2^2\) is the main content of the latter.

- (iii) Part (b) shows that the case \(\beta^\pm > 1\) is critical: no moments of return times exist, as in the case of, say, simple symmetric random walk in \({\mathbb Z}^2\) [26, p. 77].

1.3 Opposed Reflection

We now consider the more general case where \(\alpha^+ + \alpha^- = 0\), i.e., the two reflection angles are equal but opposite, relative to their respective normal vectors. For \(\alpha^+ = -\alpha^- \neq 0\), this is a particular example of oblique reflection. The phase transition in \(\beta\) now depends on \(\rho\) and \(\alpha\) in addition to \(\sigma _1^2\) and \(\sigma _2^2\). Define

The next result gives the key properties of the critical threshold function \(\beta_{\mathrm{c}}\) which are needed for interpreting our main result.

Proposition 1

For a fixed, positive-definite \(\varSigma\) such that \(|\sigma _1^2 - \sigma _2^2| + |\rho | > 0\), the function \(\alpha \mapsto \beta_{\mathrm{c}} (\varSigma, \alpha)\) over the interval \([ -\frac {\pi }{2}, \frac {\pi }{2}]\) is strictly positive for \(|\alpha| \leq \pi/2\), with two stationary points, one in \((-\frac {\pi }{2},0)\) and the other in \((0,\frac {\pi }{2})\), at which the function takes its maximum/minimum values of

The exception is the case where \(\sigma _1^2 - \sigma _2^2 = \rho = 0\), when \(\beta_{\mathrm{c}} = 1\) is constant.

Here is the recurrence classification in this setting.

Theorem 3

Suppose that (N), (Mp), (D), (R), and (C) hold with \(\alpha^+ = -\alpha^- = \alpha\) for \(|\alpha| < \pi/2\).

- (a) Suppose that \(\beta^+, \beta^- \in [0, 1)\). Let \(\beta := \max ( \beta ^+, \beta ^-)\). Then the following hold.

- (b) Suppose that (D+) and (C+) hold, and \(\beta^+, \beta^- > 1\). Then \(\xi\) is recurrent.

Remark 3

- (i) The threshold (5) is invariant under the map \((\alpha, \rho) \mapsto (-\alpha, -\rho)\).

- (ii) For fixed \(\varSigma\) with \(| \sigma _1^2 - \sigma _2^2 | + |\rho | >0\), Proposition 1 shows that \(\beta_{\mathrm{c}}\) is non-constant and has exactly one maximum and exactly one minimum in \((-\frac {\pi }{2}, \frac {\pi }{2})\). Since \(\beta _{\mathrm {c}} (\varSigma , \pm \frac {\pi }{2} ) =1\), it follows from uniqueness of the minimum that the minimum is strictly less than 1, and so Theorem 3 shows that there is always an open interval of \(\alpha\) for which there is transience.

- (iii) Since \(\beta_{\mathrm{c}} > 0\) always, recurrence is certain for small enough \(\beta\).

- (iv) In the case where \(\sigma _1^2 = \sigma _2^2\) and \(\rho = 0\), we have \(\beta_{\mathrm{c}} = 1\), so recurrence is certain for all \(\beta^+, \beta^- < 1\) and all \(\alpha\).

- (v) If \(\alpha = 0\), then \(\beta _{\mathrm {c}} = \sigma _1^2/\sigma _2^2\), so Theorem 3 generalizes Theorem 1.

Next we turn to passage-time moments. We generalize (4) and define

with \(\beta_{\mathrm{c}}\) given by (5). The next result includes Theorem 2 as the special case \(\alpha = 0\).

Theorem 4

Suppose that (N), (Mp), (D), (R), and (C) hold with \(\alpha^+ = -\alpha^- = \alpha\) for \(|\alpha| < \pi/2\).

- (a) Suppose that \(\beta^+, \beta^- \in [0, 1)\). Let \(\beta := \max ( \beta ^+, \beta ^-)\). Then the following hold.

  - (i) If \(\beta < \beta_{\mathrm{c}}\), then \(s_0 \in (0, 1/2]\), and \(\mathbb {E}_x ( \tau _r^s ) < \infty \) for all \(s < s_0\) and all \(r\) sufficiently large, but \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > s_0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

  - (ii) If \(\beta \geq \beta_{\mathrm{c}}\), then \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > 0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

- (b) Suppose that \(\beta^+, \beta^- > 1\). Then \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all \(s > 0\) and all \(x\) with \(\| x \| > r\) for \(r\) sufficiently large.

1.4 Related Literature

The stability properties of reflecting random walks or diffusions in unbounded domains in \({\mathbb R}^d\) have been studied for many years. A pre-eminent place in the development of the theory is occupied by processes in the quadrant \({\mathbb R}_+^2\) or quarter-lattice \({\mathbb Z}_+^2\), due to applications arising in queueing theory and other areas. Typically, the process is assumed to be maximally homogeneous in the sense that the transition mechanism is fixed in the interior and on each of the two half-lines making up the boundary. Distinct are the cases where the motion in the interior of the domain has non-zero or zero drift.

It was in 1961, in part motivated by queueing models, that Kingman [18] proposed a general approach to the non-zero drift problem on \({\mathbb Z}_+^2\) via Lyapunov functions and Foster’s Markov chain classification criteria [14]. A formal statement of the classification was given in the early 1970s by Malyshev, who developed both an analytic approach [22] as well as the Lyapunov function one [23] (the latter, Malyshev reports, prompted by a question of Kolmogorov). Generically, the classification depends on the drift vector in the interior and the two boundary reflection angles. The Lyapunov function approach was further developed, so that the bounded jumps condition in [23] could be relaxed to finiteness of second moments [10, 27, 29] and, ultimately, of first moments [13, 30, 33]. The analytic approach was also subsequently developed [11], and although it seems to be not as robust as the Lyapunov function approach (the analysis in [22] was restricted to nearest-neighbour jumps), when it is applicable it can yield very precise information: see e.g. [15] for a recent application in the continuum setting. Intrinsically more complicated results are available for the non-zero drift case in \({\mathbb Z}_+^3\) [24] and \({\mathbb Z}_+^4\) [17].

The recurrence classification for the case of zero-drift reflecting random walk in \({\mathbb Z}_+^2\) was given in the early 1990s in [6, 12]; see also [13]. In this case, generically, the classification depends on the increment covariance matrix in the interior as well as the two boundary reflection angles. Subsequently, using a semimartingale approach extending work of Lamperti [19], passage-time moments were studied in [5], with refinements provided in [2, 3].

Parallel continuum developments concern reflecting Brownian motion in wedges in \({\mathbb R}^2\). In the zero-drift case with general (oblique) reflections, in the 1980s Varadhan and Williams [31] showed that the process was well-defined, and then Williams [32] gave the recurrence classification, thus preceding the random walk results of [6, 12], and, in the recurrent cases, asymptotics of stationary measures (cf. [4] for the discrete setting). Passage-time moments were later studied in [7, 25], by providing a continuum version of the results of [5], and in [2], using discrete approximation [1]. The non-zero drift case was studied by Hobson and Rogers [16], who gave an analogue of Malyshev’s theorem in the continuum setting.

For domains like our \({\mathcal D}\), Pinsky [28] established recurrence in the case of reflecting Brownian motion with normal reflections and standard covariance matrix in the interior. The case of general covariance matrix and oblique reflection does not appear to have been considered, and neither has the analysis of passage-time moments. The somewhat related problem of the asymptotics of the first exit time \(\tau_e\) of planar Brownian motion from domains like our \({\mathcal D}\) has been considered [8, 9, 20]: in the case where \(\beta^+ = \beta^- = \beta \in (0, 1)\), \(\log {\mathbb P} ( \tau _e > t )\) is bounded above and below by constants times \(-t^{(1-\beta)/(1+\beta)}\): see [20] and (for the case \(\beta = 1/2\)) [9].

1.5 Overview of the Proofs

The basic strategy is to construct suitable Lyapunov functions \(f : {\mathbb R}^2 \to {\mathbb R}\) that satisfy appropriate semimartingale (i.e., drift) conditions on \(\mathbb {E}_x [ f (\xi _1 ) - f(\xi _0 ) ]\) for x outside a bounded set. In fact, since the Lyapunov functions that we use are most suitable for the case where the interior increment covariance matrix is Σ = I, the identity, we first apply a linear transformation T of \({\mathbb R}^2\) and work with Tξ. The linear transformation is described in Sect. 2. Of course, one could combine these two steps and work directly with the Lyapunov function given by the composition f ∘ T for the appropriate f. However, for reasons of intuitive understanding and computational convenience, we prefer to separate the two steps.

Let \(\beta^\pm < 1\). Then for \(\alpha^+ = \alpha^- = 0\), the reflection angles are both pointing essentially vertically, with an asymptotically small component in the positive \(x_1\) direction. After the linear transformation \(T\), the reflection angles are no longer almost vertical, but instead are almost opposed at some oblique angle, where the deviation from direct opposition is again asymptotically small, and in the positive \(x_1\) direction. For this reason, the case \(\alpha^+ = -\alpha^- = \alpha \neq 0\) is not conceptually different from the simpler case where \(\alpha = 0\), because after the linear transformation, both cases are oblique. In the case \(\alpha \neq 0\), however, the details are more involved as both \(\alpha\) and the value of the correlation \(\rho\) enter into the analysis of the Lyapunov functions, which is presented in Sect. 3, and is the main technical work of the paper. For \(\beta^\pm > 1\), intuition is provided by the case of reflection in the half-plane (see e.g. [32] for the Brownian case).

Once the Lyapunov function estimates are in place, the proofs of the main theorems are given in Sect. 4, using some semimartingale results which are variations on those from [26]. The appendix contains the proof of Proposition 1 on the properties of the threshold function \(\beta_{\mathrm{c}}\) defined at (5).

2 Linear Transformation

The inwards pointing normal vectors to \(\partial {\mathcal D}\) at \((x_1, \pm d^\pm (x_1))\) are

Define

Recall that \(n^\pm (x_1, \alpha^\pm)\) is the unit vector at angle \(\alpha^\pm\) to \(n^\pm (x_1)\), with positive angles measured anticlockwise (for \(n^+\)) or clockwise (for \(n^-\)). Then (see Fig. 2 for the case of \(n^+\)) we have \(n^\pm (x_1, \alpha ^\pm ) = n^\pm (x_1) \cos \alpha ^\pm + n_\perp ^\pm (x_1) \sin \alpha ^\pm \), so

In particular, if \(\alpha^+ = -\alpha^- = \alpha\),

Recall that \(\varDelta = \xi_1 - \xi_0\). Write \(\varDelta = (\varDelta_1, \varDelta_2)\) in components.

Lemma 1

Suppose that (R) holds, with \(\alpha^+ = -\alpha^- = \alpha\) and \(\beta^+, \beta^- \geq 0\). If \(\beta^\pm < 1\), then, for \(x \in S_B^\pm \), as \(\| x \| \to \infty\),

If \(\beta^\pm > 1\), then, for \(x \in S_B^\pm \), as \(\| x \| \to \infty\),

Proof

Suppose that \(x \in S^\pm _B\). By (2) and (3), we have that \(\| \mathbb {E}_x \varDelta - \mu ^\pm (x) n^\pm (x_1,\alpha ^\pm )\| = O( \|x\|^{-1})\). First suppose that \(0 \leq \beta^\pm < 1\). Then, \(1/r^\pm (x_1) = 1 + O (x_1^{2\beta ^\pm -2})\), and hence, by (8),

Then, since \(\| x \| = x_1 + o(x_1)\) as \(\| x \| \to \infty\) with \(x \in {\mathcal D}\), we obtain (9) and (10).

On the other hand, if \(\beta^\pm > 1\), then

and hence, by (8),

The expressions (11) and (12) follow. □

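It is convenient to introduce a linear transformation of \({\mathbb R}^2\) under which the asymptotic increment covariance matrix \(\varSigma\) appearing in (C) is transformed to the identity. Define

$$\displaystyle \begin{aligned} T := \begin{pmatrix} \frac{\sigma_2}{s} & - \frac{\rho}{s \sigma_2} \\ 0 & \frac{1}{\sigma_2} \end{pmatrix} , \text{ where } s := \sqrt{ \det \varSigma } = \sqrt{ \sigma_1^2 \sigma_2^2 - \rho^2 } ; \end{aligned} \tag{13}$$

recall that \(\sigma_2, s > 0\), since \(\varSigma\) is positive definite. The choice of \(T\) is such that \(T \varSigma T^{\top} = I\) (the identity), and \(x \mapsto Tx\) leaves the horizontal direction unchanged. Explicitly,

$$\displaystyle \begin{aligned} T \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} \frac{\sigma_2}{s} x_1 - \frac{\rho}{s \sigma_2} x_2 \\ \frac{1}{\sigma_2} x_2 \end{pmatrix} . \end{aligned} $$

Note that \(T\) is non-singular, and so \(\| Tx \|\) is bounded above and below by positive constants times \(\| x \|\). Also, if \(x \in {\mathcal D}\) and \(\beta^+, \beta^- < 1\), the fact that \(|x_2| = o(x_1)\) means that \(Tx\) has the properties (i) \((Tx)_1 > 0\) for all \(x_1\) sufficiently large, and (ii) \(|(Tx)_2| = o(|(Tx)_1|)\) as \(x_1 \to \infty\). See Fig. 3 for a picture.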
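The two requirements on \(T\) stated above (it maps the covariance \(\varSigma\) to the identity and keeps the horizontal direction horizontal) can be checked numerically. The sketch below assumes the upper-triangular matrix with rows \((\sigma_2/s, -\rho/(s\sigma_2))\) and \((0, 1/\sigma_2)\), where \(s = \sqrt{\sigma_1^2\sigma_2^2 - \rho^2}\), which is the choice with positive diagonal determined by those two requirements; the function names are hypothetical.

```python
import math


def make_T(sigma1_sq, sigma2_sq, rho):
    """Upper-triangular T with T Sigma T^T = I (assumed explicit form)."""
    s = math.sqrt(sigma1_sq * sigma2_sq - rho * rho)
    sigma2 = math.sqrt(sigma2_sq)
    return [[sigma2 / s, -rho / (s * sigma2)], [0.0, 1.0 / sigma2]]


def matmul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose(A):
    """Transpose of a 2x2 matrix."""
    return [[A[j][i] for j in range(2)] for i in range(2)]


def transformed_covariance(sigma1_sq, sigma2_sq, rho):
    """Return T Sigma T^T, which should be the 2x2 identity."""
    T = make_T(sigma1_sq, sigma2_sq, rho)
    Sigma = [[sigma1_sq, rho], [rho, sigma2_sq]]
    return matmul(matmul(T, Sigma), transpose(T))
```

Since the second row of `make_T` has a zero in its first entry, the image of \((1, 0)\) is a positive multiple of \((1, 0)\), as required.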

The next result describes the increment moment properties of the process under the transformation T. For convenience, we set \(\tilde \varDelta := T \varDelta \) for the transformed increment, with components \(\tilde \varDelta _i = ( T \varDelta )_i\).

Lemma 2

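Suppose that (D), (R), and (C) hold, with \(\alpha^+ = -\alpha^- = \alpha\), and \(\beta^+, \beta^- \geq 0\). Then, if \(\| x \| \to \infty\) with \(x \in S_I\),

If, in addition, (D+) and (C+) hold with \(\varepsilon > 0\), then, if \(\| x \| \to \infty\) with \(x \in S_I\),

If \(\beta^\pm < 1\), then, as \(\| x \| \to \infty\) with \(x \in S_B^\pm \),

If \(\beta^\pm > 1\), then, as \(\| x \| \to \infty\) with \(x \in S_B^\pm \),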

Proof

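By linearity,

$$\displaystyle \begin{aligned} \mathbb{E}_x \tilde \varDelta = T \, \mathbb{E}_x \varDelta , \end{aligned} \tag{20}$$

which, by (D) or (D+), is, respectively, \(o( \| x \|^{-1} )\) or \(O ( \| x \|^{-1-\varepsilon} )\) for \(x \in S_I\). Also, since \(T \varSigma T^{\top} = I\), we have

$$\displaystyle \begin{aligned} \mathbb{E}_x ( \tilde \varDelta \tilde \varDelta^{\top} ) - I = T \, \mathbb{E}_x ( \varDelta \varDelta^{\top} ) \, T^{\top} - T \varSigma T^{\top} = T \left( \mathbb{E}_x ( \varDelta \varDelta^{\top} ) - \varSigma \right) T^{\top} . \end{aligned} $$

For \(x \in S_I\), the middle matrix in the last product here has norm \(o(1)\) or \(O( \| x \|^{-\varepsilon} )\), by (C) or (C+). Thus we obtain (14) and (15). For \(x \in S^\pm _B\), the claimed results follow on using (20), (13), and the expressions for \(\mathbb {E}_x \varDelta \) in Lemma 1. □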

3 Lyapunov Functions

For the rest of the paper, we suppose that \(\alpha^+ = -\alpha^- = \alpha\) for some \(|\alpha| < \pi/2\). Our proofs will make use of some carefully chosen functions of the process. Most of these functions are most conveniently expressed in polar coordinates.

We write x = (r, θ) in polar coordinates, with angles measured relative to the positive horizontal axis: r := r(x) := ∥x∥ and θ := θ(x) ∈ (−π, π] is the angle between the ray through 0 and x and the ray in the Cartesian direction (1, 0), with the convention that anticlockwise angles are positive. Then \(x_1 = r \cos \theta \) and \(x_2 = r \sin \theta \).

For \(w \in {\mathbb R}\), \(\theta_0 \in (-\pi/2, \pi/2)\), and \(\gamma \in {\mathbb R}\), define

where \(T\) is the linear transformation described at (13). The functions \(h_w\) were used in analysis of processes in wedges in e.g. [5, 21, 29, 31]. Since the \(h_w\) are harmonic for the Laplacian (see below for a proof), Lemma 2 suggests that \(h_w (T \xi_n)\) will be approximately a martingale in \(S_I\), and the choice of the geometrical parameter \(\theta_0\) gives us the flexibility to try to arrange things so that the level curves of \(h_w\) are incident to the boundary at appropriate angles relative to the reflection vectors. The level curves of \(h_w\) cross the horizontal axis at angle \(\theta_0\): see Fig. 4, and (33) below. In the case \(\beta^\pm < 1\), the interest is near the horizontal axis, and we take \(\theta_0\) to be such that the level curves cut \(\partial {\mathcal D}\) at the reflection angles (asymptotically), so that \(h_w (T \xi_n)\) will be approximately a martingale also in \(S_B\). Then adjusting \(w\) and \(\gamma\) will enable us to obtain a supermartingale with the properties suitable to apply some Foster–Lyapunov theorems. This intuition is solidified in Lemma 4 below, where we show that the parameters \(w\), \(\theta_0\), and \(\gamma\) can be chosen so that \(f^\gamma _w (\xi _n)\) satisfies an appropriate supermartingale condition outside a bounded set. For the case \(\beta^\pm < 1\), since we only need to consider \(\theta \approx 0\), we could replace these harmonic functions in polar coordinates by suitable polynomial approximations in Cartesian components, but since we also want to consider \(\beta^\pm > 1\), it is convenient to use the functions in the form given. When \(\beta^\pm > 1\), the recurrence classification is particularly delicate, so we must use another function (see (57) below), although the functions at (21) will still be used to study passage time moments in that case.

If \(\beta^+, \beta^- < 1\), then \(\theta (x) \to 0\) as \(\| x \| \to \infty\) with \(x \in {\mathcal D}\), which means that, for any \(|\theta_0| < \pi/2\), \(h_w (x) \geq \delta \| x \|^w\) for some \(\delta > 0\) and all \(x \in S\) with \(\| x \|\) sufficiently large. On the other hand, for \(\beta^+, \beta^- > 1\), we will restrict to the case with \(w > 0\) sufficiently small such that \(\cos ( w \theta - \theta _0 )\) is bounded away from zero, uniformly in \(\theta \in [-\pi/2, \pi/2]\), so that we again have the estimate \(h_w (x) \geq \delta \| x \|^w\) for some \(\delta > 0\) and all \(x \in {\mathcal D}\), but where now \({\mathcal D}\) is close to the whole half-plane (see Remark 4). In the calculations that follow, we will often use the fact that \(h_w (x)\) is bounded above and below by a constant times \(\| x \|^w\) as \(\| x \| \to \infty\) with \(x \in {\mathcal D}\).
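The harmonicity of \(h_w\) can be sanity-checked numerically. The sketch below assumes the form \(h_w(x) = r^w \cos (w \theta - \theta_0)\) in polar coordinates, which is the form suggested by the cosine factor and the bound \(h_w(x) \geq \delta \|x\|^w\) discussed above; the five-point finite-difference Laplacian and all function names are illustrative choices.

```python
import math


def h(x1, x2, w, theta0):
    """Assumed form h_w(x) = r^w cos(w*theta - theta0) in polar coordinates."""
    r = math.hypot(x1, x2)
    theta = math.atan2(x2, x1)
    return r ** w * math.cos(w * theta - theta0)


def discrete_laplacian(f, x1, x2, eps=1e-3):
    """Five-point finite-difference approximation to the Laplacian of f."""
    return (f(x1 + eps, x2) + f(x1 - eps, x2)
            + f(x1, x2 + eps) + f(x1, x2 - eps)
            - 4.0 * f(x1, x2)) / eps ** 2
```

Evaluated away from the origin and from the negative horizontal axis (where the angle jumps), the discrete Laplacian of `h` is close to zero, whereas that of \(r^w\) alone is not.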

We use the notation \(D_i := \frac {{\mathrm d}}{{\mathrm d} x_i}\) for differentials, and for \(f : {\mathbb R}^2 \to {\mathbb R}\) write \(Df\) for the vector with components \((Df)_i = D_i f\). We use repeatedly

Define

For β ± > 1, we will also need

and \(\theta_3 := \theta_3 (\varSigma, \alpha) \in (-\pi, \pi)\) for which

where

The geometric interpretation of \(\theta_1\), \(\theta_2\), and \(\theta_3\) is as follows.

- The angle between \((0, \pm 1)\) and \(T(0, \pm 1)\) has magnitude \(\theta_2\). Thus, if \(\beta^\pm < 1\), then \(\theta_2\) is, as \(x_1 \to \infty\), the limiting angle of the transformed inwards pointing normal at \(x_1\) relative to the vertical. On the other hand, if \(\beta^\pm > 1\), then \(\theta_2\) is, as \(x_1 \to \infty\), the limiting angle, relative to the horizontal, of the inwards pointing normal to \(T \partial {\mathcal D}\). See Fig. 3.

- The angle between \((0, -1)\) and \(T (\sin \alpha , -\cos \alpha )\) is \(\theta_1\). Thus, if \(\beta^\pm < 1\), then \(\theta_1\) is, as \(x_1 \to \infty\), the limiting angle between the vertical and the transformed reflection vector. Since the normal in the transformed domain remains asymptotically vertical, \(\theta_1\) is in this case the limiting reflection angle, relative to the normal, after the transformation.

- The angle between \((1, 0)\) and \(T ( \cos \alpha , \sin \alpha )\) is \(\theta_3\). Thus, if \(\beta^\pm > 1\), then \(\theta_3\) is, as \(x_1 \to \infty\), the limiting angle between the horizontal and the transformed reflection vector. Since the transformed normal is, asymptotically, at angle \(\theta_2\) relative to the horizontal, the limiting reflection angle, relative to the normal, after the transformation is in this case \(\theta_3 - \theta_2\).

We need two simple facts.

Lemma 3

We have (i) \(\inf _{\alpha \in [-\frac {\pi }{2},\frac {\pi }{2}]} d (\varSigma ,\alpha ) >0\), and (ii) \(|\theta_3 - \theta_2| < \pi/2\).

Proof

For (i), from (26) we may write

If \(\sigma _1^2 \neq \sigma _2^2\), then, by Lemma 11, the extrema over \(\alpha \in [-\frac {\pi }{2},\frac {\pi }{2}]\) of (27) are

Hence

which is strictly positive since \(\rho ^2 < \sigma _1^2 \sigma _2^2\). If \(\sigma _1^2 = \sigma _2^2\), then \(d^2 \geq \sigma _2^2 - | \rho |\), and \(| \rho | < | \sigma _1 \sigma _2 | = \sigma _2^2\), so d is also strictly positive in that case.

For (ii), we use the fact that \(\cos (\theta _3 - \theta _2 ) = \cos \theta _3 \cos \theta _2 + \sin \theta _3 \sin \theta _2\), where, by (24), \(\sin \theta _2 = \frac {\rho }{\sigma _1\sigma _2}\) and \(\cos \theta _2 = \frac {s}{\sigma _1\sigma _2}\), and (25), to get \(\cos (\theta _3 - \theta _2 ) = \frac {s}{\sigma _1 d} \cos \alpha > 0\). Since \(|\theta_3 - \theta_2| < 3\pi/2\), it follows that \(|\theta_3 - \theta_2| < \pi/2\), as claimed. □
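The identity \(\cos (\theta_3 - \theta_2) = \frac{s}{\sigma_1 d} \cos \alpha\) used in the proof of part (ii) can be checked numerically. The sketch below rests on several assumptions that should be treated as such: the upper-triangular form of \(T\) with rows \((\sigma_2/s, -\rho/(s\sigma_2))\) and \((0, 1/\sigma_2)\), the reading of \(\theta_3\) as the angle of \(T(\cos\alpha, \sin\alpha)\) from the horizontal (as in the geometric interpretation above), and \(d := s \, \| T(\cos\alpha, \sin\alpha) \|\), which is consistent with the bound \(d^2 \geq \sigma_2^2 - |\rho|\) for \(\sigma_1^2 = \sigma_2^2\). The function name is hypothetical.

```python
import math


def check_lemma3(sigma1_sq, sigma2_sq, rho, alpha):
    """Return (cos(theta3 - theta2), s*cos(alpha)/(sigma1*d)) for comparison."""
    s = math.sqrt(sigma1_sq * sigma2_sq - rho * rho)
    sigma1 = math.sqrt(sigma1_sq)
    sigma2 = math.sqrt(sigma2_sq)
    # Image of (cos alpha, sin alpha) under the assumed matrix T.
    v1 = (sigma2 / s) * math.cos(alpha) - (rho / (s * sigma2)) * math.sin(alpha)
    v2 = math.sin(alpha) / sigma2
    theta3 = math.atan2(v2, v1)
    # theta2 has sin = rho/(sigma1*sigma2) and cos = s/(sigma1*sigma2).
    theta2 = math.atan2(rho, s)
    d = s * math.hypot(v1, v2)  # assumed definition of d(Sigma, alpha)
    lhs = math.cos(theta3 - theta2)
    rhs = s * math.cos(alpha) / (sigma1 * d)
    return lhs, rhs
```

For any positive-definite covariance and \(|\alpha| < \pi/2\) the two returned values should agree up to rounding and be strictly positive.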

We estimate the expected increments of our Lyapunov functions in two stages: the main term comes from a Taylor expansion valid when the jump of the walk is not too big compared to its current distance from the origin, while we bound the (smaller) contribution from big jumps using the moments assumption (Mp). For the first stage, let \(B_b (x) := \{ z \in {\mathbb R}^2 : \| x - z \| \leq b \}\) denote the (closed) Euclidean ball centred at \(x\) with radius \(b \geq 0\). We use the multivariable Taylor theorem in the following form. Suppose that \(f : {\mathbb R}^2 \to {\mathbb R}\) is thrice continuously differentiable in \(B_b (x)\). Recall that \(Df(x)\) is the vector function whose components are \(D_i f(x)\). Then, for \(y \in B_b (x)\),

where, for all \(y \in B_b (x)\), \(|R(x, y)| \leq C \| y \|^3 R(x)\) for an absolute constant \(C < \infty\) and

For dealing with the large jumps, we observe the useful fact that if \(p > 2\) is a constant for which (1) holds, then for some constant \(C < \infty\), all \(\delta \in (0, 1)\), and all \(q \in [0, p]\),

$$\displaystyle \begin{aligned} \mathbb{E}_x \left[ \| \varDelta \|^q \, \mathbf{1} \{ \| \varDelta \| \geq \| x \|^\delta \} \right] \leq C \| x \|^{-\delta (p-q)} , \end{aligned} \tag{29}$$

for all \(\| x \|\) sufficiently large. To see (29), write \(\| \varDelta \|^q = \| \varDelta \|^p \| \varDelta \|^{q-p}\) and use the fact that \(\| \varDelta \| \geq \| x \|^\delta\) to bound the second factor.
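The truncation argument behind (29) holds for any distribution, including an empirical one, which makes it easy to illustrate. The sketch below assumes the bound takes the form \(\mathbb{E} [ \|\varDelta\|^q \mathbf{1}\{ \|\varDelta\| \geq t \} ] \leq t^{-(p-q)} \, \mathbb{E} \|\varDelta\|^p\) with \(t = \|x\|^\delta\), and works with a list of jump sizes; the function names are hypothetical.

```python
def truncated_moment(norms, q, threshold):
    """Empirical mean of v^q over jump sizes v with v >= threshold."""
    return sum(v ** q for v in norms if v >= threshold) / len(norms)


def moment_bound(norms, p, q, threshold):
    """Right-hand side: threshold^{-(p-q)} times the empirical p-th moment."""
    p_moment = sum(v ** p for v in norms) / len(norms)
    return threshold ** (-(p - q)) * p_moment
```

For each term with \(v \geq t\) and \(q \leq p\) one has \(v^q = v^p v^{q-p} \leq v^p t^{q-p}\), which is exactly the step described in the text, so the first function never exceeds the second.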

Here is our first main Lyapunov function estimate.

Lemma 4

Suppose that (Mp) , (D) , (R) , and (C) hold, with p > 2, α + = −α − = α for |α| < π∕2, and β +, β −≥ 0. Let \(w, \gamma \in {\mathbb R}\) be such that 2 − p < γw < p. Take θ 0 ∈ (−π∕2, π∕2). Then as ∥x∥→∞ with x ∈ S I,

We separate the boundary behaviour into two cases.

-

(i)

If 0 ≤ β ± < 1, take θ 0 = θ 1 given by (23). Then, as ∥x∥→∞ with \(x \in S^\pm _B\),

$$\displaystyle \begin{aligned} & {} \mathbb{E} [ f^\gamma_w(\xi_{n+1}) - f^\gamma_w( \xi_n) \mid \xi_n = x ] \\ & {} \quad {} = \gamma w \| Tx \|{}^{w-1} \left( h_w (Tx ) \right)^{\gamma-1} \frac{a^\pm \mu^\pm (x) \sigma_2 \cos \theta_1 }{s \cos \alpha} \left(\beta^\pm - (1-w) \beta_{\mathrm{c}} \right) x_1^{\beta^\pm-1} \\ & {} \qquad {} + o( \| x\|{}^{w \gamma + \beta^\pm-2} ) , \end{aligned} $$(31)where β c is given by (5).

-

(ii)

If β ± > 1, suppose that w ∈ (0, 1∕2) and θ 0 = θ 0(Σ, α, w) = θ 3 − (1 − w)θ 2 , where θ 2 and θ 3 are given by (24) and (25), such that \(\sup _{\theta \in [-\frac {\pi }{2},\frac {\pi }{2}]} |w \theta - \theta _0 | < \pi /2\) . Then, with d = d(Σ, α) as defined at (26), as ∥x∥→∞ with \(x \in S^\pm _B\),

$$\displaystyle \begin{aligned} & {} \mathbb{E} [ f^\gamma_w(\xi_{n+1}) - f^\gamma_w( \xi_n) \mid \xi_n = x ] \\ & {} \quad {}= \gamma w \| Tx \|{}^{w-1} \left( h_w (Tx ) \right)^{\gamma-1} \frac{d \mu^\pm (x)}{s} \left( \cos ( (1-w) (\pi/2) ) + o(1) \right) .\end{aligned} $$(32)

Remark 4

By Lemma 3(ii), we can choose w > 0 small enough so that \(|\theta_3 - (1-w) \theta_2| < \pi/2\). Hence, if \(\theta_0 = \theta_3 - (1-w) \theta_2\), we can always choose w > 0 small enough so that \(\sup _{\theta \in [-\frac {\pi }{2},\frac {\pi }{2}]} |w \theta - \theta _0 | < \pi /2\), as required for the \(\beta^\pm > 1\) part of Lemma 4.

Proof (of Lemma 4 )

Differentiating (21) and using (22) we see that

Moreover,

verifying that \(h_w\) is harmonic. Also, for any i, j, k, \(|D_i D_j D_k h_w(x)| = O(r^{w-3})\). Writing \(h_w^\gamma (x) := ( h_w (x) )^\gamma \), we also have that \(D_i h_w^\gamma (x) = \gamma h_w^{\gamma -1} (x) D_i h_w (x)\), that

and \(| D_i D_j D_k h_w^\gamma (x) | = O ( r^{\gamma w -3} )\). We apply Taylor’s formula (28) in the ball \(B_{r/2}(x)\) together with the harmonic property of \(h_w\), to obtain, for \(y \in B_{r/2}(x)\),

where \(|R(x,y)| \leq C \| y \|^3 \| x \|^{\gamma w-3}\), using the fact that \(h_w(x)\) is bounded above and below by a constant times \(\| x \|^w\).
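The harmonicity of \(h_w\) used in this expansion can also be checked directly in polar coordinates. As a sketch, assume that (21) defines \(h_w (x) = h_w (r, \theta ) = r^w \cos ( w \theta - \theta_0 )\); this form is consistent with the cosine factor in (32) and with the condition \(|w \theta - \theta_0| < \pi/2\) in Lemma 4, but it is an assumption here, since (21) is not restated in this section. Then, for r > 0,

$$\displaystyle \begin{aligned} D_1^2 h_w + D_2^2 h_w & = \frac{\partial^2 h_w}{\partial r^2} + \frac{1}{r} \frac{\partial h_w}{\partial r} + \frac{1}{r^2} \frac{\partial^2 h_w}{\partial \theta^2} \\ & = \bigl( w (w-1) + w - w^2 \bigr) r^{w-2} \cos ( w \theta - \theta_0 ) = 0 . \end{aligned} $$

Under the same assumption, each further derivative in \(x_1, x_2\) lowers the power of r by one, which matches the stated bound \(O(r^{w-3})\) on third derivatives.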

Let \(E_x := \{ \| \varDelta \| < \| x \|^\delta \}\), where we fix a constant δ satisfying

such a choice of δ is possible since p > 2 and 2 − p < γw < p. If \(\xi_0 = x\) and \(E_x\) occurs, then \(Tx + \tilde \varDelta \in B_{r/2} (Tx)\) for all ∥x∥ sufficiently large. Thus, conditioning on \(\xi_0 = x\), on the event \(E_x\) we may use the expansion in (34) for \(h^\gamma _w (Tx + \tilde \varDelta )\), which, after taking expectations, yields

Let p′ = p ∧ 3, so that p′∈ (2, 3] and (1) also holds with p′ in place of p. Then, writing \(\| \tilde \varDelta \|{ }^3 = \| \tilde \varDelta \|{ }^{p'} \| \tilde \varDelta \|{ }^{3-p'}\),

since (3 − p′)δ < 1. If \(x \in S_I\), then (14) shows \(| \mathbb {E}_x \langle D h_w(Tx), \tilde \varDelta \rangle | = o ( \| x \|{ }^{w-2} )\), so

Note that, by (35), \(\delta > \frac {2}{p} > \frac {1}{p-1}\). Then, using the q = 1 case of (29), we get

A similar argument using the q = 2 case of (29) gives

If x ∈ S I, then (14) shows that \(\mathbb {E}_x (\tilde \varDelta _1^2 - \tilde \varDelta _2^2)\) and \(\mathbb {E}_x ( \tilde \varDelta _1 \tilde \varDelta _2 )\) are both o(1), and hence, by the q = 2 case of (29) once more, we see that \(\mathbb {E}_x [ | \tilde \varDelta _1^2 - \tilde \varDelta _2^2| {\mathbf 1}_ {E_x} ]\) and \(\mathbb {E}_x [| \tilde \varDelta _1 \tilde \varDelta _2 | {\mathbf 1}_ {E_x} ]\) are both o(1). Moreover, (14) also shows that

Putting all these estimates into (36) we get, for \(x \in S_I\),

On the other hand, given \(\xi_0 = x\), if γw ≥ 0, by the triangle inequality,

It follows from (39) that \(| f_w^\gamma (\xi _1) -f_w^\gamma (x) | {\mathbf 1}_ {E_x^{\mathrm {c}} } \leq C \| \varDelta \|{ }^{\gamma w /\delta }\), for some constant C < ∞ and all ∥x∥ sufficiently large. Hence

Since \(\delta > \frac {\gamma w}{p}\), by (35), we may apply (29) with \(q = \frac {\gamma w}{\delta }\) to get

since \(\delta > \frac {2}{p}\). If wγ < 0, then we use the fact that \(f_w^\gamma \) is uniformly bounded to get

by the q = 0 case of (29). Thus (40) holds in this case too, since γw > 2 − δp by choice of δ at (35). Then (30) follows from combining (38) and (40) with (33).

Next suppose that \(x \in S_B\). Truncating (34), we see that for all \(y \in B_{r/2}(x)\),

where now \(|R(x,y)| \leq C \| y \|^2 \| x \|^{\gamma w-2}\). It follows from (41) and (Mp) that

By the q = 1 case of (29), since \(\delta > \frac {1}{p-1}\), we see that \(\mathbb {E}_x [ \langle D h_w(Tx), \tilde \varDelta \rangle {\mathbf 1}_ {E^{\mathrm {c}}_x} ] = o ( \| x \|{ }^{w-2} )\), while the estimate (40) still applies, so that

From (33) we have

First suppose that \(\beta^\pm < 1\). Then, by (13), for \(x \in S_B^\pm \), \(x_2 = \pm a^\pm x_1^{\beta ^\pm } + O(1)\) and

Since \(\arcsin z = z + O (z^3)\) as z → 0, it follows that

Hence

Then (43) with (16) and (17) shows that

where, for |θ 0| < π∕2, \(A_1 = \sigma ^2_2 \tan \alpha + \rho - s \tan \theta _0\), and

Now take \(\theta_0 = \theta_1\) as given by (23), so that \(s \tan \theta _0 = \sigma _2^2 \tan \alpha + \rho \). Then \(A_1 = 0\), eliminating the leading order term in (44). Moreover, with this choice of \(\theta_0\) we get, after some further cancellation and simplification, that

with β c as given by (5). Thus with (44) and (42) we verify (31).

Finally suppose that \(\beta^\pm > 1\), and restrict to the case w ∈ (0, 1∕2). Let \(\theta_2 \in (-\pi/2, \pi/2)\) be as given by (24). Then if \(x = (0, x_2)\), we have \(\theta (Tx) = \theta _2 - \frac {\pi }{2}\) if \(x_2 < 0\) and \(\theta (Tx) = \theta _2 + \frac {\pi }{2}\) if \(x_2 > 0\) (see Fig. 3). It follows from (13) that

as ∥x∥→∞ (and x 1 →∞). Now (43) with (18) and (19) shows that

Set \(\phi := (1-w) \frac {\pi }{2}\). Choose \(\theta_0 = \theta_3 - (1-w) \theta_2\), where \(\theta_3 \in (-\pi, \pi)\) satisfies (25). Then we have that, for \(x \in S_B^\pm \),

Similarly, for \(x \in S_B^\pm \),

Using (46) and (47) in (45), we obtain

where

by (25), and, similarly,

Then with (42) we obtain (32). □

In the case where β +, β − < 1 with β + ≠ β −, we will in some circumstances need to modify the function \(f_w^\gamma \) so that it can be made insensitive to the behaviour near the boundary with the smaller of β +, β −. To this end, define for \(w, \gamma , \nu , \lambda \in {\mathbb R}\),

We state a result for the case β − < β +; an analogous result holds if β + < β −.

Lemma 5

Suppose that (Mp), (D), (R), and (C) hold, with p > 2, \(\alpha^+ = -\alpha^- = \alpha\) for |α| < π∕2, and \(0 \leq \beta^- < \beta^+ < 1\). Let \(w, \gamma \in {\mathbb R}\) be such that 2 − p < γw < p. Take \(\theta_0 = \theta_1 \in (-\pi/2, \pi/2)\) given by (23). Suppose that

Then as ∥x∥→∞ with x ∈ S I,

As ∥x∥→∞ with \(x \in S^+_B\),

As ∥x∥→∞ with \(x \in S^-_B\),

Proof

Suppose that \(0 \leq \beta^- < \beta^+ < 1\). As in the proof of Lemma 4, let \(E_x = \{ \| \varDelta \| < \| x \|^\delta \}\), where δ ∈ (0, 1) satisfies (35). Set \(v_\nu (x) := x_2 \| Tx \|^{2\nu}\). Then, using Taylor’s formula in one variable, for \(x, y \in {\mathbb R}^2\) with \(y \in B_{r/2}(x)\),

where \(|R(x,y)| \leq C \| y \|^2 \| x \|^{2\nu-2}\). Thus, for x ∈ S with \(y \in B_{r/2}(x)\) and x + y ∈ S,

where now \(| R(x,y) | \leq C \| y \|{ }^2 \| x \|{ }^{2 \nu + \beta ^+ -2}\), using the fact that both \(|x_2|\) and \(|y_2|\) are \(O ( \| x \|{ }^{\beta ^+} )\). Taking \(x = \xi_0\) and y = Δ so \(Ty = \tilde \varDelta \), we obtain

Suppose that x ∈ S I. Similarly to (37), we have \(\mathbb {E}_x [ \langle T x, \tilde \varDelta \rangle {\mathbf 1}_ { E_x } ] = o(1)\), and, by similar arguments using (29), \(\mathbb {E} [ \varDelta _2 {\mathbf 1}_ { E_x } ] = o ( \| x \|{ }^{-1})\), \(\mathbb {E}_x | \varDelta _2 \langle T x, \tilde \varDelta \rangle {\mathbf 1}_ { E^{\mathrm {c}}_x } | = o ( \| x \| )\), and \(\mathbb {E}_x | R(x, \varDelta ) {\mathbf 1}_ { E_x } | = o ( \|x \|{ }^{2\nu -1} )\), since β + < 1. Also, by (13),

Here, by (14), \(\mathbb {E}_x ( \tilde \varDelta _1 \tilde \varDelta _2 ) = o (1)\) and \(\mathbb {E}_x ( \tilde \varDelta _2^2 ) = O(1)\), while \( \sigma _2 (Tx)_2 = x_2 = O ( \| x \|{ }^{\beta ^+})\). Thus \(\mathbb {E}_x ( \varDelta _2 \langle T x, \tilde \varDelta \rangle ) = o ( \| x \| )\). Hence also

Thus from (53) we get that, for x ∈ S I,

On the other hand, since \(| v_\nu (x+y ) - v_\nu (x) | \leq C ( \| x \| + \| y \| )^{2\nu + \beta ^+}\) we get

Here 2ν + β + < 2ν + 1 < γw < δp, by choice of ν and (35), so we may apply (29) with q = (2ν + β +)∕δ to get

since δp > 2, by (35). Combining (54), (55) and (30), we obtain (49), provided that 2ν − 1 < γw − 2, which is the case since 2ν < γw + β + − 2 and β + < 1.

Now suppose that \(x \in S_B^\pm \). We truncate (52) to see that, for x ∈ S with y ∈ B r∕2(x) and x + y ∈ S,

where now \(| R(x,y) | \leq C \| y \| \| x \|{ }^{2\nu + \beta ^\pm -1}\), using the fact that for \(x \in S_B^\pm \), \(|x_2| = O ( \| x \|{ }^{\beta ^\pm } )\). It follows that, for \(x \in S_B^\pm \),

By (29) and (35) we have that \(\mathbb {E} [ |\varDelta _2| {\mathbf 1}_ {E^{\mathrm {c}}_x } ] = O( \| x \|{ }^{-\delta (p-1)} ) = o( \| x\|{ }^{-1} )\), while if \(x \in S_B^\pm \), then, by (10), \(\mathbb {E}_x \varDelta _2 = \mp \mu ^\pm (x) \cos \alpha + O( \|x\|{ }^{\beta ^\pm -1})\). On the other hand, the estimate (55) still applies, so we get, for \(x \in S_B^\pm \),

If we choose ν such that \(2\nu < \gamma w + \beta^+ - 2\), then we combine (56) and (31) to get (50), since the term from (31) dominates. If we choose ν such that \(2\nu > \gamma w + \beta^- - 2\), then the term from (56) dominates that from (31), and we get (51). □

In the critically recurrent cases, where \(\max ( \beta ^+,\beta ^- ) = \beta _{\mathrm {c}} \in (0,1)\) or β +, β − > 1, in which no passage-time moments exist, the functions of polynomial growth based on h w as defined at (21) are not sufficient to prove recurrence. Instead we need functions which grow more slowly. For \(\eta \in {\mathbb R}\) let

where we understand \(\log y\) to mean \(\max (1,\log y)\). The function h is again harmonic (see below) and was used in the context of reflecting Brownian motion in a wedge in [31]. Set

Lemma 6

Suppose that (Mp) , (D+) , (R) , and (C+) hold, with p > 2, ε > 0, α + = −α − = α for |α| < π∕2, and β +, β −≥ 0. For any \(\eta \in {\mathbb R}\) , as ∥x∥→∞ with x ∈ S I,

If 0 ≤ β ± < 1, take η = η 0 as defined at (58). Then, as ∥x∥→∞ with \(x \in S^\pm _B\),

If β ± > 1, take η = η 1 as defined at (58). Then as ∥x∥→∞ with \(x \in S^\pm _B\),

Proof

Given \(\eta \in {\mathbb R}\), for \(r_0 = r_0 (\eta ) = \exp ( {\mathrm {e}} + | \eta | \pi )\), we have from (58) that both h and \(\log h\) are infinitely differentiable in the domain \({\mathcal R}_{r_0} := \{ x \in {\mathbb R}^2 : x_1 > 0, \, r (x) > r_0 \}\). Differentiating (58) and using (22) we obtain, for \(x \in {\mathcal R}_{r_0}\),

We verify that h is harmonic in \({\mathcal R}_{r_0}\), since

Also, for any i, j, k, \(|D_i D_j D_k h(x)| = O(r^{-3})\). Moreover, \(D_i \log h(x) = (h(x))^{-1} D_i h(x)\),

and \(| D_i D_j D_k \log h(x) | = O (r^{-3} (\log r )^{-1})\). Recall that Dh(x) is the vector function whose components are \(D_i h(x)\). Then Taylor’s formula (28) together with the harmonic property of h shows that for \(x \in {\mathcal R}_{2r_0}\) and \(y \in B_{r/2}(x)\),

where \(| R(x,y) | \leq C \| y \|{ }^3 \| x \|{ }^{-3} (\log \| x \|)^{-1}\) for some constant C < ∞, all \(y \in B_{r/2}(x)\), and all ∥x∥ sufficiently large. As in the proof of Lemma 4, let \(E_x = \{ \| \varDelta \| < \| x \|^\delta \}\) for \(\delta \in (\frac {2}{p},1)\). Then applying the expansion in (63) to \(\log h(Tx + \tilde \varDelta )\), conditioning on \(\xi_0 = x\), and taking expectations, we obtain, for ∥x∥ sufficiently large,

Let p′∈ (2, 3] be such that (1) holds. Then

for some ε′ > 0.

Suppose that x ∈ S I. By (15), \(\mathbb {E}_x ( \tilde \varDelta _1 \tilde \varDelta _2 ) = O ( \| x \|{ }^{-\varepsilon } )\) and, by (29), \(\mathbb {E}_x | \tilde \varDelta _1 \tilde \varDelta _2{\mathbf 1}_ {E_x^{\mathrm {c}}} | \leq C \mathbb {E} [ \| \varDelta \|{ }^2 {\mathbf 1}_ {E_x^{\mathrm {c}}} ] = O ( \| x \|{ }^{-\varepsilon '} )\), for some ε′ > 0. Thus \(\mathbb {E}_x ( \tilde \varDelta _1 \tilde \varDelta _2{\mathbf 1}_ {E_x} ) = O ( \| x \|{ }^{-\varepsilon '} )\). A similar argument gives the same bound for \(\mathbb {E}_x [ ( \tilde \varDelta _1^2 - \tilde \varDelta _2^2) {\mathbf 1}_ { E_x} ]\). Also, from (15) and (62), \(\mathbb {E}_x ( \langle D h(Tx), \tilde \varDelta \rangle ) = O ( \| x \|{ }^{-2-\varepsilon } )\) and, by (29), \(\mathbb {E}_x | \langle D h(Tx), \tilde \varDelta \rangle {\mathbf 1}_ {E_x^{\mathrm {c}}} | = O ( \| x \|{ }^{-2-\varepsilon '} )\) for some ε′ > 0. Hence \(\mathbb {E}_x [ \langle D h(Tx), \tilde \varDelta \rangle {\mathbf 1}_ { E_x} ] = O ( \| x \|{ }^{-2-\varepsilon '} )\). Finally, by (15) and (62),

while, by (29), \( \mathbb {E}_x | \langle D h(Tx), \tilde \varDelta \rangle ^2 {\mathbf 1}_ {E_x^{\mathrm {c}} } | = O ( \| x \|{ }^{-2-\varepsilon '} )\). Putting all these estimates into (64) gives

for some ε′ > 0. On the other hand, for all ∥x∥ sufficiently large, \(| \ell (x+y) - \ell (x) | \leq C \log \log \| x \| + C \log \log \| y \|\). For any p > 2 and \(\delta \in (\frac {2}{p}, 1)\), we may (and do) choose q > 0 sufficiently small such that δ(p − q) > 2, and then, by (29),

for some ε′ > 0. Thus we conclude that

for some ε′ > 0. Then (59) follows from (62).

Next suppose that x ∈ S B. Truncating (63), we have for \(x \in {\mathcal R}_{2r_0}\) and y ∈ B r∕2(x),

where now \(|R(x,y)| \leq C \| y \|{ }^2 \| x \|{ }^{-2} (\log \| x \|)^{-1}\) for ∥x∥ sufficiently large. Hence

Then by (65) and the fact that \(\mathbb {E}_x | \langle D h(Tx), \tilde \varDelta \rangle {\mathbf 1}_ {E_x^{\mathrm {c}}} | = O ( \| x \|{ }^{-2-\varepsilon '} )\) (as above),

From (62) we have

using (13). If β ± < 1 and \(x \in S_B^\pm \), we have from (16) and (17) that

Taking η = η 0 as given by (58), the ± x 1 term vanishes; after simplification, we get

Using (67) in (66) gives (60).

On the other hand, if β ± > 1 and \(x \in S_B^\pm \), we have from (18) and (19) that

Taking η = η 1 as given by (58), the \(\pm x_1^{\beta ^\pm }\) term vanishes, and we get

as ∥x∥→∞ (and x 1 →∞). Then using the last display in (66) gives (61). □

The function ℓ is not by itself enough to prove recurrence in the critical cases, because the estimates in Lemma 6 do not guarantee that ℓ satisfies a supermartingale condition for all parameter values of interest. To proceed, we modify the function slightly to improve its properties near the boundary. In the case where \(\max (\beta ^+,\beta ^-) = \beta _{\mathrm {c}} \in (0,1)\), the following function will be used to prove recurrence,

where the parameter η in ℓ is chosen as η = η 0 as given by (58).

Lemma 7

Suppose that (Mp) , (D+) , (R) , and (C+) hold, with p > 2, ε > 0, α + = −α − = α for |α| < π∕2, and β +, β −∈ (0, 1) with β +, β −≤ β c . Let η = η 0 , and suppose

Then as ∥x∥→∞ with x ∈ S I,

Moreover, as ∥x∥→∞ with \(x \in S_B^\pm \),

Proof

Set \(u_\gamma (x) := u_\gamma (r, \theta ) := \theta^2 (1+r)^{-\gamma}\), and note that, by (22), for \(x_1 > 0\),

and \(|D_i D_j u_\gamma (x)| = O(r^{-2-\gamma})\) for any i, j. So, by Taylor’s formula (28), for all \(y \in B_{r/2}(x)\),

where \(|R(x,y)| \leq C \| y \|^2 \| x \|^{-2-\gamma}\) for all ∥x∥ sufficiently large. Once more define the event \(E_x = \{ \| \varDelta \| < \| x \|^\delta \}\), where now \(\delta \in ( \frac {2+\gamma }{p},1)\). Then

Moreover, \(\mathbb {E}_x | \langle D u_\gamma (x), \varDelta \rangle {\mathbf 1}_ {E^{\mathrm {c}}_x } | \leq C \| x \|{ }^{-1-\gamma } \mathbb {E}_x ( \| \varDelta \|{\mathbf 1}_ {E^{\mathrm {c}}_x } ) = O ( \| x \|{ }^{-2-\gamma })\), by (29) and the fact that \(\delta > \frac {2}{p} > \frac {1}{p-1}\). Also, since u γ is uniformly bounded,

by (29). Since pδ > 2 + γ, it follows that

For x ∈ S I, it follows from (70) and (D+) that \(\mathbb {E}_x [ u_\gamma (\xi _1) - u_\gamma (\xi _0) ] = O (\|x\|{ }^{-2-\gamma })\), and combining this with (59) we get (68).

Let \(\beta = \max (\beta ^+, \beta ^- ) < 1\). For x ∈ S, \(|\theta (x)| = O(r^{\beta -1})\) as ∥x∥→∞, so (70) gives

If \(x \in S_B^\pm \) then \(\theta = \pm a^\pm (1+o(1)) x_1^{\beta ^\pm -1}\) and, by (10), \(\mathbb {E}_x \varDelta _2 = \mp \mu ^\pm (x) \cos \alpha +o(1)\), so

For η = η 0 and β +, β −≤ β c, we have from (60) that

Combining this with (71), we obtain (69), provided that we choose γ such that \(\beta^\pm - 2 - \gamma > 2 \beta^\pm - 3\) and \(\beta^\pm - 2 - \gamma > -2\), that is, \(\gamma < 1 - \beta^\pm\) and \(\gamma < \beta^\pm\). □
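The two constraints on γ at the end of this proof are elementary rearrangements, spelled out here for a fixed choice of sign:

$$\displaystyle \begin{aligned} \beta^\pm - 2 - \gamma > 2 \beta^\pm - 3 & \iff \gamma < 1 - \beta^\pm , \\ \beta^\pm - 2 - \gamma > -2 & \iff \gamma < \beta^\pm . \end{aligned} $$

Since \(\beta^\pm \in (0,1)\), both upper bounds are strictly positive, so admissible positive values of γ exist. For instance, with the purely illustrative values \(\beta^+ = \beta^- = 0.4\) (not taken from the source), any γ ∈ (0, 0.4) satisfies both constraints.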

In the case where β +, β − > 1, we will use the function

where the parameter η in ℓ is now chosen as η = η 1 as defined at (58). A similar function was used in [6].

Lemma 8

Suppose that (Mp), (D+), (R), and (C+) hold, with p > 2, ε > 0, \(\alpha^+ = -\alpha^- = \alpha\) for |α| < π∕2, and \(\beta^+, \beta^- > 1\). Let \(\eta = \eta_1\), and suppose that

Then as ∥x∥→∞ with x ∈ S I,

Moreover, as ∥x∥→∞ with \(x \in S^\pm _B\),

Proof

Let \(q_\gamma (x) := x_1 (1 + \| x \|^2)^{-\gamma}\). Then

and \(|D_i D_j q_\gamma (x)| = O( \| x \|^{-1-2\gamma} )\) for any i, j. Thus by Taylor’s formula, for \(y \in B_{r/2}(x)\),

where \(|R(x,y)| \leq C \| y \|^2 \| x \|^{-1-2\gamma}\) for ∥x∥ sufficiently large. Once more let \(E_x = \{ \| \varDelta \| < \| x \|^\delta \}\), where now we take \(\delta \in (\frac {1+2\gamma }{p}, 1)\). Then

Moreover, we get from (29) that \(\mathbb {E}_x | \langle D q_\gamma (x), \varDelta \rangle {\mathbf 1}_ { E^{\mathrm {c}}_x} | = O ( \| x \|{ }^{-2\gamma -\delta (p-1) })\), where δ(p − 1) > 2γ > 1, and, since \(q_\gamma\) is uniformly bounded for γ > 1∕2,

where pδ > 1 + 2γ. Thus

If x ∈ S I, then (D+) gives \(\mathbb {E}_x \langle D q_\gamma (x), \varDelta \rangle = O ( \| x\|{ }^{-1-2\gamma })\) and with (59) we get (72), since γ > 1∕2. On the other hand, suppose that \(x \in S_B^\pm \) and β ± > 1. Then \(\| x\| \geq c x_1^{\beta ^\pm }\) for some c > 0, so \(x_1 = O ( \| x \|{ }^{1/\beta ^\pm } )\). So, by (74),

Moreover, by (11), \(\mathbb {E}_x \varDelta _1 = \mu ^\pm (x) \cos \alpha + o(1)\). Combined with (61), this yields (73), provided that 2γ ≤ 2 − (1∕β ±), again using the fact that \(x_1 = O ( \| x \|{ }^{1/\beta ^\pm } )\). This completes the proof. □

4 Proofs of Main Results

We obtain our recurrence classification and quantification of passage-times via Foster–Lyapunov criteria (cf. [14]). As we do not assume any irreducibility, the most convenient forms of the criteria are those for discrete-time adapted processes presented in [26]. However, the recurrence criteria in [26, §3.5] are formulated for processes on \({\mathbb R}_+\) and, strictly speaking, do not apply directly here. We therefore present appropriate generalizations, which may also be useful elsewhere. The following recurrence result is based on Theorem 3.5.8 of [26].

Lemma 9

Let \(X_0, X_1, \ldots\) be a stochastic process on \({\mathbb R}^d\) adapted to a filtration \({\mathcal F}_0, {\mathcal F}_1, \ldots \). Let \(f : {\mathbb R}^d \to {\mathbb R}_+\) be such that f(x) →∞ as ∥x∥→∞, and \(\mathbb {E} f(X_0) < \infty \). Suppose that there exist \(r_0 \in {\mathbb R}_+\) and C < ∞ for which, for all \(n \in {\mathbb Z}_+\),

Then if \({\mathbb P} ( \limsup _{n \to \infty } \| X_n \| = \infty ) =1\) , we have \({\mathbb P} ( \liminf _{n \to \infty } \| X_n \| \leq r_0 ) = 1\).

Proof

By hypothesis, \(\mathbb {E} f(X_n) < \infty \) for all n. Fix \(n \in {\mathbb Z}_+\) and let \(\lambda _{n} := \min \{ m \geq n : \| X_m \| \leq r_0 \}\) and, for some \(r > r_0\), set \(\sigma _n := \min \{ m \geq n : \| X_m \| \geq r \}\). Since \(\limsup_{n \to \infty} \| X_n \| = \infty\) a.s., we have that \(\sigma_n < \infty\), a.s. Then \(f(X_{m \wedge \lambda _n \wedge \sigma _n } )\), m ≥ n, is a non-negative supermartingale with \(\lim _{m \to \infty } f(X_{m \wedge \lambda _n \wedge \sigma _n } ) = f(X_{\lambda _n \wedge \sigma _n } )\), a.s. By Fatou’s lemma and the fact that f is non-negative,

So

Since \(r > r_0\) was arbitrary, and \(\inf_{y : \| y \| \geq r} f(y) \to \infty\) as r →∞, it follows that, for fixed \(n \in {\mathbb Z}_+\), \({\mathbb P} ( \inf _{m \geq n} \| X_m \| \leq r_0 ) = 1\). Since this holds for all \(n \in {\mathbb Z}_+\), the result follows. □
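The two displays in this proof follow a standard pattern. The sketch below is a reconstruction under the hypotheses of Lemma 9 as described in the proof (in particular, that \(f(X_{m \wedge \lambda_n \wedge \sigma_n})\), m ≥ n, is a non-negative supermartingale); it is not a quotation of the original displays. Fatou’s lemma and the non-negativity of f give

$$\displaystyle \begin{aligned} \mathbb{E} f(X_n) \geq \mathbb{E} f(X_{\lambda_n \wedge \sigma_n}) \geq {\mathbb P} ( \sigma_n < \lambda_n ) \inf_{y : \| y \| \geq r} f(y) , \end{aligned} $$

and therefore, for r large enough that the infimum is positive,

$$\displaystyle \begin{aligned} {\mathbb P} \Bigl( \inf_{m \geq n} \| X_m \| \leq r_0 \Bigr) \geq {\mathbb P} ( \lambda_n < \sigma_n ) \geq 1 - \frac{\mathbb{E} f(X_n)}{\inf_{y : \| y \| \geq r} f(y)} . \end{aligned} $$

The second line uses that \(\sigma_n < \infty\) a.s. and that \(\lambda_n \neq \sigma_n\) whenever both are finite, because \(r > r_0\).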

The corresponding transience result is based on Theorem 3.5.6 of [26].

Lemma 10

Let \(X_0, X_1, \ldots\) be a stochastic process on \({\mathbb R}^d\) adapted to a filtration \({\mathcal F}_0, {\mathcal F}_1, \ldots \). Let \(f : {\mathbb R}^d \to {\mathbb R}_+\) be such that \(\sup_x f(x) < \infty\), f(x) → 0 as ∥x∥→∞, and \(\inf_{x : \| x \| \leq r} f(x) > 0\) for all \(r \in {\mathbb R}_+\). Suppose that there exists \(r_0 \in {\mathbb R}_+\) for which, for all \(n \in {\mathbb Z}_+\),

Then if \({\mathbb P} ( \limsup _{n \to \infty } \| X_n \| = \infty ) =1\) , we have that \({\mathbb P} ( \lim _{n \to \infty } \| X_n \| = \infty ) = 1\).

Proof

Since f is bounded, \(\mathbb {E} f(X_n) < \infty \) for all n. Fix \(n \in {\mathbb Z}_+\) and \(r_1 \geq r_0\). For \(r \in {\mathbb Z}_+\) let \(\sigma _r := \min \{ n \in {\mathbb Z}_+ : \| X_n \| \geq r \}\). Since \({\mathbb P} ( \limsup _{n \to \infty } \| X_n \| = \infty ) =1\), we have \(\sigma_r < \infty\), a.s. Let \(\lambda _{r} := \min \{ n \geq \sigma _r : \| X_n \| \leq r_1 \}\). Then \(f(X_{n \wedge \lambda _r} )\), \(n \geq \sigma_r\), is a non-negative supermartingale, which converges, on \(\{ \lambda_r < \infty \}\), to \(f(X_{\lambda _r} )\). By optional stopping (e.g. Theorem 2.3.11 of [26]), a.s.,

So

which tends to 0 as r →∞, by our hypotheses on f. Thus,

Since r 1 ≥ r 0 was arbitrary, we get the result. □
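The displays in this proof can be sketched as follows. This is a reconstruction of the usual optional-stopping argument under the hypotheses of Lemma 10, not a quotation of the original displays. On \(\{ \lambda_r < \infty \}\) we have \(\| X_{\lambda_r} \| \leq r_1\), while \(\| X_{\sigma_r} \| \geq r\), so, a.s.,

$$\displaystyle \begin{aligned} {\mathbb P} ( \lambda_r < \infty \mid {\mathcal F}_{\sigma_r} ) \inf_{y : \| y \| \leq r_1} f(y) & \leq \mathbb{E} \bigl[ f(X_{\lambda_r}) {\mathbf 1} \{ \lambda_r < \infty \} \mid {\mathcal F}_{\sigma_r} \bigr] \\ & \leq f(X_{\sigma_r}) \leq \sup_{y : \| y \| \geq r} f(y) . \end{aligned} $$

Taking expectations and dividing by the (positive) infimum,

$$\displaystyle \begin{aligned} {\mathbb P} ( \lambda_r < \infty ) \leq \frac{\sup_{y : \| y \| \geq r} f(y)}{\inf_{y : \| y \| \leq r_1} f(y)} \to 0 \quad \text{as } r \to \infty . \end{aligned} $$

Since \(\{ \liminf_{n \to \infty} \| X_n \| < r_1 \} \subseteq \{ \lambda_r < \infty \}\) for every r, this forces \({\mathbb P} ( \liminf_{n \to \infty} \| X_n \| < r_1 ) = 0\).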

Now we can complete the proof of Theorem 3, which includes Theorem 1 as the special case α = 0.

Proof (of Theorem 3 )

Let \(\beta = \max (\beta ^+,\beta ^-)\), and recall the definition of \(\beta_{\mathrm{c}}\) from (5) and that of \(s_0\) from (7). Suppose first that \(0 \leq \beta < 1 \wedge \beta_{\mathrm{c}}\). Then \(s_0 > 0\) and we may (and do) choose \(w \in (0, 2s_0)\). Also, take γ ∈ (0, 1); note 0 < γw < 1. Consider the function \(f_w^\gamma \) with \(\theta_0 = \theta_1\) given by (23). Then from (30), we see that there exist c > 0 and \(r_0 < \infty\) such that, for all \(x \in S_I\),

By choice of w, we have β − (1 − w)β c < 0, so (31) shows that, for all \(x \in S_B^\pm \),

for some c > 0 and all ∥x∥ sufficiently large. In particular, this means that (75) holds throughout S. On the other hand, it follows from (39) and (Mp) that there is a constant C < ∞ such that

Since w, γ > 0, we have that \( f_w^\gamma (x) \to \infty \) as ∥x∥→∞. Then by Lemma 9 with the conditions (75) and (76) and assumption (N), we establish recurrence.

Next suppose that \(\beta_{\mathrm{c}} < \beta < 1\). If \(\beta^+ = \beta^- = \beta\), we use the function \(f_w^\gamma \), again with \(\theta_0 = \theta_1\) given by (23). We may (and do) choose γ ∈ (0, 1) and w < 0 with \(w > -2|s_0|\) and γw > w > 2 − p. By choice of w, we have \(\beta - (1-w) \beta_{\mathrm{c}} > 0\). We have from (30) and (31) that (75) holds in this case also, but now \(f_w^\gamma (x) \to 0\) as ∥x∥→∞, since γw < 0. Lemma 10 then gives transience when \(\beta^+ = \beta^-\).
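The sign of \(\beta - (1-w) \beta_{\mathrm{c}}\) in the two cases treated so far comes from a one-line identity. The sketch assumes that (7) defines \(s_0 = \frac{1}{2} ( 1 - \beta / \beta_{\mathrm{c}} )\) with \(\beta_{\mathrm{c}} > 0\); this is what the stated ranges of w suggest, but (7) is not restated in this section.

$$\displaystyle \begin{aligned} \beta - (1-w) \beta_{\mathrm{c}} = w \beta_{\mathrm{c}} - ( \beta_{\mathrm{c}} - \beta ) = ( w - 2 s_0 ) \beta_{\mathrm{c}} . \end{aligned} $$

Hence the expression is negative for \(0 < w < 2 s_0\) (the case \(\beta < \beta_{\mathrm{c}}\), where \(s_0 > 0\)) and positive for \(-2 |s_0| = 2 s_0 < w < 0\) (the case \(\beta > \beta_{\mathrm{c}}\), where \(s_0 < 0\)).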

Suppose now that β c < β < 1 with β + ≠ β −. Without loss of generality, suppose that β = β + > β −. We now use the function \(F_w^{\gamma ,\nu }\) defined at (48), where, as above, we take γ ∈ (0, 1) and w ∈ (−2|s 0|, 0), and we choose the constants λ, ν with λ < 0 and γw + β −− 2 < 2ν < γw + β + − 2. Note that 2ν < γw − 1, so \(F_w^{\gamma ,\nu } (x) = f_w^\gamma (x) ( 1 + o(1))\). With θ 0 = θ 1 given by (23), and this choice of ν, Lemma 5 applies. The choice of γ ensures that the right-hand side of (49) is eventually negative, and the choice of w ensures the same for (50). Since λ < 0, the right-hand side of (51) is also eventually negative. Combining these three estimates shows, for all x ∈ S with ∥x∥ large enough,

Since \(F_w^{\gamma ,\nu } (x) \to 0\) as ∥x∥→∞, Lemma 10 gives transience.

Of the cases where β +, β − < 1, it remains to consider the borderline case where β = β c ∈ (0, 1). Here Lemma 7 together with Lemma 9 proves recurrence. Finally, if β +, β − > 1, we apply Lemma 8 together with Lemma 9 to obtain recurrence. Note that both of these critical cases require (D+) and (C+). □

Next we turn to moments of passage times: we prove Theorem 4, which includes Theorem 2 as the special case α = 0. Here the criteria we apply are from [26, §2.7], which are heavily based on those from [5].

Proof (of Theorem 4 )

Again let \(\beta = \max (\beta ^+,\beta ^-)\). First we prove the existence of moments part of (a)(i). Suppose that 0 ≤ β < 1 ∧ β c, so s 0 as defined at (7) satisfies s 0 > 0. We use the function \(f_w^\gamma \), with γ ∈ (0, 1) and w ∈ (0, 2s 0) as in the first part of the proof of Theorem 3. We saw in that proof that for these choices of γ, w we have that (75) holds for all x ∈ S. Rewriting this slightly, using the fact that \(f_w^\gamma (x)\) is bounded above and below by constants times ∥x∥γw for all ∥x∥ sufficiently large, we get that there are constants c > 0 and r 0 < ∞ for which

Then we may apply Corollary 2.7.3 of [26] to get \(\mathbb {E}_x ( \tau _r^s ) < \infty \) for any r ≥ r 0 and any s < γw∕2. Taking γ < 1 and w < 2s 0 arbitrarily close to their upper bounds, we get \(\mathbb {E}_x ( \tau _r^s ) < \infty \) for all s < s 0.

Next suppose that \(0 \leq \beta \leq \beta_{\mathrm{c}}\). Let \(s > s_0\). First consider the case where \(\beta^+ = \beta^-\). Then we consider \(f_w^\gamma \) with γ > 1, \(w > 2s_0\) (so w > 0), and 0 < wγ < 2. Then, since \(\beta - (1-w) \beta_{\mathrm{c}} = (w - 2s_0) \beta_{\mathrm{c}} > 0\), we have from (30) and (31) that

for all x ∈ S with ∥x∥ sufficiently large. Now set \(Y_n := f_w^{1/w} ( \xi _n)\), and note that Y n is bounded above and below by constants times ∥ξ n∥, and \(Y_n^{\gamma w} = f_w^\gamma (\xi _n)\). Write \({\mathcal F}_n = \sigma ( \xi _0, \xi _1, \ldots , \xi _n)\). Then we have shown in (78) that

for some r 1 sufficiently large. Also, from the γ = 1∕w case of (30) and (31),

for some B < ∞ and \(r_2\) sufficiently large. (The right-hand side of (31) is still eventually positive, while the right-hand side of (30) will be eventually negative if γ < 1.) Again let \(E_x = \{ \| \varDelta \| < \| x \|^\delta \}\) for δ ∈ (0, 1). Then from the γ = 1∕w case of (41),

while from the γ = 1∕w case of (39) we have

Taking δ ∈ (2∕p, 1), it follows from (Mp) that for some C < ∞, a.s.,

The three conditions (79)–(81) show that we may apply Theorem 2.7.4 of [26] to get \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all s > γw∕2, all r sufficiently large, and all x ∈ S with ∥x∥ > r. Hence, taking γ > 1 and w > 2s 0 arbitrarily close to their lower bounds, we get \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all s > s 0 and appropriate r, x. This proves the non-existence of moments part of (a)(i) in the case β + = β −.

Next suppose that 0 ≤ β +, β −≤ β c with β + ≠ β −. Without loss of generality, suppose that 0 ≤ β − < β + = β ≤ β c. Then 0 ≤ s 0 < 1∕2. We consider the function \(F_w^{\gamma ,\nu }\) given by (48) with θ 0 = θ 1 given by (23), λ > 0, w ∈ (2s 0, 1), and γ > 1 such that γw < 1. Also, take ν for which γw + β −− 2 < 2ν < γw + β + − 2. Then by choice of γ and w, we have that the right-hand sides of (49) and (50) are both eventually positive. Since λ > 0, the right-hand side of (51) is also eventually positive. Thus

for all x ∈ S with ∥x∥ sufficiently large. Take \(Y_n := ( F_w^{\gamma ,\nu } (\xi _n) )^{1/(\gamma w)}\). Then we have shown that, for this Y n, the condition (79) holds. Moreover, since γw < 1 we have from convexity that (80) also holds. Again let E x = {∥Δ∥ < ∥x∥δ}. From (41) and (52),

for all y ∈ B r∕2(x). Then, by another Taylor’s theorem calculation,

for all y ∈ B r∕2(x). It follows that \(\mathbb {E}_x [ ( Y_1 - Y_0 )^2 {\mathbf 1}_ { E_x } ] \leq C\). Moreover, by a similar argument to (40), |Y 1 − Y 0|2 ≤ C∥Δ∥2γw∕δ on \(E_x^{\mathrm {c}}\), so taking δ ∈ (2∕p, 1) and using the fact that γw < 1, we get \(\mathbb {E}_x [ ( Y_1 - Y_0 )^2 {\mathbf 1}_ { E^{\mathrm {c}}_x } ] \leq C\) as well. Thus we also verify (81) in this case. Then we may again apply Theorem 2.7.4 of [26] to get \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all s > γw∕2, and hence all s > s 0. This completes the proof of (a)(i).

For part (a)(ii), suppose first that β + = β − = β, and that β c ≤ β < 1. We apply the function \(f_w^\gamma \) with w > 0 and γ > 1. Then we have from (30) and (31) that (78) holds. Repeating the argument below (78) shows that \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all s > γw∕2, and hence all s > 0. The case where β + ≠ β − is similar, using an appropriate \(F_w^{\gamma ,\nu }\). This proves (a)(ii).

It remains to consider the case where β +, β − > 1. Now we apply \(f_w^\gamma \) with γ > 1 and w ∈ (0, 1∕2) small enough, noting Remark 4. In this case (30) with (32) and Lemma 3 show that (78) holds, and repeating the argument below (78) shows that \(\mathbb {E}_x ( \tau _r^s ) = \infty \) for all s > 0. This proves part (b). □

References

Aspandiiarov, S.: On the convergence of 2-dimensional Markov chains in quadrants with boundary reflection. Stochastics Stochastics Rep. 53, 275–303 (1995)

Aspandiiarov, S., Iasnogorodski, R.: Tails of passage-times and an application to stochastic processes with boundary reflection in wedges. Stochastic Process. Appl. 66, 115–145 (1997)

Aspandiiarov, S., Iasnogorodski, R.: General criteria of integrability of functions of passage-times for nonnegative stochastic processes and their applications. Theory Probab. Appl. 43, 343–369 (1999). Translated from Teor. Veroyatnost. i Primenen. 43, 509–539 (1998) (in Russian)

Aspandiiarov, S., Iasnogorodski, R.: Asymptotic behaviour of stationary distributions for countable Markov chains, with some applications. Bernoulli 5, 535–569 (1999)

Aspandiiarov, S., Iasnogorodski, R., Menshikov, M.: Passage-time moments for nonnegative stochastic processes and an application to reflected random walks in a quadrant. Ann. Probab. 24, 932–960 (1996)

Asymont, I.M., Fayolle, G., Menshikov, M.V.: Random walks in a quarter plane with zero drifts: transience and recurrence. J. Appl. Probab. 32, 941–955 (1995)

Balaji, S., Ramasubramanian, S.: Passage time moments for multidimensional diffusions. J. Appl. Probab. 37, 246–251 (2000)

Bañuelos, R., Carroll, T.: Sharp integrability for Brownian motion in parabola-shaped regions. J. Funct. Anal. 218, 219–253 (2005)

Bañuelos, R., DeBlassie, R.D., Smits, R.: The first exit time of a planar Brownian motion from the interior of a parabola. Ann. Probab. 29, 882–901 (2001)

Fayolle, G.: On random walks arising in queueing systems: ergodicity and transience via quadratic forms as Lyapunov functions – Part I. Queueing Syst. 5, 167–184 (1989)

Fayolle, G., Iasnogorodski, R., Malyshev, V.: Random Walks in the Quarter-Plane. 2nd edn. Springer, Berlin (2017)

Fayolle, G., Malyshev, V.A., Menshikov, M.V.: Random walks in a quarter plane with zero drifts. I. Ergodicity and null recurrence. Ann. Inst. Henri Poincaré 28, 179–194 (1992)

Fayolle, G., Malyshev, V.A., Menshikov, M.V.: Topics in the Constructive Theory of Countable Markov Chains. Cambridge University Press, Cambridge (1995)

Foster, F.G.: On the stochastic matrices associated with certain queuing processes. Ann. Math. Stat. 24, 355–360 (1953)

Franceschi, S., Raschel, K.: Integral expression for the stationary distribution of reflected Brownian motion in a wedge. Bernoulli 25, 3673–3713 (2019)

Hobson, D.G., Rogers, L.C.G.: Recurrence and transience of reflecting Brownian motion in the quadrant. Math. Proc. Cambridge Philos. Soc. 113, 387–399 (1993)

Ignatyuk, I.A., Malyshev, V.A.: Classification of random walks in \({\mathbb Z}_+^4\). Selecta Math. 12, 129–194 (1993)

Kingman, J.F.C.: The ergodic behaviour of random walks. Biometrika 48, 391–396 (1961)

Lamperti, J.: Criteria for stochastic processes II: passage-time moments. J. Math. Anal. Appl. 7, 127–145 (1963)

Li, W.V.: The first exit time of a Brownian motion from unbounded convex domains. Ann. Probab. 31, 1078–1096 (2003)

MacPhee, I.M., Menshikov, M.V., Wade, A.R.: Moments of exit times from wedges for non-homogeneous random walks with asymptotically zero drifts. J. Theoret. Probab. 26, 1–30 (2013)

Malyshev, V.A.: Random Walks, The Wiener-Hopf Equation in a Quarter Plane, Galois Automorphisms. Moscow State University, Moscow (1970) (in Russian)

Malyshev, V.A.: Classification of two-dimensional positive random walks and almost linear semimartingales. Soviet Math. Dokl. 13, 136–139 (1972). Translated from Dokl. Akad. Nauk SSSR 202, 526–528 (1972) (in Russian)

Menshikov, M.V.: Ergodicity and transience conditions for random walks in the positive octant of space. Soviet Math. Dokl. 15, 1118–1121 (1974). Translated from Dokl. Akad. Nauk SSSR 217, 755–758 (1974) (in Russian)

Menshikov, M., Williams, R.J.: Passage-time moments for continuous non-negative stochastic processes and applications. Adv. Appl. Probab. 28, 747–762 (1996)

Menshikov, M., Popov, S., Wade, A.: Non-homogeneous random walks. Cambridge University Press, Cambridge (2017)

Mikhailov, V.A.: Methods of Random Multiple Access, Thesis. Dolgoprudny, Moscow (1979) (in Russian)

Pinsky, R.G.: Transience/recurrence for normally reflected Brownian motion in unbounded domains. Ann. Probab. 37, 676–686 (2009)

Rosenkrantz, W.A.: Ergodicity conditions for two-dimensional Markov chains on the positive quadrant. Probab. Theory Relat. Fields 83, 309–319 (1989)

Vaninskii, K.L., Lazareva, B.V.: Ergodicity and nonrecurrence conditions of a homogeneous Markov chain in the positive quadrant. Probl. Inf. Transm. 24, 82–86 (1988). Translated from Problemy Peredachi Informatsii 24, 105–110 (1988) (in Russian)

Varadhan, S.R.S., Williams, R.: Brownian motion in a wedge with oblique reflection. Comm. Pure Appl. Math. 38, 405–443 (1985)

Williams, R.J.: Recurrence classification and invariant measures for reflected Brownian motion in a wedge. Ann. Probab. 13, 758–778 (1985)

Zachary, S.: On two-dimensional Markov chains in the positive quadrant with partial spatial homogeneity. Markov Process. Related Fields 1, 267–280 (1995)

Acknowledgements

A.M. is supported by The Alan Turing Institute under the EPSRC grant EP/N510129/1 and by the EPSRC grant EP/P003818/1 and the Turing Fellowship funded by the Programme on Data-Centric Engineering of Lloyd’s Register Foundation. Some of the work reported in this paper was undertaken during visits by M.M. and A.W. to The Alan Turing Institute, whose hospitality is gratefully acknowledged. The authors also thank two referees for helpful comments and suggestions.

Appendix: Properties of the Threshold Function

For a constant \(b \neq 0\), consider the function

\[ \phi (\alpha ) := \sin ^2 \alpha + b \sin 2 \alpha . \]

Set \(\alpha _0 := \frac {1}{2} \arctan ( -2b)\), which satisfies \(0 < |\alpha _0| < \pi /4\).

Lemma 11

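There are two stationary points of \(\phi \) in \([-\frac {\pi }{2},\frac {\pi }{2}]\). One of these is a local minimum at \(\alpha _0\), with

\[ \phi (\alpha _0) = \frac {1}{2} \left ( 1 - \sqrt {1 + 4b^2} \right ) . \]

The other is a local maximum, at \(\alpha _1 = \alpha _0 + \frac {\pi }{2}\) if b > 0, or at \(\alpha _1 = \alpha _0 - \frac {\pi }{2}\) if b < 0, with

\[ \phi (\alpha _1) = \frac {1}{2} \left ( 1 + \sqrt {1 + 4b^2} \right ) . \]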

Proof

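We compute \(\phi ' (\alpha ) = \sin 2 \alpha + 2 b \cos 2 \alpha \) and \(\phi ^{\prime \prime }(\alpha ) = 2 \cos 2 \alpha - 4b \sin 2 \alpha \). Then \(\phi '(\alpha ) = 0\) if and only if \(\tan 2 \alpha = -2b\). Thus the stationary points of \(\phi \) are \(\alpha _0 + k \frac {\pi }{2}\), \(k \in {\mathbb Z}\). Exactly two of these points fall in \([-\frac {\pi }{2},\frac {\pi }{2}]\), namely \(\alpha _0\) and \(\alpha _1\) as defined in the statement of the lemma. Also, since \(\sin 2 \alpha _0 = -2b \cos 2 \alpha _0\) and \(\cos 2 \alpha _0 > 0\),

\[ \phi ^{\prime \prime }(\alpha _0) = 2 \cos 2 \alpha _0 - 4b \sin 2 \alpha _0 = 2 ( 1 + 4b^2 ) \cos 2 \alpha _0 > 0, \]

so \(\alpha _0\) is a local minimum. Similarly, if \(|\delta | = \pi /2\), then \(\sin 2 \delta = 0\) and \(\cos 2 \delta = -1\), so

\[ \phi ^{\prime \prime }(\alpha _0 + \delta ) = -2 \cos 2 \alpha _0 + 4b \sin 2 \alpha _0 = - \phi ^{\prime \prime }(\alpha _0) < 0, \]

and hence the stationary point at \(\alpha _1\) is a local maximum. Finally, to evaluate the values of \(\phi \) at the stationary points, note that

\[ \cos 2 \alpha _0 = \frac {1}{\sqrt {1 + 4b^2}}, \qquad \sin 2 \alpha _0 = - \frac {2b}{\sqrt {1 + 4b^2}}, \]

and use the fact that \(2 \sin ^2 \alpha _0 = 1- \cos 2 \alpha _0\) to get \(\phi (\alpha _0)\), and that \(2 \cos ^2 \alpha _0 = \cos 2 \alpha _0 +1\) to get \(\phi (\alpha _1 ) = \cos ^2 \alpha _0 - b \sin 2 \alpha _0 = 1 - \phi (\alpha _0)\). □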
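The conclusions of Lemma 11 can be checked numerically. The sketch below is not part of the original argument. It assumes that \(\phi (\alpha ) = \sin ^2 \alpha + b \sin 2 \alpha \), the form consistent with the derivative \(\phi '\) and the identity \(\phi (\alpha _1) = 1 - \phi (\alpha _0)\) used in the proof, and it compares the values at \(\alpha _0\) and \(\alpha _1\) with the closed forms \(\frac {1}{2} ( 1 \mp \sqrt {1 + 4b^2} )\) implied by \(\tan 2 \alpha _0 = -2b\). The function names and the grid size are illustrative choices.

```python
import math


def phi(alpha, b):
    """Assumed threshold function: sin^2(alpha) + b * sin(2 * alpha)."""
    return math.sin(alpha) ** 2 + b * math.sin(2.0 * alpha)


def stationary_points(b):
    """Return (alpha0, alpha1) as defined in Lemma 11, for b != 0."""
    alpha0 = 0.5 * math.atan(-2.0 * b)
    # alpha1 is shifted by +pi/2 when b > 0 and by -pi/2 when b < 0,
    # so that it stays inside [-pi/2, pi/2].
    shift = math.pi / 2 if b > 0 else -math.pi / 2
    return alpha0, alpha0 + shift


def grid_extrema(b, n=20001):
    """Brute-force (min, max) of phi over an n-point grid on [-pi/2, pi/2]."""
    values = [phi(-math.pi / 2 + math.pi * i / (n - 1), b) for i in range(n)]
    return min(values), max(values)


if __name__ == "__main__":
    for b in (-1.5, -0.3, 0.2, 2.0):
        alpha0, alpha1 = stationary_points(b)
        root = math.sqrt(1.0 + 4.0 * b * b)
        grid_min, grid_max = grid_extrema(b)
        print(f"b = {b:+.1f}")
        print(f"  phi(alpha0) = {phi(alpha0, b):+.6f}, "
              f"closed form = {(1.0 - root) / 2:+.6f}, grid min = {grid_min:+.6f}")
        print(f"  phi(alpha1) = {phi(alpha1, b):+.6f}, "
              f"closed form = {(1.0 + root) / 2:+.6f}, grid max = {grid_max:+.6f}")
```

Since \(\phi \) has period \(\pi \), the interval \([-\frac {\pi }{2},\frac {\pi }{2}]\) covers one full period, so the grid minimum and maximum should agree with \(\phi (\alpha _0)\) and \(\phi (\alpha _1)\) up to discretisation error.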

Proof (of Proposition 1 )

By Lemma 11 (and considering separately the case \(\sigma _1^2 = \sigma _2^2\)) we see that the extrema of β c(Σ, α) over \(\alpha \in [ - \frac {\pi }{2}, \frac {\pi }{2}]\) are

as claimed at (6). It remains to show that the minimum is strictly positive, which is a consequence of the fact that

since \(\rho ^2 < \sigma _1^2 \sigma _2^2\) (as Σ is positive definite). □

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this chapter

Menshikov, M.V., Mijatović, A., Wade, A.R. (2021). Reflecting Random Walks in Curvilinear Wedges. In: Vares, M.E., Fernández, R., Fontes, L.R., Newman, C.M. (eds) In and Out of Equilibrium 3: Celebrating Vladas Sidoravicius. Progress in Probability, vol 77. Birkhäuser, Cham. https://doi.org/10.1007/978-3-030-60754-8_26

DOI: https://doi.org/10.1007/978-3-030-60754-8_26

Publisher Name: Birkhäuser, Cham

Print ISBN: 978-3-030-60753-1

Online ISBN: 978-3-030-60754-8

eBook Packages: Mathematics and Statistics (R0)