Abstract

In the current era of unprecedented cultural and political tension, the growing problem of misinformation has exacerbated social unrest within the online space. Rectifying this issue requires a robust understanding of the underlying factors that lead social media users to believe and spread misinformation. We investigate a set of neurophysiological measures recorded while users interact with misinformation delivered via social media. A rating task, which required participants to assess the validity of news headlines, reveals a stark contrast between their performance when engaging analytical thinking processes and their performance when engaging automatic thinking processes. We use this observation to propose intervention methods that encourage more analytical thinking.

1 Introduction

The topic of fake news (a subset of the broader concepts of misinformation and disinformation, henceforth referred to as misinformation) has become prevalent in professional and social discourse. Mainstream media and popular social media platforms (e.g., Facebook, Twitter) have faced waves of criticism for their inability to effectively block the dissemination of misinformation [1]. This is partly a result of the problem's magnitude: almost all genres of social communication and media have been infected with various forms of misinformation. The political space was shaken during the 2016 United States election, when at least 25% of Americans opened a relevant misinformation webpage in the months leading up to the election [1]. The cultural impact is also visible in the recent spread of dangerous and false claims about the risks of vaccination [2].

The technological aspects of misinformation research include (a) developing algorithmic approaches to identify misinformation and (b) designing information technology interventions that help users detect misinformation. In the past few years, most research has addressed the first theme, in which researchers combine linguistic cues with machine learning and network analysis to analyze commonly spread online misinformation [3]. The literature under Theme (b) suggests that current social media features such as fact-checkers and flagging systems are not fully effective in reducing users' belief in misinformation. One potential explanation is that these IT interventions do not address the underlying cause. For instance, flagging a piece of misinformation may not influence ideologically motivated users when it matches their pre-existing beliefs, a phenomenon referred to as confirmation bias [4].

People are prone to believing misinformation because of numerous situational and dispositional factors, such as limited cognitive capacity and over-reliance on heuristics, which make individuals more susceptible to cognitive biases [5]. To effectively address the issue of misinformation, we must first identify the key cognitive factors that lead to belief in misinformation. This will enable the design of more effective interventions to reduce the incidence of social media users falling prey to misinformation.

The current study attempts to identify some of the key behavioral and cognitive factors that are correlated with a participant's likelihood of believing misinformation. To this end, we use a set of physiological tools as potential predictors of participant performance in identifying misinformation. Building on dual-process theories of cognition, we use electroencephalography (EEG) to interpret System 1 (automatic, reflexive, and effortless) and System 2 (deliberate, analytical, and effortful) thinking processes during decision making [6]. Further, eye-tracking pupillometry is used to assess fluctuations in pupil dilation, which indicate the intensity of users' cognitive load [7]. Identifying highly predictive factors among these measures will enable us to create a more robust experimental design, capable of explaining why people are susceptible to misinformation and how we can begin to rectify the underlying problem. Thus, the current study aims to answer one primary research question: Is there neurophysiological evidence to support the notion that System 1 thinking processes are associated with belief in misinformation? And if so, how can we leverage this to reduce the belief in and spread of misinformation?

2 Literature Review

2.1 Dual Process Models of Cognition

Dual-process models posit two information processing modes in the human cognitive system that play a central role in evaluating arguments and forming impressions and beliefs [8]. The two modes follow different processing principles and serve two distinct evolutionary purposes. The "associative processing mode" (i.e., System 1) relies on long-term memory to retrieve similarity-based information and form an impression about a stimulus [9]. This mode is fast, responding to an environmental cue in less than one second [10]. It searches long-term memory, the representation of one's experiences built up over the years, for information similar to that cue [8]. In contrast, the "rule-based processing mode" (i.e., System 2) engages in an effortful and time-consuming search for evidence and logic to make a judgment about a statement [8]. This processing mode is slow and conscious, is under our deliberate control, and has far fewer cognitive resources available than the associative mode [11]. As cognitive misers, individuals are reluctant to engage in deliberative and effortful rule-based cognition [12].

The effect of cognitive biases on decision-making can be explained by the dual-process model, which recognizes two competing modes of impression formation: intuitive and analytical. Cognitive biases are more strongly associated with intuitive, automatic processing of information, in which users do not analytically evaluate an argument and instead trust their intuition or "gut feeling". Intuitive processing is a main culprit not only of confirmation bias but also of other biases (e.g., belief bias) that increase the likelihood that social media users will believe misinformation [13].

Very few studies have attempted to identify the neural correlates of System 1 and System 2. Pupillometry is considered a measure of mental effort and cognitive state [7]. A study by Kahneman et al. [14] showed that engaging System 2 resources is associated with an increase in pupil dilation. A recent EEG study by Williams et al. [15] replicated the Kahneman et al. experiment. They found that System 2 thinking is associated with frontal (Fz) theta rhythms (4–8 Hz), while System 1 activity is correlated with increased parietal (CPz) alpha rhythms (10–12 Hz).

2.2 Research on Misinformation

Although research on misinformation has existed for a long time, more researchers have recently shown interest in studying this phenomenon [16]. For example, in a recent study, participants were presented with articles containing misinformation in a Facebook feed format and asked to indicate their accuracy. Survey responses revealed that most participants relied on personal judgement and familiarity with the article's source to distinguish misinformation [16]. A related study analyzed how enabling co-annotations (i.e., the ability to make personal edits or additions) on social media news articles affects users' interaction with misinformation [17]. Although limited in scale, the study demonstrated that this additional medium for discourse reduced the likelihood of perpetuating false information.

3 Methodology

An experiment was designed to study the cognitive mechanisms associated with belief in misinformation. Headlines were generated in two distinct categories: control headlines, which could be very easily identified as true or false (e.g., Ottawa is the capital of Canada), and polarizing headlines, whose primary topic or figure is politically polarizing (e.g., Donald Trump, Barack Obama, climate change, abortion). A pilot study was designed to identify a list of politically polarizing terms. Fifty volunteers rated terms (e.g., Trump, Obama, climate change, Planned Parenthood) on a 5-point scale ranging from highly positive to highly negative, conveying their personal viewpoints. The 10 most divisive terms (strong views in either direction) were used to generate the politically polarizing headlines. The headlines were constructed from popular controversial news articles published on social media and fact-checked by the Snopes website. For each headline condition (control/polarizing), half of the constructed headlines were false and the remaining half were true. In the main experiment, the headlines were presented to participants in a template constructed to closely mimic the format used by many social media and news website platforms (Fig. 1). Participants were instructed to rate the accuracy of the presented headlines on a 4-point scale. Each participant evaluated 20 control headlines and 40 polarizing headlines.
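The pilot selection of polarizing terms can be sketched as follows. This is a minimal illustration, not the authors' procedure: the text does not say how divisiveness was scored, so the sketch assumes ratings coded 1 (highly negative) to 5 (highly positive) and scores each term by the mean distance of its ratings from the neutral midpoint, which rewards strong views in either direction. The names `divisiveness` and `most_divisive` are hypothetical.

```python
from statistics import mean

# Assumed coding of the 5-point pilot scale: 1 = highly negative ... 5 = highly positive.
NEUTRAL = 3


def divisiveness(ratings: list[int]) -> float:
    """Mean absolute distance from the neutral midpoint (hypothetical score).

    A term rated 1 or 5 by everyone scores 2; a term rated 3 by everyone scores 0,
    so strong views in either direction both raise the score.
    """
    if not ratings:
        raise ValueError("term has no ratings")
    return mean(abs(r - NEUTRAL) for r in ratings)


def most_divisive(pilot: dict[str, list[int]], k: int = 10) -> list[str]:
    """Return the k terms with the highest divisiveness score (ties keep input order)."""
    return sorted(pilot, key=lambda term: divisiveness(pilot[term]), reverse=True)[:k]


# Toy ratings for illustration only; these are not the pilot data.
pilot = {
    "term_a": [1, 5, 1, 5],
    "term_b": [3, 3, 3, 3],
    "term_c": [2, 4, 3, 3],
}
print(most_divisive(pilot, k=2))  # ['term_a', 'term_c']
```

Scoring by the variance of ratings across volunteers would be another reasonable reading of "divisive"; the distance-from-neutral score is used here because it matches the parenthetical "strong view in either direction".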

Each participant session was audio and video recorded. We report the characteristics of the measurement tools according to the guidelines recommended by Müller-Putz, Riedl. Participants were fitted with the Cognionics Quick-20 dry EEG headset (Cognionics Inc., CA), which has 20 electrodes positioned according to the 10–20 system and samples at 500 Hz. The headset uses a wireless amplifier with 24-bit A/D resolution. An unobtrusive Tobii X2-60 eye-tracking module (Tobii Technology AB) was attached to the participants' testing screen, capturing metrics including gaze vectors, fixation points, and pupil dilation at 60 Hz. Thirteen participants (four female, nine male) took part in the preliminary study. Participants were aged 18 to 55, and their education ranged from a bachelor's degree to a doctorate. Participants were recruited via email as well as TV and newsletter advertisements. Full data collection is currently underway. Ethics approval was secured from the Ethics Research Board at the authors' university prior to any data collection.

3.1 Data Analysis

The EEG data were preprocessed and then fed into a decision tree classifier trained to predict the participant's belief in each presented headline (Fig. 2). The goal of applying a classifier to EEG data is to identify the EEG components associated with correct and incorrect responses and to investigate whether these components include the correlates of System 1 and System 2. First, the continuous EEG was epoched by segmenting the last 2 s of each trial. Preprocessing then consisted of (1) applying an FIR band-pass filter (0.1–40 Hz) to remove noise, (2) rejecting bad channels, (3) removing noisy epochs, (4) running independent component analysis (ICA) with the ADJUST plugin [19] to identify artifacts such as eye blinks, and (5) rejecting bad components and reconstructing the EEG signal. We used the Fast Fourier Transform (FFT) to quantify signal power in four frequency bands: delta (0 to <4 Hz), theta (4 to <8 Hz), alpha (8–13 Hz), and beta (>13 Hz). A total of 80 features (20 channels × 4 band powers) were fed into the decision tree classifier, which selects each split using information-theoretic criteria such as entropy and mutual information [20]. Eighty percent of the collected data was used for training and the remaining 20% for testing.
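The epoching and band-power feature step described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code: it assumes each trial is a (channels, samples) array at 500 Hz that has already been filtered and cleaned (the ICA/ADJUST steps are not reproduced), and it takes band power as the mean squared FFT magnitude within each band, since the text does not say whether power was summed, averaged, or normalized. The function names are hypothetical.

```python
import numpy as np

FS = 500        # Quick-20 sampling rate in Hz (Section 3)
EPOCH_S = 2.0   # the last 2 s of each trial are analysed

# Band edges as stated in the text. Alpha is closed on both ends (8 to 13 Hz) and
# beta is open-ended (>13 Hz); the 0.1-40 Hz filter removes most power above 40 Hz.
BANDS = (
    ("delta", lambda f: (f >= 0.0) & (f < 4.0)),
    ("theta", lambda f: (f >= 4.0) & (f < 8.0)),
    ("alpha", lambda f: (f >= 8.0) & (f <= 13.0)),
    ("beta", lambda f: f > 13.0),
)


def last_epoch(trial: np.ndarray, fs: int = FS, seconds: float = EPOCH_S) -> np.ndarray:
    """Keep the final `seconds` of a (channels, samples) trial."""
    n = int(round(fs * seconds))  # 1000 samples at 500 Hz
    if trial.shape[1] < n:
        raise ValueError("trial is shorter than the epoch window")
    return trial[:, -n:]


def band_power_features(epoch: np.ndarray, fs: int = FS) -> np.ndarray:
    """Mean FFT power per band and channel, flattened channel by channel.

    For 20 channels and 4 bands this returns an 80-value feature vector.
    """
    freqs = np.fft.rfftfreq(epoch.shape[1], d=1.0 / fs)
    power = np.abs(np.fft.rfft(epoch, axis=1)) ** 2
    per_band = [power[:, in_band(freqs)].mean(axis=1) for _, in_band in BANDS]
    return np.stack(per_band, axis=1).ravel()  # (channels, 4) -> (channels * 4,)


# Synthetic check: a 10 Hz sine on all 20 channels should put most power in alpha.
t = np.arange(3 * FS) / FS
trial = np.tile(np.sin(2 * np.pi * 10 * t), (20, 1))
features = band_power_features(last_epoch(trial))
print(features.shape)  # (80,)
```

With a 2 s window the FFT bins are 0.5 Hz apart, so each band boundary in the text falls exactly on a bin.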

Eye-tracking data were preprocessed by removing blinks, distorted pupil recordings (e.g., when the participant looked away from the screen), and data points in which the tracker was unable to record one of the participant's eyes. A blank gray screen was presented for 2 s before each trial. This transition screen served as a baseline for each trial, allowing EEG and pupil values to stabilize after the previous trial. The baseline dilation for each trial was computed as the average, across both eyes, during this transition. The second measure was the average dilation, across both eyes, in the last 2 s of each trial (up to the decision point). The pupil dilation difference for each trial, expressed as a percentage, was then calculated from these two measures.
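The baseline-corrected pupil measure can be sketched in plain Python. This is a minimal sketch under assumptions not stated in the text: unusable samples (blinks, looking away, one eye lost) are assumed to arrive as `None` or NaN, and the percentage is assumed to be the relative change of the final-window mean from the gray-screen baseline mean. The 60 Hz rate and 2 s windows come from the text; the function names are hypothetical.

```python
import math
from statistics import mean


def binocular_samples(left, right):
    """Average the two eyes sample by sample, dropping unusable samples.

    A sample is dropped when either eye is missing (None) or invalid (NaN),
    mirroring the removal of blinks and single-eye recordings.
    """
    kept = []
    for lft, rgt in zip(left, right):
        if lft is None or rgt is None or math.isnan(lft) or math.isnan(rgt):
            continue
        kept.append((lft + rgt) / 2)
    return kept


def pupil_change_pct(base_left, base_right, trial_left, trial_right,
                     fs: int = 60, window_s: float = 2.0) -> float:
    """Percent change of mean pupil size in the final window relative to the baseline.

    Raises statistics.StatisticsError if every sample in a window was dropped.
    """
    baseline = mean(binocular_samples(base_left, base_right))
    n = int(round(fs * window_s))  # 120 samples at 60 Hz, i.e. the last 2 s up to the decision
    window = mean(binocular_samples(trial_left[-n:], trial_right[-n:]))
    return 100.0 * (window - baseline) / baseline


# Toy values (mm) for illustration: a 3.0 baseline and a 3.3 decision window.
print(round(pupil_change_pct([3.0] * 120, [3.0] * 120, [3.3] * 200, [3.3] * 200), 6))  # 10.0
```

Expressing the difference relative to each trial's own baseline keeps trials comparable even if overall pupil size drifts across the session.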

4 Preliminary Results

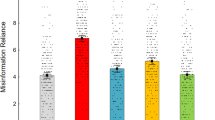

Participants identified misinformation in control headlines with 96% accuracy. In contrast, accuracy for the politically polarizing headlines was significantly lower, at 58% (P < 0.05). Response times followed a similar pattern: the average response time was 6.7 s for control headlines and 8.9 s for polarizing headlines (P < 0.05).
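One way to turn 4-point ratings into the accuracy figures above is sketched below. The text does not state how ratings were scored, so this sketch assumes ratings of 1–2 count as "judged false" and 3–4 as "judged true", and that a response is correct when that judgement matches the headline's fact-checked label. The statistical test behind the P values is not specified and is not reproduced. The function names are hypothetical, and the toy trials are not study data.

```python
from collections import defaultdict

# Assumed coding of the 4-point response scale: 1-2 = judged false, 3-4 = judged true.
JUDGED_TRUE_MIN = 3


def is_correct(rating: int, headline_is_true: bool) -> bool:
    """A response is correct when the side of the scale matches the fact-checked label."""
    if rating not in (1, 2, 3, 4):
        raise ValueError(f"rating must be 1-4, got {rating}")
    return (rating >= JUDGED_TRUE_MIN) == headline_is_true


def accuracy_by_condition(trials):
    """trials: iterable of (condition, rating, headline_is_true) tuples."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for condition, rating, headline_is_true in trials:
        totals[condition] += 1
        hits[condition] += is_correct(rating, headline_is_true)
    return {c: hits[c] / totals[c] for c in totals}


toy = [("control", 4, True), ("control", 1, False),
       ("polarizing", 3, False), ("polarizing", 2, False)]
print(accuracy_by_condition(toy))  # {'control': 1.0, 'polarizing': 0.5}
```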

The two physiological measures, EEG and eye tracking, yielded differing results. The EEG decision tree classifier, built with 7 usable participant data sets, achieved a mean accuracy of 70%. The EEG results also revealed that the majority of incorrect responses were associated with parietal alpha activity (System 1 processes), while the majority of correct responses were associated with frontal theta activity (System 2 processes). Pupil analysis of the eye-tracking data yielded no distinct pattern or separation among control headlines, correct responses, and incorrect responses.
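The classification procedure from Section 3.1 (decision tree, 80/20 split, mean accuracy over usable data sets) can be sketched with scikit-learn, swapped in here as an assumed toolkit; the text does not name the software used. The sketch also assumes one classifier per participant, with accuracies then averaged, because the text does not say whether data were pooled. The labels (1 = correct response, 0 = incorrect) and the function names are hypothetical, and the synthetic data only demonstrate that the code runs.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def participant_accuracy(X: np.ndarray, y: np.ndarray, seed: int = 0) -> float:
    """Fit a decision tree on 80% of one participant's trials and score it on the other 20%.

    X: (trials, 80) band-power features; y: 1 = correct response, 0 = incorrect (assumed coding).
    """
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed)
    # criterion="entropy" chooses splits by information gain, i.e. the mutual
    # information between a candidate split and the class label.
    clf = DecisionTreeClassifier(criterion="entropy", random_state=seed)
    clf.fit(X_train, y_train)
    return float(clf.score(X_test, y_test))  # fraction of held-out trials classified correctly


def mean_accuracy(datasets, seed: int = 0) -> float:
    """Average held-out accuracy over per-participant (X, y) datasets."""
    return float(np.mean([participant_accuracy(X, y, seed) for X, y in datasets]))


# Synthetic, deliberately separable data for illustration only (not study data):
# 7 "participants" x 60 trials (20 control + 40 polarizing) x 80 features.
rng = np.random.default_rng(0)
datasets = []
for _ in range(7):
    y = rng.integers(0, 2, size=60)
    X = rng.normal(size=(60, 80))
    X[:, 0] += 10 * y  # make one feature informative
    datasets.append((X, y))
print(mean_accuracy(datasets))
```

With only about 12 held-out trials per participant, a single 80/20 split gives a noisy accuracy estimate; repeated splits or cross-validation would be a natural refinement once full data collection is complete.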

5 Discussion

EEG results suggest a distinction between System 1 (automatic) cognitive processes for incorrect responses and System 2 (analytical) processes for correct responses. This implies that when participants engage their analytical thinking, they are more likely to correctly identify misinformation, and that intervention methods that induce or encourage analytical thinking are likely to reduce the acceptance and spread of misinformation. Having users take an outsider's perspective, or critically approach a topic from an opposing viewpoint, has been shown to elicit System 2 processes [21]. Encouraging System 2 thinking as an intervention contrasts sharply with the largely ineffective methods attempted thus far (e.g., fact-checkers and flagging systems). Such methods merely declare whether a claim is likely fake, with minimal effect on misinformation acceptance, whereas the preliminary results of this study point toward having readers analytically critique their own perspective on the presented headlines.

5.1 Next Steps

The experimental design used in our study can be amended to apply and test the efficacy of intervention methods designed to induce System 2 activation. This can be accomplished in two ways. First, participants could be required to complete a training session with a software application before the experiment or partway through it. This software would train participants to think critically, giving them the tools to break down each headline and approach it from multiple perspectives. The second method would present a performance breakdown showing how accurately the participant has been identifying misinformation. This feedback, presented in a text box, would include an analytical breakdown of each headline, sourced from agencies with varying political and social biases, as well as a percentage indicating overall performance. These interventions can be assessed by having a subset of future participants undergo the training or receive the performance breakdown. We can then compare these participants' accuracy in identifying misinformation, as well as their neurophysiological responses, with those of participants who did not undergo training or receive the performance breakdown, to determine the effectiveness of these interventions.

References

Guess, A.M., Nyhan, B., Reifler, J.: Exposure to untrustworthy websites in the 2016 U.S. election. 38.

Kata, A.: A postmodern pandora’s box: anti-vaccination misinformation on the internet. Vaccine. 28, 1709–1716 (2010). https://doi.org/10.1016/j.vaccine.2009.12.022

Conroy, N.J., Rubin, V.L., Chen, Y.: Automatic deception detection: Methods for finding fake news. Proc. Assoc. Inf. Sci. Tech. 52, 1–4 (2015)

Moravec, P., Kim, A., Dennis, A.: Flagging Fake News: System 1 vs. System 2 (2018).

Arceneaux, K.: Cognitive biases and the strength of political arguments. Am. J. Political Sci. 56, 271–285 (2012). https://doi.org/10.1111/j.1540-5907.2011.00573.x

Kahneman, D.: Thinking, Fast and Slow. Farrar Straus and Giroux, New York (2011)

Mathôt, S.: Pupillometry: psychology, physiology, and function. J. Cogn. 1, 16 (2018)

Smith, E.R., DeCoster, J.: Dual-process models in social and cognitive psychology: conceptual integration and links to underlying memory systems. Pers. Soc. Psychol. Rev. 4, 108–131 (2000). https://doi.org/10.1207/S15327957PSPR0402_01

Wilson, T.D., Lindsey, S., Schooler, T.Y.: A model of dual attitudes. Psychol. Rev. 107, 101–126 (2000). https://doi.org/10.1037/0033-295X.107.1.101

Bargh, J.A., Ferguson, M.J.: Beyond behaviorism: on the automaticity of higher mental processes. Psychol. Bull. 126, 925–945 (2000). https://doi.org/10.1037/0033-2909.126.6.925

Evans, J.S.B.T., Stanovich, K.E.: Dual-process theories of higher cognition: advancing the debate. Perspect. Psychol. Sci. 8, 223–241 (2013)

Fiske, S.T., Taylor, S.E.: Social Cognition. McGraw-Hill Book Company, New York (1991)

Torrens, D.: Individual differences and the belief bias effect: mental models, logical necessity, and abstract reasoning. Thinking Reasoning. 5, 1–28 (1999). https://doi.org/10.1080/135467899394066

Kahneman, D., Peavler, W.S., Onuska, L.: Effects of verbalization and incentive on the pupil response to mental activity. Can. J. Psychol. 22, 186–196 (1968). https://doi.org/10.1037/h0082759

Williams, C., Kappen, M., Hassall, C.D., Wright, B., Krigolson, O.E.: Thinking theta and alpha: mechanisms of intuitive and analytical reasoning. NeuroImage 189, 574–580 (2019)

Porter, E., Wood, T.J., Kirby, D.: Sex trafficking, Russian infiltration, birth certificates, and pedophilia: a survey experiment correcting fake news. J. Exp. Political Sci. 5, 159–164 (2018)

Wood, G., Long, K.S., Feltwell, T., et al.: Rethinking engagement with online news through social and visual co-annotation. In: Proceedings of the 36th Annual ACM Conference on Human Factors in Computing Systems, CHI’18, pp. 1–12 (2018)

Flintham, M., Karner, C., Bachour, K., Creswick, H., Gupta, N., Moran, S.: Falling for fake news: investigating the consumption of news via social media. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, pp. 376:1–376:10. ACM, New York (2018). https://doi.org/10.1145/3173574.3173950

Mognon, A., Jovicich, J., Bruzzone, L., Buiatti, M.: ADJUST: An automatic EEG artifact detector based on the joint use of spatial and temporal features. Psychophysiology 48, 229–240 (2011)

Polat, K., Güneş, S.: Classification of epileptiform EEG using a hybrid system based on decision tree classifier and fast Fourier transform. Appl. Math. Comput. 187, 1017–1026 (2007). https://doi.org/10.1016/j.amc.2006.09.022

Milkman, K.L., Chugh, D., Bazerman, M.H.: How can decision making be improved? Perspect. Psychol. Sci. 4, 379–383 (2009). https://doi.org/10.1111/j.1745-6924.2009.01142.x

Copyright information

© 2020 The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Early, S., Mirhoseini, S., El Shamy, N., Hassanein, K. (2020). Relying on System 1 Thinking Leaves You Susceptible to the Peril of Misinformation. In: Davis, F.D., Riedl, R., vom Brocke, J., Léger, PM., Randolph, A.B., Fischer, T. (eds) Information Systems and Neuroscience. NeuroIS 2020. Lecture Notes in Information Systems and Organisation, vol 43. Springer, Cham. https://doi.org/10.1007/978-3-030-60073-0_5

DOI: https://doi.org/10.1007/978-3-030-60073-0_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-60072-3

Online ISBN: 978-3-030-60073-0

eBook Packages: Computer Science, Computer Science (R0)