Abstract

In this paper, we propose a penalized maximum likelihood method for variable selection in joint mean and covariance models for longitudinal data. Under certain regularity conditions, we establish the consistency and asymptotic normality of the penalized maximum likelihood estimators of the parameters in the models. We further show that the proposed estimation method can correctly identify the true models, as if the true models were known in advance. We also carry out a real data analysis and simulation studies to assess the small-sample performance of the new procedure, which show that the proposed variable selection method works satisfactorily.

13.1 Introduction

In longitudinal studies, one of the main objectives is to find out how the average value of the response varies over time and how the average response profile is affected by different treatments or various explanatory variables of interest. Traditionally, the within-subject covariance matrices are treated as nuisance parameters or assumed to have a very simple parsimonious structure, which may well lead to misspecification of the covariance structure. Although misspecification need not affect the consistency of the estimators of the mean parameters, it can lead to a great loss of efficiency. In some circumstances, for example when data are missing, the estimators of the mean parameters can be severely biased if the covariance structure is misspecified. Correct specification of the covariance structure is therefore essential.

On the other hand, the within-subject covariance structure itself may be of scientific interest, for example in prediction problems arising in econometrics and finance. Moreover, like the mean, the covariances may depend on various explanatory variables. A natural constraint in modelling covariance structures is that the estimated covariance matrices must be positive definite, which makes covariance modelling rather challenging. Chiu et al. [2] proposed to solve this problem by using a matrix logarithmic transformation, defined as the inverse of the matrix exponential transformation through the spectral decomposition of the covariance matrix. Since there are no constraints on the upper triangular elements of the matrix logarithm, any structure of interest may be imposed on them. The limitation of this approach, however, is that the elements of the matrix logarithm lack a clear statistical interpretation. An alternative way to deal with the positive definite constraint is to work with the modified Cholesky decomposition advocated by Pourahmadi [9, 10] and use regression formulations to model the unconstrained elements of the decomposition. The key idea is that any covariance matrix can be diagonalized by a unique lower triangular matrix with 1’s as its diagonal elements. The elements of the lower triangular matrix and the diagonal matrix have a very clear statistical interpretation in terms of autoregressive coefficients and innovation variances; see, e.g., Pan and MacKenzie [8]. Ye and Pan [13] proposed an approach for joint modelling of mean and covariance structures for longitudinal data within the framework of generalized estimating equations, which requires no distributional assumptions and only assumes the existence of the first four moments of the responses.

However, a challenging issue in modelling joint mean and covariance structures is high dimensionality, which arises frequently in fields such as genomics, gene expression, signal processing, image analysis and finance. For example, the number of explanatory variables may be very large. Intuitively, all these variables should be included in the initial model in order to reduce modelling bias. But it is very likely that only a small number of them contribute to the model fit while the majority do not. These insignificant variables should therefore be excluded from the initial model to increase prediction accuracy and avoid overfitting. Variable selection can thus improve estimation accuracy by identifying the important subset of explanatory variables, which may be just tens out of several thousand predictors, with sample sizes in the tens or hundreds.

Many variable selection criteria exist in the literature. Traditional criteria such as Mallows’ \(C_p\), the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) all involve a combinatorial optimization problem whose computational load increases exponentially with the number of explanatory variables. This intensive computation hampers the use of traditional procedures. Fan and Li [4] discussed a class of penalized likelihood based methods for variable selection, including the bridge regression of Frank and Friedman [6], the Lasso of Tibshirani [11] and the smoothly clipped absolute deviation (SCAD) penalty of Fan and Li [4]. For a fixed number of parameters, Fan and Li [4] further studied the oracle property of non-concave penalized likelihood estimators, in the sense that the penalized maximum likelihood estimator can correctly identify the true model as if it were known in advance. Fan and Peng [5] extended these results by letting the number of parameters be of order \(o(n^{1/3})\) and showed that the oracle property still holds in this case. Zou [14] proposed the adaptive Lasso for a fixed number of parameters and showed that, as conjectured by Fan and Li [4], the Lasso does not have the oracle property, whereas the adaptive Lasso does.

In this paper we aim to develop an efficient penalized likelihood based method to select the explanatory variables that make a significant contribution to the joint modelling of mean and covariance structures for longitudinal data. We show that the proposed approach produces good estimates and can correctly identify zero regression coefficients in the joint mean and covariance models simultaneously. The rest of the paper is organized as follows. In Sect. 13.2, we first describe a reparameterization of the covariance matrix through the modified Cholesky decomposition and introduce the joint mean and covariance models for longitudinal data. We then propose a variable selection method for the joint models via a penalized likelihood function and study the asymptotic properties of the resulting estimators. A standard error formula for the parameter estimators and a method for choosing the tuning parameters are also provided. In Sect. 13.3, we study the variable selection method and its sampling properties when the number of explanatory variables tends to infinity with the sample size. In Sect. 13.4, we illustrate the proposed method with a real data analysis. In Sect. 13.5, we carry out simulation studies to assess the small-sample performance of the method. In Sect. 13.6, we further discuss the proposed variable selection method. Technical details on calculating the penalized likelihood estimators are given in Appendix A, and proofs of the theorems summarizing the asymptotic results are presented in Appendix B.

13.2 Variable Selection via Penalized Maximum Likelihood

13.2.1 Joint Mean and Covariance Models

Suppose that there are \(n\) independent subjects and the \(i\)th subject has \(m_i\) repeated measurements. Let \(y_{ij}\) be the \(j\)th measurement of the \(i\)th subject and \(t_{ij}\) the time at which \(y_{ij}\) is made. Throughout this paper we assume that \({\mathbf {y}}_{i}=(y_{i1},\ldots ,y_{im_{i}})^{T}\) follows the multivariate normal distribution with mean \(\mu_i\) and covariance matrix \(\varSigma_i\), where \(\mu _i=(\mu _{i1},\ldots ,\mu _{im_{i}})^{T}\) is an \((m_i\times 1)\) vector and \(\varSigma_i\) is an \((m_i\times m_i)\) positive definite matrix \((i=1,\ldots,n)\). We consider simultaneous variable selection for the mean and covariance structures using penalized maximum likelihood estimation.

To deal with the positive definite constraint on the covariance matrices, we design an effective regularization approach that gains statistical efficiency and overcomes the high dimensionality of the covariance matrices. Specifically, we use a statistically meaningful representation that reparameterizes the covariance matrices by the modified Cholesky decomposition advocated by Pourahmadi [9, 10]: any covariance matrix \(\varSigma_i\) \((1\leq i\leq n)\) can be diagonalized by a unique lower triangular matrix \(T_i\) with 1’s as its diagonal elements. In other words,

$$\displaystyle T_{i}\varSigma_{i}T_{i}^{T}=D_{i}, \qquad\qquad (13.1)$$
where \(D_i\) is a unique diagonal matrix with positive diagonal elements. The elements of \(T_i\) and \(D_i\) have a very clear statistical interpretation in terms of autoregressive least squares regressions. More precisely, the below-diagonal entries of \(T_i=(-\phi_{ijk})\) are the negatives of the regression coefficients of \(\widehat {y}_{ij}=\mu _{ij}+\sum _{k=1}^{j-1}\phi _{ijk}(y_{ik}-\mu _{ik})\), the linear least squares predictor of \(y_{ij}\) based on its predecessors \(y_{i1},\ldots,y_{i(j-1)}\), and the diagonal entries of \(D_{i}=\mbox{diag}(\sigma _{i1}^{2},\ldots ,\sigma _{im_{i}}^{2})\) are the prediction error variances \(\sigma _{ij}^{2}=\mbox{var}(y_{ij}-\widehat {y}_{ij})\) \((1\leq i\leq n,\ 1\leq j\leq m_i)\). The new parameters \(\phi_{ijk}\) and \(\sigma_{ij}^2\) are called generalized autoregressive parameters and innovation variances, respectively. After taking the logarithm of the innovation variances, the decomposition (13.1) converts the constrained entries of \(\{\varSigma_i: i=1,\ldots,n\}\) into two groups of unconstrained parameters, the generalized autoregressive parameters \(\{\phi_{ijk}: i=1,\ldots,n;\ j=2,\ldots,m_i;\ k=1,\ldots,j-1\}\) and the log-innovation variances \(\{\log \sigma _{ij}^{2}: i=1,\ldots ,n;\ j=1,\ldots ,m_i\}\).
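To make the decomposition concrete, here is a minimal NumPy sketch (the function name `modified_cholesky` is ours, for illustration only) that computes \(T_i\) and \(D_i\) from a given covariance matrix by running the successive least squares regressions described above: row \(j\) of \(T_i\) holds the negated coefficients \(-\phi_{ijk}\), and the \(j\)th diagonal entry of \(D_i\) holds the corresponding innovation variance.

```python
import numpy as np

def modified_cholesky(sigma):
    """Return (T, D) with T unit lower triangular and T @ sigma @ T.T == D diagonal.

    Row j of T holds the negated coefficients of the least squares regression
    of variable j on its predecessors; D holds the prediction error
    (innovation) variances.
    """
    sigma = np.asarray(sigma, dtype=float)
    m = sigma.shape[0]
    T = np.eye(m)
    d = np.empty(m)
    d[0] = sigma[0, 0]                       # first measurement has no predecessors
    for j in range(1, m):
        # normal equations: Sigma[:j, :j] phi = Sigma[:j, j]
        phi = np.linalg.solve(sigma[:j, :j], sigma[:j, j])
        T[j, :j] = -phi
        d[j] = sigma[j, j] - sigma[:j, j] @ phi   # prediction error variance
    return T, np.diag(d)
```

For an AR(1) covariance with unit variance and correlation 0.5, each measurement depends only on its immediate predecessor, so every row of \(T_i\) has a single nonzero off-diagonal entry \(-0.5\), and the innovation variances after the first equal \(1-0.5^2=0.75\).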

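Based on the modified Cholesky decomposition, the unconstrained parameters \(\mu_{ij}\), \(\phi_{ijk}\) and \(\log\sigma_{ij}^2\) are modelled by the linear regression models

$$\displaystyle \mu_{ij}={\mathbf{x}}_{ij}^{T}\boldsymbol{\beta}, \qquad \phi_{ijk}={\mathbf{z}}_{ijk}^{T}\boldsymbol{\gamma}, \qquad \log\sigma_{ij}^{2}={\mathbf{h}}_{ij}^{T}\boldsymbol{\lambda}, \qquad\qquad (13.2)$$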
where \({\mathbf{x}}_{ij}\), \({\mathbf{z}}_{ijk}\) and \({\mathbf{h}}_{ij}\) are \((p\times 1)\), \((q\times 1)\) and \((d\times 1)\) covariate vectors, and \(\boldsymbol{\beta}\), \(\boldsymbol{\gamma}\) and \(\boldsymbol{\lambda}\) are the associated regression coefficients. The covariates \({\mathbf{x}}_{ij}\), \({\mathbf{z}}_{ijk}\) and \({\mathbf{h}}_{ij}\) may contain baseline covariates, polynomials in time, their interactions, etc. For example, when modelling stationary growth curve data using polynomials in time, the explanatory variables may take the forms \({\mathbf {x}}_{ij}=(1, t_{ij}, t_{ij}^2, \ldots , t_{ij}^{p-1})^T\), \({\mathbf{z}}_{ijk}=(1, (t_{ij}-t_{ik}), (t_{ij}-t_{ik})^2, \ldots, (t_{ij}-t_{ik})^{q-1})^T\) and \({\mathbf {h}}_{ij}=(1, t_{ij}, t_{ij}^2, \ldots , t_{ij}^{d-1})^T\). An advantage of the models (13.2) is that the resulting estimators of the covariance matrices are guaranteed to be positive definite: the fitted matrix is \(\widehat{\varSigma}_i=\widehat{T}_i^{-1}\widehat{D}_i(\widehat{T}_i^{-1})^{T}\), where \(\widehat{T}_i\) is unit lower triangular and hence invertible, and \(\widehat{D}_i\) has positive diagonal entries because the innovation variances are modelled on the log scale. In this paper we allow the covariates \({\mathbf{x}}_{ij}\), \({\mathbf{z}}_{ijk}\) and \({\mathbf{h}}_{ij}\) to be high dimensional and aim to select their important subsets simultaneously. We first include all the explanatory variables of interest, and possibly their interactions, in the initial models, and then aim to remove the unnecessary explanatory variables from the models.
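The sketch below assembles \(\mu_i\) and \(\varSigma_i\) for one subject from the models (13.2), using the polynomial covariates of the example above, and then inverts the decomposition as \(\varSigma_i=T_i^{-1}D_i(T_i^{-1})^{T}\). It assumes the polynomial design only; `poly` and `joint_mean_cov` are hypothetical helper names, and the coefficient values one would pass in are arbitrary.

```python
import numpy as np

def poly(t, degree):
    """Return the vector (1, t, t^2, ..., t^degree)."""
    return t ** np.arange(degree + 1)

def joint_mean_cov(times, beta, gamma, lam):
    """Build mu_i and Sigma_i for one subject from the models (13.2).

    x_ij and h_ij are polynomials in t_ij, and z_ijk is a polynomial in the
    lag t_ij - t_ik; the degrees follow from the coefficient lengths.
    """
    times = np.asarray(times, dtype=float)
    m = len(times)
    mu = np.array([poly(t, len(beta) - 1) @ beta for t in times])
    T = np.eye(m)
    for j in range(1, m):
        for k in range(j):
            phi = poly(times[j] - times[k], len(gamma) - 1) @ gamma
            T[j, k] = -phi                          # below-diagonal entries of T_i
    # log-linear model keeps every innovation variance positive
    d = np.exp([poly(t, len(lam) - 1) @ lam for t in times])
    T_inv = np.linalg.inv(T)
    sigma = T_inv @ np.diag(d) @ T_inv.T            # Sigma_i = T^{-1} D T^{-T}
    return mu, sigma

mu, sigma = joint_mean_cov([0.0, 0.5, 1.2, 2.0],
                           beta=np.array([1.0, -0.3, 0.05]),
                           gamma=np.array([0.4, -0.1]),
                           lam=np.array([-0.5, 0.2]))
print(np.linalg.eigvalsh(sigma))                     # all eigenvalues positive
```

Because the measurement times enter only through the covariates, unequally spaced and subject-specific times (unbalanced data) need no special handling.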

13.2.2 Penalized Maximum Likelihood

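Many traditional variable selection criteria can be regarded as penalized likelihoods that balance modelling bias and estimation variance [4]. Let \(\ell(\boldsymbol{\theta})\) denote the log-likelihood function. For the joint mean and covariance models (13.2), we propose the penalized likelihood function

$$\displaystyle Q(\boldsymbol{\theta})=\ell(\boldsymbol{\theta})-n\sum_{j=1}^{p}p_{\tau^{(1)}}(|\beta_{j}|)-n\sum_{j=1}^{q}p_{\tau^{(2)}}(|\gamma_{j}|)-n\sum_{j=1}^{d}p_{\tau^{(3)}}(|\lambda_{j}|), \qquad\qquad (13.3)$$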
where \(\boldsymbol{\theta}=(\theta_1,\ldots,\theta_s)^T=(\beta_1,\ldots,\beta_p;\gamma_1,\ldots,\gamma_q;\lambda_1,\ldots,\lambda_d)^T\) with \(s=p+q+d\), and \(p_{\tau ^{(l)}}(\cdot )\) is a given penalty function with tuning parameter \(\tau^{(l)}\) \((l=1,2,3)\). Here we use the same penalty function \(p(\cdot)\) for all the regression coefficients but with different tuning parameters \(\tau^{(1)}\), \(\tau^{(2)}\) and \(\tau^{(3)}\) for the mean parameters, generalized autoregressive parameters and log-innovation variances, respectively. The functional form of \(p_\tau(\cdot)\) determines the general behavior of the estimators. Antoniadis [1] defined the hard thresholding rule for variable selection by taking the hard thresholding penalty function \(p_{\tau}(|t|)=\tau^{2}-(|t|-\tau)^{2}I(|t|<\tau)\), where \(I(\cdot)\) is the indicator function. The penalty function \(p_\tau(\cdot)\) may also be chosen as an \(L_p\) penalty. In particular, the \(L_1\) penalty \(p_\tau(|t|)=\tau|t|\) leads to the least absolute shrinkage and selection operator (Lasso) proposed by Tibshirani [11]. Fan and Li [4] suggested using the smoothly clipped absolute deviation (SCAD) penalty function, which is defined by

$$\displaystyle p_{\tau}(|t|)=\left\{\begin{array}{ll}\tau|t|, & |t|\leq\tau,\\ -\dfrac{t^{2}-2a\tau|t|+\tau^{2}}{2(a-1)}, & \tau<|t|\leq a\tau,\\ \dfrac{(a+1)\tau^{2}}{2}, & |t|>a\tau,\end{array}\right. \qquad\qquad (13.4)$$
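for some \(a>2\). This penalty function is continuous, symmetric, singular at the origin and concave on \((0,\infty)\). It improves on the Lasso by avoiding excessive bias in the estimates of large coefficients: for \(|t|>a\tau\) the penalty is constant, so large coefficients are not shrunk. Details of these penalty functions can be found in [4].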
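A minimal vectorized sketch of the three penalties discussed above follows. The function names are illustrative, and `a = 3.7` is the default value recommended by Fan and Li [4], which is also the value used in Sect. 13.4.

```python
import numpy as np

def lasso_penalty(t, tau):
    """L1 penalty tau * |t|."""
    return tau * np.abs(t)

def hard_penalty(t, tau):
    """Hard thresholding penalty tau^2 - (|t| - tau)^2 I(|t| < tau)."""
    t = np.abs(t)
    return tau**2 - (t - tau)**2 * (t < tau)

def scad_penalty(t, tau, a=3.7):
    """SCAD penalty (13.4): linear near 0, quadratic spline, then constant."""
    t = np.abs(t)
    linear = tau * t
    quad = -(t**2 - 2 * a * tau * t + tau**2) / (2 * (a - 1))
    const = (a + 1) * tau**2 / 2
    return np.where(t <= tau, linear, np.where(t <= a * tau, quad, const))

grid = np.linspace(-5, 5, 11)
print(lasso_penalty(grid, 1.0), hard_penalty(grid, 1.0), scad_penalty(grid, 1.0))
```

On such a grid the Lasso penalty grows linearly without bound, whereas the hard thresholding and SCAD penalties level off at \(\tau^2\) and \((a+1)\tau^2/2\), respectively, which is why the latter two leave large coefficients essentially unshrunk.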

The penalized maximum likelihood estimator of \(\boldsymbol{\theta}\), denoted by \(\widehat {\boldsymbol {\theta }}\), maximizes the function \(Q(\boldsymbol{\theta})\) in (13.3). With appropriate penalty functions, maximizing \(Q(\boldsymbol{\theta})\) sets some parameter estimators exactly to zero, so that the corresponding explanatory variables are automatically removed from the initial models. Hence, by maximizing \(Q(\boldsymbol{\theta})\) we select the important variables and estimate the parameters simultaneously. In Appendix A, we provide the technical details and an algorithm for calculating the penalized maximum likelihood estimator \(\widehat {\boldsymbol {\theta }}\).
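Appendix A is not reproduced here. As a rough illustration of how maximizing a SCAD-penalized likelihood sets coefficients exactly to zero, the sketch below applies the local quadratic approximation (LQA) of Fan and Li [4] to a much simpler case: a linear mean model with independent errors of unit variance, so that maximizing \(Q\) amounts to minimizing \(\tfrac12\|{\mathbf y}-X\boldsymbol\beta\|^2+n\sum_j p_\tau(|\beta_j|)\). It ignores \(\boldsymbol\gamma\) and \(\boldsymbol\lambda\) and is not necessarily the algorithm of Appendix A; the function names and the deletion threshold `eps` are our own choices.

```python
import numpy as np

def scad_derivative(t, tau, a=3.7):
    """SCAD derivative p'_tau(t) for t >= 0."""
    t = np.abs(t)
    return tau * ((t <= tau) + np.maximum(a * tau - t, 0.0) / ((a - 1) * tau) * (t > tau))

def lqa_scad_least_squares(X, y, tau, a=3.7, eps=1e-6, max_iter=200, tol=1e-8):
    """Minimize 0.5*||y - X b||^2 + n * sum_j p_tau(|b_j|) by LQA iterations."""
    n, p = X.shape
    beta = np.linalg.lstsq(X, y, rcond=None)[0]      # unpenalized starting value
    active = np.ones(p, dtype=bool)
    for _ in range(max_iter):
        active &= np.abs(beta) > eps                 # freeze collapsed coefficients at 0
        beta[~active] = 0.0
        if not active.any():
            break
        Xa, ba = X[:, active], beta[active]
        # LQA: p(|b|) ~ const + 0.5 * p'(|b0|) / |b0| * b^2 around the current b0
        weights = scad_derivative(ba, tau, a) / np.abs(ba)
        new_ba = np.linalg.solve(Xa.T @ Xa + n * np.diag(weights), Xa.T @ y)
        done = np.max(np.abs(new_ba - ba)) < tol
        beta[active] = new_ba
        if done:
            break
    beta[np.abs(beta) <= eps] = 0.0
    return beta
```

Each iteration is a ridge-type solve whose ridge weights \(p'_\tau(|\beta_j|)/|\beta_j|\) explode for small coefficients and vanish for coefficients larger than \(a\tau\); small coefficients are therefore driven to zero and dropped, while large ones are left unpenalized.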

13.2.3 Asymptotic Properties

In this subsection we consider the consistency and asymptotic normality of the penalized maximum likelihood estimator \(\widehat {\boldsymbol {\theta }}\). To emphasize its dependence on the number of subjects \(n\), we also denote it by \(\widehat {\boldsymbol {\theta }}_n\). We first assume that the number of parameters, \(s=p+q+d\), is fixed; in the next section we consider the case where \(s\) tends to infinity with \(n\). Denote the true value of \(\boldsymbol{\theta}\) by \(\boldsymbol{\theta}_0\). Without loss of generality, we assume that \(\boldsymbol {\theta }_{0}=((\boldsymbol {\theta }_{0}^{(1)})^{T},(\boldsymbol {\theta }_{0}^{(2)})^{T})^{T}\), where \(\boldsymbol {\theta }_{0}^{(1)}\) and \(\boldsymbol {\theta }_{0}^{(2)}\) are the nonzero and zero components of \(\boldsymbol{\theta}_0\), respectively; otherwise the components of \(\boldsymbol{\theta}_0\) can be reordered. Denote the dimension of \(\boldsymbol {\theta }_{0}^{(1)}\) by \(s_1\). We first show that the penalized maximum likelihood estimator \(\widehat {\boldsymbol {\theta }}_n\) exists and converges to \(\boldsymbol{\theta}_0\) at the rate \(O_p(n^{-1/2})\), the same rate as the ordinary maximum likelihood estimator. We then prove that, under certain regularity conditions, the \(\sqrt {n}\)-consistent estimator \(\widehat {\boldsymbol {\theta }}_n\) is asymptotically normal and possesses the oracle property. The results are summarized in the following two theorems, whose proofs are provided in Appendix B. To prove the theorems in this paper, we require the following regularity conditions:

- (A1) The covariates \({\mathbf{x}}_{ij}\), \({\mathbf{z}}_{ijk}\) and \({\mathbf{h}}_{ij}\) are fixed, and for each subject the number of repeated measurements, \(m_i\), is fixed \((i=1,\ldots,n;\ j=1,\ldots,m_i;\ k=1,\ldots,j-1)\).
- (A2) The parameter space is compact and the true value \(\boldsymbol{\theta}_0\) is in the interior of the parameter space.
- (A3) The design matrices \(x_i\), \(z_i\) and \(h_i\) in the joint models are all bounded, meaning that all the elements of the matrices are bounded by a single finite real number.
- (A4) The dimensions of the parameter vectors \(\boldsymbol{\beta}\), \(\boldsymbol{\gamma}\) and \(\boldsymbol{\lambda}\), that is, \(p_n\), \(q_n\) and \(d_n\), have the same order as \(s_n\).
- (A5) The nonzero components of the true parameters, \(\theta _{01}^{(1)},\ldots ,\theta _{0s_{1}}^{(1)}\), satisfy

  $$\displaystyle \min_{1\leq j\leq s_{1}}\left\{\frac{|\theta_{0j}^{(1)}|}{\tau_{n}}\right\}\rightarrow \infty \quad (\mbox{as}~ n \rightarrow \infty), $$

  where \(\tau_n\) is equal to \(\tau _n^{(1)}\), \(\tau _n^{(2)}\) or \(\tau _n^{(3)}\), depending on whether \(\theta _{0j}^{(1)}\) is a component of \(\boldsymbol{\beta}_0\), \(\boldsymbol{\gamma}_0\) or \(\boldsymbol{\lambda}_0\) \((j=1,\ldots,s_1)\).

Theorem 13.1

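Let

$$\displaystyle a_{n}=\max_{1\leq j\leq s}\left\{|p^{\prime}_{\tau_{n}}(|\theta_{0j}|)|:\theta_{0j}\neq 0\right\}, \qquad b_{n}=\max_{1\leq j\leq s}\left\{|p^{\prime\prime}_{\tau_{n}}(|\theta_{0j}|)|:\theta_{0j}\neq 0\right\},$$

where \(\boldsymbol{\theta}_0=(\theta_{01},\ldots,\theta_{0s})^T\) is the true value of \(\boldsymbol{\theta}\), and \(\tau_n\) is equal to \(\tau^{(1)}_{n}\), \(\tau^{(2)}_{n}\) or \(\tau^{(3)}_{n}\), depending on whether \(\theta_{0j}\) is a component of \(\boldsymbol{\beta}_0\), \(\boldsymbol{\gamma}_0\) or \(\boldsymbol{\lambda}_0\) \((1\leq j\leq s)\). Assume \(a_n=O_p(n^{-1/2})\), \(b_n\rightarrow 0\) and \(\tau_n\rightarrow 0\) as \(n\rightarrow\infty\). Under conditions (A1)–(A3), with probability tending to 1 there exists a local maximizer \(\widehat {\boldsymbol {\theta }}_n\) of the penalized likelihood function \(Q(\boldsymbol{\theta})\) in (13.3) such that \(\widehat {\boldsymbol {\theta }}_n\) is a \(\sqrt {n}\)-consistent estimator of \(\boldsymbol{\theta}_0\).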

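We now consider the asymptotic normality of \(\widehat {\boldsymbol{\theta}}_n\). Let

$$\displaystyle A_{n}=\mbox{diag}\left(p^{\prime\prime}_{\tau_{n}}(|\theta_{01}^{(1)}|),\ldots,p^{\prime\prime}_{\tau_{n}}(|\theta_{0s_{1}}^{(1)}|)\right), \qquad c_{n}=\left(p^{\prime}_{\tau_{n}}(|\theta_{01}^{(1)}|)\,\mbox{sgn}(\theta_{01}^{(1)}),\ldots,p^{\prime}_{\tau_{n}}(|\theta_{0s_{1}}^{(1)}|)\,\mbox{sgn}(\theta_{0s_{1}}^{(1)})\right)^{T},$$

where \(\tau_n\) has the same definition as in Theorem 13.1, and \(\theta _{0j}^{(1)}\) is the \(j\)th component of \(\boldsymbol {\theta }_{0}^{(1)}\) \((1\leq j\leq s_1)\). Denote the Fisher information matrix of \(\boldsymbol{\theta}\) by \(\mathcal {I}_n(\boldsymbol {\theta })\).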

Theorem 13.2

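Assume that the penalty function \(p_{\tau _{n}}(t)\) satisfies

$$\displaystyle \liminf_{n\rightarrow\infty}\,\liminf_{t\rightarrow 0^{+}}\frac{p^{\prime}_{\tau_{n}}(t)}{\tau_{n}}>0,$$

and that \(\bar {\mathcal {I}}_n=\mathcal {I}_n(\boldsymbol {\theta }_0)/n\) converges to a finite positive definite matrix \(\mathcal {I}(\boldsymbol {\theta }_0)\) as \(n\rightarrow\infty\). Under the same conditions as in Theorem 13.1, if \(\tau_n\rightarrow 0\) and \(\sqrt {n}\tau _{n}\rightarrow \infty \) as \(n\rightarrow\infty\), then the \(\sqrt {n}\)-consistent estimator \(\widehat {\boldsymbol {\theta }}_n=(\widehat {\boldsymbol {\theta }}^{(1)T}_n, \widehat {\boldsymbol {\theta }}^{(2)T}_n)^{T}\) in Theorem 13.1 must satisfy \(\widehat {\boldsymbol {\theta }}^{(2)}_n=\mathbf{0}\) and

$$\displaystyle \sqrt{n}\,(\bar{\mathcal{I}}^{(1)}_{n})^{-1/2}\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)\left\{\widehat{\boldsymbol{\theta}}^{(1)}_{n}-\boldsymbol{\theta}^{(1)}_{0}+\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)^{-1}c_{n}\right\}\rightarrow\mathcal{N}_{s_{1}}(\mathbf{0},I_{s_{1}})$$

in distribution, where \(\bar {\mathcal {I}}^{(1)}_n\) is the \((s_1\times s_1)\) submatrix of \(\bar {\mathcal {I}}_n\) corresponding to the nonzero components \(\boldsymbol {\theta }_0^{(1)}\), and \(I_{s_1}\) is the \((s_1\times s_1)\) identity matrix.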

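Note that for the SCAD penalty we can show

$$\displaystyle p^{\prime}_{\tau}(t)=\tau\left\{I(t\leq\tau)+\frac{(a\tau-t)_{+}}{(a-1)\tau}I(t>\tau)\right\}$$

for \(t>0\), where \(a>2\) and \((x)_+=xI(x>0)\). Since \(\tau_n\rightarrow 0\) as \(n\rightarrow\infty\), for \(n\) large enough \(a\tau_n\) falls below the smallest nonzero \(|\theta_{0j}|\), so both \(p'_{\tau_n}\) and \(p''_{\tau_n}\) vanish at every nonzero true component. Hence \(a_n=0\) and \(b_n=0\), so that \(c_n=\mathbf{0}\) and \(A_n=0\), when the sample size \(n\) is large enough. It can be verified that in this case the conditions of Theorems 13.1 and 13.2 are all satisfied. Accordingly, we must have

$$\displaystyle \sqrt{n}\,(\bar{\mathcal{I}}^{(1)}_{n})^{1/2}\left(\widehat{\boldsymbol{\theta}}^{(1)}_{n}-\boldsymbol{\theta}^{(1)}_{0}\right)\rightarrow\mathcal{N}_{s_{1}}(\mathbf{0},I_{s_{1}})$$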
in distribution. This means that the estimator \(\widehat {\boldsymbol {\theta }}_n^{(1)}\) has the same sampling properties as if we knew \(\boldsymbol {\theta }_{0}^{(2)}=\mathbf {0}\) in advance. In other words, the penalized maximum likelihood estimator of \(\boldsymbol{\theta}\) based on the SCAD penalty can correctly identify the true model as if it were known in advance. This is the so-called oracle property of Fan and Li [4]. Similarly, the parameter estimator based on the hard thresholding penalty also possesses the oracle property. For the Lasso penalty, however, the parameter estimator does not have the oracle property, for the following reason. Since \(p_{\tau_n}(t)=\tau_n t\) for \(t>0\), we have \(p^{\prime }_{\tau _n}(t)=\tau _n\), so \(a_n=\tau_n\) and the assumption \(a_n=O_p(n^{-1/2})\) in Theorem 13.1 implies \(\tau_n=O_p(n^{-1/2})\), that is, \(\sqrt {n}\tau _{n}=O_{p}(1)\). On the other hand, Theorem 13.2 requires \(\sqrt {n}\tau _{n}\rightarrow \infty \) as \(n\rightarrow\infty\), which contradicts \(\sqrt {n}\tau _{n}=O_{p}(1)\). Hence the oracle property cannot be guaranteed in this case.
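The contrast between SCAD and the Lasso can be seen numerically. The sketch below uses hypothetical nonzero true values and the illustrative choice \(\tau_n=n^{-1/3}\) (so that \(\tau_n\to 0\) and \(\sqrt{n}\tau_n\to\infty\)) and computes \(\sqrt{n}\,a_n\), with \(a_n\) taken, as in Theorem 13.1, to be the largest \(|p'_{\tau_n}(|\theta_{0j}|)|\) over the nonzero true components. All names are ours.

```python
import numpy as np

def scad_derivative(t, tau, a=3.7):
    """p'_tau(t) for t >= 0: tau on [0, tau], linear decay to 0 on (tau, a*tau]."""
    t = np.abs(t)
    return tau * ((t <= tau) + np.maximum(a * tau - t, 0.0) / ((a - 1) * tau) * (t > tau))

def lasso_derivative(t, tau):
    """p'_tau(t) = tau for the L1 penalty, whatever the size of t."""
    return tau * np.ones_like(t, dtype=float)

def a_n(theta_nonzero, tau, deriv):
    """Largest |p'_tau(|theta_0j|)| over the nonzero true components."""
    return np.max(np.abs(deriv(np.abs(theta_nonzero), tau)))

theta_nonzero = np.array([1.0, -0.5, 2.0])     # hypothetical nonzero true values
for n in [100, 1_000, 10_000, 100_000]:
    tau_n = n ** (-1 / 3)                        # tau_n -> 0, sqrt(n) * tau_n -> inf
    print(n,
          np.sqrt(n) * a_n(theta_nonzero, tau_n, scad_derivative),
          np.sqrt(n) * a_n(theta_nonzero, tau_n, lasso_derivative))
```

For SCAD, \(\sqrt{n}\,a_n\) drops to exactly zero once \(a\tau_n\) falls below the smallest nonzero \(|\theta_{0j}|\) (here 0.5, which has happened by \(n=1000\), where \(a\tau_n=0.37\)), whereas for the Lasso \(\sqrt{n}\,a_n=\sqrt{n}\tau_n=n^{1/6}\) keeps growing, so \(a_n=O(n^{-1/2})\) fails under this choice of \(\tau_n\).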

13.2.4 Standard Error Formula

As a consequence of Theorem 13.2, the asymptotic covariance matrix of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) is

$$\displaystyle \mbox{Cov}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})=\frac{1}{n}\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)^{-1}\bar{\mathcal{I}}^{(1)}_{n}\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)^{-1}, \qquad\qquad (13.5)$$

so that the asymptotic standard errors of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) follow directly. However, \(\bar {\mathcal {I}}^{(1)}_n\) and \(A_n\) are evaluated at the true value \(\boldsymbol {\theta }_0^{(1)}\), which is unknown. A natural choice is to evaluate \(\bar {\mathcal {I}}^{(1)}_n\) and \(A_n\) at the estimator \(\widehat {\boldsymbol {\theta }}^{(1)}_n\), so that an estimator of the asymptotic covariance matrix of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) is obtained through (13.5).

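Corresponding to the partition of \(\boldsymbol{\theta}_0\), we write \(\boldsymbol{\theta}=(\boldsymbol{\theta}^{(1)T},\boldsymbol{\theta}^{(2)T})^T\). Denote

$$\displaystyle \ell^{\prime}(\boldsymbol{\theta}^{(1)})=\frac{\partial\ell(\boldsymbol{\theta})}{\partial\boldsymbol{\theta}^{(1)}}, \qquad \ell^{\prime\prime}(\boldsymbol{\theta}^{(1)})=\frac{\partial^{2}\ell(\boldsymbol{\theta})}{\partial\boldsymbol{\theta}^{(1)}\partial\boldsymbol{\theta}^{(1)T}}, \qquad \mbox{evaluated at}~\boldsymbol{\theta}=(\boldsymbol{\theta}^{(1)T},\mathbf{0}^{T})^{T},$$

so that at the true value \(\boldsymbol {\theta }_0=(\boldsymbol {\theta }^{(1)T}_0, \mathbf {0}^T)^T\). Also, let

$$\displaystyle \varSigma_{\tau}(\boldsymbol{\theta}^{(1)})=\mbox{diag}\left\{\frac{p^{\prime}_{\tau_{n}}(|\theta_{1}^{(1)}|)}{|\theta_{1}^{(1)}|},\ldots,\frac{p^{\prime}_{\tau_{n}}(|\theta_{s_{1}}^{(1)}|)}{|\theta_{s_{1}}^{(1)}|}\right\}.$$

Using the observed information matrix to approximate the Fisher information matrix, the covariance matrix of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) can be estimated by

$$\displaystyle \widehat{\mbox{Cov}}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})=\left\{\ell^{\prime\prime}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})-n\varSigma_{\tau}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})\right\}^{-1}\widehat{\mbox{Cov}}\{\ell^{\prime}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})\}\left\{\ell^{\prime\prime}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})-n\varSigma_{\tau}(\widehat{\boldsymbol{\theta}}^{(1)}_{n})\right\}^{-1},$$

where \(\widehat {\mbox{Cov}}\{\ell '(\widehat {\boldsymbol {\theta }}^{(1)}_n)\}\) is the covariance of \(\ell'(\boldsymbol{\theta}^{(1)})\) evaluated at \(\boldsymbol {\theta }^{(1)}=\widehat {\boldsymbol {\theta }}^{(1)}_n\).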
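A sketch of a sandwich covariance estimator of the form used by Fan and Li [4], namely \(\{\ell''-n\varSigma_\tau\}^{-1}\,\widehat{\mbox{Cov}}(\ell')\,\{\ell''-n\varSigma_\tau\}^{-1}\) with \(\varSigma_\tau=\mbox{diag}\{p'_\tau(|\widehat\theta_j|)/|\widehat\theta_j|\}\) over the nonzero estimates. The caller supplies the Hessian, the score covariance and the penalty derivative; computing those for the joint model is not shown, and the function name `sandwich_cov` is hypothetical.

```python
import numpy as np

def sandwich_cov(hessian, score_cov, penalty_deriv, theta1, n):
    """Sandwich estimate {l'' - n Sigma_tau}^{-1} Cov(l') {l'' - n Sigma_tau}^{-1}.

    hessian       : (s1, s1) observed Hessian of the log-likelihood at theta1
    score_cov     : (s1, s1) estimated covariance of the score at theta1
    penalty_deriv : vectorized callable t -> p'_tau(t)
    theta1        : (s1,) nonzero penalized estimates
    n             : number of subjects
    """
    theta1 = np.asarray(theta1, dtype=float)
    sigma_tau = np.diag(penalty_deriv(np.abs(theta1)) / np.abs(theta1))
    bread = np.linalg.inv(hessian - n * sigma_tau)
    return bread @ score_cov @ bread

def standard_errors(cov):
    """Square roots of the diagonal of a covariance matrix."""
    return np.sqrt(np.diag(cov))
```

As a sanity check, with zero penalty and a Gaussian linear model with unit error variance (Hessian \(-X^TX\), score covariance \(X^TX\)) the formula reduces to the familiar \((X^TX)^{-1}\). For SCAD with large nonzero estimates, \(p'_\tau\) vanishes and the estimator likewise reduces to the unpenalized sandwich.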

13.2.5 Choosing the Tuning Parameters

The penalty function \(p_{\tau ^{(l)}}(\cdot )\) involves the tuning parameter \(\tau^{(l)}\) \((l=1,2,3)\), which controls the amount of penalization. We may use K-fold cross-validation or generalized cross-validation [4, 11] to choose the most appropriate tuning parameters. For fast computation, we prefer K-fold cross-validation, which works as follows. First, we randomly split the full dataset \(\mathcal {D}\) into \(K\) subsets of about the same size, denoted by \(\mathcal {D}^{v}\) \((v=1,\ldots,K)\). For each \(v\), we use the data in \(\mathcal {D}-\mathcal {D}^{v}\) to estimate the parameters and \(\mathcal {D}^{v}\) to validate the model. We measure the performance of each fit by the log-likelihood: for each \(\boldsymbol{\tau}=(\tau^{(1)},\tau^{(2)},\tau^{(3)})^T\), the K-fold likelihood-based cross-validation criterion is defined by

$$\displaystyle \mbox{CV}(\boldsymbol{\tau})=\sum_{v=1}^{K}\sum_{i\in I_{v}}\left\{\log|\widehat{\varSigma}_{i}^{-v}|+({\mathbf{y}}_{i}-X_{i}\widehat{\boldsymbol{\beta}}^{-v})^{T}(\widehat{\varSigma}_{i}^{-v})^{-1}({\mathbf{y}}_{i}-X_{i}\widehat{\boldsymbol{\beta}}^{-v})\right\},$$

where \(I_v\) is the index set of the subjects in \(\mathcal {D}^{v}\), \(X_i=({\mathbf{x}}_{i1},\ldots,{\mathbf{x}}_{im_i})^T\) is the mean design matrix of subject \(i\), and \(\widehat {\boldsymbol {\beta }}^{-v}\) and \(\widehat {\varSigma }_{i}^{-v}\) are the estimators of the mean parameter \(\boldsymbol{\beta}\) and the covariance matrix \(\varSigma_i\) obtained from the training data \(\mathcal {D}-\mathcal {D}^{v}\). Each summand is, up to an additive constant, minus twice the log-likelihood contribution of subject \(i\), so we choose the tuning parameter \(\boldsymbol{\tau}\) by minimizing \(\mbox{CV}(\boldsymbol{\tau})\). In general, we may take \(K=5\) or \(K=10\).
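A sketch of the K-fold likelihood-based criterion, assuming a hypothetical `fit(train, tau)` routine that returns a function giving \((\widehat\mu_i,\widehat\varSigma_i)\) for a held-out subject; the fitting itself (maximizing (13.3)) is not shown, and the dictionary layout of a subject is also an assumption. Folds are formed over subjects, so all repeated measurements of a subject stay together.

```python
import numpy as np

def neg2_loglik(y, mu, sigma):
    """-2 log-likelihood of one subject, dropping the constant m * log(2 * pi)."""
    r = y - mu
    _, logdet = np.linalg.slogdet(sigma)
    return logdet + r @ np.linalg.solve(sigma, r)

def cv_score(subjects, tau, fit, K=5, seed=0):
    """K-fold likelihood-based CV(tau), splitting subjects rather than observations.

    subjects : list of dicts, each with at least key 'y' (hypothetical layout)
    fit      : hypothetical callable (train_subjects, tau) -> predict, where
               predict(subject) returns (mu_i, Sigma_i) for that subject
    """
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(subjects)), K)
    total = 0.0
    for v, test_idx in enumerate(folds):
        train = [subjects[i] for u, f in enumerate(folds) if u != v for i in f]
        predict = fit(train, tau)
        for i in test_idx:
            mu, sigma = predict(subjects[i])
            total += neg2_loglik(subjects[i]['y'], mu, sigma)
    return total

def choose_tau(subjects, tau_grid, fit, K=5, seed=0):
    """Return the candidate in tau_grid with the smallest CV score, and all scores."""
    scores = [cv_score(subjects, tau, fit, K, seed) for tau in tau_grid]
    return tau_grid[int(np.argmin(scores))], scores
```

Every candidate is scored on the same fold split (fixed `seed`), so differences in the criterion reflect the tuning parameters rather than the random partition. In practice each entry of `tau_grid` would be a triple \((\tau^{(1)},\tau^{(2)},\tau^{(3)})\).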

13.3 Variable Selection when the Number of Parameters \(s=s_n\rightarrow\infty\)

In the previous section, we assumed that the numbers of parameters in \(\boldsymbol{\beta}\), \(\boldsymbol{\gamma}\) and \(\boldsymbol{\lambda}\), i.e., \(p\), \(q\) and \(d\), and therefore \(s\), are fixed. In some circumstances, however, it is not uncommon for the number of explanatory variables to increase with the sample size. In this section we consider the case where the number of parameters \(s_n\) tends to infinity as the sample size \(n\) tends to infinity, and we study the asymptotic properties of the penalized maximum likelihood estimator in this setting.

As before, we assume that \(\boldsymbol {\theta }_{0}=(\boldsymbol {\theta }_{0}^{(1)T}, \boldsymbol {\theta }_{0}^{(2)T})^{T}\) is the true value of \(\boldsymbol{\theta}\), where \(\boldsymbol {\theta }_{0}^{(1)}\) and \(\boldsymbol {\theta }_{0}^{(2)}\) are the nonzero and zero components of \(\boldsymbol{\theta}_0\), respectively. We denote the dimension of \(\boldsymbol{\theta}_0\) by \(s_n\), which now increases with the sample size \(n\). As in the previous section, we first show that there exists a consistent penalized maximum likelihood estimator \(\widehat {\boldsymbol {\theta }}_n\) that converges to \(\boldsymbol{\theta}_0\) at the rate \(O_{p}(\sqrt {s_{n}/n})\). We then show that the \(\sqrt {n/s_{n}}\)-consistent estimator \(\widehat {\boldsymbol {\theta }}_n\) is asymptotically normal and possesses the oracle property.

Theorem 13.3

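Let

$$\displaystyle a_{n}^{*}=\max_{1\leq j\leq s_{n}}\left\{|p^{\prime}_{\tau_{n}}(|\theta_{0j}|)|:\theta_{0j}\neq 0\right\}, \qquad b_{n}^{*}=\max_{1\leq j\leq s_{n}}\left\{|p^{\prime\prime}_{\tau_{n}}(|\theta_{0j}|)|:\theta_{0j}\neq 0\right\},$$

where \(\boldsymbol {\theta }_0=(\theta _{01},\ldots ,\theta _{0s_n})^T\) is the true value of \(\boldsymbol{\theta}\), and \(\tau_n\) is equal to \(\tau ^{(1)}_{n}\), \(\tau ^{(2)}_{n}\) or \(\tau ^{(3)}_{n}\), depending on whether \(\theta_{0j}\) is a component of \(\boldsymbol{\beta}_0\), \(\boldsymbol{\gamma}_0\) or \(\boldsymbol{\lambda}_0\) \((1\leq j\leq s_n)\). Assume \(a_{n}^{*}=O_{p}(n^{-1/2})\), \(b_{n}^{*}\rightarrow 0\), \(\tau_n\rightarrow 0\) and \(s_{n}^{4}/n\rightarrow 0\) as \(n\rightarrow\infty\). Under conditions (A1)–(A5), with probability tending to one there exists a local maximizer \(\widehat {\boldsymbol {\theta }}_n\) of the penalized likelihood function \(Q(\boldsymbol{\theta})\) in (13.3) such that \(\widehat {\boldsymbol {\theta }}_n\) is a \(\sqrt {n/s_{n}}\)-consistent estimator of \(\boldsymbol{\theta}_0\).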

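In what follows we consider the asymptotic normality of the estimator \(\widehat {\boldsymbol {\theta }}_n\). Denote the number of nonzero components of \(\boldsymbol{\theta}_0\) by \(s_{1n}\ (\leq s_n)\). Let

$$\displaystyle A_{n}=\mbox{diag}\left(p^{\prime\prime}_{\tau_{n}}(|\theta_{01}^{(1)}|),\ldots,p^{\prime\prime}_{\tau_{n}}(|\theta_{0s_{1n}}^{(1)}|)\right), \qquad c_{n}=\left(p^{\prime}_{\tau_{n}}(|\theta_{01}^{(1)}|)\,\mbox{sgn}(\theta_{01}^{(1)}),\ldots,p^{\prime}_{\tau_{n}}(|\theta_{0s_{1n}}^{(1)}|)\,\mbox{sgn}(\theta_{0s_{1n}}^{(1)})\right)^{T},$$

where \(\tau_n\) is equal to \(\tau ^{(1)}_{n}\), \(\tau ^{(2)}_{n}\) or \(\tau ^{(3)}_{n}\), depending on whether \(\theta _{0j}^{(1)}\) is a component of \(\boldsymbol{\beta}_0\), \(\boldsymbol{\gamma}_0\) or \(\boldsymbol{\lambda}_0\), and \(\theta _{0j}^{(1)}\) is the \(j\)th component of \(\boldsymbol {\theta }_{0}^{(1)}\) \((1\leq j\leq s_{1n})\). Denote the Fisher information matrix of \(\boldsymbol{\theta}\) by \(\mathcal {I}_n(\boldsymbol {\theta })\).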

Theorem 13.4

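Assume that the penalty function \(p_{\tau _{n}}(t)\) satisfies

$$\displaystyle \liminf_{n\rightarrow\infty}\,\liminf_{t\rightarrow 0^{+}}\frac{p^{\prime}_{\tau_{n}}(t)}{\tau_{n}}>0,$$

and that \(\bar {\mathcal {I}}_n=\mathcal {I}_n(\boldsymbol {\theta }_0)/n\) converges to a finite positive definite matrix \(\mathcal {I}(\boldsymbol {\theta }_0)\) as \(n\rightarrow\infty\). Under the same conditions as in Theorem 13.3, if \(\tau_n\rightarrow 0\), \(s_{n}^{5}/n\rightarrow 0\) and \(\tau _n\sqrt {n/s_{n}}\rightarrow \infty \) as \(n\rightarrow\infty\), then the \(\sqrt {n/s_{n}}\)-consistent estimator \(\widehat {\boldsymbol {\theta }}_n=(\widehat {\boldsymbol {\theta }}^{(1)T}_n, \widehat {\boldsymbol {\theta }}^{(2)T}_n)^{T}\) in Theorem 13.3 must satisfy \(\widehat {\boldsymbol {\theta }}^{(2)}_n=\mathbf{0}\) and

$$\displaystyle \sqrt{n}\,M_{n}(\bar{\mathcal{I}}^{(1)}_{n})^{-1/2}\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)\left\{\widehat{\boldsymbol{\theta}}^{(1)}_{n}-\boldsymbol{\theta}^{(1)}_{0}+\left(\bar{\mathcal{I}}^{(1)}_{n}+A_{n}\right)^{-1}c_{n}\right\}\rightarrow\mathcal{N}_{k}(\mathbf{0},\boldsymbol{G})$$

in distribution, where \(\bar {\mathcal {I}}^{(1)}_n\) is the \((s_{1n}\times s_{1n})\) submatrix of \(\bar {\mathcal {I}}_n\) corresponding to the nonzero components \(\boldsymbol {\theta }_0^{(1)}\), \(M_n\) is a \((k\times s_{1n})\) matrix satisfying \(M_{n}M_{n}^{T}\rightarrow \boldsymbol {G}\) as \(n\rightarrow\infty\), \(\boldsymbol{G}\) is a \((k\times k)\) positive definite matrix, and \(k\ (\leq s_{1n})\) is a constant.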

The proofs of Theorems 13.3 and 13.4 are provided in Appendix B. As in the fixed-dimensional setting, it can be verified that the SCAD and hard thresholding penalty functions satisfy all the conditions of Theorems 13.3 and 13.4. In this case we have

$$\displaystyle \sqrt{n}\,M_{n}(\bar{\mathcal{I}}^{(1)}_{n})^{1/2}\left(\widehat{\boldsymbol{\theta}}^{(1)}_{n}-\boldsymbol{\theta}^{(1)}_{0}\right)\rightarrow\mathcal{N}_{k}(\mathbf{0},\boldsymbol{G})$$

in distribution. That is, the estimator \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) has the same sampling properties as if we knew \(\boldsymbol {\theta }_{0}^{(2)}=\mathbf{0}\) in advance. In other words, the estimation procedures based on the SCAD and hard thresholding penalties have the oracle property. However, an \(L_1\)-penalized maximum likelihood estimator such as the Lasso does not have this property. Based on Theorem 13.4, an estimator of the asymptotic covariance of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) can be constructed as in the fixed-dimensional case; the details are omitted.

13.4 Real Data Analysis

In this section, we apply the proposed procedure to the well-known CD4+ cell data, details of which can be found in [3]. The human immunodeficiency virus (HIV) causes AIDS by reducing a person’s ability to fight infection. HIV attacks an immune cell called the CD4+ cell, which orchestrates the body’s immune response to infectious agents. An uninfected individual usually has around 1100 cells per millilitre of blood. After infection, the number of CD4+ cells decreases over time, so an infected person’s CD4+ cell count can be used to monitor disease progression. The data set we analyzed consists of 369 HIV-infected men. Altogether there are 2376 CD4+ cell counts, with several repeated measurements made for each individual at different times over a period of approximately eight and a half years.

For this unbalanced longitudinal data set, several explanatory variables are recorded, including \(X_1\) = time, \(X_2\) = age, \(X_3\) = smoking habit (the number of packs of cigarettes smoked per day), \(X_4\) = recreational drug use (1, yes; 0, no), \(X_5\) = number of sexual partners, and \(X_6\) = score on the Center for Epidemiologic Studies Depression (CES-D) scale. The objectives of our analysis are (a) to identify covariates that really affect the CD4+ cell numbers, in the sense that they are statistically significant in either the mean or the covariance models, and (b) to estimate the average time course for the HIV-infected men, taking account of measurement errors in the CD4+ cell collection. Ye and Pan [13] analyzed the CD4+ count data with a focus on the second objective and included no explanatory variables other than time. Following [13], we use three polynomials in time, one of degree 6 and two cubics, to model the mean \(\mu_{ij}\), the generalized autoregressive parameters \(\phi_{ijk}\) and the log-innovation variances \(\log \sigma _{ij}^{2}\), respectively. In addition, the explanatory variables \(X_2,\ldots,X_6\) above and the intercept \(X_0\) are included in the initial models for selection purposes. We consider the ordinary maximum likelihood estimator and the penalized maximum likelihood estimators based on the SCAD, Lasso and hard thresholding penalty functions. The unknown tuning parameters \(\tau^{(l)}\) \((l=1,2,3)\) of the penalty functions are chosen by the 5-fold cross-validation procedure described in Sect. 13.2.5, and the resulting values are summarized in Table 13.1. Note that the SCAD penalty function (13.4) also involves another parameter \(a\); here we choose \(a=3.7\), as suggested by Fan and Li [4].

For the mean, generalized autoregressive parameters and log-innovation variances, the estimated regression coefficients and their standard errors (in parentheses) under the different penalized estimation methods are presented in Tables 13.2, 13.3, and 13.4. Note that in Table 13.3, \(\gamma_1,\ldots,\gamma_4\) correspond to the coefficients of the cubic polynomial in time lag, \(\gamma_5\) is associated with the time-independent covariate \(X_2\), and the other coefficients \(\gamma_6,\ldots,\gamma_{13}\) correspond to the time-dependent covariates \(X_3,\ldots,X_6\) measured at two different time points, denoted by \((X_{31}, X_{32}),\ldots,(X_{61}, X_{62})\).

From Tables 13.2, 13.3, and 13.4, it is clear that for the mean structure the estimated regression coefficients of the sixth-degree polynomial in time are statistically significant. For the generalized autoregressive parameters and the innovation variances, the estimated regression coefficients of the cubic polynomials in time are significant. This confirms the conclusion drawn by Ye and Pan [13]. Furthermore, Table 13.2 shows that there is little evidence of an association between age and immune response, whereas smoking habit and recreational drug use have significant positive effects on the CD4+ numbers. In addition, the number of sexual partners seems to have little effect on the immune response, although it shows some evidence of a negative association. There is also a negative association between depression symptoms (CES-D score) and immune response.

Interestingly, Table 13.3 clearly indicates that, apart from the cubic polynomial in time lag, none of the covariates has a significant influence on the generalized autoregressive parameters, implying that these parameters are characterized by the cubic polynomial in time lag alone. For the log-innovation variances, however, Table 13.4 shows that in addition to the cubic polynomial in time, smoking habit, recreational drug use and the number of sexual partners do have significant effects, implying that the innovation variances, and therefore the within-subject covariances, are not homogeneous but depend on the covariates of interest. Finally, we note that in this data example the SCAD, Lasso and hard thresholding penalty based methods perform very similarly in terms of the selected variables.

13.5 Simulation Study

In this section we conduct a simulation study to assess the small-sample performance of the proposed procedures. We simulate 100 subjects, each with five observations drawn from the multivariate normal distribution \(\mathcal {N}_5(\mu _i, \varSigma _{i})\), where the mean \(\mu_i\) and the within-subject covariance matrix \(\varSigma_i\) are formed by the joint models (13.2) within the framework of the modified Cholesky decomposition. We choose the true values of the parameters in the mean, generalized autoregressive parameters and log-innovation variances to be \(\boldsymbol{\beta}=(3, 0, 0, -2, 1, 0, 0, 0, 0, -4)^T\), \(\boldsymbol{\gamma}=(-4, 0, 0, 2, 0, 0, 0)^T\) and \(\boldsymbol{\lambda}=(0, 1, 0, 0, 0, -2, 0)^T\), respectively. We form the mean covariates \({\mathbf {x}}_{ij}=(x_{ijt})_{t=1}^{10}\) by drawing random samples from the multivariate normal distribution with mean 0 and an AR(1) covariance matrix with \(\sigma^2=1\) and \(\rho=0.5\) \((i=1,\ldots,100;\ j=1,\ldots,5)\). We then form the covariates \({\mathbf {z}}_{ijk}=(x_{ijt}-x_{ikt})^{7}_{t=1}\) and \({\mathbf {h}}_{ij}=(x_{ijt})^{7}_{t=1}\) for the generalized autoregressive parameters and the log-innovation variances. Using these values, the mean \(\mu_i\) and covariance matrix \(\varSigma_i\) are constructed through the modified Cholesky decomposition. The responses \({\mathbf{y}}_i\) are then drawn from the multivariate normal distribution \(\mathcal {N}(\mu _i, \varSigma _{i})\) \((i=1,\ldots,100)\).
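A sketch of the data-generating process just described, under our reading of it: \({\mathbf{x}}_{ij}\) has 10 components with AR(1) correlation across components, and \({\mathbf{z}}_{ijk}\) and \({\mathbf{h}}_{ij}\) use the first seven components of \({\mathbf{x}}_{ij}\) and \({\mathbf{x}}_{ik}\). Responses are drawn as \({\mathbf y}_i=\mu_i+T_i^{-1}\boldsymbol\varepsilon_i\) with \(\boldsymbol\varepsilon_i\sim\mathcal N({\mathbf 0},D_i)\), which has covariance \(T_i^{-1}D_i(T_i^{-1})^T=\varSigma_i\). Function names and the seed are illustrative.

```python
import numpy as np

BETA = np.array([3, 0, 0, -2, 1, 0, 0, 0, 0, -4], dtype=float)
GAMMA = np.array([-4, 0, 0, 2, 0, 0, 0], dtype=float)
LAMBDA = np.array([0, 1, 0, 0, 0, -2, 0], dtype=float)

def ar1_cov(dim, sigma2=1.0, rho=0.5):
    """AR(1) covariance matrix sigma2 * rho^|s - t|."""
    idx = np.arange(dim)
    return sigma2 * rho ** np.abs(idx[:, None] - idx[None, :])

def simulate(n=100, m=5, seed=0):
    rng = np.random.default_rng(seed)
    L = np.linalg.cholesky(ar1_cov(len(BETA)))
    data = []
    for _ in range(n):
        x = rng.standard_normal((m, len(BETA))) @ L.T   # rows are x_ij, AR(1) across t
        mu = x @ BETA
        T = np.eye(m)
        for j in range(1, m):
            for k in range(j):
                z = x[j, :7] - x[k, :7]                 # z_ijk from the first 7 components
                T[j, k] = -(z @ GAMMA)                  # T_i holds -phi_ijk
        d = np.exp(x[:, :7] @ LAMBDA)                   # innovation variances from h_ij
        eps = np.sqrt(d) * rng.standard_normal(m)       # eps_i ~ N(0, D_i)
        y = mu + np.linalg.solve(T, eps)                # y_i = mu_i + T_i^{-1} eps_i
        T_inv = np.linalg.inv(T)
        sigma = T_inv @ np.diag(d) @ T_inv.T
        data.append({'x': x, 'y': y, 'mu': mu, 'sigma': sigma})
    return data
```

Drawing through the innovations avoids forming and factorizing \(\varSigma_i\), which can be poorly conditioned here because \(\gamma_1=-4\) makes the autoregressive coefficients large.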

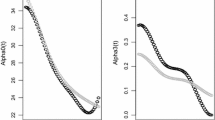

In the simulation study, 1000 random samples are generated by the above data generation procedure. For each simulated data set, we compute the ordinary maximum likelihood estimators and the penalized maximum likelihood estimators with the SCAD, Lasso and hard thresholding penalty functions. The tuning parameters \(\tau^{(l)}\), \(l = 1, 2, 3\), of the penalty functions are chosen by 5-fold cross-validation. For each method, the average number of zero coefficients over the 1000 simulated data sets is reported in Table 13.5, where ‘True’ is the average number of zero regression coefficients that are correctly estimated as zero and ‘Wrong’ is the average number of non-zero regression coefficients that are erroneously set to zero. The non-zero parameter estimates and their standard errors are given in Table 13.6. These results show that the SCAD penalty method outperforms the Lasso and hard thresholding approaches in terms of the rate of correct variable selection, and thereby substantially reduces model uncertainty and complexity.
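The ‘True’ and ‘Wrong’ summaries in Table 13.5, and the subject-level splitting needed for 5-fold cross-validation, can be sketched as follows. This is an illustrative sketch rather than the authors' code: the function names, the tolerance `tol` below which an estimate counts as zero, and the choice to assign whole subjects (rather than single observations) to folds are assumptions. The two coefficient vectors in the usage lines are made up for illustration and are not results from the chapter.

```python
import random


def count_zeros(estimate, truth, tol=1e-8):
    """Return (true_zeros, wrong_zeros) for one fitted coefficient vector.

    true_zeros:  truly zero coefficients estimated as zero ('True').
    wrong_zeros: non-zero coefficients erroneously set to zero ('Wrong').
    An estimate with |b| <= tol is treated as zero (tol is an assumption).
    """
    true_zeros = sum(1 for b, b0 in zip(estimate, truth) if b0 == 0 and abs(b) <= tol)
    wrong_zeros = sum(1 for b, b0 in zip(estimate, truth) if b0 != 0 and abs(b) <= tol)
    return true_zeros, wrong_zeros


def average_counts(estimates, truth, tol=1e-8):
    """Average the ('True', 'Wrong') counts over simulated data sets."""
    counts = [count_zeros(e, truth, tol) for e in estimates]
    n = len(counts)
    return (sum(c[0] for c in counts) / n, sum(c[1] for c in counts) / n)


def subject_folds(n_subjects, k=5, seed=0):
    """Split subject indices into k folds, keeping all repeated
    measurements of a subject in the same fold."""
    idx = list(range(n_subjects))
    random.Random(seed).shuffle(idx)
    return [sorted(idx[f::k]) for f in range(k)]


beta_true = [3, 0, 0, -2, 1, 0, 0, 0, 0, -4]
# Hypothetical fitted vectors (made up, not taken from Table 13.5).
fits = [
    [2.9, 0, 0, -2.1, 0.9, 0, 0, 0, 0, -3.9],   # all 6 zeros found, none wrong
    [2.9, 0, 0.1, -2.1, 0, 0, 0, 0, 0, -3.9],   # 5 zeros found, 1 wrong
]
print(average_counts(fits, beta_true))  # (5.5, 0.5)
```

Keeping each subject's observations in one fold respects the within-subject correlation when the held-out likelihood is used to choose \(\tau^{(l)}\).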

13.6 Discussion

Within the framework of joint modelling of mean and covariance structures for longitudinal data, we have proposed a variable selection method based on penalized likelihood approaches. Like the mean, the covariance structures may depend on various explanatory variables of interest, so simultaneous variable selection in the mean and covariance structures is essential for avoiding modelling bias and reducing model complexity.

We have shown that, under mild conditions, the proposed penalized maximum likelihood estimators of the parameters in the mean and covariance models are consistent and asymptotically normally distributed. We have also shown that the SCAD and hard thresholding penalty based estimation approaches have the oracle property; that is, they can correctly identify the true models as if the true models were known in advance. In contrast, the Lasso penalty based estimation method does not have the oracle property. We also considered the case in which the number of explanatory variables goes to infinity with the sample size, and obtained results similar to those for a fixed number of variables.
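One ingredient of the oracle property discussed above is near-unbiasedness for large coefficients: the penalty derivative \(p'_{\tau}(|t|)\) should vanish when |t| is large, so that large coefficients are not shrunk. The sketch below compares this derivative for the three penalties, using the forms given by Fan and Li [4] with a = 3.7 as suggested there; that the chapter uses exactly these parameterizations is an assumption, and the function names are hypothetical.

```python
def lasso_penalty(t, tau):
    return tau * abs(t)


def lasso_deriv(t, tau):
    """p'_tau(|t|) for the Lasso: constant tau."""
    return tau


def hard_penalty(t, tau):
    """Hard thresholding penalty: tau^2 - (|t| - tau)^2 * I(|t| < tau)."""
    t = abs(t)
    return tau ** 2 - (t - tau) ** 2 if t < tau else tau ** 2


def hard_deriv(t, tau):
    """p'_tau(|t|) = 2 (tau - |t|) I(|t| < tau)."""
    t = abs(t)
    return 2.0 * (tau - t) if t < tau else 0.0


def scad_penalty(t, tau, a=3.7):
    """SCAD penalty: quadratic spline with knots at tau and a * tau."""
    t = abs(t)
    if t <= tau:
        return tau * t
    if t <= a * tau:
        return -(t * t - 2.0 * a * tau * t + tau * tau) / (2.0 * (a - 1.0))
    return (a + 1.0) * tau * tau / 2.0


def scad_deriv(t, tau, a=3.7):
    """p'_tau(|t|) = tau I(|t| <= tau) + (a tau - |t|)_+ / (a - 1) I(|t| > tau)."""
    t = abs(t)
    if t <= tau:
        return tau
    return max(a * tau - t, 0.0) / (a - 1.0)


for t in (0.5, 2.0, 5.0):
    print(t, lasso_deriv(t, 1.0), hard_deriv(t, 1.0), scad_deriv(t, 1.0))
```

With τ = 1, at t = 5 the SCAD and hard thresholding derivatives are both 0, whereas the Lasso derivative remains 1, so the Lasso keeps shrinking large coefficients by a constant amount.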

The proposed method differs from [7], which addressed only variable selection in the mean model, without modelling the generalized autoregressive parameters and innovation variances. It also differs from [12], where a different decomposition of the covariance matrix, namely a moving average coefficient based model, was employed and variable selection was discussed under that decomposition with a fixed number of explanatory variables. In contrast, the models and methods proposed in this paper are more flexible, interpretable and practical.

References

[1] Antoniadis, A.: Wavelets in statistics: a review (with discussion). J. Ital. Stat. Assoc. 6, 97–144 (1997)

[2] Chiu, T.Y.M., Leonard, T., Tsui, K.W.: The matrix-logarithm covariance model. J. Am. Stat. Assoc. 91, 198–210 (1996)

[3] Diggle, P.J., Heagerty, P.J., Liang, K.Y., Zeger, S.L.: Analysis of Longitudinal Data, 2nd edn. Oxford University Press, Oxford (2002)

[4] Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348–1360 (2001)

[5] Fan, J., Peng, H.: Nonconcave penalized likelihood with a diverging number of parameters. Ann. Stat. 32, 928–961 (2004)

[6] Frank, I.E., Friedman, J.H.: A statistical view of some chemometrics regression tools. Technometrics 35, 109–148 (1993)

[7] Lee, J., Kim, S., Jhong, J., Koo, J.: Variable selection and joint estimation of mean and covariance models with an application to eQTL data. Comput. Math. Methods Med. 2018, 13 (2018)

[8] Pan, J., MacKenzie, G.: Model selection for joint mean-covariance structures in longitudinal studies. Biometrika 90, 239–244 (2003)

[9] Pourahmadi, M.: Joint mean-covariance models with applications to longitudinal data: unconstrained parameterisation. Biometrika 86, 677–690 (1999)

[10] Pourahmadi, M.: Maximum likelihood estimation of generalised linear models for multivariate normal covariance matrix. Biometrika 87, 425–435 (2000)

[11] Tibshirani, R.: Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. B 58, 267–288 (1996)

[12] Xu, D., Zhang, Z., Wu, L.: Joint variable selection of mean-covariance model for longitudinal data. Open J. Stat. 3, 27–35 (2013)

[13] Ye, H.J., Pan, J.: Modelling of covariance structures in generalized estimating equations for longitudinal data. Biometrika 93, 927–941 (2006)

[14] Zou, H.: The adaptive Lasso and its oracle properties. J. Am. Stat. Assoc. 101, 1418–1429 (2006)

Acknowledgements

We would like to thank the editors and an anonymous referee for their very constructive comments and suggestions, which have significantly improved the paper. This research is supported by a research grant from the Royal Society of the UK (R124683).


Appendices

Appendix A: Penalized Maximum Likelihood Estimation

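Firstly, note that the first two derivatives of the log-likelihood function ℓ(θ) are continuous. Around a given point \(\boldsymbol{\theta}_0\), the log-likelihood function can be approximated by

$$\displaystyle \begin{aligned}\ell(\boldsymbol{\theta})\approx \ell(\boldsymbol{\theta}_0)+\ell^{\prime}(\boldsymbol{\theta}_0)^{T}(\boldsymbol{\theta}-\boldsymbol{\theta}_0)+\frac{1}{2}(\boldsymbol{\theta}-\boldsymbol{\theta}_0)^{T}\ell^{\prime\prime}(\boldsymbol{\theta}_0)(\boldsymbol{\theta}-\boldsymbol{\theta}_0).\end{aligned}$$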

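Also, given an initial value \(t_0\neq 0\), we can approximate the penalty function \(p_{\tau}(|t|)\) by a quadratic function [4], based on the approximation of its derivative

$$\displaystyle \begin{aligned}\bigl[p_{\tau}(|t|)\bigr]^{\prime}=p_{\tau}^{\prime}(|t|)\,\mbox{sgn}(t)\approx \frac{p_{\tau}^{\prime}(|t_0|)}{|t_0|}\,t.\end{aligned}$$

In other words,

$$\displaystyle \begin{aligned}p_{\tau}(|t|)\approx p_{\tau}(|t_0|)+\frac{1}{2}\,\frac{p_{\tau}^{\prime}(|t_0|)}{|t_0|}\,(t^{2}-t_0^{2}).\end{aligned}$$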

Therefore, the penalized likelihood function (13.3) can be locally approximated, apart from a constant term, by

where

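where \(\boldsymbol{\theta} = (\theta_1, \ldots, \theta_s)^T = (\beta_1, \ldots, \beta_p, \gamma_1, \ldots, \gamma_q, \lambda_1, \ldots, \lambda_d)^T\) and \(\boldsymbol{\theta}_0 = (\theta_{01}, \ldots, \theta_{0s})^T = (\beta_{01}, \ldots, \beta_{0p}, \gamma_{01}, \ldots, \gamma_{0q}, \lambda_{01}, \ldots, \lambda_{0d})^T\). Accordingly, maximizing the quadratic function Q(θ) leads to the iterative solution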

Secondly, since the data are normally distributed, the log-likelihood function ℓ(θ) can be written as

where

Therefore, the resulting score functions are

where

where

According to [13], the Fisher information matrix \(\mathcal {I}_n(\boldsymbol {\theta })\) must be block diagonal. In other words, \(\mathcal {I}_n(\boldsymbol {\theta })=\mbox{diag}(\mathcal {I}_{11}, \mathcal {I}_{22}, \mathcal {I}_{33})\), where

By using the Fisher information matrix to approximate the observed information matrix, we obtain the following iterative solution

Since \(\mathcal {I}_n(\boldsymbol {\theta })\) is block diagonal, the above iterative solution is equivalent to

where all the relevant quantities on the right hand side are evaluated at θ = θ 0, and

Finally, the following algorithm summarizes the computation of the penalized maximum likelihood estimators of the parameters in the joint mean and covariance models.

Algorithm

0. Take the ordinary least squares estimators (without penalty) \(\boldsymbol{\beta}^{(0)}\), \(\boldsymbol{\gamma}^{(0)}\) and \(\boldsymbol{\lambda}^{(0)}\) of β, γ and λ as their initial values.

1. Given the current values \(\{\boldsymbol{\beta}^{(s)}, \boldsymbol{\gamma}^{(s)}, \boldsymbol{\lambda}^{(s)}\}\), update

$$\displaystyle \begin{aligned} \begin{array}{rcl} \boldsymbol{r}_{i}^{(s)}={\mathbf{y}}_{i}-{\mathbf{x}}_{i}\boldsymbol{\beta}^{(s)},~\phi_{ijk}^{(s)}={\mathbf{z}}_{ijk}^{T}\boldsymbol{\gamma}^{(s)},~\log[(\sigma_{ij}^{2})^{(s)}]={\mathbf{h}}_{ij}^{T}\boldsymbol{\lambda}^{(s)}, \end{array} \end{aligned} $$

and then use the above iterative solutions to update γ and λ until convergence. Denote the updated values by \(\boldsymbol{\gamma}^{(s+1)}\) and \(\boldsymbol{\lambda}^{(s+1)}\).

2. For the updated values \(\boldsymbol{\gamma}^{(s+1)}\) and \(\boldsymbol{\lambda}^{(s+1)}\), form

$$\displaystyle \begin{aligned}\phi_{ijk}^{(s+1)}={\mathbf{z}}_{ijk}^{T}\boldsymbol{\gamma}^{(s+1)}, ~ \mbox{and} ~\log [(\sigma_{ij}^{2})^{(s+1)}]={\mathbf{h}}_{ij}^{T}\boldsymbol{\lambda}^{(s+1)},\end{aligned}$$

and construct

$$\displaystyle \begin{aligned}\varSigma_{i}^{(s+1)}=(T_{i}^{(s+1)})^{-1}D_{i}^{(s+1)}[(T_{i}^{(s+1)})^{T}]^{-1}.\end{aligned}$$

Then update β according to

$$\displaystyle \begin{aligned} \begin{array}{rcl} \boldsymbol{\beta}^{(s+1)}&\displaystyle =&\displaystyle \left\{\sum_{i=1}^{n}{\mathbf{x}}_{i}^{T}(\varSigma_{i}^{(s+1)})^{-1}{\mathbf{x}}_{i}+ n\varSigma_{\tau^{(1)}}(\boldsymbol{\beta}^{(s)})\right\}^{-1} \left\{\sum_{i=1}^{n}{\mathbf{x}}_{i}^{T}(\varSigma_{i}^{(s+1)})^{-1}{\mathbf{y}}_{i}\right\}. \end{array} \end{aligned} $$

3. Repeat Steps 1 and 2 until a convergence criterion is satisfied. For example, convergence can be declared when the \(L_2\)-norm of the difference between the parameter vectors from two successive iterations is sufficiently small.
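Step 2 can be sketched for the SCAD penalty as a penalized generalized least squares solve. This is an illustrative sketch, not the authors' code. It uses the identity \(\varSigma_i^{-1}=T_i^{T}D_i^{-1}T_i\), which follows from \(\varSigma_i=T_i^{-1}D_i[(T_i)^{T}]^{-1}\), so no covariance matrix is inverted, and it takes \(\varSigma_{\tau^{(1)}}(\boldsymbol{\beta})\) to be the diagonal matrix with entries \(p'_{\tau^{(1)}}(|\beta_j|)/|\beta_j|\), as in the local quadratic approximation of Fan and Li [4]. The small constant `eps` added to \(|\beta_j|\) to avoid division by zero is an assumption (a perturbed variant of that approximation), and all function names are hypothetical.

```python
def scad_deriv(t, tau, a=3.7):
    """SCAD derivative p'_tau(|t|) in the form of Fan and Li (2001)."""
    t = abs(t)
    return tau if t <= tau else max(a * tau - t, 0.0) / (a - 1.0)


def apply_T(rows, phi):
    """Multiply by T (unit lower triangular, T[j][k] = -phi[j][k] for k < j)."""
    out = []
    for j, row in enumerate(rows):
        new = list(row)
        for k in range(j):
            new = [u - phi[j][k] * v for u, v in zip(new, rows[k])]
        out.append(new)
    return out


def gls_system(X, Y, PHI, SIG2):
    """A = sum_i x_i^T Sigma_i^{-1} x_i and b = sum_i x_i^T Sigma_i^{-1} y_i."""
    p = len(X[0][0])
    A = [[0.0] * p for _ in range(p)]
    b = [0.0] * p
    for x, y, phi, s2 in zip(X, Y, PHI, SIG2):
        tx = apply_T(x, phi)                     # T_i x_i
        ty = apply_T([[v] for v in y], phi)      # T_i y_i
        for j in range(len(y)):
            w = 1.0 / s2[j]                      # j-th diagonal entry of D_i^{-1}
            for u in range(p):
                b[u] += w * tx[j][u] * ty[j][0]
                for v in range(p):
                    A[u][v] += w * tx[j][u] * tx[j][v]
    return A, b


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (M[i][n] - sum(M[i][k] * z[k] for k in range(i + 1, n))) / M[i][i]
    return z


def beta_update(X, Y, PHI, SIG2, beta_old, tau, eps=1e-8):
    """One Step-2 update: {A + n Sigma_tau(beta_old)}^{-1} b, n = number of subjects."""
    A, b = gls_system(X, Y, PHI, SIG2)
    n = len(X)
    for j, bj in enumerate(beta_old):
        A[j][j] += n * scad_deriv(bj, tau) / (abs(bj) + eps)
    return solve(A, b)
```

Steps 1 and 3 would wrap this update in an outer loop that re-estimates γ and λ between β updates and stops once the \(L_2\)-norm of the change in the parameter vector is small. Note that in this sketch a coefficient with \(|\beta_j| > a\tau\) receives no ridge term at all, which is the mechanism behind the unbiasedness of large SCAD estimates.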

Appendix B: Proofs of Theorems

Proof of Theorem 13.1

Note that \(p_{\tau _n}(0)=0\) and \(p_{\tau _n}(\cdot )\ge 0\). Obviously, we have

We consider \(K_1\) first. By Taylor expansion, we have

where \(\boldsymbol{\theta}^*\) lies between \(\boldsymbol{\theta}_0\) and \(\boldsymbol{\theta}_0+n^{-1/2}\mathbf{u}\). Note that \(n^{-1/2}\|\ell'(\boldsymbol{\theta}_0)\|=O_p(1)\). Applying the Cauchy-Schwarz inequality, we obtain

According to Chebyshev’s inequality, we know that for any ε > 0,

so that \(n^{-1}\|\ell''(\boldsymbol{\theta}_0)-E\ell''(\boldsymbol{\theta}_0)\|=o_p(1)\). It then follows directly that

Therefore we conclude that \(K_{12}\) dominates \(K_{11}\) uniformly in \(\|\mathbf{u}\|=C\) if the constant C is sufficiently large.

We then study the term \(K_2\). It follows from Taylor expansion and the Cauchy-Schwarz inequality that

Since it is assumed that \(a_n=O_p(n^{-1/2})\) and \(b_n\rightarrow 0\), \(K_{12}\) dominates \(K_2\) if we choose a sufficiently large C. Therefore, for any given ε > 0, there exists a large constant C such that

implying that there exists a local maximizer \(\widehat {\boldsymbol {\theta }}_n\) that is a \(\sqrt {n}\)-consistent estimator of \(\boldsymbol{\theta}_0\). This completes the proof of Theorem 13.1. □
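The structure of the preceding argument can be summarized in the standard form of Fan and Li [4]. This is a sketch under assumptions not confirmed by the recovered text: the penalized likelihood is taken to be \(Q(\boldsymbol{\theta})=\ell(\boldsymbol{\theta})-n\sum_{j=1}^{s}p_{\tau_n}(|\theta_j|)\), the first \(s_1\) components of \(\boldsymbol{\theta}_0\) are the non-zero ones, \(a_n=\max_{j\le s_1}p'_{\tau_n}(|\theta_{0j}|)\), \(b_n=\max_{j\le s_1}|p''_{\tau_n}(|\theta_{0j}|)|\), and \(K_1\), \(K_{11}\), \(K_{12}\), \(K_2\) are defined as below.

```latex
\begin{aligned}
Q(\boldsymbol{\theta}_0+n^{-1/2}\mathbf{u})-Q(\boldsymbol{\theta}_0) &\le K_1+K_2,\\
K_1 &= \ell(\boldsymbol{\theta}_0+n^{-1/2}\mathbf{u})-\ell(\boldsymbol{\theta}_0)
     = \underbrace{n^{-1/2}\,\ell'(\boldsymbol{\theta}_0)^{T}\mathbf{u}}_{K_{11}}
     + \underbrace{\tfrac{1}{2}\,n^{-1}\,\mathbf{u}^{T}\ell''(\boldsymbol{\theta}^{*})\,\mathbf{u}}_{K_{12}},\\
K_2 &= -n\sum_{j=1}^{s_1}\bigl\{p_{\tau_n}(|\theta_{0j}+n^{-1/2}u_j|)-p_{\tau_n}(|\theta_{0j}|)\bigr\},\\
|K_{11}| &\le n^{-1/2}\|\ell'(\boldsymbol{\theta}_0)\|\,\|\mathbf{u}\| = O_p(1)\,C,\\
K_{12} &= -\tfrac{1}{2}\,\mathbf{u}^{T}\{n^{-1}\mathcal{I}_n(\boldsymbol{\theta}_0)\}\,\mathbf{u}\,\{1+o_p(1)\},\\
|K_2| &\le \sqrt{s_1}\,n^{1/2}a_n\,\|\mathbf{u}\| + \tfrac{1}{2}\,b_n\,\|\mathbf{u}\|^{2}\{1+o(1)\}.
\end{aligned}
```

The first inequality drops the components with \(\theta_{0j}=0\), which is allowed because \(p_{\tau_n}(0)=0\) and \(p_{\tau_n}(\cdot)\ge 0\). If the limiting information matrix is positive definite, \(K_{12}\) is negative and of order \(C^2\), while \(K_{11}=O_p(C)\) and, when \(a_n=O(n^{-1/2})\) and \(b_n\to 0\), \(K_2=O(C)+o(C^2)\); hence the difference is negative on \(\|\mathbf{u}\|=C\) for large C.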

Proof of Theorem 13.2

First, we prove that under the conditions of Theorem 13.2, for any given \(\boldsymbol{\theta}^{(1)}\) satisfying \(\boldsymbol {\theta }^{(1)}-\boldsymbol {\theta }^{(1)}_0=O_p(n^{-1/2})\) and any constant C > 0, we have

In fact, for any \(\theta_j\) (\(j = s_1+1, \ldots, s\)), using Taylor’s expansion we obtain

where \(\boldsymbol{\theta}^*\) lies between \(\boldsymbol{\theta}\) and \(\boldsymbol{\theta}_0\). By the standard argument, we know that

Note that \(\|\boldsymbol{\theta}-\boldsymbol{\theta}_0\|=O_p(n^{-1/2})\). We then have

According to the assumption in Theorem 13.2, we obtain

so that

Therefore Q(θ) achieves its maximum at \(\boldsymbol{\theta}=((\boldsymbol{\theta}^{(1)})^T, \mathbf{0}^T)^T\), which proves the first part of Theorem 13.2. □

Second, we discuss the asymptotic normality of \(\widehat {\boldsymbol {\theta }}^{(1)}_n\). From Theorem 13.1 and the first part of Theorem 13.2, there exists a penalized maximum likelihood estimator \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) that is a \(\sqrt {n}\)-consistent local maximizer of the function \(Q\{((\boldsymbol{\theta}^{(1)})^T, \mathbf{0}^T)^T\}\). The estimator \(\widehat {\boldsymbol {\theta }}^{(1)}_n\) must satisfy

In other words, we have

Using the Liapounov form of the multivariate central limit theorem, we obtain

in distribution. Note that

it follows immediately from Slutsky’s theorem that

in distribution. The proof of Theorem 13.2 is complete. □

Proof of Theorem 13.3

Let \(\alpha_n=(n/s_n)^{-1/2}\). Note that \(p_{\tau _{n}}(0)=0\) and \(p_{\tau _{n}}(\cdot )\ge 0\). We then have

Using Taylor’s expansion, we obtain

where \(\boldsymbol {\theta }_{0}^{*}\) lies between \(\boldsymbol{\theta}_0\) and \(\boldsymbol{\theta}_0+\alpha_n\mathbf{u}\). Note that \(\|\ell '(\boldsymbol {\theta }_{0})\|=O_{p}(\sqrt {ns_{n}})\). By the Cauchy-Schwarz inequality, we conclude that

According to Chebyshev’s inequality, for any ε > 0 we have

which implies that \(\frac {s_{n}}{n}\left \|\ell ^{\prime \prime }(\boldsymbol {\theta }_{0})-E\ell ^{\prime \prime }(\boldsymbol {\theta }_{0})\right \|=o_{p}(1)\). It then follows that

Therefore \(K_{12}\) dominates \(K_{11}\) uniformly in \(\|\mathbf{u}\|=C\) for a sufficiently large constant C.

We now turn to \(K_2\). It follows from Taylor’s expansion that

Since \(b_{n}^{*}\rightarrow 0\) as \(n\rightarrow \infty\), \(K_{12}\) dominates \(K_2\) if a sufficiently large constant C is chosen. In other words, for any given ε > 0 there exists a large constant C such that

as long as n is large enough. This implies that there exists a local maximizer \(\widehat {\boldsymbol {\theta }}_{n}\) in the ball \(\{\boldsymbol{\theta}_0+\alpha_n\mathbf{u} : \|\mathbf{u}\|\le C\}\) such that \(\widehat {\boldsymbol {\theta }}_{n}\) is a \(\sqrt {n/s_{n}}\)-consistent estimator of \(\boldsymbol{\theta}_0\). The proof of Theorem 13.3 is complete. □

Proof of Theorem 13.4

The proof of Theorem 13.4 is similar to that of Theorem 13.2, so we only sketch it here. First, it is easy to show that under the conditions of Theorem 13.4, for any given \(\boldsymbol{\theta}^{(1)}\) satisfying \(\|\boldsymbol {\theta }^{(1)}-\boldsymbol {\theta }^{(1)}_{0}\|=O_p((n/s_{n})^{-1/2})\) and any constant C, the following equality holds

Based on this fact and Theorem 13.3, there exists a \(\sqrt {n/s_{n}}\)-consistent estimator \(\widehat {\boldsymbol {\theta }}_{n}^{(1)}\) that is a local maximizer of \(Q\{((\boldsymbol{\theta}^{(1)})^T, \mathbf{0}^T)^T\}\). Let \(\bar {\mathcal {I}}_{n}^{(1)}=\mathcal {I}_{n}^{(1)}/n\). As in the proof of Theorem 13.2, we can show that

so that

Using the Lindeberg-Feller central limit theorem, we can show that

has an asymptotic multivariate normal distribution. The result of Theorem 13.4 then follows immediately from Slutsky’s theorem. The proof of Theorem 13.4 is complete. □


© 2020 Springer Nature Switzerland AG


Cite this chapter

Kou, C., Pan, J. (2020). Variable Selection in Joint Mean and Covariance Models. In: Holgersson, T., Singull, M. (eds) Recent Developments in Multivariate and Random Matrix Analysis. Springer, Cham. https://doi.org/10.1007/978-3-030-56773-6_13


DOI: https://doi.org/10.1007/978-3-030-56773-6_13


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-56772-9

Online ISBN: 978-3-030-56773-6

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)