Abstract

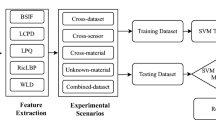

Fingerprint recognition systems are now widely deployed in daily life. However, spoofing with artificial replicas made from materials such as silica, gelatin, Play-Doh, and clay is one of the most common ways of attacking these systems. Fingerprint liveness detection (FLD) techniques have been proposed to counter this threat. In this paper, we propose a novel structure to discriminate genuine fingerprints from fake ones. First, to describe the subtle differences between them and make full use of each algorithm, we extract three types of fine-grained texture features: SIFT, LBP, and HOG. Next, we develop a feature fusion rule comprising five fusion operations to better integrate these features. Finally, the fused features are fed into an SVM classifier for classification. Experimental results on the benchmark LivDet 2013 fingerprint dataset indicate that the classification performance of our method exceeds that of other FLD methods proposed in recent literature.

Keywords

1 Introduction

With the rapid development of multimedia and digital imaging technology, it has recently become possible to collect large numbers of high-resolution images using sophisticated digital cameras or other high-resolution sensors. Because biometrics are convenient and secure, biometric recognition techniques have broad application prospects in identity authentication and network security. There are a variety of biometric authentication methods, including fingerprint, face, and iris recognition, among which fingerprint recognition is the most common. Fingerprints are characterized by uniqueness, stability, and invariability. Moreover, unlike traditional authentication methods, fingerprint recognition requires no password to be remembered. Accordingly, a personal identity can be associated with a fingerprint [1], and the authenticity of a user’s identity can be verified by comparing his or her fingerprint features with features previously saved in a database. Fingerprints are claimed to be safe and hard to steal or copy. However, with the increasing demand for high-level security in smart devices, research has pointed out that fingerprint authentication devices are unsafe and easily spoofed by artificial replicas made from wax, moldable plastic, Play-Doh, clay, or gelatin [2] when the replica is faithful enough and its texture is clear enough. Thus, the security of fingerprint recognition systems is threatened. To cope with this issue, fingerprint liveness detection (FLD) [3] methods have been proposed.

SIFT [4] (Scale-Invariant Feature Transform) is invariant to rotation, scale, and brightness, which is conducive to an effective and efficient representation of target texture information. However, it fails to extract features from smooth targets. LBP [5] (Local Binary Pattern) is, to some extent, invariant to rotation and gray level. However, it is not scale invariant. HOG [6] (Histogram of Oriented Gradients) can represent the structural texture information of an edge (gradient) and can reflect local shape information. Because it neglects the influence of light brightness in the image, the feature extracted by HOG contains a lot of noise. In addition, owing to its block and cell processing, the relationship between the center pixel and adjacent pixels is well represented. To describe the subtle differences between genuine and fake fingerprints and take advantage of each feature algorithm, this paper proposes a novel structure for fingerprint liveness detection.

The remainder of this paper is organized as follows. Section 2 reviews recent related work on FLD. Section 3 presents the methodology. Experiments are reported in Sect. 4. Finally, conclusions are drawn in Sect. 5.

2 Related Work

Fingerprint recognition systems are now widely deployed in mobile devices such as tablets, laptops, and smartphones. However, a common problem with these devices is that they neglect to verify the authenticity of a fingerprint before identification. That is, they cannot distinguish between genuine and fake fingerprint images [7], which has led to the emergence of FLD technology to address spoofing attacks. Over the past several years, researchers have devoted considerable effort to differentiating genuine fingerprints from fake ones by analyzing different physical or physiological traits [7]. A review of the FLD literature shows that existing methods fall into two categories: hardware-based and software-based. Some recent studies have shown that hardware-based anti-spoofing methods can discriminate genuine fingerprints from fake ones by measuring physiological characteristics such as pulse oximetry, skin resistance, blood oxygen, temperature, and electrocardiogram signals. Although these measurements can separate genuine from fake fingerprints, the drawback is that the instruments are bulky and expensive and require trained professionals to perform the measurements [8]. Hence, to save costs and simplify operation, an ideal anti-spoofing method uses as little additional hardware as possible and assigns all the work to the computer; accordingly, software-based FLD methods that need no additional sensors have been proposed. Moreover, later maintenance of a software-based detection strategy is also convenient, requiring only a simple software upgrade.

Texture is an important visual trait that describes the homogeneity of an image and reflects the slowly changing arrangement of its surface structure. Genuine and fake fingerprints exhibit different texture phenomena, such as morphology, smoothness, and orientation; hence, texture representation has been used in FLD and is the main subject of this paper. At present, how to better represent the texture pattern of fingerprints and achieve higher classification accuracy is a hotspot of FLD research. Abhyankar et al. [9] proposed an FLD method combining multiresolution texture analysis with ridge frequency analysis. They used different texture information to quantify how the pixel value distribution of a fingerprint changes when its physical structure changes. Two feature extraction methods, a multiresolution texture analysis and a ridge frequency analysis, are utilized, and the combined features are fed to a fuzzy C-means classifier to distinguish genuine fingers from fake ones. In 2012, Yuan [10] assessed the recently introduced Local Phase Quantization (LPQ) algorithm and applied it to FLD. A series of experiments was performed with several common feature extraction algorithms, including LPQ, LBP, and variants of LBP [11], and the results demonstrated that LPQ-based detection performed best.

3 Methodology

3.1 Feature Extraction

To distinguish genuine fingerprints from fake ones, the key is to extract features that differentiate them. Accordingly, this paper designs a novel fingerprint liveness detection structure that uses three popular feature extractors, SIFT, LBP, and HOG, to capture the subtle differences between them. On the one hand, these three methods extract different fine-grained (local) texture features of fingerprints; on the other hand, after feature fusion, the advantages of each algorithm can be fully utilized to supply detailed information that a single feature cannot capture. Experimental results also demonstrate that feature fusion performs better than a single feature extractor.

SIFT: The scale-invariant feature transform is a local feature descriptor that can detect the key subtle differences between genuine and fake fingerprints. As a stable local feature descriptor, SIFT remains unchanged when an image is rotated or zoomed, and even when its intensity changes. First, the image is transformed across scales to obtain a multi-scale space representation sequence, and the main contours of the scale space are extracted from this sequence and used as a feature vector, so that key points at edges and corners can be detected at different resolutions. Then, to ensure that the detected key points are local extremum points in both the scale space and the two-dimensional image space, each pixel is compared with its adjacent pixels. In addition, stable extremum points are extracted across different scales to guarantee the scale invariance of the key points. Moreover, to make the key points invariant to image angle and rotation, a direction is assigned to each extremum from its gradient. Finally, the key point descriptor is generated as a distinctive vector by dividing the pixel area around the key point into blocks and calculating the gradient histogram within each block. This vector is an abstract representation of the image (Fig. 1).

In the above calculation, the scale space L(x, y, σ) denotes the convolution of the original image I(x, y) with a variable-scale 2D Gaussian function G(x, y, σ). The two-dimensional Gaussian function is as follows:

$$G(x, y, \sigma) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right) \tag{1}$$

The scale space of the image is obtained by convolving the two-dimensional Gaussian function with the original image. The scale space expression is as follows:

$$L(x, y, \sigma) = G(x, y, \sigma) * I(x, y) \tag{2}$$

where * denotes the convolution operation.
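The scale-space construction can be illustrated with a minimal sketch. It assumes the standard sampled form of the 2D Gaussian, truncated at three standard deviations and renormalized so that the kernel sums to one, with replicated image borders; the function names `gaussian_kernel` and `scale_space` and the toy image are illustrative choices, not the implementation used in the experiments. Plain Python lists are used so that the sketch runs without third-party packages.

```python
import math

def gaussian_kernel(sigma, radius=None):
    """Sampled 2D Gaussian G(x, y, sigma), renormalized to sum to 1."""
    if radius is None:
        # Assumption: truncate the kernel at three standard deviations.
        radius = max(1, int(math.ceil(3 * sigma)))
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
              for y in range(-radius, radius + 1)]
    total = sum(sum(row) for row in kernel)
    # Renormalizing replaces the analytic 1 / (2 * pi * sigma^2) factor.
    return [[v / total for v in row] for row in kernel]

def scale_space(image, sigma):
    """L(x, y, sigma) = G(x, y, sigma) * I(x, y), borders replicated."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                yy = min(max(y + dy, 0), height - 1)
                for dx in range(-radius, radius + 1):
                    xx = min(max(x + dx, 0), width - 1)
                    # The kernel is symmetric, so correlation equals convolution.
                    acc += kernel[dy + radius][dx + radius] * image[yy][xx]
            out[y][x] = acc
    return out

# Toy 5 x 5 image with a single bright pixel, blurred at three scales.
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 100.0
pyramid = {sigma: scale_space(image, sigma) for sigma in (0.5, 1.0, 2.0)}
```

As σ grows, the bright pixel is spread over a wider neighbourhood, which is the behaviour the multi-scale representation relies on.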

LBP: LBP [12, 13] is an operator used to describe the local texture features of images, and it has the notable advantages of rotation invariance and gray-level invariance. Its goal is to measure the local contrast of the fingerprint and describe the local texture information of the image.

Before constructing the local texture, we preprocess the given image, convert it to a grey-scale image, and analyze the relationship between each central pixel and its adjacent pixels. The LBP operator is defined on a window of size 3 × 3, with the central pixel of the window as the threshold. The central pixel is compared with its 8 adjacent pixels: if an adjacent pixel is larger than the central pixel, its position is marked as 1; otherwise it is marked as 0. Arranging these 8 marks in sequence yields an 8-bit binary number, which is taken as the new value of the pixel at the center of the window to reflect the texture information. It is worth noting that there are 256 possible LBP values, each of which can represent a different texture.
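The 3 × 3 LBP operator described above can be sketched as follows. The clockwise bit ordering starting at the top-left neighbour is an assumption (the text only says the marks are arranged in sequence), and the names `lbp_code` and `lbp_image` are illustrative.

```python
def lbp_code(window):
    """8-bit LBP code of a 3 x 3 window given as three rows."""
    center = window[1][1]
    # Assumption: neighbours are read clockwise from the top-left corner.
    neighbours = [window[0][0], window[0][1], window[0][2],
                  window[1][2], window[2][2], window[2][1],
                  window[2][0], window[1][0]]
    code = 0
    for value in neighbours:
        # Following the text: 1 if the neighbour is larger than the center.
        bit = 1 if value > center else 0
        code = (code << 1) | bit
    return code

def lbp_image(image):
    """LBP code for every interior pixel of a grey-scale image."""
    height, width = len(image), len(image[0])
    return [[lbp_code([row[x - 1:x + 2] for row in image[y - 1:y + 2]])
             for x in range(1, width - 1)]
            for y in range(1, height - 1)]

window = [[6, 5, 2],
          [7, 6, 1],
          [9, 8, 7]]
code = lbp_code(window)  # bits 00001111, i.e. 15
```

In this window only the four neighbours on the bottom and left exceed the central value 6, so the last four bits are set.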

Because LBP records the difference between the central pixel and its adjacent pixels, the LBP value changes little when a change in lighting causes the gray values of all these pixels to increase or decrease together. Therefore, LBP can be considered insensitive to changes in illumination. Since LBP only encodes the texture information of an image, histograms of the LBP values are further computed and used as the feature for texture analysis. Generally, the image after the LBP operation is divided into a grid of square regions, such as 4 × 4, 10 × 10, or 16 × 16, which yields 16, 100, or 256 histograms, respectively, representing the features of the fingerprint image.
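A minimal sketch of the region-wise histogram step is given below. It assumes that each region's 256-bin histogram is normalized by the region size and that the histograms are concatenated in row-major order; neither detail is specified in the text, and the name `lbp_region_histograms` and the toy code map are hypothetical.

```python
def lbp_region_histograms(codes, grid=4, bins=256):
    """Concatenate one normalized LBP histogram per region of a grid x grid split."""
    height, width = len(codes), len(codes[0])
    feature = []
    for gy in range(grid):
        y0, y1 = gy * height // grid, (gy + 1) * height // grid
        for gx in range(grid):
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            hist = [0] * bins
            for y in range(y0, y1):
                for x in range(x0, x1):
                    hist[codes[y][x]] += 1
            total = (y1 - y0) * (x1 - x0)
            # Assumption: normalize so regions of different size are comparable.
            feature.extend((c / total if total else 0.0) for c in hist)
    return feature

# Toy 8 x 8 map of LBP codes standing in for a real LBP image.
codes = [[(x * y) % 256 for x in range(8)] for y in range(8)]
feature = lbp_region_histograms(codes, grid=4)  # 16 histograms of 256 bins
```

With a 4 × 4 grid the feature has 16 × 256 entries, which matches the count of 16 histograms mentioned above.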

HOG: HOG [14] is short for histogram of oriented gradients, and it consists of local features formed by calculating the gradient histograms of a given image. Since HOG captures the structural features of edges (gradients), it can describe local shape information and is therefore a commonly used feature descriptor. The quantization of position and direction space can restrain the influence of translation and rotation to some extent. Moreover, normalizing the histogram within each local region partially offsets the influence of illumination changes.

The detailed implementation steps are as follows:

- Step 1: Before calculation, the image is converted to grayscale and gamma-corrected to reduce the influence of local shadows and lighting changes. To some extent, this also suppresses noise.

- Step 2: The horizontal and vertical gradients of the image are calculated by convolving the image with a gradient filter.

- Step 3: The gradient magnitude and direction at each pixel are calculated.

- Step 4: The image is divided into cells of 4 × 4 pixels, and a histogram of gradients is computed over the pixels in each cell.

- Step 5: Each block is formed from 4 × 4 cells, and the HOG features of each block are normalized to make them robust to light, shadow, and edge changes. Finally, the block features are concatenated to obtain the final feature of the image, which is used for subsequent classification.
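The five steps can be sketched compactly as follows. Several details are assumptions rather than settings reported in the paper: a gamma of 0.5, a centred-difference gradient filter, 9 unsigned orientation bins over 0 to 180 degrees, magnitude-weighted voting, non-overlapping blocks, and L2 block normalization. The name `hog_features` and the toy image are illustrative.

```python
import math

def hog_features(image, cell=4, block=4, bins=9, gamma=0.5):
    """Simplified HOG for a grey-scale image with values in [0, 255]."""
    height, width = len(image), len(image[0])
    # Step 1: gamma correction (assumed exponent) on values scaled to [0, 1].
    img = [[(v / 255.0) ** gamma for v in row] for row in image]
    cells_y, cells_x = height // cell, width // cell
    hists = [[[0.0] * bins for _ in range(cells_x)] for _ in range(cells_y)]
    for y in range(cells_y * cell):
        for x in range(cells_x * cell):
            # Step 2: horizontal and vertical centred differences.
            gx = img[y][min(x + 1, width - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, height - 1)][x] - img[max(y - 1, 0)][x]
            # Step 3: gradient magnitude and unsigned direction in degrees.
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            index = min(int(angle / (180.0 / bins)), bins - 1)
            # Step 4: magnitude-weighted vote into the pixel's cell histogram.
            hists[y // cell][x // cell][index] += magnitude
    # Step 5: L2-normalize each block of cells and concatenate the blocks.
    feature = []
    for by in range(0, cells_y - block + 1, block):
        for bx in range(0, cells_x - block + 1, block):
            vec = [v for cy in range(by, by + block)
                   for cx in range(bx, bx + block)
                   for v in hists[cy][cx]]
            norm = math.sqrt(sum(v * v for v in vec) + 1e-12)
            feature.extend(v / norm for v in vec)
    return feature

# Toy 16 x 16 image with a vertical edge: dark left half, bright right half.
image = [[0] * 8 + [255] * 8 for _ in range(16)]
feature = hog_features(image)  # one block of 4 x 4 cells, 9 bins per cell
```

For this toy image every non-zero gradient is horizontal, so all of the feature's energy falls into the first orientation bin of the cells that straddle the edge.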

Figure 2 shows visualizations of the HOG features of genuine and fake fingerprints. The genuine fingerprint features are evenly distributed, whereas the fake fingerprints show more damage, with stains and other blurred regions.

3.2 Feature Fusion Rule

The features extracted by the above three algorithms can be fused in diverse ways. To describe the difference between genuine and fake fingerprints, we develop a new feature fusion rule to fuse the extracted features. Because the extracted features have different dimensions, they cannot be combined directly; hence, the shorter feature vectors are padded with zeros before fusion. Five feature fusion operations are defined: addition, maximum, minimum, average, and concatenation, abbreviated as Add, Max, Min, Ave, and Con. Table 1 reports the specific operation for each fusion rule, where F denotes a feature and the subscripts SIFT, LBP, and HOG indicate the corresponding feature.
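The five fusion operations can be illustrated with a short sketch. Since Table 1 is not reproduced here, the element-wise definitions below are an assumption based on the operation names, with every vector zero-padded to the longest length before any rule is applied; `zero_pad`, `fuse`, and the toy vectors are hypothetical.

```python
def zero_pad(vector, length):
    """Append zeros so that the vector reaches the requested length."""
    return list(vector) + [0.0] * (length - len(vector))

def fuse(features, rule):
    """Fuse feature vectors with one of the rules Add, Max, Min, Ave, Con."""
    length = max(len(f) for f in features)
    padded = [zero_pad(f, length) for f in features]
    if rule == "Con":
        # Concatenate the zero-padded vectors end to end.
        return [v for f in padded for v in f]
    columns = list(zip(*padded))
    if rule == "Add":
        return [sum(c) for c in columns]
    if rule == "Max":
        return [max(c) for c in columns]
    if rule == "Min":
        return [min(c) for c in columns]
    if rule == "Ave":
        return [sum(c) / len(c) for c in columns]
    raise ValueError("unknown fusion rule: " + rule)

# Toy stand-ins for the SIFT, LBP and HOG feature vectors.
f_sift, f_lbp, f_hog = [1, 2, 3, 4], [4, 1], [2, 2, 2]
fused = {rule: fuse([f_sift, f_lbp, f_hog], rule)
         for rule in ("Add", "Max", "Min", "Ave", "Con")}
```

Note that zero-padding pulls the Min and Ave results toward zero in the positions where only the longest vector has real values, which is one reason the best rule may differ between sensors.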

3.3 Parameter Optimization

After fusion with our proposed rule, the resulting features are fed into an SVM (Support Vector Machine) classifier for training and testing. However, to obtain a better classifier, it is necessary to perform parameter optimization before model training.

SVM is a learning method based on the criterion of structural risk minimization and is divided into two categories, linear SVM and nonlinear SVM, depending on the kernel function used. First, a standardization operation is performed to limit the features to a certain range and to eliminate the adverse effects caused by outlier samples and differing feature scales. Then, to obtain a robust and effective classifier, the parameters C and gamma (that is, <C, g>) are optimized for the fused features constructed via our proposed fusion rule. Figure 3 shows the parameter optimization results for the Biometrika dataset, from which the optimal parameter pair <C, g> can be read for each plot. Finally, the fused features are used to train an SVM classifier with the optimal parameter pair <C, g>.
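The search for the pair <C, g> can be sketched as an exhaustive grid search. The base-2 logarithmic grid below is a common convention and an assumption here, since the search ranges are not stated; `score_fn` is a hypothetical callable that would return, for example, the cross-validated accuracy of an SVM trained with the given pair, and `toy_score` merely stands in for it so that the sketch runs on its own.

```python
import math

def grid_search(score_fn, c_exponents=range(-5, 16, 2), g_exponents=range(-15, 4, 2)):
    """Score every <C, g> pair on a base-2 logarithmic grid and keep the best."""
    best_c, best_g, best_score = None, None, -math.inf
    for c_exp in c_exponents:
        for g_exp in g_exponents:
            c, g = 2.0 ** c_exp, 2.0 ** g_exp
            score = score_fn(c, g)
            if score > best_score:
                best_c, best_g, best_score = c, g, score
    return best_c, best_g, best_score

def toy_score(c, g):
    # Hypothetical stand-in for cross-validated SVM accuracy:
    # a smooth surface that peaks at C = 2^3 and g = 2^-5.
    return -((math.log2(c) - 3) ** 2 + (math.log2(g) + 5) ** 2)

best_c, best_g, best_score = grid_search(toy_score)
```

In practice the scoring function dominates the cost, because each grid point requires training and validating a classifier on the fused features.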

4 Experiments

4.1 Database

The detection performance of our proposed scheme is verified on the benchmark fingerprint image set LivDet 2013 [1], which consists of a total of 16853 genuine and fake fingerprints collected with four different flat optical sensors: Biometrika, CrossMatch, Italdata, and Swipe. The images are split into two sets: a training set with a total of 8450 images and a testing set with a total of 8403 images. The training set is used to learn a classifier, and the performance of the classifier is evaluated on the testing set. Note that it is hard to observe the slight differences between real and fake fingerprints with the naked eye. The detailed distribution of the LivDet 2013 dataset is shown in Table 2. From Table 2, we can see that the ratio of genuine to fake fingerprints is approximately 1:1 and that the image sizes vary from 315 × 372 to 1500 × 208.

4.2 Experimental Process and Performance Evaluation

First, to reduce the influence of lighting and other factors, the fingerprint images are converted to grayscale. Next, features are extracted with the three feature extraction algorithms, SIFT, LBP, and HOG. Each of these algorithms is sensitive to only some kinds of feature change and not to others. Hence, the classification performance of fingerprint liveness detection with a single feature method is unsatisfactory, as the experimental results in this paper confirm. One possible solution is to fuse the features so that the strengths of each algorithm compensate for the weaknesses of the others, which can also enhance the final performance.

The feature fusion rules in this paper are of five types: addition, maximum, minimum, average, and concatenation. Because the three algorithms differ, the dimensions of the extracted features are inconsistent. To perform the five fusion operations, the shorter feature vectors are padded with zeros.

Since the distribution and range of each feature are different, the extracted features are mapped to the same interval by a normalization operation so that their components are consistent. Moreover, rescaling to an appropriate range makes training and testing faster. Before the final classifier is trained, parameter optimization is carried out to find the optimal parameter pair <C, g>, which is then used for model training and testing. Finally, the classification result is obtained with the trained classifier.
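Mapping features to a common interval can be sketched with min-max rescaling. The target interval [0, 1] and the choice to estimate the range on the training set only are assumptions, as the text does not name the normalization used; `fit_min_max` and `apply_min_max` are hypothetical helper names.

```python
def fit_min_max(train):
    """Per-dimension minimum and maximum, estimated on the training set."""
    dims = len(train[0])
    lows = [min(row[j] for row in train) for j in range(dims)]
    highs = [max(row[j] for row in train) for j in range(dims)]
    return lows, highs

def apply_min_max(rows, lows, highs, lo=0.0, hi=1.0):
    """Rescale each dimension to [lo, hi] using the training-set range."""
    scaled_rows = []
    for row in rows:
        scaled = []
        for value, low, high in zip(row, lows, highs):
            if high == low:
                # A constant dimension carries no information; map it to lo.
                scaled.append(lo)
            else:
                scaled.append(lo + (hi - lo) * (value - low) / (high - low))
        scaled_rows.append(scaled)
    return scaled_rows

# Toy fused features: two training samples and one test sample.
train = [[0.0, 50.0, 7.0], [10.0, 150.0, 7.0]]
test = [[5.0, 200.0, 7.0]]
lows, highs = fit_min_max(train)
train_scaled = apply_min_max(train, lows, highs)
test_scaled = apply_min_max(test, lows, highs)
```

Reusing the training-set range for the test samples keeps the two sets on the same scale, at the cost that test values may fall slightly outside the target interval.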

To verify the performance of the feature extraction algorithms in this paper, we adopt the average classification error (ACE) [15,16,17] as the metric. It is defined as follows:

$$\mathrm{ACE} = \frac{\mathrm{FAR} + \mathrm{FRR}}{2} \tag{3}$$

In Eq. (3), FAR denotes the probability that a fake fingerprint is mistaken for a genuine one, and FRR is the probability that a genuine fingerprint is mistaken for a fake one. The outcome of fingerprint liveness detection may be any value between 0 and 100. With a threshold of 50, an image whose value exceeds 50 is recognized as a genuine fingerprint; otherwise, it is recognized as a fake fingerprint. Finally, the performance of our proposed algorithm is obtained using Eq. (3).
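The evaluation can be illustrated with a short sketch that assumes ACE is the mean of FAR and FRR, both expressed as percentages, and applies the threshold of 50 described above. The function name and the toy scores are hypothetical and do not come from the experiments.

```python
def average_classification_error(scores, labels, threshold=50.0):
    """Return (FAR, FRR, ACE) in percent; labels are True for genuine samples."""
    fake_total = sum(1 for is_live in labels if not is_live)
    live_total = sum(1 for is_live in labels if is_live)
    if fake_total == 0 or live_total == 0:
        raise ValueError("both genuine and fake samples are required")
    # A fake sample scoring above the threshold is wrongly accepted.
    false_accepts = sum(1 for s, is_live in zip(scores, labels)
                        if not is_live and s > threshold)
    # A genuine sample scoring at or below the threshold is wrongly rejected.
    false_rejects = sum(1 for s, is_live in zip(scores, labels)
                        if is_live and s <= threshold)
    far = 100.0 * false_accepts / fake_total
    frr = 100.0 * false_rejects / live_total
    return far, frr, (far + frr) / 2.0

# Toy liveness scores in [0, 100]: four genuine samples, then four fake ones.
scores = [90, 70, 40, 55, 20, 10, 60, 30]
labels = [True, True, True, True, False, False, False, False]
far, frr, ace = average_classification_error(scores, labels)
```

Here one of the four fake samples is accepted and one of the four genuine samples is rejected, so FAR, FRR, and ACE are all 25.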

4.3 Results

First, we evaluate the performance of our method on the LivDet 2013 dataset under the different feature fusion rules: addition, maximum, minimum, average, and concatenation. The results are reported in Table 3. From Table 3, we can see that, in general, the detection performance after feature fusion is better than that of a single feature algorithm. For example, on the Biometrika dataset, the classification accuracies of SIFT, LBP, and HOG are 86.7%, 94.0%, and 93.8%, respectively, whereas after feature fusion the classification accuracy of LBP+HOG is 99.9%. That is, feature fusion improves the detection of genuine and fake fingerprints. On the CrossMatch dataset, the classification accuracies of SIFT, LBP, and HOG are 88.8%, 90.6%, and 90.5%, respectively, whereas after feature fusion the classification accuracy of SIFT+LBP is 93.6%, so the FLD performance is again improved. In addition, the time taken to test each dataset is also listed in Table 3 and is quite acceptable. Moreover, testing a single fingerprint is fast enough to be imperceptible to the user, showing that our method is also applicable in real life.

Table 4 lists the detailed comparison results when adopting different concatenation operations. In Table 4, the ACEs of our proposed method are the lowest. To compare the performance of different algorithms, the optimal result for each sensor is highlighted in bold in each row. The result for the Biometrika sensor in LivDet 2013 is close to 0, and the ACE of our method is 0.02 lower than the second-best result, that of [21]. The FLD method based on convolutional neural networks (CNN) achieves state-of-the-art performance on Crossmatch, but it has some drawbacks: the training time of the model is long, the interpretability of CNN-based features is weak, a large number of training samples is required, and it relies on high-performance computers. Texture feature algorithms, which can capture the subtle differences between genuine and fake fingerprints, avoid these shortcomings of CNNs. In addition, our results for Italdata and Crossmatch are 2.75 and 2.8 higher, respectively, than the results of [20, 21] in Table 4; however, our results for the Biometrika and Swipe sensors are 0.7 and 2.55 lower, respectively, than those of [20, 21]. Table 3 shows that, when the type of fingerprint scanner is known, a different feature fusion method should be chosen for each scanner to obtain better detection performance.

5 Conclusion

The SIFT feature descriptor is well known to be invariant to rotation, scale, and brightness; the HOG feature descriptor ignores the influence of light on the image and reduces the dimension of the image feature; and the LBP feature descriptor is insensitive to light and fast to compute. Combining the advantages of SIFT, LBP, and HOG features can make up for the shortcomings of each algorithm and improve the final detection performance. The fused features are fed into the SVM classifier for training and testing. Experiments show that the classification performance of the fused SIFT, HOG, and LBP features is better than that of other FLD methods, making our method well suited to fingerprint liveness detection for preventing spoofing attacks with artificial replicas.

References

1. Ghiani, L., et al.: LivDet 2013 fingerprint liveness detection competition 2013. In: International Conference on Biometrics (ICB), Madrid, Spain, pp. 1–6 (2013)

2. Yuan, C., Xia, Z., Sun, X., Sun, D., Lv, R.: Fingerprint liveness detection using multiscale difference co-occurrence matrix. Opt. Eng. 55(6), 1–10 (2016)

3. Zhang, Y., Tian, J., Chen, X., Yang, X., Shi, P.: Fake finger detection based on thin-plate spline distortion model. In: Lee, S.-W., Li, S.Z. (eds.) ICB 2007. LNCS, vol. 4642, pp. 742–749. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-74549-5_78

4. Lowe, D.G.: Object recognition from local scale-invariant features. In: International Conference on Computer Vision, Corfu, Greece, pp. 1150–1157 (1999)

5. Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002)

6. Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: Proceedings of IEEE Conference CVPR, San Diego, United States, pp. 886–893 (2005)

7. Maltoni, D., Maio, D., Jain, A., Prabhakar, S.: Synthetic fingerprint generation. In: Handbook of Fingerprint Recognition, vol. 33, no. 5, p. 1314 (2005)

8. Marasco, E., Sansone, C.: Combining perspiration- and morphology-based static features for fingerprint liveness detection. Pattern Recogn. Lett. 33(9), 1148–1156 (2012)

9. Abhyankar, A., Schuckers, S.: Fingerprint liveness detection using local ridge frequencies and multiresolution texture analysis techniques. In: International Conference on Image Processing, Atlanta, GA, USA (2007)

10. Yuan, C., Sun, X., Lv, R.: Fingerprint liveness detection based on multi-scale LPQ and PCA. China Commun. 13(7), 60–65 (2016)

11. Nogueira, R.F., Lotufo, R.D.A., Machado, R.C.: Evaluating software-based fingerprint liveness detection using convolutional networks and local binary patterns. In: Biometric Measurements and Systems for Security and Medical Applications, pp. 22–29 (2014)

12. Beamer, L.J., Carroll, S.F., Eisenberg, D.: The BPI/LBP family of proteins: a structural analysis of conserved regions. Protein Sci. 7(4), 906–914 (2010)

13. Tan, X., Triggs, B.: Fusing Gabor and LBP feature sets for Kernel-based face recognition. In: Zhou, S.K., Zhao, W., Tang, X., Gong, S. (eds.) AMFG 2007. LNCS, vol. 4778, pp. 235–249. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-75690-3_18

14. Saito, H., Tatebayashi, K.: Regulation of the osmoregulatory HOG MAPK cascade in yeast. J. Biochem. 136(3), 267–272 (2004)

15. Lee, H.-S., Maeng, H.-J., Bae, Y.-S.: Fake finger detection using the fractional Fourier transform. In: Fierrez, J., Ortega-Garcia, J., Esposito, A., Drygajlo, A., Faundez-Zanuy, M. (eds.) BioID 2009. LNCS, vol. 5707, pp. 318–324. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-04391-8_41

16. Nogueira, R.F., Lotufo, R.D.A., Machado, R.C.: Evaluating software-based fingerprint liveness detection using convolutional networks and local binary patterns. In: IEEE Workshop on Biometric Measurements & Systems for Security & Medical Applications, Rome, Italy. IEEE (2014)

17. Yuan, C., Sun, X., Wu, Q.M.J.: Difference co-occurrence matrix using BP neural network for fingerprint liveness detection. Soft. Comput. 23(13), 5157–5169 (2018). https://doi.org/10.1007/s00500-018-3182-1

18. Gottschlich, C., Marasco, E., Yang, A., Cuick, B.: Fingerprint liveness detection based on histograms of invariant gradients. In: IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, pp. 1–7 (2014)

19. Jiang, Y., Liu, X.: Uniform local binary pattern for fingerprint liveness detection in the Gaussian pyramid. J. Electr. Comput. Eng., 1–9 (2018)

20. Yuan, C., Xia, Z., Sun, X., Wu, Q.M.J.: Deep residual network with adaptive learning framework for fingerprint liveness detection. IEEE Trans. Cogn. Dev. Syst., 1–13 (2019)

21. Nogueira, R.F., Lotufo, R.D.A., Machado, R.C.: Fingerprint liveness detection using convolutional neural networks. IEEE Trans. Inf. Forensics Secur. 11(6), 1206–1213 (2016)

Acknowledgements

The authors are grateful to the anonymous reviewers for their valuable comments and suggested improvements. Furthermore, many thanks to Xinting Li and Weijin Cheng for helping us polish the article and for their suggestions. This research was funded by the Canada Research Chair Program and the NSERC Discovery Grant; by the Startup Foundation for Introducing Talent of NUIST (2020r015); by the Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD) fund; and by the Collaborative Innovation Center of Atmospheric Environment and Equipment Technology (CICAEET) fund, China.


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Yuan, C., Jonathan Wu, Q.M. (2020). Fingerprint Liveness Detection Based on Multi-modal Fine-Grained Feature Fusion. In: McDaniel, T., Berretti, S., Curcio, I., Basu, A. (eds) Smart Multimedia. ICSM 2019. Lecture Notes in Computer Science(), vol 12015. Springer, Cham. https://doi.org/10.1007/978-3-030-54407-2_35

DOI: https://doi.org/10.1007/978-3-030-54407-2_35

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-54406-5

Online ISBN: 978-3-030-54407-2

eBook Packages: Computer Science, Computer Science (R0)