Abstract

Whole blood resuscitation was the primary treatment for hemorrhagic shock for half a century beginning with World War I. The development of blood separation techniques and component therapy was intended to improve blood availability and patient outcomes. However, these advances, in conjunction with theories about the need to replace extracellular fluid losses, resulted in the overuse of crystalloid and red blood cell (RBC) resuscitation, to the detriment of hemorrhaging patients for decades. As studies revealed higher ratios of plasma and platelet transfusions to RBCs were necessary to prevent and correct coagulopathy in traumatic hemorrhage, there was a return to proven strategies of the past, employing permissive hypotension, prompt surgical control of bleeding, and blood product resuscitation with component ratios approximating the composition of whole blood. Today, most institutions have adopted a massive transfusion protocol that employs whole blood approximation with a 1:1:1 or 1:1:2 ratio of platelet to plasma to RBC component transfusion. However, whole blood resuscitation has made a resurgence in recent years, and it may return as the product of choice for severe traumatic hemorrhage. Future studies are needed to determine the efficacy and safety of this pendulum swing, and many questions are still unanswered. However, continued inquiry and innovation remain in the quest for improved survival in traumatic hemorrhage.

The Birth of Transfusion

William Harvey first described the circulation of blood in 1628 as “the beat of the heart and arteries, the transfer of blood from veins to arteries, and its distribution to the body.” Through experimentation with animal vein ligation, he noted “two kinds of death, failure from a lack [of blood flow] and suffocation from excess” [1]. This led to a few scattered reports of experiments involving blood transfusion in subsequent years, including those of Richard Lower, who performed experiments on dogs with exsanguinating hemorrhage at Oxford, England, in 1666 [2]. However, it would be another two centuries before the understanding of circulation would result in the first recorded human blood transfusion and with it the potential to save people from exsanguinating hemorrhage.

In 1817, during a time when bloodletting to cure disease was rampant, John Henry Leacock questioned, “what is there repugnant to the idea of trying to cure diseases arising from an opposite cause [as exsanguinating hemorrhage] by an opposite remedy, to wit by transfusion?” [3, 4]. A year later, the hope of survival in severe hemorrhage was introduced by the British obstetrician James Blundell. After experimenting with blood transfusion in dogs, he performed a series of human blood transfusions for women who bled during childbirth, with a 50% success rate [5, 6]. Over the next half century, reports of transfusion are scarce, with only a handful appearing in the literature. These include transfusions by Samuel Choppin at Charity Hospital in 1854, Daniel Brainard in Chicago in 1860, and US Civil War doctors who transfused at least four Union soldiers [3]. Blood transfusion as the treatment for exsanguinating hemorrhage remained rare due to limited donors, no ability to store blood, and a lack of an effective method of transfusion. As a result, saline intravenous fluids became the standard of care in severe hemorrhage [7].

There was a resurgence of interest in blood transfusions in the 1870s and 1880s with the development of multiple transfusion instruments; however, it was not until the discovery of blood groups by Karl Landsteiner and Reuben Ottenberg early in the twentieth century that reduced transfusion reactions and decreased morbidity and mortality became possible [3, 6, 8, 9]. In 1915, Richard Lewisohn discovered that sodium citrate with dextrose was a safe preservative, thus enabling blood storage [2, 6].

The Era of the World Wars

The outbreak of World War I (WWI) in 1914 led to a large cadre of severely injured combatants, and the shortcomings of crystalloid resuscitation for hemorrhagic shock became evident. It was demonstrated to result in only a transient response and was ultimately found to be detrimental. Instead, blood transfusions were discovered to be the “most effective means of dealing with cases of continued low blood pressure, whether due to hemorrhage or shock” [10]. The British Expeditionary Force, as well as some American Expeditionary Force hospitals, used whole blood transfusions for resuscitation, and it is estimated that several tens of thousands of transfusions were performed in 1918 alone, saving many lives [6, 8]. At the end of WWI, the Royal Army Medical Corps deemed that advances in blood transfusions were the “most important medical advance of the war” [11].

Following the Great War, the first civilian blood donor service was established in London by the British Red Cross in 1921, employing volunteers to promptly donate fresh blood when contacted [2]. Subsequently, Bernard Fantus at Cook County Hospital in Chicago established the first blood bank in 1937, with whole blood collected in bottles and stored in a refrigerator for up to 10 days [12]. The use of plasma began in 1936, when John Elliott devised a method to separate plasma from red blood cells (RBCs), hailing plasma as a blood substitute for hemorrhagic shock [13, 14]. In WWII, whole blood as well as plasma was used as the primary treatment of hemorrhagic shock [2]. In 1945, Henry Beecher published his recommendations for continued treatment of traumatic hemorrhage based on experience in WWII. He declared whole blood as the superior treatment and advocated administering plasma to “temporarily sustain blood pressure at a level compatible with life for a limited time” to enable “more time to get whole blood into the patient.” He also cited a resuscitation goal of warm skin and “good color,” which often occurred at a systolic blood pressure around 85 mmHg, noting increased blood loss from resuscitation to higher blood pressures [15].

Following WWII, the military-built national blood program in the United States collapsed. As the use of crystalloid resuscitation in traumatic shock increased, Beecher published an editorial to reiterate the lessons learned from the World Wars, stating, “for the sake of both civil and military medicine it will be well to review recent practices and their foundations. It will be tragic if the medical historians can look back on the WWII period and write of it as a time when so much was learned and so little remembered” [8, 16].

Component Therapy and an Abundance of Crystalloid

In 1951, Edwin Joseph Cohn invented a “blood cell separator” that employed centrifugal force to separate whole blood into layers of RBCs, plasma, and platelets. Promoting his work and coining the term component therapy, Cohn further popularized the use of blood fractionation and the transfusion of blood product components in lieu of whole blood [2]. Component therapy was hailed as economical, because it allowed maximal use of a limited resource, enabling multiple patients to be treated with each unit of whole blood. In addition, the transmission of hepatitis through plasma was becoming increasingly evident [14, 17]. Component transfusion would soon largely replace whole blood transfusion, in spite of a lack of clinical outcome data to support its use.

In the late 1950s and early 1960s, studies performed in elective surgery patients noted extracellular fluid loss and edema at sites of tissue injury in addition to acute blood loss [18, 19]. To further evaluate these fluid shifts in traumatic hemorrhagic shock, Tom Shires et al. performed a study in dogs, concluding that whole blood should remain the replacement for lost blood in hemorrhagic shock. However, the investigators also stated a “judicious amount” of adjunctive Ringer’s lactate (LR) could be of value to replace additional fluid losses [20]. This spawned a resurgence of crystalloid use in surgery and trauma patients in an attempt to replace these losses.

In response to the increased use of crystalloid in hemorrhagic shock, Francis Moore and Shires wrote an editorial asking for a return to “moderation” in the use of crystalloid, stating “no conceivable interpretation of these data would justify the use of such excessive balanced salt solution for early replacement in hemorrhage. Neither is the use of saline solutions meant to be a substitute for whole blood. Whole blood is still the primary therapy for blood loss shock” [21]. In spite of these preeminent surgeons’ continued emphasis that acute blood loss should be replaced with whole blood, as well as a continued lack of clinical data supporting the use of fractionated blood, in 1970, the American Medical Association published their recommendation that whole blood no longer be routinely used, stating that patients in hemorrhagic shock should be transfused with RBCs supplemented by balanced salt solutions or plasma expanders, and this became the standard of care [22]. Practice thus shifted to primarily RBC transfusions and an increased use of crystalloid for hemorrhagic shock. Large-volume crystalloid resuscitation became increasingly common during the Vietnam War and subsequently spread to civilian trauma centers, despite a paucity of studies validating its safety and efficacy in treating exsanguinating hemorrhage [23, 24].

In 1976, C. James Carrico and Shires outlined a therapeutic plan for traumatic hemorrhage, including an infusion of 1–2 L of LR until whole blood was available for transfusion [25]. Like Moore and Shires' editorial in 1967, their recommendations were also interpreted as an endorsement for crystalloid resuscitation in acute blood loss and further popularized large-volume crystalloid resuscitation in the treatment of hemorrhagic shock [23]. Defending the resuscitation strategy of RBCs and large volumes of crystalloid, Shackford et al. performed a study comparing RBCs and crystalloid resuscitation to whole blood and crystalloid, finding RBCs and LR could be used (with an average of 6.5 units of RBCs) without producing coagulopathy [26]. However, others remained hesitant to adopt this strategy, noting those with severe trauma were often thrombocytopenic and coagulopathic. They expressed a concern that RBC and crystalloid resuscitation would result in a further dilutional coagulopathy and continued to propose those in hemorrhagic shock should be given whole blood [27].

In the late 1970s and 1980s, studies touting the benefit of “supratherapeutic resuscitation” appeared in the surgical literature. These papers proposed the benefit of maintaining normal values of systolic blood pressure (SBP), urine output (UOP), base deficit, hemoglobin, and cardiac index in an attempt to optimize tissue perfusion [28,29,30,31,32,33]. This exacerbated the trend toward large-volume crystalloid administration and further away from the concepts expressed by Cannon and Beecher based on their experiences during the Great Wars [15, 34]. However, when supratherapeutic resuscitation was studied decades later in a prospective and randomized setting, it was shown to be of no benefit and resulted in increased isotonic fluid infusion, diminished intestinal perfusion, as well as a greater frequency of abdominal compartment syndrome, multiple organ failure, and mortality [35,36,37].

As studies were recognizing the adverse effects of large-volume crystalloid infusion, a resurgence of interest in traumatic coagulopathy occurred. In 1982, the “bloody vicious cycle,” now known as the “lethal triad,” was coined, citing a frequent downward spiral in severe trauma involving coagulopathy, acidosis, and hypothermia (Fig. 27.1) [38]. While a large percentage of patients who arrived in the emergency department after severe trauma were already coagulopathic, portending a poor outcome [39,40,41,42], large-volume crystalloid exacerbated all aspects of this deadly triad [38]. Volumes of crystalloid greater than 1.5 L result in increased mortality [43]. Hypothermia, exacerbated by the administration of unwarmed fluids, also reduces platelet function, coagulation factor activity, and fibrinogen synthesis [44,45,46]. Crystalloids high in chloride cause acidosis, and as a result, significantly more blood products are required to achieve hemodynamically normal parameters. Acidosis also affects platelet aggregation and significantly impairs coagulation factor activity [46, 47]. As surgeons recognized the lethality of the bloody vicious cycle, ways to prevent and treat it were investigated and adopted.

The bloody vicious cycle. (Adapted from Kashuk et al. [38])

Damage Control Resuscitation

The next watershed event in the treatment of severe trauma was the concept of damage control resuscitation (DCR). Returning to the principles of hypotensive and hemostatic resuscitation previously advocated and performed in the eras of WWI and WWII, the focus changed to rapidly controlling hemorrhage, limiting crystalloid use, and infusing blood products to a safe, but lower than normal, blood pressure until operative control of bleeding is established. The protective effect of permissive hypotension prior to hemorrhage control was subsequently confirmed in a randomized controlled trial, which revealed control of bleeding prior to resuscitation to a normal blood pressure resulted in improved outcomes in penetrating and blunt trauma patients [48, 49]. As institutions adopted these strategies, studies investigating DCR protocols revealed a decrease in crystalloid and RBC use, a lower incidence of the “lethal triad,” and improved 24-hour and 30-day survival [50,51,52].

The Change in Component Ratios: Reinitiation of Plasma and Platelet Resuscitation

In addition to a return to hypotensive resuscitation and prompt surgical control of bleeding, transfusion protocols shifted to include early administration of platelets and plasma in addition to RBCs. This was sparked by studies performed in computer-based simulations, as well as animal studies, that revealed higher ratios of plasma and platelets to RBCs were necessary to prevent and correct coagulopathy in traumatic hemorrhage [53,54,55,56,57]. Moreover, additional beneficial effects of plasma in hemorrhagic shock were being discovered, such as the promotion of vascular stability and restoration of the endothelial glycocalyx, which decrease extracellular fluid loss in shock [58,59,60].

In 2004, US combat hospitals shifted to an initial massive transfusion ratio of 1:1:1 plasma, platelets, and RBCs. This spawned a retrospective review at a US Army combat hospital in Iraq, which revealed a mortality reduction as the plasma to RBC ratio increased, noting a greater than 50% reduction of mortality in those who received a 1:1.4 ratio of plasma to red blood cells compared to those who received 1:8. This mortality benefit was primarily due to decreasing early death from hemorrhage [61]. Subsequent retrospective and cohort trials were performed in both military and civilian trauma, confirming improved outcomes in those who received an increased ratio of plasma (Table 27.1) [40, 62,63,64,65,66,67,68,69,70] and platelet transfusions (Table 27.2) [23, 40, 62, 64, 71,72,73]. In addition, these studies demonstrated the importance of prompt, effective resuscitation, revealing uncontrolled hemorrhagic deaths to occur within 6 hours of injury [52, 61, 64, 74,75,76].

While it became established that increased plasma and platelet transfusions were associated with improved survival, the exact ratio for this effect on mortality remained unknown. Varying ratios of blood products were used in these studies, and they did not agree on whether increased administration of plasma and platelets resulted in an increased incidence of complications, such as transfusion-related acute lung injury (TRALI), acute respiratory distress syndrome (ARDS), and multiorgan system failure (MODS). Also, a limitation of these retrospective studies was highlighted by Snyder et al., who focused on the time it took to obtain and administer varying blood components. Plasma takes time to be prepared and platelets must be constantly agitated during storage; therefore, they are only available to those who survive long enough to receive them from the blood bank. Noting the potential flaw of survival bias inherent in retrospective analysis, this work indicated the likelihood of receiving a high ratio of plasma to RBCs increased with survival time [68].

As a result, the Prospective, Observational, Multicenter, Major Trauma Transfusion (PROMMTT) study was designed as a prospective, multicenter trial to further evaluate resuscitation ratios being used at high-volume trauma centers and monitor outcomes. As seen in the previous retrospective studies, hemorrhagic deaths occurred quickly, with 60% of hemorrhagic deaths occurring within the first 3 hours of admission. In spite of clinicians attempting to transfuse a constant ratio, neither plasma nor platelet ratios were consistent across the first 24 hours, and timing of transfusions varied. Thirty minutes after admission, 67% had not received plasma and 99% had not received platelets. Notably, 3 hours after admission, past the time most patients would have already died from hemorrhage, 10% had not received plasma and 28% had not received platelets. However, the study did confirm a survival advantage at 24 hours for higher plasma and platelet ratios early in resuscitation [77].

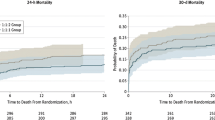

Subsequently, the Pragmatic, Randomized Optimal Platelet and Plasma Ratios (PROPPR) trial was designed to further evaluate the outcomes of frequently used resuscitation ratios. While it did not show an improvement in overall 24-hour survival for a balanced ratio of 1:1:1 (plasma to platelets to packed red blood cells) in comparison with 1:1:2, it revealed a decreased mortality at 3 hours (the timeframe by which most die of hemorrhage) as well as increased hemostasis and decreased death due to hemorrhage at 24 hours (9.2% of death due to hemorrhage when given 1:1:1 versus 14.6% death due to hemorrhage when given 1:1:2). Allaying some of the concern about increased transfusion complications with greater plasma and platelet administration, this study found no difference in the development of ARDS, MODS, venous thromboembolism, or sepsis between the ratios [78]. Further reducing apprehension, recent data indicate that the incidence of TRALI has decreased with current blood banking practices, and while it still occurs, the rate remains low despite an increase in the use of plasma and platelets in traumatic hemorrhagic shock (1 case of TRALI per 20,000 units of plasma) [79]. Providing further evidence of the value of a return to early platelet transfusion in hemorrhage, a subsequent subanalysis of the PROPPR trial revealed those who received platelets were more likely to achieve hemostasis (94.9% vs. 73.4%) and had significantly decreased 24-hour (5.8% vs. 16.9%) and 30-day mortality (9.5% vs. 20.2%) [80]. As a result of these studies, balanced ratios to approximate whole blood were largely adopted as standard of care, and by 2015, more than 80% of American College of Surgeons Trauma Quality Improvement Program (ACS TQIP) trauma centers targeted a balanced ratio in their massive transfusion protocols [81,82,83].
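The 24-hour hemorrhagic death rates quoted for PROPPR (9.2% with 1:1:1 versus 14.6% with 1:1:2) can be restated as effect sizes with simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, the inputs are the two percentages given in the text, and the derived figures are not results reported by the trial itself.

```python
def risk_summary(control_rate, treatment_rate):
    """Return (absolute risk reduction, relative risk reduction).

    Both inputs are event rates expressed as fractions (0.146 means 14.6%).
    """
    arr = control_rate - treatment_rate   # absolute difference in event rate
    rrr = arr / control_rate              # difference relative to the control rate
    return arr, rrr


# Death due to hemorrhage at 24 hours, as quoted in the text:
# 14.6% with a 1:1:2 ratio versus 9.2% with a 1:1:1 ratio.
arr, rrr = risk_summary(control_rate=0.146, treatment_rate=0.092)
print(f"absolute reduction: {arr * 100:.1f} percentage points")
print(f"relative reduction: {rrr * 100:.0f}%")
```

With these inputs the difference is about 5.4 percentage points, or roughly a 37% relative reduction in hemorrhagic death, which puts the size of the effect in context alongside the unchanged overall 24-hour survival.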

Massive Transfusion Protocol Initiation and Practice

Throughout the years, there have been a variety of predictors proposed to identify those in need of massive transfusion. There are risks associated with blood transfusion, including transfusion reactions [84], pulmonary and renal dysfunction [85], and multiorgan failure [86]. Additionally, studies have revealed transfusion does not benefit those who are hemodynamically stable, even in the critically ill and trauma population [87, 88]. In WWII, Beecher used vital signs and “cool skin” as indicators of a need for transfusion [15], and this method continued as studies repeatedly confirmed physical exam and radial pulse could reliably predict the need for intervention [89]. Since that time, there have been a variety of MTP prediction scores, including the Field Triage Score [90], the Shock Index [91], and the Assessment of Blood Consumption score (ABC score) [92]. While these and other proposed algorithms remain important tools [93,94,95,96], there remains no universally accepted method for the initiation of massive transfusion, and most MTP activations are based on the provider’s experience and an “overall clinical gestalt” to facilitate quick decisions and prompt care [97] (see Chap. 15).

In order to facilitate prompt initiation of blood product resuscitation and decrease preventable death from hemorrhagic shock, massive transfusion protocols and DCR techniques have been adapted to the prehospital arena. When employed, prehospital plasma and RBC transfusion in lieu of early crystalloid resuscitation has been shown to prevent coagulopathy and improve early outcomes through earlier initiation of lifesaving transfusion [98,99,100]. Sparking this important change in the care of severely injured trauma patients in the prehospital setting, Sperry and associates performed the Prehospital Air Medical Plasma (PAMPer) trial in 2018. While most major trauma centers had transitioned to DCR techniques and early balanced resuscitation, the prehospital care lagged behind, with continued crystalloid “standard-care” resuscitation (see Chap. 30). This landmark PAMPer trial examined the efficacy and safety of prehospital thawed plasma in those at risk for hemorrhagic shock (defined as at least one episode of SBP <90 mmHg and HR >108 bpm or SBP <70 mmHg), revealing a lower 30-day mortality (23.2% vs. 33%) and increased hemostasis when plasma was given prior to medical center arrival [99]. A secondary analysis of the study was later performed in 2019, revealing those given red blood cells and plasma to have an even larger mortality benefit and setting the stage for a continued move toward prehospital balanced resuscitation and whole blood transfusion [100].
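The vital-sign definition of "at risk for hemorrhagic shock" quoted above combines two conditions, and it can be easier to read as a predicate. The following is a minimal sketch that encodes only the definition as stated in the text; the function names are hypothetical, it does not represent the trial's full eligibility rules, and it is not a clinical decision tool.

```python
def qualifying_episode(sbp_mmhg, hr_bpm):
    """True if one set of vitals meets the definition quoted in the text:
    (SBP < 90 mmHg and HR > 108 bpm) or SBP < 70 mmHg."""
    return (sbp_mmhg < 90 and hr_bpm > 108) or sbp_mmhg < 70


def at_risk_for_hemorrhagic_shock(readings):
    """readings: iterable of (sbp_mmhg, hr_bpm) pairs recorded over time.

    The text requires "at least one episode", so a single qualifying
    reading is sufficient.
    """
    return any(qualifying_episode(sbp, hr) for sbp, hr in readings)


# Hypothetical series of prehospital readings for illustration.
readings = [(118, 96), (86, 122), (102, 110)]
print(at_risk_for_hemorrhagic_shock(readings))
```

Note that hypotension below 70 mmHg qualifies regardless of heart rate, while the milder threshold of 90 mmHg counts only when accompanied by tachycardia.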

A Return to Whole Blood

As the benefits of transfusing balanced component ratios became evident in recent years, there has been a push to return to whole blood transfusions for severe traumatic hemorrhage. As Beecher reported in 1945, “men who have lost whole blood need whole blood replacement” [15]. Since 2002, the US military has transfused thousands of units of fresh whole blood (FWB), and published data suggests use of FWB is superior to component therapy, even in a balanced ratio [23, 101,102,103]. Fresh whole blood resuscitation has shown improved survival compared to component therapy and is known to be a more concentrated, functional product [104]. When component products are recombined in a ratio of 1:1:1 of plasma, platelets, and packed red blood cells, the resulting fluid has a hematocrit of approximately 29%, a viable platelet concentration of 8.8 × 10⁸ per liter (around half the platelets of whole blood), and plasma coagulation activity of 65% (Fig. 27.2) [107]. “Thus, during massive transfusion with only blood components given in the optimal 1:1:1 ratio, the blood concentrations for each of the components lead to an anemic, thrombocytopenic and coagulopathic state near the transfusion triggers for each of the components, and administration of any one component in excess only results in dilution of the other two” [4].
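The dilution argument in the quotation can be illustrated with a small volume calculation. The sketch below is a simplified model under stated assumptions: the per-unit volumes and red cell content are placeholder values chosen for illustration, not figures from this chapter or from reference [107], platelet content is expressed in arbitrary units, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    volume_ml: float           # total volume of the bag
    red_cell_ml: float = 0.0   # packed red cell volume inside the bag
    platelets: float = 0.0     # platelet content, arbitrary units


# Placeholder values for illustration only (assumptions, not source data).
RBC = Unit(volume_ml=335, red_cell_ml=185)
PLASMA = Unit(volume_ml=275)
PLATELETS = Unit(volume_ml=50, platelets=1.0)


def mixture(n_plasma, n_platelets, n_rbc):
    """Pool the given numbers of units and return
    (hematocrit, platelet units per liter, plasma volume fraction)."""
    total_ml = (n_plasma * PLASMA.volume_ml
                + n_platelets * PLATELETS.volume_ml
                + n_rbc * RBC.volume_ml)
    hematocrit = n_rbc * RBC.red_cell_ml / total_ml
    platelets_per_l = n_platelets * PLATELETS.platelets / (total_ml / 1000)
    plasma_fraction = n_plasma * PLASMA.volume_ml / total_ml
    return hematocrit, platelets_per_l, plasma_fraction


balanced = mixture(1, 1, 1)    # plasma : platelets : RBC = 1:1:1
rbc_heavy = mixture(1, 1, 2)   # one extra RBC unit, i.e. 1:1:2

for label, (hct, plt, pf) in (("1:1:1", balanced), ("1:1:2", rbc_heavy)):
    print(f"{label}: hematocrit {hct:.2f}, platelets {plt:.2f}/L, plasma fraction {pf:.2f}")
```

Under these assumptions, adding a second RBC unit raises the hematocrit but lowers both the platelet concentration and the plasma fraction of the pooled product, which is the trade-off the quotation describes: an excess of any one component dilutes the other two.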

In addition, current techniques for the separation and storage of component blood products have been shown to be detrimental and do not prevent a decrease in functionality over time. Plasma coagulation factor activity is known to significantly decline during storage. Thawed plasma can only be stored in liquid form for a maximum of 5 days, because the activity of “labile factors” V and VII is significantly impaired. Liquid plasma only exhibits approximately 35% of factor V activity and 10% of factor VII activity at a storage time of 26 days [108, 109]. Storage temperatures and included preservatives exacerbate hypothermia and acidosis. The longer the blood is banked (especially after 21 days), the worse the resultant acidosis, with a typical 21-day-old unit of RBCs having a pH of 6.3 [110]. In addition, a recent study has indicated current component separation and blood banking practices result in the release of cellular debris in plasma in significant enough quantity to elicit a consequential pro-inflammatory response [111].

Whole blood resuscitation, as used in the Great World Wars, was historically the primary treatment for hemorrhagic shock. The development of blood separation techniques and component therapy was intended to improve blood availability and patient outcomes. However, these advancements, in conjunction with a misguided theory of a need to replace extracellular fluid losses, resulted in the overuse of crystalloid and RBC resuscitation, to the detriment of many patients for decades. In recent years, there has been a return to proven strategies of the past, employing permissive hypotension, prompt surgical control of bleeding, and blood product resuscitation with component ratios approximating the composition of whole blood. Recent conflicts in Iraq and Afghanistan reignited an interest in whole blood transfusion, and in 2014, the US Tactical Combat Casualty Care Committee recommended whole blood should return as the treatment for hemorrhagic shock [102, 112]. Military medicine is subsequently transitioning away from blood product components with a return to warm fresh whole blood (WFWB) and cold stored low titer group O whole blood (LTOWB) [113,114,115].

In the United States, there remains a fractionated, for-profit blood banking system that largely produces component blood products, and for 40 years whole blood was generally unavailable for the civilian population. Most institutions have a massive transfusion protocol in place that employs a 1:1:1 or 1:1:2 ratio of component transfusion. However, the availability of LTOWB is increasing, and it is being used at more than 20 institutions and prehospital settings across the country [102, 116, 117]. In 2011, the Trauma Hemostasis and Oxygenation Research (THOR) Network was established as an international community of experts in traumatic hemorrhage, promoting continued investigation and improvement in the treatment of hemorrhagic shock. In recent years, there have been multiple advances in the field including improved storage methods such as cold platelets and the reinstitution of liquid and dried plasma, as well as protocol development for the implementation of whole blood transfusion in both prehospital and hospital environments [118]. Does the future lie in the past [119, 120]? Future studies are needed to determine the efficacy and safety of this pendulum swing, but while many questions remain unanswered, continued inquiry, innovation, and a hope for improved survival in traumatic hemorrhage remain.

Abbreviations

- ABC score: Assessment of Blood Consumption score
- ACS TQIP: American College of Surgeons Trauma Quality Improvement Program
- ARDS: Acute respiratory distress syndrome
- DCR: Damage control resuscitation
- FWB: Fresh whole blood
- LR: Ringer’s lactate
- LTOWB: Cold stored low titer group O whole blood
- MODS: Multiorgan system failure
- PAMPer: Prehospital Air Medical Plasma trial
- PROMMTT: The Prospective, Observational, Multicenter, Major Trauma Transfusion Study
- PROPPR: Pragmatic, Randomized Optimal Platelet and Plasma Ratios Trial
- RBCs: Red blood cells
- SBP: Systolic blood pressure
- THOR: Trauma Hemostasis and Oxygenation Research Network
- TRALI: Transfusion-related acute lung injury
- UOP: Urine output
- WFWB: Warm fresh whole blood
- WWI: World War I

References

Harvey W. Exercitatio anatomica de motu cordis et sanguinis in animalibus. Frankfurt am Main. 1628. pt. 1. Facsimile of the original Latin ed. (1628)-pt. 2. The English translation by Chauncey Depew. Publisher Springfield, Ill.: Thomas; 1928.

Giangrande PL. The history of blood transfusion. Br J Haematol. 2000;100:758–67.

Schmidt PJ. Transfusion in America in the eighteenth and nineteenth centuries. N Engl J Med. 1968;279(24):1319–20.

Schmidt PJ, Leacock AG. Forgotten transfusion history: John Leacock of Barbados. BMJ. 2002;325(7378):1485–7.

Blundell J. Some account of a case of obstinate vomiting, in which an attempt was made to prolong life by the injection of blood into the veins. Med Chir Trans. 1819;10(Pt 2):296–311.

Kendrick DB. Blood program in World War II. Office of the Surgeon General, Department of the Army; 1964.

Jennings CE. The intra-venous injection of fluid for severe hæmorrhage. Lancet. 1883;121(3102):228–9.

Hess JR, Thomas MJ. Blood use in war and disaster: lessons from the past century. Transfusion. 2003;43(11):1622–33.

Robertson OH. Transfusion with preserved red blood cells. Br Med J. 1918;1(2999):691.

Cannon WB. Traumatic shock. New York: D. Appleton; 1923.

Stansbury LG, Dutton RP, Stein DM, Bochicchio GV, Scalea TM, Hess JR. Controversy in trauma resuscitation: do ratios of plasma to red blood cells matter? Transfus Med Rev. 2009;23(4):255–65.

Fantus B. The therapy of the Cook County Hospital. J Am Med Assoc. 1937;109:128–31.

Elliott J. A preliminary report of a new method of blood transfusion. South Med Surg. 1936;97:7–10.

Schmidt P. The plasma wars: a history. Transfusion. 2012;52:2S–4S.

Beecher HK. Preparation of battle casualties for surgery. Ann Surg. 1945;121(6):769–92.

Beecher HK. Early care of the seriously wounded man. J Am Med Assoc. 1951;145(4):193–200.

Watson J, Pati S, Schreiber M. Plasma transfusion: history, current realities, and novel improvements. Shock. 2016;46(5):468–79.

Moore FD. Should blood be whole or in parts? N Engl J Med. 1969;280(6):327–8.

Shires T, Williams J, Brown F. Acute change in extracellular fluids associated with major surgical procedures. Ann Surg. 1961;154:803–10.

Shires T, Coln D, Carrico J, Lightfoot S. Fluid therapy in hemorrhagic shock. Arch Surg. 1964;88:688–93.

Moore FD, Shires G. Moderation. Ann Surg. 1967;166:300–1.

AMA Committee on Transfusion and Transplantation. Red blood cell transfusions. JAMA. 1970;212(1):147.

Holcomb JB. Fluid resuscitation in modern combat casualty care: lessons learned from Somalia. J Trauma. 2003;54(5 Suppl):S46–51.

Holcomb JB, Zarzabal LA, Michalek JE, Kozar RA, Spinella PC, Perkins JG, Matijevic N, Dong JF, Pati S, Wade CE, Trauma Outcomes Group, Holcomb JB, Wade CE, Cotton BA, Kozar RA, Brasel KJ, Vercruysse GA, MacLeod JB, Dutton RP, Hess JR, Duchesne JC, McSwain NE, et al. Increased platelet: RBC ratios are associated with improved survival after massive transfusion. J Trauma. 2011;71(2 Suppl 3):S318–28.

Carrico CJ, Canizaro PC, Shires GT. Fluid resuscitation following injury: rationale for the use of balanced salt solutions. Crit Care Med. 1976;4(2):46–54.

Shackford SR, Virgilio RW, Peters RM. Whole blood versus packed-cell transfusions: a physiologic comparison. Ann Surg. 1981;193(3):337–40.

Counts RB, Haisch C, Simon TL, Maxwell NG, Heimbach DM, Carrico CJ. Hemostasis in massively transfused trauma patients. Ann Surg. 1979;190(1):91.

Bishop MH, Wo CCJ, Appel PL, et al. Relationship between supranormal circulatory values, time delays, and outcome in severely traumatized patients. Crit Care Med. 1993;21:56–60.

Bishop MH, Shoemaker WC, Appel PL, Meade P, Ordog GJ, Wasserberger J, Wo CJ, Rimle DA, Kram HB, Umali R. Prospective, randomized trial of survivor values of cardiac index, oxygen delivery, and oxygen consumption as resuscitation endpoints in severe trauma. J Trauma. 1995;38(5):780–7.

Bland R, Shoemaker WC, Shabot MM. Physiologic monitoring goals for the critically ill patient. Surg Gynecol Obstet. 1978;147(6):833–41.

Boyd O, Grounds RM, Bennett ED. A randomized clinical trial of the effect of deliberate perioperative increase of oxygen delivery on mortality in high-risk surgical patients. JAMA. 1993;270(22):2699–707.

Fleming A, Bishop M, Shoemaker W, Appel P, Sufficool W, Kuvhenguwha A, Kennedy F, Wo CJ. Prospective trial of supranormal values as goals of resuscitation in severe trauma. Arch Surg. 1992;127:1175–9; discussion 1179–81.

Shoemaker WC, Appel PL, Kram HB, Waxman K, Lee TS. Prospective trial of supranormal values of survivors as therapeutic goals in high-risk surgical patients. Chest J. 1988;94(6):1176–86.

Cannon WB, Fraser J, Cowell EM. The preventive treatment of wound shock. JAMA. 1918;70:618–21.

Gattinoni L, Brazzi L, Pelosi P, Latini R, Tognoni G, Pesenti A, Fumagalli R. A trial of goal-oriented hemodynamic therapy in critically ill patients. N Engl J Med. 1995;333(16):1025–32.

Velmahos GC, Demetriades D, Shoemaker WC, Chan LS, Tatevossian R, Wo CC, Vassiliu P, Cornwell EE III, Murray JA, Roth B, Belzberg H. Endpoints of resuscitation of critically injured patients: normal or supranormal?: a prospective randomized trial. Ann Surg. 2000;232(3):409–18.

Balogh Z, McKinley BA, Cocanour CS, Kozar RA, Valdivia A, Sailors RM, Moore FA. Supranormal trauma resuscitation causes more cases of abdominal compartment syndrome. Arch Surg. 2003;138:637–42.

Kashuk JL, Moore EE, Millikan JS, Moore JB. Major abdominal vascular trauma--a unified approach. J Trauma. 1982;22(8):672–9.

Brohi K, Singh J, Heron M, Coats T. Acute traumatic coagulopathy. J Trauma. 2003;54:1127–30.

Gunter OL Jr, Au BK, Isbell JM, Mowery NT, Young PP, Cotton BA. Optimizing outcomes in damage control resuscitation: identifying blood product ratios associated with improved survival. J Trauma Inj Infect Crit Care. 2008;65(3):527–34.

MacLeod JB, Lynn M, McKenney MG, Cohn SM, Murtha M. Early coagulopathy predicts mortality in trauma. J Trauma. 2003;55(1):39–44.

Pidcoke HF, Aden JK, Mora AG, Borgman MA, Spinella PC, Dubick MA, Blackbourne LH, Cap AP. Ten-year analysis of transfusion in Operation Iraqi Freedom and Operation Enduring Freedom: increased plasma and platelet use correlates with improved survival. J Trauma Acute Care Surg. 2012;73(6):S445–52.

Ley EJ, Clond MA, Srour MK, Barnajian M, Mirocha J, Margulies DR, Salim A. Emergency department crystalloid resuscitation of 1.5 L or more is associated with increased mortality in elderly and nonelderly trauma patients. J Trauma Acute Care Surg. 2011;70(2):398–400.

Martini WZ, Holcomb JB. Acidosis and coagulopathy: the differential effects on fibrinogen synthesis and breakdown in pigs. Ann Surg. 2007;246(5):831–5.

Riha GM, Schreiber MA. Update and new developments in the management of the exsanguinating patient. J Intensive Care Med. 2013;28:46.

Waters JH, Gottlieb A, Schoenwald P, Popovich MJ, Sprung M, Nelson DR. Normal saline versus lactated Ringer’s solution for intraoperative fluid management in patients undergoing abdominal aortic aneurysm repair: an outcome study. Anesth Analg. 2001;93:817–22.

Bickell WH, Wall MJ Jr, Pepe PE, Martin RR, Ginger VF, Allen MK, Mattox KL. Immediate versus delayed fluid resuscitation for hypotensive patients with penetrating torso injuries. N Engl J Med. 1994;331(17):1105–9.

Dutton RP, Mackenzie CF, Scalea TM. Hypotensive resuscitation during active hemorrhage: impact on in-hospital mortality. J Trauma. 2002;52:1141–6.

Cotton BA, Reddy N, Hatch QM, LeFebvre E, Wade CE, Kozar RA, Gill BS, Albarado R, McNutt MK, Holcomb JB. Damage control resuscitation is associated with a reduction in resuscitation volumes and improvement in survival in 390 damage control laparotomy patients. Ann Surg. 2011;254(4):598–605.

Duke MD, Guidry C, Guice J, Stuke L, Marr AB, Hunt JP, Meade P, McSwain NE, Duchesne JC. Restrictive fluid resuscitation in combination with damage control resuscitation: time for adaptation. J Trauma Acute Care Surg. 2012;73(3):674–8.

Oyeniyi BT, Fox EE, Scerbo M, Tomasek JS, Wade CE, Holcomb JB. Trends in 1029 trauma deaths at a level 1 trauma center: impact of a bleeding control bundle of care. Injury. 2017;48(1):5–12.

Cosgriff NM, Ernest E, Sauaia A, Kenny-Moynihan M, Burch JM, Galloway B. Predicting life-threatening coagulopathy in the massively transfused patient: hypothermia and acidosis revisited. J Trauma Inj Infect Crit Care. 1997;42(5):857–62.

Cinat ME, Wallace WC, Nastanski F, et al. Improved survival following massive transfusion in patients who have undergone trauma. Arch Surg. 1999;134:964–8; discussion 968–70.

Hirshberg A, Dugas M, Banez EI, Scott BG, Wall MJ Jr, Mattox KL. Minimizing dilutional coagulopathy in exsanguinating hemorrhage: a computer simulation. J Trauma. 2003;54:454–63.

Ledgerwood AM, Lucas CE. A review of studies on the effects of hemorrhagic shock and resuscitation on the coagulation profile. J Trauma. 2003;54(5 Suppl):S68–74.

Ho AM, Dion PW, Cheng CA, Karmakar MK, Cheng G, Peng Z, Ng YW. A mathematical model for fresh frozen plasma transfusion strategies during major trauma resuscitation with ongoing hemorrhage. Can J Surg. 2005;48:470–8.

Kozar R, Peng Z, Zhang R, Holcomb J, Pati S, Park P, et al. Plasma restoration of endothelial glycocalyx in a rodent model of hemorrhagic shock. Anesth Analg. 2011;112(6):1289–95.

Peng Z, Pati S, Potter D, Brown R, Holcomb J, Grill R, et al. Fresh frozen plasma lessens pulmonary endothelial inflammation and hyperpermeability after hemorrhagic shock and is associated with loss of syndecan 1. Shock. 2013;40(3):195–202.

Kozar R, Pati S. Syndecan-1 restitution by plasma after hemorrhagic shock. J Trauma Acute Care Surg. 2015;78:S83–6.

Borgman MA, Spinella PC, Perkins JG, Grathwohl KW, Repine T, Beekley AC, Sebesta J, Jenkins D, Wade CE, Holcomb JB. The ratio of blood products transfused affects mortality in patients receiving massive transfusions at a combat support hospital. J Trauma. 2007;63(4):805–13.

Duchesne JC, Hunt JP, Wahl G, et al. Review of current blood transfusions strategies in a mature level I trauma center: were we wrong for the last 60 years? J Trauma. 2008;65:272–6; discussion 276–8.

Perkins JG, Cap AP, Spinella PC, et al. An evaluation of the impact of apheresis platelets used in the setting of massively transfused trauma patients. J Trauma. 2009;66:S77–85.

Holcomb JB, Wade CE, Michalek JE, Chisholm GB, Zarzabal LA, Schreiber MA, Gonzalez EA, Pomper GJ, Perkins JG, Spinella PC, Williams KL, Park MS. Increased plasma and platelet to red blood cell ratios improves outcome in 466 massively transfused civilian trauma patients. Ann Surg. 2008;248(3):447–58.

Kashuk JL, Moore EE, Johnson JL, Haenel J, Wilson M, Moore JB, Cothren CC, Biffl WL, Banerjee A, Sauaia A. Postinjury life threatening coagulopathy: is 1:1 fresh frozen plasma:packed red blood cells the answer? J Trauma Acute Care Surg. 2008;65(2):261–71.

Maegele M, Lefering R, Paffrath T, Tjardes T, Simanski C, Bouillon B, Working Group on Polytrauma of the German Society of Trauma Surgery (DGU). Red-blood-cell to plasma ratios transfused during massive transfusion are associated with mortality in severe multiple injury: a retrospective analysis from the Trauma Registry of the Deutsche Gesellschaft für Unfallchirurgie. Vox Sang. 2008;95(2):112–9.

Scalea TM, Bochicchio KM, Lumpkins K, et al. Early aggressive use of fresh frozen plasma does not improve outcome in critically injured trauma patients. Ann Surg. 2008;248:578–84.

Snyder CW, Weinberg JA, McGwin G Jr, et al. The relationship of blood product ratio to mortality: survival benefit or survival bias. J Trauma Inj Infect Crit Care. 2009;66(2):358–62.

Sperry JL, Ochoa JB, Gunn SR, et al. An FFP:PRBC transfusion ratio ≥1:1.5 is associated with a lower risk of mortality after massive transfusion. J Trauma. 2008;65:986–93.

Spinella PC, Perkins JG, Grathwohl KW, Beekley AC, Niles SE, McLaughlin DF, Wade CE, Holcomb JB. Effect of plasma and red blood cell transfusions on survival in patients with combat related traumatic injuries. J Trauma. 2008;64(2 Suppl):S69–77; discussion S77–8.

Zink KA, Sambasivan CN, Holcomb JB, Chisholm G, Schreiber MA. A high ratio of plasma and platelets to packed red blood cells in the first 6 hours of massive transfusion improves outcomes in a large multicenter study. Am J Surg. 2009;197(5):565–70; discussion 570.

Shaz BH, Dente CJ, Nicholas J, et al. Increased number of coagulation products in relationship to red blood cell products transfused improves mortality in trauma patients. Transfusion. 2010;50:493–500.

Inaba K, Lustenberger T, Rhee P, et al. The impact of platelet transfusion in massively transfused trauma patients. J Am Coll Surg. 2010;211:573–9.

Sauaia A, Moore FA, Moore EE, et al. Epidemiology of trauma deaths: a reassessment. J Trauma. 1995;38:185–93.

Heckbert SR, Vedder NB, Hoffman W, et al. Outcome after hemorrhagic shock in trauma patients. J Trauma. 1998;45:545–9.

Kauvar DS, Lefering R, Wade CE. Impact of hemorrhage on trauma outcome: an overview of epidemiology, clinical presentations, and therapeutic considerations. J Trauma. 2006;60:S3–S11.

Holcomb JB, del Junco DJ, Fox EE, Wade CE, Cohen MJ, Schreiber MA, Alarcon LH, Bai Y, Brasel KJ, Bulger EM, Cotton BA, Matijevic N, Muskat P, Myers JG, Phelan HA, White CE, Zhang J, Rahbar MH, PROMMTT Study Group. The prospective, observational, multicenter, major trauma transfusion (PROMMTT) study: comparative effectiveness of a time-varying treatment with competing risks. JAMA Surg. 2013;148(2):127–36.

Holcomb JB, Tilley BC, Baraniuk S, Fox EE, Wade CE, Podbielski JM, del Junco DJ, Brasel KJ, Bulger EM, Callcut RA, Cohen MJ, Cotton BA, Fabian TC, Inaba K, Kerby JD, Muskat P, O’Keeffe T, Rizoli S, Robinson BR, Scalea TM, Schreiber MA, Stein DM, et al. Transfusion of plasma, platelets, and red blood cells in a 1:1:1 vs a 1:1:2 ratio and mortality in patients with severe trauma: the PROPPR randomized clinical trial. JAMA. 2015;313(5):471–82.

Meyer DE, Reynolds JW, Hobbs R, Bai Y, Hartwell B, Pommerening MJ, Fox EE, Wade CE, Holcomb JB, Cotton BA. The incidence of transfusion-related acute lung injury at a large, urban tertiary medical center: a decade’s experience. Anesth Analg. 2018;127(2):444–9.

Cardenas JC, Zhang X, Fox EE, Cotton BA, Hess JR, Schreiber MA, Wade CE, Holcomb JB, PROPPR Study Group. Platelet transfusions improve hemostasis and survival in a substudy of the prospective, randomized PROPPR trial. Blood Adv. 2018;2(14):1696–704.

Camazine MN, Hemmila MR, Leonard JC, Jacobs RA, Horst JA, Kozar RA, Bochicchio GV, Nathens AB, Cryer HM, Spinella PC. Massive transfusion policies at trauma centers participating in the American College of Surgeons Trauma Quality Improvement Program. J Trauma Acute Care Surg. 2015;78(6):S48–53.

Cannon JW, Khan MA, Raja AS, Cohen MJ, Como JJ, Cotton BA, Dubose JJ, Fox EE, Inaba K, Rodriguez CJ, Holcomb JB, Duchesne JC. Damage control resuscitation in patients with severe traumatic hemorrhage: a practice management guideline from the Eastern Association for the Surgery of Trauma. J Trauma Acute Care Surg. 2017;82(3):605–17.

National Clinical Guideline Centre (UK). Major trauma: assessment and initial management. London: National Institute for Health and Care Excellence (UK); 2016.

Murdock AD, Berséus O, Hervig T, Strandenes G, Lunde TH. Whole blood: the future of traumatic hemorrhagic shock resuscitation. Shock. 2014;41:62–9.

Ferraris VA, Davenport DL, Saha SP, Austin PC, Zwischenberger JB. Surgical outcomes and transfusion of minimal amounts of blood in the operating room. Arch Surg. 2012;147(1):49–55.

Inaba K, Branco BC, Rhee P, Blackbourne LH, Holcomb JB, Teixeira PG, Shulman I, Nelson J, Demetriades D. Impact of plasma transfusion in trauma patients who do not require massive transfusion. J Am Coll Surg. 2010;210(6):957–65.

Hébert PC, Wells G, Blajchman MA, Marshall J, Martin C, Pagliarello G, Tweeddale M, Schweitzer I, Yetisir E. A multicenter, randomized, controlled clinical trial of transfusion requirements in critical care. N Engl J Med. 1999;340(6):409–17.

McIntyre L, Hebert PC, Wells G, Fergusson D, Marshall J, Yetisir E, Blajchman M, Canadian Critical Care Trials Group. Is a restrictive transfusion strategy safe for resuscitated and critically ill trauma patients? J Trauma Inj Infect Crit Care. 2004;57(3):563–8.

Holcomb JB. Manual vital signs reliably predict need for lifesaving interventions in trauma patients. J Trauma. 2005;59:821–9.

Eastridge BJ, Butler F, Wade CE, Holcomb JB, Salinas J, Champion HR, Blackbourne LH. Field triage score (FTS) in battlefield casualties: validation of a novel triage technique in a combat environment. Am J Surg. 2010;200(6):724–7; discussion 727.

DeMuro JP, Simmons S, Jax J, Gianelli SM. Application of the Shock Index to the prediction of need for hemostasis intervention. Am J Emerg Med. 2013;31(8):1260–3.

Nunez TC, Voskresensky IV, Dossett LA, Shinall R, Dutton WD, Cotton BA. Early prediction of massive transfusion in trauma: simple as ABC (assessment of blood consumption). J Trauma. 2009;66:346.

Schreiber MA, Perkins J, Kiraly L, Underwood S, Wade C, Holcomb JB. Early predictors of massive transfusion in combat casualties. J Am Coll Surg. 2007;205(4):541–5.

Cancio LC, Wade CE, West SA, Holcomb JB. Prediction of mortality and of the need for massive transfusion in casualties arriving at combat support hospitals in Iraq. J Trauma. 2008;64(2 Suppl):S51–5; discussion S55–6.

Cancio LC, Batchinsky AI, Salinas J, Kuusela T, Convertino VA, Wade CE, Holcomb JB. Heart-rate complexity for prediction of prehospital lifesaving interventions in trauma patients. J Trauma. 2008;65(4):813–9.

Liu NT, Holcomb JB, Wade CE, Batchinsky AI, Cancio LC, Darrah MI, Salinas J. Development and validation of a machine learning algorithm and hybrid system to predict the need for life-saving interventions in trauma patients. Med Biol Eng Comput. 2014;52(2):193–203.

Pommerening MJ, Goodman MD, Holcomb JB, Wade CE, Fox EE, Del Junco DJ, Brasel KJ, Bulger EM, Cohen MJ, Alarcon LH, Schreiber MA, Myers JG, Phelan HA, Muskat P, Rahbar M, Cotton BA, PROMMTT Study Group. Clinical gestalt and the prediction of massive transfusion after trauma. Injury. 2015;46(5):807–13.

Holcomb JB, Donathan DP, Cotton BA, del Junco DJ, Brown G, Wenckstern TV, Podbielski JM, Camp EA, Hobbs R, Bai Y, Brito M. Prehospital transfusion of plasma and red blood cells in trauma patients. Prehosp Emerg Care. 2015;19(1):1–9.

Sperry JL, Guyette FX, Brown JB, Yazer MH, Triulzi DJ, Early-Young BJ, Adams PW, Daley BJ, Miller RS, Harbrecht BG, Claridge JA. Prehospital plasma during air medical transport in trauma patients at risk for hemorrhagic shock. N Engl J Med. 2018;379(4):315–26.

Guyette FX, Sperry JL, Peitzman AB, Billiar TR, Daley BJ, Miller RS, Harbrecht BG, Claridge JA, Putnam T, Duane TM, Phelan HA. Prehospital blood product and crystalloid resuscitation in the severely injured patient: a secondary analysis of the prehospital air medical plasma trial. Ann Surg. 2019. https://doi.org/10.1097/sla.0000000000003324.

Holcomb JB, Spinella PC. Optimal use of blood in trauma patients. Biologicals. 2010;38(1):72–7.

Spinella PC, Cap AP. Whole blood: back to the future. Curr Opin Hematol. 2016;23:536–42.

Spinella PC, Pidcoke HF, Strandenes G, et al. Whole blood for hemostatic resuscitation of major bleeding. Transfusion. 2016;56(Suppl 2):S190–202.

Spinella PC, Perkins JG, Grathwohl KW, Beekley AC, Holcomb JB. Warm fresh whole blood is independently associated with improved survival for patients with combat-related traumatic injuries. J Trauma. 2009;66(Suppl. 4):S69–76.

Repine TB, Perkins JG, Kauvar DS, Blackbourne L. The use of fresh whole blood in massive transfusion. J Trauma Acute Care Surg. 2006;60(6):S59–69.

D’Angelo MR, Dutton RP. Management of trauma-induced coagulopathy: trends and practices. AANA J. 2010;78(1):35–40.

Hess JR, Holcomb JB. Resuscitating PROPPRly. Transfusion. 2015;55(6):1362–4.

Hess JR, Holcomb JB. Transfusion practice in military trauma. Transfus Med. 2008;18(3):143–50.

Downes KA, Wilson E, Yomtovian R, Sarode R. Serial measurement of clotting factors in thawed plasma stored for 5 days. Transfusion. 2001;41:570.

Duchesne JC, McSwain NE Jr, Cotton BA, et al. Damage control resuscitation: the new face of damage control. J Trauma. 2010;69:976–90.

Koch CG, Li L, Sessler DI, et al. Duration of red-cell storage and complications after cardiac surgery. N Engl J Med. 2008;358:1229–39.

Tan YB, Rieske RR, Audia JP, Pastukh VM, Capley GC, Gillespie MN, Smith AA, Tatum DM, Duchesne JC, Kutcher ME, Kerby JD, Simmons JD. Plasma transfusion products and contamination with cellular and associated pro-inflammatory debris. J Am Coll Surg. 2019; https://doi.org/10.1016/j.jamcollsurg.2019.04.017.

Butler FK, Holcomb JB, Schreiber MA, Kotwal RS, Jenkins DA, Champion HR, Bowling F, Cap AP, Dubose JJ, Dorlac WC, Dorlac GR, McSwain NE, Timby JW, Blackbourne LH, Stockinger ZT, Strandenes G, Weiskopf RB, Gross KR, Bailey JA. Fluid resuscitation for hemorrhagic shock in tactical combat casualty care: TCCC guidelines change 14-01--2 June 2014. J Spec Oper Med. 2014;14(3):13–38.

Daniel Y, Sailliol A, Pouget T, Peyrefitte S, Ausset S, Martinaud C. Whole blood transfusion closest to the point-of-injury during French remote military operations. J Trauma Acute Care Surg. 2017;82(6):1138–46.

Fisher A, Carius B, Corley J, Dodge P, Miles E, Taylor A. Conducting fresh whole blood transfusion training. J Trauma Acute Care Surg. 2019. https://doi.org/10.1097/TA.0000000000002323.

Vanderspurt CK, Spinella PC, Cap AP, Hill R, Matthews SA, Corley JB, Gurney JM. The use of whole blood in US military operations in Iraq, Syria, and Afghanistan since the introduction of low-titer Type O whole blood: feasibility, acceptability, challenges. Transfusion. 2019;59(3):965–70.

Stubbs JR, Zielinski MD, Jenkins D. The state of the science of whole blood: lessons learned at Mayo Clinic. Transfusion. 2016;56:S173–81.

Young PP, Borge PD Jr. Making whole blood for trauma available (again): the American Red Cross experience. Transfusion. 2019;59(S2):1439–45.

Zielinski MD, Stubbs JR, Berns KS, Glassberg E, Murdock AD, Shinar E, Sunde GA, Williams S, Yazer MH, Zietlow S, Jenkins DH. Prehospital blood transfusion programs: capabilities and lessons learned. J Trauma Acute Care Surg. 2017;82(6S):S70–8.

Holcomb JB, Jenkins DH. Get ready: whole blood is back and it’s good for patients. Transfusion. 2018;58(8):1821–3.

Zhu CS, Pokorny DM, Eastridge BJ, Nicholson SE, Epley E, Forcum J, Long T, Miramontes D, Schaefer R, Shiels M, Stewart RM, Stringfellow M, Summers R, Winckler CJ, Jenkins DH. Give the trauma patient what they bleed, when and where they need it: establishing a comprehensive regional system of resuscitation based on patient need utilizing cold-stored, low-titer O+ whole blood. Transfusion. 2019;59(S2):1429–38.

Conflicts of Interest

There are no conflicts of interest.

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Stephenson, K.J., Kalkwarf, K.J., Holcomb, J.B. (2021). Ratio-Driven Massive Transfusion Protocols. In: Moore, H.B., Neal, M.D., Moore, E.E. (eds) Trauma Induced Coagulopathy. Springer, Cham. https://doi.org/10.1007/978-3-030-53606-0_27

DOI: https://doi.org/10.1007/978-3-030-53606-0_27

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-53605-3

Online ISBN: 978-3-030-53606-0

eBook Packages: Medicine (R0)