Abstract

The paper proposes an approach to solving the multiclass pattern recognition problem in a geometric formulation based on convex hulls and convex separable sets (CS-sets). The advantage of the proposed method is the uniqueness of the resulting solution and the uniqueness of assigning each point of the source space to one of the classes. The approach also makes it possible to uniquely filter outliers from the source data. Computational experiments using the developed approach were carried out on academic examples and test data from public libraries.


Introduction

The paper deals with the multiclass pattern recognition problem in a geometric formulation. Different approaches to solving this problem can be found in [1, 2, 5, 8, 12, 15, 18, 19, 21]. Mathematical models for solving applied pattern recognition problems are considered in [1, 2, 3, 4, 12, 13]. This paper proposes a method for solving the problem that is based on the idea of separability of the convex hulls of the sets of the training sample. Convex hulls and other efficient linear approaches to similar problems were also proposed in [2, 6, 7, 17]. To implement the method, two auxiliary problems are considered: the problem of selecting the extreme points of a finite set of points in the space \({{\mathbb {R}}^{n}}\), and the problem of determining the distance from a given point to the convex hull of a finite set of points in the space \({{\mathbb {R}}^{n}}\), using the tools of known software packages for solving mathematical programming problems. The efficiency and power of the proposed approach are demonstrated on the classical Fisher’s Iris problem [16, 22] as well as on several applied economic problems.

Let a set of n-dimensional vectors be given in the space \({{\mathbb {R}}^{n}}\)

and let there also be given a separation of this set into m classes

It is required to construct a decision rule for assigning an arbitrary vector \({{{\text {a}}}_{i}}\) to one of the m classes.

There are a number of methods [14, 23] for solving this multiclass pattern recognition problem in a geometric formulation: linear classifiers, committee constructions, multiclass logistic regression, support vector machines, nearest neighbors, and potential functions. These methods belong to the metric classification methods and are based on the ability to measure the distance between classified objects, or the distance between objects and the hypersurfaces that separate classes in the feature space. This paper develops an approach related to the convex hulls of the subsets \({{A}_{i}},\ i\in \left[ 1,m \right] \), of the family \({\text {A}}\).

1 Multiclass Pattern Recognition Algorithm Based on Convex Hulls

The main idea of the proposed approach is as follows.

Suppose that, for the given family of points \(\mathrm {A}\), which is separated into m classes \(A_i\) where \(i\in \left[ 1 , m \right] \), each convex hull \(conv\ A_{i}\) contains only points from the class \({{A}_i}\). Then it is natural to assume that any point \(x\in conv\ A_i\) represents a vector belonging to the class \(A_i\). Below, we extend this idea to the general case.

Definition 1

The set \({{A}_{i}}\) from (2), where \(i\in \left[ 1, m\right] \), is named a convex separable set (CS-set, CSS), if the following holds

If the family \(\mathrm {A}=\left\{ A_1,\ldots ,A_m\right\} \) contains a CSS \(A_{i_0}\), then it is natural to assume that each point \(x\in conv{A_{i_0}}\) belongs to the corresponding set \(A_{i_0}\). In such a case the set \(A_{i_0}\) can be excluded from the further process of constructing the decision rule. In other words, the condition \(x\in conv A_{i_0}\) must be checked first, and the process of assigning the point x to one of the classes of the training sample continues if and only if \(x\not \in conv A_{i_0}\).

An interesting case of families (1) is when one can specify a sequence \(({{i}_{1}}, {{i}_{2}},\ldots , {{i}_{m}})\), which is a permutation of the sequence \((1,2,\ldots , m)\), such that

The problem of constructing a decision rule for the family (1) with properties (4) will be called CSS-solvable.
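The sequential check described above can be illustrated with a minimal sketch. Since formulas (3) and (4) are not reproduced in this text, the sketch assumes one reading of them: a class is treated as a CSS when its convex hull contains no training points of the other remaining classes, and a CSS-solvable family is one whose classes can be removed one at a time in this way. The function names `in_hull`, `css_order` and `classify_css` are hypothetical, and the hull membership test uses `scipy.optimize.linprog` as one possible solver.

```python
import numpy as np
from scipy.optimize import linprog


def in_hull(x, pts):
    """True if x is a convex combination of the rows of pts (LP feasibility)."""
    m = len(pts)
    a_eq = np.vstack([pts.T, np.ones((1, m))])
    res = linprog(np.zeros(m), A_eq=a_eq, b_eq=np.append(x, 1.0),
                  bounds=(0, None), method="highs")
    return res.status == 0


def css_order(classes):
    """Return class labels in an order where each class is a CSS (assumed
    reading of the definition) with respect to the classes after it,
    or None if no such order exists."""
    remaining = {k: np.asarray(v, dtype=float) for k, v in classes.items()}
    order = []
    while remaining:
        found = None
        for label, pts in remaining.items():
            foreign = [q for other, arr in remaining.items()
                       if other != label for q in arr]
            if not any(in_hull(q, pts) for q in foreign):
                found = label
                break
        if found is None:
            return None
        order.append(found)
        del remaining[found]
    return order


def classify_css(x, classes, order):
    """Assign x to the first class in `order` whose hull contains x.
    Returning None for points outside every hull is an assumption."""
    x = np.asarray(x, dtype=float)
    for label in order:
        if in_hull(x, np.asarray(classes[label], dtype=float)):
            return label
    return None
```

For example, with an "outer" class at the corners of a large square and an "inner" class at the corners of a small square inside it, the inner class is checked first, because the hull of the outer class contains the inner points while the converse is false.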

We denote by class(x) the class number from [1, m] to which the point x belongs. Thus, if \(x\in {{A}_{i}}\), \(i\in [1, m]\), then \(class(x)=i\). For a point \(x\not \in \text {A}\), the problem of pattern recognition in the geometric formulation is to construct a decision rule for determining class(x) for \(x\in {{\mathbb {R}}^{n}}{\setminus } \text {A}\).

Let’s consider the case of \(m=2\), i.e. \({\text {A}}={{A}_{1}}\,\dot{\cup }\,{{A}_{2}}\). Let’s construct convex hulls \(conv\ {{A}_{1}}\) and \(conv\ {{A}_{2}}\). It is natural to assume that if \(x\in conv\ {{A}_{1}}{\setminus } conv\ {{A}_{2}}\), then \(class(x)=1\).

Similarly, if \(x\in conv\ {{A}_{2}}{\setminus } conv\ {{A}_{1}}\), then we assume that \(class(x)=2\). If \(x\notin conv\ {{A}_{1}}\cup conv\ {{A}_{2}}\), it is natural to assume that the point x belongs to such a class whose convex hull is located closer to the point x.

Let’s denote by \( \rho \left( x, conv\ {{A}^{'}} \right) \) the distance from the point x to the convex hull of a finite set \({{A}^{'}}\subset {{\mathbb {R}}^{n}}\). Then we have

Finally, let’s consider the case of \(x\in conv\ {{A}_{1}}\cap conv\ {{A}_{2}}\).

Let’s consider the following two sets.

Logically there are possible cases:

Following the assumption mentioned above, i.e. \({x\in conv\ {{A}_{1}}\cap conv\ {{A}_{2}}}\), we have:

Case 4 leads us to the following situation.

We have a family of two subsets \({\text {A}}^{'}=\left\{ A_1^{'},A_2^{'} \right\} \), which are located inside the set \(conv\ A_1\cap conv\ A_2\). It is required to construct a decision rule for assigning the vector \(x\in conv\ A_1\cap conv\ A_2\) to one of the two classes \(A_1^{'}, A_2^{'}\) and, respectively, \(A_1,A_2\).

This problem has the same form as the original one, and therefore the proposed algorithm can be re-applied. Repeating the process, we arrive at a situation in which, for the next sets of the form (5), \(conv\ A_{1}^{''}\cap conv\ A_{2}^{''}=\varnothing \) holds, and thus the process is completed.
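The two-class procedure can be sketched in code as follows. The case list and formula (5) are not reproduced in this text, so the sketch relies on assumptions: the reduced sets are taken to be \(A_1\cap conv\ A_1\cap conv\ A_2\) and \(A_2\cap conv\ A_1\cap conv\ A_2\), as in the chain used in the proof of Proposition 1; when only one reduced set is non-empty, the point is assigned to that class; and ties or an empty intersection sample return 0 (undecided). The names `in_hull`, `dist` and `classify` are hypothetical, and SciPy's `linprog` and `minimize` stand in for whatever solver is actually used.

```python
import numpy as np
from scipy.optimize import linprog, minimize


def in_hull(x, pts):
    """True if x is a convex combination of the rows of pts."""
    m = len(pts)
    a_eq = np.vstack([pts.T, np.ones((1, m))])
    res = linprog(np.zeros(m), A_eq=a_eq, b_eq=np.append(x, 1.0),
                  bounds=(0, None), method="highs")
    return res.status == 0


def dist(x, pts):
    """Euclidean distance from x to conv(pts), as a small QP over the weights."""
    m = len(pts)
    res = minimize(lambda a: float(np.sum((pts.T @ a - x) ** 2)),
                   np.full(m, 1.0 / m), method="SLSQP",
                   bounds=[(0.0, 1.0)] * m,
                   constraints=[{"type": "eq", "fun": lambda a: np.sum(a) - 1.0}])
    return float(np.sqrt(max(res.fun, 0.0)))


def classify(x, a1, a2):
    """Return 1 or 2, or 0 when the rules leave x undecided (assumption)."""
    x = np.asarray(x, dtype=float)
    a1, a2 = np.asarray(a1, dtype=float), np.asarray(a2, dtype=float)
    while True:
        in1, in2 = in_hull(x, a1), in_hull(x, a2)
        if in1 and not in2:
            return 1
        if in2 and not in1:
            return 2
        if not in1 and not in2:
            # x is outside both hulls: choose the nearer hull
            d1, d2 = dist(x, a1), dist(x, a2)
            if abs(d1 - d2) < 1e-9:
                return 0
            return 1 if d1 < d2 else 2
        # x lies in both hulls: keep only training points in the intersection
        new1 = a1[np.array([in_hull(p, a2) for p in a1], dtype=bool)]
        new2 = a2[np.array([in_hull(p, a1) for p in a2], dtype=bool)]
        if len(new1) == 0 and len(new2) == 0:
            return 0
        if len(new2) == 0:
            return 1
        if len(new1) == 0:
            return 2
        if len(new1) + len(new2) == len(a1) + len(a2):
            return 0  # numerical safeguard: the sample did not shrink
        a1, a2 = new1, new2
```

The loop re-applies the same rule to the reduced sample, so each pass works with strictly fewer training points when the two classes are disjoint.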

Proposition 1

If for the sets \({{A}_{1}}, {{A}_{2}}\) we have \({{A}_{1}}\cap {{A}_{2}}=\varnothing \), then the algorithm described above converges, i.e. for any point x from \({{A}_{1}}\cup {{A}_{2}}\) it leads to case 1, 2 or 3 (*).

Proof

Let’s consider the following chain of pairs of sets

\(A={{A}_{1}}\cup {{A}_{2}},\ \ {{C}_{1}}=conv\ {{A}_{1}},{{C}_{2}}=conv\ {{A}_{2}}\):

\(A_{1}^{(1)}={{A}_{1}}\cap {{C}_{1}}\cap {{C}_{2}}\)

\(A_{2}^{(1)}={{A}_{2}}\cap {{C}_{1}}\cap {{C}_{2}}\)

\({{A}^{(1)}}=A_{1}^{(1)}\cup A_{2}^{(1)},\ \ C_{1}^{(1)}=conv\ A_{1}^{(1)},C_{2}^{(1)}=conv\text { }A_{2}^{(1)}\)

\(A_{1}^{(2)}=A_{1}^{(1)}\cap C_{1}^{(1)}\cap C_{2}^{(1)}\)

\(A_{2}^{(2)}=A_{2}^{(1)}\cap C_{1}^{(1)}\cap C_{2}^{(1)}\)

\(\ldots \)

\({{A}^{(k-1)}}=A_{1}^{(k-1)}\cup A_{2}^{(k-1)},\ \ C_{1}^{(k-1)}=conv\ A_{1}^{(k-1)},C_{2}^{(k-1)}=conv\ A_{2}^{(k-1)}\)

\(A_{1}^{(k)}=A_{1}^{(k-1)}\cap C_{1}^{(k-1)}\cap C_{2}^{(k-1)}\)

\(A_{2}^{(k)}=A_{2}^{(k-1)}\cap C_{1}^{(k-1)}\cap C_{2}^{(k-1)}\)

\({{A}^{(k)}}=A_{1}^{(k)}\cup A_{2}^{(k)},\ \ C_{1}^{(k)}=conv\ A_{1}^{(k)},C_{2}^{(k)}=conv\ A_{2}^{(k)}\)

\(\ldots \)

Let’s show that at some step one of the conditions \(A_{1}^{(k)}=\varnothing \) or \(A_{2}^{(k)}=\varnothing \) will hold, which means that the proposed algorithm converges.

Let’s show that at any step we have \(\left| A_{1}^{(k+1)}\cup A_{2}^{(k+1)} \right| <\left| A_{1}^{(k)}\cup A_{2}^{(k)} \right| \). Since \({{A}_{1}}\cap {{A}_{2}}=\varnothing \), we have \(A_{1}^{(k)}\cap A_{2}^{(k)}=\varnothing \).

On the other hand,

Let’s show that there is a point \(x\in A_{1}^{(k)}\cup A_{2}^{(k)}\) such that \(x\in conv\ A_{1}^{(k)}\cap conv\ A_{2}^{(k)}\). Let’s assume the opposite:

Therefore, we have

On the other hand, by the definition of a convex hull, we get

From (8) and (9) it follows that

From (10) it follows that

Hence, \(A_{1}^{(k)}\cap A_{2}^{(k)}\ne \varnothing \), which contradicts the assumption above. Thus, the proposition is proved.

Let’s consider the case \(m>2\).

Just as in the case of \(m=2\), the solution of the multiclass pattern recognition problem is reduced to solving a series of similar problems of successively decreasing dimension. To characterize such a problem, we need to specify the following.

Let’s denote by \(\left\langle x^{'}, X^{'}, J^{'}, \mathrm {A}^{'}, C\left( \mathrm {A}^{'}\right) \right\rangle \) the problem of determining whether a point \(x^{'}\in X^{'}\) belongs to one of the classes \({{J}^{'}}\subset J\), given the training sample \(\mathrm {A}^{'}\) with the set of convex hulls \(C\left( \mathrm {A}^{'}\right) \).

Further classification of the point \({{x}^{'}}\in {{X}^{'}}\) will be determined by the value

and splits into 3 cases: \(M^{'}=0,\ {{M}^{'}}=1\) and \({{M}^{'}}>1\). Let the rules for obtaining the problem \(\left\langle {{x}^{''}},{{X}^{''}},{{J}^{''}},\mathrm {A}^{''}, C\left( \mathrm {A}^{''}\right) , M^{''}\right\rangle \) in the case \(\left| M^{'}\right| >1\) be as follows:

Thus, the decision rule for a multiclass pattern recognition problem based on convex hulls can be represented as a hierarchical tree of basic problems of the form (12). The root of this tree is the problem of the form \(Z=\left\langle x,\text { }{{\mathbb {R}}^{n}},\ J=[1, m], \mathrm {A}, C\left( \mathrm {A}\right) \right\rangle \).

Let’s denote by \(Z\left( {{J}^{'}} \right) \) a problem of the form (13), which is obtained from the problem Z for the set \(J^{'}\subseteq J\) such that

Let \(\left\{ J_{1}^{'},\ldots ,J_{{{k}_{1}}}^{'} \right\} \) be the family of all subsets of \({{J}^{'}}\subseteq J\) satisfying (14). Then for the problem Z of the first level, we get \(k_1\) problems of the form \(Z\left( J_i^ {'}\right) \), \(i\in [1, k_1]\), of the second level. For each second-level problem of the form \(Z\left( J_{i}^{'} \right) \), a series of next-level problems of the form \(Z\left( J_{i}^{'} \right) \left( J_{i}^{''} \right) \) will be obtained, and so on. A vertex in such a hierarchical tree becomes terminal if the subsample \(\mathrm {A}\) involved in its formulation is contained in no more than one of the convex hulls involved in its formulation. Thus, to construct a decision rule, it is necessary to construct a hierarchical graph of problems of the form (12) by constructing convex hulls for the subsamples located in at least two convex hulls of the generating problem. To implement such an algorithm for constructing a decision rule, it is necessary to have effective algorithms for solving the following problems.

(1) Let a finite set \(\mathrm {A}\subseteq {{\mathbb {R}}^{n}}\) be given. It is required to find all extreme points of its convex hull \(ext\left( conv\ A \right) \).

To determine whether a point of a finite set \(\mathrm {A}\) is an extreme one, one can solve the following problem LP1 from [20] (see also [24]).

Let \(a_j\) denote an element of \(\mathrm {A}\).

$$ \min x_j:\sum _{i\in I}x_ia_i=a_j, \sum _{i\in I}x_i=1, x_i\geqslant 0\;\forall \;i\in I, $$

where I denotes the set \(\left\{ 1, 2, \dots , n\right\} \).

It should also be mentioned that [20] provides an efficient algorithm for detecting all extreme points of a finite set \(\mathrm {A}\) by solving a sequence of problems of the form LP1.
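As an illustration, LP1 can be solved with an off-the-shelf LP solver. The sketch below uses `scipy.optimize.linprog` (an assumption, since the text does not name a package) and takes I to index the points of \(\mathrm {A}\). It relies on the fact that, for pairwise distinct points, the optimal value of LP1 is 1 when \(a_j\) is an extreme point and 0 otherwise. The function names are hypothetical, and the naive loop over all points is not the efficient algorithm of [20].

```python
import numpy as np
from scipy.optimize import linprog


def is_extreme_point(points, j):
    """Solve LP1 for points[j]: minimise x_j subject to
    sum_i x_i a_i = a_j, sum_i x_i = 1, x >= 0.
    Assumes the rows of `points` are pairwise distinct."""
    points = np.asarray(points, dtype=float)
    count = points.shape[0]
    cost = np.zeros(count)
    cost[j] = 1.0
    # equality constraints: one row per coordinate plus the convexity row
    a_eq = np.vstack([points.T, np.ones((1, count))])
    b_eq = np.append(points[j], 1.0)
    res = linprog(cost, A_eq=a_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    # the optimum is 1 for an extreme point and 0 otherwise
    return res.status == 0 and res.fun > 0.5


def extreme_points(points):
    """Indices of all extreme points, found by one LP1 per point."""
    points = np.asarray(points, dtype=float)
    return [j for j in range(len(points)) if is_extreme_point(points, j)]
```

For the four corners of the unit square together with its centre, `extreme_points` returns the indices of the four corners only.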

(2) Let a point x and a set \(ext\left( M \right) \) of extreme points of the polyhedron M be given. It is required to determine whether the point x belongs to the polyhedron M, i.e. whether \(x\in conv \ ext\left( M \right) \).

The problem LP2 can be used to solve this problem.

Let \(x\in \mathbb {R}^n\) and \(\mathrm {A}=\left\{ a_1, a_2, \dots , a_m\right\} \subseteq \mathbb {R}^n\), and suppose it is required to determine whether the point x belongs to \(conv\mathrm {A}\).

Let’s consider the following system.

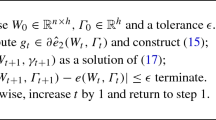

$$\begin{aligned} {\left\{ \begin{array}{ll} \sum \limits _{i=1}^m \alpha _i a_i=x,\\ \sum \limits _{i=1}^m \alpha _i=1,\\ \alpha _i\geqslant 0, i\in [1, m]. \end{array}\right. } \end{aligned}\tag{15}$$

It is obvious that \(x\in conv\mathrm {A}\) if and only if the system above is feasible. On the other hand, such a system can be transformed into the linear program LP2 of the form:

$$\begin{aligned} {\left\{ \begin{array}{ll} v+w\;\longrightarrow \;\min ,\\ \sum \limits _{i=1}^m \alpha _i a_i=x,\\ \sum \limits _{i=1}^m \alpha _i+v-w=1,\\ \alpha _i\geqslant 0, i\in [1,m],\\ v\geqslant 0, w\geqslant 0. \end{array}\right. } \end{aligned}\tag{16}$$

where v and w are correcting variables for the case when the system (15) is infeasible. So, the point x belongs to \(conv\mathrm {A}\) if and only if \(g=0\), where g denotes the optimal value of the objective \(v+w\) in (16).
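A minimal sketch of the membership test LP2 is given below, with `scipy.optimize.linprog` as an assumed solver and the hypothetical function name `in_convex_hull`. The variables are ordered as \(\alpha _1,\dots ,\alpha _m, v, w\). Because the constraint \(\sum \alpha _i a_i=x\) is kept exact, the program itself may be infeasible for some x; the sketch treats that outcome as "x is not in the hull".

```python
import numpy as np
from scipy.optimize import linprog


def in_convex_hull(x, points, tol=1e-7):
    """Test x in conv(points) via LP2: minimise v + w subject to
    sum_i alpha_i a_i = x, sum_i alpha_i + v - w = 1, all variables >= 0."""
    points = np.asarray(points, dtype=float)
    x = np.asarray(x, dtype=float)
    m, n = points.shape
    # objective: zero cost on alpha, unit cost on v and w
    cost = np.concatenate([np.zeros(m), [1.0, 1.0]])
    # first n rows: sum_i alpha_i a_i = x (v and w do not appear)
    top = np.hstack([points.T, np.zeros((n, 2))])
    # last row: sum_i alpha_i + v - w = 1
    bottom = np.concatenate([np.ones(m), [1.0, -1.0]])
    a_eq = np.vstack([top, bottom])
    b_eq = np.append(x, 1.0)
    res = linprog(cost, A_eq=a_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    # an infeasible LP means x is not even a nonnegative combination of the points
    return res.status == 0 and res.fun <= tol
```

The tolerance `tol` is an illustrative choice that absorbs solver round-off when comparing the optimal value with zero.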

(3) Let a point b and a set \(ext\left( M \right) \) of extreme points of the polyhedron M be given.

It is required to find the shortest distance from the point b to M, i.e. \(\rho \left( b, M \right) = \min \left\{ \rho \left( b, y \right) :y\in M \right\} \).

The following quadratic programming problem can be used to solve this problem:

$$\begin{aligned} {\left\{ \begin{array}{ll} \sum \limits _{i=1}^{n}{{{\left( {{x}_{i}}-{{b}_{i}} \right) }^{2}}}\ \rightarrow \ \min , \\ \sum \limits _{j=1}^{m}{{{\alpha }_{j}}}\cdot {{a}_{j}}=x, \\ \sum \limits _{j=1}^{m}{{{\alpha }_{j}}}=1, \\ {{\alpha }_{j}}\geqslant 0,\ j\in [1,m]. \\ \end{array}\right. } \end{aligned}\tag{17}$$

Here \(a_1,\dots ,a_m\) are the points of the finite set A, and the optimal x is the point of \(conv\ A\) nearest to b. Then the required shortest distance from the point b to the convex hull of a finite set A in the space \(\mathbb {R}^n\) is equal to the following:

$$ \rho \left( b,conv\ A \right) =\sqrt{\sum \limits _{i=1}^{n}{{{\left( {{x}_{i}}-{{b}_{i}} \right) }^{2}}}}. $$
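Problem (17) can be sketched numerically by eliminating x and minimising over the weights \(\alpha \) on the simplex. The example below uses the general-purpose SLSQP method of `scipy.optimize.minimize` rather than a dedicated QP solver, which is an assumption made for brevity; the function name `distance_to_hull` is hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def distance_to_hull(b, points):
    """Return (distance, nearest point) from b to conv(points),
    minimising ||sum_j alpha_j a_j - b||^2 over the unit simplex."""
    points = np.asarray(points, dtype=float)
    b = np.asarray(b, dtype=float)
    m = points.shape[0]

    def objective(alpha):
        diff = points.T @ alpha - b
        return float(diff @ diff)

    def gradient(alpha):
        return 2.0 * points @ (points.T @ alpha - b)

    start = np.full(m, 1.0 / m)  # barycentre of the points as a feasible start
    res = minimize(objective, start, jac=gradient, method="SLSQP",
                   bounds=[(0.0, 1.0)] * m,
                   constraints=[{"type": "eq",
                                 "fun": lambda alpha: np.sum(alpha) - 1.0}])
    nearest = points.T @ res.x
    return float(np.linalg.norm(nearest - b)), nearest
```

The returned distance is accurate only up to the solver tolerance, so a point of the hull may yield a very small positive value instead of exactly zero.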

2 Application of the CSS Machine Learning Algorithm

Let’s consider several applied problems for which the proposed CSS machine learning algorithm can be used. Such problems include bank scoring [9], analysis of financial markets [10, 11], medical diagnostics, non-destructive testing, and the search for reference clients for marketing activities in social networks.

Problem 1. The Classical Fisher’s Iris Problem [16]

There is a training sample of 150 objects in the space \({{\mathbb {R}}^{n}}\), which is divided into 3 classes: class \({{A}_{1}}\)—Setosa, class \({{A}_{2}}\)—Versicolor and class \(A_3\)—Virginica, and each class contains 50 objects. It turns out that this well-known classical problem is CSS-solvable:

In this case, the class(x) decision rule is as follows:

Problem 2

The proposed approach was used to develop a strategy for trading shares of the Bank of the Russian Federation (see Notes 1 and 2). Five stock market indicators were selected as input parameters. Table 1 below provides a description of these parameters.

The following object classes were required to be recognized:

1. Class Yes: the set of positions in the trading strategy that were closed with a profit, where the profit was greater than the maximum loss over the period of holding the position.

2. Class No: the set of positions in the trading strategy that were closed with a loss, or where the profit was less than the maximum loss over the period of holding the position.

Corresponding classes were formed based on real data obtained in the period from 26.02.10 until 03.10.19.

The cardinalities of the obtained sets, as well as the numbers of extreme points and the membership in convex hulls, are shown in Table 2.

From Table 2 one can conclude that a position should be opened if and only if the current state lies in the convex hull of the class Yes of Level 1 or 2. In all other cases the risk is very high.

Problem 3

The convex hull method was used for solving bank scoring problems. Let’s describe the most representative examples of favorable and unfavorable cases we have met.

Favorable Case. There are 6 input parameters, and all of them are related to the financial well-being of the borrower. Data from the first stage of calculations are shown in Table 3.

The procedure then needs to be repeated for the next 9858 non-default and 242 default items. We will not describe all stages, but it should be mentioned that an acceptable solution was obtained in 7 iterations.

Unfavorable Case. There are 5 input parameters (loan amount, loan term, borrower age, loan amount-to-age ratio, loan amount-to-loan term ratio). Data from the first stage of calculations are shown in Table 4.

In this case, the convex hulls of the default and non-default sets intersect significantly, which is due to the specifics of the problem (the share of default loans is 2.1%), as well as to the small number of explanatory features. In the future we plan to develop a method for solving similar problems (when one set lies entirely inside the other and is strongly blurred in it). In particular, we plan to consider the problem of determining the balance between the percentage of points included in the convex hull and the size of this convex hull.

Naturally, practical situations are much more complicated, but the sequence of actions described above makes it possible to obtain an efficient decision rule.

Conclusion

The paper proposes an approach to solving multiclass pattern recognition problems in a geometric formulation based on convex hulls and convex separable sets (CS-sets). Such problems often arise in the field of financial mathematics, for example, in problems of bank scoring and market analysis, as well as in various areas of diagnostics and forecasting. The main idea of the proposed approach is as follows. If for the given family of points \(\mathrm {A}\), which is separated into m classes \(A_i\) where \(i\in \left[ 1 , m \right] \), each convex hull \(conv\ A_{i}\) contains only points from class \({{A}_i}\), then we suppose that any point \(x\in conv\ A_i\) represents a vector belonging to the class \(A_i\). The paper introduces the key definition of a convex separable set (CSS) for the family \(\mathrm {A}=\left\{ A_1,\ldots ,A_m\right\} \) of subsets of \({{\mathbb {R}}^{n}}\). Based on this definition, another definition important for this approach, that of a CSS-solvable family \(\mathrm {A}=\left\{ A_1,\ldots ,A_m\right\} \), is introduced. The advantage of the proposed method is the uniqueness of the resulting solution and the uniqueness of assigning each point of the source space to one of the classes. The approach also makes it possible to uniquely filter outliers from the source data. Computational experiments using the developed approach were carried out on academic examples and test data from public libraries. The efficiency and power of the proposed approach are demonstrated on the classical Fisher’s Iris problem [16] as well as on several applied economic problems. It is shown that the classical Fisher’s Iris problem [16] is CSS-solvable. This fact gives reason to expect a high efficiency of the proposed method from the applied point of view.

Notes

1. When opening a position on the exchange, the position is constantly re-evaluated at current prices. Accordingly, the maximum loss on the position is the maximum amount of reduction in the value of the position relative to the value of the position when opening.

2. Position hold period is the time from the moment of initial purchase or sale of a certain amount of financial instrument to the moment of reverse in relation to the first trading operation. For more information about the concept of opening and closing positions, see https://www.metatrader5.com/ru/mobile-trading/android/help/trade/positions_manage/open_positions (accessed 01.09.2019).

References

1. Bel’skii, A.B., Choban, V.M.: Matematicheskoe modelirovanie i algoritmy raspoznavaniya celej na izobrazheniyakh, formiruemykh pricel‘nymi sistemami letatel‘nogo apparata. Trudy MAI (66) (2013)

2. Gainanov, D.N.: Kombinatornaya geometriya i grafy v analize nesovmestnykh sistem i raspoznavanii obrazov, 173 p. Nauka, Moscow (2014). English: Combinatorial Geometry and Graphs in the Infeasible Systems Theory and Pattern Recognition

3. Geil, D.: Teoriya linejnykh ekonomicheskikh modelei, 418 p. Izd-vo inostrannoi literatury, Moscow (1963). English: Linear Economics Models

4. Godovskii, A.A.: Chislennye modeli prognozirovaniya kontaktnykh zon v rezul‘tate udarnogo vzaimodejstviya aviacionnykh konstrukcii s pregradoi pri avariinykh situaciyakh. Trudy MAI (107) (2019)

5. Danilenko, A.N.: Razrabotka metodov i algoritmov intellektual‘noi podderzhki prinyatiya reshenii v sistemakh upravleniya kadrami. Trudy MAI (46) (2011)

6. Eremin, I.I., Astaf’ev, N.N.: Vvedenie v teoriyu lineinogo i vypuklogo programmirovaniya, 191 p. Nauka, Moscow (1970). English: Introduction to the Linear and Convex Programming

7. Eremin, I.I.: Teoriya lineinoi optimizacii, 312 p. Izd-vo “Ekaterinburg”, Ekaterinburg (1999). English: Linear Optimization Theory

8. Zhuravel’, A.A., Troshko, N., E’dzhubov, L.G.: Ispol’zovanie algoritma obobshhennogo portreta dlya opoznavaniya obrazov v sudebnom pocherkovedenii. Pravovaya kibernetika, pp. 212–227. Nauka, Moscow (1970)

9. Zakirov, R.G.: Prognozirovanie tekhnicheskogo sostoyaniya bortovogo radioelektronnogo oborudovaniya. Trudy MAI (85) (2015)

10. Mazurov, V.D.: Protivorechivye situacii modelirovaniya zakonomernostei. Vychislitel‘nye sistemy, vypusk 88 (1981). English: The Committees Method in Optimization and Classification Problems. https://b-ok.xyz/book/2409123/007689

11. Mazurov, V.D.: Lineinaya optimizaciya i modelirovanie. UrGU, Sverdlovsk (1986). English: Linear Optimization and Modelling

12. Nikonov, O.I., Chernavin, F.P.: Postroenie reitingovykh grupp zaemshhikov fizicheskikh licz s primeneniem metoda komitetov. Den’gi i Kredit (11), 52–55 (2014)

13. Syrin, S.A., Tereshhenko, T.S., Shemyakov, A.O.: Analiz prognozov nauchno-tekhnologicheskogo razvitiya Rossii, SShA, Kitaya i Evropeiskogo Soyuza kak liderov mirovoi raketnokosmicheskoi promyshlennosti. Trudy MAI (82) (2015)

14. Flakh, P.: Mashinnoe obuchenie. Nauka i iskusstvo postroeniya algoritmov, kotorye izvlekayut znaniya iz dannykh. DMK Press (2015). English: Machine Learning. The Science and Art on Algorithms Construction, which Derive Knowledges from Data

15. Chernavin, N.P., Chernavin, F.P.: Primenenie metoda komitetov k prognozirovaniyu dvizheniya fondovykh indeksov. In: NAUKA MOLODYKH sbornik materialov mezhdunarodnoj nauchnoj konferencii, pp. 307–320. Izd-vo OOO “Rusal’yans Sova”, Moscow (2015)

16. Chernavin, N.P., Chernavin, F.P.: Primenenie metoda komitetov v tekhnicheskom analize instrumentov finansovykh rynkov. In: Sovremennye nauchnye issledovaniya v sfere ekonomiki: sbornik rezul’tatov nauchnykh issledovanii, pp. 1052–1062. Izd-vo MCITO, Kirov (2018)

17. Chernikov, S.I.: Linejnye neravenstva, 191 p. Nauka, Moscow (1970). English: Linear Inequalities Theory

18. Fernandes-Francos, D., Fontela-Romero, J., Alonso-Betanzos, A.: One-class classification algorithm based on convex hull. Department of Computer Science, University of a Coruna, Spain (2016)

19. Gainanov, D.N., Mladenović, N., Dmitriy, B.: Dichotomy algorithms in the multi-class problem of pattern recognition. In: Mladenović, N., Sifaleras, A., Kuzmanović, M. (eds.) Advances in Operational Research in the Balkans. SPBE, pp. 3–14. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-21990-1_1

20. Pardalos, P.M., Li, Y., Hager, W.W.: Linear programming approaches to the convex hull problem in \(\mathbb{R}^m\). Comput. Math. Appl. 29(7), 23–29 (1995)

21. Liu, Z., Liu, J.G., Pan, C., Wang, G.: A novel geometric approach to binary classification based on scaled convex hulls. IEEE Trans. Neural Netw. 20(7), 1215–1220 (2009)

22. http://archive.ics.uci.edu/ml/machine-learning-databases/iris/

23. Koel’o, L.P., Richard, V.: Postroenie sistem mashinnogo obucheniya na yazyke Python, 302 p. DMK Press, Moscow (2016). English: Building Machine Learning Systems with Python. https://ru.b-ok.cc/book/2692544/c6f386

24. Rosen, J.B., Xue, G.L., Phillips, A.T.: Efficient computation of convex hull in \(\mathbb{R}^d\). In: Pardalos, P.M. (ed.) Advances in Optimization and Parallel Computing, pp. 267–292. North-Holland, Amsterdam (1992)


© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Gainanov, D.N., Chernavin, P.F., Rasskazova, V.A., Chernavin, N.P. (2020). Convex Hulls in Solving Multiclass Pattern Recognition Problem. In: Kotsireas, I., Pardalos, P. (eds) Learning and Intelligent Optimization. LION 2020. Lecture Notes in Computer Science, vol 12096. Springer, Cham. https://doi.org/10.1007/978-3-030-53552-0_35


DOI: https://doi.org/10.1007/978-3-030-53552-0_35


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-53551-3

Online ISBN: 978-3-030-53552-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)